Upload 28 files

- .gitignore +1 -0

- README.md +2 -0

- app.py +58 -0

- diffusion_module/__pycache__/nn.cpython-39.pyc +0 -0

- diffusion_module/__pycache__/unet.cpython-39.pyc +0 -0

- diffusion_module/__pycache__/unet_2d_blocks.cpython-39.pyc +0 -0

- diffusion_module/__pycache__/unet_2d_sdm.cpython-39.pyc +0 -0

- diffusion_module/nn.py +183 -0

- diffusion_module/unet.py +1315 -0

- diffusion_module/unet_2d_blocks.py +0 -0

- diffusion_module/unet_2d_sdm.py +357 -0

- diffusion_module/utils/LSDMPipeline_expandDataset.py +179 -0

- diffusion_module/utils/Pipline.py +361 -0

- diffusion_module/utils/__pycache__/LSDMPipeline_expandDataset.cpython-39.pyc +0 -0

- diffusion_module/utils/__pycache__/Pipline.cpython-310.pyc +0 -0

- diffusion_module/utils/__pycache__/Pipline.cpython-39.pyc +0 -0

- diffusion_module/utils/__pycache__/loss.cpython-39.pyc +0 -0

- diffusion_module/utils/loss.py +149 -0

- diffusion_module/utils/noise_sampler.py +16 -0

- diffusion_module/utils/scheduler_factory.py +300 -0

- evolution.py +102 -0

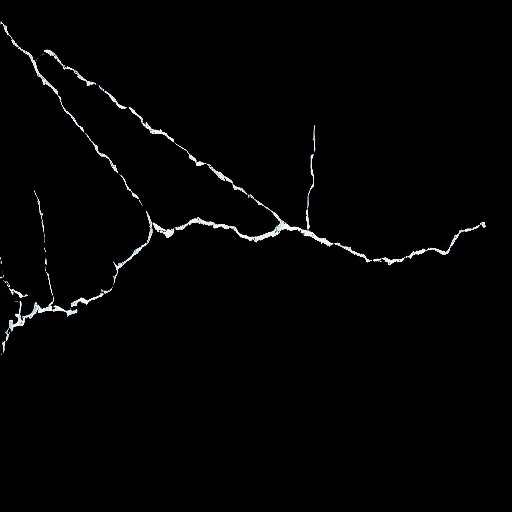

- figs/4.png +0 -0

- figs/4_1.jpg +0 -0

- figs/4_1.png +0 -0

- figs/4_1_mask.png +0 -0

- generate.py +106 -0

- requirements.txt +137 -0

.gitignore

ADDED

@@ -0,0 +1 @@
+pretrain_weights/

README.md

CHANGED

@@ -1,3 +1,5 @@
+-- LSDM for Crack Segmentation dataset expansion
+
 ---
 title: LSDM
 emoji: 💻

app.py

ADDED

|

@@ -0,0 +1,58 @@

+import gradio as gr
+from evolution import random_walk
+from generate import generate
+
+def process_random_walk(img):
+    img1, _ = random_walk(img)
+
+    return img1
+
+def process_first_generation(img1, model_path="pretrain_weights/b2m/unet_ema"):
+
+    generated_images = generate(img1, model_path)
+    return generated_images[0]
+
+def process_second_generation(img1, model_path="pretrain_weights/m2i/unet_ema"):
+
+    generated_images = generate(img1, model_path)
+    return generated_images[0]
+
+# Create the Gradio interface
+with gr.Blocks() as app:
+    with gr.Row():
+        with gr.Column():
+            input_image = gr.Image(value="figs/4.png", image_mode='L', type='numpy', label="Upload Grayscale Image")
+
+            process_button_1 = gr.Button("1. Process Evolution")
+
+        with gr.Column():
+            output_image_1 = gr.Image(value="figs/4_1.png", image_mode='L', type="numpy", label="After Evolution Image", sources=[])
+            process_button_2 = gr.Button("2. Generate Masks")
+
+    with gr.Row():
+        with gr.Column():
+            output_image_3 = gr.Image(value="figs/4_1_mask.png", image_mode='L', type="numpy", label="Generated Mask Image", sources=[])
+            process_button_3 = gr.Button("3. Generate Images")
+        with gr.Column():
+            output_image_5 = gr.Image(value="figs/4_1.jpg", type="numpy", image_mode='RGB', label="Final Generated Image 1", sources=[])
+
+
+    process_button_1.click(
+        process_random_walk,
+        inputs=[input_image],
+        outputs=[output_image_1]
+    )
+
+    process_button_2.click(
+        process_first_generation,
+        inputs=[output_image_1],
+        outputs=[output_image_3]
+    )
+
+    process_button_3.click(
+        process_second_generation,
+        inputs=[output_image_3],
+        outputs=[output_image_5]
+    )
+
+app.launch()

|
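The three button callbacks in `app.py` form a linear pipeline: the evolved image feeds the mask model, and the generated mask feeds the image model. The sketch below shows the same data flow without the Gradio UI. `run_pipeline` and the stand-in stage functions are hypothetical names used only for illustration. The real `random_walk` and `generate` live in `evolution.py` and `generate.py`, whose bodies are not shown here, so the sketch assumes only what `app.py` itself relies on: `random_walk` returns a pair, and `generate` returns an indexable sequence.

```python
from typing import Any, Callable, Sequence, Tuple

# Weight directories exactly as they appear in app.py.
MASK_WEIGHTS = "pretrain_weights/b2m/unet_ema"
IMAGE_WEIGHTS = "pretrain_weights/m2i/unet_ema"


def run_pipeline(
    img: Any,
    random_walk: Callable[[Any], Tuple[Any, Any]],
    generate: Callable[[Any, str], Sequence[Any]],
) -> Tuple[Any, Any, Any]:
    """Chain the three stages that app.py wires to its buttons (hypothetical helper)."""
    evolved, _ = random_walk(img)              # step 1: "Process Evolution"
    mask = generate(evolved, MASK_WEIGHTS)[0]  # step 2: "Generate Masks"
    image = generate(mask, IMAGE_WEIGHTS)[0]   # step 3: "Generate Images"
    return evolved, mask, image


if __name__ == "__main__":
    # Stand-in stages so the sketch runs without weights or a GPU.
    def fake_random_walk(img):
        return f"evolved({img})", None

    def fake_generate(img, model_path):
        stage = model_path.split("/")[1]  # "b2m" or "m2i"
        return [f"{stage}({img})"]

    print(run_pipeline("sketch", fake_random_walk, fake_generate))
```

Passing the stage functions in as arguments keeps the sketch independent of the repository modules. In the app itself the same chaining happens implicitly, because each button reads the image component that the previous button wrote.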

diffusion_module/__pycache__/nn.cpython-39.pyc

ADDED

Binary file (6.26 kB).

diffusion_module/__pycache__/unet.cpython-39.pyc

ADDED

Binary file (29.6 kB).

diffusion_module/__pycache__/unet_2d_blocks.cpython-39.pyc

ADDED

Binary file (57.3 kB).

diffusion_module/__pycache__/unet_2d_sdm.cpython-39.pyc

ADDED

Binary file (10.7 kB).

diffusion_module/nn.py

ADDED

|

@@ -0,0 +1,183 @@

+"""
+Various utilities for neural networks.
+"""
+
+import math
+
+import torch as th
+import torch.nn as nn
+
+
+def convert_module_to_f16(l):
+    """
+    Convert primitive modules to float16.
+    """
+    if isinstance(l, (nn.Conv1d, nn.Conv2d, nn.Conv3d)):
+        l.weight.data = l.weight.data.half()
+        if l.bias is not None:
+            l.bias.data = l.bias.data.half()
+
+# PyTorch 1.7 has SiLU, but we support PyTorch 1.5.
+class SiLU(nn.Module):
+    def forward(self, x):
+        return x * th.sigmoid(x)
+
+
+class GroupNorm32(nn.GroupNorm):
+    def forward(self, x):
+        #print(x.float().dtype)
+        return super().forward(x).type(x.dtype)
+
+
+def conv_nd(dims, *args, **kwargs):
+    """
+    Create a 1D, 2D, or 3D convolution module.
+    """
+    if dims == 1:
+        return nn.Conv1d(*args, **kwargs)
+    elif dims == 2:
+        return nn.Conv2d(*args, **kwargs)
+    elif dims == 3:
+        return nn.Conv3d(*args, **kwargs)
+    raise ValueError(f"unsupported dimensions: {dims}")
+
+
+def linear(*args, **kwargs):
+    """
+    Create a linear module.
+    """
+    return nn.Linear(*args, **kwargs)
+
+
+def avg_pool_nd(dims, *args, **kwargs):
+    """
+    Create a 1D, 2D, or 3D average pooling module.
+    """
+    if dims == 1:
+        return nn.AvgPool1d(*args, **kwargs)
+    elif dims == 2:
+        return nn.AvgPool2d(*args, **kwargs)
+    elif dims == 3:
+        return nn.AvgPool3d(*args, **kwargs)
+    raise ValueError(f"unsupported dimensions: {dims}")
+
+
+def update_ema(target_params, source_params, rate=0.99):
+    """
+    Update target parameters to be closer to those of source parameters using
+    an exponential moving average.
+
+    :param target_params: the target parameter sequence.
+    :param source_params: the source parameter sequence.
+    :param rate: the EMA rate (closer to 1 means slower).
+    """
+    for targ, src in zip(target_params, source_params):
+        targ.detach().mul_(rate).add_(src, alpha=1 - rate)
+
+
+def zero_module(module):
+    """
+    Zero out the parameters of a module and return it.
+    """
+    for p in module.parameters():
+        p.detach().zero_()
+    return module
+
+
+def scale_module(module, scale):
+    """
+    Scale the parameters of a module and return it.
+    """
+    for p in module.parameters():
+        p.detach().mul_(scale)
+    return module
+
+
+def mean_flat(tensor):
+    """
+    Take the mean over all non-batch dimensions.
+    """
+    return tensor.mean(dim=list(range(1, len(tensor.shape))))
+
+
+def normalization(channels):
+    """
+    Make a standard normalization layer.
+
+    :param channels: number of input channels.
+    :return: an nn.Module for normalization.
+    """
+    return GroupNorm32(32, channels)
+
+
+def timestep_embedding(timesteps, dim, max_period=10000):
+    """
+    Create sinusoidal timestep embeddings.
+
+    :param timesteps: a 1-D Tensor of N indices, one per batch element.
+                      These may be fractional.
+    :param dim: the dimension of the output.
+    :param max_period: controls the minimum frequency of the embeddings.
+    :return: an [N x dim] Tensor of positional embeddings.
+    """
+    half = dim // 2
+    freqs = th.exp(
+        -math.log(max_period) * th.arange(start=0, end=half, dtype=th.float32) / half
+    ).to(device=timesteps.device)
+    args = timesteps[:, None].float() * freqs[None]
+    embedding = th.cat([th.cos(args), th.sin(args)], dim=-1)
+    if dim % 2:
+        embedding = th.cat([embedding, th.zeros_like(embedding[:, :1])], dim=-1)
+    return embedding
+
+
+def checkpoint(func, inputs, params, flag):
+    """
+    Evaluate a function without caching intermediate activations, allowing for
+    reduced memory at the expense of extra compute in the backward pass.
+
+    :param func: the function to evaluate.
+    :param inputs: the argument sequence to pass to `func`.
+    :param params: a sequence of parameters `func` depends on but does not
+                   explicitly take as arguments.
+    :param flag: if False, disable gradient checkpointing.
+    """
+    if flag:
+        args = tuple(inputs) + tuple(params)
+        #return th.utils.checkpoint.checkpoint.apply(func, inputs)
+        return CheckpointFunction.apply(func, len(inputs), *args)
+    else:
+        return func(*inputs)
+
+
+class CheckpointFunction(th.autograd.Function):
+    @staticmethod
+    def forward(ctx, run_function, length, *args):
+        ctx.run_function = run_function
+        ctx.input_tensors = list(args[:length])
+        ctx.input_params = list(args[length:])
+        # breakpoint()  # debugging hook, disabled so the forward pass runs unattended
+        with th.no_grad():
+            output_tensors = ctx.run_function(*ctx.input_tensors)
+        return output_tensors
+
+    @staticmethod
+    def backward(ctx, *output_grads):
+        ctx.input_tensors = [x.detach().requires_grad_(True) for x in ctx.input_tensors]
+        with th.enable_grad():
+            # Fixes a bug where the first op in run_function modifies the
+            # Tensor storage in place, which is not allowed for detach()'d
+            # Tensors.
+            shallow_copies = [x.view_as(x) for x in ctx.input_tensors]
+            # breakpoint()  # debugging hook, disabled so the backward pass runs unattended
+            output_tensors = ctx.run_function(*shallow_copies)  # ctx itself is not callable
+        input_grads = th.autograd.grad(
+            output_tensors,
+            ctx.input_tensors + ctx.input_params,
+            output_grads,
+            allow_unused=True,
+        )
+        del ctx.input_tensors
+        del ctx.input_params
+        del output_tensors
+        return (None, None) + input_grads

|
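Two of the numeric helpers in `nn.py` are easy to check by hand. The sketch below mirrors the arithmetic of `update_ema` and `timestep_embedding` in plain Python (lists and `math`, no PyTorch), so the formulas can be tried without installing anything. `ema_step` and `sinusoidal_embedding` are illustrative names that do not exist in the repository, and they handle a flat list of floats and a single timestep rather than tensors and batches.

```python
import math
from typing import List


def ema_step(target: List[float], source: List[float], rate: float = 0.99) -> List[float]:
    """Same update as update_ema: target <- rate * target + (1 - rate) * source."""
    return [rate * t + (1.0 - rate) * s for t, s in zip(target, source)]


def sinusoidal_embedding(timestep: float, dim: int, max_period: float = 10000.0) -> List[float]:
    """Plain-Python mirror of timestep_embedding for a single timestep."""
    half = dim // 2
    # Frequencies decay geometrically from 1 towards 1 / max_period.
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    args = [timestep * f for f in freqs]
    # Cosines first, then sines, matching th.cat([cos, sin], dim=-1).
    embedding = [math.cos(a) for a in args] + [math.sin(a) for a in args]
    if dim % 2:
        embedding.append(0.0)  # zero-pad odd dimensions, as the original does
    return embedding


if __name__ == "__main__":
    # A rate close to 1 moves the target only slightly towards the source.
    print(ema_step([1.0, 0.0], [0.0, 1.0], rate=0.9))
    # An odd dim still yields exactly `dim` values thanks to the zero pad.
    print(len(sinusoidal_embedding(10, dim=5)))
```

Unlike `update_ema`, which modifies the target tensors in place, `ema_step` returns a new list. That keeps the sketch side-effect free but is a deliberate difference from the repository code.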

diffusion_module/unet.py

ADDED

|

@@ -0,0 +1,1315 @@

| 1 |

+

from abc import abstractmethod

|

| 2 |

+

|

| 3 |

+

import math

|

| 4 |

+

|

| 5 |

+

import numpy as np

|

| 6 |

+

import torch as th

|

| 7 |

+

import torch.nn as nn

|

| 8 |

+

import torch.nn.functional as F

|

| 9 |

+

|

| 10 |

+

from .nn import (

|

| 11 |

+

SiLU,

|

| 12 |

+

checkpoint,

|

| 13 |

+

conv_nd,

|

| 14 |

+

linear,

|

| 15 |

+

avg_pool_nd,

|

| 16 |

+

zero_module,

|

| 17 |

+

normalization,

|

| 18 |

+

timestep_embedding,

|

| 19 |

+

convert_module_to_f16

|

| 20 |

+

)

|

| 21 |

+

|

| 22 |

+

from diffusers.configuration_utils import ConfigMixin, register_to_config

|

| 23 |

+

from diffusers.utils import BaseOutput

|

| 24 |

+

from diffusers.models.modeling_utils import ModelMixin

|

| 25 |

+

from dataclasses import dataclass

|

| 26 |

+

|

| 27 |

+

@dataclass

|

| 28 |

+

class UNet2DOutput(BaseOutput):

|

| 29 |

+

"""

|

| 30 |

+

Args:

|

| 31 |

+

sample (`torch.FloatTensor` of shape `(batch_size, num_channels, height, width)`):

|

| 32 |

+

Hidden states output. Output of last layer of model.

|

| 33 |

+

"""

|

| 34 |

+

|

| 35 |

+

sample: th.FloatTensor

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

class AttentionPool2d(nn.Module):

|

| 39 |

+

"""

|

| 40 |

+

Adapted from CLIP: https://github.com/openai/CLIP/blob/main/clip/model.py

|

| 41 |

+

"""

|

| 42 |

+

|

| 43 |

+

def __init__(

|

| 44 |

+

self,

|

| 45 |

+

spacial_dim: int,

|

| 46 |

+

embed_dim: int,

|

| 47 |

+

num_heads_channels: int,

|

| 48 |

+

output_dim: int = None,

|

| 49 |

+

):

|

| 50 |

+

super().__init__()

|

| 51 |

+

self.positional_embedding = nn.Parameter(

|

| 52 |

+

th.randn(embed_dim, spacial_dim ** 2 + 1) / embed_dim ** 0.5

|

| 53 |

+

)

|

| 54 |

+

self.qkv_proj = conv_nd(1, embed_dim, 3 * embed_dim, 1)

|

| 55 |

+

self.c_proj = conv_nd(1, embed_dim, output_dim or embed_dim, 1)

|

| 56 |

+

self.num_heads = embed_dim // num_heads_channels

|

| 57 |

+

self.attention = QKVAttention(self.num_heads)

|

| 58 |

+

|

| 59 |

+

def forward(self, x):

|

| 60 |

+

b, c, *_spatial = x.shape

|

| 61 |

+

x = x.reshape(b, c, -1) # NC(HW)

|

| 62 |

+

x = th.cat([x.mean(dim=-1, keepdim=True), x], dim=-1) # NC(HW+1)

|

| 63 |

+

x = x + self.positional_embedding[None, :, :].to(x.dtype) # NC(HW+1)

|

| 64 |

+

x = self.qkv_proj(x)

|

| 65 |

+

x = self.attention(x)

|

| 66 |

+

x = self.c_proj(x)

|

| 67 |

+

return x[:, :, 0]

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

class TimestepBlock(nn.Module):

|

| 71 |

+

"""

|

| 72 |

+

Any module where forward() takes timestep embeddings as a second argument.

|

| 73 |

+

"""

|

| 74 |

+

|

| 75 |

+

@abstractmethod

|

| 76 |

+

def forward(self, x, emb):

|

| 77 |

+

"""

|

| 78 |

+

Apply the module to `x` given `emb` timestep embeddings.

|

| 79 |

+

"""

|

| 80 |

+

|

| 81 |

+

class CondTimestepBlock(nn.Module):

|

| 82 |

+

"""

|

| 83 |

+

Any module where forward() takes timestep embeddings as a second argument.

|

| 84 |

+

"""

|

| 85 |

+

|

| 86 |

+

@abstractmethod

|

| 87 |

+

def forward(self, x, cond, emb):

|

| 88 |

+

"""

|

| 89 |

+

Apply the module to `x` given `emb` timestep embeddings.

|

| 90 |

+

"""

|

| 91 |

+

"""

|

| 92 |

+

class TimestepEmbedSequential(nn.Sequential, TimestepBlock, CondTimestepBlock):

|

| 93 |

+

|

| 94 |

+

def forward(self, x, cond, emb):

|

| 95 |

+

for layer in self:

|

| 96 |

+

if isinstance(layer, CondTimestepBlock):

|

| 97 |

+

x = layer(x, cond, emb)

|

| 98 |

+

elif isinstance(layer, TimestepBlock):

|

| 99 |

+

x = layer(x, emb)

|

| 100 |

+

else:

|

| 101 |

+

x = layer(x)

|

| 102 |

+

return x

|

| 103 |

+

"""

|

| 104 |

+

|

| 105 |

+

class TimestepEmbedSequential(nn.Sequential, TimestepBlock, CondTimestepBlock):

|

| 106 |

+

def forward(self, x, cond, emb):

|

| 107 |

+

outputs_list = [] # 创建一个空列表来存储第二个输出

|

| 108 |

+

for layer in self:

|

| 109 |

+

if isinstance(layer, CondTimestepBlock):

|

| 110 |

+

# 调用layer并检查输出是否为一个元组

|

| 111 |

+

result = layer(x, cond, emb)

|

| 112 |

+

if isinstance(result, tuple) and len(result) == 2:

|

| 113 |

+

x, additional_output = result

|

| 114 |

+

outputs_list.append(additional_output) # 将第二个输出添加到列表

|

| 115 |

+

else:

|

| 116 |

+

x = result

|

| 117 |

+

elif isinstance(layer, TimestepBlock):

|

| 118 |

+

x = layer(x, emb)

|

| 119 |

+

else:

|

| 120 |

+

x = layer(x)

|

| 121 |

+

|

| 122 |

+

if outputs_list == []:

|

| 123 |

+

return x

|

| 124 |

+

else:

|

| 125 |

+

return x, outputs_list # 返回最终的x和所有附加输出的列表

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

|

| 129 |

+

class Upsample(nn.Module):

|

| 130 |

+

"""

|

| 131 |

+

An upsampling layer with an optional convolution.

|

| 132 |

+

|

| 133 |

+

:param channels: channels in the inputs and outputs.

|

| 134 |

+

:param use_conv: a bool determining if a convolution is applied.

|

| 135 |

+

:param dims: determines if the signal is 1D, 2D, or 3D. If 3D, then

|

| 136 |

+

upsampling occurs in the inner-two dimensions.

|

| 137 |

+

"""

|

| 138 |

+

|

| 139 |

+

def __init__(self, channels, use_conv, dims=2, out_channels=None):

|

| 140 |

+

super().__init__()

|

| 141 |

+

self.channels = channels

|

| 142 |

+

self.out_channels = out_channels or channels

|

| 143 |

+

self.use_conv = use_conv

|

| 144 |

+

self.dims = dims

|

| 145 |

+

if use_conv:

|

| 146 |

+

self.conv = conv_nd(dims, self.channels, self.out_channels, 3, padding=1)

|

| 147 |

+

|

| 148 |

+

def forward(self, x):

|

| 149 |

+

assert x.shape[1] == self.channels

|

| 150 |

+

if self.dims == 3:

|

| 151 |

+

x = F.interpolate(

|

| 152 |

+

x, (x.shape[2], x.shape[3] * 2, x.shape[4] * 2), mode="nearest"

|

| 153 |

+

)

|

| 154 |

+

else:

|

| 155 |

+

x = F.interpolate(x, scale_factor=2, mode="nearest")

|

| 156 |

+

if self.use_conv:

|

| 157 |

+

x = self.conv(x)

|

| 158 |

+

return x

|

| 159 |

+

|

| 160 |

+

|

| 161 |

+

class Downsample(nn.Module):

|

| 162 |

+

"""

|

| 163 |

+

A downsampling layer with an optional convolution.

|

| 164 |

+

|

| 165 |

+

:param channels: channels in the inputs and outputs.

|

| 166 |

+

:param use_conv: a bool determining if a convolution is applied.

|

| 167 |

+

:param dims: determines if the signal is 1D, 2D, or 3D. If 3D, then

|

| 168 |

+

downsampling occurs in the inner-two dimensions.

|

| 169 |

+

"""

|

| 170 |

+

|

| 171 |

+

def __init__(self, channels, use_conv, dims=2, out_channels=None):

|

| 172 |

+

super().__init__()

|

| 173 |

+

self.channels = channels

|

| 174 |

+

self.out_channels = out_channels or channels

|

| 175 |

+

self.use_conv = use_conv

|

| 176 |

+

self.dims = dims

|

| 177 |

+

stride = 2 if dims != 3 else (1, 2, 2)

|

| 178 |

+

if use_conv:

|

| 179 |

+

self.op = conv_nd(

|

| 180 |

+

dims, self.channels, self.out_channels, 3, stride=stride, padding=1

|

| 181 |

+

)

|

| 182 |

+

else:

|

| 183 |

+

assert self.channels == self.out_channels

|

| 184 |

+

self.op = avg_pool_nd(dims, kernel_size=stride, stride=stride)

|

| 185 |

+

|

| 186 |

+

def forward(self, x):

|

| 187 |

+

assert x.shape[1] == self.channels

|

| 188 |

+

return self.op(x)

|

| 189 |

+
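The two `Downsample` branches do not always produce the same spatial size. The sketch below assumes that `conv_nd` and `avg_pool_nd` (defined elsewhere in the repository and not shown here) wrap the standard PyTorch convolution and average-pooling layers with default dilation and `ceil_mode=False`. The helper names are hypothetical.

```python
def conv_out_size(n, kernel=3, stride=2, padding=1):
    """Output length of a strided convolution (dilation 1)."""
    return (n + 2 * padding - kernel) // stride + 1


def avg_pool_out_size(n, kernel=2, stride=2):
    """Output length of average pooling (no padding, floor mode)."""
    return (n - kernel) // stride + 1


# Compare both branches on an even and an odd input length.
sizes = {n: (conv_out_size(n), avg_pool_out_size(n)) for n in (64, 65)}
```

Under these assumptions both branches halve an even input, but for an odd input the convolution rounds up while the pooling rounds down, which may matter when skip connections are concatenated later.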

|

| 190 |

+

|

| 191 |

+

class SPADEGroupNorm(nn.Module):

|

| 192 |

+

def __init__(self, norm_nc, label_nc, eps=1e-5, debug=False):

|

| 193 |

+

super().__init__()

|

| 194 |

+

self.debug = debug

|

| 195 |

+

self.norm = nn.GroupNorm(32, norm_nc, affine=False) # 32/16

|

| 196 |

+

|

| 197 |

+

self.eps = eps

|

| 198 |

+

nhidden = 128

|

| 199 |

+

self.mlp_shared = nn.Sequential(

|

| 200 |

+

nn.Conv2d(label_nc, nhidden, kernel_size=3, padding=1),

|

| 201 |

+

nn.ReLU(),

|

| 202 |

+

)

|

| 203 |

+

self.mlp_gamma = nn.Conv2d(nhidden, norm_nc, kernel_size=3, padding=1)

|

| 204 |

+

self.mlp_beta = nn.Conv2d(nhidden, norm_nc, kernel_size=3, padding=1)

|

| 205 |

+

|

| 206 |

+

def forward(self, x, segmap):

|

| 207 |

+

# Part 1. generate parameter-free normalized activations

|

| 208 |

+

x = self.norm(x)

|

| 209 |

+

|

| 210 |

+

# Part 2. produce scaling and bias conditioned on semantic map

|

| 211 |

+

segmap = F.interpolate(segmap, size=x.size()[2:], mode='nearest')

|

| 212 |

+

actv = self.mlp_shared(segmap)

|

| 213 |

+

gamma = self.mlp_gamma(actv)

|

| 214 |

+

beta = self.mlp_beta(actv)

|

| 215 |

+

|

| 216 |

+

# apply scale and bias

|

| 217 |

+

if self.debug:

|

| 218 |

+

return x * (1 + gamma) + beta, (beta.detach().cpu(), gamma.detach().cpu())

|

| 219 |

+

else:

|

| 220 |

+

return x * (1 + gamma) + beta

|

| 221 |

+

|

| 222 |

+

|

| 223 |

+

class AdaIN(nn.Module):

|

| 224 |

+

def __init__(self, num_features):

|

| 225 |

+

super().__init__()

|

| 226 |

+

self.instance_norm = th.nn.InstanceNorm2d(num_features, affine=False, track_running_stats=False)

|

| 227 |

+

|

| 228 |

+

def forward(self, x, alpha, gamma):

|

| 229 |

+

assert x.shape[:2] == alpha.shape[:2] == gamma.shape[:2]

|

| 230 |

+

norm = self.instance_norm(x)

|

| 231 |

+

return alpha * norm + gamma

|

| 232 |

+

|

| 233 |

+

class RESAILGroupNorm(nn.Module):

|

| 234 |

+

def __init__(self, norm_nc, label_nc, guidance_nc, eps=1e-5):

|

| 235 |

+

super().__init__()

|

| 236 |

+

|

| 237 |

+

self.norm = nn.GroupNorm(32, norm_nc, affine=False) # 32/16

|

| 238 |

+

|

| 239 |

+

# SPADE

|

| 240 |

+

self.eps = eps

|

| 241 |

+

nhidden = 128

|

| 242 |

+

self.mask_mlp_shared = nn.Sequential(

|

| 243 |

+

nn.Conv2d(label_nc, nhidden, kernel_size=3, padding=1),

|

| 244 |

+

nn.ReLU(),

|

| 245 |

+

)

|

| 246 |

+

|

| 247 |

+

self.mask_mlp_gamma = nn.Conv2d(nhidden, norm_nc, kernel_size=3, padding=1)

|

| 248 |

+

self.mask_mlp_beta = nn.Conv2d(nhidden, norm_nc, kernel_size=3, padding=1)

|

| 249 |

+

|

| 250 |

+

|

| 251 |

+

# Guidance

|

| 252 |

+

|

| 253 |

+

self.conv_s = th.nn.Conv2d(label_nc, nhidden * 2, 3, 2)

|

| 254 |

+

self.pool_s = th.nn.AdaptiveAvgPool2d(1)

|

| 255 |

+

self.conv_s2 = th.nn.Conv2d(nhidden * 2, nhidden * 2, 1, 1)

|

| 256 |

+

|

| 257 |

+

self.conv1 = th.nn.Conv2d(guidance_nc, nhidden, 3, 1, padding=1)

|

| 258 |

+

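self.adaIn1 = AdaIN(norm_nc * 2) # applied to the nhidden-channel output of conv1; num_features only matters when affine or running stats are enabled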

|

| 259 |

+

self.relu1 = nn.ReLU()

|

| 260 |

+

|

| 261 |

+

self.conv2 = th.nn.Conv2d(nhidden, nhidden, 3, 1, padding=1)

|

| 262 |

+

self.adaIn2 = AdaIN(norm_nc * 2)

|

| 263 |

+

self.relu2 = nn.ReLU()

|

| 264 |

+

self.conv3 = th.nn.Conv2d(nhidden, nhidden, 3, 1, padding=1)

|

| 265 |

+

|

| 266 |

+

self.guidance_mlp_gamma = nn.Conv2d(nhidden, norm_nc, kernel_size=3, padding=1)

|

| 267 |

+

self.guidance_mlp_beta = nn.Conv2d(nhidden, norm_nc, kernel_size=3, padding=1)

|

| 268 |

+

|

| 269 |

+

self.blending_gamma = nn.Parameter(th.zeros(1), requires_grad=True)

|

| 270 |

+

self.blending_beta = nn.Parameter(th.zeros(1), requires_grad=True)

|

| 271 |

+

self.norm_nc = norm_nc

|

| 272 |

+

|

| 273 |

+

def forward(self, x, segmap, guidance):

|

| 274 |

+

# Part 1. generate parameter-free normalized activations

|

| 275 |

+

x = self.norm(x)

|

| 276 |

+

# Part 2. produce scaling and bias conditioned on semantic map

|

| 277 |

+

segmap = F.interpolate(segmap, size=x.size()[2:], mode='nearest')

|

| 278 |

+

mask_actv = self.mask_mlp_shared(segmap)

|

| 279 |

+

mask_gamma = self.mask_mlp_gamma(mask_actv)

|

| 280 |

+

mask_beta = self.mask_mlp_beta(mask_actv)

|

| 281 |

+

|

| 282 |

+

|

| 283 |

+

# Part 3. produce scaling and bias conditioned on feature guidance

|

| 284 |

+

guidance = F.interpolate(guidance, size=x.size()[2:], mode='bilinear')

|

| 285 |

+

|

| 286 |

+

f_s_1 = self.conv_s(segmap)

|

| 287 |

+

c1 = self.pool_s(f_s_1)

|

| 288 |

+

c2 = self.conv_s2(c1)

|

| 289 |

+

|

| 290 |

+

f1 = self.conv1(guidance)

|

| 291 |

+

|

| 292 |

+

f1 = self.adaIn1(f1, c1[:, : 128, ...], c1[:, 128:, ...])

|

| 293 |

+

f2 = self.relu1(f1)

|

| 294 |

+

|

| 295 |

+

f2 = self.conv2(f2)

|

| 296 |

+

f2 = self.adaIn2(f2, c2[:, : 128, ...], c2[:, 128:, ...])

|

| 297 |

+

f2 = self.relu2(f2)

|

| 298 |

+

guidance_actv = self.conv3(f2)

|

| 299 |

+

|

| 300 |

+

guidance_gamma = self.guidance_mlp_gamma(guidance_actv)

|

| 301 |

+

guidance_beta = self.guidance_mlp_beta(guidance_actv)

|

| 302 |

+

|

| 303 |

+

gamma_alpha = th.sigmoid(self.blending_gamma) # F.sigmoid is deprecated

|

| 304 |

+

beta_alpha = th.sigmoid(self.blending_beta) # F.sigmoid is deprecated

|

| 305 |

+

|

| 306 |

+

gamma_final = gamma_alpha * guidance_gamma + (1 - gamma_alpha) * mask_gamma

|

| 307 |

+

beta_final = beta_alpha * guidance_beta + (1 - beta_alpha) * mask_beta

|

| 308 |

+

out = x * (1 + gamma_final) + beta_final

|

| 309 |

+

|

| 310 |

+

# scale and bias were applied above; return the modulated activations

|

| 311 |

+

return out

|

| 312 |

+
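The learned blend in `RESAILGroupNorm.forward` can be checked with scalar arithmetic. This is a sketch on plain floats rather than tensors. `sigmoid` and `blend` are hypothetical helpers, and the sample values 0.8 and 0.2 are made up for illustration.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def blend(guidance_value, mask_value, blending_param):
    """Convex combination weighted by sigmoid(blending_param)."""
    alpha = sigmoid(blending_param)
    return alpha * guidance_value + (1.0 - alpha) * mask_value


# The blending parameters are initialised to zero, so alpha starts at 0.5
# and both branches contribute equally.
at_init = blend(0.8, 0.2, 0.0)

# A larger learned parameter shifts the result toward the guidance branch.
leaning = blend(0.8, 0.2, 4.0)
```

Because the weight goes through a sigmoid, the blended value always stays between the mask-derived and guidance-derived values.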

|

| 313 |

+

class SPMGroupNorm(nn.Module):

|

| 314 |

+

def __init__(self, norm_nc, label_nc, feature_nc, eps=1e-5):

|

| 315 |

+

super().__init__()

|

| 316 |

+

print("use SPM")

|

| 317 |

+

|

| 318 |

+

self.norm = nn.GroupNorm(32, norm_nc, affine=False) # 32/16

|

| 319 |

+

|

| 320 |

+

# SPADE

|

| 321 |

+

self.eps = eps

|

| 322 |

+