Spaces:

Sleeping

Sleeping

add bkse

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- diffusion-posterior-sampling/bkse/LICENSE +203 -0

- diffusion-posterior-sampling/bkse/README.md +181 -0

- diffusion-posterior-sampling/bkse/data/GOPRO_dataset.py +135 -0

- diffusion-posterior-sampling/bkse/data/REDS_dataset.py +139 -0

- diffusion-posterior-sampling/bkse/data/__init__.py +53 -0

- diffusion-posterior-sampling/bkse/data/data_sampler.py +72 -0

- diffusion-posterior-sampling/bkse/data/mix_dataset.py +104 -0

- diffusion-posterior-sampling/bkse/data/util.py +574 -0

- diffusion-posterior-sampling/bkse/data_augmentation.py +145 -0

- diffusion-posterior-sampling/bkse/domain_specific_deblur.py +89 -0

- diffusion-posterior-sampling/bkse/experiments/pretrained/kernel.pth +0 -0

- diffusion-posterior-sampling/bkse/generate_blur.py +53 -0

- diffusion-posterior-sampling/bkse/generic_deblur.py +28 -0

- diffusion-posterior-sampling/bkse/imgs/blur_faces/face01.png +0 -0

- diffusion-posterior-sampling/bkse/imgs/blur_imgs/blur1.png +0 -0

- diffusion-posterior-sampling/bkse/imgs/blur_imgs/blur2.png +0 -0

- diffusion-posterior-sampling/bkse/imgs/results/augmentation.jpg +0 -0

- diffusion-posterior-sampling/bkse/imgs/results/domain_specific_deblur.jpg +0 -0

- diffusion-posterior-sampling/bkse/imgs/results/general_deblurring.jpg +0 -0

- diffusion-posterior-sampling/bkse/imgs/results/generate_blur.jpg +0 -0

- diffusion-posterior-sampling/bkse/imgs/results/kernel_encoding_wGT.png +0 -0

- diffusion-posterior-sampling/bkse/imgs/sharp_imgs/mushishi.png +0 -0

- diffusion-posterior-sampling/bkse/imgs/teaser.jpg +0 -0

- diffusion-posterior-sampling/bkse/models/__init__.py +15 -0

- diffusion-posterior-sampling/bkse/models/arch_util.py +58 -0

- diffusion-posterior-sampling/bkse/models/backbones/resnet.py +89 -0

- diffusion-posterior-sampling/bkse/models/backbones/skip/concat.py +39 -0

- diffusion-posterior-sampling/bkse/models/backbones/skip/downsampler.py +241 -0

- diffusion-posterior-sampling/bkse/models/backbones/skip/non_local_dot_product.py +130 -0

- diffusion-posterior-sampling/bkse/models/backbones/skip/skip.py +133 -0

- diffusion-posterior-sampling/bkse/models/backbones/skip/util.py +65 -0

- diffusion-posterior-sampling/bkse/models/backbones/unet_parts.py +109 -0

- diffusion-posterior-sampling/bkse/models/deblurring/image_deblur.py +71 -0

- diffusion-posterior-sampling/bkse/models/deblurring/joint_deblur.py +63 -0

- diffusion-posterior-sampling/bkse/models/dips.py +83 -0

- diffusion-posterior-sampling/bkse/models/dsd/bicubic.py +76 -0

- diffusion-posterior-sampling/bkse/models/dsd/dsd.py +194 -0

- diffusion-posterior-sampling/bkse/models/dsd/dsd_stylegan.py +81 -0

- diffusion-posterior-sampling/bkse/models/dsd/dsd_stylegan2.py +78 -0

- diffusion-posterior-sampling/bkse/models/dsd/op/__init__.py +0 -0

- diffusion-posterior-sampling/bkse/models/dsd/op/fused_act.py +107 -0

- diffusion-posterior-sampling/bkse/models/dsd/op/fused_bias_act.cpp +21 -0

- diffusion-posterior-sampling/bkse/models/dsd/op/fused_bias_act_kernel.cu +99 -0

- diffusion-posterior-sampling/bkse/models/dsd/op/upfirdn2d.cpp +23 -0

- diffusion-posterior-sampling/bkse/models/dsd/op/upfirdn2d.py +184 -0

- diffusion-posterior-sampling/bkse/models/dsd/op/upfirdn2d_kernel.cu +369 -0

- diffusion-posterior-sampling/bkse/models/dsd/spherical_optimizer.py +29 -0

- diffusion-posterior-sampling/bkse/models/dsd/stylegan.py +474 -0

- diffusion-posterior-sampling/bkse/models/dsd/stylegan2.py +621 -0

- diffusion-posterior-sampling/bkse/models/kernel_encoding/base_model.py +131 -0

diffusion-posterior-sampling/bkse/LICENSE

ADDED

|

@@ -0,0 +1,203 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

| 202 |

+

'+

|

| 203 |

+

|

diffusion-posterior-sampling/bkse/README.md

ADDED

|

@@ -0,0 +1,181 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

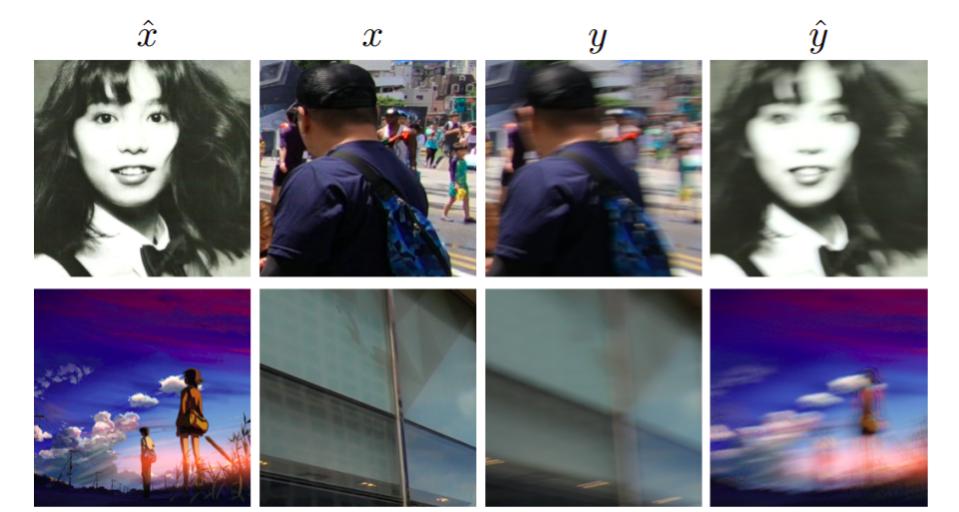

# Exploring Image Deblurring via Encoded Blur Kernel Space

|

| 2 |

+

|

| 3 |

+

## About the project

|

| 4 |

+

|

| 5 |

+

We introduce a method to encode the blur operators of an arbitrary dataset of sharp-blur image pairs into a blur kernel space. Assuming the encoded kernel space is close enough to in-the-wild blur operators, we propose an alternating optimization algorithm for blind image deblurring. It approximates an unseen blur operator by a kernel in the encoded space and searches for the corresponding sharp image. Due to the method's design, the encoded kernel space is fully differentiable, thus can be easily adopted in deep neural network models.

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

Detail of the method and experimental results can be found in [our following paper](https://arxiv.org/abs/2104.00317):

|

| 10 |

+

```

|

| 11 |

+

@inproceedings{m_Tran-etal-CVPR21,

|

| 12 |

+

author = {Phong Tran and Anh Tran and Quynh Phung and Minh Hoai},

|

| 13 |

+

title = {Explore Image Deblurring via Encoded Blur Kernel Space},

|

| 14 |

+

year = {2021},

|

| 15 |

+

booktitle = {Proceedings of the {IEEE} Conference on Computer Vision and Pattern Recognition (CVPR)}

|

| 16 |

+

}

|

| 17 |

+

```

|

| 18 |

+

Please CITE our paper whenever this repository is used to help produce published results or incorporated into other software.

|

| 19 |

+

|

| 20 |

+

[](https://colab.research.google.com/drive/1GDvbr4WQUibaEhQVzYPPObV4STn9NAot?usp=sharing)

|

| 21 |

+

|

| 22 |

+

## Table of Content

|

| 23 |

+

|

| 24 |

+

* [About the Project](#about-the-project)

|

| 25 |

+

* [Getting Started](#getting-started)

|

| 26 |

+

* [Prerequisites](#prerequisites)

|

| 27 |

+

* [Installation](#installation)

|

| 28 |

+

* [Using the pretrained model](#Using-the-pretrained-model)

|

| 29 |

+

* [Training and evaluation](#Training-and-evaluation)

|

| 30 |

+

* [Model Zoo](#Model-zoo)

|

| 31 |

+

|

| 32 |

+

## Getting started

|

| 33 |

+

|

| 34 |

+

### Prerequisites

|

| 35 |

+

|

| 36 |

+

* Python >= 3.7

|

| 37 |

+

* Pytorch >= 1.4.0

|

| 38 |

+

* CUDA >= 10.0

|

| 39 |

+

|

| 40 |

+

### Installation

|

| 41 |

+

|

| 42 |

+

``` sh

|

| 43 |

+

git clone https://github.com/VinAIResearch/blur-kernel-space-exploring.git

|

| 44 |

+

cd blur-kernel-space-exploring

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

conda create -n BlurKernelSpace -y python=3.7

|

| 48 |

+

conda activate BlurKernelSpace

|

| 49 |

+

conda install --file requirements.txt

|

| 50 |

+

```

|

| 51 |

+

|

| 52 |

+

## Training and evaluation

|

| 53 |

+

### Preparing datasets

|

| 54 |

+

You can download the datasets in the [model zoo section](#model-zoo).

|

| 55 |

+

|

| 56 |

+

To use your customized dataset, your dataset must be organized as follow:

|

| 57 |

+

```

|

| 58 |

+

root

|

| 59 |

+

├── blur_imgs

|

| 60 |

+

├── 000

|

| 61 |

+

├──── 00000000.png

|

| 62 |

+

├──── 00000001.png

|

| 63 |

+

├──── ...

|

| 64 |

+

├── 001

|

| 65 |

+

├──── 00000000.png

|

| 66 |

+

├──── 00000001.png

|

| 67 |

+

├──── ...

|

| 68 |

+

├── sharp_imgs

|

| 69 |

+

├── 000

|

| 70 |

+

├──── 00000000.png

|

| 71 |

+

├──── 00000001.png

|

| 72 |

+

├──── ...

|

| 73 |

+

├── 001

|

| 74 |

+

├──── 00000000.png

|

| 75 |

+

├──── 00000001.png

|

| 76 |

+

├──── ...

|

| 77 |

+

```

|

| 78 |

+

where `root`, `blur_imgs`, and `sharp_imgs` folders can have arbitrary names. For example, let `root, blur_imgs, sharp_imgs` be `REDS, train_blur, train_sharp` respectively (That is, you are using the REDS training set), then use the following scripts to create the lmdb dataset:

|

| 79 |

+

```sh

|

| 80 |

+

python create_lmdb.py --H 720 --W 1280 --C 3 --img_folder REDS/train_sharp --name train_sharp_wval --save_path ../datasets/REDS/train_sharp_wval.lmdb

|

| 81 |

+

python create_lmdb.py --H 720 --W 1280 --C 3 --img_folder REDS/train_blur --name train_blur_wval --save_path ../datasets/REDS/train_blur_wval.lmdb

|

| 82 |

+

```

|

| 83 |

+

where `(H, C, W)` is the shape of the images (note that all images in the dataset must have the same shape), `img_folder` is the folder that contains the images, `name` is the name of the dataset, and `save_path` is the save destination (`save_path` must end with `.lmdb`).

|

| 84 |

+

|

| 85 |

+

When the script is finished, two folders `train_sharp_wval.lmdb` and `train_blur_wval.lmdb` will be created in `./REDS`.

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

### Training

|

| 89 |

+

To do image deblurring, data augmentation, and blur generation, you first need to train the blur encoding network (The F function in the paper). This is the only network that you need to train. After creating the dataset, change the value of `dataroot_HQ` and `dataroot_LQ` in `options/kernel_encoding/REDS/woVAE.yml` to the paths of the sharp and blur lmdb datasets that were created before, then use the following script to train the model:

|

| 90 |

+

```

|

| 91 |

+

python train.py -opt options/kernel_encoding/REDS/woVAE.yml

|

| 92 |

+

```

|

| 93 |

+

|

| 94 |

+

where `opt` is the path to yaml file that contains training configurations. You can find some default configurations in the `options` folder. Checkpoints, training states, and logs will be saved in `experiments/modelName`. You can change the configurations (learning rate, hyper-parameters, network structure, etc) in the yaml file.

|

| 95 |

+

|

| 96 |

+

### Testing

|

| 97 |

+

#### Data augmentation

|

| 98 |

+

To augment a given dataset, first, create an lmdb dataset using `scripts/create_lmdb.py` as before. Then use the following script:

|

| 99 |

+

```

|

| 100 |

+

python data_augmentation.py --target_H=720 --target_W=1280 \

|

| 101 |

+

--source_H=720 --source_W=1280\

|

| 102 |

+

--augmented_H=256 --augmented_W=256\

|

| 103 |

+

--source_LQ_root=datasets/REDS/train_blur_wval.lmdb \

|

| 104 |

+

--source_HQ_root=datasets/REDS/train_sharp_wval.lmdb \

|

| 105 |

+

--target_HQ_root=datasets/REDS/test_sharp_wval.lmdb \

|

| 106 |

+

--save_path=results/GOPRO_augmented \

|

| 107 |

+

--num_images=10 \

|

| 108 |

+

--yml_path=options/data_augmentation/default.yml

|

| 109 |

+

```

|

| 110 |

+

`(target_H, target_W)`, `(source_H, source_W)`, and `(augmented_H, augmented_W)` are the desired shapes of the target images, source images, and augmented images respectively. `source_LQ_root`, `source_HQ_root`, and `target_HQ_root` are the paths of the lmdb datasets for the reference blur-sharp pairs and the input sharp images that were created before. `num_images` is the size of the augmented dataset. `model_path` is the path of the trained model. `yml_path` is the path to the model configuration file. Results will be saved in `save_path`.

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

|

| 114 |

+

#### Generate novel blur kernels

|

| 115 |

+

To generate a blur image given a sharp image, use the following command:

|

| 116 |

+

```sh

|

| 117 |

+

python generate_blur.py --yml_path=options/generate_blur/default.yml \

|

| 118 |

+

--image_path=imgs/sharp_imgs/mushishi.png \

|

| 119 |

+

--num_samples=10

|

| 120 |

+

--save_path=./res.png

|

| 121 |

+

```

|

| 122 |

+

where `model_path` is the path of the pre-trained model, `yml_path` is the path of the configuration file. `image_path` is the path of the sharp image. After running the script, a blur image corresponding to the sharp image will be saved in `save_path`. Here is some expected output:

|

| 123 |

+

|

| 124 |

+

**Note**: This only works with models that were trained with `--VAE` flag. The size of input images must be divisible by 128.

|

| 125 |

+

|

| 126 |

+

#### Generic Deblurring

|

| 127 |

+

To deblur a blurry image, use the following command:

|

| 128 |

+

```sh

|

| 129 |

+

python generic_deblur.py --image_path imgs/blur_imgs/blur1.png --yml_path options/generic_deblur/default.yml --save_path ./res.png

|

| 130 |

+

```

|

| 131 |

+

where `image_path` is the path of the blurry image. `yml_path` is the path of the configuration file. The deblurred image will be saved to `save_path`.

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

|

| 135 |

+

#### Deblurring using sharp image prior

|

| 136 |

+

[mapping]: https://drive.google.com/uc?id=14R6iHGf5iuVx3DMNsACAl7eBr7Vdpd0k

|

| 137 |

+

[synthesis]: https://drive.google.com/uc?id=1TCViX1YpQyRsklTVYEJwdbmK91vklCo8

|

| 138 |

+

[pretrained model]: https://drive.google.com/file/d/1PQutd-JboOCOZqmd95XWxWrO8gGEvRcO/view

|

| 139 |

+

First, you need to download the pre-trained styleGAN or styleGAN2 networks. If you want to use styleGAN, download the [mapping] and [synthesis] networks, then rename and copy them to `experiments/pretrained/stylegan_mapping.pt` and `experiments/pretrained/stylegan_synthesis.pt` respectively. If you want to use styleGAN2 instead, download the [pretrained model], then rename and copy it to `experiments/pretrained/stylegan2.pt`.

|

| 140 |

+

|

| 141 |

+

To deblur a blurry image using styleGAN latent space as the sharp image prior, you can use one of the following commands:

|

| 142 |

+

```sh

|

| 143 |

+

python domain_specific_deblur.py --input_dir imgs/blur_faces \

|

| 144 |

+

--output_dir experiments/domain_specific_deblur/results \

|

| 145 |

+

--yml_path options/domain_specific_deblur/stylegan.yml # Use latent space of stylegan

|

| 146 |

+

python domain_specific_deblur.py --input_dir imgs/blur_faces \

|

| 147 |

+

--output_dir experiments/domain_specific_deblur/results \

|

| 148 |

+

--yml_path options/domain_specific_deblur/stylegan2.yml # Use latent space of stylegan2

|

| 149 |

+

```

|

| 150 |

+

Results will be saved in `experiments/domain_specific_deblur/results`.

|

| 151 |

+

**Note**: Generally, the code still works with images that have the size divisible by 128. However, since our blur kernels are not uniform, the size of the kernel increases as the size of the image increases.

|

| 152 |

+

|

| 153 |

+

|

| 154 |

+

|

| 155 |

+

## Model Zoo

|

| 156 |

+

Pretrained models and corresponding datasets are provided in the below table. After downloading the datasets and models, follow the instructions in the [testing section](#testing) to do data augmentation, generating blur images, or image deblurring.

|

| 157 |

+

|

| 158 |

+

[REDS]: https://seungjunnah.github.io/Datasets/reds.html

|

| 159 |

+

[GOPRO]: https://seungjunnah.github.io/Datasets/gopro

|

| 160 |

+

|

| 161 |

+

[REDS woVAE]: https://drive.google.com/file/d/12ZhjXWcYhAZjBnMtF0ai0R5PQydZct61/view?usp=sharing

|

| 162 |

+

[GOPRO woVAE]: https://drive.google.com/file/d/1WrVALP-woJgtiZyvQ7NOkaZssHbHwKYn/view?usp=sharing

|

| 163 |

+

[GOPRO wVAE]: https://drive.google.com/file/d/1QMUY8mxUMgEJty2Gk7UY0WYmyyYRY7vS/view?usp=sharing

|

| 164 |

+

[GOPRO + REDS woVAE]: https://drive.google.com/file/d/169R0hEs3rNeloj-m1rGS4YjW38pu-LFD/view?usp=sharing

|

| 165 |

+

|

| 166 |

+

|Model name | dataset(s) | status |

|

| 167 |

+

|:-----------------------|:---------------:|-------------------------:|

|

| 168 |

+

|[REDS woVAE] | [REDS] | :heavy_check_mark: |

|

| 169 |

+

|[GOPRO woVAE] | [GOPRO] | :heavy_check_mark: |

|

| 170 |

+

|[GOPRO wVAE] | [GOPRO] | :heavy_check_mark: |

|

| 171 |

+

|[GOPRO + REDS woVAE] | [GOPRO], [REDS] | :heavy_check_mark: |

|

| 172 |

+

|

| 173 |

+

|

| 174 |

+

## Notes and references

|

| 175 |

+

The training code is borrowed from the EDVR project: https://github.com/xinntao/EDVR

|

| 176 |

+

|

| 177 |

+

The backbone code is borrowed from the DeblurGAN project: https://github.com/KupynOrest/DeblurGAN

|

| 178 |

+

|

| 179 |

+

The styleGAN code is borrowed from the PULSE project: https://github.com/adamian98/pulse

|

| 180 |

+

|

| 181 |

+

The stylegan2 code is borrowed from https://github.com/rosinality/stylegan2-pytorch

|

diffusion-posterior-sampling/bkse/data/GOPRO_dataset.py

ADDED

|

@@ -0,0 +1,135 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

GOPRO dataset

|

| 3 |

+

support reading images from lmdb, image folder and memcached

|

| 4 |

+

"""

|

| 5 |

+

import logging

|

| 6 |

+

import os.path as osp

|

| 7 |

+

import pickle

|

| 8 |

+

import random

|

| 9 |

+

|

| 10 |

+

import cv2

|

| 11 |

+

import data.util as util

|

| 12 |

+

import lmdb

|

| 13 |

+

import numpy as np

|

| 14 |

+

import torch

|

| 15 |

+

import torch.utils.data as data

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

try:

|

| 19 |

+

import mc # import memcached

|

| 20 |

+

except ImportError:

|

| 21 |

+

pass

|

| 22 |

+

|

| 23 |

+

logger = logging.getLogger("base")

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

class GOPRODataset(data.Dataset):

|

| 27 |

+

"""

|

| 28 |

+

Reading the training GOPRO dataset

|

| 29 |

+

key example: 000_00000000

|

| 30 |

+

HQ: Ground-Truth;

|

| 31 |

+

LQ: Low-Quality, e.g., low-resolution/blurry/noisy/compressed frames

|

| 32 |

+

support reading N LQ frames, N = 1, 3, 5, 7

|

| 33 |

+

"""

|

| 34 |

+

|

| 35 |

+

def __init__(self, opt):

|

| 36 |

+

super(GOPRODataset, self).__init__()

|

| 37 |

+

self.opt = opt

|

| 38 |

+

# temporal augmentation

|

| 39 |

+

|

| 40 |

+

self.HQ_root, self.LQ_root = opt["dataroot_HQ"], opt["dataroot_LQ"]

|

| 41 |

+

self.N_frames = opt["N_frames"]

|

| 42 |

+

self.data_type = self.opt["data_type"]

|

| 43 |

+

# directly load image keys

|

| 44 |

+

if self.data_type == "lmdb":

|

| 45 |

+

self.paths_HQ, _ = util.get_image_paths(self.data_type, opt["dataroot_HQ"])

|

| 46 |

+

logger.info("Using lmdb meta info for cache keys.")

|

| 47 |

+

elif opt["cache_keys"]:

|

| 48 |

+

logger.info("Using cache keys: {}".format(opt["cache_keys"]))

|

| 49 |

+

self.paths_HQ = pickle.load(open(opt["cache_keys"], "rb"))["keys"]

|

| 50 |

+

else:

|

| 51 |

+

raise ValueError(

|

| 52 |

+

"Need to create cache keys (meta_info.pkl) \

|

| 53 |

+

by running [create_lmdb.py]"

|

| 54 |

+

)

|

| 55 |

+

|

| 56 |

+

assert self.paths_HQ, "Error: HQ path is empty."

|

| 57 |

+

|

| 58 |

+

if self.data_type == "lmdb":

|

| 59 |

+

self.HQ_env, self.LQ_env = None, None

|

| 60 |

+

elif self.data_type == "mc": # memcached

|

| 61 |

+

self.mclient = None

|

| 62 |

+

elif self.data_type == "img":

|

| 63 |

+

pass

|

| 64 |

+

else:

|

| 65 |

+

raise ValueError("Wrong data type: {}".format(self.data_type))

|

| 66 |

+

|

| 67 |

+

def _init_lmdb(self):

|

| 68 |

+

# https://github.com/chainer/chainermn/issues/129

|

| 69 |

+

self.HQ_env = lmdb.open(self.opt["dataroot_HQ"], readonly=True, lock=False, readahead=False, meminit=False)

|

| 70 |

+

self.LQ_env = lmdb.open(self.opt["dataroot_LQ"], readonly=True, lock=False, readahead=False, meminit=False)

|

| 71 |

+

|

| 72 |

+

def _ensure_memcached(self):

|

| 73 |

+

if self.mclient is None:

|

| 74 |

+

# specify the config files

|

| 75 |

+

server_list_config_file = None

|

| 76 |

+

client_config_file = None

|

| 77 |

+

self.mclient = mc.MemcachedClient.GetInstance(server_list_config_file, client_config_file)

|

| 78 |

+

|

| 79 |

+

def _read_img_mc(self, path):

|

| 80 |

+

""" Return BGR, HWC, [0, 255], uint8"""

|

| 81 |

+

value = mc.pyvector()

|

| 82 |

+

self.mclient.Get(path, value)

|

| 83 |

+

value_buf = mc.ConvertBuffer(value)

|

| 84 |

+

img_array = np.frombuffer(value_buf, np.uint8)

|

| 85 |

+

img = cv2.imdecode(img_array, cv2.IMREAD_UNCHANGED)

|

| 86 |

+

return img

|

| 87 |

+

|

| 88 |

+

def _read_img_mc_BGR(self, path, name_a, name_b):

|

| 89 |

+

"""

|

| 90 |

+

Read BGR channels separately and then combine for 1M limits in cluster

|

| 91 |

+

"""

|

| 92 |

+

img_B = self._read_img_mc(osp.join(path + "_B", name_a, name_b + ".png"))

|

| 93 |

+

img_G = self._read_img_mc(osp.join(path + "_G", name_a, name_b + ".png"))

|

| 94 |

+

img_R = self._read_img_mc(osp.join(path + "_R", name_a, name_b + ".png"))

|

| 95 |

+

img = cv2.merge((img_B, img_G, img_R))

|

| 96 |

+

return img

|

| 97 |

+

|

| 98 |

+

def __getitem__(self, index):

|

| 99 |

+

if self.data_type == "mc":

|

| 100 |

+

self._ensure_memcached()

|

| 101 |

+

elif self.data_type == "lmdb" and (self.HQ_env is None or self.LQ_env is None):

|

| 102 |

+

self._init_lmdb()

|

| 103 |

+

|

| 104 |

+

HQ_size = self.opt["HQ_size"]

|

| 105 |

+

key = self.paths_HQ[index]

|

| 106 |

+

|

| 107 |

+

# get the HQ image (as the center frame)

|

| 108 |

+

img_HQ = util.read_img(self.HQ_env, key, (3, 720, 1280))

|

| 109 |

+

|

| 110 |

+

# get LQ images

|

| 111 |

+

img_LQ = util.read_img(self.LQ_env, key, (3, 720, 1280))

|

| 112 |

+

|

| 113 |

+

if self.opt["phase"] == "train":

|

| 114 |

+

_, H, W = 3, 720, 1280 # LQ size

|

| 115 |

+

# randomly crop

|

| 116 |

+

rnd_h = random.randint(0, max(0, H - HQ_size))

|

| 117 |

+

rnd_w = random.randint(0, max(0, W - HQ_size))

|

| 118 |

+

img_LQ = img_LQ[rnd_h : rnd_h + HQ_size, rnd_w : rnd_w + HQ_size, :]

|

| 119 |

+

img_HQ = img_HQ[rnd_h : rnd_h + HQ_size, rnd_w : rnd_w + HQ_size, :]

|

| 120 |

+

|

| 121 |

+

# augmentation - flip, rotate

|

| 122 |

+

imgs = [img_HQ, img_LQ]

|

| 123 |

+

rlt = util.augment(imgs, self.opt["use_flip"], self.opt["use_rot"])

|

| 124 |

+

img_HQ = rlt[0]

|

| 125 |

+

img_LQ = rlt[1]

|

| 126 |

+

|

| 127 |

+

# BGR to RGB, HWC to CHW, numpy to tensor

|

| 128 |

+

img_LQ = img_LQ[:, :, [2, 1, 0]]

|

| 129 |

+

img_HQ = img_HQ[:, :, [2, 1, 0]]

|

| 130 |

+

img_LQ = torch.from_numpy(np.ascontiguousarray(np.transpose(img_LQ, (2, 0, 1)))).float()

|

| 131 |

+

img_HQ = torch.from_numpy(np.ascontiguousarray(np.transpose(img_HQ, (2, 0, 1)))).float()

|

| 132 |

+

return {"LQ": img_LQ, "HQ": img_HQ, "key": key}

|

| 133 |

+

|

| 134 |

+

def __len__(self):

|

| 135 |

+

return len(self.paths_HQ)

|

diffusion-posterior-sampling/bkse/data/REDS_dataset.py

ADDED

|

@@ -0,0 +1,139 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

REDS dataset

|

| 3 |

+

support reading images from lmdb, image folder and memcached

|

| 4 |

+

"""

|

| 5 |

+

import logging

|

| 6 |

+

import os.path as osp

|

| 7 |

+

import pickle

|

| 8 |

+

import random

|

| 9 |

+

|

| 10 |

+

import cv2

|

| 11 |

+

import data.util as util

|

| 12 |

+

import lmdb

|

| 13 |

+

import numpy as np

|

| 14 |

+

import torch

|

| 15 |

+

import torch.utils.data as data

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

try:

|

| 19 |

+

import mc # import memcached

|

| 20 |

+

except ImportError:

|

| 21 |

+

pass

|

| 22 |

+

|

| 23 |

+

logger = logging.getLogger("base")

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

class REDSDataset(data.Dataset):

|

| 27 |

+

"""

|

| 28 |

+

Reading the training REDS dataset

|

| 29 |

+

key example: 000_00000000

|

| 30 |

+

HQ: Ground-Truth;

|

| 31 |

+

LQ: Low-Quality, e.g., low-resolution/blurry/noisy/compressed frames

|

| 32 |

+

support reading N LQ frames, N = 1, 3, 5, 7

|

| 33 |

+

"""

|

| 34 |

+

|

| 35 |

+

def __init__(self, opt):

|

| 36 |

+

super(REDSDataset, self).__init__()

|

| 37 |

+

self.opt = opt

|

| 38 |

+

# temporal augmentation

|

| 39 |

+

|

| 40 |

+

self.HQ_root, self.LQ_root = opt["dataroot_HQ"], opt["dataroot_LQ"]

|

| 41 |

+

self.N_frames = opt["N_frames"]

|

| 42 |

+

self.data_type = self.opt["data_type"]

|

| 43 |

+

# directly load image keys

|

| 44 |

+

if self.data_type == "lmdb":

|

| 45 |

+

self.paths_HQ, _ = util.get_image_paths(self.data_type, opt["dataroot_HQ"])

|

| 46 |

+

logger.info("Using lmdb meta info for cache keys.")

|

| 47 |

+

elif opt["cache_keys"]:

|

| 48 |

+

logger.info("Using cache keys: {}".format(opt["cache_keys"]))

|

| 49 |

+

self.paths_HQ = pickle.load(open(opt["cache_keys"], "rb"))["keys"]

|

| 50 |

+

else:

|

| 51 |

+

raise ValueError(

|

| 52 |

+

"Need to create cache keys (meta_info.pkl) \

|

| 53 |

+

by running [create_lmdb.py]"

|

| 54 |

+

)

|

| 55 |

+

|

| 56 |

+

# remove the REDS4 for testing

|

| 57 |

+

self.paths_HQ = [v for v in self.paths_HQ if v.split("_")[0] not in ["000", "011", "015", "020"]]

|

| 58 |

+

assert self.paths_HQ, "Error: HQ path is empty."

|

| 59 |

+

|

| 60 |

+

if self.data_type == "lmdb":

|

| 61 |

+

self.HQ_env, self.LQ_env = None, None

|

| 62 |

+

elif self.data_type == "mc": # memcached

|

| 63 |

+

self.mclient = None

|

| 64 |

+

elif self.data_type == "img":

|

| 65 |

+

pass

|

| 66 |

+

else:

|

| 67 |

+

raise ValueError("Wrong data type: {}".format(self.data_type))

|

| 68 |

+

|

| 69 |

+

def _init_lmdb(self):

|

| 70 |

+

# https://github.com/chainer/chainermn/issues/129

|

| 71 |

+

self.HQ_env = lmdb.open(self.opt["dataroot_HQ"], readonly=True, lock=False, readahead=False, meminit=False)

|

| 72 |

+

self.LQ_env = lmdb.open(self.opt["dataroot_LQ"], readonly=True, lock=False, readahead=False, meminit=False)

|

| 73 |

+

|

| 74 |

+

def _ensure_memcached(self):

|

| 75 |

+

if self.mclient is None:

|

| 76 |

+

# specify the config files

|

| 77 |

+

server_list_config_file = None

|

| 78 |

+

client_config_file = None

|

| 79 |

+

self.mclient = mc.MemcachedClient.GetInstance(server_list_config_file, client_config_file)

|

| 80 |

+

|

| 81 |

+

def _read_img_mc(self, path):

|

| 82 |

+

""" Return BGR, HWC, [0, 255], uint8"""

|

| 83 |

+

value = mc.pyvector()

|

| 84 |

+

self.mclient.Get(path, value)

|

| 85 |

+

value_buf = mc.ConvertBuffer(value)

|

| 86 |

+

img_array = np.frombuffer(value_buf, np.uint8)

|

| 87 |

+

img = cv2.imdecode(img_array, cv2.IMREAD_UNCHANGED)

|

| 88 |

+

return img

|

| 89 |

+

|

| 90 |

+

def _read_img_mc_BGR(self, path, name_a, name_b):

|

| 91 |

+

"""

|

| 92 |

+

Read BGR channels separately and then combine for 1M limits in cluster

|

| 93 |

+

"""

|

| 94 |

+

img_B = self._read_img_mc(osp.join(path + "_B", name_a, name_b + ".png"))

|

| 95 |

+

img_G = self._read_img_mc(osp.join(path + "_G", name_a, name_b + ".png"))

|

| 96 |

+

img_R = self._read_img_mc(osp.join(path + "_R", name_a, name_b + ".png"))

|

| 97 |

+

img = cv2.merge((img_B, img_G, img_R))

|

| 98 |

+

return img

|

| 99 |

+

|

| 100 |

+

def __getitem__(self, index):

|

| 101 |

+

if self.data_type == "mc":

|

| 102 |

+

self._ensure_memcached()

|

| 103 |

+

elif self.data_type == "lmdb" and (self.HQ_env is None or self.LQ_env is None):

|

| 104 |

+

self._init_lmdb()

|

| 105 |

+

|

| 106 |

+

HQ_size = self.opt["HQ_size"]

|

| 107 |

+

key = self.paths_HQ[index]

|

| 108 |

+

name_a, name_b = key.split("_")

|

| 109 |

+

|

| 110 |

+

# get the HQ image

|

| 111 |

+

img_HQ = util.read_img(self.HQ_env, key, (3, 720, 1280))

|

| 112 |

+

|

| 113 |

+

# get the LQ image

|

| 114 |

+

img_LQ = util.read_img(self.LQ_env, key, (3, 720, 1280))

|

| 115 |

+

|

| 116 |

+

if self.opt["phase"] == "train":

|

| 117 |

+

_, H, W = 3, 720, 1280 # LQ size

|

| 118 |

+

# randomly crop

|

| 119 |

+

rnd_h = random.randint(0, max(0, H - HQ_size))

|

| 120 |

+

rnd_w = random.randint(0, max(0, W - HQ_size))

|

| 121 |

+

img_LQ = img_LQ[rnd_h : rnd_h + HQ_size, rnd_w : rnd_w + HQ_size, :]

|

| 122 |

+

img_HQ = img_HQ[rnd_h : rnd_h + HQ_size, rnd_w : rnd_w + HQ_size, :]

|

| 123 |

+

|

| 124 |

+

# augmentation - flip, rotate

|

| 125 |

+

imgs = [img_HQ, img_LQ]

|

| 126 |

+

rlt = util.augment(imgs, self.opt["use_flip"], self.opt["use_rot"])

|

| 127 |

+

img_HQ = rlt[0]

|

| 128 |

+

img_LQ = rlt[1]

|

| 129 |

+

|

| 130 |

+

# BGR to RGB, HWC to CHW, numpy to tensor

|

| 131 |

+

img_LQ = img_LQ[:, :, [2, 1, 0]]

|

| 132 |

+

img_HQ = img_HQ[:, :, [2, 1, 0]]

|

| 133 |

+

img_LQ = torch.from_numpy(np.ascontiguousarray(np.transpose(img_LQ, (2, 0, 1)))).float()

|

| 134 |

+

img_HQ = torch.from_numpy(np.ascontiguousarray(np.transpose(img_HQ, (2, 0, 1)))).float()

|

| 135 |

+

|

| 136 |

+

return {"LQ": img_LQ, "HQ": img_HQ}

|

| 137 |

+

|

| 138 |

+

def __len__(self):

|

| 139 |

+

return len(self.paths_HQ)

|

diffusion-posterior-sampling/bkse/data/__init__.py

ADDED

|

@@ -0,0 +1,53 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""create dataset and dataloader"""

|

| 2 |

+

import logging

|

| 3 |

+

|

| 4 |

+

import torch

|

| 5 |

+

import torch.utils.data

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

def create_dataloader(dataset, dataset_opt, opt=None, sampler=None):

|

| 9 |

+

phase = dataset_opt["phase"]

|

| 10 |

+

if phase == "train":

|

| 11 |

+

if opt["dist"]:

|

| 12 |

+

world_size = torch.distributed.get_world_size()

|

| 13 |

+

num_workers = dataset_opt["n_workers"]

|

| 14 |

+

assert dataset_opt["batch_size"] % world_size == 0

|

| 15 |

+

batch_size = dataset_opt["batch_size"] // world_size

|

| 16 |

+

shuffle = False

|

| 17 |

+

else:

|

| 18 |

+

num_workers = dataset_opt["n_workers"] * len(opt["gpu_ids"])

|

| 19 |

+

batch_size = dataset_opt["batch_size"]

|

| 20 |

+

shuffle = True

|

| 21 |

+

return torch.utils.data.DataLoader(

|

| 22 |

+

dataset,

|

| 23 |

+

batch_size=batch_size,

|

| 24 |

+

shuffle=shuffle,

|

| 25 |

+

num_workers=num_workers,

|

| 26 |

+

sampler=sampler,

|

| 27 |

+

drop_last=True,

|

| 28 |

+

pin_memory=False,

|

| 29 |

+

)

|

| 30 |

+

else:

|

| 31 |

+

return torch.utils.data.DataLoader(dataset, batch_size=1, shuffle=False, num_workers=1, pin_memory=False)

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

def create_dataset(dataset_opt):

|

| 35 |

+

mode = dataset_opt["mode"]

|

| 36 |

+

# datasets for image restoration

|

| 37 |

+

if mode == "REDS":

|

| 38 |

+

from data.REDS_dataset import REDSDataset as D

|

| 39 |

+

elif mode == "GOPRO":

|

| 40 |

+

from data.GOPRO_dataset import GOPRODataset as D

|

| 41 |

+

elif mode == "fewshot":

|

| 42 |

+

from data.fewshot_dataset import FewShotDataset as D

|

| 43 |

+

elif mode == "levin":

|

| 44 |

+

from data.levin_dataset import LevinDataset as D

|

| 45 |

+

elif mode == "mix":

|

| 46 |

+

from data.mix_dataset import MixDataset as D

|

| 47 |

+

else:

|

| 48 |

+

raise NotImplementedError(f"Dataset {mode} is not recognized.")

|

| 49 |

+

dataset = D(dataset_opt)

|

| 50 |

+

|

| 51 |

+

logger = logging.getLogger("base")

|

| 52 |

+

logger.info("Dataset [{:s} - {:s}] is created.".format(dataset.__class__.__name__, dataset_opt["name"]))

|

| 53 |

+

return dataset

|

diffusion-posterior-sampling/bkse/data/data_sampler.py

ADDED

|

@@ -0,0 +1,72 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|