Spaces:

Runtime error

Runtime error

Upload 15 files

Browse files- alignscore/LICENSE +21 -0

- alignscore/README.md +216 -0

- alignscore/alignscore_fig.png +0 -0

- alignscore/baselines.py +704 -0

- alignscore/benchmark.py +494 -0

- alignscore/evaluate.py +1793 -0

- alignscore/generate_training_data.py +1519 -0

- alignscore/pyproject.toml +41 -0

- alignscore/requirements.txt +9 -0

- alignscore/src/alignscore/__init__.py +1 -0

- alignscore/src/alignscore/alignscore.py +16 -0

- alignscore/src/alignscore/dataloader.py +610 -0

- alignscore/src/alignscore/inference.py +293 -0

- alignscore/src/alignscore/model.py +308 -0

- alignscore/train.py +144 -0

alignscore/LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

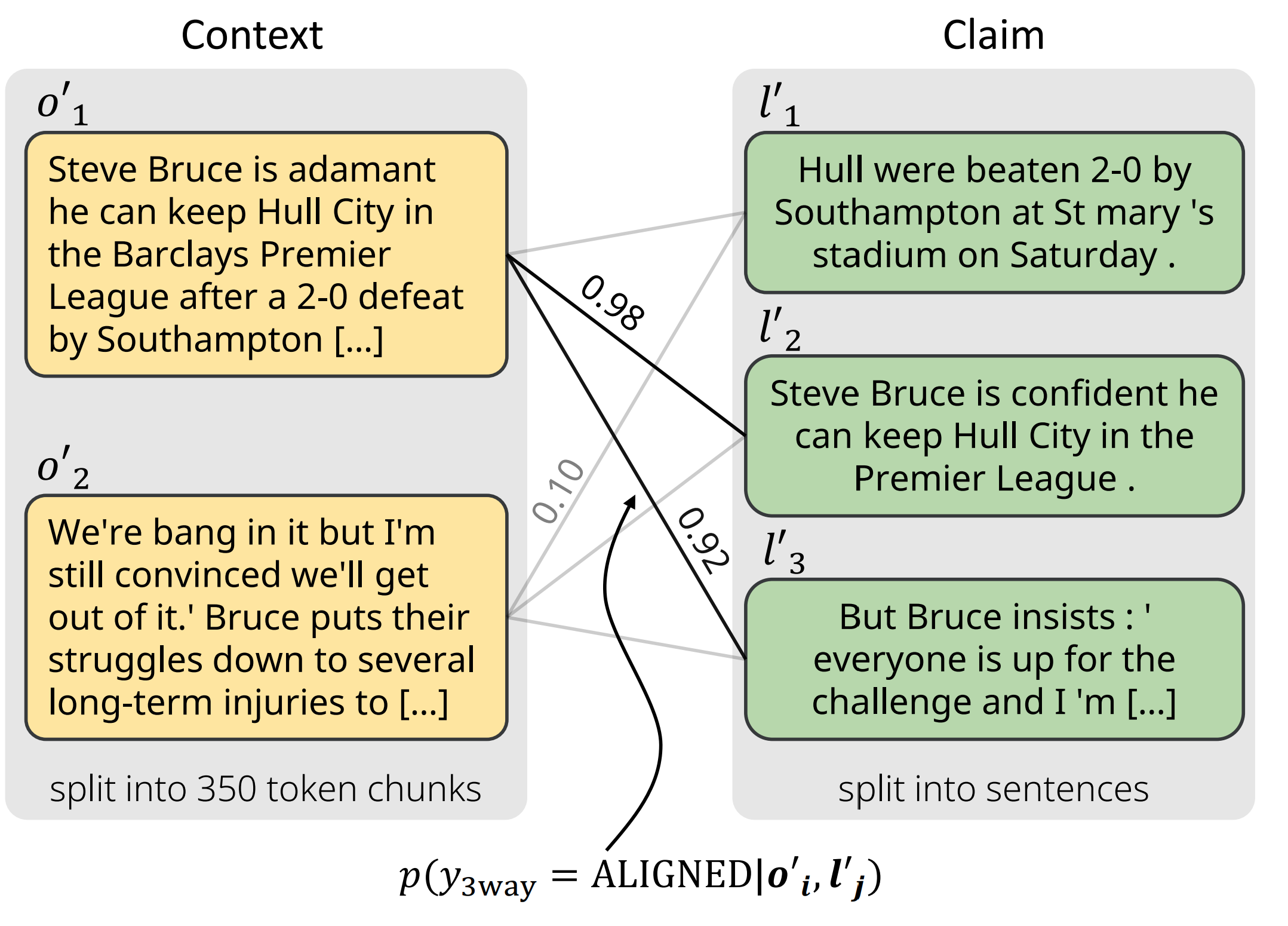

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2023 yuh-zha

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

alignscore/README.md

ADDED

|

@@ -0,0 +1,216 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# AlignScore

|

| 2 |

+

This is the repository for AlignScore, a metric for automatic factual consistency evaluation of text pairs introduced in \

|

| 3 |

+

[AlignScore: Evaluating Factual Consistency with a Unified Alignment Function](https://arxiv.org/abs/2305.16739) \

|

| 4 |

+

Yuheng Zha, Yichi Yang, Ruichen Li and Zhiting Hu \

|

| 5 |

+

ACL 2023

|

| 6 |

+

|

| 7 |

+

**Factual consistency evaluation** is to evaluate whether all the information in **b** is contained in **a** (**b** does not contradict **a**). For example, this is a factual inconsistent case:

|

| 8 |

+

|

| 9 |

+

* **a**: Children smiling and waving at camera.

|

| 10 |

+

* **b**: The kids are frowning.

|

| 11 |

+

|

| 12 |

+

And this is a factual consistent case:

|

| 13 |

+

|

| 14 |

+

* **a**: The NBA season of 1975 -- 76 was the 30th season of the National Basketball Association.

|

| 15 |

+

* **b**: The 1975 -- 76 season of the National Basketball Association was the 30th season of the NBA.

|

| 16 |

+

|

| 17 |

+

Factual consistency evaluation can be applied to many tasks like Summarization, Paraphrase and Dialog. For example, large language models often generate hallucinations when summarizing documents. We wonder if the generated text is factual consistent to its original context.

|

| 18 |

+

|

| 19 |

+

# Leaderboards

|

| 20 |

+

We introduce two leaderboards that compare AlignScore with similar-sized metrics and LLM-based metrics, respectively.

|

| 21 |

+

## Leaderboard --- compare with similar-sized metrics

|

| 22 |

+

|

| 23 |

+

We list the performance of AlignScore as well as other metrics on the SummaC (includes 6 datasets) and TRUE (includes 11 datasets) benchmarks, as well as other popular factual consistency datasets (include 6 datasets).

|

| 24 |

+

|

| 25 |

+

| Rank | Metrics | SummaC* | TRUE** | Other Datasets*** | Average**** | Paper | Code |

|

| 26 |

+

| ---- | :--------------- | :-----: | :----: | :------------: | :-----: | :---: | :--: |

|

| 27 |

+

| 1 | **AlignScore-large** | 88.6 | 83.8 | 49.3 | 73.9 | [:page\_facing\_up:(Zha et al. 2023)](https://arxiv.org/pdf/2305.16739.pdf) | [:octocat:](https://github.com/yuh-zha/AlignScore) |

|

| 28 |

+

| 2 | **AlignScore-base** | 87.4 | 82.5 | 44.9 | 71.6 | [:page\_facing\_up:(Zha et al. 2023)](https://arxiv.org/pdf/2305.16739.pdf) | [:octocat:](https://github.com/yuh-zha/AlignScore) |

|

| 29 |

+

| 3 | QAFactEval | 83.8 | 79.4 | 42.4 | 68.5 | [:page\_facing\_up:(Fabbri et al. 2022)](https://arxiv.org/abs/2112.08542) | [:octocat:](https://github.com/salesforce/QAFactEval) |

|

| 30 |

+

| 4 | UniEval | 84.6 | 78.0 | 41.5 | 68.0 | [:page\_facing\_up:(Zhong et al. 2022)](https://arxiv.org/abs/2210.07197) | [:octocat:](https://github.com/maszhongming/UniEval) |

|

| 31 |

+

| 5 | SummaC-CONV | 81.0 | 78.7 | 34.2 | 64.6 | [:page\_facing\_up:(Laban et al. 2022)](https://arxiv.org/abs/2111.09525) | [:octocat:](https://github.com/tingofurro/summac) |

|

| 32 |

+

| 6 | BARTScore | 80.9 | 73.4 | 34.8 | 63.0 | [:page\_facing\_up:(Yuan et al. 2022)](https://arxiv.org/abs/2106.11520) | [:octocat:](https://github.com/neulab/BARTScore) |

|

| 33 |

+

| 7 | CTC | 81.2 | 72.4 | 35.3 | 63.0 | [:page\_facing\_up:(Deng et al. 2022)](https://arxiv.org/abs/2109.06379) | [:octocat:](https://github.com/tanyuqian/ctc-gen-eval) |

|

| 34 |

+

| 8 | SummaC-ZS | 79.0 | 78.2 | 30.4 | 62.5 | [:page\_facing\_up:(Laban et al. 2022)](https://arxiv.org/abs/2111.09525) | [:octocat:](https://github.com/tingofurro/summac) |

|

| 35 |

+

| 9 | ROUGE-2 | 78.1 | 72.4 | 27.9 | 59.5 | [:page\_facing\_up:(Lin 2004)](https://aclanthology.org/W04-1013/) | [:octocat:](https://github.com/pltrdy/rouge) |

|

| 36 |

+

| 10 | ROUGE-1 | 77.4 | 72.0 | 28.6 | 59.3 | [:page\_facing\_up:(Lin 2004)](https://aclanthology.org/W04-1013/) | [:octocat:](https://github.com/pltrdy/rouge) |

|

| 37 |

+

| 11 | ROUGE-L | 77.3 | 71.8 | 28.3 | 59.1 | [:page\_facing\_up:(Lin 2004)](https://aclanthology.org/W04-1013/) | [:octocat:](https://github.com/pltrdy/rouge) |

|

| 38 |

+

| 12 | QuestEval | 72.5 | 71.4 | 25.0 | 56.3 | [:page\_facing\_up:(Scialom et al. 2021)](https://arxiv.org/abs/2103.12693) | [:octocat:](https://github.com/ThomasScialom/QuestEval) |

|

| 39 |

+

| 13 | BLEU | 76.3 | 67.3 | 24.6 | 56.1 | [:page\_facing\_up:(Papineni et al. 2002)](https://aclanthology.org/P02-1040/) | [:octocat:](https://www.nltk.org/_modules/nltk/translate/bleu_score.html) |

|

| 40 |

+

| 14 | DAE | 66.8 | 65.7 | 35.1 | 55.8 | [:page\_facing\_up:(Goyal and Durrett 2020)](https://aclanthology.org/2020.findings-emnlp.322/) | [:octocat:](https://github.com/tagoyal/dae-factuality) |

|

| 41 |

+

| 15 | BLEURT | 69.2 | 71.9 | 24.9 | 55.4 | [:page\_facing\_up:(Sellam et al. 2020)](https://arxiv.org/abs/2004.04696) | [:octocat:](https://github.com/google-research/bleurt) |

|

| 42 |

+

| 16 | BERTScore | 72.1 | 68.6 | 21.9 | 54.2 | [:page\_facing\_up:(Zhang et al. 2020)](https://arxiv.org/abs/1904.09675) | [:octocat:](https://github.com/Tiiiger/bert_score) |

|

| 43 |

+

| 17 | SimCSE | 67.4 | 70.3 | 23.8 | 53.8 | [:page\_facing\_up:(Gao et al. 2021)](https://arxiv.org/abs/2104.08821) | [:octocat:](https://github.com/princeton-nlp/SimCSE) |

|

| 44 |

+

| 18 | FactCC | 68.8 | 62.7 | 21.2 | 50.9 | [:page\_facing\_up:(Kryscinski et al. 2020)](https://arxiv.org/abs/1910.12840) | [:octocat:](https://github.com/salesforce/factCC) |

|

| 45 |

+

| 19 | BLANC | 65.1 | 64.0 | 14.4 | 47.8 | [:page\_facing\_up:(Vasilyev et al. 2020)](https://arxiv.org/abs/2002.09836) | [:octocat:](https://github.com/PrimerAI/blanc) |

|

| 46 |

+

| 20 | NER-Overlap | 60.4 | 59.3 | 18.9 | 46.2 | [:page\_facing\_up:(Laban et al. 2022)](https://arxiv.org/abs/2111.09525) | [:octocat:](https://github.com/tingofurro/summac) |

|

| 47 |

+

| 21 | MNLI | 47.9 | 60.4 | 3.1 | 37.2 | [:page\_facing\_up:(Williams et al. 2018)](https://arxiv.org/abs/1704.05426) | [:octocat:](https://github.com/nyu-mll/multiNLI) |

|

| 48 |

+

| 22 | FEQA | 48.3 | 52.2 | -1.9 | 32.9 | [:page\_facing\_up:(Durmus et al. 2020)](https://arxiv.org/abs/2005.03754) | [:octocat:](https://github.com/esdurmus/feqa) |

|

| 49 |

+

|

| 50 |

+

\* SummaC Benchmark: [\[Paper\]](https://arxiv.org/abs/2111.09525) \| [\[Github\]](https://github.com/tingofurro/summac). We report AUC ROC on the SummaC benchmark.

|

| 51 |

+

|

| 52 |

+

** TRUE Benchmark: [\[Paper\]](https://arxiv.org/abs/2204.04991) \| [\[Github\]](https://github.com/google-research/true). We report AUC ROC on the TRUE benchmark.

|

| 53 |

+

|

| 54 |

+

*** Besides the SummaC and TRUE benchmarks, we also include other popular factual consistency evaluation datasets: [XSumFaith](https://doi.org/10.18653/v1/2020.acl-main.173), [SummEval](https://doi.org/10.1162/tacl_a_00373), [QAGS-XSum](https://doi.org/10.18653/v1/2020.acl-main.450), [QAGS-CNNDM](https://doi.org/10.18653/v1/2020.acl-main.450), [FRANK-XSum](https://doi.org/10.18653/v1/2021.naacl-main.383), [FRANK-CNNDM](https://doi.org/10.18653/v1/2021.naacl-main.383) and [SamSum](https://doi.org/10.18653/v1/D19-5409). We compute the Spearman Correlation coefficients between the human annotated score and the metric predicted score, following common practice.

|

| 55 |

+

|

| 56 |

+

**** To rank these metrics, we simply compute the average performance of SummaC, TRUE and Other Datasets.

|

| 57 |

+

|

| 58 |

+

## Leaderboard --- compare with LLM-based metrics

|

| 59 |

+

|

| 60 |

+

We also show the performance comparison with large-language-model based metrics below. The rank is based on the average Spearman Correlation coefficients on SummEval, QAGS-XSum and QAGS-CNNDM datasets.*

|

| 61 |

+

|

| 62 |

+

| Rank | Metrics | Base Model | SummEval | QAGS-XSUM | QAGS-CNNDM | Average | Paper | Code |

|

| 63 |

+

| :--- | :-------------------- | :----------------------------------------------------------- | :------: | :-------: | :--------: | :--: | :----------------------------------------------------------: | :----------------------------------------------------------: |

|

| 64 |

+

| 1 | **AlignScore-large** | RoBERTa-l (355M) | 46.6 | 57.2 | 73.9 | 59.3 | [:page\_facing\_up:(Zha et al. 2023)](https://arxiv.org/pdf/2305.16739.pdf) | [:octocat:](https://github.com/yuh-zha/AlignScore) |

|

| 65 |

+

| 2 | G-EVAL-4 | GPT4 | 50.7 | 53.7 | 68.5 | 57.6 | [:page\_facing\_up:(Liu et al. 2023)](https://arxiv.org/pdf/2303.16634.pdf) | [:octocat:](https://github.com/nlpyang/geval) |

|

| 66 |

+

| 3 | **AlignScore-base** | RoBERTa-b (125M) | 43.4 | 51.9 | 69.0 | 54.8 | [:page\_facing\_up:(Zha et al. 2023)](https://arxiv.org/pdf/2305.16739.pdf) | [:octocat:](https://github.com/yuh-zha/AlignScore) |

|

| 67 |

+

| 4 | FActScore (modified)** | GPT3.5-d03 + GPT3.5-turbo | 52.6 | 51.2 | 57.6 | 53.8 | [:page\_facing\_up:(Min et al. 2023)](https://arxiv.org/pdf/2305.14251.pdf) | [:octocat:](https://github.com/shmsw25/FActScore)* |

|

| 68 |

+

| 5 | ChatGPT (Chen et al. 2023) | GPT3.5-turbo | 42.7 | 53.3 | 52.7 | 49.6 | [:page\_facing\_up:(Yi Chen et al. 2023)](https://arxiv.org/pdf/2305.14069.pdf) | [:octocat:](https://github.com/SJTU-LIT/llmeval_sum_factual) |

|

| 69 |

+

| 6 | GPTScore | GPT3.5-d03 | 45.9 | 22.7 | 64.4 | 44.3 | [:page\_facing\_up:(Fu et al. 2023)](https://arxiv.org/pdf/2302.04166.pdf) | [:octocat:](https://github.com/jinlanfu/GPTScore) |

|

| 70 |

+

| 7 | GPTScore | GPT3-d01 | 46.1 | 22.3 | 63.9 | 44.1 | [:page\_facing\_up:(Fu et al. 2023)](https://arxiv.org/pdf/2302.04166.pdf) | [:octocat:](https://github.com/jinlanfu/GPTScore) |

|

| 71 |

+

| 8 | G-EVAL-3.5 | GPT3.5-d03 | 38.6 | 40.6 | 51.6 | 43.6 | [:page\_facing\_up:(Liu et al. 2023)](https://arxiv.org/pdf/2303.16634.pdf) | [:octocat:](https://github.com/nlpyang/geval) |

|

| 72 |

+

| 9 | ChatGPT (Gao et al. 2023) | GPT3.5-turbo | 41.6 | 30.4 | 48.9 | 40.3 | [:page\_facing\_up:(Gao et al. 2023)](https://arxiv.org/pdf/2304.02554.pdf) | - |

|

| 73 |

+

|

| 74 |

+

\* We notice that evaluating factual consistency using GPT-based models is expensive and slow. And we need human labor to interpret the response (generally text) to numerical scores. Therefore, we only benchmark on 3 popular factual consistency evaluation datasets: SummEval, QAGS-XSum and QAGS-CNNDM.

|

| 75 |

+

|

| 76 |

+

*\* We use a modified version of FActScore `retrieval+ChatGPT` where we skip the retrieval stage and use the context documents in SummEval, QAGS-XSUM, and QAGS-CNNDM directly. As samples in theses datasets do not have "topics", we make a small modification to the original FActScore prompt and do not mention `topic` when not available. See [our fork of FActScore](https://github.com/yichi-yang/FActScore) for more details.

|

| 77 |

+

|

| 78 |

+

# Introduction

|

| 79 |

+

|

| 80 |

+

The AlignScore metric is an automatic factual consistency evaluation metric built with the following parts:

|

| 81 |

+

|

| 82 |

+

* Unified information alignment function between two arbitrary text pieces: It is trained on 4.7 million training examples from 7 well-established tasks (NLI, QA, paraphrasing, fact verification, information retrieval, semantic textual similarity and summarization)

|

| 83 |

+

|

| 84 |

+

* The chunk-sentence splitting method: The input context is splitted into chunks (contains roughly 350 tokens each) and the input claim is splitted into sentences. With the help of the alignment function, it's possible to know the alignment score between chunks and sentences. We pick the maximum alignment score for each sentence and then average these scores to get the example-level factual consistency score (AlignScore).

|

| 85 |

+

|

| 86 |

+

<div align=center>

|

| 87 |

+

<img src="./alignscore_fig.png" alt="alignscore_fig" width="500px" />

|

| 88 |

+

</div>

|

| 89 |

+

|

| 90 |

+

We assume there are two inputs to the metric, namely `context` and `claim`. And the metric evaluates whether the `claim` is factual consistent with the `context`. The output of AlignScore is a single numerical value, which shows the degree of the factual consistency.

|

| 91 |

+

# Installation

|

| 92 |

+

|

| 93 |

+

Our models are trained and evaluated using PyTorch 1.12.1. We recommend using this version to reproduce the results.

|

| 94 |

+

|

| 95 |

+

1. Please first install the right version of PyTorch before installing `alignscore`.

|

| 96 |

+

2. You can install `alignscore` by cloning this repository and `pip install .`.

|

| 97 |

+

3. After installing `alignscore`, please use `python -m spacy download en_core_web_sm` to install the required spaCy model (we use `spaCy` for sentenization).

|

| 98 |

+

|

| 99 |

+

# Evaluating Factual Consistency

|

| 100 |

+

To evaluate the factual consistency of the `claim` w.r.t. the `context`, simply use the score method of `AlignScore`.

|

| 101 |

+

```python

|

| 102 |

+

from alignscore import AlignScore

|

| 103 |

+

|

| 104 |

+

scorer = AlignScore(model='roberta-base', batch_size=32, device='cuda:0', ckpt_path='/path/to/checkpoint', evaluation_mode='nli_sp')

|

| 105 |

+

score = scorer.score(contexts=['hello world.'], claims=['hello world.'])

|

| 106 |

+

```

|

| 107 |

+

`model`: the backbone model of the metric. Now, we only provide the metric trained on RoBERTa

|

| 108 |

+

|

| 109 |

+

`batch_size`: the batch size of the inference

|

| 110 |

+

|

| 111 |

+

`device`: which device to run the metric

|

| 112 |

+

|

| 113 |

+

`ckpt_path`: the path to the checkpoint

|

| 114 |

+

|

| 115 |

+

`evaluation_mode`: choose from `'nli_sp', 'nli', 'bin_sp', 'bin'`. `nli` and `bin` refer to the 3-way and binary classficiation head, respectively. `sp` indicates if the chunk-sentence splitting method is used. `nli_sp` is the default setting of AlignScore

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

# Checkpoints

|

| 119 |

+

We provide two versions of the AlignScore checkpoints: `AlignScore-base` and `AlignScore-large`. The `-base` model is based on RoBERTa-base and has 125M parameters. The `-large` model is based on RoBERTa-large and has 355M parameters.

|

| 120 |

+

|

| 121 |

+

**AlignScore-base**:

|

| 122 |

+

https://huggingface.co/yzha/AlignScore/resolve/main/AlignScore-base.ckpt

|

| 123 |

+

|

| 124 |

+

**AlignScore-large**:

|

| 125 |

+

https://huggingface.co/yzha/AlignScore/resolve/main/AlignScore-large.ckpt

|

| 126 |

+

|

| 127 |

+

# Training

|

| 128 |

+

You can use the above checkpoints directly for factual consistency evaluation. However, if you wish to train an alignment model from scratch / on your own data, use `train.py`.

|

| 129 |

+

```python

|

| 130 |

+

python train.py --seed 2022 --batch-size 32 \

|

| 131 |

+

--num-epoch 3 --devices 0 1 2 3 \

|

| 132 |

+

--model-name roberta-large -- ckpt-save-path ./ckpt/ \

|

| 133 |

+

--data-path ./data/training_sets/ \

|

| 134 |

+

--max-samples-per-dataset 500000

|

| 135 |

+

```

|

| 136 |

+

|

| 137 |

+

`--seed`: the random seed for initialization

|

| 138 |

+

|

| 139 |

+

`--batch-size`: the batch size for training

|

| 140 |

+

|

| 141 |

+

`--num-epoch`: training epochs

|

| 142 |

+

|

| 143 |

+

`--devices`: which devices to train the metric, a list of GPU ids

|

| 144 |

+

|

| 145 |

+

`--model-name`: the backbone model name of the metric, default RoBERTa-large

|

| 146 |

+

|

| 147 |

+

`--ckpt-save-path`: the path to save the checkpoint

|

| 148 |

+

|

| 149 |

+

`--training-datasets`: the names of the training datasets

|

| 150 |

+

|

| 151 |

+

`--data-path`: the path to the training datasets

|

| 152 |

+

|

| 153 |

+

`--max-samples-per-dataset`: the maximum number of samples from a dataset

|

| 154 |

+

|

| 155 |

+

# Benchmarking

|

| 156 |

+

Our benchmark includes the TRUE and SummaC benchmark as well as several popular factual consistency evaluation datasets.

|

| 157 |

+

|

| 158 |

+

To run the benchmark, a few additional dependencies are required and can be installed with `pip install -r requirements.txt`.

|

| 159 |

+

Additionally, some depedencies are not available as packages and need to be downloaded manually (please see `python benchmark.py --help` for instructions).

|

| 160 |

+

|

| 161 |

+

Note installing `summac` may cause dependency conflicts with `alignscore`. Please reinstall `alignscore` to force the correct dependency versions.

|

| 162 |

+

|

| 163 |

+

The relevant arguments for evaluating AlignScore are:

|

| 164 |

+

|

| 165 |

+

`--alignscore`: evaluation the AlignScore metric

|

| 166 |

+

|

| 167 |

+

`--alignscore-model`: the name of the backbone model (either 'roberta-base' or 'roberta-large')

|

| 168 |

+

|

| 169 |

+

`--alignscore-ckpt`: the path to the saved checkpoint

|

| 170 |

+

|

| 171 |

+

`--alignscore-eval-mode`: the evaluation mode, defaults to `nli_sp`

|

| 172 |

+

|

| 173 |

+

`--device`: which device to run the metric, defaults to `cuda:0`

|

| 174 |

+

|

| 175 |

+

`--tasks`: which tasks to benchmark, e.g., SummEval, QAGS-CNNDM, ...

|

| 176 |

+

|

| 177 |

+

For the baselines, please see `python benchmark.py --help` for details.

|

| 178 |

+

|

| 179 |

+

## Training datasets download

|

| 180 |

+

Most datasets are downloadable from Huggingface (refer to [`generate_training_data.py`](https://github.com/yuh-zha/AlignScore/blob/main/generate_training_data.py)). Some datasets that needed to be imported manually are now also avaialable on Huggingface (See [Issue](https://github.com/yuh-zha/AlignScore/issues/6#issuecomment-1695448614)).

|

| 181 |

+

|

| 182 |

+

## Evaluation datasets download

|

| 183 |

+

|

| 184 |

+

The following table shows the links to the evaluation datasets mentioned in the paper

|

| 185 |

+

|

| 186 |

+

| Benchmark/Dataset | Link |

|

| 187 |

+

| ----------------- | ------------------------------------------------------------ |

|

| 188 |

+

| SummaC | https://github.com/tingofurro/summac |

|

| 189 |

+

| TRUE | https://github.com/google-research/true |

|

| 190 |

+

| XSumFaith | https://github.com/google-research-datasets/xsum_hallucination_annotations |

|

| 191 |

+

| SummEval | https://github.com/tanyuqian/ctc-gen-eval/blob/master/train/data/summeval.json |

|

| 192 |

+

| QAGS-Xsum | https://github.com/tanyuqian/ctc-gen-eval/blob/master/train/data/qags_xsum.json |

|

| 193 |

+

| QAGS-CNNDM | https://github.com/tanyuqian/ctc-gen-eval/blob/master/train/data/qags_cnndm.json |

|

| 194 |

+

| FRANK-XSum | https://github.com/artidoro/frank |

|

| 195 |

+

| FRANK-CNNDM | https://github.com/artidoro/frank |

|

| 196 |

+

| SamSum | https://github.com/skgabriel/GoFigure/blob/main/human_eval/samsum.jsonl |

|

| 197 |

+

|

| 198 |

+

# Citation

|

| 199 |

+

If you find the metric and this repo helpful, please consider cite:

|

| 200 |

+

```

|

| 201 |

+

@inproceedings{zha-etal-2023-alignscore,

|

| 202 |

+

title = "{A}lign{S}core: Evaluating Factual Consistency with A Unified Alignment Function",

|

| 203 |

+

author = "Zha, Yuheng and

|

| 204 |

+

Yang, Yichi and

|

| 205 |

+

Li, Ruichen and

|

| 206 |

+

Hu, Zhiting",

|

| 207 |

+

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

|

| 208 |

+

month = jul,

|

| 209 |

+

year = "2023",

|

| 210 |

+

address = "Toronto, Canada",

|

| 211 |

+

publisher = "Association for Computational Linguistics",

|

| 212 |

+

url = "https://aclanthology.org/2023.acl-long.634",

|

| 213 |

+

pages = "11328--11348",

|

| 214 |

+

abstract = "Many text generation applications require the generated text to be factually consistent with input information. Automatic evaluation of factual consistency is challenging. Previous work has developed various metrics that often depend on specific functions, such as natural language inference (NLI) or question answering (QA), trained on limited data. Those metrics thus can hardly assess diverse factual inconsistencies (e.g., contradictions, hallucinations) that occur in varying inputs/outputs (e.g., sentences, documents) from different tasks. In this paper, we propose AlignScore, a new holistic metric that applies to a variety of factual inconsistency scenarios as above. AlignScore is based on a general function of information alignment between two arbitrary text pieces. Crucially, we develop a unified training framework of the alignment function by integrating a large diversity of data sources, resulting in 4.7M training examples from 7 well-established tasks (NLI, QA, paraphrasing, fact verification, information retrieval, semantic similarity, and summarization). We conduct extensive experiments on large-scale benchmarks including 22 evaluation datasets, where 19 of the datasets were never seen in the alignment training. AlignScore achieves substantial improvement over a wide range of previous metrics. Moreover, AlignScore (355M parameters) matches or even outperforms metrics based on ChatGPT and GPT-4 that are orders of magnitude larger.",

|

| 215 |

+

}

|

| 216 |

+

```

|

alignscore/alignscore_fig.png

ADDED

|

alignscore/baselines.py

ADDED

|

@@ -0,0 +1,704 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from logging import warning

|

| 2 |

+

import torch

|

| 3 |

+

import torch.nn as nn

|

| 4 |

+

import numpy as np

|

| 5 |

+

from tqdm import tqdm

|

| 6 |

+

import spacy

|

| 7 |

+

from sklearn.metrics.pairwise import cosine_similarity

|

| 8 |

+

from nltk.tokenize import sent_tokenize

|

| 9 |

+

import json

|

| 10 |

+

|

| 11 |

+

class CTCScorer():

|

| 12 |

+

def __init__(self, model_type) -> None:

|

| 13 |

+

self.model_type = model_type

|

| 14 |

+

import nltk

|

| 15 |

+

nltk.download('stopwords')

|

| 16 |

+

|

| 17 |

+

from ctc_score import StyleTransferScorer, SummarizationScorer, DialogScorer

|

| 18 |

+

if model_type == 'D-cnndm':

|

| 19 |

+

self.scorer = SummarizationScorer(align='D-cnndm')

|

| 20 |

+

elif model_type =='E-roberta':

|

| 21 |

+

self.scorer = SummarizationScorer(align='E-roberta')

|

| 22 |

+

elif model_type == 'R-cnndm':

|

| 23 |

+

self.scorer = SummarizationScorer(align='R-cnndm')

|

| 24 |

+

def score(self, premise: list, hypo: list):

|

| 25 |

+

assert len(premise) == len(hypo), "Premise and hypothesis should have the same length"

|

| 26 |

+

|

| 27 |

+

output_scores = []

|

| 28 |

+

for one_pre, one_hypo in tqdm(zip(premise, hypo), total=len(premise), desc="Evaluating by ctc"):

|

| 29 |

+

score_for_this_example = self.scorer.score(doc=one_pre, refs=[], hypo=one_hypo, aspect='consistency')

|

| 30 |

+

if score_for_this_example is not None:

|

| 31 |

+

output_scores.append(score_for_this_example)

|

| 32 |

+

else:

|

| 33 |

+

output_scores.append(1e-8)

|

| 34 |

+

output = None, torch.tensor(output_scores), None

|

| 35 |

+

|

| 36 |

+

return output

|

| 37 |

+

|

| 38 |

+

class SimCSEScorer():

|

| 39 |

+

def __init__(self, model_type, device) -> None:

|

| 40 |

+

self.model_type = model_type

|

| 41 |

+

self.device = device

|

| 42 |

+

from transformers import AutoModel, AutoTokenizer

|

| 43 |

+

|

| 44 |

+

# refer to the model list on https://github.com/princeton-nlp/SimCSE for the list of models

|

| 45 |

+

self.tokenizer = AutoTokenizer.from_pretrained(model_type)

|

| 46 |

+

self.model = AutoModel.from_pretrained(model_type).to(self.device)

|

| 47 |

+

self.spacy = spacy.load('en_core_web_sm')

|

| 48 |

+

|

| 49 |

+

self.batch_size = 64

|

| 50 |

+

|

| 51 |

+

def score(self, premise: list, hypo: list):

|

| 52 |

+

assert len(premise) == len(hypo)

|

| 53 |

+

|

| 54 |

+

output_scores = []

|

| 55 |

+

premise_sents = []

|

| 56 |

+

premise_index = [0]

|

| 57 |

+

hypo_sents = []

|

| 58 |

+

hypo_index = [0]

|

| 59 |

+

|

| 60 |

+

for one_pre, one_hypo in tqdm(zip(premise, hypo), desc="Sentenizing", total=len(premise)):

|

| 61 |

+

premise_sent = sent_tokenize(one_pre) #[each.text for each in self.spacy(one_pre).sents]

|

| 62 |

+

hypo_sent = sent_tokenize(one_hypo) #[each.text for each in self.spacy(one_hypo).sents]

|

| 63 |

+

premise_sents.extend(premise_sent)

|

| 64 |

+

premise_index.append(len(premise_sents))

|

| 65 |

+

|

| 66 |

+

hypo_sents.extend(hypo_sent)

|

| 67 |

+

hypo_index.append(len(hypo_sents))

|

| 68 |

+

|

| 69 |

+

all_sents = premise_sents + hypo_sents

|

| 70 |

+

embeddings = []

|

| 71 |

+

with torch.no_grad():

|

| 72 |

+

for batch in tqdm(self.chunks(all_sents, self.batch_size), total=int(len(all_sents)/self.batch_size), desc="Evaluating by SimCSE"):

|

| 73 |

+

inputs = self.tokenizer(batch, padding=True, truncation=True, return_tensors="pt").to(self.device)

|

| 74 |

+

embeddings.append(self.model(**inputs, output_hidden_states=True, return_dict=True).pooler_output)

|

| 75 |

+

embeddings = torch.cat(embeddings)

|

| 76 |

+

|

| 77 |

+

assert len(premise_index) == len(hypo_index)

|

| 78 |

+

for i in range(len(premise_index)-1):

|

| 79 |

+

premise_embeddings = embeddings[premise_index[i]: premise_index[i+1]]

|

| 80 |

+

hypo_embeddings = embeddings[len(premise_sents)+hypo_index[i]:len(premise_sents)+hypo_index[i+1]]

|

| 81 |

+

cos_sim = cosine_similarity(premise_embeddings.cpu(), hypo_embeddings.cpu())

|

| 82 |

+

score_p = cos_sim.max(axis=0).mean()

|

| 83 |

+

score_r = cos_sim.max(axis=1).mean()

|

| 84 |

+

score_f = 2 * score_p * score_r / (score_p + score_r)

|

| 85 |

+

output_scores.append(score_f)

|

| 86 |

+

|

| 87 |

+

return torch.Tensor(output_scores), torch.Tensor(output_scores), None

|

| 88 |

+

|

| 89 |

+

def chunks(self, lst, n):

|

| 90 |

+

"""Yield successive n-sized chunks from lst."""

|

| 91 |

+

for i in range(0, len(lst), n):

|

| 92 |

+

yield lst[i:i + n]

|

| 93 |

+

|

| 94 |

+

class BleurtScorer():

|

| 95 |

+

def __init__(self, checkpoint) -> None:

|

| 96 |

+

self.checkpoint = checkpoint

|

| 97 |

+

|

| 98 |

+

from bleurt import score

|

| 99 |

+

# BLEURT-20 can also be switched to other checkpoints to improve time

|

| 100 |

+

# No avaliable api to specify cuda number

|

| 101 |

+

self.model = score.BleurtScorer(self.checkpoint)

|

| 102 |

+

|

| 103 |

+

def scorer(self, premise:list, hypo: list):

|

| 104 |

+

assert len(premise) == len(hypo)

|

| 105 |

+

|

| 106 |

+

output_scores = self.model.score(references=premise, candidates=hypo, batch_size=8)

|

| 107 |

+

output_scores = [s for s in output_scores]

|

| 108 |

+

return torch.Tensor(output_scores), torch.Tensor(output_scores), torch.Tensor(output_scores)

|

| 109 |

+

|

| 110 |

+

class BertScoreScorer():

|

| 111 |

+

def __init__(self, model_type, metric, device, batch_size) -> None:

|

| 112 |

+

self.model_type = model_type

|

| 113 |

+

self.device = device

|

| 114 |

+

self.metric = metric

|

| 115 |

+

self.batch_size = batch_size

|

| 116 |

+

|

| 117 |

+

from bert_score import score

|

| 118 |

+

self.model = score

|

| 119 |

+

|

| 120 |

+

def scorer(self, premise: list, hypo: list):

|

| 121 |

+

assert len(premise) == len(hypo)

|

| 122 |

+

|

| 123 |

+

precision, recall, f1 = self.model(premise, hypo, model_type=self.model_type, lang='en', rescale_with_baseline=True, verbose=True, device=self.device, batch_size=self.batch_size)

|

| 124 |

+

|

| 125 |

+

f1 = [f for f in f1]

|

| 126 |

+

precision = [p for p in precision]

|

| 127 |

+

recall = [r for r in recall]

|

| 128 |

+

|

| 129 |

+

if self.metric == 'f1':

|

| 130 |

+

return torch.Tensor(f1), torch.Tensor(f1), None

|

| 131 |

+

elif self.metric == 'precision':

|

| 132 |

+

return torch.Tensor(precision), torch.Tensor(precision), None

|

| 133 |

+

elif self.metric == 'recall':

|

| 134 |

+

return torch.Tensor(recall), torch.Tensor(recall), None

|

| 135 |

+

else:

|

| 136 |

+

ValueError("metric type not in f1, precision or recall.")

|

| 137 |

+

|

| 138 |

+

class BartScoreScorer():

|

| 139 |

+

def __init__(self, checkpoint, device) -> None:

|

| 140 |

+

self.checkpoint = checkpoint

|

| 141 |

+

self.device = device

|

| 142 |

+

import os, sys

|

| 143 |

+

sys.path.append('baselines/BARTScore')

|

| 144 |

+

from bart_score import BARTScorer

|

| 145 |

+

self.model = BARTScorer(device=self.device, checkpoint=self.checkpoint)

|

| 146 |

+

|

| 147 |

+

def scorer(self, premise: list, hypo: list):

|

| 148 |

+

assert len(premise) == len(hypo)

|

| 149 |

+

|

| 150 |

+

output_scores = self.model.score(premise, hypo, batch_size=4)

|

| 151 |

+

normed_score = torch.exp(torch.Tensor(output_scores))

|

| 152 |

+

|

| 153 |

+

return normed_score, normed_score, normed_score

|

| 154 |

+

|

| 155 |

+

### Below are baselines in SummaC

|

| 156 |

+

### MNLI, NER, FactCC, DAE, FEQA, QuestEval, SummaC-ZS, SummaC-Conv

|

| 157 |

+

class MNLIScorer():

|

| 158 |

+

def __init__(self, model="roberta-large-mnli", device='cuda:0', batch_size=32) -> None:

|

| 159 |

+

from transformers import AutoTokenizer, AutoModelForSequenceClassification

|

| 160 |

+

self.tokenizer = AutoTokenizer.from_pretrained(model)

|

| 161 |

+

self.model = AutoModelForSequenceClassification.from_pretrained(model).to(device)

|

| 162 |

+

self.device = device

|

| 163 |

+

self.softmax = nn.Softmax(dim=-1)

|

| 164 |

+

self.batch_size = batch_size

|

| 165 |

+

|

| 166 |

+

def scorer(self, premise: list, hypo: list):

|

| 167 |

+

if isinstance(premise, str) and isinstance(hypo, str):

|

| 168 |

+

premise = [premise]

|

| 169 |

+

hypo = [hypo]

|

| 170 |

+

|

| 171 |

+

batch = self.batch_tokenize(premise, hypo)

|

| 172 |

+

output_score_tri = []

|

| 173 |

+

|

| 174 |

+

for mini_batch in tqdm(batch, desc="Evaluating MNLI"):

|

| 175 |

+

# for mini_batch in batch:

|

| 176 |

+

mini_batch = mini_batch.to(self.device)

|

| 177 |

+

with torch.no_grad():

|

| 178 |

+

model_output = self.model(**mini_batch)

|

| 179 |

+

model_output_tri = model_output.logits

|

| 180 |

+

model_output_tri = self.softmax(model_output_tri).cpu()

|

| 181 |

+

|

| 182 |

+

output_score_tri.append(model_output_tri[:,2])

|

| 183 |

+

|

| 184 |

+

output_score_tri = torch.cat(output_score_tri)

|

| 185 |

+

|

| 186 |

+

return output_score_tri, output_score_tri, output_score_tri

|

| 187 |

+

|

| 188 |

+

def batch_tokenize(self, premise, hypo):

|

| 189 |

+

"""

|

| 190 |

+

input premise and hypos are lists

|

| 191 |

+

"""

|

| 192 |

+

assert isinstance(premise, list) and isinstance(hypo, list)

|

| 193 |

+

assert len(premise) == len(hypo), "premise and hypo should be in the same length."

|

| 194 |

+

|

| 195 |

+

batch = []

|

| 196 |

+

for mini_batch_pre, mini_batch_hypo in zip(self.chunks(premise, self.batch_size), self.chunks(hypo, self.batch_size)):

|

| 197 |

+

try:

|

| 198 |

+

mini_batch = self.tokenizer(mini_batch_pre, mini_batch_hypo, truncation='only_first', padding='max_length', max_length=self.tokenizer.model_max_length, return_tensors='pt')

|

| 199 |

+

except:

|

| 200 |

+

warning('text_b too long...')

|

| 201 |

+

mini_batch = self.tokenizer(mini_batch_pre, mini_batch_hypo, truncation=True, padding='max_length', max_length=self.tokenizer.model_max_length, return_tensors='pt')

|

| 202 |

+

batch.append(mini_batch)

|

| 203 |

+

|

| 204 |

+

return batch

|

| 205 |

+

|

| 206 |

+

def chunks(self, lst, n):

|

| 207 |

+

"""Yield successive n-sized chunks from lst."""

|

| 208 |

+

for i in range(0, len(lst), n):

|

| 209 |

+

yield lst[i:i + n]

|

| 210 |

+

|

| 211 |

+

class NERScorer():

|

| 212 |

+

def __init__(self) -> None:

|

| 213 |

+

import os, sys

|

| 214 |

+

sys.path.append('baselines/summac/summac')

|

| 215 |

+

from model_guardrails import NERInaccuracyPenalty

|

| 216 |

+

self.ner = NERInaccuracyPenalty()

|

| 217 |

+

|

| 218 |

+

def scorer(self, premise, hypo):

|

| 219 |

+

score_return = self.ner.score(premise, hypo)['scores']

|

| 220 |

+

oppo_score = [float(not each) for each in score_return]

|

| 221 |

+

|

| 222 |

+

tensor_score = torch.tensor(oppo_score)

|

| 223 |

+

|

| 224 |

+

return tensor_score, tensor_score, tensor_score

|

| 225 |

+

class UniEvalScorer():

|

| 226 |

+

def __init__(self, task='fact', device='cuda:0') -> None:

|

| 227 |

+

import os, sys

|

| 228 |

+

sys.path.append('baselines/UniEval')

|

| 229 |

+

from metric.evaluator import get_evaluator

|

| 230 |

+

|

| 231 |

+

self.evaluator = get_evaluator(task, device=device)

|

| 232 |

+

|

| 233 |

+

def scorer(self, premise, hypo):

|

| 234 |

+

from utils import convert_to_json

|

| 235 |

+

# Prepare data for pre-trained evaluators

|

| 236 |

+

data = convert_to_json(output_list=hypo, src_list=premise)

|

| 237 |

+

# Initialize evaluator for a specific task

|

| 238 |

+

|

| 239 |

+

# Get factual consistency scores

|

| 240 |

+

eval_scores = self.evaluator.evaluate(data, print_result=True)

|

| 241 |

+

score_list = [each['consistency'] for each in eval_scores]

|

| 242 |

+

|

| 243 |

+

return torch.tensor(score_list), torch.tensor(score_list), torch.tensor(score_list)

|

| 244 |

+

|

| 245 |

+

class FEQAScorer():

|

| 246 |

+

def __init__(self) -> None:

|

| 247 |

+

import os, sys

|

| 248 |

+

sys.path.append('baselines/feqa')

|

| 249 |

+

import benepar

|

| 250 |

+

import nltk

|

| 251 |

+

|

| 252 |

+

benepar.download('benepar_en3')

|

| 253 |

+

nltk.download('stopwords')

|

| 254 |

+

|

| 255 |

+

from feqa import FEQA

|

| 256 |

+

self.feqa_model = FEQA(squad_dir=os.path.abspath('baselines/feqa/qa_models/squad1.0'), bart_qa_dir=os.path.abspath('baselines/feqa/bart_qg/checkpoints/'), use_gpu=True)

|

| 257 |

+

|

| 258 |

+

def scorer(self, premise, hypo):

|

| 259 |

+

eval_score = self.feqa_model.compute_score(premise, hypo, aggregate=False)

|

| 260 |

+

|

| 261 |

+

return torch.tensor(eval_score), torch.tensor(eval_score), torch.tensor(eval_score)

|

| 262 |

+

|

| 263 |

+

|

| 264 |

+

class QuestEvalScorer():

|

| 265 |

+

def __init__(self) -> None:

|

| 266 |

+

import os, sys

|

| 267 |

+

sys.path.append('baselines/QuestEval')

|

| 268 |

+

from questeval.questeval_metric import QuestEval

|

| 269 |

+

self.questeval = QuestEval(no_cuda=False)

|

| 270 |

+

|

| 271 |

+

def scorer(self, premise, hypo):

|

| 272 |

+

score = self.questeval.corpus_questeval(

|

| 273 |

+

hypothesis=hypo,

|

| 274 |

+

sources=premise

|

| 275 |

+

)

|

| 276 |

+

final_score = score['ex_level_scores']

|

| 277 |

+

|

| 278 |

+

return torch.tensor(final_score), torch.tensor(final_score), torch.tensor(final_score)

|

| 279 |

+

|

| 280 |

+

class QAFactEvalScorer():

|

| 281 |

+

def __init__(self, model_folder, device='cuda:0') -> None:

|

| 282 |

+

import os, sys

|

| 283 |

+

sys.path.append('baselines/QAFactEval')

|

| 284 |

+

sys.path.append(os.path.abspath('baselines/qaeval/'))

|

| 285 |

+

from qafacteval import QAFactEval

|

| 286 |

+

kwargs = {"cuda_device": int(device.split(':')[-1]), "use_lerc_quip": True, \

|

| 287 |

+

"verbose": True, "generation_batch_size": 32, \

|

| 288 |

+

"answering_batch_size": 32, "lerc_batch_size": 8}

|

| 289 |

+

|

| 290 |

+

self.metric = QAFactEval(

|

| 291 |

+

lerc_quip_path=f"{model_folder}/quip-512-mocha",

|

| 292 |

+

generation_model_path=f"{model_folder}/generation/model.tar.gz",

|

| 293 |

+

answering_model_dir=f"{model_folder}/answering",

|

| 294 |

+

lerc_model_path=f"{model_folder}/lerc/model.tar.gz",

|

| 295 |

+

lerc_pretrained_model_path=f"{model_folder}/lerc/pretraining.tar.gz",

|

| 296 |

+

**kwargs

|

| 297 |

+

)

|

| 298 |

+

def scorer(self, premise, hypo):

|

| 299 |

+

results = self.metric.score_batch_qafacteval(premise, [[each] for each in hypo], return_qa_pairs=True)

|

| 300 |

+

score = [result[0]['qa-eval']['lerc_quip'] for result in results]

|

| 301 |

+

return torch.tensor(score), torch.tensor(score), torch.tensor(score)

|

| 302 |

+

|

| 303 |

+

class MoverScorer():

|

| 304 |

+

def __init__(self) -> None:

|

| 305 |

+

pass

|

| 306 |

+

|

| 307 |

+

class BERTScoreFFCIScorer():

|

| 308 |

+

def __init__(self) -> None:

|

| 309 |

+

pass

|

| 310 |

+

|

| 311 |

+

class DAEScorer():

|

| 312 |

+

def __init__(self, model_dir, device=0) -> None:

|

| 313 |

+

import os, sys

|

| 314 |

+

sys.path.insert(0, "baselines/factuality-datasets/")

|

| 315 |

+

from evaluate_generated_outputs import daefact

|

| 316 |

+

self.dae = daefact(model_dir, model_type='electra_dae', gpu_device=device)

|

| 317 |

+

|

| 318 |

+

def scorer(self, premise, hypo):

|

| 319 |

+

return_score = torch.tensor(self.dae.score_multi_doc(premise, hypo))

|

| 320 |

+

|

| 321 |

+

return return_score, return_score, return_score

|

| 322 |

+

|

| 323 |

+

class SummaCScorer():

|

| 324 |

+

def __init__(self, summac_type='conv', device='cuda:0') -> None:

|

| 325 |

+

self.summac_type = summac_type

|

| 326 |

+

import os, sys

|

| 327 |

+

sys.path.append("baselines/summac")

|

| 328 |

+

from summac.model_summac import SummaCZS, SummaCConv

|

| 329 |

+

|

| 330 |

+

if summac_type == 'conv':

|

| 331 |

+

self.model = SummaCConv(models=["vitc"], bins='percentile', granularity="sentence", nli_labels="e", device=device, start_file="default", agg="mean")

|

| 332 |

+

elif summac_type == 'zs':

|

| 333 |

+

self.model = SummaCZS(granularity="sentence", model_name="vitc", device=device) # If you have a GPU: switch to: device="cuda"

|

| 334 |

+

|

| 335 |

+

def scorer(self, premise, hypo):

|

| 336 |

+

assert len(premise) == len(hypo)

|

| 337 |

+

scores = self.model.score(premise, hypo)['scores']

|

| 338 |

+

return_score = torch.tensor(scores)

|

| 339 |

+

|

| 340 |

+

return return_score, return_score, return_score

|

| 341 |

+

|

| 342 |

+

class FactCCScorer():

|

| 343 |

+

def __init__(self, script_path, test_data_path,result_path) -> None:

|

| 344 |

+

self.script_path = script_path

|

| 345 |

+

self.result_path = result_path

|

| 346 |

+

self.test_data_path = test_data_path

|

| 347 |

+

def scorer(self, premise, hypo):

|

| 348 |

+

import subprocess

|

| 349 |

+

import pickle

|

| 350 |

+

|

| 351 |

+

self.generate_json_file(premise, hypo)

|

| 352 |

+

subprocess.call(f"sh {self.script_path}", shell=True)

|

| 353 |

+

print("Finishing FactCC")

|

| 354 |

+

results = pickle.load(open(self.result_path, 'rb'))

|

| 355 |

+

results = [-each+1 for each in results]

|

| 356 |

+

|

| 357 |

+

return torch.tensor(results), torch.tensor(results), torch.tensor(results)

|

| 358 |

+

|

| 359 |

+

def generate_json_file(self, premise, hypo):

|

| 360 |

+

output = []

|

| 361 |

+

assert len(premise) == len(hypo)

|

| 362 |

+

i = 0

|

| 363 |

+

for one_premise, one_hypo in zip(premise, hypo):

|

| 364 |

+

example = dict()

|

| 365 |

+

example['id'] = i

|

| 366 |

+

example['text'] = one_premise