
Commit edc435d

Parent(s): e8046c0

add new files

app.py

CHANGED

@@ -1,5 +1,7 @@
 import os

+import pandas as pd
+
 os.system('cd fairseq;'
           'pip install ./; cd ..')

@@ -74,7 +76,7 @@ def draw_boxes(image, bounds, color='red', width=4):
     draw = ImageDraw.Draw(image)
     for i, bound in enumerate(bounds):
         p0, p1, p2, p3 = bound
-        draw.text(p0, str(i+1), fill=color)
+        draw.text(p0, str(i+1), fill=color, align='center')
         draw.line([*p0, *p1, *p2, *p3, *p0], fill=color, width=width)
     return image

@@ -179,22 +181,25 @@ def ocr(img):

         with torch.no_grad():
             result, scores = eval_step(task, generator, models, sample)
-            ocr_result.append(str(i+1)
+            ocr_result.append([str(i+1), result[0]['ocr'].replace(' ', '')])

-    result =
+    result = pd.DataFrame(ocr_result).iloc[:, 1:]

     return out_img, result


 title = "Chinese OCR"
-description = "Gradio Demo for Chinese OCR
-              "
-              "
+description = "Gradio Demo for Chinese OCR based on OFA. "\
+              "Upload your own image or click any one of the examples, and click " \
+              "\"Submit\" and then wait for the generated OCR result. " \
+              "\n中文OCR体验区。欢迎上传图片,静待检测文字返回~"
 article = "<p style='text-align: center'><a href='https://github.com/OFA-Sys/OFA' target='_blank'>OFA Github " \
           "Repo</a></p> "
-examples = [['shupai.png'], ['chinese.jpg'], ['gaidao.jpeg'], ['qiaodaima.png'],
+examples = [['shupai.png'], ['chinese.jpg'], ['gaidao.jpeg'], ['qiaodaima.png'],
+            ['benpao.jpeg'], ['wanli.png'], ['xsd.jpg']]
 io = gr.Interface(fn=ocr, inputs=gr.inputs.Image(type='filepath', label='Image'),
-                  outputs=[gr.outputs.Image(type='pil', label='Image'),
+                  outputs=[gr.outputs.Image(type='pil', label='Image'),
+                           gr.outputs.Dataframe(headers=['Box ID', 'Text'], label='OCR Results')],
                   title=title, description=description, article=article, examples=examples)
 io.launch()
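The `ocr` hunk above turns each `ocr_result` entry into a `[box_id, text]` row and wraps the rows in a DataFrame before returning. Below is a minimal sketch of that shaping step, assuming pandas is installed. The helper `build_result_table` and the sample strings are hypothetical and are not part of app.py; only the row layout and the `.iloc[:, 1:]` slice are taken from the diff.

```python
import pandas as pd


def build_result_table(texts):
    """Hypothetical helper mirroring the row-building logic in the diff."""
    # One row per detected box: the 1-based box ID as a string, then the
    # recognized text with spaces stripped, as in the new append() call.
    rows = [[str(i + 1), text.replace(' ', '')] for i, text in enumerate(texts)]
    full = pd.DataFrame(rows)
    # .iloc[:, 1:] keeps every row but drops column 0 (the box ID),
    # so only the text column remains.
    return full, full.iloc[:, 1:]


full, text_only = build_result_table(['你 好', 'O F A'])
print(full.shape)       # (2, 2)
print(text_only.shape)  # (2, 1)
```

The sliced frame has a single column, while the `gr.outputs.Dataframe` in the diff declares two headers (`Box ID`, `Text`). How that mismatch renders probably depends on the Gradio version, which is not shown on this page.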

requirements.txt

CHANGED

@@ -9,4 +9,5 @@ einops
 datasets
 python-Levenshtein
 zhconv
-transformers
+transformers
+pandas

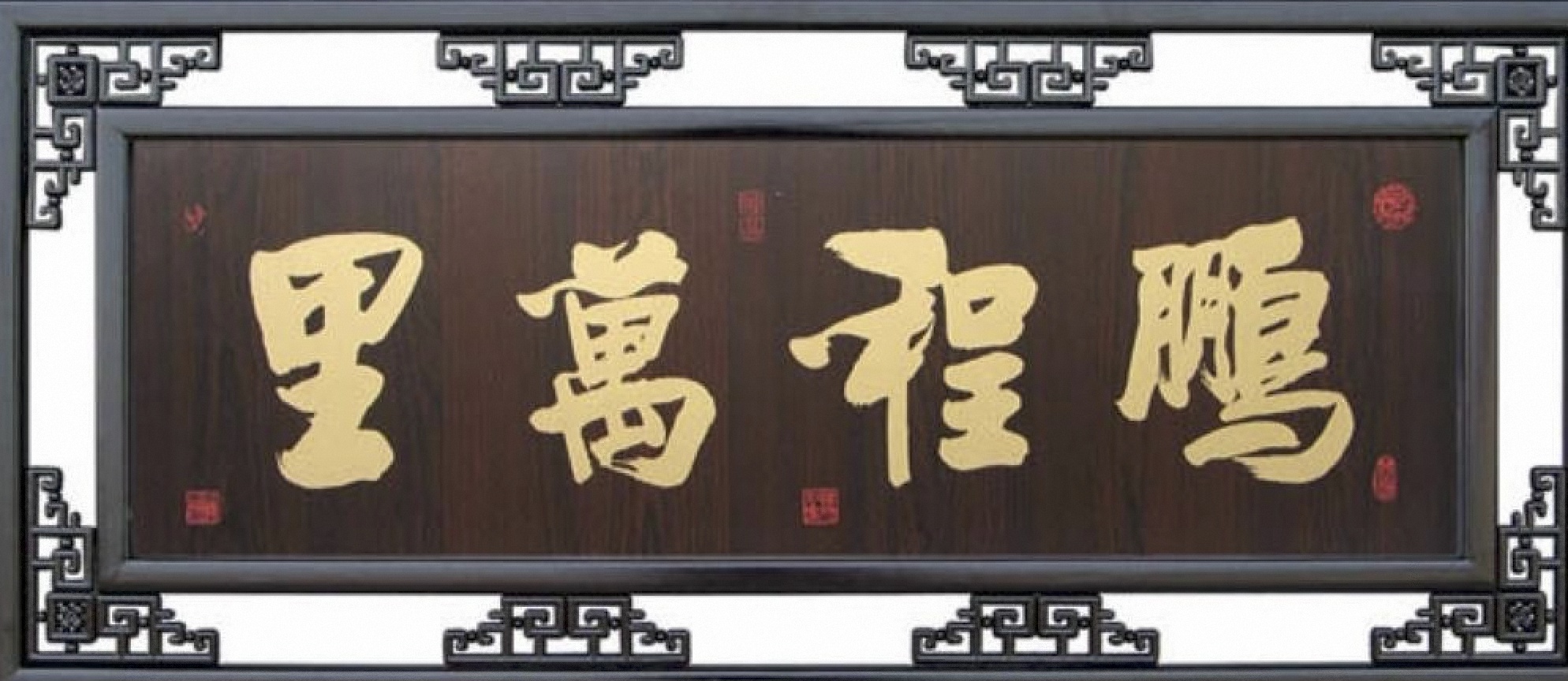

wanli.png

ADDED


xsd.jpg

ADDED
