Commit 204969e · Parent(s): 945769d

add files

- chinese.jpg +0 -0

- ezocr/build/lib/easyocrlite/__init__.py +1 -0

- ezocr/build/lib/easyocrlite/reader.py +272 -0

- ezocr/build/lib/easyocrlite/types.py +5 -0

- lihe.png +0 -0

- paibian.jpeg +0 -0

- shupai.png +0 -0

- zuowen.jpg +0 -0

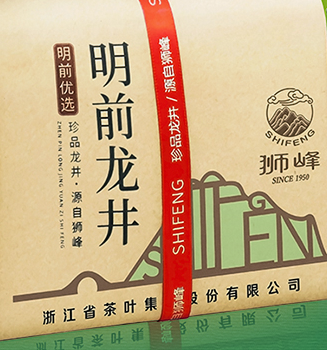

chinese.jpg

ADDED


ezocr/build/lib/easyocrlite/__init__.py

ADDED

@@ -0,0 +1 @@
+from easyocrlite.reader import ReaderLite

ezocr/build/lib/easyocrlite/reader.py

ADDED

@@ -0,0 +1,272 @@
+from __future__ import annotations
+
+import logging
+import os
+from pathlib import Path
+from typing import Tuple
+
+import cv2
+import numpy as np
+import torch
+from PIL import Image, ImageEnhance
+
+from easyocrlite.model import CRAFT
+
+from easyocrlite.utils.download_utils import prepare_model
+from easyocrlite.utils.image_utils import (
+    adjust_result_coordinates,
+    boxed_transform,
+    normalize_mean_variance,
+    resize_aspect_ratio,
+)
+from easyocrlite.utils.detect_utils import (
+    extract_boxes,
+    extract_regions_from_boxes,
+    box_expand,
+    greedy_merge,
+)
+from easyocrlite.types import BoxTuple, RegionTuple
+import easyocrlite.utils.utils as utils
+
+logger = logging.getLogger(__name__)
+
+MODULE_PATH = (
+    os.environ.get("EASYOCR_MODULE_PATH")
+    or os.environ.get("MODULE_PATH")
+    or os.path.expanduser("~/.EasyOCR/")
+)
+
+
+class ReaderLite(object):
+    def __init__(
+        self,
+        gpu=True,
+        model_storage_directory=None,
+        download_enabled=True,
+        verbose=True,
+        quantize=True,
+        cudnn_benchmark=False,
+    ):
+
+        self.verbose = verbose
+
+        model_storage_directory = Path(
+            model_storage_directory
+            if model_storage_directory
+            else MODULE_PATH + "/model"
+        )
+        self.detector_path = prepare_model(
+            model_storage_directory, download_enabled, verbose
+        )
+
+        self.quantize = quantize
+        self.cudnn_benchmark = cudnn_benchmark
+        if gpu is False:
+            self.device = "cpu"
+            if verbose:
+                logger.warning(
+                    "Using CPU. Note: This module is much faster with a GPU."
+                )
+        elif not torch.cuda.is_available():
+            self.device = "cpu"
+            if verbose:
+                logger.warning(
+                    "CUDA not available - defaulting to CPU. Note: This module is much faster with a GPU."
+                )
+        elif gpu is True:
+            self.device = "cuda"
+        else:
+            self.device = gpu
+
+        self.detector = CRAFT()
+
+        state_dict = torch.load(self.detector_path, map_location=self.device)
+        if list(state_dict.keys())[0].startswith("module"):
+            state_dict = {k[7:]: v for k, v in state_dict.items()}
+
+        self.detector.load_state_dict(state_dict)
+
+        if self.device == "cpu":
+            if self.quantize:
+                try:
+                    torch.quantization.quantize_dynamic(
+                        self.detector, dtype=torch.qint8, inplace=True
+                    )
+                except Exception:  # quantization is optional; keep the float model
+                    pass
+        else:
+            self.detector = torch.nn.DataParallel(self.detector).to(self.device)
+            import torch.backends.cudnn as cudnn
+
+            cudnn.benchmark = self.cudnn_benchmark
+
+        self.detector.eval()
+
+    def process(
+        self,
+        image_path: str,
+        max_size: int = 960,
+        expand_ratio: float = 1.0,
+        sharp: float = 1.0,
+        contrast: float = 1.0,
+        text_confidence: float = 0.7,
+        text_threshold: float = 0.4,
+        link_threshold: float = 0.4,
+        slope_ths: float = 0.1,
+        ratio_ths: float = 0.5,
+        center_ths: float = 0.5,
+        dim_ths: float = 0.5,
+        space_ths: float = 1.0,
+        add_margin: float = 0.1,
+        min_size: float = 0.01,
+    ) -> list[Tuple[BoxTuple, np.ndarray]]:
+
+        image = Image.open(image_path).convert('RGB')
+
+        tensor, inverse_ratio = self.preprocess(
+            image, max_size, expand_ratio, sharp, contrast
+        )
+
+        scores = self.forward_net(tensor)
+
+        boxes = self.detect(scores, text_confidence, text_threshold, link_threshold)
+
+        image = np.array(image)
+        region_list, box_list = self.postprocess(
+            image,
+            boxes,
+            inverse_ratio,
+            slope_ths,
+            ratio_ths,
+            center_ths,
+            dim_ths,
+            space_ths,
+            add_margin,
+            min_size,
+        )
+
+        # get cropped image
+        image_list = []
+        for region in region_list:
+            x_min, x_max, y_min, y_max = region
+            crop_img = image[y_min:y_max, x_min:x_max, :]
+            image_list.append(
+                (
+                    ((x_min, y_min), (x_max, y_min), (x_max, y_max), (x_min, y_max)),
+                    crop_img,
+                )
+            )
+
+        for box in box_list:
+            transformed_img = boxed_transform(image, np.array(box, dtype="float32"))
+            image_list.append((box, transformed_img))
+
+        # sort by top left point
+        image_list = sorted(image_list, key=lambda x: (x[0][0][1], x[0][0][0]))
+
+        return image_list
+
+    def preprocess(
+        self,
+        image: Image.Image,
+        max_size: int,
+        expand_ratio: float = 1.0,
+        sharp: float = 1.0,
+        contrast: float = 1.0,
+    ) -> Tuple[torch.Tensor, float]:
+        if sharp != 1:
+            enhancer = ImageEnhance.Sharpness(image)
+            image = enhancer.enhance(sharp)
+        if contrast != 1:
+            enhancer = ImageEnhance.Contrast(image)
+            image = enhancer.enhance(contrast)
+
+        image = np.array(image)
+
+        image, target_ratio = resize_aspect_ratio(
+            image, max_size, interpolation=cv2.INTER_LINEAR, expand_ratio=expand_ratio
+        )
+        inverse_ratio = 1 / target_ratio
+
+        x = np.transpose(normalize_mean_variance(image), (2, 0, 1))
+
+        x = torch.tensor(np.array([x]), device=self.device)
+
+        return x, inverse_ratio
+
+    @torch.no_grad()
+    def forward_net(self, tensor: torch.Tensor) -> torch.Tensor:
+        scores, feature = self.detector(tensor)
+        return scores[0]
+
+    def detect(
+        self,
+        scores: torch.Tensor,
+        text_confidence: float = 0.7,
+        text_threshold: float = 0.4,
+        link_threshold: float = 0.4,
+    ) -> list[BoxTuple]:
+        # make score and link map
+        score_text = scores[:, :, 0].cpu().data.numpy()
+        score_link = scores[:, :, 1].cpu().data.numpy()
+        # extract box
+        boxes, _ = extract_boxes(
+            score_text, score_link, text_confidence, text_threshold, link_threshold
+        )
+        return boxes
+
+    def postprocess(
+        self,
+        image: np.ndarray,
+        boxes: list[BoxTuple],
+        inverse_ratio: float,
+        slope_ths: float = 0.1,
+        ratio_ths: float = 0.5,
+        center_ths: float = 0.5,
+        dim_ths: float = 0.5,
+        space_ths: float = 1.0,
+        add_margin: float = 0.1,
+        min_size: float = 0,
+    ) -> Tuple[list[RegionTuple], list[BoxTuple]]:
+
+        # coordinate adjustment
+        boxes = adjust_result_coordinates(boxes, inverse_ratio)
+
+        max_y, max_x, _ = image.shape
+
+        # extract region and merge
+        region_list, box_list = extract_regions_from_boxes(boxes, slope_ths)
+
+        region_list = greedy_merge(
+            region_list,
+            ratio_ths=ratio_ths,
+            center_ths=center_ths,
+            dim_ths=dim_ths,
+            space_ths=space_ths,
+            verbose=0
+        )
+
+        # add margin
+        region_list = [
+            region.expand(add_margin, (max_x, max_y)).as_tuple()
+            for region in region_list
+        ]
+
+        box_list = [box_expand(box, add_margin, (max_x, max_y)) for box in box_list]
+
+        # filter by size
+        if min_size:
+            if min_size < 1:
+                min_size = int(min(max_y, max_x) * min_size)
+
+            region_list = [
+                i for i in region_list if max(i[1] - i[0], i[3] - i[2]) > min_size
+            ]
+            box_list = [
+                i
+                for i in box_list
+                if max(utils.diff([c[0] for c in i]), utils.diff([c[1] for c in i]))
+                > min_size
+            ]
+
+        return region_list, box_list
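
The size filter at the end of `postprocess()` treats a `min_size` below 1 as a fraction of the shorter image side and anything else as a pixel count. The sketch below isolates that rule so it can be checked without the detector. It is an illustration only: `resolve_min_size` and `filter_regions` are hypothetical helper names that do not exist in easyocrlite, and the region layout `(x_min, x_max, y_min, y_max)` is assumed from how `process()` unpacks each region.

```python
from typing import List, Tuple

# Assumed layout, taken from the unpacking in process(): (x_min, x_max, y_min, y_max)
RegionTuple = Tuple[int, int, int, int]


def resolve_min_size(min_size: float, image_height: int, image_width: int) -> int:
    """Convert a fractional min_size (< 1) into pixels of the shorter image side."""
    if min_size and min_size < 1:
        return int(min(image_height, image_width) * min_size)
    return int(min_size)


def filter_regions(regions: List[RegionTuple], min_size_px: int) -> List[RegionTuple]:
    """Keep regions whose longer side exceeds min_size_px; 0 disables the filter."""
    if not min_size_px:
        return list(regions)
    return [r for r in regions if max(r[1] - r[0], r[3] - r[2]) > min_size_px]
```

With the default `min_size=0.01` from `process()`, a 1920x1080 image would give a threshold of 10 pixels under this reading, so a 5x5 region is dropped while a 50x8 region is kept.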

ezocr/build/lib/easyocrlite/types.py

ADDED

@@ -0,0 +1,5 @@
+from typing import Tuple
+
+Point = Tuple[int, int]
+BoxTuple = Tuple[Point, Point, Point, Point]
+RegionTuple = Tuple[int, int, int, int]
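
These aliases can be exercised on their own. The sketch below shows, under stated assumptions, how an axis-aligned region maps to a four-corner box and how the `(top-left y, top-left x)` sort key used in `process()` orders results. The corner order (top-left, top-right, bottom-right, bottom-left) is taken from the tuple that `process()` builds. `region_to_box` and `sort_reading_order` are hypothetical names introduced here and are not part of easyocrlite.

```python
from typing import List, Tuple

Point = Tuple[int, int]
BoxTuple = Tuple[Point, Point, Point, Point]
RegionTuple = Tuple[int, int, int, int]  # assumed (x_min, x_max, y_min, y_max)


def region_to_box(region: RegionTuple) -> BoxTuple:
    """Expand a region into corners: top-left, top-right, bottom-right, bottom-left."""
    x_min, x_max, y_min, y_max = region
    return ((x_min, y_min), (x_max, y_min), (x_max, y_max), (x_min, y_max))


def sort_reading_order(boxes: List[BoxTuple]) -> List[BoxTuple]:
    """Sort by the top-left corner: first by y (row), then by x (column)."""
    return sorted(boxes, key=lambda box: (box[0][1], box[0][0]))
```

Sorting on the top-left corner alone is a simple heuristic: boxes on the same text line whose top edges differ by a pixel or two can come out in an unexpected order.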

lihe.png

ADDED

|

paibian.jpeg

ADDED

|

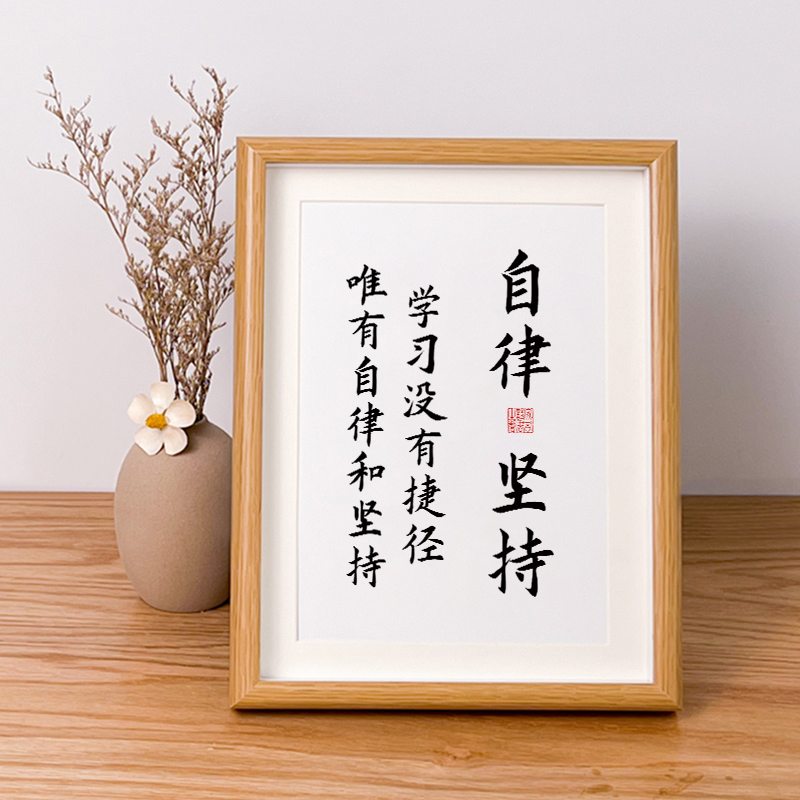

shupai.png

ADDED

|

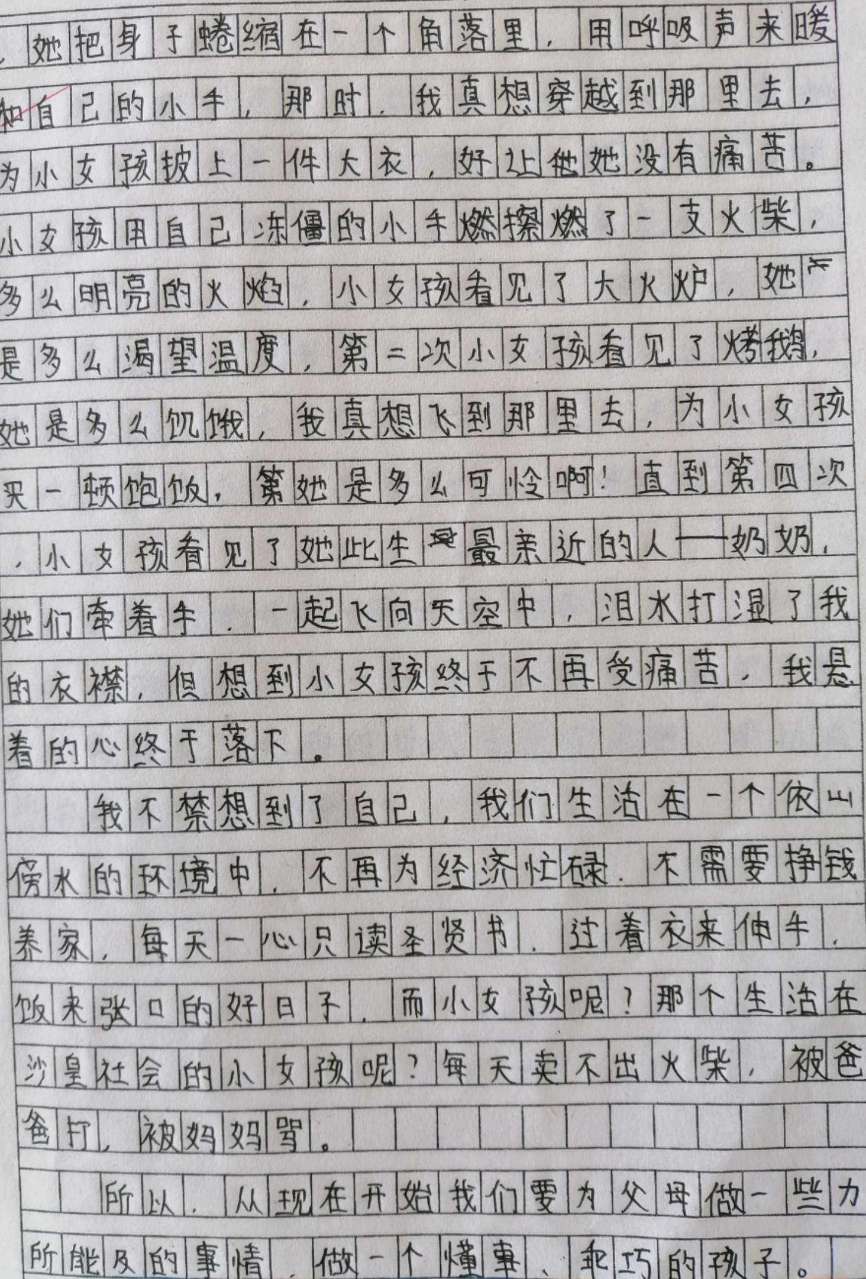

zuowen.jpg

ADDED

|