Commit

·

1f56e42

1

Parent(s):

a7db2b1

Add new blog post!

- app.py +4 -1

- assets/2_gradio_space.png +0 -0

- assets/2_secret.png +0 -0

- assets/2_thumbnail.png +0 -0

- assets/2_token.png +0 -0

- posts/2_private_models.py +173 -0

- posts/__pycache__/1_blog_in_spaces.cpython-37.pyc +0 -0

app.py

CHANGED

|

@@ -34,7 +34,10 @@ def get_page_data(post_path: Path):

|

|

| 34 |

|

| 35 |

def main():

|

| 36 |

st.set_page_config(layout="wide")

|

| 37 |

-

posts = [

|

|

|

|

|

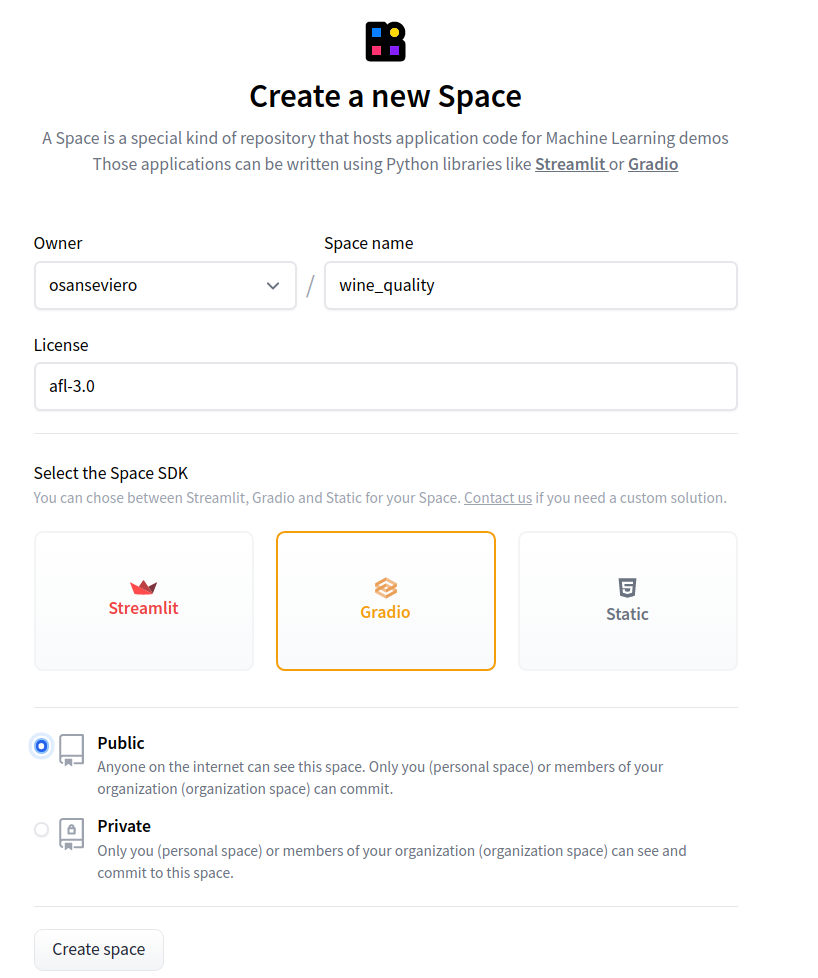

|

|

|

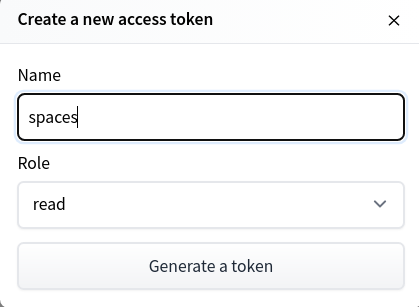

|

|

| 38 |

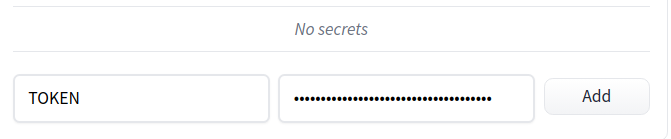

page_to_show = posts[0]

|

| 39 |

with st.sidebar:

|

| 40 |

|

|

|

|

| 34 |

|

| 35 |

def main():

|

| 36 |

st.set_page_config(layout="wide")

|

| 37 |

+

posts = [

|

| 38 |

+

'posts.2_private_models',

|

| 39 |

+

'posts.1_blog_in_spaces'

|

| 40 |

+

]

|

| 41 |

page_to_show = posts[0]

|

| 42 |

with st.sidebar:

|

| 43 |

|

assets/2_gradio_space.png

ADDED

|

assets/2_secret.png

ADDED

|

assets/2_thumbnail.png

ADDED

|

assets/2_token.png

ADDED

|

posts/2_private_models.py

ADDED

|

@@ -0,0 +1,173 @@

|

| 1 |

+

import streamlit as st

|

| 2 |

+

import streamlit.components.v1 as components

|

| 3 |

+

|

| 4 |

+

title = "T&T2 - Craft demos of private models"

|

| 5 |

+

description = "Build public, shareable demos of private models."

|

| 6 |

+

date = "2022-01-27"

|

| 7 |

+

thumbnail = "assets/2_thumbnail.png"

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

def run_article():

|

| 12 |

+

st.markdown("""

|

| 13 |

+

# 🤗 Tips & Tricks Edition 2

|

| 14 |

+

# Using private models in your public ML demos

|

| 15 |

+

|

| 16 |

+

Welcome to a new post of 🤗 Tips and Tricks! Each post can be read in under 5 minutes and shares features you might not know about that allow you

|

| 17 |

+

to leverage the Hub platform to its full extent.

|

| 18 |

+

|

| 19 |

+

In today's post, you'll learn how you can create public demos of your private models. This can be useful if you're not ready to share the model

|

| 20 |

+

or are worried about ethical concerns, but would still like the community to be able to try your work.

|

| 21 |

+

|

| 22 |

+

**Is this expensive?**

|

| 23 |

+

|

| 24 |

+

It will cost...nothing! You can host private models on Hugging Face right now in a couple of clicks! Note: This works not just for transformers,

|

| 25 |

+

but for any ML library!

|

| 26 |

+

|

| 27 |

+

**Which is the model?**

|

| 28 |

+

|

| 29 |

+

The super secret model that we don't want to share publicly, but still want to showcase, is a super powerful scikit-learn model for...wine quality classification!

|

| 30 |

+

For the purposes of this demo, assume there exists a private model repository with `id=osanseviero/wine-quality`.

|

| 31 |

+

""")

|

| 32 |

+

|

| 33 |

+

col1, col2 = st.columns(2)

|

| 34 |

+

|

| 35 |

+

with col1:

|

| 36 |

+

st.markdown("""

|

| 37 |

+

**🍷Cheers. And what is the demo?**

|

| 38 |

+

|

| 39 |

+

Let's build it right now! You first need to create a [new Space](https://huggingface.co/new-space). I like to use the Gradio SDK, but you are

|

| 40 |

+

also encouraged to try Streamlit and static Spaces.

|

| 41 |

+

|

| 42 |

+

The second step is to create a [read token](https://huggingface.co/settings/token). A read token allows reading repositories, which is useful when

|

| 43 |

+

you don't need to modify them. This token will allow the Space to access the model from the private model repository.

|

| 44 |

+

|

| 45 |

+

The third step is to create a secret in the Space, which you can do in the settings tab.

|

| 46 |

+

|

| 47 |

+

**What's a secret?**

|

| 48 |

+

|

| 49 |

+

A secret is a value stored in the Space settings rather than in its code. If you hardcode your token instead, other people will be able to access your repository, which is what you're trying to avoid. Remember that the Space is

|

| 50 |

+

public, so the code of the Space is also public! By combining secrets and tokens, the Space can read a private model repo

|

| 51 |

+

without exposing either the raw model or the token. Secrets are also useful if you call external APIs and don't want to expose their keys.

|

| 52 |

+

|

| 53 |

+

So you can add a secret, with any name you want (this post uses `TOKEN`), and paste the token value you copied from your settings.

|

| 54 |

+

""")

|

| 55 |

+

|

| 56 |

+

with col2:

|

| 57 |

+

st.image("https://github.com/osanseviero/hf-tips-and-tricks/raw/main/assets/2_gradio_space.png", width=300)

|

| 58 |

+

st.image("https://github.com/osanseviero/hf-tips-and-tricks/raw/main/assets/2_token.png", width=300)

|

| 59 |

+

st.image("https://github.com/osanseviero/hf-tips-and-tricks/raw/main/assets/2_secret.png", width=300)

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

st.markdown("""

|

| 63 |

+

**🤯 That's neat! What happens next?**

|

| 64 |

+

|

| 65 |

+

The secret is made available to the Gradio Space as an environment variable. Let's write the code for the Gradio demo.

|

| 66 |

+

|

| 67 |

+

The first step is adding a `requirements.txt` file listing the dependencies used.

|

| 68 |

+

|

| 69 |

+

```

|

| 70 |

+

scikit-learn

|

| 71 |

+

joblib

|

| 72 |

+

```

|

| 73 |

+

|

| 74 |

+

As always in Spaces, you create a file called `app.py`. Let's go through each section of the file:

|

| 75 |

+

|

| 76 |

+

1. Imports...nothing special

|

| 77 |

+

|

| 78 |

+

```python

|

| 79 |

+

import joblib

|

| 80 |

+

import os

|

| 81 |

+

|

| 82 |

+

import gradio as gr

|

| 83 |

+

|

| 84 |

+

from huggingface_hub import hf_hub_download

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

2. Downloading model from private repo

|

| 88 |

+

|

| 89 |

+

You can use `hf_hub_download` from the `huggingface_hub` library to download (and cache) a file from a model repository. Using the

|

| 90 |

+

`use_auth_token` parameter, you can pass the read token you created before, which the Space exposes as the `TOKEN` secret. I want to download the file

|

| 91 |

+

`sklearn_model.joblib`, which is the format `sklearn` recommends for saving models.

|

| 92 |

+

|

| 93 |

+

|

| 94 |

+

```python

|

| 95 |

+

file_path = hf_hub_download("osanseviero/wine-quality", "sklearn_model.joblib",

|

| 96 |

+

use_auth_token=os.environ['TOKEN'])

|

| 97 |

+

```
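
One caveat: if the secret is missing (for example, when you run the same code locally), `os.environ['TOKEN']` fails with a bare `KeyError`. Here is a minimal sketch of a friendlier lookup, assuming the secret is named `TOKEN` as above. `read_token` is a hypothetical helper for illustration, not part of `huggingface_hub`.

```python
import os


def read_token(name="TOKEN", environ=None):
    # Hypothetical helper: Spaces exposes secrets as environment variables.
    environ = os.environ if environ is None else environ
    token = environ.get(name, "").strip()
    if not token:
        # Fail early with an actionable message instead of a bare KeyError.
        raise RuntimeError(
            f"Secret {name!r} is not set; add it in the Space settings tab."
        )
    return token
```

You could then pass `use_auth_token=read_token()` to `hf_hub_download`.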

|

| 98 |

+

|

| 99 |

+

3. Loading model

|

| 100 |

+

|

| 101 |

+

The path now points to the locally cached joblib model. You can load it easily:

|

| 102 |

+

|

| 103 |

+

```python

|

| 104 |

+

model = joblib.load(file_path)

|

| 105 |

+

```

|

| 106 |

+

|

| 107 |

+

4. Inference function

|

| 108 |

+

|

| 109 |

+

One of the most important concepts in Gradio is the inference function. The inference function receives an input and has an output. It can

|

| 110 |

+

receive multiple types of input (images, video, audio, text, etc.) and return multiple outputs. This is a simple sklearn inference function:

|

| 111 |

+

|

| 112 |

+

```python

|

| 113 |

+

def predict(data):

|

| 114 |

+

    return model.predict(data.to_numpy())

|

| 115 |

+

```

|

| 116 |

+

|

| 117 |

+

5. Build and launch the interface

|

| 118 |

+

|

| 119 |

+

Building Gradio interfaces is very simple. You need to specify the prediction function and the types of input and output. You can add more things such

|

| 120 |

+

as a title and a description. In this case, the input is a dataframe since that's the kind of data this model handles.

|

| 121 |

+

|

| 122 |

+

```python

|

| 123 |

+

iface = gr.Interface(

|

| 124 |

+

predict,

|

| 125 |

+

title="Wine Quality predictor with SKLearn",

|

| 126 |

+

inputs=gr.inputs.Dataframe(

|

| 127 |

+

headers=headers,

|

| 128 |

+

default=default,

|

| 129 |

+

),

|

| 130 |

+

outputs="numpy",

|

| 131 |

+

)

|

| 132 |

+

iface.launch()

|

| 133 |

+

```
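
The snippet above uses `headers` and `default` without defining them. As a sketch, assuming the model was trained on the 11 physicochemical columns of the UCI Wine Quality dataset (the private model's real columns may differ), they could look like this. The row in `default` is only an illustrative placeholder.

```python
# Assumed feature columns (UCI Wine Quality dataset); adjust to your model.
headers = [
    "fixed acidity",
    "volatile acidity",
    "citric acid",
    "residual sugar",
    "chlorides",
    "free sulfur dioxide",
    "total sulfur dioxide",
    "density",
    "pH",
    "sulphates",
    "alcohol",
]

# One placeholder row shown when the demo loads; values are illustrative only.
default = [[7.4, 0.7, 0.0, 1.9, 0.076, 11.0, 34.0, 0.9978, 3.51, 0.56, 9.4]]

# Each default row must provide exactly one value per column.
assert all(len(row) == len(headers) for row in default)
```

Both names need to be defined before the `gr.Interface` call.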

|

| 134 |

+

|

| 135 |

+

We're done!!!!

|

| 136 |

+

|

| 137 |

+

You can find the Space at [https://huggingface.co/spaces/osanseviero/wine_quality](https://huggingface.co/spaces/osanseviero/wine_quality)

|

| 138 |

+

and try it yourself! It's not great, but the main idea of the article was to showcase the workflow of a public demo backed by a private model. This can also

|

| 139 |

+

work for datasets! With Gradio, you can create datasets with flagged content from users!

|

| 140 |

+

|

| 141 |

+

**Wait wait wait! I don't want to click more links!**

|

| 142 |

+

|

| 143 |

+

Ahm...ok. The link above is cool because you can share it with anyone, but you can also embed Spaces-hosted Gradio demos with a couple of

|

| 144 |

+

HTML lines in your own website. Here you can see the Gradio Space.
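
As a sketch of what those lines look like, the hypothetical helper below builds the embed HTML for any Space id. It assumes the Gradio 2.6.2 static bundle and the `launchGradioFromSpaces` call used for the embed in this post; other Gradio versions may need different URLs.

```python
def gradio_embed_html(space_id, target_id="target", version="2.6.2"):
    # Hypothetical helper: assembles the HTML needed to embed a Gradio Space.
    base = f"https://gradio.s3-us-west-2.amazonaws.com/{version}/static"
    return "\n".join([
        "<head>",
        f'<link rel="stylesheet" href="{base}/bundle.css">',
        "</head>",
        "<body>",
        f'<div id="{target_id}"></div>',
        f'<script src="{base}/bundle.js"></script>',
        "<script>",
        f'launchGradioFromSpaces("{space_id}", "#{target_id}")',
        "</script>",
        "</body>",
    ])


html = gradio_embed_html("osanseviero/wine_quality")
```

The resulting string can be pasted into a web page or handed to `components.html`.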

|

| 145 |

+

""")

|

| 146 |

+

|

| 147 |

+

embed_gradio = components.html(

|

| 148 |

+

"""

|

| 149 |

+

<head>

|

| 150 |

+

<link rel="stylesheet" href="https://gradio.s3-us-west-2.amazonaws.com/2.6.2/static/bundle.css">

|

| 151 |

+

</head>

|

| 152 |

+

<body>

|

| 153 |

+

<div id="target"></div>

|

| 154 |

+

<script src="https://gradio.s3-us-west-2.amazonaws.com/2.6.2/static/bundle.js"></script>

|

| 155 |

+

<script>

|

| 156 |

+

launchGradioFromSpaces("osanseviero/wine_quality", "#target")

|

| 157 |

+

</script>

|

| 158 |

+

</body>

|

| 159 |

+

""",

|

| 160 |

+

height=600,

|

| 161 |

+

)

|

| 162 |

+

|

| 163 |

+

st.markdown("""

|

| 164 |

+

**🤯 Is that...a Gradio Space embedded within a Streamlit Space about creating Spaces?**

|

| 165 |

+

|

| 166 |

+

Yes, that's right! I hope this was useful! Until the next time!

|

| 167 |

+

|

| 168 |

+

**A Hacker Llama 🦙**

|

| 169 |

+

|

| 170 |

+

[osanseviero](https://twitter.com/osanseviero)

|

| 171 |

+

""")

|

| 172 |

+

|

| 173 |

+

|

posts/__pycache__/1_blog_in_spaces.cpython-37.pyc

DELETED

|

Binary file (6.91 kB)

|

|

|