Upload 18 files

- ESC50_class_labels_indices.json +1 -0

- ESC50_class_labels_indices_space.json +1 -0

- FSD50k_class_labels_indices.json +1 -0

- UrbanSound8K_class_labels_indices.json +1 -0

- VGGSound_class_labels_indices.json +1 -0

- audioclip-arch.png +0 -0

- audioset_class_labels_indices.json +1 -0

- bootstrap_pytorch_dist_env.sh +17 -0

- check_ckpt.py +802 -0

- eval_retrieval_freesound.sh +81 -0

- finetune-esc50.sh +88 -0

- finetune-fsd50k.sh +88 -0

- htsat-roberta-large-dataset-fusion.sh +87 -0

- requirements.txt +16 -0

- test_tars.py +120 -0

- train-htsat-roberta.sh +83 -0

- train-pann-roberta.sh +83 -0

- zeroshot_esc50.sh +82 -0

ESC50_class_labels_indices.json

ADDED

@@ -0,0 +1 @@

{"dog": 0, "rooster": 1, "pig": 2, "cow": 3, "frog": 4, "cat": 5, "hen": 6, "insects": 7, "sheep": 8, "crow": 9, "rain": 10, "sea_waves": 11, "crackling_fire": 12, "crickets": 13, "chirping_birds": 14, "water_drops": 15, "wind": 16, "pouring_water": 17, "toilet_flush": 18, "thunderstorm": 19, "crying_baby": 20, "sneezing": 21, "clapping": 22, "breathing": 23, "coughing": 24, "footsteps": 25, "laughing": 26, "brushing_teeth": 27, "snoring": 28, "drinking_sipping": 29, "door_wood_knock": 30, "mouse_click": 31, "keyboard_typing": 32, "door_wood_creaks": 33, "can_opening": 34, "washing_machine": 35, "vacuum_cleaner": 36, "clock_alarm": 37, "clock_tick": 38, "glass_breaking": 39, "helicopter": 40, "chainsaw": 41, "siren": 42, "car_horn": 43, "engine": 44, "train": 45, "church_bells": 46, "airplane": 47, "fireworks": 48, "hand_saw": 49}

ESC50_class_labels_indices_space.json

ADDED

@@ -0,0 +1 @@

{"dog": 0, "rooster": 1, "pig": 2, "cow": 3, "frog": 4, "cat": 5, "hen": 6, "insects": 7, "sheep": 8, "crow": 9, "rain": 10, "sea waves": 11, "crackling fire": 12, "crickets": 13, "chirping birds": 14, "water drops": 15, "wind": 16, "pouring water": 17, "toilet flush": 18, "thunderstorm": 19, "crying baby": 20, "sneezing": 21, "clapping": 22, "breathing": 23, "coughing": 24, "footsteps": 25, "laughing": 26, "brushing teeth": 27, "snoring": 28, "drinking sipping": 29, "door wood knock": 30, "mouse click": 31, "keyboard typing": 32, "door wood creaks": 33, "can opening": 34, "washing machine": 35, "vacuum cleaner": 36, "clock alarm": 37, "clock tick": 38, "glass breaking": 39, "helicopter": 40, "chainsaw": 41, "siren": 42, "car horn": 43, "engine": 44, "train": 45, "church bells": 46, "airplane": 47, "fireworks": 48, "hand saw": 49}
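
The two ESC-50 label files above carry the same 50 indices and differ only in whether multi-word labels use underscores or spaces. A minimal sketch of deriving one form from the other and building the reverse index-to-label lookup might look like the following. The helper names `to_spaced` and `invert` are hypothetical, and the dictionary is a four-entry excerpt rather than the full file.

```python
import json

# Four-entry excerpt of the underscore-style mapping (not the full file).
underscore_labels = {"dog": 0, "sea_waves": 11, "crackling_fire": 12, "hand_saw": 49}


def to_spaced(label_to_index):
    """Return a copy of the mapping with underscores in labels replaced by spaces."""
    return {label.replace("_", " "): index for label, index in label_to_index.items()}


def invert(label_to_index):
    """Build the reverse index -> label lookup, rejecting duplicate indices."""
    index_to_label = {}
    for label, index in label_to_index.items():
        if index in index_to_label:
            raise ValueError(f"duplicate index {index}")
        index_to_label[index] = label
    return index_to_label


spaced_labels = to_spaced(underscore_labels)
index_to_label = invert(spaced_labels)
print(json.dumps(spaced_labels))
print(index_to_label[11])
```

The spaced form reads more naturally when labels are used as text, while the underscore form matches the original ESC-50 category names.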

FSD50k_class_labels_indices.json

ADDED

@@ -0,0 +1 @@

{"Whispering": 0, "Gunshot, gunfire": 1, "Pour": 2, "Wind chime": 3, "Livestock, farm animals, working animals": 4, "Crackle": 5, "Waves, surf": 6, "Chicken, rooster": 7, "Chatter": 8, "Keyboard (musical)": 9, "Bark": 10, "Rail transport": 11, "Gong": 12, "Shatter": 13, "Ratchet, pawl": 14, "Clapping": 15, "Mallet percussion": 16, "Whoosh, swoosh, swish": 17, "Speech synthesizer": 18, "Respiratory sounds": 19, "Sliding door": 20, "Boat, Water vehicle": 21, "Boiling": 22, "Human voice": 23, "Drip": 24, "Thunderstorm": 25, "Male singing": 26, "Sneeze": 27, "Hi-hat": 28, "Guitar": 29, "Crying, sobbing": 30, "Speech": 31, "Slam": 32, "Crack": 33, "Yell": 34, "Drawer open or close": 35, "Run": 36, "Cheering": 37, "Splash, splatter": 38, "Tabla": 39, "Sigh": 40, "Packing tape, duct tape": 41, "Raindrop": 42, "Cymbal": 43, "Fill (with liquid)": 44, "Harp": 45, "Squeak": 46, "Zipper (clothing)": 47, "Tearing": 48, "Alarm": 49, "Skateboard": 50, "Wind instrument, woodwind instrument": 51, "Chink, clink": 52, "Wind": 53, "Ringtone": 54, "Microwave oven": 55, "Power tool": 56, "Dishes, pots, and pans": 57, "Musical instrument": 58, "Door": 59, "Domestic sounds, home sounds": 60, "Subway, metro, underground": 61, "Glockenspiel": 62, "Female speech, woman speaking": 63, "Coin (dropping)": 64, "Mechanical fan": 65, "Male speech, man speaking": 66, "Crowd": 67, "Screech": 68, "Animal": 69, "Human group actions": 70, "Telephone": 71, "Tools": 72, "Giggle": 73, "Crushing": 74, "Thump, thud": 75, "Hammer": 76, "Engine": 77, "Cupboard open or close": 78, "Glass": 79, "Writing": 80, "Clock": 81, "Plucked string instrument": 82, "Fowl": 83, "Water tap, faucet": 84, "Knock": 85, "Trickle, dribble": 86, "Rattle": 87, "Conversation": 88, "Accelerating, revving, vroom": 89, "Fixed-wing aircraft, airplane": 90, "Screaming": 91, "Walk, footsteps": 92, "Stream": 93, "Printer": 94, "Traffic noise, roadway noise": 95, "Motorcycle": 96, "Water": 97, "Scratching (performance technique)": 98, 
"Tap": 99, "Percussion": 100, "Chuckle, chortle": 101, "Motor vehicle (road)": 102, "Crow": 103, "Vehicle horn, car horn, honking": 104, "Bird vocalization, bird call, bird song": 105, "Drill": 106, "Race car, auto racing": 107, "Meow": 108, "Bass drum": 109, "Drum kit": 110, "Wild animals": 111, "Crash cymbal": 112, "Cough": 113, "Typing": 114, "Bowed string instrument": 115, "Computer keyboard": 116, "Vehicle": 117, "Train": 118, "Applause": 119, "Bicycle": 120, "Tick": 121, "Drum": 122, "Burping, eructation": 123, "Bicycle bell": 124, "Cowbell": 125, "Accordion": 126, "Toilet flush": 127, "Purr": 128, "Church bell": 129, "Cat": 130, "Insect": 131, "Engine starting": 132, "Chewing, mastication": 133, "Sink (filling or washing)": 134, "Dog": 135, "Bird": 136, "Finger snapping": 137, "Child speech, kid speaking": 138, "Wood": 139, "Music": 140, "Sawing": 141, "Bell": 142, "Fireworks": 143, "Crumpling, crinkling": 144, "Ocean": 145, "Gurgling": 146, "Fart": 147, "Mechanisms": 148, "Acoustic guitar": 149, "Singing": 150, "Boom": 151, "Bus": 152, "Cutlery, silverware": 153, "Liquid": 154, "Explosion": 155, "Gull, seagull": 156, "Thunder": 157, "Siren": 158, "Marimba, xylophone": 159, "Female singing": 160, "Tick-tock": 161, "Frog": 162, "Frying (food)": 163, "Buzz": 164, "Car passing by": 165, "Electric guitar": 166, "Gasp": 167, "Rattle (instrument)": 168, "Piano": 169, "Doorbell": 170, "Chime": 171, "Car": 172, "Fire": 173, "Trumpet": 174, "Truck": 175, "Hands": 176, "Domestic animals, pets": 177, "Chirp, tweet": 178, "Breathing": 179, "Cricket": 180, "Tambourine": 181, "Bass guitar": 182, "Idling": 183, "Scissors": 184, "Rain": 185, "Strum": 186, "Shout": 187, "Keys jangling": 188, "Camera": 189, "Hiss": 190, "Growling": 191, "Snare drum": 192, "Brass instrument": 193, "Bathtub (filling or washing)": 194, "Typewriter": 195, "Aircraft": 196, "Organ": 197, "Laughter": 198, "Harmonica": 199}

UrbanSound8K_class_labels_indices.json

ADDED

@@ -0,0 +1 @@

{"air conditioner": 0, "car horn": 1, "children playing": 2, "dog bark": 3, "drilling": 4, "engine idling": 5, "gun shot": 6, "jackhammer": 7, "siren": 8, "street music": 9}

VGGSound_class_labels_indices.json

ADDED

@@ -0,0 +1 @@

{"people crowd": 0, "playing mandolin": 1, "pumping water": 2, "horse neighing": 3, "airplane flyby": 4, "playing drum kit": 5, "pheasant crowing": 6, "duck quacking": 7, "wood thrush calling": 8, "dog bow-wow": 9, "arc welding": 10, "writing on blackboard with chalk": 11, "forging swords": 12, "swimming": 13, "bee, wasp, etc. buzzing": 14, "child singing": 15, "mouse clicking": 16, "playing trombone": 17, "telephone bell ringing": 18, "beat boxing": 19, "cattle mooing": 20, "lions roaring": 21, "ambulance siren": 22, "gibbon howling": 23, "people sniggering": 24, "playing clarinet": 25, "playing bassoon": 26, "playing bongo": 27, "playing electric guitar": 28, "playing badminton": 29, "bull bellowing": 30, "cat caterwauling": 31, "playing sitar": 32, "whale calling": 33, "snake hissing": 34, "people burping": 35, "francolin calling": 36, "fireworks banging": 37, "driving buses": 38, "people belly laughing": 39, "chicken clucking": 40, "playing double bass": 41, "canary calling": 42, "people battle cry": 43, "male singing": 44, "horse clip-clop": 45, "baby crying": 46, "cow lowing": 47, "reversing beeps": 48, "otter growling": 49, "cheetah chirrup": 50, "people running": 51, "ice cream truck, ice cream van": 52, "playing harpsichord": 53, "heart sounds, heartbeat": 54, "pig oinking": 55, "police radio chatter": 56, "cat hissing": 57, "wind chime": 58, "elk bugling": 59, "lions growling": 60, "fly, housefly buzzing": 61, "ferret dooking": 62, "railroad car, train wagon": 63, "church bell ringing": 64, "cat meowing": 65, "wind rustling leaves": 66, "bouncing on trampoline": 67, "mouse squeaking": 68, "sheep bleating": 69, "people eating crisps": 70, "people sneezing": 71, "playing squash": 72, "footsteps on snow": 73, "people humming": 74, "tap dancing": 75, "snake rattling": 76, "elephant trumpeting": 77, "people booing": 78, "disc scratching": 79, "skidding": 80, "cupboard opening or closing": 81, "playing bagpipes": 82, "basketball bounce": 83, "chinchilla barking": 84, "parrot talking": 85, "woodpecker pecking tree": 86, "fire truck siren": 87, "slot machine": 88, "playing french horn": 89, "air conditioning noise": 90, "people finger snapping": 91, "eagle screaming": 92, "playing harmonica": 93, "playing tympani": 94, "zebra braying": 95, "hedge trimmer running": 96, "playing acoustic guitar": 97, "hair dryer drying": 98, "orchestra": 99, "playing darts": 100, "children shouting": 101, "people slurping": 102, "alligators, crocodiles hissing": 103, "mouse pattering": 104, "people marching": 105, "vehicle horn, car horn, honking": 106, "sea lion barking": 107, "people clapping": 108, "hail": 109, "fire crackling": 110, "bathroom ventilation fan running": 111, "opening or closing car doors": 112, "skiing": 113, "dog barking": 114, "race car, auto racing": 115, "subway, metro, underground": 116, "underwater bubbling": 117, "car passing by": 118, "playing tennis": 119, "warbler chirping": 120, "helicopter": 121, "driving motorcycle": 122, "train wheels squealing": 123, "baby laughter": 124, "driving snowmobile": 125, "bird squawking": 126, "cuckoo bird calling": 127, "people whistling": 128, "shot football": 129, "playing tuning fork": 130, "dog howling": 131, "playing violin, fiddle": 132, "people eating": 133, "baltimore oriole calling": 134, "playing timbales": 135, "door slamming": 136, "people shuffling": 137, "typing on typewriter": 138, "magpie calling": 139, "playing harp": 140, "playing hammond organ": 141, "people eating apple": 142, "mosquito buzzing": 143, "playing oboe": 144, "playing volleyball": 145, "using sewing machines": 146, "electric grinder grinding": 147, "cutting hair with electric trimmers": 148, "splashing water": 149, "people sobbing": 150, "female singing": 151, "wind noise": 152, "car engine knocking": 153, "black capped chickadee calling": 154, "people screaming": 155, "cat growling": 156, "penguins braying": 157, "people coughing": 158, "metronome": 159, "train horning": 160, "goat bleating": 161, "playing tambourine": 162, "fox barking": 163, "airplane": 164, "firing cannon": 165, "thunder": 166, "smoke detector beeping": 167, "playing erhu": 168, "ice cracking": 169, "dog growling": 170, "playing saxophone": 171, "owl hooting": 172, "playing trumpet": 173, "sailing": 174, "waterfall burbling": 175, "machine gun shooting": 176, "baby babbling": 177, "playing synthesizer": 178, "donkey, ass braying": 179, "people cheering": 180, "playing shofar": 181, "playing hockey": 182, "playing banjo": 183, "cricket chirping": 184, "playing snare drum": 185, "ripping paper": 186, "child speech, kid speaking": 187, "crow cawing": 188, "sloshing water": 189, "playing zither": 190, "scuba diving": 191, "playing steelpan": 192, "goose honking": 193, "tapping guitar": 194, "spraying water": 195, "playing bass drum": 196, "printer printing": 197, "playing ukulele": 198, "ocean burbling": 199, "playing didgeridoo": 200, "sharpen knife": 201, "typing on computer keyboard": 202, "playing table tennis": 203, "rope skipping": 204, "playing marimba, xylophone": 205, "playing bugle": 206, "playing guiro": 207, "playing flute": 208, "tornado roaring": 209, "stream burbling": 210, "electric shaver, electric razor shaving": 211, "playing gong": 212, "eating with cutlery": 213, "playing piano": 214, "people giggling": 215, "chicken crowing": 216, "female speech, woman speaking": 217, "golf driving": 218, "frog croaking": 219, "people eating noodle": 220, "mynah bird singing": 221, "playing timpani": 222, "playing congas": 223, "dinosaurs bellowing": 224, "playing bass guitar": 225, "turkey gobbling": 226, "chipmunk chirping": 227, "chopping food": 228, "striking bowling": 229, "missile launch": 230, "squishing water": 231, "civil defense siren": 232, "blowtorch igniting": 233, "tractor digging": 234, "lighting firecrackers": 235, "playing theremin": 236, "train whistling": 237, "people nose blowing": 238, "car engine starting": 239, "lathe spinning": 240, "playing cello": 241, "motorboat, speedboat acceleration": 242, "playing vibraphone": 243, "playing washboard": 244, "playing cornet": 245, "pigeon, dove cooing": 246, "roller coaster running": 247, "opening or closing car electric windows": 248, "foghorn": 249, "coyote howling": 250, "hammering nails": 251, "toilet flushing": 252, "strike lighter": 253, "bird wings flapping": 254, "playing steel guitar, slide guitar": 255, "volcano explosion": 256, "people whispering": 257, "bowling impact": 258, "yodelling": 259, "firing muskets": 260, "raining": 261, "singing bowl": 262, "plastic bottle crushing": 263, "chimpanzee pant-hooting": 264, "playing electronic organ": 265, "chainsawing trees": 266, "dog baying": 267, "lawn mowing": 268, "people babbling": 269, "striking pool": 270, "eletric blender running": 271, "playing tabla": 272, "cap gun shooting": 273, "planing timber": 274, "air horn": 275, "sliding door": 276, "cell phone buzzing": 277, "sea waves": 278, "playing castanets": 279, "singing choir": 280, "people slapping": 281, "barn swallow calling": 282, "people hiccup": 283, "vacuum cleaner cleaning floors": 284, "playing lacrosse": 285, "bird chirping, tweeting": 286, "lip smacking": 287, "chopping wood": 288, "police car (siren)": 289, "running electric fan": 290, "cattle, bovinae cowbell": 291, "people gargling": 292, "opening or closing drawers": 293, "playing djembe": 294, "skateboarding": 295, "cat purring": 296, "rowboat, canoe, kayak rowing": 297, "engine accelerating, revving, vroom": 298, "playing glockenspiel": 299, "popping popcorn": 300, "car engine idling": 301, "alarm clock ringing": 302, "dog whimpering": 303, "playing accordion": 304, "playing cymbal": 305, "male speech, man speaking": 306, "rapping": 307, "people farting": 308}


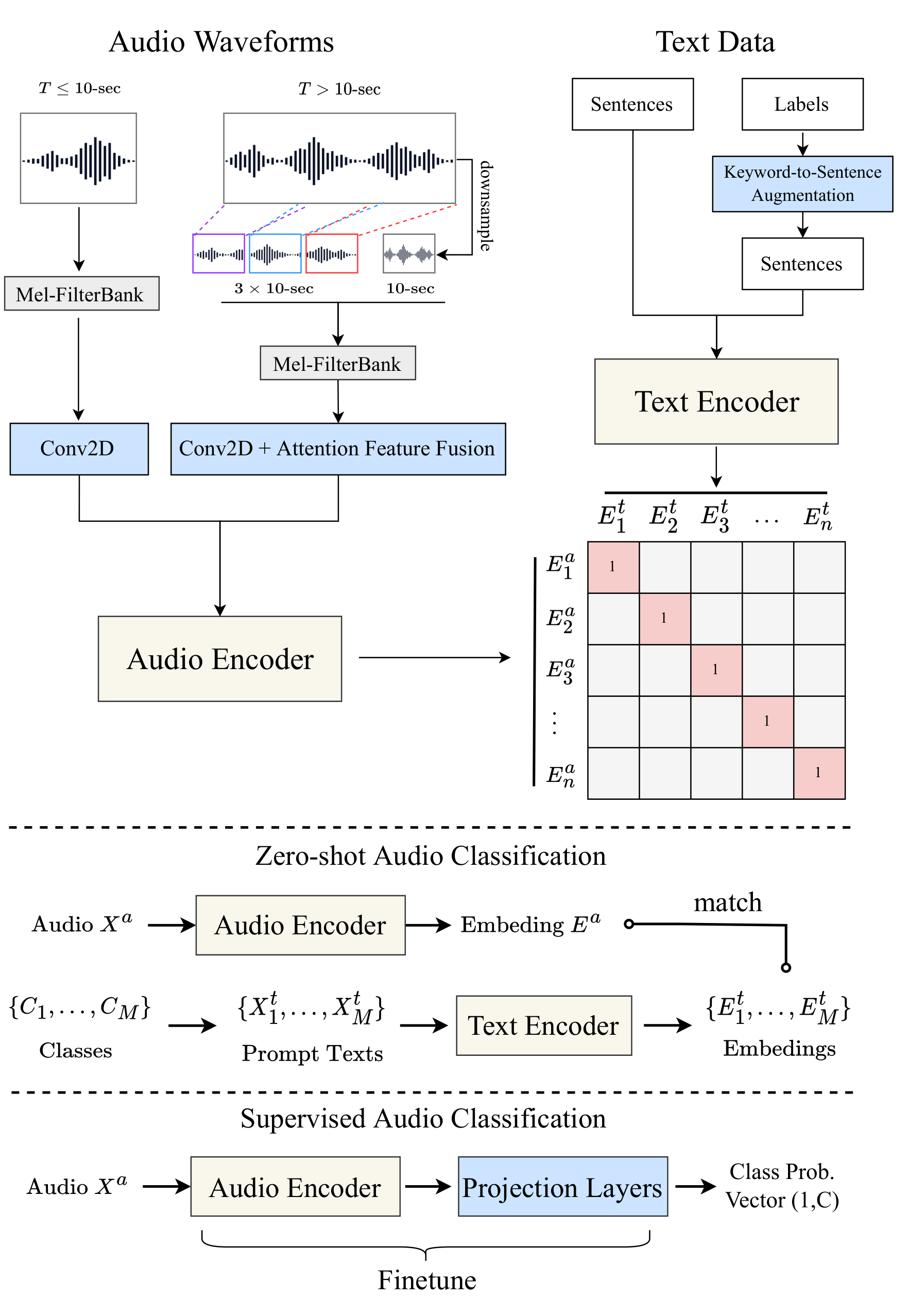

audioclip-arch.png

ADDED

audioset_class_labels_indices.json

ADDED

@@ -0,0 +1 @@

{"Speech": 0, "Male speech, man speaking": 1, "Female speech, woman speaking": 2, "Child speech, kid speaking": 3, "Conversation": 4, "Narration, monologue": 5, "Babbling": 6, "Speech synthesizer": 7, "Shout": 8, "Bellow": 9, "Whoop": 10, "Yell": 11, "Battle cry": 12, "Children shouting": 13, "Screaming": 14, "Whispering": 15, "Laughter": 16, "Baby laughter": 17, "Giggle": 18, "Snicker": 19, "Belly laugh": 20, "Chuckle, chortle": 21, "Crying, sobbing": 22, "Baby cry, infant cry": 23, "Whimper": 24, "Wail, moan": 25, "Sigh": 26, "Singing": 27, "Choir": 28, "Yodeling": 29, "Chant": 30, "Mantra": 31, "Male singing": 32, "Female singing": 33, "Child singing": 34, "Synthetic singing": 35, "Rapping": 36, "Humming": 37, "Groan": 38, "Grunt": 39, "Whistling": 40, "Breathing": 41, "Wheeze": 42, "Snoring": 43, "Gasp": 44, "Pant": 45, "Snort": 46, "Cough": 47, "Throat clearing": 48, "Sneeze": 49, "Sniff": 50, "Run": 51, "Shuffle": 52, "Walk, footsteps": 53, "Chewing, mastication": 54, "Biting": 55, "Gargling": 56, "Stomach rumble": 57, "Burping, eructation": 58, "Hiccup": 59, "Fart": 60, "Hands": 61, "Finger snapping": 62, "Clapping": 63, "Heart sounds, heartbeat": 64, "Heart murmur": 65, "Cheering": 66, "Applause": 67, "Chatter": 68, "Crowd": 69, "Hubbub, speech noise, speech babble": 70, "Children playing": 71, "Animal": 72, "Domestic animals, pets": 73, "Dog": 74, "Bark": 75, "Yip": 76, "Howl": 77, "Bow-wow": 78, "Growling": 79, "Whimper (dog)": 80, "Cat": 81, "Purr": 82, "Meow": 83, "Hiss": 84, "Caterwaul": 85, "Livestock, farm animals, working animals": 86, "Horse": 87, "Clip-clop": 88, "Neigh, whinny": 89, "Cattle, bovinae": 90, "Moo": 91, "Cowbell": 92, "Pig": 93, "Oink": 94, "Goat": 95, "Bleat": 96, "Sheep": 97, "Fowl": 98, "Chicken, rooster": 99, "Cluck": 100, "Crowing, cock-a-doodle-doo": 101, "Turkey": 102, "Gobble": 103, "Duck": 104, "Quack": 105, "Goose": 106, "Honk": 107, "Wild animals": 108, "Roaring cats (lions, tigers)": 109, "Roar": 110, "Bird": 111, "Bird vocalization, bird call, bird song": 112, "Chirp, tweet": 113, "Squawk": 114, "Pigeon, dove": 115, "Coo": 116, "Crow": 117, "Caw": 118, "Owl": 119, "Hoot": 120, "Bird flight, flapping wings": 121, "Canidae, dogs, wolves": 122, "Rodents, rats, mice": 123, "Mouse": 124, "Patter": 125, "Insect": 126, "Cricket": 127, "Mosquito": 128, "Fly, housefly": 129, "Buzz": 130, "Bee, wasp, etc.": 131, "Frog": 132, "Croak": 133, "Snake": 134, "Rattle": 135, "Whale vocalization": 136, "Music": 137, "Musical instrument": 138, "Plucked string instrument": 139, "Guitar": 140, "Electric guitar": 141, "Bass guitar": 142, "Acoustic guitar": 143, "Steel guitar, slide guitar": 144, "Tapping (guitar technique)": 145, "Strum": 146, "Banjo": 147, "Sitar": 148, "Mandolin": 149, "Zither": 150, "Ukulele": 151, "Keyboard (musical)": 152, "Piano": 153, "Electric piano": 154, "Organ": 155, "Electronic organ": 156, "Hammond organ": 157, "Synthesizer": 158, "Sampler": 159, "Harpsichord": 160, "Percussion": 161, "Drum kit": 162, "Drum machine": 163, "Drum": 164, "Snare drum": 165, "Rimshot": 166, "Drum roll": 167, "Bass drum": 168, "Timpani": 169, "Tabla": 170, "Cymbal": 171, "Hi-hat": 172, "Wood block": 173, "Tambourine": 174, "Rattle (instrument)": 175, "Maraca": 176, "Gong": 177, "Tubular bells": 178, "Mallet percussion": 179, "Marimba, xylophone": 180, "Glockenspiel": 181, "Vibraphone": 182, "Steelpan": 183, "Orchestra": 184, "Brass instrument": 185, "French horn": 186, "Trumpet": 187, "Trombone": 188, "Bowed string instrument": 189, "String section": 190, "Violin, fiddle": 191, "Pizzicato": 192, "Cello": 193, "Double bass": 194, "Wind instrument, woodwind instrument": 195, "Flute": 196, "Saxophone": 197, "Clarinet": 198, "Harp": 199, "Bell": 200, "Church bell": 201, "Jingle bell": 202, "Bicycle bell": 203, "Tuning fork": 204, "Chime": 205, "Wind chime": 206, "Change ringing (campanology)": 207, "Harmonica": 208, "Accordion": 209, "Bagpipes": 210, "Didgeridoo": 211, "Shofar": 212, "Theremin": 213, "Singing bowl": 214, "Scratching (performance technique)": 215, "Pop music": 216, "Hip hop music": 217, "Beatboxing": 218, "Rock music": 219, "Heavy metal": 220, "Punk rock": 221, "Grunge": 222, "Progressive rock": 223, "Rock and roll": 224, "Psychedelic rock": 225, "Rhythm and blues": 226, "Soul music": 227, "Reggae": 228, "Country": 229, "Swing music": 230, "Bluegrass": 231, "Funk": 232, "Folk music": 233, "Middle Eastern music": 234, "Jazz": 235, "Disco": 236, "Classical music": 237, "Opera": 238, "Electronic music": 239, "House music": 240, "Techno": 241, "Dubstep": 242, "Drum and bass": 243, "Electronica": 244, "Electronic dance music": 245, "Ambient music": 246, "Trance music": 247, "Music of Latin America": 248, "Salsa music": 249, "Flamenco": 250, "Blues": 251, "Music for children": 252, "New-age music": 253, "Vocal music": 254, "A capella": 255, "Music of Africa": 256, "Afrobeat": 257, "Christian music": 258, "Gospel music": 259, "Music of Asia": 260, "Carnatic music": 261, "Music of Bollywood": 262, "Ska": 263, "Traditional music": 264, "Independent music": 265, "Song": 266, "Background music": 267, "Theme music": 268, "Jingle (music)": 269, "Soundtrack music": 270, "Lullaby": 271, "Video game music": 272, "Christmas music": 273, "Dance music": 274, "Wedding music": 275, "Happy music": 276, "Funny music": 277, "Sad music": 278, "Tender music": 279, "Exciting music": 280, "Angry music": 281, "Scary music": 282, "Wind": 283, "Rustling leaves": 284, "Wind noise (microphone)": 285, "Thunderstorm": 286, "Thunder": 287, "Water": 288, "Rain": 289, "Raindrop": 290, "Rain on surface": 291, "Stream": 292, "Waterfall": 293, "Ocean": 294, "Waves, surf": 295, "Steam": 296, "Gurgling": 297, "Fire": 298, "Crackle": 299, "Vehicle": 300, "Boat, Water vehicle": 301, "Sailboat, sailing ship": 302, "Rowboat, canoe, kayak": 303, "Motorboat, speedboat": 304, "Ship": 305, "Motor vehicle (road)": 306, "Car": 307, "Vehicle horn, car horn, honking": 308, "Toot": 309, "Car alarm": 310, "Power windows, electric windows": 311, "Skidding": 312, "Tire squeal": 313, "Car passing by": 314, "Race car, auto racing": 315, "Truck": 316, "Air brake": 317, "Air horn, truck horn": 318, "Reversing beeps": 319, "Ice cream truck, ice cream van": 320, "Bus": 321, "Emergency vehicle": 322, "Police car (siren)": 323, "Ambulance (siren)": 324, "Fire engine, fire truck (siren)": 325, "Motorcycle": 326, "Traffic noise, roadway noise": 327, "Rail transport": 328, "Train": 329, "Train whistle": 330, "Train horn": 331, "Railroad car, train wagon": 332, "Train wheels squealing": 333, "Subway, metro, underground": 334, "Aircraft": 335, "Aircraft engine": 336, "Jet engine": 337, "Propeller, airscrew": 338, "Helicopter": 339, "Fixed-wing aircraft, airplane": 340, "Bicycle": 341, "Skateboard": 342, "Engine": 343, "Light engine (high frequency)": 344, "Dental drill, dentist's drill": 345, "Lawn mower": 346, "Chainsaw": 347, "Medium engine (mid frequency)": 348, "Heavy engine (low frequency)": 349, "Engine knocking": 350, "Engine starting": 351, "Idling": 352, "Accelerating, revving, vroom": 353, "Door": 354, "Doorbell": 355, "Ding-dong": 356, "Sliding door": 357, "Slam": 358, "Knock": 359, "Tap": 360, "Squeak": 361, "Cupboard open or close": 362, "Drawer open or close": 363, "Dishes, pots, and pans": 364, "Cutlery, silverware": 365, "Chopping (food)": 366, "Frying (food)": 367, "Microwave oven": 368, "Blender": 369, "Water tap, faucet": 370, "Sink (filling or washing)": 371, "Bathtub (filling or washing)": 372, "Hair dryer": 373, "Toilet flush": 374, "Toothbrush": 375, "Electric toothbrush": 376, "Vacuum cleaner": 377, "Zipper (clothing)": 378, "Keys jangling": 379, "Coin (dropping)": 380, "Scissors": 381, "Electric shaver, electric razor": 382, "Shuffling cards": 383, "Typing": 384, "Typewriter": 385, "Computer keyboard": 386, "Writing": 387, "Alarm": 388, "Telephone": 389, "Telephone bell ringing": 390, "Ringtone": 391, "Telephone dialing, DTMF": 392, "Dial tone": 393, "Busy signal": 394, "Alarm clock": 395, "Siren": 396, "Civil defense siren": 397, "Buzzer": 398, "Smoke detector, smoke alarm": 399, "Fire alarm": 400, "Foghorn": 401, "Whistle": 402, "Steam whistle": 403, "Mechanisms": 404, "Ratchet, pawl": 405, "Clock": 406, "Tick": 407, "Tick-tock": 408, "Gears": 409, "Pulleys": 410, "Sewing machine": 411, "Mechanical fan": 412, "Air conditioning": 413, "Cash register": 414, "Printer": 415, "Camera": 416, "Single-lens reflex camera": 417, "Tools": 418, "Hammer": 419, "Jackhammer": 420, "Sawing": 421, "Filing (rasp)": 422, "Sanding": 423, "Power tool": 424, "Drill": 425, "Explosion": 426, "Gunshot, gunfire": 427, "Machine gun": 428, "Fusillade": 429, "Artillery fire": 430, "Cap gun": 431, "Fireworks": 432, "Firecracker": 433, "Burst, pop": 434, "Eruption": 435, "Boom": 436, "Wood": 437, "Chop": 438, "Splinter": 439, "Crack": 440, "Glass": 441, "Chink, clink": 442, "Shatter": 443, "Liquid": 444, "Splash, splatter": 445, "Slosh": 446, "Squish": 447, "Drip": 448, "Pour": 449, "Trickle, dribble": 450, "Gush": 451, "Fill (with liquid)": 452, "Spray": 453, "Pump (liquid)": 454, "Stir": 455, "Boiling": 456, "Sonar": 457, "Arrow": 458, "Whoosh, swoosh, swish": 459, "Thump, thud": 460, "Thunk": 461, "Electronic tuner": 462, "Effects unit": 463, "Chorus effect": 464, "Basketball bounce": 465, "Bang": 466, "Slap, smack": 467, "Whack, thwack": 468, "Smash, crash": 469, "Breaking": 470, "Bouncing": 471, "Whip": 472, "Flap": 473, "Scratch": 474, "Scrape": 475, "Rub": 476, "Roll": 477, "Crushing": 478, "Crumpling, crinkling": 479, "Tearing": 480, "Beep, bleep": 481, "Ping": 482, "Ding": 483, "Clang": 484, "Squeal": 485, "Creak": 486, "Rustle": 487, "Whir": 488, "Clatter": 489, "Sizzle": 490, "Clicking": 491, "Clickety-clack": 492, "Rumble": 493, "Plop": 494, "Jingle, tinkle": 495, "Hum": 496, "Zing": 497, "Boing": 498, "Crunch": 499, "Silence": 500, "Sine wave": 501, "Harmonic": 502, "Chirp tone": 503, "Sound effect": 504, "Pulse": 505, "Inside, small room": 506, "Inside, large room or hall": 507, "Inside, public space": 508, "Outside, urban or manmade": 509, "Outside, rural or natural": 510, "Reverberation": 511, "Echo": 512, "Noise": 513, "Environmental noise": 514, "Static": 515, "Mains hum": 516, "Distortion": 517, "Sidetone": 518, "Cacophony": 519, "White noise": 520, "Pink noise": 521, "Throbbing": 522, "Vibration": 523, "Television": 524, "Radio": 525, "Field recording": 526}
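
AudioSet clips can carry several labels at once, so a mapping like the one above is typically turned into a multi-hot target vector. The sketch below shows one way to do that. `multi_hot` is a hypothetical helper, the toy mapping uses made-up indices rather than the real AudioSet ones, and the code assumes indices run contiguously from 0, as they do in the file above.

```python
def multi_hot(labels, label_to_index):
    """Return a list of 0.0/1.0 values with 1.0 at the index of every label present."""
    target = [0.0] * len(label_to_index)
    for label in labels:
        # A KeyError here means the clip carries a label missing from the mapping.
        target[label_to_index[label]] = 1.0
    return target


# Toy mapping for illustration only; these are not the real AudioSet indices.
toy_label_to_index = {"Speech": 0, "Dog": 1, "Bark": 2, "Music": 3}

target = multi_hot(["Dog", "Bark"], toy_label_to_index)
print(target)
```

Raising on an unknown label, rather than skipping it, makes a mismatch between annotations and the index file visible early.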

bootstrap_pytorch_dist_env.sh

ADDED

@@ -0,0 +1,17 @@

    #!/bin/bash
    # Set env vars for PyTorch
    nodes=($(cat ${LSB_DJOB_HOSTFILE} | sort | uniq | grep -v login | grep -v batch))
    head=${nodes[0]}

    export RANK=$OMPI_COMM_WORLD_RANK
    export LOCAL_RANK=$OMPI_COMM_WORLD_LOCAL_RANK
    export WORLD_SIZE=$OMPI_COMM_WORLD_SIZE
    export MASTER_ADDR=$head
    export MASTER_PORT=29500 # default from torch launcher

    echo "Setting env_var RANK=${RANK}"
    echo "Setting env_var LOCAL_RANK=${LOCAL_RANK}"
    echo "Setting env_var WORLD_SIZE=${WORLD_SIZE}"
    echo "Setting env_var MASTER_ADDR=${MASTER_ADDR}"
    echo "Setting env_var MASTER_PORT=${MASTER_PORT}"
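
The script maps Open MPI's per-process variables onto the names that PyTorch's `env://` initialization reads (`RANK`, `WORLD_SIZE`, `MASTER_ADDR`, `MASTER_PORT`), plus `LOCAL_RANK`, which is commonly used to pick a GPU on each node. As a rough, standard-library-only sketch of how a training entry point might read them back, consider the following. `DistEnv`, `read_dist_env`, and the example values such as `node001` are hypothetical and do not come from this commit.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class DistEnv:
    """Distributed-training settings taken from environment variables."""
    rank: int
    local_rank: int
    world_size: int
    master_addr: str
    master_port: int


def read_dist_env(environ=os.environ):
    """Parse the variables the script exports; raises KeyError if one is missing."""
    return DistEnv(
        rank=int(environ["RANK"]),
        local_rank=int(environ["LOCAL_RANK"]),
        world_size=int(environ["WORLD_SIZE"]),
        master_addr=environ["MASTER_ADDR"],
        master_port=int(environ["MASTER_PORT"]),
    )


# Made-up values standing in for what an MPI launcher would provide.
example_environ = {
    "RANK": "3",
    "LOCAL_RANK": "1",
    "WORLD_SIZE": "8",
    "MASTER_ADDR": "node001",
    "MASTER_PORT": "29500",
}
env = read_dist_env(example_environ)
print(env)
```

Passing the mapping in as an argument keeps the parsing testable without touching the real process environment.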

check_ckpt.py

ADDED

@@ -0,0 +1,802 @@

+import torch
+
+def keys_in_state_dict(ckpt, device='cpu'):
+    if device=="cpu":
+        a = torch.load(ckpt, map_location=torch.device('cpu'))["state_dict"]
+    else:
+        a = torch.load(ckpt)["state_dict"]
+    print("keys_in_state_dict", a.keys())
+
+
+def check_ckpt_diff(ckpt_a, ckpt_b, key_include=None, key_exclude=None, device='cpu', verbose=True):
+    if device=="cpu":
+        a = torch.load(ckpt_a, map_location=torch.device('cpu'))["state_dict"]
+        b = torch.load(ckpt_b, map_location=torch.device('cpu'))["state_dict"]
+    else:
+        a = torch.load(ckpt_a)["state_dict"]
+        b = torch.load(ckpt_b)["state_dict"]
+    a_sum = 0
+    b_sum = 0
+    difference_count = 0
+    for k in a.keys():
+        if key_include is not None and key_include not in k:
+            continue
+        if key_exclude is not None and key_exclude in k:
+            continue
+        if k in b.keys():
+            a_sum += torch.sum(a[k])
+            b_sum += torch.sum(b[k])
+            if verbose:
+                if torch.sum(a[k]) != torch.sum(b[k]):
+                    print(f"key {k} is different")
+                    difference_count += 1
+    print("a_sum: ", a_sum)
+    print("b_sum: ", b_sum)
+    print("diff: ", a_sum - b_sum)
+    if verbose:
+        print("difference_count: ", difference_count)
+    return bool(a_sum - b_sum)
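`check_ckpt_diff` above decides whether a tensor changed by comparing sums, so two tensors with the same sum but different entries would be reported as equal. The following is a minimal sketch of an elementwise variant, not part of the committed file. The name `changed_keys` and its `equal` parameter are hypothetical, and plain Python lists stand in for tensors so the example runs without PyTorch.

```python
from typing import Callable, List, Mapping, Optional, Sequence


def changed_keys(
    a: Mapping[str, Sequence[float]],
    b: Mapping[str, Sequence[float]],
    key_include: Optional[str] = None,
    key_exclude: Optional[str] = None,
    equal: Callable = lambda x, y: list(x) == list(y),
) -> List[str]:
    """Return keys present in both mappings whose values differ elementwise."""
    changed = []
    for k in a:
        # Same substring filters as check_ckpt_diff.
        if key_include is not None and key_include not in k:
            continue
        if key_exclude is not None and key_exclude in k:
            continue
        if k in b and not equal(a[k], b[k]):
            changed.append(k)
    return changed


# Toy stand-ins for two state dicts (hypothetical keys and values).
ckpt_a = {"text_branch.w": [1.0, 2.0], "audio_branch.w": [0.5, 0.5]}
ckpt_b = {"text_branch.w": [2.0, 1.0], "audio_branch.w": [0.5, 0.5]}

# The sums match, so a sum-based check would see no change for this key.
assert sum(ckpt_a["text_branch.w"]) == sum(ckpt_b["text_branch.w"])
print(changed_keys(ckpt_a, ckpt_b, key_include="text_branch"))
```

With real checkpoints, passing `equal=torch.equal` should give the same behaviour on tensors, assuming both state dicts are loaded onto the same device.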

+
+# Transformer no freeze:
+# check_ckpt_diff("/fsx/clap_logs/2022_09_11-19_37_08-model_PANN-14-lr_0.001-b_160-j_4-p_fp32/checkpoints/epoch_10.pt", "/fsx/clap_logs/2022_09_11-19_37_08-model_PANN-14-lr_0.001-b_160-j_4-p_fp32/checkpoints/epoch_100.pt", "text_branch.resblocks")
+
+check_ckpt_diff("/fsx/clap_logs/2022_09_29-23_42_40-model_PANN-14-lr_0.001-b_160-j_4-p_fp32/checkpoints/epoch_1.pt",
+                "/fsx/clap_logs/2022_09_29-23_42_40-model_PANN-14-lr_0.001-b_160-j_4-p_fp32/checkpoints/epoch_2.pt",
+                "text_branch.resblocks")
+
+# key module.text_branch.resblocks.0.attn.in_proj_weight is different
+# key module.text_branch.resblocks.0.attn.in_proj_bias is different
+# key module.text_branch.resblocks.0.attn.out_proj.weight is different
+# key module.text_branch.resblocks.0.attn.out_proj.bias is different
+# key module.text_branch.resblocks.0.ln_1.weight is different
+# key module.text_branch.resblocks.0.ln_1.bias is different
+# key module.text_branch.resblocks.0.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.0.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.0.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.0.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.0.ln_2.weight is different
+# key module.text_branch.resblocks.0.ln_2.bias is different
+# key module.text_branch.resblocks.1.attn.in_proj_weight is different
+# key module.text_branch.resblocks.1.attn.in_proj_bias is different
+# key module.text_branch.resblocks.1.attn.out_proj.weight is different
+# key module.text_branch.resblocks.1.attn.out_proj.bias is different
+# key module.text_branch.resblocks.1.ln_1.weight is different
+# key module.text_branch.resblocks.1.ln_1.bias is different
+# key module.text_branch.resblocks.1.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.1.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.1.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.1.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.1.ln_2.weight is different
+# key module.text_branch.resblocks.1.ln_2.bias is different
+# key module.text_branch.resblocks.2.attn.in_proj_weight is different
+# key module.text_branch.resblocks.2.attn.in_proj_bias is different
+# key module.text_branch.resblocks.2.attn.out_proj.weight is different
+# key module.text_branch.resblocks.2.attn.out_proj.bias is different
+# key module.text_branch.resblocks.2.ln_1.weight is different
+# key module.text_branch.resblocks.2.ln_1.bias is different
+# key module.text_branch.resblocks.2.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.2.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.2.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.2.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.2.ln_2.weight is different
+# key module.text_branch.resblocks.2.ln_2.bias is different
+# key module.text_branch.resblocks.3.attn.in_proj_weight is different
+# key module.text_branch.resblocks.3.attn.in_proj_bias is different
+# key module.text_branch.resblocks.3.attn.out_proj.weight is different
+# key module.text_branch.resblocks.3.attn.out_proj.bias is different
+# key module.text_branch.resblocks.3.ln_1.weight is different
+# key module.text_branch.resblocks.3.ln_1.bias is different
+# key module.text_branch.resblocks.3.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.3.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.3.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.3.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.3.ln_2.weight is different
+# key module.text_branch.resblocks.3.ln_2.bias is different
+# key module.text_branch.resblocks.4.attn.in_proj_weight is different
+# key module.text_branch.resblocks.4.attn.in_proj_bias is different
+# key module.text_branch.resblocks.4.attn.out_proj.weight is different
+# key module.text_branch.resblocks.4.attn.out_proj.bias is different
+# key module.text_branch.resblocks.4.ln_1.weight is different
+# key module.text_branch.resblocks.4.ln_1.bias is different
+# key module.text_branch.resblocks.4.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.4.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.4.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.4.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.4.ln_2.weight is different
+# key module.text_branch.resblocks.4.ln_2.bias is different
+# key module.text_branch.resblocks.5.attn.in_proj_weight is different
+# key module.text_branch.resblocks.5.attn.in_proj_bias is different
+# key module.text_branch.resblocks.5.attn.out_proj.weight is different
+# key module.text_branch.resblocks.5.attn.out_proj.bias is different
+# key module.text_branch.resblocks.5.ln_1.weight is different
+# key module.text_branch.resblocks.5.ln_1.bias is different
+# key module.text_branch.resblocks.5.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.5.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.5.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.5.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.5.ln_2.weight is different
+# key module.text_branch.resblocks.5.ln_2.bias is different
+# key module.text_branch.resblocks.6.attn.in_proj_weight is different
+# key module.text_branch.resblocks.6.attn.in_proj_bias is different
+# key module.text_branch.resblocks.6.attn.out_proj.weight is different
+# key module.text_branch.resblocks.6.attn.out_proj.bias is different
+# key module.text_branch.resblocks.6.ln_1.weight is different
+# key module.text_branch.resblocks.6.ln_1.bias is different
+# key module.text_branch.resblocks.6.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.6.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.6.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.6.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.6.ln_2.weight is different
+# key module.text_branch.resblocks.6.ln_2.bias is different
+# key module.text_branch.resblocks.7.attn.in_proj_weight is different
+# key module.text_branch.resblocks.7.attn.in_proj_bias is different
+# key module.text_branch.resblocks.7.attn.out_proj.weight is different
+# key module.text_branch.resblocks.7.attn.out_proj.bias is different
+# key module.text_branch.resblocks.7.ln_1.weight is different
+# key module.text_branch.resblocks.7.ln_1.bias is different
+# key module.text_branch.resblocks.7.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.7.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.7.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.7.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.7.ln_2.weight is different
+# key module.text_branch.resblocks.7.ln_2.bias is different
+# key module.text_branch.resblocks.8.attn.in_proj_weight is different
+# key module.text_branch.resblocks.8.attn.in_proj_bias is different
+# key module.text_branch.resblocks.8.attn.out_proj.weight is different
+# key module.text_branch.resblocks.8.attn.out_proj.bias is different
+# key module.text_branch.resblocks.8.ln_1.weight is different
+# key module.text_branch.resblocks.8.ln_1.bias is different
+# key module.text_branch.resblocks.8.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.8.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.8.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.8.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.8.ln_2.weight is different
+# key module.text_branch.resblocks.8.ln_2.bias is different
+# key module.text_branch.resblocks.9.attn.in_proj_weight is different
+# key module.text_branch.resblocks.9.attn.in_proj_bias is different
+# key module.text_branch.resblocks.9.attn.out_proj.weight is different
+# key module.text_branch.resblocks.9.attn.out_proj.bias is different
+# key module.text_branch.resblocks.9.ln_1.weight is different
+# key module.text_branch.resblocks.9.ln_1.bias is different
+# key module.text_branch.resblocks.9.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.9.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.9.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.9.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.9.ln_2.weight is different
+# key module.text_branch.resblocks.9.ln_2.bias is different
+# key module.text_branch.resblocks.10.attn.in_proj_weight is different
+# key module.text_branch.resblocks.10.attn.in_proj_bias is different
+# key module.text_branch.resblocks.10.attn.out_proj.weight is different
+# key module.text_branch.resblocks.10.attn.out_proj.bias is different
+# key module.text_branch.resblocks.10.ln_1.weight is different
+# key module.text_branch.resblocks.10.ln_1.bias is different
+# key module.text_branch.resblocks.10.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.10.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.10.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.10.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.10.ln_2.weight is different
+# key module.text_branch.resblocks.10.ln_2.bias is different
+# key module.text_branch.resblocks.11.attn.in_proj_weight is different
+# key module.text_branch.resblocks.11.attn.in_proj_bias is different
+# key module.text_branch.resblocks.11.attn.out_proj.weight is different
+# key module.text_branch.resblocks.11.attn.out_proj.bias is different
+# key module.text_branch.resblocks.11.ln_1.weight is different
+# key module.text_branch.resblocks.11.ln_1.bias is different
+# key module.text_branch.resblocks.11.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.11.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.11.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.11.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.11.ln_2.weight is different
+# key module.text_branch.resblocks.11.ln_2.bias is different
+# a_sum: tensor(12113.6445)
+# b_sum: tensor(9883.4424)
+# diff: tensor(2230.2021)
+# True
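The per-key log above is long, and a per-block tally can be easier to scan. The following is a minimal sketch that counts "is different" lines per `resblocks` index, assuming the log has been captured as text. The helper `count_changed_per_block` and the regular expression are illustrative and are not part of `check_ckpt.py`.

```python
import re
from collections import Counter

# Matches lines such as
# "# key module.text_branch.resblocks.0.attn.in_proj_weight is different"
LINE_RE = re.compile(r"key \S*resblocks\.(\d+)\.(\S+) is different")


def count_changed_per_block(log_text: str) -> Counter:
    """Count 'is different' lines per resblock index."""
    counts = Counter()
    for line in log_text.splitlines():
        match = LINE_RE.search(line)
        if match:
            counts[int(match.group(1))] += 1
    return counts


# A short excerpt in the same shape as the log above.
sample_log = """\
# key module.text_branch.resblocks.0.attn.in_proj_weight is different
# key module.text_branch.resblocks.0.ln_1.bias is different
# key module.text_branch.resblocks.1.mlp.c_fc.weight is different
# a_sum: tensor(12113.6445)
"""

for block, n in sorted(count_changed_per_block(sample_log).items()):
    print(f"resblock {block}: {n} changed tensors")
```

Lines that do not match the pattern, such as the `a_sum` summary, are ignored.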

+
+
+# Transformer freeze:
+# check_ckpt_diff("/fsx/clap_logs/2022_09_16-18_55_10-model_PANN-14-lr_0.001-b_160-j_4-p_fp32/checkpoints/epoch_10.pt", "/fsx/clap_logs/2022_09_16-18_55_10-model_PANN-14-lr_0.001-b_160-j_4-p_fp32/checkpoints/epoch_100.pt", "text_branch.resblocks")
+
+# key module.text_branch.resblocks.0.attn.in_proj_weight is different
+# key module.text_branch.resblocks.0.attn.in_proj_bias is different
+# key module.text_branch.resblocks.0.attn.out_proj.weight is different
+# key module.text_branch.resblocks.0.attn.out_proj.bias is different
+# key module.text_branch.resblocks.0.ln_1.weight is different
+# key module.text_branch.resblocks.0.ln_1.bias is different
+# key module.text_branch.resblocks.0.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.0.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.0.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.0.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.0.ln_2.weight is different
+# key module.text_branch.resblocks.0.ln_2.bias is different
+# key module.text_branch.resblocks.1.attn.in_proj_weight is different
+# key module.text_branch.resblocks.1.attn.in_proj_bias is different
+# key module.text_branch.resblocks.1.attn.out_proj.weight is different
+# key module.text_branch.resblocks.1.attn.out_proj.bias is different
+# key module.text_branch.resblocks.1.ln_1.weight is different
+# key module.text_branch.resblocks.1.ln_1.bias is different
+# key module.text_branch.resblocks.1.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.1.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.1.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.1.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.1.ln_2.weight is different
+# key module.text_branch.resblocks.1.ln_2.bias is different
+# key module.text_branch.resblocks.2.attn.in_proj_weight is different
+# key module.text_branch.resblocks.2.attn.in_proj_bias is different
+# key module.text_branch.resblocks.2.attn.out_proj.weight is different
+# key module.text_branch.resblocks.2.attn.out_proj.bias is different
+# key module.text_branch.resblocks.2.ln_1.weight is different
+# key module.text_branch.resblocks.2.ln_1.bias is different
+# key module.text_branch.resblocks.2.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.2.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.2.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.2.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.2.ln_2.weight is different
+# key module.text_branch.resblocks.2.ln_2.bias is different
+# key module.text_branch.resblocks.3.attn.in_proj_weight is different
+# key module.text_branch.resblocks.3.attn.in_proj_bias is different
+# key module.text_branch.resblocks.3.attn.out_proj.weight is different
+# key module.text_branch.resblocks.3.attn.out_proj.bias is different
+# key module.text_branch.resblocks.3.ln_1.weight is different
+# key module.text_branch.resblocks.3.ln_1.bias is different
+# key module.text_branch.resblocks.3.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.3.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.3.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.3.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.3.ln_2.weight is different
+# key module.text_branch.resblocks.3.ln_2.bias is different
+# key module.text_branch.resblocks.4.attn.in_proj_weight is different
+# key module.text_branch.resblocks.4.attn.in_proj_bias is different
+# key module.text_branch.resblocks.4.attn.out_proj.weight is different
+# key module.text_branch.resblocks.4.attn.out_proj.bias is different
+# key module.text_branch.resblocks.4.ln_1.weight is different
+# key module.text_branch.resblocks.4.ln_1.bias is different
+# key module.text_branch.resblocks.4.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.4.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.4.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.4.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.4.ln_2.weight is different
+# key module.text_branch.resblocks.4.ln_2.bias is different
+# key module.text_branch.resblocks.5.attn.in_proj_weight is different
+# key module.text_branch.resblocks.5.attn.in_proj_bias is different
+# key module.text_branch.resblocks.5.attn.out_proj.weight is different
+# key module.text_branch.resblocks.5.attn.out_proj.bias is different
+# key module.text_branch.resblocks.5.ln_1.weight is different
+# key module.text_branch.resblocks.5.ln_1.bias is different
+# key module.text_branch.resblocks.5.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.5.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.5.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.5.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.5.ln_2.weight is different
+# key module.text_branch.resblocks.5.ln_2.bias is different
+# key module.text_branch.resblocks.6.attn.in_proj_weight is different
+# key module.text_branch.resblocks.6.attn.in_proj_bias is different
+# key module.text_branch.resblocks.6.attn.out_proj.weight is different
+# key module.text_branch.resblocks.6.attn.out_proj.bias is different
+# key module.text_branch.resblocks.6.ln_1.weight is different
+# key module.text_branch.resblocks.6.ln_1.bias is different
+# key module.text_branch.resblocks.6.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.6.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.6.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.6.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.6.ln_2.weight is different
+# key module.text_branch.resblocks.6.ln_2.bias is different
+# key module.text_branch.resblocks.7.attn.in_proj_weight is different
+# key module.text_branch.resblocks.7.attn.in_proj_bias is different
+# key module.text_branch.resblocks.7.attn.out_proj.weight is different
+# key module.text_branch.resblocks.7.attn.out_proj.bias is different
+# key module.text_branch.resblocks.7.ln_1.weight is different
+# key module.text_branch.resblocks.7.ln_1.bias is different
+# key module.text_branch.resblocks.7.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.7.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.7.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.7.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.7.ln_2.weight is different
+# key module.text_branch.resblocks.7.ln_2.bias is different
+# key module.text_branch.resblocks.8.attn.in_proj_weight is different
+# key module.text_branch.resblocks.8.attn.in_proj_bias is different
+# key module.text_branch.resblocks.8.attn.out_proj.weight is different
+# key module.text_branch.resblocks.8.attn.out_proj.bias is different
+# key module.text_branch.resblocks.8.ln_1.weight is different
+# key module.text_branch.resblocks.8.ln_1.bias is different
+# key module.text_branch.resblocks.8.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.8.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.8.mlp.c_proj.weight is different
+# key module.text_branch.resblocks.8.mlp.c_proj.bias is different
+# key module.text_branch.resblocks.8.ln_2.weight is different
+# key module.text_branch.resblocks.8.ln_2.bias is different
+# key module.text_branch.resblocks.9.attn.in_proj_weight is different
+# key module.text_branch.resblocks.9.attn.in_proj_bias is different
+# key module.text_branch.resblocks.9.attn.out_proj.weight is different
+# key module.text_branch.resblocks.9.attn.out_proj.bias is different
+# key module.text_branch.resblocks.9.ln_1.weight is different
+# key module.text_branch.resblocks.9.ln_1.bias is different
+# key module.text_branch.resblocks.9.mlp.c_fc.weight is different
+# key module.text_branch.resblocks.9.mlp.c_fc.bias is different
+# key module.text_branch.resblocks.9.mlp.c_proj.weight is different

|

| 317 |

+