VCAM-27 local TTS model loading implement

- TTS_model/config.yaml +400 -0

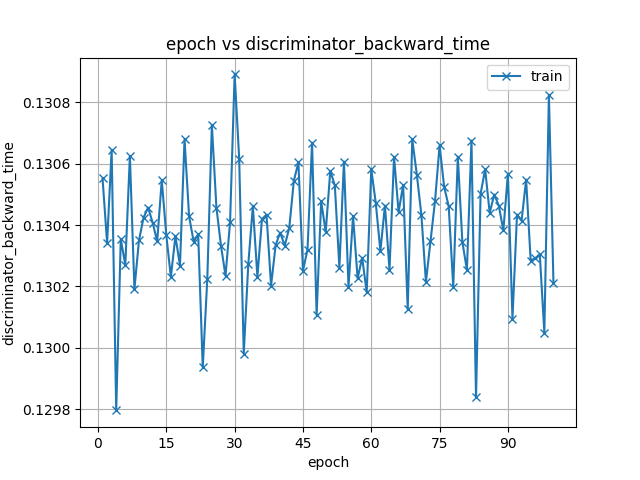

- TTS_model/images/discriminator_backward_time.png +0 -0

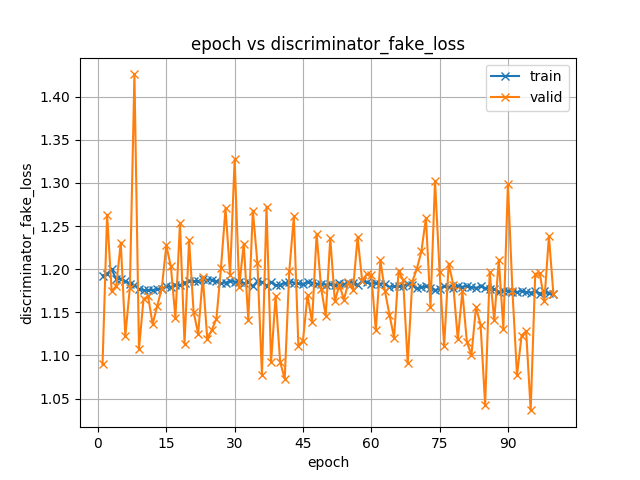

- TTS_model/images/discriminator_fake_loss.png +0 -0

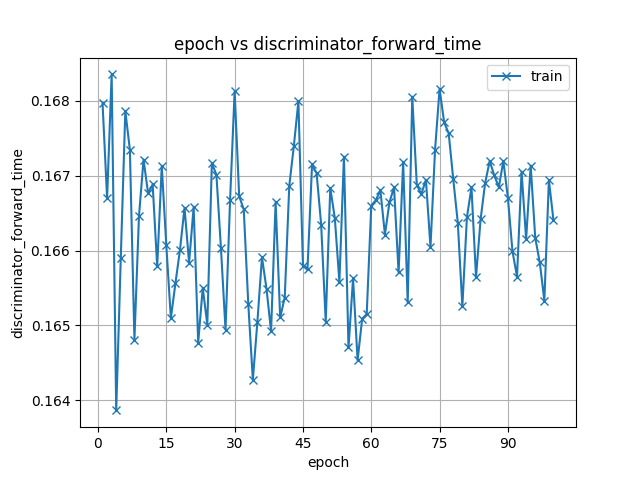

- TTS_model/images/discriminator_forward_time.png +0 -0

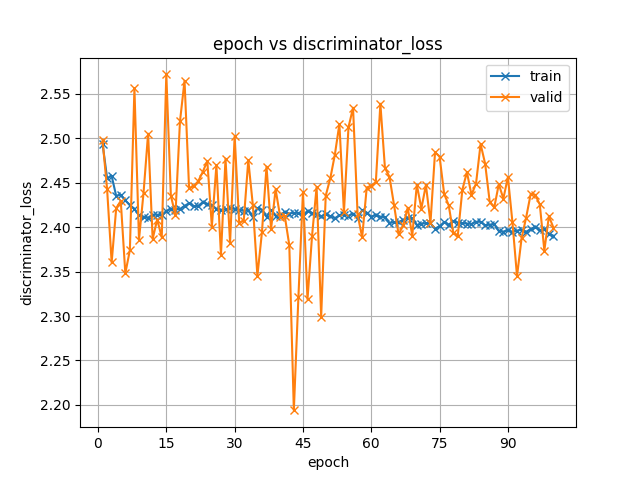

- TTS_model/images/discriminator_loss.png +0 -0

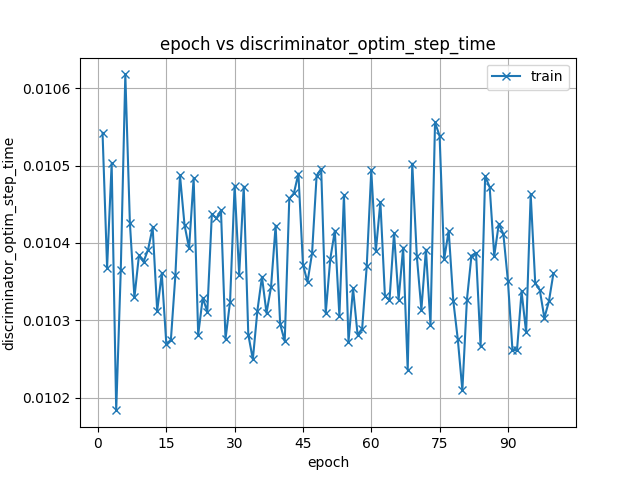

- TTS_model/images/discriminator_optim_step_time.png +0 -0

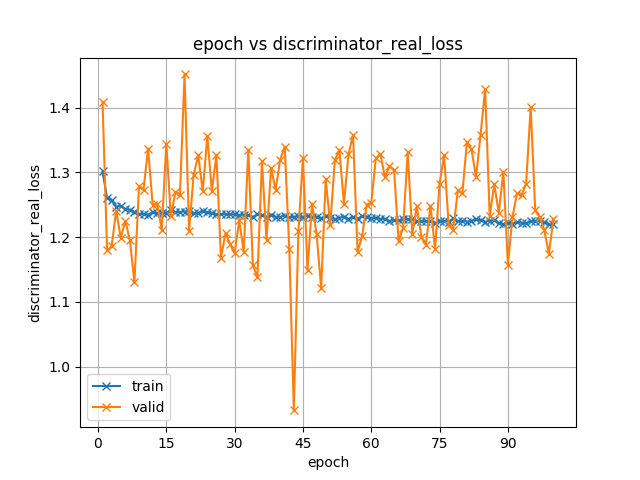

- TTS_model/images/discriminator_real_loss.png +0 -0

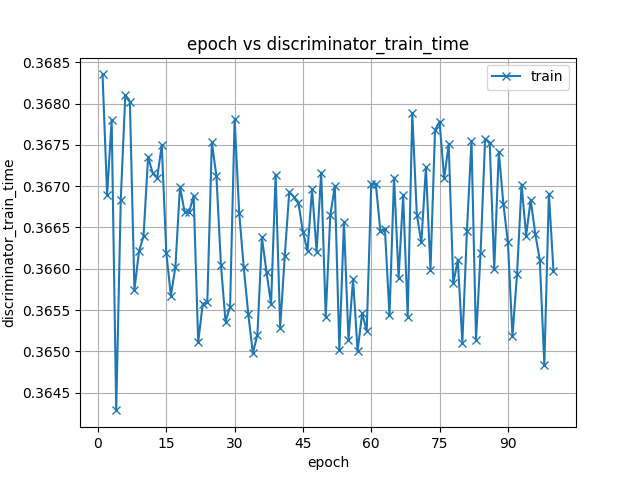

- TTS_model/images/discriminator_train_time.png +0 -0

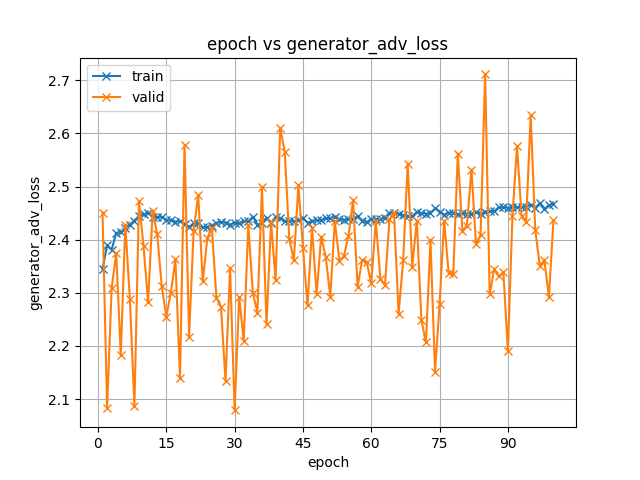

- TTS_model/images/generator_adv_loss.png +0 -0

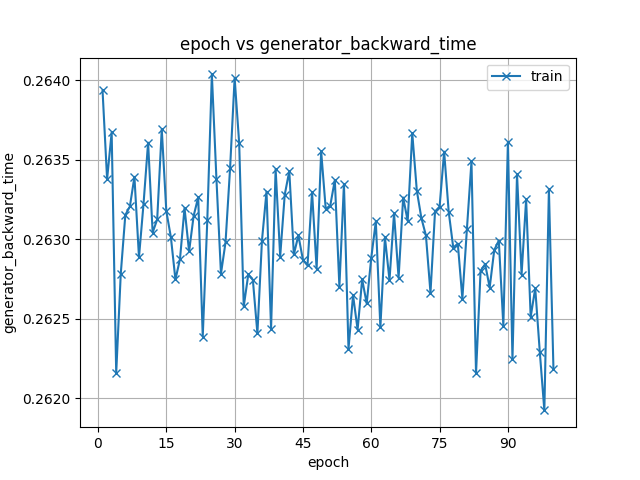

- TTS_model/images/generator_backward_time.png +0 -0

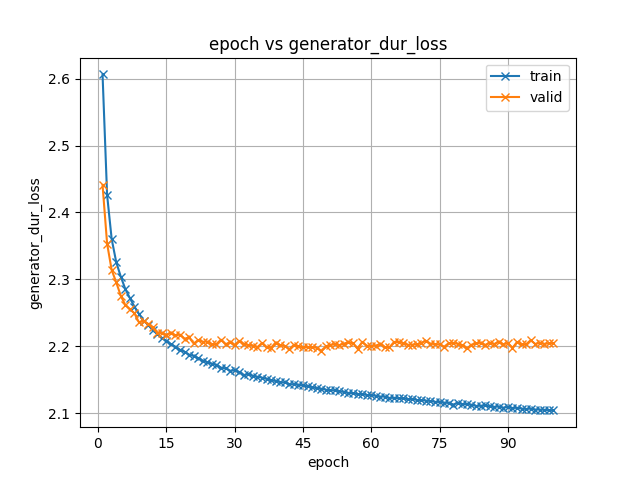

- TTS_model/images/generator_dur_loss.png +0 -0

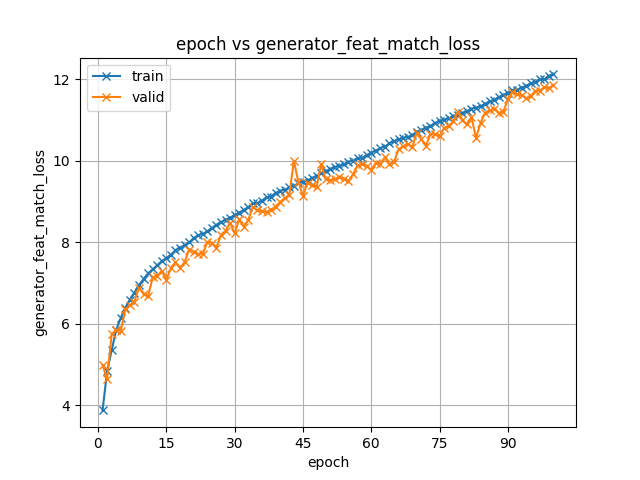

- TTS_model/images/generator_feat_match_loss.png +0 -0

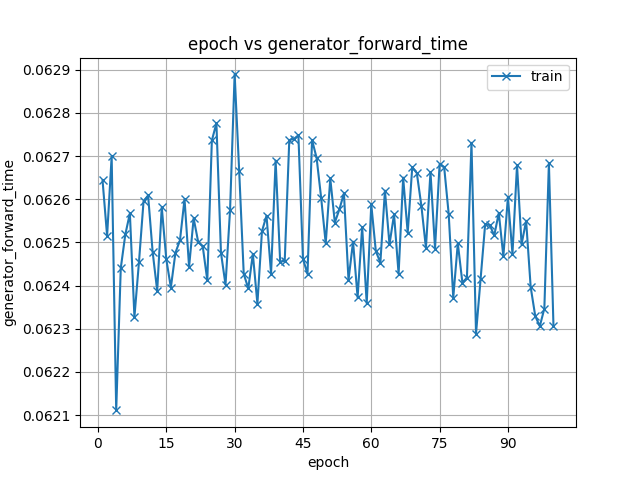

- TTS_model/images/generator_forward_time.png +0 -0

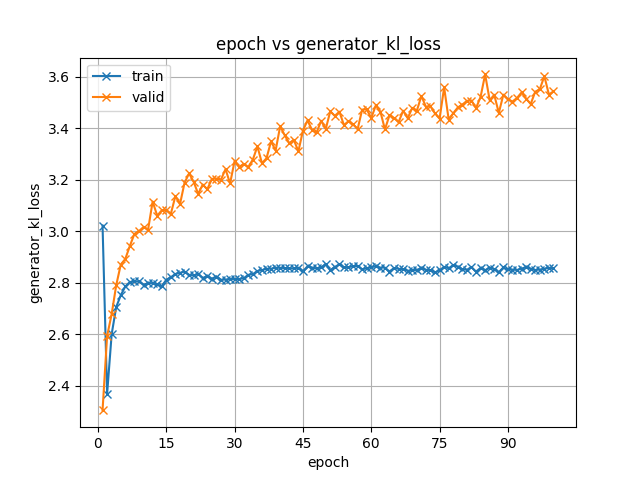

- TTS_model/images/generator_kl_loss.png +0 -0

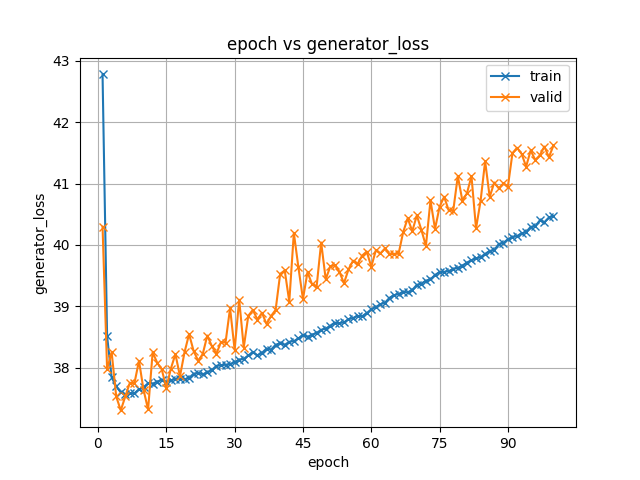

- TTS_model/images/generator_loss.png +0 -0

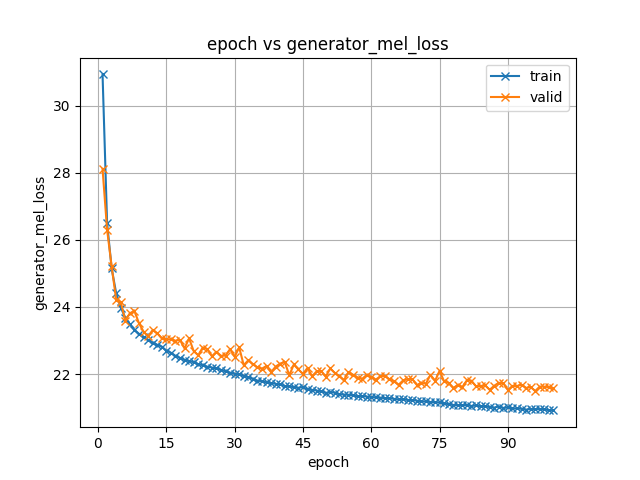

- TTS_model/images/generator_mel_loss.png +0 -0

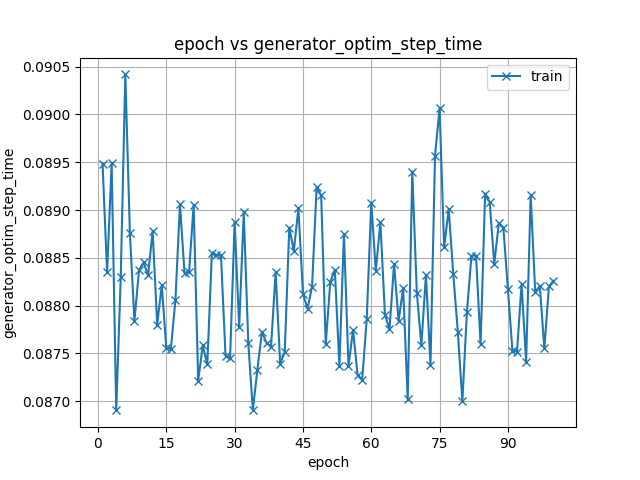

- TTS_model/images/generator_optim_step_time.png +0 -0

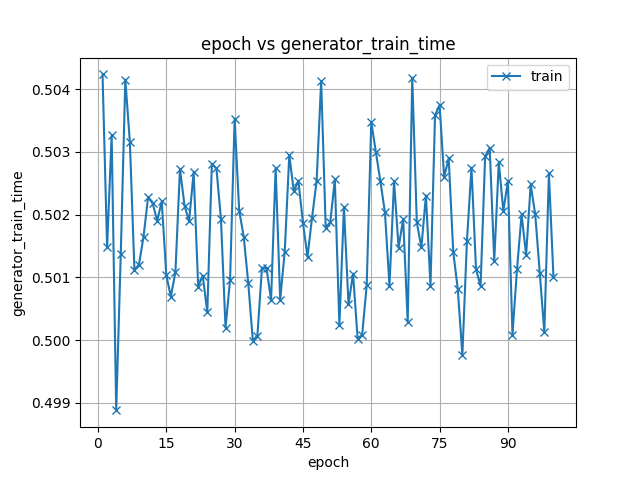

- TTS_model/images/generator_train_time.png +0 -0

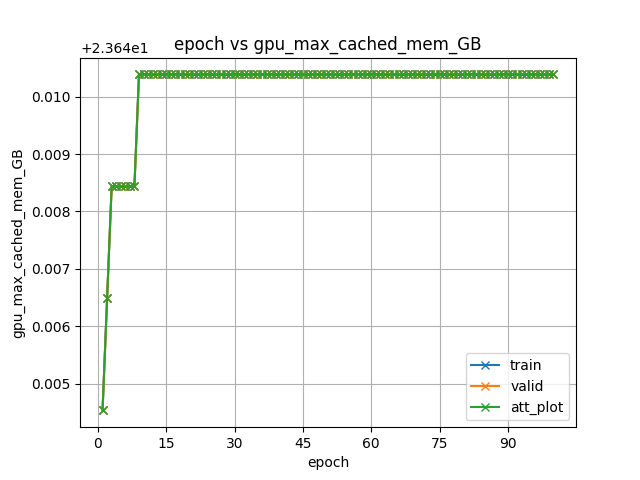

- TTS_model/images/gpu_max_cached_mem_GB.png +0 -0

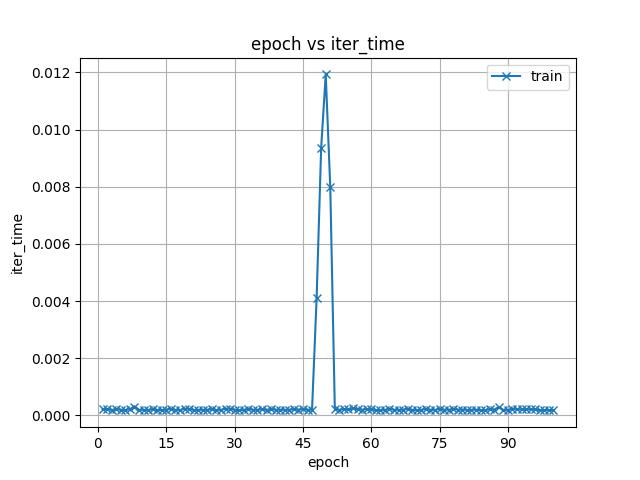

- TTS_model/images/iter_time.png +0 -0

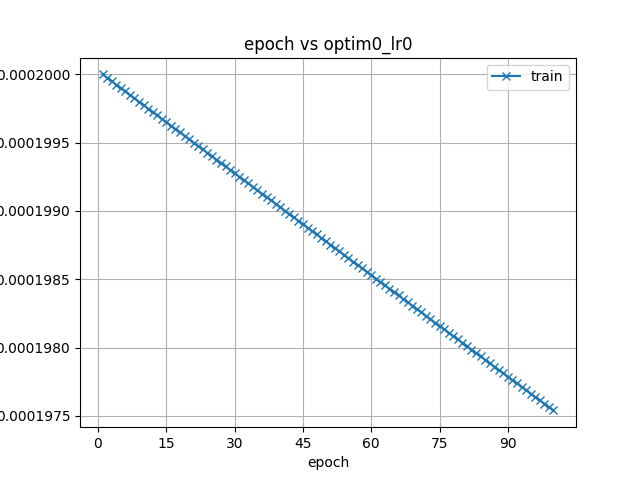

- TTS_model/images/optim0_lr0.png +0 -0

- TTS_model/images/optim1_lr0.png +0 -0

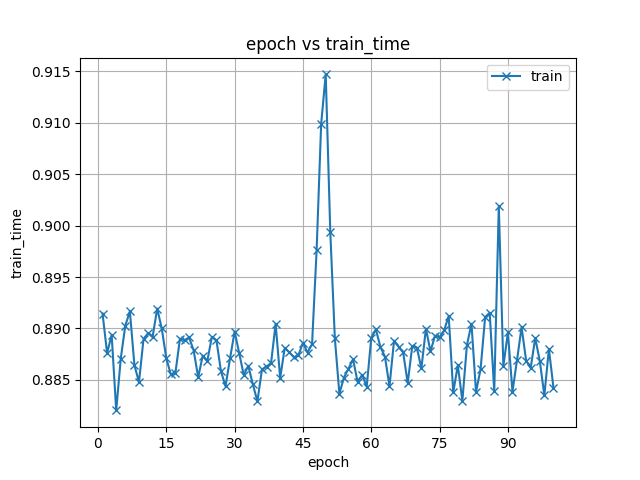

- TTS_model/images/train_time.png +0 -0

- TTS_model/train.total_count.ave_10best.pth +3 -0

- app.py +5 -15

TTS_model/config.yaml

ADDED

@@ -0,0 +1,400 @@

+accum_grad: 1
+allow_variable_data_keys: false
+batch_bins: 5000000
+batch_size: 20
+batch_type: numel
+best_model_criterion:
+-   - train
+    - total_count
+    - max
+bpemodel: null
+chunk_length: 500
+chunk_shift_ratio: 0.5
+cleaner: tacotron
+collect_stats: false
+config: ./conf/tuning/train_xvector_vits.yaml
+cudnn_benchmark: false
+cudnn_deterministic: false
+cudnn_enabled: true
+detect_anomaly: false
+dist_backend: nccl
+dist_init_method: env://
+dist_launcher: null
+dist_master_addr: localhost
+dist_master_port: 60056
+dist_rank: 0
+dist_world_size: 4
+distributed: true
+dry_run: false
+early_stopping_criterion:
+- valid
+- loss
+- min
+energy_extract: null
+energy_extract_conf: {}
+energy_normalize: null
+energy_normalize_conf: {}
+feats_extract: linear_spectrogram
+feats_extract_conf:
+    hop_length: 256
+    n_fft: 1024
+    win_length: null
+fold_length:
+- 150
+- 204800
+freeze_param: []
+g2p: g2p_en_no_space
+generator_first: false
+grad_clip: -1
+grad_clip_type: 2.0
+grad_noise: false
+ignore_init_mismatch: false
+init_param: []
+iterator_type: sequence
+keep_nbest_models: 10
+local_rank: 0
+log_interval: 50
+log_level: INFO
+max_cache_fd: 32
+max_cache_size: 0.0
+max_epoch: 100
+model_conf: {}
+multiple_iterator: false
+multiprocessing_distributed: true
+ngpu: 1
+no_forward_run: false
+non_linguistic_symbols: null
+normalize: null
+normalize_conf: {}
+num_att_plot: 3
+num_cache_chunks: 1024
+num_iters_per_epoch: 10000
+num_workers: 4
+odim: null
+optim: adamw
+optim2: adamw
+optim2_conf:
+    betas:
+    - 0.8
+    - 0.99
+    eps: 1.0e-09
+    lr: 0.0002
+    weight_decay: 0.0
+optim_conf:
+    betas:
+    - 0.8
+    - 0.99
+    eps: 1.0e-09
+    lr: 0.0002
+    weight_decay: 0.0
+output_dir: exp/tts_train_xvector_vits_raw_phn_tacotron_g2p_en_no_space
+patience: null
+pitch_extract: null
+pitch_extract_conf: {}
+pitch_normalize: null
+pitch_normalize_conf: {}
+pretrain_path: null
+print_config: false
+required:
+- output_dir
+- token_list
+resume: true
+scheduler: exponentiallr
+scheduler2: exponentiallr
+scheduler2_conf:
+    gamma: 0.999875
+scheduler_conf:
+    gamma: 0.999875
+seed: 777
+sharded_ddp: false
+sort_batch: descending
+sort_in_batch: descending
+token_list:
+- <blank>
+- <unk>
+- AH0
+- T
+- N
+- D
+- S
+- R
+- L
+- IH1
+- DH
+- M
+- K
+- Z
+- EH1
+- AE1
+- IH0
+- AH1
+- W
+- ','
+- HH
+- ER0
+- P
+- IY1
+- V
+- F
+- B
+- UW1
+- AA1
+- AY1
+- AO1
+- .
+- EY1
+- IY0
+- OW1
+- NG
+- G
+- SH
+- Y
+- AW1
+- CH
+- ER1
+- UH1
+- TH
+- JH
+- ''''
+- '?'
+- OW0
+- EH2
+- '!'
+- IH2
+- OY1
+- EY2
+- AY2
+- EH0
+- UW0
+- AA2
+- AE2
+- OW2
+- AO2
+- AE0
+- AH2
+- ZH
+- AA0
+- UW2
+- IY2
+- AY0
+- AO0
+- AW2
+- EY0
+- UH2
+- ER2
+- AW0
+- '...'
+- UH0
+- OY2
+- . . .
+- OY0
+- . . . .
+- ..
+- . ...
+- . .
+- . . . . .
+- .. ..
+- '... .'
+- <sos/eos>
+token_type: phn
+train_data_path_and_name_and_type:
+-   - dump/22k/raw/train-clean-460/text
+    - text
+    - text
+-   - dump/22k/raw/train-clean-460/wav.scp
+    - speech
+    - sound
+-   - dump/22k/xvector/train-clean-460/xvector.scp
+    - spembs
+    - kaldi_ark
+train_dtype: float32
+train_shape_file:
+- exp/tts_stats_raw_linear_spectrogram_phn_tacotron_g2p_en_no_space/train/text_shape.phn
+- exp/tts_stats_raw_linear_spectrogram_phn_tacotron_g2p_en_no_space/train/speech_shape
+tts: vits
+tts_conf:
+    cache_generator_outputs: true
+    discriminator_adv_loss_params:
+        average_by_discriminators: false
+        loss_type: mse
+    discriminator_params:
+        follow_official_norm: false
+        period_discriminator_params:
+            bias: true
+            channels: 32
+            downsample_scales:
+            - 3
+            - 3
+            - 3
+            - 3
+            - 1
+            in_channels: 1
+            kernel_sizes:
+            - 5
+            - 3
+            max_downsample_channels: 1024
+            nonlinear_activation: LeakyReLU
+            nonlinear_activation_params:
+                negative_slope: 0.1
+            out_channels: 1
+            use_spectral_norm: false
+            use_weight_norm: true
+        periods:
+        - 2
+        - 3
+        - 5
+        - 7
+        - 11
+        scale_discriminator_params:
+            bias: true
+            channels: 128
+            downsample_scales:
+            - 2
+            - 2
+            - 4
+            - 4
+            - 1
+            in_channels: 1
+            kernel_sizes:
+            - 15
+            - 41
+            - 5
+            - 3
+            max_downsample_channels: 1024
+            max_groups: 16
+            nonlinear_activation: LeakyReLU
+            nonlinear_activation_params:
+                negative_slope: 0.1
+            out_channels: 1
+            use_spectral_norm: false
+            use_weight_norm: true
+        scale_downsample_pooling: AvgPool1d
+        scale_downsample_pooling_params:
+            kernel_size: 4
+            padding: 2
+            stride: 2
+        scales: 1
+    discriminator_type: hifigan_multi_scale_multi_period_discriminator
+    feat_match_loss_params:
+        average_by_discriminators: false
+        average_by_layers: false
+        include_final_outputs: true
+    generator_adv_loss_params:
+        average_by_discriminators: false
+        loss_type: mse
+    generator_params:
+        aux_channels: 513
+        decoder_channels: 512
+        decoder_kernel_size: 7
+        decoder_resblock_dilations:
+        -   - 1
+            - 3
+            - 5
+        -   - 1
+            - 3
+            - 5
+        -   - 1
+            - 3
+            - 5
+        decoder_resblock_kernel_sizes:
+        - 3
+        - 7
+        - 11
+        decoder_upsample_kernel_sizes:
+        - 16
+        - 16
+        - 4
+        - 4
+        decoder_upsample_scales:
+        - 8
+        - 8
+        - 2
+        - 2
+        flow_base_dilation: 1
+        flow_dropout_rate: 0.0
+        flow_flows: 4
+        flow_kernel_size: 5
+        flow_layers: 4
+        global_channels: 256
+        hidden_channels: 192
+        posterior_encoder_base_dilation: 1
+        posterior_encoder_dropout_rate: 0.0
+        posterior_encoder_kernel_size: 5
+        posterior_encoder_layers: 16
+        posterior_encoder_stacks: 1
+        segment_size: 32
+        spk_embed_dim: 512
+        spks: -1
+        stochastic_duration_predictor_dds_conv_layers: 3
+        stochastic_duration_predictor_dropout_rate: 0.5
+        stochastic_duration_predictor_flows: 4
+        stochastic_duration_predictor_kernel_size: 3
+        text_encoder_activation_type: swish
+        text_encoder_attention_dropout_rate: 0.1
+        text_encoder_attention_heads: 2
+        text_encoder_blocks: 6
+        text_encoder_conformer_kernel_size: -1
+        text_encoder_dropout_rate: 0.1
+        text_encoder_ffn_expand: 4
+        text_encoder_normalize_before: true
+        text_encoder_positional_dropout_rate: 0.0
+        text_encoder_positional_encoding_layer_type: rel_pos
+        text_encoder_positionwise_conv_kernel_size: 3
+        text_encoder_positionwise_layer_type: conv1d
+        text_encoder_self_attention_layer_type: rel_selfattn
+        use_conformer_conv_in_text_encoder: false
+        use_macaron_style_in_text_encoder: true
+        use_only_mean_in_flow: true
+        use_weight_norm_in_decoder: true
+        use_weight_norm_in_flow: true
+        use_weight_norm_in_posterior_encoder: true
+        vocabs: 86
+    generator_type: vits_generator
+    lambda_adv: 1.0
+    lambda_dur: 1.0
+    lambda_feat_match: 2.0
+    lambda_kl: 1.0
+    lambda_mel: 45.0
+    mel_loss_params:
+        fmax: null
+        fmin: 0
+        fs: 22050
+        hop_length: 256
+        log_base: null
+        n_fft: 1024
+        n_mels: 80
+        win_length: null
+        window: hann
+    sampling_rate: 22050
+unused_parameters: true
+use_amp: false
+use_preprocessor: true
+use_tensorboard: true
+use_wandb: false
+val_scheduler_criterion:
+- valid
+- loss
+valid_batch_bins: null
+valid_batch_size: null
+valid_batch_type: null
+valid_data_path_and_name_and_type:
+-   - dump/22k/raw/dev-clean/text
+    - text
+    - text
+-   - dump/22k/raw/dev-clean/wav.scp
+    - speech
+    - sound
+-   - dump/22k/xvector/dev-clean/xvector.scp
+    - spembs
+    - kaldi_ark
+valid_max_cache_size: null
+valid_shape_file:
+- exp/tts_stats_raw_linear_spectrogram_phn_tacotron_g2p_en_no_space/valid/text_shape.phn
+- exp/tts_stats_raw_linear_spectrogram_phn_tacotron_g2p_en_no_space/valid/speech_shape
+version: 0.10.3a2
+wandb_entity: null
+wandb_id: null
+wandb_model_log_interval: -1
+wandb_name: null
+wandb_project: null
+write_collected_feats: false
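Several values in this config depend on one another, and a quick check can catch a mismatched edit before the model is loaded. The sketch below recomputes three of them from numbers copied out of the diff. It assumes, as is usual for a one-sided linear spectrogram (the diff itself does not say so), that the number of frequency bins is `n_fft // 2 + 1`, and that the decoder's total upsampling factor should equal `hop_length` so that each spectrogram frame expands to one hop of waveform samples. The helper names are illustrative only and are not part of the commit.

```python
from math import prod

# Values copied from TTS_model/config.yaml in this commit.
n_fft = 1024
hop_length = 256
sampling_rate = 22050
aux_channels = 513
decoder_upsample_scales = [8, 8, 2, 2]


def linear_spectrogram_bins(n_fft: int) -> int:
    """Number of one-sided STFT frequency bins (assumed to be n_fft // 2 + 1)."""
    return n_fft // 2 + 1


def frame_shift_ms(hop_length: int, sampling_rate: int) -> float:
    """Duration of one hop in milliseconds."""
    return 1000.0 * hop_length / sampling_rate


bins = linear_spectrogram_bins(n_fft)
upsample_factor = prod(decoder_upsample_scales)
shift = frame_shift_ms(hop_length, sampling_rate)

print(f"spectrogram bins: {bins} (aux_channels = {aux_channels})")
print(f"decoder upsampling: {upsample_factor} (hop_length = {hop_length})")
print(f"frame shift: {shift:.2f} ms")
```

Under these assumptions the three quantities line up with `aux_channels`, `hop_length`, and a frame shift of roughly 11.6 ms at 22050 Hz.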

TTS_model/images/discriminator_backward_time.png

ADDED

TTS_model/images/discriminator_fake_loss.png

ADDED

TTS_model/images/discriminator_forward_time.png

ADDED

TTS_model/images/discriminator_loss.png

ADDED

TTS_model/images/discriminator_optim_step_time.png

ADDED

TTS_model/images/discriminator_real_loss.png

ADDED

TTS_model/images/discriminator_train_time.png

ADDED

TTS_model/images/generator_adv_loss.png

ADDED

TTS_model/images/generator_backward_time.png

ADDED

TTS_model/images/generator_dur_loss.png

ADDED

TTS_model/images/generator_feat_match_loss.png

ADDED

TTS_model/images/generator_forward_time.png

ADDED

TTS_model/images/generator_kl_loss.png

ADDED

TTS_model/images/generator_loss.png

ADDED

TTS_model/images/generator_mel_loss.png

ADDED

TTS_model/images/generator_optim_step_time.png

ADDED

TTS_model/images/generator_train_time.png

ADDED

TTS_model/images/gpu_max_cached_mem_GB.png

ADDED

TTS_model/images/iter_time.png

ADDED

TTS_model/images/optim0_lr0.png

ADDED

TTS_model/images/optim1_lr0.png

ADDED

TTS_model/images/train_time.png

ADDED

TTS_model/train.total_count.ave_10best.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:05eb282aa88c7dfad30305cbb614589ebd9f0aec078fa6bf13befd5a5660ffff
+size 386477966
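The three lines added for `train.total_count.ave_10best.pth` are a Git LFS pointer, not the weights themselves: the repository stores the spec version, a SHA-256 object ID, and the byte size, while the real file lives in LFS storage. A minimal sketch of reading such a pointer follows. `parse_lfs_pointer` is a hypothetical helper written for this illustration, and the pointer text is copied from the diff.

```python
from typing import Dict

# Pointer contents copied from the diff above.
POINTER_TEXT = """\
version https://git-lfs.github.com/spec/v1
oid sha256:05eb282aa88c7dfad30305cbb614589ebd9f0aec078fa6bf13befd5a5660ffff
size 386477966
"""


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Split each 'key value' line of a Git LFS pointer into a dict entry."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


pointer = parse_lfs_pointer(POINTER_TEXT)
hash_algo, _, digest = pointer["oid"].partition(":")
size_mib = int(pointer["size"]) / (1024 ** 2)

print(hash_algo, digest[:12], f"{size_mib:.1f} MiB")
```

If the file on disk at `TTS_model/train.total_count.ave_10best.pth` is only a few hundred bytes and starts with `version https://git-lfs...`, the checkout probably still holds the pointer and the LFS object has not been fetched.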

app.py

CHANGED

@@ -44,27 +44,21 @@ processor = AutoProcessor.from_pretrained("KevinGeng/whipser_medium_en_PAL300_st
 
 model = AutoModelForSpeechSeq2Seq.from_pretrained("KevinGeng/whipser_medium_en_PAL300_step25")
 
-# feature_extractor = AutoFeatureExtractor.from_pretrained("KevinGeng/PAL_John_128_train_dev_test_seed_1")
-# representation_model = AutoModelForCTC.from_pretrained("KevinGeng/PAL_John_128_train_dev_test_seed_1")
-# tokenizer = AutoTokenizer.from_pretrained("KevinGeng/PAL_John_128_train_dev_test_seed_1")
-
 transcriber = pipeline("automatic-speech-recognition", model="KevinGeng/whipser_medium_en_PAL300_step25")
-# transcriber = pipeline("automatic-speech-recognition", model="KevinGeng/PAL_John_128_p326_300_train_dev_test_seed_1")
-# 【Female】kan-bayashi ljspeech parallel wavegan
-# tts_model = Text2Speech.from_pretrained("espnet/kan-bayashi_ljspeech_vits")
-# 【Male】fastspeech2-en-200_speaker-cv4, hifigan vocoder
-# pdb.set_trace()
 
 # @title English multi-speaker pretrained model { run: "auto" }
 lang = "English"
 tag = "kan-bayashi/libritts_xvector_vits"
-# vits needs no
+# vits needs no vocoder
 vocoder_tag = "parallel_wavegan/vctk_parallel_wavegan.v1.long" # @param ["none", "parallel_wavegan/vctk_parallel_wavegan.v1.long", "parallel_wavegan/vctk_multi_band_melgan.v2", "parallel_wavegan/vctk_style_melgan.v1", "parallel_wavegan/vctk_hifigan.v1", "parallel_wavegan/libritts_parallel_wavegan.v1.long", "parallel_wavegan/libritts_multi_band_melgan.v2", "parallel_wavegan/libritts_hifigan.v1", "parallel_wavegan/libritts_style_melgan.v1"] {type:"string"}
+
 from espnet2.bin.tts_inference import Text2Speech
 from espnet2.utils.types import str_or_none
 
+# local import
 text2speech = Text2Speech.from_pretrained(
-
+    train_config = "TTS_model/config.yaml",
+    model_file="TTS_model/train.total_count.ave_10best.pth",
     vocoder_tag=str_or_none(vocoder_tag),
     device="cuda",
     use_att_constraint=False,
@@ -108,7 +102,6 @@ spks = dict(male_spks, **female_spks)
 spk_names = sorted(spks.keys())
 
 
-## 20230224 Mousa: No reference,
 def ASRTTS(audio_file, spk_name, ref_text=""):
     spk = spks[spk_name]
     spembs = xvectors[spk]
@@ -189,7 +182,6 @@ examples = [
     ["./samples/004.wav", "F2", ""],
 ]
 
-
 def change_audiobox(choice):
     if choice == "upload":
         input_audio = gr.Audio.update(source="upload", visible=True)
@@ -266,7 +258,5 @@ with gr.Blocks(
 outputs=output_audio,
 api_name="convert"
 )
-
-# download_file("wav/001_F1_spkembs.wav")
 
 demo.launch(share=False)
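The change above points `Text2Speech.from_pretrained` at the two files committed under `TTS_model/` instead of a model tag. A minimal sketch of the same idea with an explicit file check is shown below. It is not the code in `app.py`: `local_tts_kwargs` and `load_local_tts` are hypothetical helpers, and the default of `vocoder_tag=None` is an assumption based on the "vits needs no vocoder" comment, whereas the commit still passes a Parallel WaveGAN tag. The `espnet2` import is deferred so that the path check can run without ESPnet installed.

```python
from pathlib import Path
from typing import Dict, Optional

# Paths as they appear in the diff, relative to the Space root.
TRAIN_CONFIG = "TTS_model/config.yaml"
MODEL_FILE = "TTS_model/train.total_count.ave_10best.pth"


def local_tts_kwargs(root: str = ".", device: str = "cuda") -> Dict[str, object]:
    """Build keyword arguments for Text2Speech from local files (hypothetical helper)."""
    root_path = Path(root)
    train_config = root_path / TRAIN_CONFIG
    model_file = root_path / MODEL_FILE
    # Fail early with a clear message if either file is absent.
    missing = [str(p) for p in (train_config, model_file) if not p.is_file()]
    if missing:
        raise FileNotFoundError(f"missing local TTS files: {missing}")
    return {
        "train_config": str(train_config),
        "model_file": str(model_file),
        "device": device,
        "use_att_constraint": False,
    }


def load_local_tts(root: str = ".", vocoder_tag: Optional[str] = None):
    """Load the local model; espnet2 is imported lazily, only when this is called."""
    from espnet2.bin.tts_inference import Text2Speech

    return Text2Speech.from_pretrained(vocoder_tag=vocoder_tag, **local_tts_kwargs(root))
```

Calling `load_local_tts()` from the Space root would then either return the model or raise `FileNotFoundError` naming the missing file, which is easier to diagnose than a failure deep inside model loading.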