Spaces:

Runtime error

Runtime error

Upload 127 files

Browse filesUpload everything

This view is limited to 50 files because it contains too many changes.

See raw diff

- SCRL_new/Makefile +93 -0

- SCRL_new/README.md +137 -0

- SCRL_new/bin/evaluate.py +144 -0

- SCRL_new/bin/evaluate_hc_output.py +132 -0

- SCRL_new/bin/predict.py +53 -0

- SCRL_new/bin/run_hc.py +80 -0

- SCRL_new/bin/train.py +157 -0

- SCRL_new/config/example.json +30 -0

- SCRL_new/config/gigaword-L8.json +37 -0

- SCRL_new/config/hc.json +16 -0

- SCRL_new/config/newsroom-CR75.json +37 -0

- SCRL_new/config/newsroom-L11.json +37 -0

- SCRL_new/data/test-data/bnc.jsonl +0 -0

- SCRL_new/data/test-data/broadcast.jsonl +0 -0

- SCRL_new/data/test-data/duc2004.jsonl +0 -0

- SCRL_new/data/test-data/gigaword.jsonl +0 -0

- SCRL_new/data/test-data/google.jsonl +0 -0

- SCRL_new/data/test-data/newsroom.jsonl +280 -0

- SCRL_new/example.py +23 -0

- SCRL_new/images/model.png +0 -0

- SCRL_new/loaders/gigaword.py +52 -0

- SCRL_new/loaders/newsroom.py +51 -0

- SCRL_new/requirements.txt +5 -0

- SCRL_new/scrl/__init__.py +0 -0

- SCRL_new/scrl/config.py +65 -0

- SCRL_new/scrl/config_hc.py +50 -0

- SCRL_new/scrl/data.py +24 -0

- SCRL_new/scrl/eval_metrics.py +24 -0

- SCRL_new/scrl/hill_climbing.py +166 -0

- SCRL_new/scrl/model.py +75 -0

- SCRL_new/scrl/rewards.py +330 -0

- SCRL_new/scrl/sampling.py +99 -0

- SCRL_new/scrl/training.py +346 -0

- SCRL_new/scrl/utils.py +86 -0

- SCRL_new/setup.py +8 -0

- abs_compressor.py +44 -0

- kis.py +47 -0

- models/gigaword-L8/checkpoints/best_val_reward-7700/classifier.bin +3 -0

- models/gigaword-L8/checkpoints/best_val_reward-7700/encoder.bin/config.json +3 -0

- models/gigaword-L8/checkpoints/best_val_reward-7700/encoder.bin/pytorch_model.bin +3 -0

- models/gigaword-L8/config.json +37 -0

- models/gigaword-L8/series/argmax_len.npy +3 -0

- models/gigaword-L8/series/argmax_reward.npy +3 -0

- models/gigaword-L8/series/label_variance.npy +3 -0

- models/gigaword-L8/series/loss.npy +3 -0

- models/gigaword-L8/series/mean_max_prob.npy +3 -0

- models/gigaword-L8/series/reward_Fluency.npy +3 -0

- models/gigaword-L8/series/reward_GaussianLength.npy +3 -0

- models/gigaword-L8/series/reward_SentenceMeanSimilarity.npy +3 -0

- models/gigaword-L8/series/sample_prob.npy +3 -0

SCRL_new/Makefile

ADDED

|

@@ -0,0 +1,93 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

CONFIG ?= config/example.json

|

| 2 |

+

DEVICE ?= cpu

|

| 3 |

+

MODELDIR ?= models/newsroom-P75/model-dirs/best_val_reward-7950

|

| 4 |

+

TESTSET ?= data/test-data/broadcast.jsonl

|

| 5 |

+

HC_OUTPUT ?= data/hc-outputs/hc.L11.google.jsonl

|

| 6 |

+

|

| 7 |

+

# TRAINING

|

| 8 |

+

|

| 9 |

+

.PHONY: train

|

| 10 |

+

train:

|

| 11 |

+

python bin/train.py --verbose --config $(CONFIG) --device $(DEVICE)

|

| 12 |

+

|

| 13 |

+

# EVALUATING SCRL MODELS (predict + evaluate)

|

| 14 |

+

|

| 15 |

+

.PHONY: eval-google

|

| 16 |

+

eval-google:

|

| 17 |

+

python bin/evaluate.py \

|

| 18 |

+

--model-dir $(MODELDIR) \

|

| 19 |

+

--device $(DEVICE) \

|

| 20 |

+

--dataset data/test-data/google.jsonl

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

.PHONY: eval-duc2004

|

| 24 |

+

eval-duc2004:

|

| 25 |

+

python bin/evaluate.py \

|

| 26 |

+

--model-dir $(MODELDIR) \

|

| 27 |

+

--device $(DEVICE) \

|

| 28 |

+

--dataset data/test-data/duc2004.jsonl \

|

| 29 |

+

--max-chars 75

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

.PHONY: eval-gigaword

|

| 33 |

+

eval-gigaword:

|

| 34 |

+

python bin/evaluate.py \

|

| 35 |

+

--model-dir $(MODELDIR) \

|

| 36 |

+

--device $(DEVICE) \

|

| 37 |

+

--dataset data/test-data/gigaword.jsonl \

|

| 38 |

+

--pretokenized

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

.PHONY: eval-broadcast

|

| 42 |

+

eval-broadcast:

|

| 43 |

+

python bin/evaluate.py \

|

| 44 |

+

--model-dir $(MODELDIR) \

|

| 45 |

+

--device $(DEVICE) \

|

| 46 |

+

--dataset data/test-data/broadcast.jsonl \

|

| 47 |

+

--pretokenized

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

.PHONY: eval-bnc

|

| 51 |

+

eval-bnc:

|

| 52 |

+

python bin/evaluate.py \

|

| 53 |

+

--model-dir $(MODELDIR) \

|

| 54 |

+

--device $(DEVICE) \

|

| 55 |

+

--dataset data/test-data/bnc.jsonl \

|

| 56 |

+

--pretokenized

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

# EVALUATE HILL CLIMBING SEARCH

|

| 60 |

+

|

| 61 |

+

.PHONY: hc-eval-google

|

| 62 |

+

hc-eval-google:

|

| 63 |

+

python bin/evaluate_hc_output.py \

|

| 64 |

+

--dataset data/test-data/google.jsonl \

|

| 65 |

+

--outputs $(HC_OUTPUT)

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

.PHONY: hc-eval-duc2004

|

| 69 |

+

hc-eval-duc2004:

|

| 70 |

+

python bin/evaluate_hc_output.py \

|

| 71 |

+

--dataset data/test-data/duc2004.jsonl \

|

| 72 |

+

--outputs $(HC_OUTPUT)

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

.PHONY: hc-eval-gigaword

|

| 76 |

+

hc-eval-gigaword:

|

| 77 |

+

python bin/evaluate_hc_output.py \

|

| 78 |

+

--dataset data/test-data/gigaword.jsonl \

|

| 79 |

+

--outputs $(HC_OUTPUT)

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

.PHONY: hc-eval-broadcast

|

| 83 |

+

hc-eval-broadcast:

|

| 84 |

+

python bin/evaluate_hc_output.py \

|

| 85 |

+

--dataset data/test-data/broadcast.jsonl \

|

| 86 |

+

--outputs $(HC_OUTPUT)

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

.PHONY: hc-eval-bnc

|

| 90 |

+

hc-eval-bnc:

|

| 91 |

+

python bin/evaluate_hc_output.py \

|

| 92 |

+

--dataset data/test-data/bnc.jsonl \

|

| 93 |

+

--outputs $(HC_OUTPUT)

|

SCRL_new/README.md

ADDED

|

@@ -0,0 +1,137 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

|

| 3 |

+

# Sentence Compression with Reinforcement Learning

|

| 4 |

+

|

| 5 |

+

Code for the ACL 2022 paper [Efficient Unsupervised Sentence Compression by Fine-tuning Transformers with Reinforcement Learning](https://arxiv.org/abs/2205.08221).

|

| 6 |

+

|

| 7 |

+

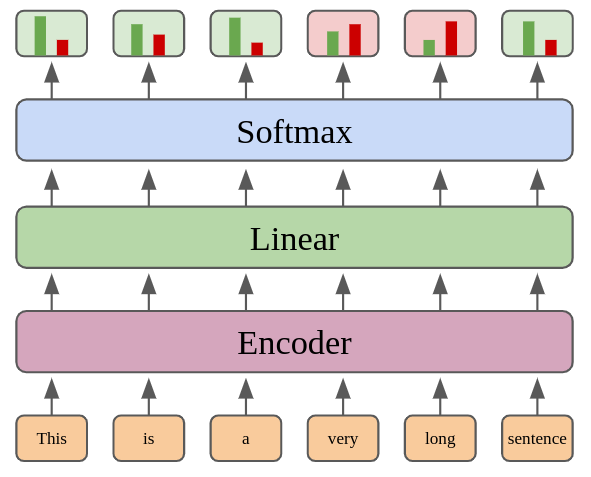

Model architecture used in this work:

|

| 8 |

+

|

| 9 |

+

<img src="images/model.png" alt="drawing" width="350"/>

|

| 10 |

+

|

| 11 |

+

### Install `scrl` library

|

| 12 |

+

The library is used for training, producing summaries with existing models and for evaluation and works with Python 3.7/3.8.

|

| 13 |

+

|

| 14 |

+

1. Create environment <br>

|

| 15 |

+

`conda create -n my_env python=3.8` with conda, or with venv: `python3.8 -m venv <env path>` <br>

|

| 16 |

+

|

| 17 |

+

2. Activate the environment <br>

|

| 18 |

+

`conda activate my_env` with conda, otherwise: `source <env path>/bin/activate`

|

| 19 |

+

|

| 20 |

+

3. Install dependencies & library in development mode: <br>

|

| 21 |

+

`pip install -r requirements.txt` <br>

|

| 22 |

+

`pip install -e .`

|

| 23 |

+

|

| 24 |

+

### Data

|

| 25 |

+

The full contents of the `data` folder can be found in [this google drive folder](https://drive.google.com/drive/folders/1grkgZhtdd-Bw45GAnHza9RRb5OVQG4pK?usp=sharing).

|

| 26 |

+

In particular, `models` are required to use and evaluate our trained models, `train-data` to train new models, and `hc-outputs` to analyse/evaluate outputs of the hill climbing baseline.

|

| 27 |

+

|

| 28 |

+

### Using a model

|

| 29 |

+

|

| 30 |

+

We trained 3 models which were used in our evaluation:

|

| 31 |

+

* `gigaword-L8` - trained to predict summaries of 8 tokens; trained on Gigaword to match preprocessing of test set

|

| 32 |

+

* `newsroom-L11` - trained to predict summaries of 11 tokens

|

| 33 |

+

* `newsroom-P75` - trained to reduce sentences to 75% of their original length

|

| 34 |

+

|

| 35 |

+

To use a trained model in Python, we need its model directory and the correct pretrained model ID for the tokenizer corresponding to the original pretrained model that the sentence compression model was initialised with:

|

| 36 |

+

```python

|

| 37 |

+

from scrl.model import load_model

|

| 38 |

+

from transformers import AutoTokenizer

|

| 39 |

+

|

| 40 |

+

# model_dir = "data/models/gigaword-L8/"

|

| 41 |

+

# model_dir = "data/models/newsroom-L11/"

|

| 42 |

+

model_dir = "data/models/newsroom-P75/"

|

| 43 |

+

device = "cpu"

|

| 44 |

+

model = load_model(model_dir, device)

|

| 45 |

+

tokenizer = AutoTokenizer.from_pretrained("distilroberta-base")

|

| 46 |

+

sources = [

|

| 47 |

+

"""

|

| 48 |

+

Most remaining Covid restrictions in Victoria have now been removed for those who are fully vaccinated, with the state about to hit its 90% vaccinated target.

|

| 49 |

+

""".strip()

|

| 50 |

+

]

|

| 51 |

+

summaries = model.predict(sources, tokenizer, device)

|

| 52 |

+

for s in summaries:

|

| 53 |

+

print(s)

|

| 54 |

+

```

|

| 55 |

+

|

| 56 |

+

You can run this code with [example.py](example.py)

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

### Training a new model

|

| 60 |

+

|

| 61 |

+

A new model needs a new config file (examples in [config](config)) for various settings, e.g. training dataset, reward functions, model directory, steps.

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

`python bin/train.py --verbose --config config/example.json --device cuda`

|

| 65 |

+

|

| 66 |

+

You can also change the device to `cpu` to try it out locally.

|

| 67 |

+

|

| 68 |

+

Training can be interrupted with `Ctrl+C` and continued by re-running the same command which will pick up from the latest saved checkpoint. Add `--fresh` to delete the previous training progress and start from scratch.

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

### Evaluation

|

| 72 |

+

|

| 73 |

+

The evaluation results can be replicated with the following Make commands, which run with slightly different settings depending on the dataset:

|

| 74 |

+

|

| 75 |

+

```bash

|

| 76 |

+

make eval-google MODELDIR=data/models/newsroom-L11

|

| 77 |

+

make eval-duc2004 MODELDIR=data/models/newsroom-L11

|

| 78 |

+

make eval-gigaword MODELDIR=data/models/gigaword-L8

|

| 79 |

+

make eval-broadcast MODELDIR=data/models/newsroom-P75

|

| 80 |

+

make eval-bnc MODELDIR=data/models/newsroom-P75

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

To evaluate on a custom dataset, check out [bin/evaluate.py](bin/evaluate.py) and its arguments.

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

### Hill Climbing Baseline

|

| 87 |

+

|

| 88 |

+

We implemented a search-based baseline for sentence compression using hill climbing, based on [Discrete Optimization for Unsupervised Sentence Summarization with Word-Level Extraction](https://arxiv.org/abs/2005.01791). A difference to the original method is that we only restart the search if no unknown neighbour state can be found, i.e. dynamically instead of in equal-paced intervals.

|

| 89 |

+

|

| 90 |

+

**Producing summaries**<br>

|

| 91 |

+

The budget of search steps is controlled with `--steps`.

|

| 92 |

+

```bash

|

| 93 |

+

python bin/run_hc.py \

|

| 94 |

+

--config config/hc.json \

|

| 95 |

+

--steps 10 \

|

| 96 |

+

--target-len 11 \

|

| 97 |

+

--dataset data/test-data/google.jsonl \

|

| 98 |

+

--output data/hc-outputs/example.jsonl \

|

| 99 |

+

--device cpu

|

| 100 |

+

```

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

**Evaluation** <br>

|

| 104 |

+

|

| 105 |

+

For datasets used in the paper:

|

| 106 |

+

```bash

|

| 107 |

+

make hc-eval-google HC_OUTPUT=data/hc-outputs/hc.L11.google.jsonl

|

| 108 |

+

make hc-eval-duc2004 HC_OUTPUT=data/hc-outputs/hc.L11.duc2004.jsonl

|

| 109 |

+

make hc-eval-gigaword HC_OUTPUT=data/hc-outputs/hc.L8.gigaword.jsonl

|

| 110 |

+

make hc-eval-broadcast HC_OUTPUT=data/hc-outputs/hc.P75.broadcast.jsonl

|

| 111 |

+

make hc-eval-bnc HC_OUTPUT=data/hc-outputs/hc.P75.bnc.jsonl

|

| 112 |

+

```

|

| 113 |

+

|

| 114 |

+

Example for custom dataset:

|

| 115 |

+

```

|

| 116 |

+

python bin/evaluate_hc_output.py \

|

| 117 |

+

--dataset data/test-data/google.jsonl \

|

| 118 |

+

--outputs data/hc-outputs/hc.L11.google.jsonl

|

| 119 |

+

```

|

| 120 |

+

|

| 121 |

+

### Citation

|

| 122 |

+

|

| 123 |

+

⚠️ Please refer to the version of the paper on Arxiv, there is a typo in the original ACL version (Table 3, ROUGE-1 column, Gigaword-SCRL-8 row).

|

| 124 |

+

|

| 125 |

+

```

|

| 126 |

+

@inproceedings{ghalandari-etal-2022-efficient,

|

| 127 |

+

title = "Efficient Unsupervised Sentence Compression by Fine-tuning Transformers with Reinforcement Learning",

|

| 128 |

+

author = "Gholipour Ghalandari, Demian and Hokamp, Chris and Ifrim, Georgiana",

|

| 129 |

+

booktitle = "Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

|

| 130 |

+

month = may,

|

| 131 |

+

year = "2022",

|

| 132 |

+

address = "Dublin, Ireland",

|

| 133 |

+

publisher = "Association for Computational Linguistics",

|

| 134 |

+

url = "https://arxiv.org/abs/2205.08221",

|

| 135 |

+

pages = "1267--1280",

|

| 136 |

+

}

|

| 137 |

+

```

|

SCRL_new/bin/evaluate.py

ADDED

|

@@ -0,0 +1,144 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import json

|

| 3 |

+

import numpy as np

|

| 4 |

+

import tqdm

|

| 5 |

+

from pathlib import Path

|

| 6 |

+

from pprint import pprint

|

| 7 |

+

from collections import defaultdict, Counter

|

| 8 |

+

|

| 9 |

+

from transformers import AutoTokenizer

|

| 10 |

+

import sys

|

| 11 |

+

sys.path.append("/home/hdd/lijinyi/CompressionInAvalon/promptcompressor/SCRL_new")

|

| 12 |

+

print(sys.path)

|

| 13 |

+

import scrl.utils as utils

|

| 14 |

+

from scrl.model import load_checkpoint, load_model

|

| 15 |

+

from scrl.eval_metrics import compute_token_f1, rouge_scorer, ROUGE_TYPES

|

| 16 |

+

from nltk import word_tokenize

|

| 17 |

+

import nltk

|

| 18 |

+

|

| 19 |

+

nltk.download('punkt')

|

| 20 |

+

print("punkt done!")

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

def main(args):

|

| 24 |

+

|

| 25 |

+

if args.model_dir is not None and args.checkpoint is None:

|

| 26 |

+

model = load_model(

|

| 27 |

+

Path(args.model_dir), device=args.device, prefix="best"

|

| 28 |

+

)

|

| 29 |

+

elif args.model_dir is None and args.checkpoint is not None:

|

| 30 |

+

model = load_checkpoint(Path(args.checkpoint), device=args.device)

|

| 31 |

+

else:

|

| 32 |

+

raise Exception("Provide either a model directory or checkpoint.")

|

| 33 |

+

|

| 34 |

+

model = load_model(Path(args.model_dir), device=args.device)

|

| 35 |

+

tokenizer = AutoTokenizer.from_pretrained("distilroberta-base")

|

| 36 |

+

|

| 37 |

+

dataset = list(utils.read_jsonl(args.dataset))

|

| 38 |

+

|

| 39 |

+

all_scores = defaultdict(list)

|

| 40 |

+

|

| 41 |

+

for item in tqdm.tqdm(dataset):

|

| 42 |

+

src = item["text"]

|

| 43 |

+

if args.lower_src:

|

| 44 |

+

src = src.lower()

|

| 45 |

+

tgts = item["summaries"]

|

| 46 |

+

pred = model.predict([src], tokenizer, args.device)[0]

|

| 47 |

+

|

| 48 |

+

if args.max_chars > 0:

|

| 49 |

+

pred = pred[:args.max_chars]

|

| 50 |

+

|

| 51 |

+

src_tokens = word_tokenize(src)

|

| 52 |

+

pred_tokens = word_tokenize(pred)

|

| 53 |

+

|

| 54 |

+

if args.lower_summary:

|

| 55 |

+

pred_tokens = [t.lower() for t in pred_tokens]

|

| 56 |

+

|

| 57 |

+

if args.pretokenized:

|

| 58 |

+

src_tokens = src.split()

|

| 59 |

+

else:

|

| 60 |

+

src_tokens = word_tokenize(src)

|

| 61 |

+

|

| 62 |

+

item_scores = defaultdict(list)

|

| 63 |

+

for tgt in tgts:

|

| 64 |

+

if args.pretokenized:

|

| 65 |

+

tgt_tokens = tgt.split()

|

| 66 |

+

else:

|

| 67 |

+

tgt_tokens = word_tokenize(tgt)

|

| 68 |

+

if args.lower_summary:

|

| 69 |

+

tgt_tokens = [t.lower() for t in tgt_tokens]

|

| 70 |

+

|

| 71 |

+

token_fscore = compute_token_f1(tgt_tokens, pred_tokens, use_counts=True)

|

| 72 |

+

|

| 73 |

+

rouge_scores = rouge_scorer.score(tgt, pred)

|

| 74 |

+

for rouge_type, rouge_type_scores in rouge_scores.items():

|

| 75 |

+

item_scores[f"{rouge_type}-p"].append(rouge_type_scores.precision)

|

| 76 |

+

item_scores[f"{rouge_type}-r"].append(rouge_type_scores.recall)

|

| 77 |

+

item_scores[f"{rouge_type}-f"].append(rouge_type_scores.fmeasure)

|

| 78 |

+

|

| 79 |

+

item_scores["token-f1"].append(token_fscore)

|

| 80 |

+

item_scores["tgt-len"].append(len(tgt_tokens))

|

| 81 |

+

item_scores["tgt-cr"].append(len(tgt_tokens) / len(src_tokens))

|

| 82 |

+

|

| 83 |

+

for k, values in item_scores.items():

|

| 84 |

+

item_mean = np.mean(values)

|

| 85 |

+

all_scores[k].append(item_mean)

|

| 86 |

+

|

| 87 |

+

all_scores["pred-len"].append(len(pred_tokens))

|

| 88 |

+

all_scores["src-len"].append(len(src_tokens))

|

| 89 |

+

all_scores["pred-cr"].append(len(pred_tokens) / len(src_tokens))

|

| 90 |

+

|

| 91 |

+

if args.verbose:

|

| 92 |

+

print("SRC:", src)

|

| 93 |

+

print("TGT:", tgts[0])

|

| 94 |

+

print("PRED:", pred)

|

| 95 |

+

print("=" * 100)

|

| 96 |

+

|

| 97 |

+

print("="*100)

|

| 98 |

+

print("RESULTS:")

|

| 99 |

+

|

| 100 |

+

print("="*20, "Length (#tokens):", "="*20)

|

| 101 |

+

for metric in ("src-len", "tgt-len", "pred-len"):

|

| 102 |

+

mean = np.mean(all_scores[metric])

|

| 103 |

+

print(f"{metric}: {mean:.2f}")

|

| 104 |

+

print()

|

| 105 |

+

|

| 106 |

+

print("="*20, "Compression ratio:", "="*20)

|

| 107 |

+

for metric in ("tgt-cr", "pred-cr"):

|

| 108 |

+

mean = np.mean(all_scores[metric])

|

| 109 |

+

print(f"{metric}: {mean:.2f}")

|

| 110 |

+

print()

|

| 111 |

+

|

| 112 |

+

print("="*20, "Token F1-Score:", "="*20)

|

| 113 |

+

mean = np.mean(all_scores["token-f1"])

|

| 114 |

+

print(f"f1-score: {mean:.3f}")

|

| 115 |

+

print()

|

| 116 |

+

|

| 117 |

+

print("="*20, "ROUGE F1-Scores:", "="*20)

|

| 118 |

+

for rouge_type in ROUGE_TYPES:

|

| 119 |

+

mean = np.mean(all_scores[f"{rouge_type}-f"])

|

| 120 |

+

print(f"{rouge_type}: {mean:.4f}")

|

| 121 |

+

print()

|

| 122 |

+

|

| 123 |

+

print("="*20, "ROUGE Recall:", "="*20)

|

| 124 |

+

for rouge_type in ROUGE_TYPES:

|

| 125 |

+

mean = np.mean(all_scores[f"{rouge_type}-r"])

|

| 126 |

+

print(f"{rouge_type}: {mean:.4f}")

|

| 127 |

+

print()

|

| 128 |

+

|

| 129 |

+

def parse_args():

|

| 130 |

+

parser = argparse.ArgumentParser()

|

| 131 |

+

parser.add_argument('--dataset', required=True)

|

| 132 |

+

parser.add_argument('--model-dir', required=False)

|

| 133 |

+

parser.add_argument('--checkpoint', required=False)

|

| 134 |

+

parser.add_argument('--device', default="cpu")

|

| 135 |

+

parser.add_argument('--pretokenized', action="store_true")

|

| 136 |

+

parser.add_argument('--max-chars', type=int, default=-1)

|

| 137 |

+

parser.add_argument('--verbose', action="store_true")

|

| 138 |

+

parser.add_argument('--lower-src', action="store_true")

|

| 139 |

+

parser.add_argument('--lower-summary', action="store_true")

|

| 140 |

+

return parser.parse_args()

|

| 141 |

+

|

| 142 |

+

|

| 143 |

+

if __name__ == '__main__':

|

| 144 |

+

main(parse_args())

|

SCRL_new/bin/evaluate_hc_output.py

ADDED

|

@@ -0,0 +1,132 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import json

|

| 3 |

+

import numpy as np

|

| 4 |

+

import tqdm

|

| 5 |

+

from pathlib import Path

|

| 6 |

+

from pprint import pprint

|

| 7 |

+

from collections import defaultdict, Counter

|

| 8 |

+

|

| 9 |

+

from transformers import AutoTokenizer

|

| 10 |

+

import scrl.utils as utils

|

| 11 |

+

from scrl.model import load_checkpoint

|

| 12 |

+

from scrl.eval_metrics import compute_token_f1, rouge_scorer, ROUGE_TYPES

|

| 13 |

+

from nltk import word_tokenize

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

def get_hc_summary(output):

|

| 17 |

+

i = np.argmax(output["scores"])

|

| 18 |

+

summary = output["summaries"][i]

|

| 19 |

+

mask = output["masks"][i]

|

| 20 |

+

return summary

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

def main(args):

|

| 24 |

+

|

| 25 |

+

outputs = list(utils.read_jsonl(args.outputs))

|

| 26 |

+

dataset = list(utils.read_jsonl(args.dataset))

|

| 27 |

+

|

| 28 |

+

all_scores = defaultdict(list)

|

| 29 |

+

|

| 30 |

+

for i, item in tqdm.tqdm(enumerate(dataset)):

|

| 31 |

+

|

| 32 |

+

src = item["text"]

|

| 33 |

+

if args.lower_src:

|

| 34 |

+

src = src.lower()

|

| 35 |

+

tgts = item["summaries"]

|

| 36 |

+

pred = get_hc_summary(outputs[i])

|

| 37 |

+

|

| 38 |

+

if args.max_chars > 0:

|

| 39 |

+

pred = pred[:args.max_chars]

|

| 40 |

+

|

| 41 |

+

src_tokens = word_tokenize(src)

|

| 42 |

+

pred_tokens = word_tokenize(pred)

|

| 43 |

+

|

| 44 |

+

if args.lower_summary:

|

| 45 |

+

pred_tokens = [t.lower() for t in pred_tokens]

|

| 46 |

+

|

| 47 |

+

if args.pretokenized:

|

| 48 |

+

src_tokens = src.split()

|

| 49 |

+

else:

|

| 50 |

+

src_tokens = word_tokenize(src)

|

| 51 |

+

|

| 52 |

+

item_scores = defaultdict(list)

|

| 53 |

+

for tgt in tgts:

|

| 54 |

+

if args.pretokenized:

|

| 55 |

+

tgt_tokens = tgt.split()

|

| 56 |

+

else:

|

| 57 |

+

tgt_tokens = word_tokenize(tgt)

|

| 58 |

+

if args.lower_summary:

|

| 59 |

+

tgt_tokens = [t.lower() for t in tgt_tokens]

|

| 60 |

+

|

| 61 |

+

token_fscore = compute_token_f1(tgt_tokens, pred_tokens, use_counts=True)

|

| 62 |

+

|

| 63 |

+

rouge_scores = rouge_scorer.score(tgt, pred)

|

| 64 |

+

for rouge_type, rouge_type_scores in rouge_scores.items():

|

| 65 |

+

item_scores[f"{rouge_type}-p"].append(rouge_type_scores.precision)

|

| 66 |

+

item_scores[f"{rouge_type}-r"].append(rouge_type_scores.recall)

|

| 67 |

+

item_scores[f"{rouge_type}-f"].append(rouge_type_scores.fmeasure)

|

| 68 |

+

|

| 69 |

+

item_scores["token-f1"].append(token_fscore)

|

| 70 |

+

item_scores["tgt-len"].append(len(tgt_tokens))

|

| 71 |

+

item_scores["tgt-cr"].append(len(tgt_tokens) / len(src_tokens))

|

| 72 |

+

|

| 73 |

+

for k, values in item_scores.items():

|

| 74 |

+

item_mean = np.mean(values)

|

| 75 |

+

all_scores[k].append(item_mean)

|

| 76 |

+

|

| 77 |

+

all_scores["pred-len"].append(len(pred_tokens))

|

| 78 |

+

all_scores["src-len"].append(len(src_tokens))

|

| 79 |

+

all_scores["pred-cr"].append(len(pred_tokens) / len(src_tokens))

|

| 80 |

+

|

| 81 |

+

if args.verbose:

|

| 82 |

+

print("SRC:", src)

|

| 83 |

+

print("TGT:", tgts[0])

|

| 84 |

+

print("PRED:", pred)

|

| 85 |

+

print("=" * 100)

|

| 86 |

+

|

| 87 |

+

print("="*100)

|

| 88 |

+

print("RESULTS:")

|

| 89 |

+

|

| 90 |

+

print("="*20, "Length (#tokens):", "="*20)

|

| 91 |

+

for metric in ("src-len", "tgt-len", "pred-len"):

|

| 92 |

+

mean = np.mean(all_scores[metric])

|

| 93 |

+

print(f"{metric}: {mean:.2f}")

|

| 94 |

+

print()

|

| 95 |

+

|

| 96 |

+

print("="*20, "Compression ratio:", "="*20)

|

| 97 |

+

for metric in ("tgt-cr", "pred-cr"):

|

| 98 |

+

mean = np.mean(all_scores[metric])

|

| 99 |

+

print(f"{metric}: {mean:.2f}")

|

| 100 |

+

print()

|

| 101 |

+

|

| 102 |

+

print("="*20, "Token F1-Score:", "="*20)

|

| 103 |

+

mean = np.mean(all_scores["token-f1"])

|

| 104 |

+

print(f"f1-score: {mean:.3f}")

|

| 105 |

+

print()

|

| 106 |

+

|

| 107 |

+

print("="*20, "ROUGE F1-Scores:", "="*20)

|

| 108 |

+

for rouge_type in ROUGE_TYPES:

|

| 109 |

+

mean = np.mean(all_scores[f"{rouge_type}-f"])

|

| 110 |

+

print(f"{rouge_type}: {mean:.4f}")

|

| 111 |

+

print()

|

| 112 |

+

|

| 113 |

+

print("="*20, "ROUGE Recall:", "="*20)

|

| 114 |

+

for rouge_type in ROUGE_TYPES:

|

| 115 |

+

mean = np.mean(all_scores[f"{rouge_type}-r"])

|

| 116 |

+

print(f"{rouge_type}: {mean:.4f}")

|

| 117 |

+

print()

|

| 118 |

+

|

| 119 |

+

def parse_args():

|

| 120 |

+

parser = argparse.ArgumentParser()

|

| 121 |

+

parser.add_argument('--dataset', required=True)

|

| 122 |

+

parser.add_argument('--outputs', required=True)

|

| 123 |

+

parser.add_argument('--pretokenized', action="store_true")

|

| 124 |

+

parser.add_argument('--max-chars', type=int, default=-1)

|

| 125 |

+

parser.add_argument('--verbose', action="store_true")

|

| 126 |

+

parser.add_argument('--lower-src', action="store_true")

|

| 127 |

+

parser.add_argument('--lower-summary', action="store_true")

|

| 128 |

+

return parser.parse_args()

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

if __name__ == '__main__':

|

| 132 |

+

main(parse_args())

|

SCRL_new/bin/predict.py

ADDED

|

@@ -0,0 +1,53 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import json

|

| 3 |

+

import numpy as np

|

| 4 |

+

import tqdm

|

| 5 |

+

from pathlib import Path

|

| 6 |

+

from pprint import pprint

|

| 7 |

+

from collections import defaultdict, Counter

|

| 8 |

+

|

| 9 |

+

from transformers import AutoTokenizer

|

| 10 |

+

import scrl.utils as utils

|

| 11 |

+

from scrl.model import load_checkpoint

|

| 12 |

+

from scrl.metrics import compute_token_f1, rouge_scorer, ROUGE_TYPES

|

| 13 |

+

from nltk import word_tokenize

|

| 14 |

+

|

| 15 |

+

from scrl.rewards import load_rewards

|

| 16 |

+

from scrl.config import load_config

|

| 17 |

+

import time

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

def main(args):

|

| 21 |

+

model = load_checkpoint(Path(args.checkpoint), device=args.device)

|

| 22 |

+

tokenizer = AutoTokenizer.from_pretrained("distilroberta-base")

|

| 23 |

+

dataset = list(utils.read_jsonl(args.dataset))

|

| 24 |

+

batches = utils.batchify(dataset, args.batch_size)

|

| 25 |

+

outputs = []

|

| 26 |

+

t1 = time.time()

|

| 27 |

+

for items in tqdm.tqdm(batches):

|

| 28 |

+

sources = [x["text"] for x in items]

|

| 29 |

+

summaries = model.predict(sources, tokenizer, args.device)

|

| 30 |

+

for item, summary in zip(items, summaries):

|

| 31 |

+

output = {

|

| 32 |

+

"id": item["id"],

|

| 33 |

+

"pred-summary": summary,

|

| 34 |

+

}

|

| 35 |

+

outputs.append(output)

|

| 36 |

+

t2 = time.time()

|

| 37 |

+

print("Seconds:", t2-t1)

|

| 38 |

+

if args.output:

|

| 39 |

+

utils.write_jsonl(outputs, args.output, "w")

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

def parse_args():

|

| 43 |

+

parser = argparse.ArgumentParser()

|

| 44 |

+

parser.add_argument('--dataset', required=True)

|

| 45 |

+

parser.add_argument('--output', required=False)

|

| 46 |

+

parser.add_argument('--checkpoint', required=True)

|

| 47 |

+

parser.add_argument('--device', default="cpu")

|

| 48 |

+

parser.add_argument('--batch-size', type=int, default=4)

|

| 49 |

+

return parser.parse_args()

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

if __name__ == '__main__':

|

| 53 |

+

main(parse_args())

|

SCRL_new/bin/run_hc.py

ADDED

|

@@ -0,0 +1,80 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

from scrl.hill_climbing import DynamicRestartHCSC, PunktTokenizer, WhiteSpaceTokenizer

|

| 3 |

+

from scrl.config_hc import load_config

|

| 4 |

+

from scrl.rewards import load_rewards

|

| 5 |

+

from scrl import utils

|

| 6 |

+

import tqdm

|

| 7 |

+

from pathlib import Path

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

def run_on_dataset(

|

| 11 |

+

searcher,

|

| 12 |

+

dataset,

|

| 13 |

+

target_len,

|

| 14 |

+

target_ratio,

|

| 15 |

+

n_steps,

|

| 16 |

+

outpath,

|

| 17 |

+

):

|

| 18 |

+

|

| 19 |

+

outpath = Path(outpath)

|

| 20 |

+

|

| 21 |

+

start = 0

|

| 22 |

+

if outpath.exists():

|

| 23 |

+

for i, x in enumerate(utils.read_jsonl(outpath)):

|

| 24 |

+

start += 1

|

| 25 |

+

passed = 0

|

| 26 |

+

|

| 27 |

+

batches = utils.batchify(dataset, batch_size=4)

|

| 28 |

+

for batch in tqdm.tqdm(batches):

|

| 29 |

+

passed += len(batch)

|

| 30 |

+

if passed <= start:

|

| 31 |

+

continue

|

| 32 |

+

elif passed == start + len(batch):

|

| 33 |

+

print(f"starting at position {passed - len(batch)}")

|

| 34 |

+

|

| 35 |

+

sources = [x["text"] for x in batch]

|

| 36 |

+

if target_len is not None:

|

| 37 |

+

target_lens = [target_len for _ in batch]

|

| 38 |

+

else:

|

| 39 |

+

input_lens = [len(tokens) for tokens in searcher.tokenizer(sources)]

|

| 40 |

+

target_lens = [round(target_ratio * l) for l in input_lens]

|

| 41 |

+

print(input_lens)

|

| 42 |

+

print(target_lens)

|

| 43 |

+

states = searcher(

|

| 44 |

+

sources,

|

| 45 |

+

target_lens=target_lens,

|

| 46 |

+

n_steps=n_steps,

|

| 47 |

+

)

|

| 48 |

+

preds = [s["best_summary"] for s in states]

|

| 49 |

+

utils.write_jsonl(states, outpath, "a")

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

def main(args):

|

| 53 |

+

config = load_config(args)

|

| 54 |

+

print("DEVICE:", config.device)

|

| 55 |

+

objective = load_rewards(config)

|

| 56 |

+

tokenizer = WhiteSpaceTokenizer() if args.pretokenized else PunktTokenizer()

|

| 57 |

+

searcher = DynamicRestartHCSC(tokenizer, objective)

|

| 58 |

+

dataset = list(utils.read_jsonl(args.dataset))

|

| 59 |

+

assert (args.target_len is None or args.target_ratio is None)

|

| 60 |

+

run_on_dataset(

|

| 61 |

+

searcher,

|

| 62 |

+

dataset,

|

| 63 |

+

args.target_len,

|

| 64 |

+

args.target_ratio,

|

| 65 |

+

args.steps,

|

| 66 |

+

args.output

|

| 67 |

+

)

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

if __name__ == '__main__':

|

| 71 |

+

parser = argparse.ArgumentParser()

|

| 72 |

+

parser.add_argument("--config", help="path to JSON config file", required=True)

|

| 73 |

+

parser.add_argument("--output", required=True)

|

| 74 |

+

parser.add_argument("--dataset", required=True)

|

| 75 |

+

parser.add_argument("--pretokenized", action="store_true")

|

| 76 |

+

parser.add_argument("--device", default="cuda")

|

| 77 |

+

parser.add_argument("--target-len", type=int, default=None)

|

| 78 |

+

parser.add_argument("--target-ratio", type=float, default=None)

|

| 79 |

+

parser.add_argument("--steps", default=1000, type=int)

|

| 80 |

+

main(load_config(parser.parse_args()))

|

SCRL_new/bin/train.py

ADDED

|

@@ -0,0 +1,157 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import numpy as np

|

| 3 |

+

from pathlib import Path

|

| 4 |

+

import tqdm

|

| 5 |

+

from pprint import pprint

|

| 6 |

+

import torch

|

| 7 |

+

from torch.nn.utils.rnn import pad_sequence

|

| 8 |

+

from scrl.config import load_config

|

| 9 |

+

from scrl.training import setup_and_train

|

| 10 |

+

from scrl.model import labels_to_summary

|

| 11 |

+

from scrl.eval_metrics import compute_token_f1

|

| 12 |

+

import scrl.utils as utils

|

| 13 |

+

from nltk import word_tokenize

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

def evaluate_validation_reward(args, manager, model, tokenizer, reward_generator, dataset):

|

| 17 |

+

device = args.device

|

| 18 |

+

idx_range = list(range(len(dataset)))

|

| 19 |

+

dataset_indices = list(utils.batchify(idx_range, args.batch_size))

|

| 20 |

+

rewards = []

|

| 21 |

+

for i, indices in enumerate(dataset_indices):

|

| 22 |

+

if args.max_val_steps != None and i >= args.max_val_steps:

|

| 23 |

+

break

|

| 24 |

+

batch = dataset[indices]

|

| 25 |

+

input_ids = batch["input_ids"]

|

| 26 |

+

input_ids = pad_sequence(

|

| 27 |

+

[torch.tensor(ids) for ids in input_ids], batch_first=True

|

| 28 |

+

)

|

| 29 |

+

logits = model(input_ids.to(device))

|

| 30 |

+

probs = torch.softmax(logits, dim=2)

|

| 31 |

+

argmax_labels = torch.argmax(logits, dim=2).to(device)

|

| 32 |

+

argmax_summaries = labels_to_summary(input_ids, argmax_labels, tokenizer)

|

| 33 |

+

argmax_rewards, _ = reward_generator(batch["document"], argmax_summaries)

|

| 34 |

+

rewards += argmax_rewards

|

| 35 |

+

avg_reward = np.mean(rewards)

|

| 36 |

+

return avg_reward

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

def evaluate_validation_dataset(args, manager, model, tokenizer, reward_generator, dataset_path):

|

| 41 |

+

f1_scores = []

|

| 42 |

+

dataset = list(utils.read_jsonl(dataset_path))

|

| 43 |

+

dump_data = []

|

| 44 |

+

|

| 45 |

+

for item in tqdm.tqdm(dataset):

|

| 46 |

+

src = item["text"]

|

| 47 |

+

tgts = item["summaries"]

|

| 48 |

+

|

| 49 |

+

input_ids = torch.tensor(tokenizer([src])["input_ids"]).to(args.device)

|

| 50 |

+

logits = model.forward(input_ids)

|

| 51 |

+

argmax_labels = torch.argmax(logits, dim=2)

|

| 52 |

+

pred = labels_to_summary(input_ids, argmax_labels, tokenizer)[0]

|

| 53 |

+

|

| 54 |

+

pred_tokens = word_tokenize(pred)

|

| 55 |

+

src_tokens = word_tokenize(src)

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

item_scores = []

|

| 59 |

+

for tgt in tgts:

|

| 60 |

+

tgt_tokens = word_tokenize(tgt)

|

| 61 |

+

pred_tokens = [t.lower() for t in pred_tokens]

|

| 62 |

+

tgt_tokens = [t.lower() for t in tgt_tokens]

|

| 63 |

+

token_f1 = compute_token_f1(

|

| 64 |

+

tgt_tokens, pred_tokens, use_counts=True

|

| 65 |

+

)

|

| 66 |

+

item_scores.append(token_f1)

|

| 67 |

+

|

| 68 |

+

if args.dump:

|

| 69 |

+

probs = torch.softmax(logits, dim=2)[0].detach().tolist()

|

| 70 |

+

dump_item = {

|

| 71 |

+

"probs": probs,

|

| 72 |

+

"source": src,

|

| 73 |

+

"target": tgts[0],

|

| 74 |

+

"f1-score": item_scores[0],

|

| 75 |

+

"pred_summary": pred,

|

| 76 |

+

"pred_labels": argmax_labels[0].tolist(),

|

| 77 |

+

}

|

| 78 |

+

dump_data.append(dump_item)

|

| 79 |

+

|

| 80 |

+

item_score = np.mean(item_scores)

|

| 81 |

+

f1_scores.append(item_score)

|

| 82 |

+

score = np.mean(f1_scores)

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

if args.dump:

|

| 86 |

+

dataset_name = dataset_path.name.split(".jsonl")[0]

|

| 87 |

+

dump_dir = manager.dir / f"dump-{dataset_name}"

|

| 88 |

+

dump_dir.mkdir(exist_ok=True)

|

| 89 |

+

utils.write_jsonl(

|

| 90 |

+

dump_data,

|

| 91 |

+

dump_dir / f"step-{manager.step}.jsonl",

|

| 92 |

+

"w"

|

| 93 |

+

)

|

| 94 |

+

return score

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

def evaluate(args, manager, model, tokenizer, reward_generator, holdout_data):

|

| 98 |

+

step = manager.step

|

| 99 |

+

val_reward = evaluate_validation_reward(args, manager, model, tokenizer, reward_generator, holdout_data)

|

| 100 |

+

|

| 101 |

+

reward_path = manager.dir / "val_rewards.jsonl"

|

| 102 |

+

if reward_path.exists():

|

| 103 |

+

reward_results = list(utils.read_jsonl(reward_path))

|

| 104 |

+

prev_max = max([x["score"] for x in reward_results])

|

| 105 |

+

else:

|

| 106 |

+

reward_results = []

|

| 107 |

+

prev_max = 0

|

| 108 |

+

if val_reward > prev_max:

|

| 109 |

+

manager.save_model(model, step, "best_val_reward")

|

| 110 |

+

reward_results.append({"step": step, "score": val_reward})

|

| 111 |

+

utils.write_jsonl(reward_results, reward_path, "w")

|

| 112 |

+

if args.verbose:

|

| 113 |

+

print("Validation Rewards:")

|

| 114 |

+

pprint(reward_results)

|

| 115 |

+

print()

|

| 116 |

+

|

| 117 |

+

# only used if a validation dataset is specified in config

|

| 118 |

+

for val_data_path in args.validation_datasets:

|

| 119 |

+

val_data_path = Path(val_data_path)

|

| 120 |

+

dataset_name = val_data_path.name.split(".jsonl")[0]

|

| 121 |

+

dataset_score = evaluate_validation_dataset(

|

| 122 |

+

args, manager, model, tokenizer, reward_generator, val_data_path

|

| 123 |

+

)

|

| 124 |

+

result_path = Path(manager.dir / f"val_data_results.{dataset_name}.jsonl")

|

| 125 |

+

if result_path.exists():

|

| 126 |

+

dataset_results = list(utils.read_jsonl(result_path))

|

| 127 |

+

prev_max = max([x["score"] for x in dataset_results])

|

| 128 |

+

else:

|

| 129 |

+

dataset_results = []

|

| 130 |

+

prev_max = 0

|

| 131 |

+

if dataset_score > prev_max:

|

| 132 |

+

manager.save_model(model, step, f"best_on_{dataset_name}")

|

| 133 |

+

dataset_results.append({"step": step, "score": dataset_score})

|

| 134 |

+

utils.write_jsonl(dataset_results, result_path, "w")

|

| 135 |

+

if args.verbose:

|

| 136 |

+

print(f"Validation Dataset Results for {dataset_name}:")

|

| 137 |

+

pprint(dataset_results)

|

| 138 |

+

print()

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

def main(args):

|

| 142 |

+

utils.set_random_seed(0)

|

| 143 |

+

setup_and_train(args, eval_func=evaluate)

|

| 144 |

+

|

| 145 |

+

|

| 146 |

+

if __name__ == '__main__':

|

| 147 |

+

parser = argparse.ArgumentParser()

|

| 148 |

+

parser.add_argument("--config", help="path to JSON config file")

|

| 149 |

+

parser.add_argument("--device", default="cuda")

|

| 150 |

+

parser.add_argument("--dump", action="store_true")

|

| 151 |

+

parser.add_argument("--verbose", action="store_true")

|

| 152 |

+

parser.add_argument(

|

| 153 |

+

"--fresh",

|

| 154 |

+

action="store_true",

|

| 155 |

+

help="delete model directory and start from scratch"

|

| 156 |

+

)

|

| 157 |

+

main(load_config(parser.parse_args()))

|

SCRL_new/config/example.json

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"loader": "loaders/gigaword.py",

|

| 3 |

+

"dataset": "data/train-data/gigaword",

|

| 4 |

+

"indices": "data/train-data/gigaword/indices.npy",

|

| 5 |

+

"model_dir": "data/models/example",

|

| 6 |

+

"verbose": true,

|

| 7 |

+

"print_every": 1,

|

| 8 |

+

"eval_every": 10,

|

| 9 |

+

"save_every": 10,

|

| 10 |

+

"max_val_steps": 8,

|

| 11 |

+

"max_train_seconds": null,

|

| 12 |

+

"max_train_steps": 1000,

|

| 13 |

+

"batch_size": 1,

|

| 14 |

+

"learning_rate": 1e-05,

|

| 15 |

+

"k_samples": 10,

|

| 16 |

+

"sample_aggregation": "max",

|

| 17 |

+

"loss": "pgb",

|

| 18 |

+

"encoder_model_id": "distilroberta-base",

|

| 19 |

+

"rewards": {

|

| 20 |

+

"BiEncoderSimilarity": {

|

| 21 |

+

"weight": 1,

|

| 22 |

+

"model_id": "all-distilroberta-v1"

|

| 23 |

+

},

|

| 24 |

+

"GaussianCR": {

|

| 25 |

+

"weight": 1,

|

| 26 |

+

"mean": 0.5,

|

| 27 |

+

"std": 0.2

|

| 28 |

+

}

|

| 29 |

+

}

|

| 30 |

+

}

|

SCRL_new/config/gigaword-L8.json

ADDED

|

@@ -0,0 +1,37 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|