Commit b66a430
Parent(s): 1e4fbc4

Upload 25 files

- app.py +346 -0

- assets/audio/complete.wav +0 -0

- assets/audio/mixkit-correct-answer-tone-2870.wav +0 -0

- assets/audio/mixkit-message-pop-alert-2354.mp3 +0 -0

- assets/audio/mixkit-positive-notification-951.wav +0 -0

- assets/images/heatmap.png +0 -0

- assets/images/icons/android-chrome-192x192.png +0 -0

- assets/images/icons/android-chrome-512x512.png +0 -0

- assets/images/icons/apple-touch-icon.png +0 -0

- assets/images/icons/favicon-16x16.png +0 -0

- assets/images/icons/favicon-32x32.png +0 -0

- assets/images/icons/favicon.ico +0 -0

- assets/images/icons/favicon.png +0 -0

- assets/images/walmart-logo-459.png +0 -0

- assets/images/walpa-logo.png +0 -0

- assets/images/walpa.png +0 -0

- datasets/Walmart.csv +0 -0

- datasets/test_Walmart.csv +0 -0

- gps.txt +45 -0

- logs.log +0 -0

- models/Walmart.pkl +3 -0

- models/walmart_model.pkl +3 -0

- readme.md +7 -0

- requirements.txt +0 -0

- walmart.ipynb +0 -0

app.py

ADDED


@@ -0,0 +1,346 @@


| 1 |

+

import streamlit as st # type: ignore

|

| 2 |

+

from streamlit_option_menu import option_menu # type: ignore

|

| 3 |

+

import streamlit_shadcn_ui as ui # type: ignore

|

| 4 |

+

from streamlit_echarts import st_echarts

|

| 5 |

+

import numpy as np

|

| 6 |

+

import pandas as pd

|

| 7 |

+

import seaborn as sns # type: ignore

|

| 8 |

+

import matplotlib.pyplot as plt

|

| 9 |

+

import folium #type:ignore

|

| 10 |

+

from streamlit_folium import st_folium #type:ignore

|

| 11 |

+

import plotly.express as px

|

| 12 |

+

import base64

|

| 13 |

+

import pickle

|

| 14 |

+

import time

|

| 15 |

+

from datetime import datetime

|

| 16 |

+

from pycaret.regression import load_model, predict_model

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

st.set_page_config(

|

| 20 |

+

page_title="WALPA - Walmart Prediction App",

|

| 21 |

+

page_icon="🧊",

|

| 22 |

+

layout="wide",

|

| 23 |

+

initial_sidebar_state="expanded",

|

| 24 |

+

)

|

| 25 |

+

@st.cache_data

|

| 26 |

+

def load_data(dataset):

|

| 27 |

+

df = pd.read_csv(dataset)

|

| 28 |

+

return df

|

| 29 |

+

def csvdownload(df):

|

| 30 |

+

csv = df.to_csv(index=False)

|

| 31 |

+

b64 = base64.b64encode(csv.encode()).decode() # strings <-> bytes conversions

|

| 32 |

+

href = f'<a href="data:file/csv;base64,{b64}" download="{df}_prediction.csv">Download CSV File</a>'

|

| 33 |

+

return href

|

| 34 |

+

def autoplay_audio(file_path: str):

|

| 35 |

+

with open(file_path, "rb") as f:

|

| 36 |

+

data = f.read()

|

| 37 |

+

b64 = base64.b64encode(data).decode()

|

| 38 |

+

md = f"""

|

| 39 |

+

<audio controls autoplay="true">

|

| 40 |

+

<source src="data:audio/wav;base64,{b64}" type="audio/wav">

|

| 41 |

+

</audio>

|

| 42 |

+

"""

|

| 43 |

+

st.markdown(

|

| 44 |

+

md,

|

| 45 |

+

unsafe_allow_html=True,

|

| 46 |

+

)

|

| 47 |

+

|

| 48 |

+

data = load_data('./datasets/Walmart.csv')

|

| 49 |

+

sumSales = data['Daily_Sales'].sum()

|

| 50 |

+

sumUnem = data['Unemployment'].sum()

|

| 51 |

+

def sum_Sales():

|

| 52 |

+

if sumSales > 999 and sumSales < 9999:

|

| 53 |

+

sum_display = "$" + str(sumSales)[:1] + "K"

|

| 54 |

+

elif sumSales > 9999 and sumSales < 99999:

|

| 55 |

+

sum_display = "$" + str(sumSales)[:2] + "K"

|

| 56 |

+

elif sumSales > 99999 and sumSales < 999999:

|

| 57 |

+

sum_display = "$" + str(sumSales)[:3] + "K"

|

| 58 |

+

elif sumSales > 999999 and sumSales < 9999999:

|

| 59 |

+

sum_display = "$" + str(sumSales)[:1] + "M"

|

| 60 |

+

elif sumSales > 9999999 and sumSales < 99999999:

|

| 61 |

+

sum_display = "$" + str(sumSales)[:2] + "M"

|

| 62 |

+

elif sumSales > 99999999 and sumSales < 999999999:

|

| 63 |

+

sum_display = "$" + str(sumSales)[:3] + "M"

|

| 64 |

+

elif sumSales > 999999999 and sumSales < 9999999999:

|

| 65 |

+

sum_display = "$" + str(sumSales)[:1] + "MD"

|

| 66 |

+

elif sumSales > 9999999999 and sumSales < 99999999999:

|

| 67 |

+

sum_display = "$" + str(sumSales)[:2] + "MD"

|

| 68 |

+

elif sumSales > 99999999999 and sumSales < 99999999999:

|

| 69 |

+

sum_display = "$" + str(sumSales)[:3] + "MD"

|

| 70 |

+

elif sumSales > 999999999999 and sumSales < 999999999999:

|

| 71 |

+

sum_display = "$" + str(sumSales)[:4] + "MD"

|

| 72 |

+

return sum_display

|

| 73 |

+

|

| 74 |

+

def sumUnemp():

|

| 75 |

+

if sumUnem > 999 and sumUnem < 9999:

|

| 76 |

+

sum_Unem = str(sumUnem)[:1] + "K"

|

| 77 |

+

elif sumUnem > 9999 and sumUnem < 99999:

|

| 78 |

+

sum_Unem = str(sumUnem)[:2] + "K"

|

| 79 |

+

elif sumUnem > 99999 and sumUnem < 999999:

|

| 80 |

+

sum_Unem = str(sumUnem)[:3] + "K"

|

| 81 |

+

elif sumUnem > 999999 and sumUnem < 9999999:

|

| 82 |

+

sum_Unem = str(sumUnem)[:1] + "M"

|

| 83 |

+

elif sumUnem > 9999999 and sumUnem < 99999999:

|

| 84 |

+

sum_Unem = str(sumUnem)[:2] + "M"

|

| 85 |

+

elif sumUnem > 99999999 and sumUnem < 999999999:

|

| 86 |

+

sum_Unem = str(sumUnem)[:3] + "M"

|

| 87 |

+

elif sumUnem > 999999999 and sumUnem < 9999999999:

|

| 88 |

+

sum_Unem = str(sumUnem)[:1] + "MD"

|

| 89 |

+

elif sumUnem > 9999999999 and sumUnem < 99999999999:

|

| 90 |

+

sum_Unem = str(sumUnem)[:2] + "MD"

|

| 91 |

+

elif sumUnem > 99999999999 and sumUnem < 99999999999:

|

| 92 |

+

sum_Unem = str(sumUnem)[:3] + "MD"

|

| 93 |

+

elif sumUnem > 999999999999 and sumUnem < 999999999999:

|

| 94 |

+

sum_Unem = str(sumUnem)[:4] + "MD"

|

| 95 |

+

return sum_Unem

|

| 96 |

+

|

| 97 |

+

def main():

|

| 98 |

+

with st.sidebar:

|

| 99 |

+

|

| 100 |

+

selected = option_menu("Main Menu", ['Home', 'Dashboard', 'Analysis', 'Visualization', 'Machine Learning'],

|

| 101 |

+

icons=['house','speedometer2', 'boxes', 'graph-up-arrow', 'easel2'], menu_icon="list", default_index=0,

|

| 102 |

+

styles={

|

| 103 |

+

"container": {"padding": "5px", "background-color": "transparent", "font-weight": "bold"},

|

| 104 |

+

"icon": {"font-size": "17px"},

|

| 105 |

+

"nav-link": {"font-size": "15px", "text-align": "left", "margin":"5px","padding": "10px", "--hover-color": "#1E90FF"},

|

| 106 |

+

"nav-link-selected": {"background-color": "#1E90FF"},

|

| 107 |

+

}

|

| 108 |

+

)

|

| 109 |

+

# Subdivide the page into three columns

|

| 110 |

+

left,middle,right = st.columns((0.5,4,0.5))

|

| 111 |

+

if selected == 'Home':

|

| 112 |

+

with middle:

|

| 113 |

+

col1, col2, col3 = st.columns(3)

|

| 114 |

+

with col2:

|

| 115 |

+

st.image('./assets/images/walpa-logo.png')

|

| 116 |

+

st.subheader('What is Walpa ?')

|

| 117 |

+

st.write("Walpa is a Streamlit Machine Learning App created to assist data engineers in multiple tasks such as datasets Analysis report, visualization, and predictions for the case of Walmart Inc.")

|

| 118 |

+

st.write("This is not an official Walmart Inc app is just for educational purpose")

|

| 119 |

+

st.subheader("Walpa's Team")

|

| 120 |

+

team = [

|

| 121 |

+

{"role": "Founder", "name": "Jason Ntone"},

|

| 122 |

+

{"role": "Developer", "name": "Jason Ntone"},

|

| 123 |

+

{"role": "Designer", "name": "Jason Ntone"}

|

| 124 |

+

]

|

| 125 |

+

st.write(team)

|

| 126 |

+

st.markdown(" - All rights reserved WALPA\u00A9")

|

| 127 |

+

elif selected == 'Dashboard':

|

| 128 |

+

# First row

|

| 129 |

+

with middle:

|

| 130 |

+

col1, col2, col3 = st.columns(3)

|

| 131 |

+

with col2:

|

| 132 |

+

st.image('./assets/images/walpa-logo.png')

|

| 133 |

+

st.title("Walmart Dashboard")

|

| 134 |

+

col4, col5, col6 = st.columns(3)

|

| 135 |

+

with col4:

|

| 136 |

+

temp = st.metric(label="Total Sales", value=sum_Sales(), delta="From 5010 To 2012")

|

| 137 |

+

with col5:

|

| 138 |

+

temp = st.metric(label="Total Unemployemt", value=sumUnemp(), delta="From 2010 To 2012")

|

| 139 |

+

with col6:

|

| 140 |

+

temp = st.metric(label="Total Stores studied", value=45, delta="From 2010 To 2012")

|

| 141 |

+

|

| 142 |

+

with middle:

|

| 143 |

+

st.subheader("Walmart Stores Map")

|

| 144 |

+

|

| 145 |

+

stores = data['Store'].unique()

|

| 146 |

+

longitude_values = [-111.0327, -88.1668, -121.3477, -77.0891, -87.3695, -95.3271, -79.2854, -84.3594, -81.5951, -82.7852, -118.5694, -82.2711, -80.6665, -78.2971, -103.3284, -84.8482, -93.0727, -117.0266, -97.0088, -82.1349, -76.8572, -104.7973, -123.2838, -91.5127, -117.3879, -97.9895, -80.2403, -82.0174, -94.6041, -117.0774, -88.2285, -81.4383, -83.3702, -93.2422, -100.4930, -81.8765, -85.4835, -117.0731, -79.7245, -86.2356, -75.7216, -90.1516, -77.8990, -86.2169, -96.6857]

|

| 147 |

+

latitude_values = [32.1555, 39.4931, 37.9886, 38.7684, 36.5298, 29.5636, 33.3776, 33.7603, 31.8469, 39.9673, 34.2801, 27.9944, 37.1505, 36.0659, 34.1866, 37.8041, 44.8955, 32.9759, 30.6631, 33.5412, 39.6366, 41.1364, 44.5714, 31.5634, 34.1041, 26.1536, 39.0212, 38.9188, 38.8837, 32.6389, 42.9937, 30.2862, 33.3263, 45.1571, 28.7043, 27.2008, 39.3378, 32.6072, 39.9002, 32.3838, 40.8332, 32.4081, 34.1641, 32.3418, 40.7399]

|

| 148 |

+

|

| 149 |

+

# Create a map

|

| 150 |

+

wmap = folium.Map(location=[37.0902, -95.7129], zoom_start=4)

|

| 151 |

+

|

| 152 |

+

# Add markers for each store

|

| 153 |

+

for store, lon, lat in zip(stores, longitude_values, latitude_values):

|

| 154 |

+

folium.Marker([lat, lon], popup=store,icon=folium.Icon(color='blue', icon='shopping-cart', prefix='fa')).add_to(wmap)

|

| 155 |

+

|

| 156 |

+

# Fit the map to the bounds of the USA

|

| 157 |

+

wmap.fit_bounds([[24.396308, -125.000000], [49.384358, -66.934570]])

|

| 158 |

+

# call to render Folium map in Streamlit

|

| 159 |

+

st_data = st_folium(wmap, width=800)

|

| 160 |

+

elif selected == 'Analysis':

|

| 161 |

+

with middle:

|

| 162 |

+

col1,col2,col3 = st.columns((0.5,3,0.5))

|

| 163 |

+

with col2:

|

| 164 |

+

tab = ui.tabs(options=['Overview', 'Sumary', 'Correlation Matrix'], default_value='Overview', key="none")

|

| 165 |

+

st.title("Data Analysis")

|

| 166 |

+

if tab == 'Overview':

|

| 167 |

+

st.subheader('Walmart Daily Sales Overview')

|

| 168 |

+

st.dataframe(data.head())

|

| 169 |

+

elif tab == 'Sumary':

|

| 170 |

+

st.subheader('Walmart Daily Sales Sumary')

|

| 171 |

+

st.dataframe(data.describe())

|

| 172 |

+

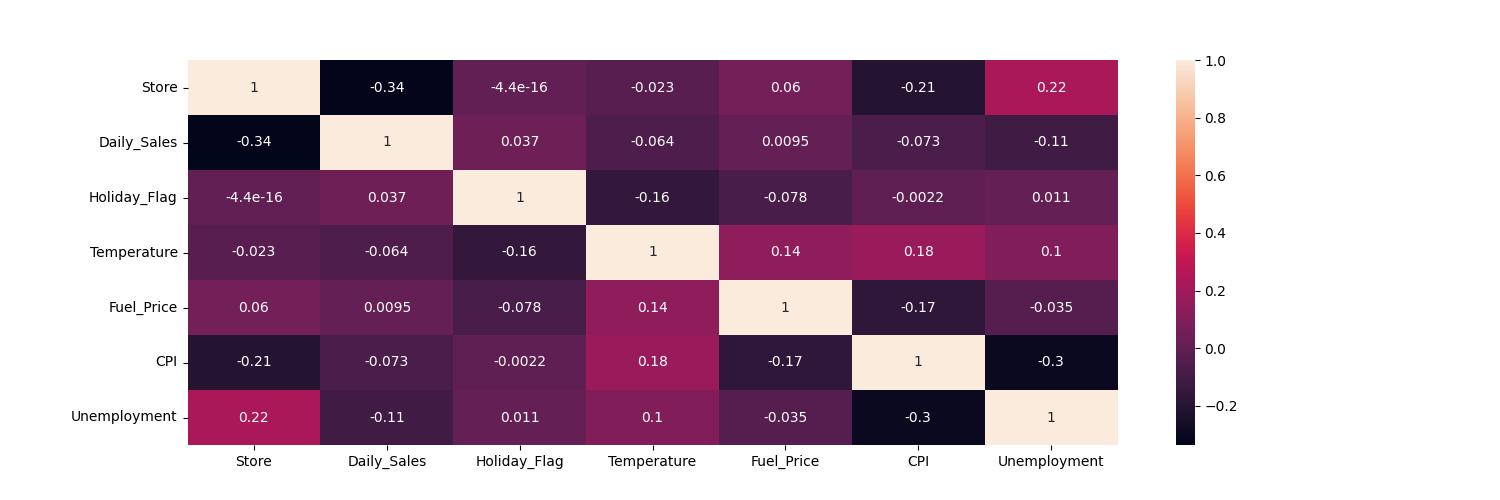

elif tab == 'Correlation Matrix':

|

| 173 |

+

st.subheader('Walmart Correlation Matrix')

|

| 174 |

+

fig = plt.figure(figsize=(15,5))

|

| 175 |

+

st.write(sns.heatmap(data.corr(),annot=True))

|

| 176 |

+

st.pyplot(fig)

|

| 177 |

+

elif selected == 'Visualization':

|

| 178 |

+

with middle:

|

| 179 |

+

tab = ui.tabs(options=['Regplot', 'Barplot', 'Lineplot'], default_value='Barplot', key="none")

|

| 180 |

+

if tab == 'Regplot':

|

| 181 |

+

st.subheader('Walmart Daily Sales Regplot')

|

| 182 |

+

fig = plt.figure(figsize=(15,5))

|

| 183 |

+

st.write(sns.regplot(data=data, x='Store', y='Daily_Sales'))

|

| 184 |

+

st.pyplot(fig)

|

| 185 |

+

elif tab == 'Barplot':

|

| 186 |

+

st.subheader('Walmart Daily Sales Barplot')

|

| 187 |

+

option = {

|

| 188 |

+

"xAxis": {

|

| 189 |

+

"type": "category",

|

| 190 |

+

"data": data['Store'].tolist(),

|

| 191 |

+

},

|

| 192 |

+

"yAxis": {

|

| 193 |

+

"type": "value"

|

| 194 |

+

},

|

| 195 |

+

"series": [{

|

| 196 |

+

"data": data['Daily_Sales'].tolist(), # Replace 'Sales' with the actual column name for sales data

|

| 197 |

+

"type": "bar"

|

| 198 |

+

}]

|

| 199 |

+

}

|

| 200 |

+

st_echarts(

|

| 201 |

+

options=option,

|

| 202 |

+

height="400px",

|

| 203 |

+

)

|

| 204 |

+

elif tab == 'Lineplot':

|

| 205 |

+

st.subheader('Walmart Daily Sales line plot')

|

| 206 |

+

option = {

|

| 207 |

+

"xAxis": {

|

| 208 |

+

"type": "category",

|

| 209 |

+

"data": data['Date'].tolist(),

|

| 210 |

+

},

|

| 211 |

+

"yAxis": {

|

| 212 |

+

"type": "value"

|

| 213 |

+

},

|

| 214 |

+

"series": [{

|

| 215 |

+

"data": data['Daily_Sales'].tolist(), # Replace 'Sales' with the actual column name for sales data

|

| 216 |

+

"type": "line"

|

| 217 |

+

}]

|

| 218 |

+

}

|

| 219 |

+

st_echarts(

|

| 220 |

+

options=option,

|

| 221 |

+

height="400px",

|

| 222 |

+

)

|

| 223 |

+

|

| 224 |

+

elif selected == 'Machine Learning':

|

| 225 |

+

with middle:

|

| 226 |

+

st.subheader('📈🎯 Daily Sales Prediction Widget')

|

| 227 |

+

tab = ui.tabs(options=['Method 1: Upload Dataset', 'Method 2: Fill the form'], default_value='Fill the form', key="none")

|

| 228 |

+

st.write('\n')

|

| 229 |

+

if tab == 'Method 2: Fill the form':

|

| 230 |

+

st.markdown('**Fill the form with correct data to make prediction**')

|

| 231 |

+

col1,col2,col3 = st.columns(3)

|

| 232 |

+

with col1:

|

| 233 |

+

|

| 234 |

+

st.write('Enter the Year')

|

| 235 |

+

year = ui.input(type='number', default_value=0, key="year")

|

| 236 |

+

with col2:

|

| 237 |

+

st.write('Enter the Month')

|

| 238 |

+

month = ui.input(type='number', default_value=0, key="month")

|

| 239 |

+

with col3:

|

| 240 |

+

st.write('Enter the Day')

|

| 241 |

+

day = ui.input(type='number', default_value=0, key="day")

|

| 242 |

+

|

| 243 |

+

|

| 244 |

+

st.write('Enter Store Number')

|

| 245 |

+

store = ui.input(type='number', default_value=0, key="input1")

|

| 246 |

+

|

| 247 |

+

st.write(f'The date : **{year}-{month}-{day}** you have entered is that a holiday ?')

|

| 248 |

+

holiday = [

|

| 249 |

+

{"label": "Yes", "value": 1, "id": "r1"},

|

| 250 |

+

{"label": "No", "value": 0, "id": "r2"},

|

| 251 |

+

]

|

| 252 |

+

holiday_flag = ui.radio_group(options=holiday, default_value=0, key="radio1")

|

| 253 |

+

|

| 254 |

+

col3,col4 = st.columns((2,2))

|

| 255 |

+

with col3:

|

| 256 |

+

st.write('Enter the Temperature')

|

| 257 |

+

temperature = ui.input(type='text', default_value=0, key="input2")

|

| 258 |

+

|

| 259 |

+

with col4:

|

| 260 |

+

st.write('Enter the Fuel Price')

|

| 261 |

+

fuel_price = ui.input(type='text', default_value=0, key="input3")

|

| 262 |

+

|

| 263 |

+

col5,col6 = st.columns((2,2))

|

| 264 |

+

with col5:

|

| 265 |

+

st.write('Enter the CPI')

|

| 266 |

+

cpi = ui.input(type='text', default_value=0, key="input4")

|

| 267 |

+

|

| 268 |

+

with col6:

|

| 269 |

+

st.write('Enter the Unemployment')

|

| 270 |

+

unemployment = ui.input(type='text', default_value=0, key="input5")

|

| 271 |

+

|

| 272 |

+

Store= int(store)

|

| 273 |

+

Holiday_Flag= int(holiday_flag)

|

| 274 |

+

Temperature= float(temperature)

|

| 275 |

+

Fuel_Price= float(fuel_price)

|

| 276 |

+

CPI= float(cpi)

|

| 277 |

+

Unemployment= float(unemployment)

|

| 278 |

+

Year = int(year)

|

| 279 |

+

Month = int(month)

|

| 280 |

+

Day = int(day)

|

| 281 |

+

form_data = pd.DataFrame([[Store, Holiday_Flag, Temperature, Fuel_Price, CPI, Unemployment, Year, Month, Day]],

|

| 282 |

+

columns=['Store', 'Holiday_Flag', 'Temperature',

|

| 283 |

+

'Fuel_Price', 'CPI', 'Unemployment','Year', 'Month', 'Day'])

|

| 284 |

+

st.subheader('Your provided data')

|

| 285 |

+

st.dataframe(form_data)

|

| 286 |

+

submit_btn = ui.button(text="Predict Daily Sales", key="styled_btn_tailwind", className="bg-blue-500 text-white")

|

| 287 |

+

if submit_btn:

|

| 288 |

+

if form_data.empty == False:

|

| 289 |

+

model_path = './models/Walmart'

|

| 290 |

+

model = load_model(model_path)

|

| 291 |

+

|

| 292 |

+

# Make predictions using the loaded model and form_data

|

| 293 |

+

pred = predict_model(model, data=form_data)

|

| 294 |

+

|

| 295 |

+

prediction = pred['prediction_label'].values[0]

|

| 296 |

+

|

| 297 |

+

# Display the prediction

|

| 298 |

+

with st.status("Daily Sales Prediction processing...", expanded=True) as status:

|

| 299 |

+

st.write("Handling data...")

|

| 300 |

+

time.sleep(2)

|

| 301 |

+

st.write("Load Model...")

|

| 302 |

+

time.sleep(1)

|

| 303 |

+

st.write("Load Data...")

|

| 304 |

+

time.sleep(1)

|

| 305 |

+

status.update(label="Daily Sales Prediction processing complete!", state="complete", expanded=False)

|

| 306 |

+

autoplay_audio("./assets/audio/mixkit-positive-notification-951.wav")

|

| 307 |

+

st.success(prediction)

|

| 308 |

+

else:

|

| 309 |

+

st.warning("Fill the form")

|

| 310 |

+

if tab == 'Method 1: Upload Dataset':

|

| 311 |

+

with middle:

|

| 312 |

+

st.header("Load your file")

|

| 313 |

+

uploaded_file = st.file_uploader('Upload your Dataset(.csv file)',

|

| 314 |

+

type=['csv'])

|

| 315 |

+

if uploaded_file:

|

| 316 |

+

df = load_data(uploaded_file)

|

| 317 |

+

df['Year'] = pd.to_datetime(df['Date']).dt.year

|

| 318 |

+

df['Month'] = pd.to_datetime(df['Date']).dt.month

|

| 319 |

+

df['Day'] = pd.to_datetime(df['Date']).dt.day

|

| 320 |

+

df = df.drop(['Date'], axis=1)

|

| 321 |

+

model_path = './models/Walmart'

|

| 322 |

+

model = load_model(model_path)

|

| 323 |

+

preds = predict_model(model, data=df)

|

| 324 |

+

# Assuming 'predictions' is a list or array-like object

|

| 325 |

+

predictions = preds['prediction_label'].values

|

| 326 |

+

|

| 327 |

+

# Create DataFrame

|

| 328 |

+

pp = pd.DataFrame(predictions, columns=['Daily_Sales_Prediction'])

|

| 329 |

+

|

| 330 |

+

ndf = pd.concat([df, pp], axis=1)

|

| 331 |

+

st.subheader("Daily_Sales Predictions")

|

| 332 |

+

with st.status("Daily Sales Prediction processing...", expanded=True) as status:

|

| 333 |

+

st.write("Handling data...")

|

| 334 |

+

time.sleep(2)

|

| 335 |

+

st.write("Load Model...")

|

| 336 |

+

time.sleep(1)

|

| 337 |

+

st.write("Load Data...")

|

| 338 |

+

time.sleep(1)

|

| 339 |

+

status.update(label="Daily Sales Prediction processing complete!", state="complete", expanded=False)

|

| 340 |

+

autoplay_audio("./assets/audio/mixkit-positive-notification-951.wav")

|

| 341 |

+

st.write(ndf)

|

| 342 |

+

|

| 343 |

+

|

| 344 |

+

if __name__ == '__main__':

|

| 345 |

+

main()

|

| 346 |

+

|
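In the form branch of `app.py`, every widget value goes through a bare `int()` or `float()` call, so a field the user clears or fills with text would raise `ValueError` before the model is called. Below is a minimal sketch of a tolerant converter, assuming `ui.input` hands back the typed text as a string (or the numeric default); `to_number`, `build_feature_row`, `FEATURE_COLUMNS` and `INTEGER_COLUMNS` are hypothetical names, with the column order copied from the `form_data` DataFrame in `app.py`.

```python
import math
from typing import Dict, List, Optional, Tuple, Union

Number = Union[int, float]

# Hypothetical constants: same column order as the form_data DataFrame in app.py
FEATURE_COLUMNS = ['Store', 'Holiday_Flag', 'Temperature', 'Fuel_Price',
                   'CPI', 'Unemployment', 'Year', 'Month', 'Day']
# Fields that app.py converts with int() rather than float()
INTEGER_COLUMNS = {'Store', 'Holiday_Flag', 'Year', 'Month', 'Day'}


def to_number(raw: object, as_int: bool = False) -> Optional[Number]:
    """Convert a raw widget value to a number; return None if blank or invalid."""
    if raw is None:
        return None
    text = str(raw).strip()
    if not text:
        return None
    try:
        value = float(text)
    except ValueError:
        return None
    if not math.isfinite(value):
        return None  # reject 'nan' and 'inf', which float() accepts
    if as_int:
        # Accept '4' or '4.0' for integer fields, reject '4.5'
        return int(value) if value.is_integer() else None
    return value


def build_feature_row(raw_values: Dict[str, object]) -> Tuple[List[Optional[Number]], List[str]]:
    """Return the converted values in FEATURE_COLUMNS order and the names of invalid fields."""
    row: List[Optional[Number]] = []
    missing: List[str] = []
    for name in FEATURE_COLUMNS:
        value = to_number(raw_values.get(name), as_int=name in INTEGER_COLUMNS)
        if value is None:
            missing.append(name)
        row.append(value)
    return row, missing
```

In the app, the form branch could pass the raw widget values to `build_feature_row` and show an `st.warning` naming the fields in `missing`, building `pd.DataFrame([row], columns=FEATURE_COLUMNS)` only when `missing` is empty. That also gives the currently unreachable "fill in the form" warning a real trigger.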

assets/audio/complete.wav

ADDED

Binary file (235 kB).

assets/audio/mixkit-correct-answer-tone-2870.wav

ADDED

Binary file (346 kB).

assets/audio/mixkit-message-pop-alert-2354.mp3

ADDED

Binary file (46.9 kB).

assets/audio/mixkit-positive-notification-951.wav

ADDED

Binary file (494 kB).

assets/images/heatmap.png

ADDED


assets/images/icons/android-chrome-192x192.png

ADDED


assets/images/icons/android-chrome-512x512.png

ADDED


assets/images/icons/apple-touch-icon.png

ADDED


assets/images/icons/favicon-16x16.png

ADDED


assets/images/icons/favicon-32x32.png

ADDED


assets/images/icons/favicon.ico

ADDED


assets/images/icons/favicon.png

ADDED


assets/images/walmart-logo-459.png

ADDED


assets/images/walpa-logo.png

ADDED


assets/images/walpa.png

ADDED


datasets/Walmart.csv

ADDED

The diff for this file is too large to render.

datasets/test_Walmart.csv

ADDED

The diff for this file is too large to render.

gps.txt

ADDED


@@ -0,0 +1,45 @@

```text
-111.0327 32.1555
-88.1668 39.4931
-121.3477 37.9886
-77.0891 38.7684
-87.3695 36.5298
-95.3271 29.5636
-79.2854 33.3776
-84.3594 33.7603
-81.5951 31.8469
-82.7852 39.9673
-118.5694 34.2801
-82.2711 27.9944
-80.6665 37.1505
-78.2971 36.0659
-103.3284 34.1866
-84.8482 37.8041
-93.0727 44.8955
-117.0266 32.9759
-97.0088 30.6631
-82.1349 33.5412
-76.8572 39.6366
-104.7973 41.1364
-123.2838 44.5714
-91.5127 31.5634
-117.3879 34.1041
-97.9895 26.1536
-80.2403 39.0212
-82.0174 38.9188
-94.6041 38.8837
-117.0774 32.6389
-88.2285 42.9937
-81.4383 30.2862
-83.3702 33.3263
-93.2422 45.1571
-100.4930 28.7043
-81.8765 27.2008
-85.4835 39.3378
-117.0731 32.6072
-79.7245 39.9002
-86.2356 32.3838
-75.7216 40.8332
-90.1516 32.4081
-77.8990 34.1641
-86.2169 32.3418
-96.6857 40.7399
```
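`gps.txt` holds the same 45 longitude/latitude pairs that `app.py` hardcodes in `longitude_values` and `latitude_values`, one `longitude latitude` pair per line. A minimal sketch of reading the file instead, assuming whitespace-separated columns and that line *i* belongs to the *i*-th store ID you pass in (the app makes the same positional assumption when it zips `data['Store'].unique()` with its coordinate lists). `parse_gps_lines`, `assign_to_stores` and `load_store_coordinates` are hypothetical helpers.

```python
from pathlib import Path
from typing import Dict, Iterable, List, Sequence, Tuple

LatLon = Tuple[float, float]


def parse_gps_lines(lines: Iterable[str]) -> List[LatLon]:
    """Parse 'longitude latitude' lines into (latitude, longitude) pairs."""
    coords: List[LatLon] = []
    for line_no, line in enumerate(lines, start=1):
        parts = line.split()
        if not parts:
            continue  # tolerate blank lines, e.g. a trailing newline
        if len(parts) != 2:
            raise ValueError(f"line {line_no}: expected 2 values, got {len(parts)}")
        lon, lat = float(parts[0]), float(parts[1])
        if not (-180.0 <= lon <= 180.0 and -90.0 <= lat <= 90.0):
            raise ValueError(f"line {line_no}: coordinates out of range")
        coords.append((lat, lon))  # folium.Marker takes [lat, lon]
    return coords


def assign_to_stores(coords: Sequence[LatLon], store_ids: Sequence[int]) -> Dict[int, LatLon]:
    """Pair coordinates with store IDs by position, refusing to silently truncate."""
    if len(coords) != len(store_ids):
        raise ValueError(f"{len(coords)} coordinates for {len(store_ids)} stores")
    return dict(zip(store_ids, coords))


def load_store_coordinates(path: str, store_ids: Sequence[int]) -> Dict[int, LatLon]:
    """Read a gps.txt-style file and map each store ID to its (lat, lon)."""
    lines = Path(path).read_text().splitlines()
    return assign_to_stores(parse_gps_lines(lines), store_ids)
```

The length check matters because the app's `zip(stores, longitude_values, latitude_values)` would quietly drop markers if the dataset and the coordinate list ever disagreed in size.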

logs.log

ADDED

The diff for this file is too large to render.

models/Walmart.pkl

ADDED


@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:ee3f98b064d0e4dffda99a132a69799512ea663014c512a55c70aa8adb40f03d
size 408431
```

models/walmart_model.pkl

ADDED


@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:f3c065a9745f1c9abae7b33294203b3f09698b0fced29bb590d59e077cf8d4ce
size 404508
```
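Both `models/*.pkl` entries are stored as Git LFS pointer files: a spec `version` line, the `sha256` object ID, and the payload `size` in bytes. In a checkout made without Git LFS, `load_model('./models/Walmart')` (which reads `./models/Walmart.pkl`) would receive this three-line text stub instead of the model and fail to deserialize it. A minimal sketch of a guard, assuming the pointer layout shown above; `parse_lfs_pointer` and `is_lfs_pointer` are hypothetical helpers, not part of PyCaret or Git LFS.

```python
from pathlib import Path
from typing import Dict, Optional

# Prefix of the first line of the pointer files shown above
LFS_SPEC_PREFIX = "version https://git-lfs.github.com/spec/"


def parse_lfs_pointer(text: str) -> Optional[Dict[str, str]]:
    """Return the pointer's key/value fields, or None if text is not an LFS pointer."""
    lines = [line.strip() for line in text.strip().splitlines()]
    if not lines or not lines[0].startswith(LFS_SPEC_PREFIX):
        return None
    fields: Dict[str, str] = {}
    for line in lines:
        key, _, value = line.partition(" ")
        fields[key] = value
    # Require a sha256 object ID and a numeric size, as in the pointers above
    if not fields.get("oid", "").startswith("sha256:") or not fields.get("size", "").isdigit():
        return None
    return fields


def is_lfs_pointer(path: str) -> bool:
    """True if the file on disk is still an LFS pointer rather than the real payload."""
    head = Path(path).read_bytes()[:1024]  # the pointers above are far smaller than 1 KiB
    try:
        text = head.decode("utf-8")
    except UnicodeDecodeError:
        return False  # binary data, e.g. an actual pickle
    return parse_lfs_pointer(text) is not None
```

Before loading the model, the app could check `is_lfs_pointer('./models/Walmart.pkl')` and, if it returns `True`, show an error suggesting `git lfs pull`, which fetches the real objects into an existing clone.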

readme.md

ADDED


@@ -0,0 +1,7 @@

```markdown
**Walpa** is Walmart Prediction App V1.0.7, a machine-learning-based Python Streamlit app developed for the Keyce IABD B3 Continuous Examination.
Development Team: Jason Ntone
Owner: Jason Ntone

**Important:** This is not an official Walmart Inc. app; it is for educational purposes only. All data used cover 2010 to 2012 and come from only 45 stores. License: MIT License
# Project Title: Walpa
# Goal: Predict the daily sales of a targeted store
```

requirements.txt

ADDED

Binary file (606 Bytes).

walmart.ipynb

ADDED

The diff for this file is too large to render.