Commit c6a1f8c

Parent(s): c4d8fc9

topic cluster and code cluster

- README.md +12 -6

- app.py +154 -80

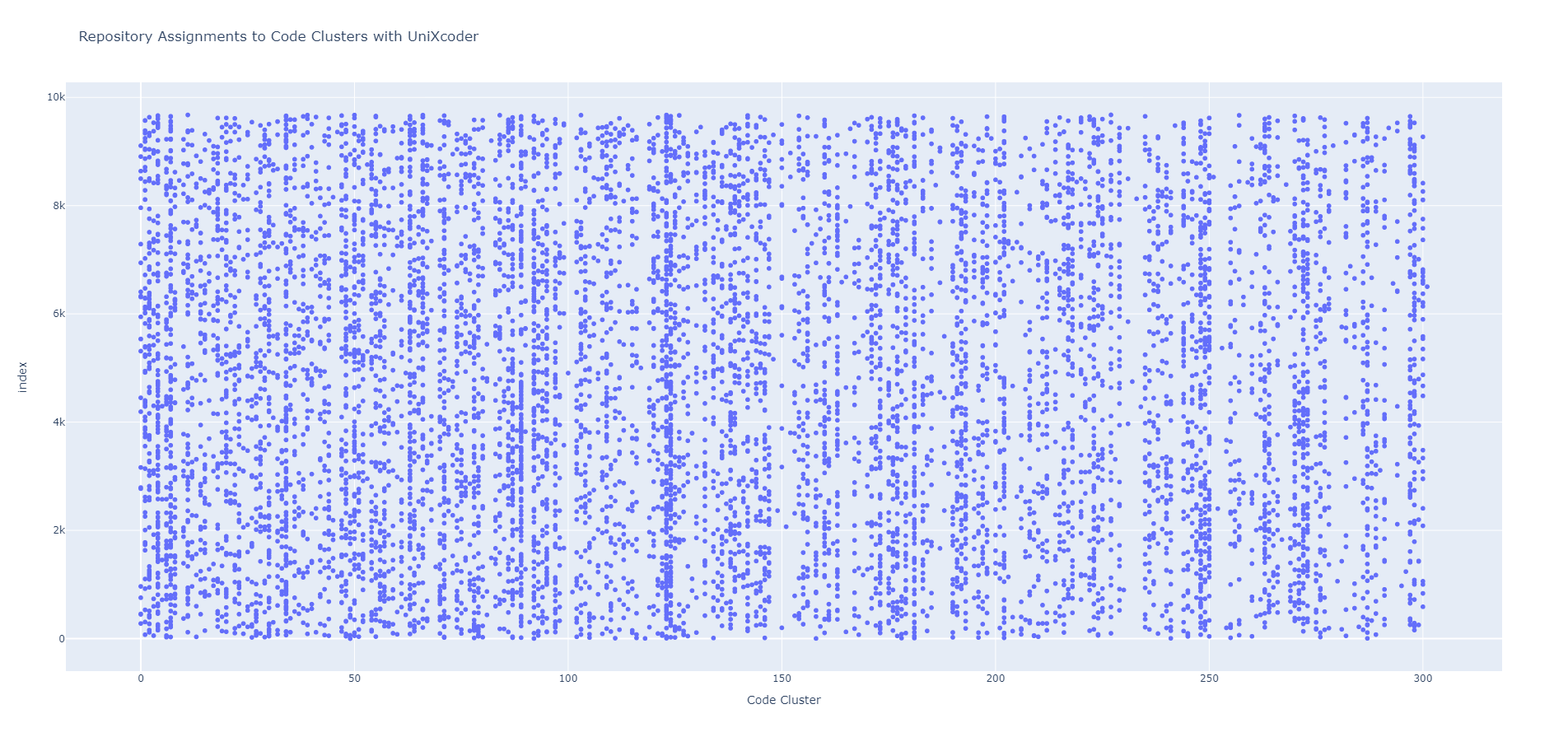

- assets/Repository-Code Cluster Assignments.png +0 -0

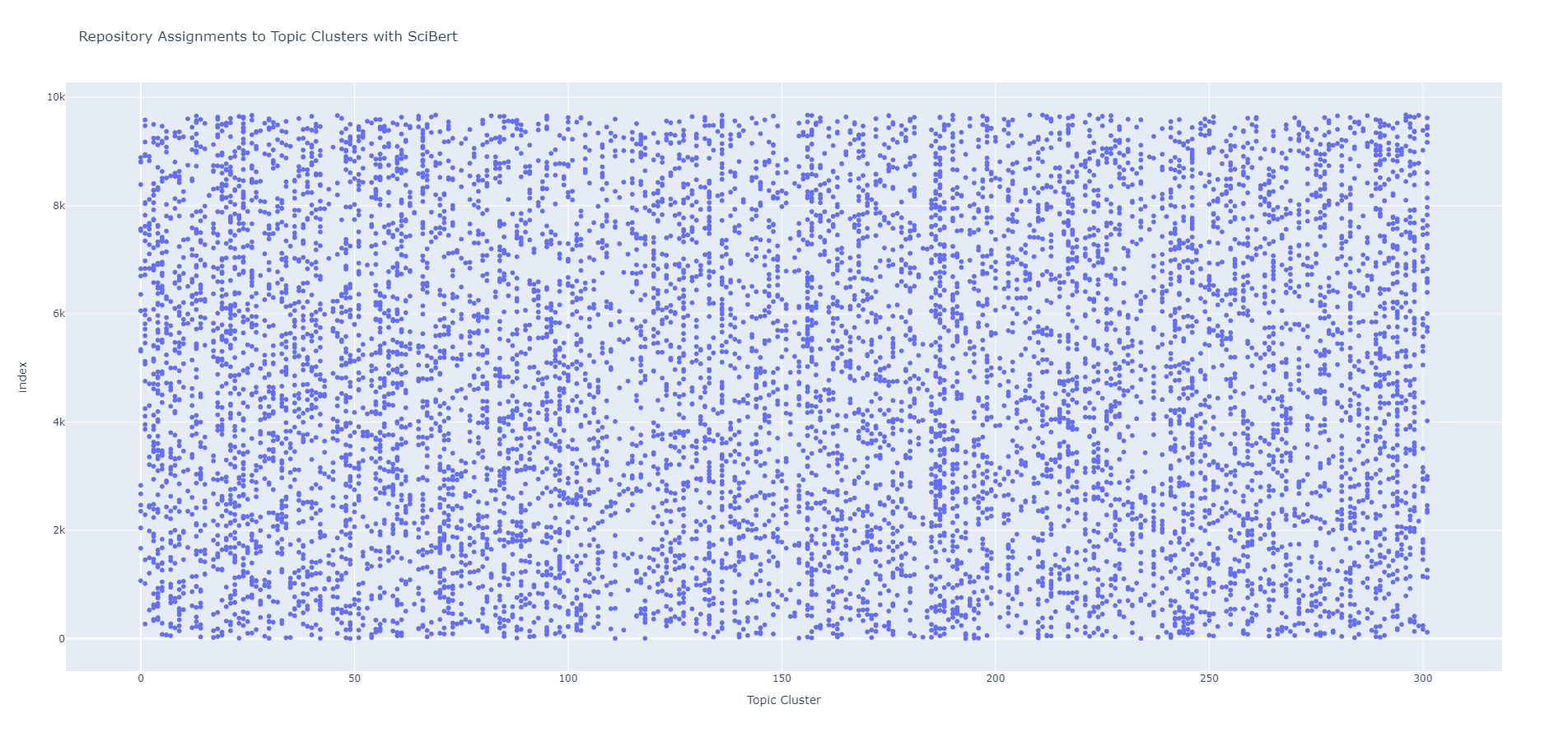

- assets/Repository-Topic Cluster Assignments.png +0 -0

- common/__init__.py +0 -0

- {data → common}/pair_classifier.py +0 -0

- {data → common}/repo_doc.py +0 -0

- data/{kmeans_model_scibert.pkl → kmeans_model_code_unixcoder.pkl} +1 -1

- data/kmeans_model_topic_scibert.pkl +3 -0

- data/repo_code_clusters.json +0 -0

- data/repo_code_clusters_test.json +0 -0

- data/{repo_clusters.json → repo_topic_clusters.json} +0 -0

- data/{repo_clusters_test.json → repo_topic_clusters_test.json} +0 -0

- requirements.txt +2 -1

README.md

CHANGED

```diff
@@ -27,18 +27,19 @@ RepoSnipy is a neural search engine built with [streamlit](https://github.com/st

 Compared to the previous generation of [RepoSnipy](https://github.com/RepoAnalysis/RepoSnipy), the latest version has such new features below:
 * It uses the [RepoSim4Py](https://github.com/RepoMining/RepoSim4Py), which is based on [RepoSim4Py pipeline](https://huggingface.co/Henry65/RepoSim4Py), to create multi-level embeddings for Python repositories.
-* Multi-level embeddings --- code,
 * It uses the [SciBERT](https://arxiv.org/abs/1903.10676) model to analyse repository topics and to generate embeddings for topics.
-* Transfer multiple topics into one cluster --- it uses a [
-*
 More generally, SimilarityCal model seem repositories with same cluster as label 1, otherwise as label 0. The input features of SimilarityCal model are two repositories' embeddings concatenation, and the binary labels are mentioned above.
 The output of SimilarityCal model are scores of how similar or dissimilar two repositories are.

-We have created a [vector dataset](data/index.bin) (stored as docarray index) of approximate 9700 GitHub Python repositories that has license and over 300 stars by the time of


 ## Dataset
-As mentioned above, RepoSnipy needs [vector](data/index.bin), [json](data/


 ## License
@@ -51,4 +52,9 @@ The model and the fine-tuning dataset used:

 * [UniXCoder](https://arxiv.org/abs/2203.03850)
 * [AdvTest](https://arxiv.org/abs/1909.09436)
-* [SciBERT](https://arxiv.org/abs/1903.10676)
```

```diff
@@ -27,18 +27,19 @@ RepoSnipy is a neural search engine built with [streamlit](https://github.com/st

 Compared to the previous generation of [RepoSnipy](https://github.com/RepoAnalysis/RepoSnipy), the latest version has such new features below:
 * It uses the [RepoSim4Py](https://github.com/RepoMining/RepoSim4Py), which is based on [RepoSim4Py pipeline](https://huggingface.co/Henry65/RepoSim4Py), to create multi-level embeddings for Python repositories.
+* Multi-level embeddings --- code, doc, readme, requirement, and repository.
 * It uses the [SciBERT](https://arxiv.org/abs/1903.10676) model to analyse repository topics and to generate embeddings for topics.
+* Transfer multiple topics into one cluster --- it uses a KMeans model ([kmeans_model_topic_scibert](data/kmeans_model_topic_scibert.pkl)) to analyse topic embeddings and to cluster repositories based on topics.
+* Clustering by code snippets --- it uses a KMeans model ([kmeans_model_code_unixcoder](data/kmeans_model_code_unixcoder.pkl)) to analyse code embeddings and to cluster repositories based on code snippets.
+* It uses the [SimilarityCal](data/SimilarityCal_model_NO1.pt) model, which is a binary classifier to calculate cluster similarity based on repository-level embeddings and cluster (topic or code cluster number).
 More generally, SimilarityCal model seem repositories with same cluster as label 1, otherwise as label 0. The input features of SimilarityCal model are two repositories' embeddings concatenation, and the binary labels are mentioned above.
 The output of SimilarityCal model are scores of how similar or dissimilar two repositories are.

+We have created a [vector dataset](data/index.bin) (stored as docarray index) of approximate 9700 GitHub Python repositories that has license and over 300 stars by the time of March 2024. The accordingly generated clusters were putted in two json datasets ([repo_topic_clusters](data/repo_topic_clusters.json) and [repo_code_clusters](data/repo_code_clusters.json)) (stored repo-cluster as key-values accordingly).


 ## Dataset
+As mentioned above, RepoSnipy needs [vector](data/index.bin), clusters json dataset ([repo_topic_clusters](data/repo_topic_clusters.json) and [repo_code_clusters](data/repo_code_clusters.json)), KMeans models ([kmeans_model_topic_scibert](data/kmeans_model_topic_scibert.pkl) and [kmeans_model_code_unixcoder](data/kmeans_model_code_unixcoder.pkl)) and [SimilarityCal](data/SimilarityCal_model_NO1.pt) model when you start up it. For your convenience, we have uploaded them in the folder [data](data) of this repository.


 ## License
@@ -51,4 +52,9 @@ The model and the fine-tuning dataset used:

 * [UniXCoder](https://arxiv.org/abs/2203.03850)
 * [AdvTest](https://arxiv.org/abs/1909.09436)
+* [SciBERT](https://arxiv.org/abs/1903.10676)
+* [RepoSnipy (old version)](https://github.com/RepoAnalysis/RepoSnipy)
+* [RepoSnipy HuggingFace Spaces (old version)](https://huggingface.co/spaces/Lazyhope/RepoSnipy)
+* [RepoSim4Py](https://github.com/RepoMining/RepoSim4Py)
+* [SimilarityCal](https://github.com/RepoMining/SimilarityCal)
+* [RepoSnipy](https://github.com/RepoMining/RepoSnipy)
```
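The README describes SimilarityCal as a binary classifier whose input is the concatenation of two repositories' embeddings, trained with label 1 when both repositories share a (topic or code) cluster and 0 otherwise; in app.py the score is read off as `softmax(model_output)[:, 1]`. A minimal pure-Python sketch of that labelling and scoring scheme, assuming toy 2-dimensional embeddings and a hypothetical linear layer (`weights`, `bias`) standing in for the real `PairClassifier` from `common/pair_classifier.py`, whose architecture this commit does not show. The helper names and repository names are illustrative only.

```python
import math
from itertools import combinations


def build_pairs(embeddings, clusters):
    """Build (features, label) pairs as the README describes:
    features = concatenation of two repository embeddings,
    label = 1 if both repositories share a cluster, else 0."""
    pairs = []
    for name_a, name_b in combinations(sorted(embeddings), 2):
        features = list(embeddings[name_a]) + list(embeddings[name_b])
        label = 1 if clusters[name_a] == clusters[name_b] else 0
        pairs.append((features, label))
    return pairs


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def similarity_score(weights, bias, features):
    """Hypothetical two-class linear pair classifier (an assumption, not PairClassifier).
    Returns P(class 1), the 'same cluster' probability, like softmax(...)[:, 1]."""
    logits = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)[1]


# Toy data: hypothetical repository names, embeddings and cluster numbers.
embeddings = {"a/x": [1.0, 0.0], "b/y": [0.0, 1.0], "c/z": [1.0, 1.0]}
clusters = {"a/x": 0, "b/y": 1, "c/z": 0}
pairs = build_pairs(embeddings, clusters)
print([label for _, label in pairs])  # [0, 1, 0]
```

With untrained all-zero weights every pair scores exactly 0.5; training is what pushes same-cluster pairs towards 1.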

app.py

CHANGED

```diff
@@ -12,13 +12,16 @@ from docarray import DocList
 from docarray.index import InMemoryExactNNIndex
 from transformers import pipeline
 from transformers import AutoTokenizer, AutoModel
-from
-from
 from nltk.stem import WordNetLemmatizer

 nltk.download("wordnet")
-
 SIMILARITY_CAL_MODEL_PATH = Path(__file__).parent.joinpath("data/SimilarityCal_model_NO1.pt")
 device = (
 "cuda"
 if torch.cuda.is_available()
@@ -29,14 +32,13 @@ device = (

 # 1. Product environment
 # INDEX_PATH = Path(__file__).parent.joinpath("data/index.bin")
-#
-
-

 # 2. Developing environment
 INDEX_PATH = Path(__file__).parent.joinpath("data/index_test.bin")
-
-


 @st.cache_resource(show_spinner="Loading repositories basic information...")
@@ -60,16 +62,28 @@ def load_index():
 return InMemoryExactNNIndex[RepoDoc](index_file_path=INDEX_PATH), default_doc


-@st.cache_resource(show_spinner="Loading repositories clusters...")
-def
 """
-The function to load the repo-
-:return: a dictionary with the repo-
 """
-with open(
-

-return


 @st.cache_resource(show_spinner="Loading RepoSim4Py pipeline model...")
@@ -99,16 +113,26 @@ def load_scibert_model():
 """
 tokenizer = AutoTokenizer.from_pretrained(SCIBERT_MODEL_PATH)
 scibert_model = AutoModel.from_pretrained(SCIBERT_MODEL_PATH).to(device)
 return tokenizer, scibert_model


-@st.cache_resource(show_spinner="Loading KMeans model...")
-def
 """
-The function to load KMeans model
-:return: a KMeans model
 """
-return joblib.load(


 @st.cache_resource(show_spinner="Loading SimilarityCal model...")
@@ -117,6 +141,7 @@ def load_similaritycal_model():
 sim_cal_model.load_state_dict(torch.load(SIMILARITY_CAL_MODEL_PATH, map_location=device))
 sim_cal_model = sim_cal_model.to(device)
 sim_cal_model = sim_cal_model.eval()
 return sim_cal_model


@@ -130,6 +155,7 @@ def generate_scibert_embedding(tokenizer, scibert_model, text):
 outputs = scibert_model(**inputs)
 # Use mean pooling for sentence representation
 embeddings = outputs.last_hidden_state.mean(dim=1).cpu().detach().numpy()
 return embeddings


@@ -150,8 +176,10 @@ def run_pipeline_model(_model, repo_name, github_token):
 if not extracted_infos:
 return None

 with st.spinner(f"Generating embeddings for {repo_name}..."):
-repo_info = _model.forward(extracted_infos)[0]

 return repo_info

@@ -175,36 +203,50 @@ def run_index_search(index, query, search_field, limit):
 return search_results


-def
 """
-The function to search cluster number for such repositories.
-:param
 :param repo_name_list: list or array represent repository names
-:return: cluster number list
 """
-
 for repo_name in repo_name_list:
-
-


-def run_similaritycal_search(index, repo_clusters, model, query_doc, query_cluster_number, limit
 """
 The function to run SimilarityCal model.
 :param index: index file
-:param repo_clusters: repo-clusters json file
 :param model: SimilarityCal model
 :param query_doc: query repo doc
-:param query_cluster_number: query repo cluster number
 :param limit: limit
-:param same_cluster: whether searching for same cluster
 :return: result dataframe
 """
 docs = index._docs
 input_embeddings_list = []
 result_dl = DocList[RepoDoc]()
 for doc in docs:
-if
 continue
 if doc.name != query_doc.name:
 e1, e2 = (torch.Tensor(query_doc.repository_embedding),
@@ -219,17 +261,24 @@ def run_similaritycal_search(index, repo_clusters, model, query_doc, query_clust
 similarity_scores = softmax(model_output)[:, 1].cpu().detach().numpy()
 df = result_dl.to_dataframe()
 df["scores"] = similarity_scores
-


 if __name__ == "__main__":
 # Loading dataset and models
 index, default_doc = load_index()
-
 pipeline_model = load_pipeline_model()
 lemmatizer = WordNetLemmatizer()
 tokenizer, scibert_model = load_scibert_model()
-
 sim_cal_model = load_similaritycal_model()

 # Setting the sidebar
@@ -254,8 +303,8 @@ if __name__ == "__main__":

 st.multiselect(
 label="Display columns",
-options=["scores", "name", "topics", "cluster
-default=["scores", "name", "topics", "cluster
 help="Select columns to display in the search results",
 key="display_columns",
 )
@@ -291,10 +340,11 @@ if __name__ == "__main__":
 records = index.filter({"name": {"$eq": repo_name}})
 # 1) Building the query information
 query_doc = default_doc.copy() if not records else records[0]
-# 2) Recording the cluster
-

-# Importance 1 ---- situation need to update repository information and cluster
 if st.session_state.update_index or not records:
 # 1) Updating repository information by using RepoSim4Py pipeline
 repo_info = run_pipeline_model(pipeline_model, repo_name, st.session_state.github_token)
@@ -317,13 +367,18 @@ if __name__ == "__main__":
 query_doc.repository_embedding = None if np.all(repo_info["mean_repo_embedding"] == 0) else repo_info[
 "mean_repo_embedding"].reshape(-1)

-# 2) Updating cluster number
 topics_text = ' '.join(
 [lemmatizer.lemmatize(topic.lower().replace('-', ' ')) for topic in query_doc.topics])
 topic_embeddings = generate_scibert_embedding(tokenizer, scibert_model, topics_text)
-

-# Importance 2 ---- update index file and repository clusters
 if st.session_state.update_index:
 if not query_doc.license:
 st.warning(
@@ -337,19 +392,24 @@ if __name__ == "__main__":
 )
 else:
 index.index(query_doc)
-

-with st.spinner("Persisting the index and repository clusters..."):
 index.persist(str(INDEX_PATH))
-with open(
-json.dump(
 st.success("Repository updated to the index!")

 load_index.clear()
-

 st.session_state["query_doc"] = query_doc
-st.session_state["

 # 2. Repository cannot be matched
 else:
@@ -358,7 +418,8 @@ if __name__ == "__main__":
 # Starting to query
 if "query_doc" in st.session_state:
 query_doc = st.session_state.query_doc
-
 limit = st.session_state.search_results_limit

 # Showing the query repository information
@@ -368,7 +429,8 @@ if __name__ == "__main__":
 {
 "name": query_doc.name,
 "topics": query_doc.topics,
-"cluster
 "stars": query_doc.stars,
 "license": query_doc.license,
 }
@@ -377,15 +439,18 @@ if __name__ == "__main__":
 )

 display_columns = st.session_state.display_columns
-
 ["Code_sim", "Docstring_sim", "Readme_sim", "Requirement_sim",
-"Repository_sim", "

 with code_sim_tab:
 if query_doc.code_embedding is not None:
 code_sim_res = run_index_search(index, query_doc, "code_embedding", limit)
-
-code_sim_res["cluster
 st.dataframe(code_sim_res[display_columns])
 else:
 st.error("No function code was extracted for this repository!")
@@ -393,8 +458,10 @@ if __name__ == "__main__":
 with doc_sim_tab:
 if query_doc.doc_embedding is not None:
 doc_sim_res = run_index_search(index, query_doc, "doc_embedding", limit)
-
-doc_sim_res["cluster
 st.dataframe(doc_sim_res[display_columns])
 else:
 st.error("No function docstring was extracted for this repository!")
@@ -402,8 +469,10 @@ if __name__ == "__main__":
 with readme_sim_tab:
 if query_doc.readme_embedding is not None:
 readme_sim_res = run_index_search(index, query_doc, "readme_embedding", limit)
-
-readme_sim_res["cluster
 st.dataframe(readme_sim_res[display_columns])
 else:
 st.error("No readme file was extracted for this repository!")
@@ -411,8 +480,10 @@ if __name__ == "__main__":
 with requirement_sim_tab:
 if query_doc.requirement_embedding is not None:
 requirement_sim_res = run_index_search(index, query_doc, "requirement_embedding", limit)
-
-requirement_sim_res["cluster
 st.dataframe(requirement_sim_res[display_columns])
 else:
 st.error("No requirement file was extracted for this repository!")
@@ -421,31 +492,34 @@ if __name__ == "__main__":
 if query_doc.repository_embedding is not None:
 # Repo Sim tab
 repo_sim_res = run_index_search(index, query_doc, "repository_embedding", limit)
-
-repo_sim_res["cluster
 st.dataframe(repo_sim_res[display_columns])
 else:
 st.error("No such useful information was extracted for this repository!")

-with
 if query_doc.repository_embedding is not None:
-cluster_df = run_similaritycal_search(index,
-query_doc,
-
-
-cluster_df["
-
 else:
 st.error("No such useful information was extracted for this repository!")

-with
 if query_doc.repository_embedding is not None:
-
-
-
-
-
-
-
 else:
-
```

```diff
@@ -12,13 +12,16 @@ from docarray import DocList
 from docarray.index import InMemoryExactNNIndex
 from transformers import pipeline
 from transformers import AutoTokenizer, AutoModel
+from common.repo_doc import RepoDoc
+from common.pair_classifier import PairClassifier
 from nltk.stem import WordNetLemmatizer

 nltk.download("wordnet")
+KMEANS_TOPIC_MODEL_PATH = Path(__file__).parent.joinpath("data/kmeans_model_topic_scibert.pkl")
+KMEANS_CODE_MODEL_PATH = Path(__file__).parent.joinpath("data/kmeans_model_code_unixcoder.pkl")
 SIMILARITY_CAL_MODEL_PATH = Path(__file__).parent.joinpath("data/SimilarityCal_model_NO1.pt")
+SCIBERT_MODEL_PATH = "allenai/scibert_scivocab_uncased"
+# SCIBERT_MODEL_PATH = Path(__file__).parent.joinpath("data/scibert_scivocab_uncased") # Download locally
 device = (
 "cuda"
 if torch.cuda.is_available()
@@ -29,14 +32,13 @@ device = (

 # 1. Product environment
 # INDEX_PATH = Path(__file__).parent.joinpath("data/index.bin")
+# TOPIC_CLUSTER_PATH = Path(__file__).parent.joinpath("data/repo_topic_clusters.json")
+# CODE_CLUSTER_PATH = Path(__file__).parent.joinpath("data/repo_code_clusters.json")

 # 2. Developing environment
 INDEX_PATH = Path(__file__).parent.joinpath("data/index_test.bin")
+TOPIC_CLUSTER_PATH = Path(__file__).parent.joinpath("data/repo_topic_clusters_test.json")
+CODE_CLUSTER_PATH = Path(__file__).parent.joinpath("data/repo_code_clusters_test.json")


 @st.cache_resource(show_spinner="Loading repositories basic information...")
@@ -60,16 +62,28 @@ def load_index():
 return InMemoryExactNNIndex[RepoDoc](index_file_path=INDEX_PATH), default_doc


+@st.cache_resource(show_spinner="Loading repositories topic clusters...")
+def load_repo_topic_clusters():
+"""
+The function to load the repo-topic_clusters file
+:return: a dictionary with the repo-topic_clusters
+"""
+with open(TOPIC_CLUSTER_PATH, "r") as file:
+repo_topic_clusters = json.load(file)
+
+return repo_topic_clusters
+
+
+@st.cache_resource(show_spinner="Loading repositories code clusters...")
+def load_repo_code_clusters():
 """
+The function to load the repo-code_clusters file
+:return: a dictionary with the repo-code_clusters
 """
+with open(CODE_CLUSTER_PATH, "r") as file:
+repo_code_clusters = json.load(file)

+return repo_code_clusters


 @st.cache_resource(show_spinner="Loading RepoSim4Py pipeline model...")
```

```diff
@@ -99,16 +113,26 @@ def load_scibert_model():
 """
 tokenizer = AutoTokenizer.from_pretrained(SCIBERT_MODEL_PATH)
 scibert_model = AutoModel.from_pretrained(SCIBERT_MODEL_PATH).to(device)
+
 return tokenizer, scibert_model


+@st.cache_resource(show_spinner="Loading KMeans model (topic clusters)...")
+def load_topic_kmeans_model():
+"""
+The function to load KMeans model (topic clusters)
+:return: a KMeans model (topic clusters)
+"""
+return joblib.load(KMEANS_TOPIC_MODEL_PATH)
+
+
+@st.cache_resource(show_spinner="Loading KMeans model (code clusters)...")
+def load_code_kmeans_model():
 """
+The function to load KMeans model (code clusters)
+:return: a KMeans model (code clusters)
 """
+return joblib.load(KMEANS_CODE_MODEL_PATH)


 @st.cache_resource(show_spinner="Loading SimilarityCal model...")
@@ -117,6 +141,7 @@ def load_similaritycal_model():
 sim_cal_model.load_state_dict(torch.load(SIMILARITY_CAL_MODEL_PATH, map_location=device))
 sim_cal_model = sim_cal_model.to(device)
 sim_cal_model = sim_cal_model.eval()
+
 return sim_cal_model


@@ -130,6 +155,7 @@ def generate_scibert_embedding(tokenizer, scibert_model, text):
 outputs = scibert_model(**inputs)
 # Use mean pooling for sentence representation
 embeddings = outputs.last_hidden_state.mean(dim=1).cpu().detach().numpy()
+
 return embeddings
```
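`generate_scibert_embedding` mean-pools SciBERT's `last_hidden_state` over the token axis. A plain `mean(dim=1)` averages every position; if the tokenizer call (not shown in this diff) pads its inputs, padding tokens are averaged in as well, and an attention-mask-weighted mean is the usual alternative. A minimal pure-Python sketch of both, assuming a list of per-token vectors and a 0/1 attention mask; `mean_pool` and `masked_mean_pool` are hypothetical helper names.

```python
def mean_pool(token_vectors):
    """Average all token vectors, as .mean(dim=1) does for one sequence."""
    n = len(token_vectors)
    return [sum(column) / n for column in zip(*token_vectors)]


def masked_mean_pool(token_vectors, attention_mask):
    """Average only the positions whose attention-mask entry is 1."""
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    if not kept:
        raise ValueError("attention mask selects no tokens")
    return mean_pool(kept)


tokens = [[1.0, 1.0], [3.0, 3.0], [0.0, 0.0]]  # last row plays the role of a padding token
mask = [1, 1, 0]
print(mean_pool(tokens))               # [1.3333333333333333, 1.3333333333333333]
print(masked_mean_pool(tokens, mask))  # [2.0, 2.0]
```

In PyTorch the masked version is commonly written as `(hidden * mask.unsqueeze(-1)).sum(dim=1) / mask.sum(dim=1, keepdim=True)`, with `mask` cast to the hidden state's dtype.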

```diff
@@ -150,8 +176,10 @@ def run_pipeline_model(_model, repo_name, github_token):
 if not extracted_infos:
 return None

+st_proress_bar = st.progress(0.0)
 with st.spinner(f"Generating embeddings for {repo_name}..."):
+repo_info = _model.forward(extracted_infos, st_progress=st_proress_bar)[0]
+st_proress_bar.empty()

 return repo_info

@@ -175,36 +203,50 @@ def run_index_search(index, query, search_field, limit):
 return search_results


+def run_topic_cluster_search(repo_topic_clusters, repo_name_list):
+"""
+The function to search topic cluster number for such repositories.
+:param repo_topic_clusters: dictionary with repo-topic_clusters
+:param repo_name_list: list or array represent repository names
+:return: topic cluster number list
+"""
+topic_clusters = []
+for repo_name in repo_name_list:
+topic_clusters.append(repo_topic_clusters[repo_name])
+
+return topic_clusters
+
+
+def run_code_cluster_search(repo_code_clusters, repo_name_list):
 """
+The function to search code cluster number for such repositories.
+:param repo_code_clusters: dictionary with repo-code_clusters
 :param repo_name_list: list or array represent repository names
+:return: code cluster number list
 """
+code_clusters = []
 for repo_name in repo_name_list:
+code_clusters.append(repo_code_clusters[repo_name])
+
+return code_clusters


+def run_similaritycal_search(index, repo_clusters, model, query_doc, query_cluster_number, limit):
 """
 The function to run SimilarityCal model.
 :param index: index file
+:param repo_clusters: repo-clusters (topic_cluster or code_cluster) json file
 :param model: SimilarityCal model
 :param query_doc: query repo doc
+:param query_cluster_number: query repo cluster number (code or topic)
 :param limit: limit
 :return: result dataframe
 """
 docs = index._docs
 input_embeddings_list = []
 result_dl = DocList[RepoDoc]()
 for doc in docs:
+if query_cluster_number != repo_clusters[doc.name]:
 continue
 if doc.name != query_doc.name:
 e1, e2 = (torch.Tensor(query_doc.repository_embedding),
```
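The new `load_repo_*_clusters` functions read a JSON object mapping each repository name to an integer cluster, and `run_topic_cluster_search` / `run_code_cluster_search` look every search-result name up with `repo_clusters[repo_name]`, which raises `KeyError` if the index and the JSON file ever drift apart. A small sketch of the same round trip (`json.dump(..., indent=4)` as in the persist step, then `json.load`) followed by a tolerant lookup; the `-1` fallback borrows the sentinel this commit already uses for unmatched repositories, and `lookup_clusters` plus the repository names are hypothetical.

```python
import io
import json


def lookup_clusters(repo_clusters, repo_names, missing=-1):
    """Return one cluster number per repository name, using `missing`
    for names absent from the mapping instead of raising KeyError."""
    return [repo_clusters.get(name, missing) for name in repo_names]


# Persist and reload a repo -> cluster mapping the way app.py does,
# but with an in-memory buffer instead of TOPIC_CLUSTER_PATH.
buffer = io.StringIO()
json.dump({"owner/repo-a": 3, "owner/repo-b": 7}, buffer, indent=4)
buffer.seek(0)
repo_topic_clusters = json.load(buffer)

print(lookup_clusters(repo_topic_clusters, ["owner/repo-b", "owner/unknown"]))  # [7, -1]
```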

```diff
@@ -219,17 +261,24 @@ def run_similaritycal_search(index, repo_clusters, model, query_doc, query_clust
 similarity_scores = softmax(model_output)[:, 1].cpu().detach().numpy()
 df = result_dl.to_dataframe()
 df["scores"] = similarity_scores
+
+sorted_df = df.sort_values(by='scores', ascending=False).reset_index(drop=True).head(limit)
+sorted_df["rankings"] = sorted_df["scores"].rank(ascending=False).astype(int)
+sorted_df.drop(columns="scores", inplace=True)
+
+return sorted_df


 if __name__ == "__main__":
 # Loading dataset and models
 index, default_doc = load_index()
+repo_topic_clusters = load_repo_topic_clusters()
+repo_code_clusters = load_repo_code_clusters()
 pipeline_model = load_pipeline_model()
 lemmatizer = WordNetLemmatizer()
 tokenizer, scibert_model = load_scibert_model()
+topic_kmeans = load_topic_kmeans_model()
+code_kmeans = load_code_kmeans_model()
 sim_cal_model = load_similaritycal_model()

 # Setting the sidebar
```
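After this change `run_similaritycal_search` only scores repositories whose stored cluster equals the query's, sorts by score, keeps at most `limit` rows, converts scores into `rankings`, and drops the raw scores. A pure-Python sketch of that flow, assuming a precomputed `scores` dict in place of the SimilarityCal forward pass; `same_cluster_candidates`, `rank_top` and the data are illustrative. One design note: pandas `Series.rank` defaults to `method="average"`, so tied scores get fractional ranks such as 1.5, which `astype(int)` then truncates; the sketch simply numbers the sorted rows from 1 instead.

```python
def same_cluster_candidates(repo_clusters, query_name, query_cluster):
    """Names sharing the query's cluster, excluding the query itself."""
    return [
        name
        for name, cluster in repo_clusters.items()
        if cluster == query_cluster and name != query_name
    ]


def rank_top(scores, candidates, limit):
    """Sort candidates by descending score, keep `limit`, number them from 1."""
    ordered = sorted(candidates, key=lambda name: scores[name], reverse=True)[:limit]
    return [(rank, name) for rank, name in enumerate(ordered, start=1)]


# Hypothetical cluster assignments and SimilarityCal outputs.
repo_clusters = {"q/query": 2, "a/one": 2, "b/two": 5, "c/three": 2, "d/four": 2}
scores = {"a/one": 0.41, "c/three": 0.93, "d/four": 0.77}

candidates = same_cluster_candidates(repo_clusters, "q/query", 2)
print(rank_top(scores, candidates, limit=2))  # [(1, 'c/three'), (2, 'd/four')]
```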

```diff
@@ -254,8 +303,8 @@ if __name__ == "__main__":

 st.multiselect(
 label="Display columns",
+options=["scores", "name", "topics", "code cluster", "topic cluster", "stars", "license"],
+default=["scores", "name", "topics", "code cluster", "topic cluster", "stars", "license"],
 help="Select columns to display in the search results",
 key="display_columns",
 )
@@ -291,10 +340,11 @@ if __name__ == "__main__":
 records = index.filter({"name": {"$eq": repo_name}})
 # 1) Building the query information
 query_doc = default_doc.copy() if not records else records[0]
+# 2) Recording the topic and code cluster numbers
+topic_cluster_number = -1 if not records else repo_topic_clusters[repo_name]
+code_cluster_number = -1 if not records else repo_code_clusters[repo_name]

+# Importance 1 ---- situation need to update repository information and cluster numbers
 if st.session_state.update_index or not records:
 # 1) Updating repository information by using RepoSim4Py pipeline
 repo_info = run_pipeline_model(pipeline_model, repo_name, st.session_state.github_token)
@@ -317,13 +367,18 @@ if __name__ == "__main__":
 query_doc.repository_embedding = None if np.all(repo_info["mean_repo_embedding"] == 0) else repo_info[
 "mean_repo_embedding"].reshape(-1)

+# 2) Updating topic cluster number
 topics_text = ' '.join(
 [lemmatizer.lemmatize(topic.lower().replace('-', ' ')) for topic in query_doc.topics])
 topic_embeddings = generate_scibert_embedding(tokenizer, scibert_model, topics_text)
+topic_cluster_number = int(topic_kmeans.predict(topic_embeddings)[0])
+
+# 3) Updating code cluster number
+code_embeddings = np.zeros((768,),
+dtype=np.float32) if query_doc.code_embedding is None else query_doc.code_embedding
+code_cluster_number = int(code_kmeans.predict(code_embeddings.reshape(1, -1))[0])

+# Importance 2 ---- update index file and repository clusters (topic and code) files
 if st.session_state.update_index:
 if not query_doc.license:
 st.warning(
```
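Both new cluster numbers come from `KMeans.predict`, which assigns a vector to the centroid at the smallest Euclidean distance. The code branch substitutes a 768-dimensional zero vector when no code embedding was extracted, so such repositories land in whichever code cluster's centroid lies closest to the origin rather than in a dedicated "no code" bucket. A pure-Python sketch of that assignment rule, assuming toy 2-dimensional centroids in place of the fitted `kmeans_model_topic_scibert` / `kmeans_model_code_unixcoder` models; `predict_cluster` and `code_cluster` are hypothetical names.

```python
def predict_cluster(vector, centroids):
    """Index of the nearest centroid by squared Euclidean distance,
    the rule a fitted k-means model applies when predicting."""
    best_index, best_distance = -1, float("inf")
    for index, centroid in enumerate(centroids):
        distance = sum((v - c) ** 2 for v, c in zip(vector, centroid))
        if distance < best_distance:
            best_index, best_distance = index, distance
    return best_index


def code_cluster(code_embedding, centroids, dim):
    """Mirror the commit's fallback: use a zero vector when there is no embedding."""
    vector = [0.0] * dim if code_embedding is None else code_embedding
    return predict_cluster(vector, centroids)


centroids = [[5.0, 5.0], [0.5, -0.5], [-4.0, 2.0]]  # hypothetical fitted centroids
print(predict_cluster([4.0, 6.0], centroids))       # 0
print(code_cluster(None, centroids, dim=2))         # 1
```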

| 392 |

)

|

| 393 |

else:

|

| 394 |

index.index(query_doc)

|

| 395 |

+

repo_topic_clusters[query_doc.name] = topic_cluster_number

|

| 396 |

+

repo_code_clusters[query_doc.name] = code_cluster_number

|

| 397 |

|

| 398 |

+

with st.spinner("Persisting the index and repository clusters (topic and code)..."):

|

| 399 |

index.persist(str(INDEX_PATH))

|

| 400 |

+

with open(TOPIC_CLUSTER_PATH, "w") as file:

|

| 401 |

+

json.dump(repo_topic_clusters, file, indent=4)

|

| 402 |

+

with open(CODE_CLUSTER_PATH, "w") as file:

|

| 403 |

+

json.dump(repo_code_clusters, file, indent=4)

|

| 404 |

st.success("Repository updated to the index!")

|

| 405 |

|

| 406 |

load_index.clear()

|

| 407 |

+

load_repo_topic_clusters.clear()

|

| 408 |

+

load_repo_code_clusters.clear()

|

| 409 |

|

| 410 |

st.session_state["query_doc"] = query_doc

|

| 411 |

+

st.session_state["topic_cluster_number"] = topic_cluster_number

|

| 412 |

+

st.session_state["code_cluster_number"] = code_cluster_number

|

| 413 |

|

| 414 |

# 2. Repository cannot be matched

|

| 415 |

else:

|

|

|

|

| 418 |

# Starting to query

|

| 419 |

if "query_doc" in st.session_state:

|

| 420 |

query_doc = st.session_state.query_doc

|

| 421 |

+

topic_cluster_number = st.session_state.topic_cluster_number

|

| 422 |

+

code_cluster_number = st.session_state.code_cluster_number

|

| 423 |

limit = st.session_state.search_results_limit

|

| 424 |

|

| 425 |

# Showing the query repository information

|

|

|

|

| 429 |

{

|

| 430 |

"name": query_doc.name,

|

| 431 |

"topics": query_doc.topics,

|

| 432 |

+

"topic cluster": topic_cluster_number,

|

| 433 |

+

"code cluster": code_cluster_number,

|

| 434 |

"stars": query_doc.stars,

|

| 435 |

"license": query_doc.license,

|

| 436 |

}

|

|

|

|

| 439 |

)

|

| 440 |

|

| 441 |

display_columns = st.session_state.display_columns

|

| 442 |

+

modified_display_columns = ["rankings" if col == "scores" else col for col in display_columns]

|

| 443 |

+

code_sim_tab, doc_sim_tab, readme_sim_tab, requirement_sim_tab, repo_sim_tab, code_cluster_tab, topic_cluster_tab, = st.tabs(

|

| 444 |

["Code_sim", "Docstring_sim", "Readme_sim", "Requirement_sim",

|

| 445 |

+

"Repository_sim", "Code_cluster_sim", "Topic_cluster_sim"])

|

| 446 |

|

447       with code_sim_tab:
448           if query_doc.code_embedding is not None:
449               code_sim_res = run_index_search(index, query_doc, "code_embedding", limit)
450 +             topic_cluster_numbers = run_topic_cluster_search(repo_topic_clusters, code_sim_res["name"])
451 +             code_sim_res["topic cluster"] = topic_cluster_numbers
452 +             code_cluster_numbers = run_code_cluster_search(repo_code_clusters, code_sim_res["name"])
453 +             code_sim_res["code cluster"] = code_cluster_numbers
454               st.dataframe(code_sim_res[display_columns])
455           else:
456               st.error("No function code was extracted for this repository!")

458       with doc_sim_tab:
459           if query_doc.doc_embedding is not None:
460               doc_sim_res = run_index_search(index, query_doc, "doc_embedding", limit)
461 +             topic_cluster_numbers = run_topic_cluster_search(repo_topic_clusters, doc_sim_res["name"])
462 +             doc_sim_res["topic cluster"] = topic_cluster_numbers
463 +             code_cluster_numbers = run_code_cluster_search(repo_code_clusters, doc_sim_res["name"])
464 +             doc_sim_res["code cluster"] = code_cluster_numbers
465               st.dataframe(doc_sim_res[display_columns])
466           else:
467               st.error("No function docstring was extracted for this repository!")

469       with readme_sim_tab:
470           if query_doc.readme_embedding is not None:
471               readme_sim_res = run_index_search(index, query_doc, "readme_embedding", limit)
472 +             topic_cluster_numbers = run_topic_cluster_search(repo_topic_clusters, readme_sim_res["name"])
473 +             readme_sim_res["topic cluster"] = topic_cluster_numbers
474 +             code_cluster_numbers = run_code_cluster_search(repo_code_clusters, readme_sim_res["name"])
475 +             readme_sim_res["code cluster"] = code_cluster_numbers
476               st.dataframe(readme_sim_res[display_columns])
477           else:
478               st.error("No readme file was extracted for this repository!")

480       with requirement_sim_tab:
481           if query_doc.requirement_embedding is not None:
482               requirement_sim_res = run_index_search(index, query_doc, "requirement_embedding", limit)
483 +             topic_cluster_numbers = run_topic_cluster_search(repo_topic_clusters, requirement_sim_res["name"])
484 +             requirement_sim_res["topic cluster"] = topic_cluster_numbers
485 +             code_cluster_numbers = run_code_cluster_search(repo_code_clusters, requirement_sim_res["name"])
486 +             requirement_sim_res["code cluster"] = code_cluster_numbers
487               st.dataframe(requirement_sim_res[display_columns])
488           else:
489               st.error("No requirement file was extracted for this repository!")

492           if query_doc.repository_embedding is not None:
493               # Repo Sim tab
494               repo_sim_res = run_index_search(index, query_doc, "repository_embedding", limit)
495 +             topic_cluster_numbers = run_topic_cluster_search(repo_topic_clusters, repo_sim_res["name"])
496 +             repo_sim_res["topic cluster"] = topic_cluster_numbers
497 +             code_cluster_numbers = run_code_cluster_search(repo_code_clusters, repo_sim_res["name"])
498 +             repo_sim_res["code cluster"] = code_cluster_numbers
499               st.dataframe(repo_sim_res[display_columns])
500           else:
501               st.error("No such useful information was extracted for this repository!")
502

503 +     with code_cluster_tab:
504           if query_doc.repository_embedding is not None:
505 +             cluster_df = run_similaritycal_search(index, repo_code_clusters, sim_cal_model,
506 +                                                   query_doc, code_cluster_number, limit)
507 +             code_cluster_numbers = run_code_cluster_search(repo_code_clusters, cluster_df["name"])
508 +             cluster_df["code cluster"] = code_cluster_numbers
509 +             topic_cluster_numbers = run_topic_cluster_search(repo_topic_clusters, cluster_df["name"])
510 +             cluster_df["topic cluster"] = topic_cluster_numbers
511 +             st.dataframe(cluster_df[modified_display_columns])
512           else:
513               st.error("No such useful information was extracted for this repository!")
514

515 +     with topic_cluster_tab:
516           if query_doc.repository_embedding is not None:
517 +             cluster_df = run_similaritycal_search(index, repo_topic_clusters, sim_cal_model,
518 +                                                   query_doc, topic_cluster_number, limit)
519 +             topic_cluster_numbers = run_topic_cluster_search(repo_topic_clusters, cluster_df["name"])
520 +             cluster_df["topic cluster"] = topic_cluster_numbers
521 +             code_cluster_numbers = run_code_cluster_search(repo_code_clusters, cluster_df["name"])
522 +             cluster_df["code cluster"] = code_cluster_numbers
523 +             st.dataframe(cluster_df[modified_display_columns])
524           else:
525 +             topic_cluster_tab.error("No such useful information was extracted for this repository!")
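The two new cluster tabs call `run_similaritycal_search(...)`, whose body is not part of this diff, and they display a "rankings" column in place of "scores" through `modified_display_columns`. Below is a hypothetical, stdlib-only sketch of one way such a search could work. It follows the README's description of SimilarityCal: two repository embeddings are concatenated as input, and the output is a similarity score. The sketch makes several assumptions. Embeddings are plain float lists, cluster assignments are a name-to-number dict, and candidates are restricted to the query's cluster. `toy_pair_score` is a made-up stand-in (a sigmoid over the dot product of the two concatenated halves), not the trained model in `common/pair_classifier.py`.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple


def toy_pair_score(a: Sequence[float], b: Sequence[float]) -> float:
    """Hypothetical stand-in for SimilarityCal: score one concatenated pair."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    features = list(a) + list(b)  # concatenated input, as the README describes
    n = len(a)
    # Toy rule (an assumption): sigmoid of the dot product between the two halves.
    z = sum(features[i] * features[i + n] for i in range(n))
    return 1.0 / (1.0 + math.exp(-z))


def similaritycal_search_sketch(
    embeddings: Dict[str, List[float]],
    clusters: Dict[str, int],
    query_name: str,
    query_cluster: int,
    limit: int,
    scorer: Callable[[Sequence[float], Sequence[float]], float] = toy_pair_score,
) -> List[Tuple[int, str, float]]:
    """Rank repositories sharing the query's cluster; rows are (ranking, name, score)."""
    query_emb = embeddings[query_name]
    # Assumed candidate set: same cluster, not the query itself, embedding available.
    candidates = [
        name
        for name, cluster in clusters.items()
        if cluster == query_cluster and name != query_name and name in embeddings
    ]
    scored = [(name, scorer(query_emb, embeddings[name])) for name in candidates]
    # Highest score first; ties are broken by name so the order is deterministic.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return [(rank, name, score) for rank, (name, score) in enumerate(scored[:limit], start=1)]


if __name__ == "__main__":
    demo_embeddings = {"example/query": [1.0, 0.0], "example/near": [0.9, 0.1],
                       "example/far": [-1.0, 0.0], "example/other": [1.0, 0.0]}
    demo_clusters = {"example/query": 3, "example/near": 3, "example/far": 3, "example/other": 7}
    for row in similaritycal_search_sketch(demo_embeddings, demo_clusters, "example/query", 3, limit=10):
        print(row)
```

Returning a 1-based rank alongside each score mirrors the diff's swap of the "scores" column for "rankings" in the cluster tabs. A real implementation would replace `scorer` with the trained pair classifier.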

assets/Repository-Code Cluster Assignments.png

ADDED

assets/Repository-Topic Cluster Assignments.png

ADDED

common/__init__.py

ADDED

File without changes

{data → common}/pair_classifier.py

RENAMED

File without changes

{data → common}/repo_doc.py

RENAMED

File without changes

data/{kmeans_model_scibert.pkl → kmeans_model_code_unixcoder.pkl}

RENAMED

@@ -1,3 +1,3 @@
1   version https://git-lfs.github.com/spec/v1
2 - oid sha256:
2 + oid sha256:bb534645bce9fb19975873003be27e0b386df7550693caed46ee0f1822b16533
3   size 967215

data/kmeans_model_topic_scibert.pkl

ADDED

@@ -0,0 +1,3 @@
1 + version https://git-lfs.github.com/spec/v1
2 + oid sha256:48272b4172b3dba079348462044f72f19a004ff65d6cd9222ef424468261f1fb
3 + size 967215
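Both `.pkl` models are tracked with Git LFS, so the diff shows only the three-line pointer files (`version`, `oid`, `size`) and not the pickled KMeans models themselves. Below is a minimal sketch of reading such a pointer. It assumes the one `key value` pair per line layout shown above. `parse_lfs_pointer` is a hypothetical helper, not part of the git-lfs tooling.

```python
from typing import Dict

LFS_V1 = "https://git-lfs.github.com/spec/v1"
HEX_DIGITS = set("0123456789abcdef")


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Parse Git LFS pointer text into {key: value}, validating version, oid and size."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # tolerate a trailing blank line
        key, sep, value = line.partition(" ")
        if not sep:
            raise ValueError(f"malformed pointer line: {line!r}")
        fields[key] = value
    if fields.get("version") != LFS_V1:
        raise ValueError("not a Git LFS v1 pointer")
    algo, _, digest = fields.get("oid", "").partition(":")
    if algo != "sha256" or len(digest) != 64 or not set(digest) <= HEX_DIGITS:
        raise ValueError("oid must look like sha256:<64 lowercase hex digits>")
    if not fields.get("size", "").isdigit():
        raise ValueError("size must be a non-negative integer")
    return fields


if __name__ == "__main__":
    pointer = (
        "version https://git-lfs.github.com/spec/v1\n"
        "oid sha256:48272b4172b3dba079348462044f72f19a004ff65d6cd9222ef424468261f1fb\n"
        "size 967215\n"
    )
    info = parse_lfs_pointer(pointer)
    print(info["oid"], int(info["size"]))
```

Comparing the `oid` of a checked-out pointer against the one recorded in a commit is one way to confirm which model binary a given revision refers to.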

data/repo_code_clusters.json

ADDED

The diff for this file is too large to render.

data/repo_code_clusters_test.json

ADDED

The diff for this file is too large to render.

data/{repo_clusters.json → repo_topic_clusters.json}

RENAMED

File without changes

data/{repo_clusters_test.json → repo_topic_clusters_test.json}

RENAMED

File without changes
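Each similarity tab in `app.py` adds "topic cluster" and "code cluster" columns. It does this by passing the result's `name` column to `run_topic_cluster_search(repo_topic_clusters, ...)` and `run_code_cluster_search(repo_code_clusters, ...)`. Neither the helpers nor the JSON layout is visible here, because the cluster files are too large to render. The following minimal sketch therefore assumes that each file is a flat JSON object mapping repository name to an integer cluster number. `load_cluster_map` and `lookup_clusters` are hypothetical names.

```python
import json
from typing import Dict, Iterable, List, Optional


def load_cluster_map(json_text: str) -> Dict[str, int]:
    """Parse JSON assumed to be a flat object: {"owner/repo": cluster_number, ...}."""
    raw = json.loads(json_text)
    if not isinstance(raw, dict):
        raise ValueError("expected a JSON object mapping repository names to clusters")
    return {str(name): int(cluster) for name, cluster in raw.items()}


def lookup_clusters(cluster_map: Dict[str, int], names: Iterable[str]) -> List[Optional[int]]:
    """Return one cluster number per name, in order; None marks an unknown repository."""
    return [cluster_map.get(name) for name in names]


if __name__ == "__main__":
    topic_map = load_cluster_map('{"example/repo-a": 4, "example/repo-b": 11}')
    print(lookup_clusters(topic_map, ["example/repo-b", "example/repo-a", "example/missing"]))
    # prints [11, 4, None]
```

Returning exactly one value per input name, with `None` for unknown repositories, keeps the list the same length as the result table. That lets it be assigned as a column, the way the diff does with `code_sim_res["topic cluster"] = topic_cluster_numbers`.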

requirements.txt

CHANGED

@@ -9,4 +9,5 @@ tqdm
 9   scikit-learn
10   nltk
11   plotly
12 - joblib
12 + joblib
13 + matplotlib