Spaces:

Runtime error

Runtime error

Commit

•

cd3346a

1

Parent(s):

59a4d93

Upload 65 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- Dockerfile +54 -0

- LICENSE +21 -0

- README.md +171 -12

- app.py +62 -0

- configs/b5.json +28 -0

- configs/b7.json +29 -0

- download_weights.sh +9 -0

- images/augmentations.jpg +0 -0

- images/loss_plot.png +0 -0

- kernel_utils.py +361 -0

- libs/shape_predictor_68_face_landmarks.dat +3 -0

- logs/.gitkeep +0 -0

- logs/classifier_tf_efficientnet_b7_ns_1/events.out.tfevents.1671630947.Green.15284.0 +3 -0

- logs/classifier_tf_efficientnet_b7_ns_1/events.out.tfevents.1671631032.Green.22668.0 +3 -0

- logs/classifier_tf_efficientnet_b7_ns_1/events.out.tfevents.1671715958.Green.34292.0 +3 -0

- plot_loss.py +45 -0

- predict_folder.py +47 -0

- predict_submission.sh +13 -0

- predictions_1.json +1 -0

- preprocess_data.sh +15 -0

- preprocessing/__init__.py +1 -0

- preprocessing/__pycache__/face_detector.cpython-39.pyc +0 -0

- preprocessing/__pycache__/utils.cpython-39.pyc +0 -0

- preprocessing/compress_videos.py +45 -0

- preprocessing/detect_original_faces.py +63 -0

- preprocessing/extract_crops.py +88 -0

- preprocessing/extract_images.py +42 -0

- preprocessing/face_detector.py +72 -0

- preprocessing/face_encodings.py +55 -0

- preprocessing/generate_diffs.py +75 -0

- preprocessing/generate_folds.py +117 -0

- preprocessing/generate_landmarks.py +76 -0

- preprocessing/utils.py +51 -0

- train.sh +18 -0

- training/__init__.py +0 -0

- training/__pycache__/__init__.cpython-39.pyc +0 -0

- training/__pycache__/losses.cpython-39.pyc +0 -0

- training/datasets/__init__.py +0 -0

- training/datasets/__pycache__/__init__.cpython-39.pyc +0 -0

- training/datasets/__pycache__/classifier_dataset.cpython-39.pyc +0 -0

- training/datasets/__pycache__/validation_set.cpython-39.pyc +0 -0

- training/datasets/classifier_dataset.py +379 -0

- training/datasets/validation_set.py +60 -0

- training/losses.py +28 -0

- training/pipelines/__init__.py +0 -0

- training/pipelines/logs/classifier_tf_efficientnet_b7_ns_1/events.out.tfevents.1671716506.Green.33248.0 +3 -0

- training/pipelines/train_classifier.py +365 -0

- training/tools/__init__.py +0 -0

- training/tools/__pycache__/__init__.cpython-39.pyc +0 -0

.gitattributes

CHANGED

|

@@ -32,3 +32,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

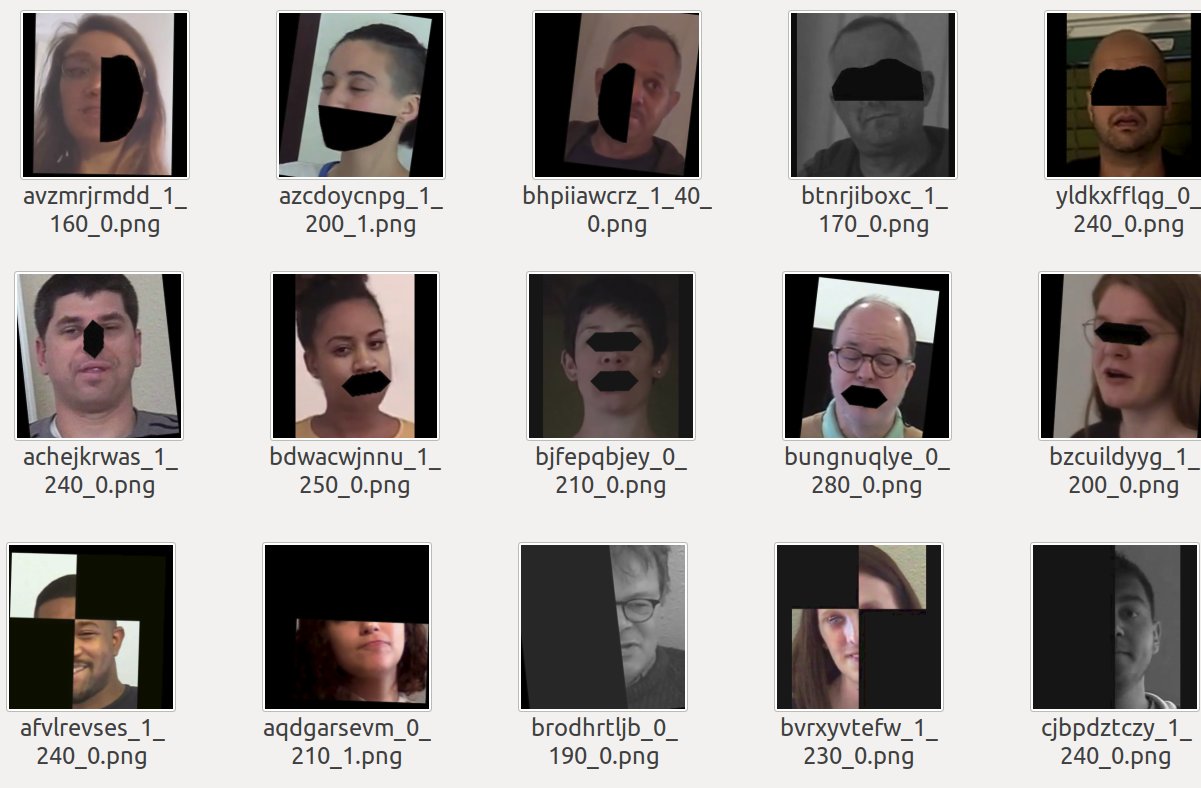

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

libs/shape_predictor_68_face_landmarks.dat filter=lfs diff=lfs merge=lfs -text

|

Dockerfile

ADDED

|

@@ -0,0 +1,54 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

ARG PYTORCH="1.10.0"

|

| 2 |

+

ARG CUDA="11.3"

|

| 3 |

+

ARG CUDNN="8"

|

| 4 |

+

|

| 5 |

+

FROM pytorch/pytorch:${PYTORCH}-cuda${CUDA}-cudnn${CUDNN}-devel

|

| 6 |

+

|

| 7 |

+

ENV TORCH_NVCC_FLAGS="-Xfatbin -compress-all"

|

| 8 |

+

ENV CMAKE_PREFIX_PATH="$(dirname $(which conda))/../"

|

| 9 |

+

|

| 10 |

+

# Setting noninteractive build, setting up tzdata and configuring timezones

|

| 11 |

+

ENV DEBIAN_FRONTEND=noninteractive

|

| 12 |

+

ENV TZ=Europe/Berlin

|

| 13 |

+

RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone

|

| 14 |

+

|

| 15 |

+

RUN apt-get update && apt-get install -y libglib2.0-0 libsm6 libxrender-dev libxext6 nano mc glances vim git \

|

| 16 |

+

&& apt-get clean \

|

| 17 |

+

&& rm -rf /var/lib/apt/lists/*

|

| 18 |

+

|

| 19 |

+

# Install cython

|

| 20 |

+

RUN conda install cython -y && conda clean --all

|

| 21 |

+

|

| 22 |

+

# Installing APEX

|

| 23 |

+

RUN pip install -U pip

|

| 24 |

+

RUN git clone https://github.com/NVIDIA/apex

|

| 25 |

+

RUN sed -i 's/check_cuda_torch_binary_vs_bare_metal(torch.utils.cpp_extension.CUDA_HOME)/pass/g' apex/setup.py

|

| 26 |

+

RUN pip install -v --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" ./apex

|

| 27 |

+

RUN apt-get update -y

|

| 28 |

+

RUN apt-get install build-essential cmake -y

|

| 29 |

+

RUN apt-get install libopenblas-dev liblapack-dev -y

|

| 30 |

+

RUN apt-get install libx11-dev libgtk-3-dev -y

|

| 31 |

+

RUN pip install dlib

|

| 32 |

+

RUN pip install facenet-pytorch

|

| 33 |

+

RUN pip install albumentations==1.0.0 timm==0.4.12 pytorch_toolbelt tensorboardx

|

| 34 |

+

RUN pip install cython jupyter jupyterlab ipykernel matplotlib tqdm pandas

|

| 35 |

+

|

| 36 |

+

# download pretraned Imagenet models

|

| 37 |

+

RUN apt install wget

|

| 38 |

+

RUN wget https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_b7_ns-1dbc32de.pth -P /root/.cache/torch/hub/checkpoints/

|

| 39 |

+

RUN wget https://github.com/rwightman/pytorch-image-models/releases/download/v0.1-weights/tf_efficientnet_b5_ns-6f26d0cf.pth -P /root/.cache/torch/hub/checkpoints/

|

| 40 |

+

|

| 41 |

+

# Setting the working directory

|

| 42 |

+

WORKDIR /workspace

|

| 43 |

+

|

| 44 |

+

# Copying the required codebase

|

| 45 |

+

COPY . /workspace

|

| 46 |

+

|

| 47 |

+

RUN chmod 777 preprocess_data.sh

|

| 48 |

+

RUN chmod 777 train.sh

|

| 49 |

+

RUN chmod 777 predict_submission.sh

|

| 50 |

+

|

| 51 |

+

ENV PYTHONPATH=.

|

| 52 |

+

|

| 53 |

+

CMD ["/bin/bash"]

|

| 54 |

+

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2020 Selim Seferbekov

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,12 +1,171 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## DeepFake Detection (DFDC) Solution by @selimsef

|

| 2 |

+

|

| 3 |

+

## Challenge details:

|

| 4 |

+

|

| 5 |

+

[Kaggle Challenge Page](https://www.kaggle.com/c/deepfake-detection-challenge)

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

### Fake detection articles

|

| 9 |

+

- [The Deepfake Detection Challenge (DFDC) Preview Dataset](https://arxiv.org/abs/1910.08854)

|

| 10 |

+

- [Deep Fake Image Detection Based on Pairwise Learning](https://www.mdpi.com/2076-3417/10/1/370)

|

| 11 |

+

- [DeeperForensics-1.0: A Large-Scale Dataset for Real-World Face Forgery Detection](https://arxiv.org/abs/2001.03024)

|

| 12 |

+

- [DeepFakes and Beyond: A Survey of Face Manipulation and Fake Detection](https://arxiv.org/abs/2001.00179)

|

| 13 |

+

- [Real or Fake? Spoofing State-Of-The-Art Face Synthesis Detection Systems](https://arxiv.org/abs/1911.05351)

|

| 14 |

+

- [CNN-generated images are surprisingly easy to spot... for now](https://arxiv.org/abs/1912.11035)

|

| 15 |

+

- [FakeSpotter: A Simple yet Robust Baseline for Spotting AI-Synthesized Fake Faces](https://arxiv.org/abs/1909.06122)

|

| 16 |

+

- [FakeLocator: Robust Localization of GAN-Based Face Manipulations via Semantic Segmentation Networks with Bells and Whistles](https://arxiv.org/abs/2001.09598)

|

| 17 |

+

- [Media Forensics and DeepFakes: an overview](https://arxiv.org/abs/2001.06564)

|

| 18 |

+

- [Face X-ray for More General Face Forgery Detection](https://arxiv.org/abs/1912.13458)

|

| 19 |

+

|

| 20 |

+

## Solution description

|

| 21 |

+

In general solution is based on frame-by-frame classification approach. Other complex things did not work so well on public leaderboard.

|

| 22 |

+

|

| 23 |

+

#### Face-Detector

|

| 24 |

+

MTCNN detector is chosen due to kernel time limits. It would be better to use S3FD detector as more precise and robust, but opensource Pytorch implementations don't have a license.

|

| 25 |

+

|

| 26 |

+

Input size for face detector was calculated for each video depending on video resolution.

|

| 27 |

+

|

| 28 |

+

- 2x scale for videos with less than 300 pixels wider side

|

| 29 |

+

- no rescale for videos with wider side between 300 and 1000

|

| 30 |

+

- 0.5x scale for videos with wider side > 1000 pixels

|

| 31 |

+

- 0.33x scale for videos with wider side > 1900 pixels

|

| 32 |

+

|

| 33 |

+

### Input size

|

| 34 |

+

As soon as I discovered that EfficientNets significantly outperform other encoders I used only them in my solution.

|

| 35 |

+

As I started with B4 I decided to use "native" size for that network (380x380).

|

| 36 |

+

Due to memory costraints I did not increase input size even for B7 encoder.

|

| 37 |

+

|

| 38 |

+

### Margin

|

| 39 |

+

When I generated crops for training I added 30% of face crop size from each side and used only this setting during the competition.

|

| 40 |

+

See [extract_crops.py](preprocessing/extract_crops.py) for the details

|

| 41 |

+

|

| 42 |

+

### Encoders

|

| 43 |

+

The winning encoder is current state-of-the-art model (EfficientNet B7) pretrained with ImageNet and noisy student [Self-training with Noisy Student improves ImageNet classification

|

| 44 |

+

](https://arxiv.org/abs/1911.04252)

|

| 45 |

+

|

| 46 |

+

### Averaging predictions

|

| 47 |

+

I used 32 frames for each video.

|

| 48 |

+

For each model output instead of simple averaging I used the following heuristic which worked quite well on public leaderbord (0.25 -> 0.22 solo B5).

|

| 49 |

+

```python

|

| 50 |

+

import numpy as np

|

| 51 |

+

|

| 52 |

+

def confident_strategy(pred, t=0.8):

|

| 53 |

+

pred = np.array(pred)

|

| 54 |

+

sz = len(pred)

|

| 55 |

+

fakes = np.count_nonzero(pred > t)

|

| 56 |

+

# 11 frames are detected as fakes with high probability

|

| 57 |

+

if fakes > sz // 2.5 and fakes > 11:

|

| 58 |

+

return np.mean(pred[pred > t])

|

| 59 |

+

elif np.count_nonzero(pred < 0.2) > 0.9 * sz:

|

| 60 |

+

return np.mean(pred[pred < 0.2])

|

| 61 |

+

else:

|

| 62 |

+

return np.mean(pred)

|

| 63 |

+

```

|

| 64 |

+

|

| 65 |

+

### Augmentations

|

| 66 |

+

|

| 67 |

+

I used heavy augmentations by default.

|

| 68 |

+

[Albumentations](https://github.com/albumentations-team/albumentations) library supports most of the augmentations out of the box. Only needed to add IsotropicResize augmentation.

|

| 69 |

+

```

|

| 70 |

+

|

| 71 |

+

def create_train_transforms(size=300):

|

| 72 |

+

return Compose([

|

| 73 |

+

ImageCompression(quality_lower=60, quality_upper=100, p=0.5),

|

| 74 |

+

GaussNoise(p=0.1),

|

| 75 |

+

GaussianBlur(blur_limit=3, p=0.05),

|

| 76 |

+

HorizontalFlip(),

|

| 77 |

+

OneOf([

|

| 78 |

+

IsotropicResize(max_side=size, interpolation_down=cv2.INTER_AREA, interpolation_up=cv2.INTER_CUBIC),

|

| 79 |

+

IsotropicResize(max_side=size, interpolation_down=cv2.INTER_AREA, interpolation_up=cv2.INTER_LINEAR),

|

| 80 |

+

IsotropicResize(max_side=size, interpolation_down=cv2.INTER_LINEAR, interpolation_up=cv2.INTER_LINEAR),

|

| 81 |

+

], p=1),

|

| 82 |

+

PadIfNeeded(min_height=size, min_width=size, border_mode=cv2.BORDER_CONSTANT),

|

| 83 |

+

OneOf([RandomBrightnessContrast(), FancyPCA(), HueSaturationValue()], p=0.7),

|

| 84 |

+

ToGray(p=0.2),

|

| 85 |

+

ShiftScaleRotate(shift_limit=0.1, scale_limit=0.2, rotate_limit=10, border_mode=cv2.BORDER_CONSTANT, p=0.5),

|

| 86 |

+

]

|

| 87 |

+

)

|

| 88 |

+

```

|

| 89 |

+

In addition to these augmentations I wanted to achieve better generalization with

|

| 90 |

+

- Cutout like augmentations (dropping artefacts and parts of face)

|

| 91 |

+

- Dropout part of the image, inspired by [GridMask](https://arxiv.org/abs/2001.04086) and [Severstal Winning Solution](https://www.kaggle.com/c/severstal-steel-defect-detection/discussion/114254)

|

| 92 |

+

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

## Building docker image

|

| 96 |

+

All libraries and enviroment is already configured with Dockerfile. It requires docker engine https://docs.docker.com/engine/install/ubuntu/ and nvidia docker in your system https://github.com/NVIDIA/nvidia-docker.

|

| 97 |

+

|

| 98 |

+

To build a docker image run `docker build -t df .`

|

| 99 |

+

|

| 100 |

+

## Running docker

|

| 101 |

+

`docker run --runtime=nvidia --ipc=host --rm --volume <DATA_ROOT>:/dataset -it df`

|

| 102 |

+

|

| 103 |

+

## Data preparation

|

| 104 |

+

|

| 105 |

+

Once DFDC dataset is downloaded all the scripts expect to have `dfdc_train_xxx` folders under data root directory.

|

| 106 |

+

|

| 107 |

+

Preprocessing is done in a single script **`preprocess_data.sh`** which requires dataset directory as first argument.

|

| 108 |

+

It will execute the steps below:

|

| 109 |

+

|

| 110 |

+

##### 1. Find face bboxes

|

| 111 |

+

To extract face bboxes I used facenet library, basically only MTCNN.

|

| 112 |

+

`python preprocessing/detect_original_faces.py --root-dir DATA_ROOT`

|

| 113 |

+

This script will detect faces in real videos and store them as jsons in DATA_ROOT/bboxes directory

|

| 114 |

+

|

| 115 |

+

##### 2. Extract crops from videos

|

| 116 |

+

To extract image crops I used bboxes saved before. It will use bounding boxes from original videos for face videos as well.

|

| 117 |

+

`python preprocessing/extract_crops.py --root-dir DATA_ROOT --crops-dir crops`

|

| 118 |

+

This script will extract face crops from videos and save them in DATA_ROOT/crops directory

|

| 119 |

+

|

| 120 |

+

##### 3. Generate landmarks

|

| 121 |

+

From the saved crops it is quite fast to process crops with MTCNN and extract landmarks

|

| 122 |

+

`python preprocessing/generate_landmarks.py --root-dir DATA_ROOT`

|

| 123 |

+

This script will extract landmarks and save them in DATA_ROOT/landmarks directory

|

| 124 |

+

|

| 125 |

+

##### 4. Generate diff SSIM masks

|

| 126 |

+

`python preprocessing/generate_diffs.py --root-dir DATA_ROOT`

|

| 127 |

+

This script will extract SSIM difference masks between real and fake images and save them in DATA_ROOT/diffs directory

|

| 128 |

+

|

| 129 |

+

##### 5. Generate folds

|

| 130 |

+

`python preprocessing/generate_folds.py --root-dir DATA_ROOT --out folds.csv`

|

| 131 |

+

By default it will use 16 splits to have 0-2 folders as a holdout set. Though only 400 videos can be used for validation as well.

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

## Training

|

| 135 |

+

|

| 136 |

+

Training 5 B7 models with different seeds is done in **`train.sh`** script.

|

| 137 |

+

|

| 138 |

+

During training checkpoints are saved for every epoch.

|

| 139 |

+

|

| 140 |

+

## Hardware requirements

|

| 141 |

+

Mostly trained on devbox configuration with 4xTitan V, thanks to Nvidia and DSB2018 competition where I got these gpus https://www.kaggle.com/c/data-science-bowl-2018/

|

| 142 |

+

|

| 143 |

+

Overall training requires 4 GPUs with 12gb+ memory.

|

| 144 |

+

Batch size needs to be adjusted for standard 1080Ti or 2080Ti graphic cards.

|

| 145 |

+

|

| 146 |

+

As I computed fake loss and real loss separately inside each batch, results might be better with larger batch size, for example on V100 gpus.

|

| 147 |

+

Even though SyncBN is used larger batch on each GPU will lead to less noise as DFDC dataset has some fakes where face detector failed and face crops are not really fakes.

|

| 148 |

+

|

| 149 |

+

## Plotting losses to select checkpoints

|

| 150 |

+

|

| 151 |

+

`python plot_loss.py --log-file logs/<log file>`

|

| 152 |

+

|

| 153 |

+

|

| 154 |

+

|

| 155 |

+

## Inference

|

| 156 |

+

|

| 157 |

+

|

| 158 |

+

Kernel is reproduced with `predict_folder.py` script.

|

| 159 |

+

|

| 160 |

+

|

| 161 |

+

## Pretrained models

|

| 162 |

+

`download_weights.sh` script will download trained models to `weights/` folder. They should be downloaded before building a docker image.

|

| 163 |

+

|

| 164 |

+

Ensemble inference is already preconfigured with `predict_submission.sh` bash script. It expects a directory with videos as first argument and an output csv file as second argument.

|

| 165 |

+

|

| 166 |

+

For example `./predict_submission.sh /mnt/datasets/deepfake/test_videos submission.csv`

|

| 167 |

+

|

| 168 |

+

|

| 169 |

+

|

| 170 |

+

|

| 171 |

+

|

app.py

ADDED

|

@@ -0,0 +1,62 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import os

|

| 3 |

+

import re

|

| 4 |

+

import time

|

| 5 |

+

import cv2

|

| 6 |

+

|

| 7 |

+

import torch

|

| 8 |

+

import pandas as pd

|

| 9 |

+

from kernel_utils import VideoReader, FaceExtractor, confident_strategy, predict_on_video_set

|

| 10 |

+

from training.zoo.classifiers import DeepFakeClassifier

|

| 11 |

+

import gradio as gr

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

def deepfakeclassifier(potential_test_video, option):

|

| 16 |

+

if option == 'Original':

|

| 17 |

+

weights_dir = "./weights"

|

| 18 |

+

models_dir = ["Original_DeepFakeClassifier_tf_efficientnet_b7_ns"]

|

| 19 |

+

else:

|

| 20 |

+

weights_dir = "./weights"

|

| 21 |

+

models_dir = ["Custom_classifier_DeepFakeClassifier_tf_efficientnet_b7_ns"]

|

| 22 |

+

|

| 23 |

+

parts = potential_test_video.split("\\")

|

| 24 |

+

test_videos = [parts[-1]]

|

| 25 |

+

parts[0] += "\\"

|

| 26 |

+

test_dir = parts[:-1]

|

| 27 |

+

test_dir = os.path.join(*test_dir)

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

models = []

|

| 31 |

+

model_paths = [os.path.join(weights_dir, model) for model in models_dir]

|

| 32 |

+

for path in model_paths:

|

| 33 |

+

model = DeepFakeClassifier(encoder="tf_efficientnet_b7_ns").to('cuda')

|

| 34 |

+

print("loading state dict {}".format(path))

|

| 35 |

+

checkpoint = torch.load(path, map_location="cpu")

|

| 36 |

+

state_dict = checkpoint.get("state_dict", checkpoint)

|

| 37 |

+

model.load_state_dict({re.sub("^module.", "", k): v for k, v in state_dict.items()}, strict=True)

|

| 38 |

+

model.eval()

|

| 39 |

+

del checkpoint

|

| 40 |

+

models.append(model.half())

|

| 41 |

+

|

| 42 |

+

frames_per_video = 32

|

| 43 |

+

video_reader = VideoReader()

|

| 44 |

+

video_read_fn = lambda x: video_reader.read_frames(x, num_frames=frames_per_video)

|

| 45 |

+

face_extractor = FaceExtractor(video_read_fn)

|

| 46 |

+

input_size = 380

|

| 47 |

+

strategy = confident_strategy

|

| 48 |

+

stime = time.time()

|

| 49 |

+

|

| 50 |

+

print("Predicting {} videos".format(len(test_videos)))

|

| 51 |

+

predictions = predict_on_video_set(face_extractor=face_extractor, input_size=input_size, models=models,

|

| 52 |

+

strategy=strategy, frames_per_video=frames_per_video, videos=test_videos,

|

| 53 |

+

num_workers=6, test_dir=test_dir)

|

| 54 |

+

|

| 55 |

+

print("Elapsed:", time.time() - stime)

|

| 56 |

+

return "This video is FAKE with {} probability!".format(predictions[0])

|

| 57 |

+

|

| 58 |

+

demo = gr.Interface(fn=deepfakeclassifier, inputs=[gr.Video(),

|

| 59 |

+

gr.Radio(["Original", "Custom"])] ,outputs="text", description="Original option uses the trained weights of the winning idea. Custom is my trained \

|

| 60 |

+

network. Original optional performs better as it uses much more data for training!")

|

| 61 |

+

|

| 62 |

+

demo.launch()

|

configs/b5.json

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"network": "DeepFakeClassifier",

|

| 3 |

+

"encoder": "tf_efficientnet_b5_ns",

|

| 4 |

+

"batches_per_epoch": 2500,

|

| 5 |

+

"size": 380,

|

| 6 |

+

"fp16": true,

|

| 7 |

+

"optimizer": {

|

| 8 |

+

"batch_size": 20,

|

| 9 |

+

"type": "SGD",

|

| 10 |

+

"momentum": 0.9,

|

| 11 |

+

"weight_decay": 1e-4,

|

| 12 |

+

"learning_rate": 0.01,

|

| 13 |

+

"nesterov": true,

|

| 14 |

+

"schedule": {

|

| 15 |

+

"type": "poly",

|

| 16 |

+

"mode": "step",

|

| 17 |

+

"epochs": 30,

|

| 18 |

+

"params": {"max_iter": 75100}

|

| 19 |

+

}

|

| 20 |

+

},

|

| 21 |

+

"normalize": {

|

| 22 |

+

"mean": [0.485, 0.456, 0.406],

|

| 23 |

+

"std": [0.229, 0.224, 0.225]

|

| 24 |

+

},

|

| 25 |

+

"losses": {

|

| 26 |

+

"BinaryCrossentropy": 1

|

| 27 |

+

}

|

| 28 |

+

}

|

configs/b7.json

ADDED

|

@@ -0,0 +1,29 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"network": "DeepFakeClassifier",

|

| 3 |

+

"encoder": "tf_efficientnet_b7_ns",

|

| 4 |

+

"batches_per_epoch": 10000,

|

| 5 |

+

"size": 380,

|

| 6 |

+

"fp16": true,

|

| 7 |

+

"optimizer": {

|

| 8 |

+

"batch_size": 2,

|

| 9 |

+

"type": "SGD",

|

| 10 |

+

"momentum": 0.9,

|

| 11 |

+

"weight_decay": 1e-4,

|

| 12 |

+

"learning_rate": 0.01,

|

| 13 |

+

"nesterov": true,

|

| 14 |

+

"schedule": {

|

| 15 |

+

"type": "poly",

|

| 16 |

+

"mode": "step",

|

| 17 |

+

"epochs": 15,

|

| 18 |

+

"params": {"max_iter": 100500}

|

| 19 |

+

}

|

| 20 |

+

},

|

| 21 |

+

"normalize": {

|

| 22 |

+

"mean": [0.485, 0.456, 0.406],

|

| 23 |

+

"std": [0.229, 0.224, 0.225]

|

| 24 |

+

},

|

| 25 |

+

"losses": {

|

| 26 |

+

"BinaryCrossentropy": 1

|

| 27 |

+

}

|

| 28 |

+

}

|

| 29 |

+

|

download_weights.sh

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

tag=0.0.1

|

| 2 |

+

|

| 3 |

+

wget -O weights/final_111_DeepFakeClassifier_tf_efficientnet_b7_ns_0_36 https://github.com/selimsef/dfdc_deepfake_challenge/releases/download/$tag/final_111_DeepFakeClassifier_tf_efficientnet_b7_ns_0_36

|

| 4 |

+

wget -O weights/final_555_DeepFakeClassifier_tf_efficientnet_b7_ns_0_19 https://github.com/selimsef/dfdc_deepfake_challenge/releases/download/$tag/final_555_DeepFakeClassifier_tf_efficientnet_b7_ns_0_19

|

| 5 |

+

wget -O weights/final_777_DeepFakeClassifier_tf_efficientnet_b7_ns_0_29 https://github.com/selimsef/dfdc_deepfake_challenge/releases/download/$tag/final_777_DeepFakeClassifier_tf_efficientnet_b7_ns_0_29

|

| 6 |

+

wget -O weights/final_777_DeepFakeClassifier_tf_efficientnet_b7_ns_0_31 https://github.com/selimsef/dfdc_deepfake_challenge/releases/download/$tag/final_777_DeepFakeClassifier_tf_efficientnet_b7_ns_0_31

|

| 7 |

+

wget -O weights/final_888_DeepFakeClassifier_tf_efficientnet_b7_ns_0_37 https://github.com/selimsef/dfdc_deepfake_challenge/releases/download/$tag/final_888_DeepFakeClassifier_tf_efficientnet_b7_ns_0_37

|

| 8 |

+

wget -O weights/final_888_DeepFakeClassifier_tf_efficientnet_b7_ns_0_40 https://github.com/selimsef/dfdc_deepfake_challenge/releases/download/$tag/final_888_DeepFakeClassifier_tf_efficientnet_b7_ns_0_40

|

| 9 |

+

wget -O weights/final_999_DeepFakeClassifier_tf_efficientnet_b7_ns_0_23 https://github.com/selimsef/dfdc_deepfake_challenge/releases/download/$tag/final_999_DeepFakeClassifier_tf_efficientnet_b7_ns_0_23

|

images/augmentations.jpg

ADDED

|

images/loss_plot.png

ADDED

|

kernel_utils.py

ADDED

|

@@ -0,0 +1,361 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

|

| 3 |

+

import cv2

|

| 4 |

+

import numpy as np

|

| 5 |

+

import torch

|

| 6 |

+

from PIL import Image

|

| 7 |

+

from albumentations.augmentations.functional import image_compression

|

| 8 |

+

from facenet_pytorch.models.mtcnn import MTCNN

|

| 9 |

+

from concurrent.futures import ThreadPoolExecutor

|

| 10 |

+

|

| 11 |

+

from torchvision.transforms import Normalize

|

| 12 |

+

|

| 13 |

+

mean = [0.485, 0.456, 0.406]

|

| 14 |

+

std = [0.229, 0.224, 0.225]

|

| 15 |

+

normalize_transform = Normalize(mean, std)

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

class VideoReader:

|

| 19 |

+

"""Helper class for reading one or more frames from a video file."""

|

| 20 |

+

|

| 21 |

+

def __init__(self, verbose=True, insets=(0, 0)):

|

| 22 |

+

"""Creates a new VideoReader.

|

| 23 |

+

|

| 24 |

+

Arguments:

|

| 25 |

+

verbose: whether to print warnings and error messages

|

| 26 |

+

insets: amount to inset the image by, as a percentage of

|

| 27 |

+

(width, height). This lets you "zoom in" to an image

|

| 28 |

+

to remove unimportant content around the borders.

|

| 29 |

+

Useful for face detection, which may not work if the

|

| 30 |

+

faces are too small.

|

| 31 |

+

"""

|

| 32 |

+

self.verbose = verbose

|

| 33 |

+

self.insets = insets

|

| 34 |

+

|

| 35 |

+

def read_frames(self, path, num_frames, jitter=0, seed=None):

|

| 36 |

+

"""Reads frames that are always evenly spaced throughout the video.

|

| 37 |

+

|

| 38 |

+

Arguments:

|

| 39 |

+

path: the video file

|

| 40 |

+

num_frames: how many frames to read, -1 means the entire video

|

| 41 |

+

(warning: this will take up a lot of memory!)

|

| 42 |

+

jitter: if not 0, adds small random offsets to the frame indices;

|

| 43 |

+

this is useful so we don't always land on even or odd frames

|

| 44 |

+

seed: random seed for jittering; if you set this to a fixed value,

|

| 45 |

+

you probably want to set it only on the first video

|

| 46 |

+

"""

|

| 47 |

+

assert num_frames > 0

|

| 48 |

+

|

| 49 |

+

capture = cv2.VideoCapture(path)

|

| 50 |

+

frame_count = int(capture.get(cv2.CAP_PROP_FRAME_COUNT))

|

| 51 |

+

if frame_count <= 0: return None

|

| 52 |

+

|

| 53 |

+

frame_idxs = np.linspace(0, frame_count - 1, num_frames, endpoint=True, dtype=np.int)

|

| 54 |

+

if jitter > 0:

|

| 55 |

+

np.random.seed(seed)

|

| 56 |

+

jitter_offsets = np.random.randint(-jitter, jitter, len(frame_idxs))

|

| 57 |

+

frame_idxs = np.clip(frame_idxs + jitter_offsets, 0, frame_count - 1)

|

| 58 |

+

|

| 59 |

+

result = self._read_frames_at_indices(path, capture, frame_idxs)

|

| 60 |

+

capture.release()

|

| 61 |

+

return result

|

| 62 |

+

|

| 63 |

+

def read_random_frames(self, path, num_frames, seed=None):

|

| 64 |

+

"""Picks the frame indices at random.

|

| 65 |

+

|

| 66 |

+

Arguments:

|

| 67 |

+

path: the video file

|

| 68 |

+

num_frames: how many frames to read, -1 means the entire video

|

| 69 |

+

(warning: this will take up a lot of memory!)

|

| 70 |

+

"""

|

| 71 |

+

assert num_frames > 0

|

| 72 |

+

np.random.seed(seed)

|

| 73 |

+

|

| 74 |

+

capture = cv2.VideoCapture(path)

|

| 75 |

+

frame_count = int(capture.get(cv2.CAP_PROP_FRAME_COUNT))

|

| 76 |

+

if frame_count <= 0: return None

|

| 77 |

+

|

| 78 |

+

frame_idxs = sorted(np.random.choice(np.arange(0, frame_count), num_frames))

|

| 79 |

+

result = self._read_frames_at_indices(path, capture, frame_idxs)

|

| 80 |

+

|

| 81 |

+

capture.release()

|

| 82 |

+

return result

|

| 83 |

+

|

| 84 |

+

def read_frames_at_indices(self, path, frame_idxs):

|

| 85 |

+

"""Reads frames from a video and puts them into a NumPy array.

|

| 86 |

+

|

| 87 |

+

Arguments:

|

| 88 |

+

path: the video file

|

| 89 |

+

frame_idxs: a list of frame indices. Important: should be

|

| 90 |

+

sorted from low-to-high! If an index appears multiple

|

| 91 |

+

times, the frame is still read only once.

|

| 92 |

+

|

| 93 |

+

Returns:

|

| 94 |

+

- a NumPy array of shape (num_frames, height, width, 3)

|

| 95 |

+

- a list of the frame indices that were read

|

| 96 |

+

|

| 97 |

+

Reading stops if loading a frame fails, in which case the first

|

| 98 |

+

dimension returned may actually be less than num_frames.

|

| 99 |

+

|

| 100 |

+

Returns None if an exception is thrown for any reason, or if no

|

| 101 |

+

frames were read.

|

| 102 |

+

"""

|

| 103 |

+

assert len(frame_idxs) > 0

|

| 104 |

+

capture = cv2.VideoCapture(path)

|

| 105 |

+

result = self._read_frames_at_indices(path, capture, frame_idxs)

|

| 106 |

+

capture.release()

|

| 107 |

+

return result

|

| 108 |

+

|

| 109 |

+

def _read_frames_at_indices(self, path, capture, frame_idxs):

|

| 110 |

+

try:

|

| 111 |

+

frames = []

|

| 112 |

+

idxs_read = []

|

| 113 |

+

for frame_idx in range(frame_idxs[0], frame_idxs[-1] + 1):

|

| 114 |

+

# Get the next frame, but don't decode if we're not using it.

|

| 115 |

+

ret = capture.grab()

|

| 116 |

+

if not ret:

|

| 117 |

+

if self.verbose:

|

| 118 |

+

print("Error grabbing frame %d from movie %s" % (frame_idx, path))

|

| 119 |

+

break

|

| 120 |

+

|

| 121 |

+

# Need to look at this frame?

|

| 122 |

+

current = len(idxs_read)

|

| 123 |

+

if frame_idx == frame_idxs[current]:

|

| 124 |

+

ret, frame = capture.retrieve()

|

| 125 |

+

if not ret or frame is None:

|

| 126 |

+

if self.verbose:

|

| 127 |

+

print("Error retrieving frame %d from movie %s" % (frame_idx, path))

|

| 128 |

+

break

|

| 129 |

+

|

| 130 |

+

frame = self._postprocess_frame(frame)

|

| 131 |

+

frames.append(frame)

|

| 132 |

+

idxs_read.append(frame_idx)

|

| 133 |

+

|

| 134 |

+

if len(frames) > 0:

|

| 135 |

+

return np.stack(frames), idxs_read

|

| 136 |

+

if self.verbose:

|

| 137 |

+

print("No frames read from movie %s" % path)

|

| 138 |

+

return None

|

| 139 |

+

except:

|

| 140 |

+

if self.verbose:

|

| 141 |

+

print("Exception while reading movie %s" % path)

|

| 142 |

+

return None

|

| 143 |

+

|

| 144 |

+

def read_middle_frame(self, path):

|

| 145 |

+

"""Reads the frame from the middle of the video."""

|

| 146 |

+

capture = cv2.VideoCapture(path)

|

| 147 |

+

frame_count = int(capture.get(cv2.CAP_PROP_FRAME_COUNT))

|

| 148 |

+

result = self._read_frame_at_index(path, capture, frame_count // 2)

|

| 149 |

+

capture.release()

|

| 150 |

+

return result

|

| 151 |

+

|

| 152 |

+

def read_frame_at_index(self, path, frame_idx):

|

| 153 |

+

"""Reads a single frame from a video.

|

| 154 |

+

|

| 155 |

+

If you just want to read a single frame from the video, this is more

|

| 156 |

+

efficient than scanning through the video to find the frame. However,

|

| 157 |

+

for reading multiple frames it's not efficient.

|

| 158 |

+

|

| 159 |

+

My guess is that a "streaming" approach is more efficient than a

|

| 160 |

+

"random access" approach because, unless you happen to grab a keyframe,

|

| 161 |

+

the decoder still needs to read all the previous frames in order to

|

| 162 |

+

reconstruct the one you're asking for.

|

| 163 |

+

|

| 164 |

+

Returns a NumPy array of shape (1, H, W, 3) and the index of the frame,

|

| 165 |

+

or None if reading failed.

|

| 166 |

+

"""

|

| 167 |

+

capture = cv2.VideoCapture(path)

|

| 168 |

+

result = self._read_frame_at_index(path, capture, frame_idx)

|

| 169 |

+

capture.release()

|

| 170 |

+

return result

|

| 171 |

+

|

| 172 |

+

def _read_frame_at_index(self, path, capture, frame_idx):

|

| 173 |

+

capture.set(cv2.CAP_PROP_POS_FRAMES, frame_idx)

|

| 174 |

+

ret, frame = capture.read()

|

| 175 |

+

if not ret or frame is None:

|

| 176 |

+

if self.verbose:

|

| 177 |

+

print("Error retrieving frame %d from movie %s" % (frame_idx, path))

|

| 178 |

+

return None

|

| 179 |

+

else:

|

| 180 |

+

frame = self._postprocess_frame(frame)

|

| 181 |

+

return np.expand_dims(frame, axis=0), [frame_idx]

|

| 182 |

+

|

| 183 |

+

def _postprocess_frame(self, frame):

|

| 184 |

+

frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

|

| 185 |

+

|

| 186 |

+

if self.insets[0] > 0:

|

| 187 |

+

W = frame.shape[1]

|

| 188 |

+

p = int(W * self.insets[0])

|

| 189 |

+

frame = frame[:, p:-p, :]

|

| 190 |

+

|

| 191 |

+

if self.insets[1] > 0:

|

| 192 |

+

H = frame.shape[1]

|

| 193 |

+

q = int(H * self.insets[1])

|

| 194 |

+

frame = frame[q:-q, :, :]

|

| 195 |

+

|

| 196 |

+

return frame

|

| 197 |

+

|

| 198 |

+

|

| 199 |

+

class FaceExtractor:

|

| 200 |

+

def __init__(self, video_read_fn):

|

| 201 |

+

self.video_read_fn = video_read_fn

|

| 202 |

+

self.detector = MTCNN(margin=0, thresholds=[0.7, 0.8, 0.8], device='cuda')

|

| 203 |

+

|

| 204 |

+

def process_videos(self, input_dir, filenames, video_idxs):

|

| 205 |

+

videos_read = []

|

| 206 |

+

frames_read = []

|

| 207 |

+

frames = []

|

| 208 |

+

results = []

|

| 209 |

+

for video_idx in video_idxs:

|

| 210 |

+

# Read the full-size frames from this video.

|

| 211 |

+

filename = filenames[video_idx]

|

| 212 |

+

video_path = os.path.join(input_dir, filename)

|

| 213 |

+

result = self.video_read_fn(video_path)

|

| 214 |

+

# Error? Then skip this video.

|

| 215 |

+

if result is None: continue

|

| 216 |

+

|

| 217 |

+

videos_read.append(video_idx)

|

| 218 |

+

|

| 219 |

+

# Keep track of the original frames (need them later).

|

| 220 |

+

my_frames, my_idxs = result

|

| 221 |

+

|

| 222 |

+

frames.append(my_frames)

|

| 223 |

+

frames_read.append(my_idxs)

|

| 224 |

+

for i, frame in enumerate(my_frames):

|

| 225 |

+

h, w = frame.shape[:2]

|

| 226 |

+

img = Image.fromarray(frame.astype(np.uint8))

|

| 227 |

+

img = img.resize(size=[s // 2 for s in img.size])

|

| 228 |

+

|

| 229 |

+

batch_boxes, probs = self.detector.detect(img, landmarks=False)

|

| 230 |

+

|

| 231 |

+

faces = []

|

| 232 |

+

scores = []

|

| 233 |

+

if batch_boxes is None:

|

| 234 |

+

continue

|

| 235 |

+

for bbox, score in zip(batch_boxes, probs):

|

| 236 |

+

if bbox is not None:

|

| 237 |

+

xmin, ymin, xmax, ymax = [int(b * 2) for b in bbox]

|

| 238 |

+

w = xmax - xmin

|

| 239 |

+

h = ymax - ymin

|

| 240 |

+

p_h = h // 3

|

| 241 |

+

p_w = w // 3

|

| 242 |

+

crop = frame[max(ymin - p_h, 0):ymax + p_h, max(xmin - p_w, 0):xmax + p_w]

|

| 243 |

+

faces.append(crop)

|

| 244 |

+

scores.append(score)

|

| 245 |

+

|

| 246 |

+

frame_dict = {"video_idx": video_idx,

|

| 247 |

+

"frame_idx": my_idxs[i],

|

| 248 |

+

"frame_w": w,

|

| 249 |

+

"frame_h": h,

|

| 250 |

+

"faces": faces,

|

| 251 |

+

"scores": scores}

|

| 252 |

+

results.append(frame_dict)

|

| 253 |

+

|

| 254 |

+

return results

|

| 255 |

+

|

| 256 |

+

def process_video(self, video_path):

|

| 257 |

+

"""Convenience method for doing face extraction on a single video."""

|

| 258 |

+

input_dir = os.path.dirname(video_path)

|

| 259 |

+

filenames = [os.path.basename(video_path)]

|

| 260 |

+

return self.process_videos(input_dir, filenames, [0])

|

| 261 |

+

|

| 262 |

+

|

| 263 |

+

|

| 264 |

+

def confident_strategy(pred, t=0.8):

|

| 265 |

+

pred = np.array(pred)

|

| 266 |

+

sz = len(pred)

|

| 267 |

+

fakes = np.count_nonzero(pred > t)

|

| 268 |

+

# 11 frames are detected as fakes with high probability

|

| 269 |

+

if fakes > sz // 2.5 and fakes > 11:

|

| 270 |

+

return np.mean(pred[pred > t])

|

| 271 |

+

elif np.count_nonzero(pred < 0.2) > 0.9 * sz:

|

| 272 |

+

return np.mean(pred[pred < 0.2])

|

| 273 |

+

else:

|

| 274 |

+

return np.mean(pred)

|

| 275 |

+

|

| 276 |

+

strategy = confident_strategy

|

| 277 |

+

|

| 278 |

+

|

| 279 |

+

def put_to_center(img, input_size):

|

| 280 |

+

img = img[:input_size, :input_size]

|

| 281 |

+

image = np.zeros((input_size, input_size, 3), dtype=np.uint8)

|

| 282 |

+

start_w = (input_size - img.shape[1]) // 2

|

| 283 |

+

start_h = (input_size - img.shape[0]) // 2

|

| 284 |

+

image[start_h:start_h + img.shape[0], start_w: start_w + img.shape[1], :] = img

|

| 285 |

+

return image

|

| 286 |

+

|

| 287 |

+

|

| 288 |

+

def isotropically_resize_image(img, size, interpolation_down=cv2.INTER_AREA, interpolation_up=cv2.INTER_CUBIC):

|

| 289 |

+

h, w = img.shape[:2]

|

| 290 |

+

if max(w, h) == size:

|

| 291 |

+

return img

|

| 292 |

+

if w > h:

|

| 293 |

+

scale = size / w

|

| 294 |

+

h = h * scale

|

| 295 |

+

w = size

|

| 296 |

+

else:

|

| 297 |

+

scale = size / h

|

| 298 |

+

w = w * scale

|

| 299 |

+

h = size

|

| 300 |

+

interpolation = interpolation_up if scale > 1 else interpolation_down

|

| 301 |

+

resized = cv2.resize(img, (int(w), int(h)), interpolation=interpolation)

|

| 302 |

+

return resized

|

| 303 |

+

|

| 304 |

+

|

| 305 |

+

def predict_on_video(face_extractor, video_path, batch_size, input_size, models, strategy=np.mean,

|

| 306 |

+

apply_compression=False):

|

| 307 |

+

batch_size *= 4

|

| 308 |

+

try:

|

| 309 |

+

faces = face_extractor.process_video(video_path)

|

| 310 |

+

if len(faces) > 0:

|

| 311 |

+

x = np.zeros((batch_size, input_size, input_size, 3), dtype=np.uint8)

|

| 312 |

+

n = 0

|

| 313 |

+

for frame_data in faces:

|

| 314 |

+

for face in frame_data["faces"]:

|

| 315 |

+

resized_face = isotropically_resize_image(face, input_size)

|

| 316 |

+

resized_face = put_to_center(resized_face, input_size)

|

| 317 |

+

if apply_compression:

|

| 318 |

+

resized_face = image_compression(resized_face, quality=90, image_type=".jpg")

|

| 319 |

+

if n + 1 < batch_size:

|

| 320 |

+

x[n] = resized_face

|

| 321 |

+

n += 1

|

| 322 |

+

else:

|

| 323 |

+

pass

|

| 324 |

+

if n > 0:

|

| 325 |

+

x = torch.tensor(x, device="cuda").float()

|

| 326 |

+

# Preprocess the images.

|

| 327 |

+

x = x.permute((0, 3, 1, 2))

|

| 328 |

+

# x = x.to('cpu')

|

| 329 |

+

for i in range(len(x)):

|

| 330 |

+

x[i] = normalize_transform(x[i] / 255.)

|

| 331 |

+

# Make a prediction, then take the average.

|

| 332 |

+

with torch.no_grad():

|

| 333 |

+

preds = []

|

| 334 |

+

for model in models:

|

| 335 |

+

# with torch.cuda.amp.autocast():

|

| 336 |

+

y_pred = model(x[:n].float())

|

| 337 |

+

y_pred = torch.sigmoid(y_pred.squeeze())

|

| 338 |

+

bpred = y_pred[:n].cpu().numpy()

|

| 339 |

+

preds.append(strategy(bpred))

|

| 340 |

+

return np.mean(preds)

|

| 341 |

+

except Exception as e:

|

| 342 |

+

print("Prediction error on video %s: %s" % (video_path, str(e)))

|

| 343 |

+

|

| 344 |

+

return 0.5

|

| 345 |

+

|

| 346 |

+

|

| 347 |

+

def predict_on_video_set(face_extractor, videos, input_size, num_workers, test_dir, frames_per_video, models,

|

| 348 |

+

strategy=np.mean,

|

| 349 |

+

apply_compression=False):

|

| 350 |

+

def process_file(i):

|

| 351 |

+

filename = videos[i]

|

| 352 |

+

y_pred = predict_on_video(face_extractor=face_extractor, video_path=os.path.join(test_dir, filename),

|

| 353 |

+

input_size=input_size,

|

| 354 |

+

batch_size=frames_per_video,

|

| 355 |

+

models=models, strategy=strategy, apply_compression=apply_compression)

|

| 356 |

+

return y_pred

|

| 357 |

+

|

| 358 |

+

with ThreadPoolExecutor(max_workers=num_workers) as ex:

|

| 359 |

+

predictions = ex.map(process_file, range(len(videos)))

|

| 360 |

+

return list(predictions)

|

| 361 |

+

|

libs/shape_predictor_68_face_landmarks.dat

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:fbdc2cb80eb9aa7a758672cbfdda32ba6300efe9b6e6c7a299ff7e736b11b92f

|

| 3 |

+

size 99693937

|

logs/.gitkeep

ADDED

|

File without changes

|

logs/classifier_tf_efficientnet_b7_ns_1/events.out.tfevents.1671630947.Green.15284.0

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e450ee9880145e3f8ec94a5f7c344e8a24b4c57184d033fa8622a56987a778ba

|

| 3 |

+

size 40

|

logs/classifier_tf_efficientnet_b7_ns_1/events.out.tfevents.1671631032.Green.22668.0

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:b497c6e7dd9bd7d92ab941a7cb3d4778c07b1c91c5cc24d8174a1176b0f25d10

|

| 3 |

+

size 1154

|

logs/classifier_tf_efficientnet_b7_ns_1/events.out.tfevents.1671715958.Green.34292.0

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:95ce902041c80cb054cd04d4c98d9eeecaa51ef6957f8c4dd62a3eba482c1b46

|

| 3 |

+

size 40

|

plot_loss.py

ADDED

|

@@ -0,0 +1,45 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|