Commit 4acfd41

1 Parent(s): a74fbfc

Functional Processing Loop

- __pycache__/app.cpython-37.pyc +0 -0

- __pycache__/loss_functions.cpython-37.pyc +0 -0

- app.py +202 -0

- content/content1.jpg +0 -0

- content/content2.jpg +0 -0

- content/content3.jpg +0 -0

- loss_functions.py +40 -0

- output.mp4 +0 -0

- requirements.txt +5 -0

- style/curvy.jpeg +0 -0

- style/dancing.jpg +0 -0

- style/fresco.jpg +0 -0

- style/rgb.png +0 -0

- style/style1.jpg +0 -0

- style/style2.jpg +0 -0

- style/style3.jpg +0 -0

- style/water_color.jpg +0 -0

__pycache__/app.cpython-37.pyc

ADDED

Binary file (4.36 kB)

__pycache__/loss_functions.cpython-37.pyc

ADDED

Binary file (2.08 kB)

app.py

ADDED


@@ -0,0 +1,202 @@


| 1 |

+

import os

|

| 2 |

+

|

| 3 |

+

os.environ['MKL_SERVICE_FORCE_INTEL'] = '1'

|

| 4 |

+

|

| 5 |

+

from loss_functions import ContentLoss, StyleLoss

|

| 6 |

+

import torchvision.models as models

|

| 7 |

+

|

| 8 |

+

from torch import optim

|

| 9 |

+

from pathlib import Path

|

| 10 |

+

import gradio as gr

|

| 11 |

+

import cv2

|

| 12 |

+

from PIL import Image

|

| 13 |

+

import cv2

|

| 14 |

+

import random, os

|

| 15 |

+

import numpy as np

|

| 16 |

+

import torch

|

| 17 |

+

import torch.nn as nn

|

| 18 |

+

import torchvision.transforms as transforms

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

seed = 2023

|

| 22 |

+

random.seed(seed)

|

| 23 |

+

os.environ['PYTHONHASHSEED'] = str(seed)

|

| 24 |

+

np.random.seed(seed)

|

| 25 |

+

torch.manual_seed(seed)

|

| 26 |

+

torch.cuda.manual_seed(seed)

|

| 27 |

+

torch.backends.cudnn.deterministic = True

|

| 28 |

+

torch.backends.cudnn.benchmark = True

|

| 29 |

+

|

| 30 |

+

# model = create_vgg_model()

|

| 31 |

+

device = 'cuda' if torch.cuda.is_available() else 'cpu'

|

| 32 |

+

cnn = models.vgg16(pretrained=True).features.to(device).eval()

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

duration = 5

|

| 36 |

+

|

| 37 |

+

content_layers = ['conv_4']

|

| 38 |

+

style_layers = ['conv_1', 'conv_2', 'conv_3', 'conv_4', 'conv_5']

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

def predict(content_img, style_img, style, content, lr, epoch):

|

| 43 |

+

# progress(0, desc="Starting...")

|

| 44 |

+

i = 0

|

| 45 |

+

content_losses = []

|

| 46 |

+

style_losses = []

|

| 47 |

+

model = nn.Sequential().to(device)

|

| 48 |

+

|

| 49 |

+

imsize = tuple(content_img.shape[:-1])

|

| 50 |

+

|

| 51 |

+

loader = transforms.Compose([

|

| 52 |

+

transforms.ToTensor()])

|

| 53 |

+

|

| 54 |

+

style_img = cv2.resize(style_img, imsize)

|

| 55 |

+

|

| 56 |

+

content_img = loader(content_img).to(device).unsqueeze(0)

|

| 57 |

+

style_img = loader(style_img).to(device).unsqueeze(0)

|

| 58 |

+

|

| 59 |

+

print(content_img.shape, style_img.shape)

|

| 60 |

+

|

| 61 |

+

for layer in cnn.children():

|

| 62 |

+

if isinstance(layer, nn.Conv2d):

|

| 63 |

+

i += 1

|

| 64 |

+

name = 'conv_{}'.format(i)

|

| 65 |

+

|

| 66 |

+

elif isinstance(layer, nn.ReLU):

|

| 67 |

+

name = 'relu_{}'.format(i)

|

| 68 |

+

layer = nn.ReLU(inplace=False)

|

| 69 |

+

|

| 70 |

+

elif isinstance(layer, nn.MaxPool2d):

|

| 71 |

+

name = 'pool_{}'.format(i)

|

| 72 |

+

|

| 73 |

+

elif isinstance(layer, nn.BatchNorm2d):

|

| 74 |

+

name = 'bn_{}'.format(i)

|

| 75 |

+

|

| 76 |

+

else:

|

| 77 |

+

raise RuntimeError('Unrecognized layer: {}'.format(layer.__class__.__name__))

|

| 78 |

+

|

| 79 |

+

model.add_module(name, layer)

|

| 80 |

+

|

| 81 |

+

if name in content_layers:

|

| 82 |

+

target = model(content_img).detach()

|

| 83 |

+

content_loss = ContentLoss(target)

|

| 84 |

+

model.add_module("content_loss_{}".format(i), content_loss)

|

| 85 |

+

content_losses.append(content_loss)

|

| 86 |

+

|

| 87 |

+

if name in style_layers:

|

| 88 |

+

target_feature = model(style_img).detach()

|

| 89 |

+

style_loss = StyleLoss(target_feature)

|

| 90 |

+

model.add_module("style_loss_{}".format(i), style_loss)

|

| 91 |

+

style_losses.append(style_loss)

|

| 92 |

+

|

| 93 |

+

for i in range(len(model) - 1, -1, -1):

|

| 94 |

+

if isinstance(model[i], ContentLoss) or isinstance(model[i], StyleLoss):

|

| 95 |

+

break

|

| 96 |

+

|

| 97 |

+

model = model[:(i + 1)]

|

| 98 |

+

# model = torch.compile(model)

|

| 99 |

+

input_img = torch.randn(content_img.data.size(), device=device)

|

| 100 |

+

input_img.requires_grad_(True)

|

| 101 |

+

model.requires_grad_(False)

|

| 102 |

+

optimizer = optim.Adam([input_img], lr) #We are using input_img instead of model.parameters bcos input_img is modified

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

i_=0

|

| 106 |

+

img_history = []

|

| 107 |

+

for i_ in range(epoch):

|

| 108 |

+

with torch.no_grad():

|

| 109 |

+

input_img.clamp_(0, 1)

|

| 110 |

+

|

| 111 |

+

optimizer.zero_grad()

|

| 112 |

+

model(input_img)

|

| 113 |

+

|

| 114 |

+

temp_style_loss = 0

|

| 115 |

+

temp_content_loss = 0

|

| 116 |

+

|

| 117 |

+

for i in style_losses:

|

| 118 |

+

temp_style_loss = temp_style_loss + i.loss

|

| 119 |

+

|

| 120 |

+

for i in content_losses:

|

| 121 |

+

temp_content_loss = temp_content_loss + i.loss

|

| 122 |

+

|

| 123 |

+

loss = temp_style_loss*style + temp_content_loss*content

|

| 124 |

+

loss.backward()

|

| 125 |

+

optimizer.step()

|

| 126 |

+

|

| 127 |

+

img_history.append(np.uint8(torch.permute(input_img[0].clone().cpu().detach(), (1, 2, 0)).numpy()*255.0))

|

| 128 |

+

|

| 129 |

+

i_+=1

|

| 130 |

+

|

| 131 |

+

with torch.no_grad():

|

| 132 |

+

input_img.clamp_(0, 1)

|

| 133 |

+

|

| 134 |

+

print(input_img.shape)

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

fps = len(img_history) //duration

|

| 138 |

+

fourcc = cv2.VideoWriter_fourcc(*'mp4v')

|

| 139 |

+

pic = img_history[-1]

|

| 140 |

+

out = cv2.VideoWriter('output.mp4', fourcc, int(fps), (pic.shape[1], pic.shape[0]))

|

| 141 |

+

|

| 142 |

+

for img in img_history:

|

| 143 |

+

out.write(img[::,::,::-1])

|

| 144 |

+

out.release()

|

| 145 |

+

|

| 146 |

+

return Image.fromarray(np.uint8(torch.permute(input_img[0], (1, 2, 0)).cpu().detach().numpy()*255.0)), 'output.mp4'

|

| 147 |

+

|

| 148 |

+

|

| 149 |

+

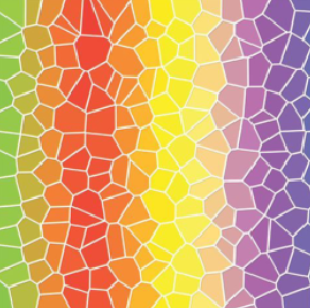

example_list = [['content/content2.jpg',

|

| 150 |

+

'style/style2.jpg', 100000, 0.6, 0.3, 400],

|

| 151 |

+

|

| 152 |

+

['content/content2.jpg',

|

| 153 |

+

'style/curvy.jpeg', 100000, 1, 0.3, 400],

|

| 154 |

+

|

| 155 |

+

|

| 156 |

+

['content/content2.jpg',

|

| 157 |

+

'style/water_color.jpg', 30000, 1, 0.1, 300],

|

| 158 |

+

|

| 159 |

+

|

| 160 |

+

['content/content2.jpg',

|

| 161 |

+

'style/rgb.png', 50000, 1, 0.1, 400],

|

| 162 |

+

|

| 163 |

+

['style/water_color.jpg',

|

| 164 |

+

'style/rgb.png', 70000, 1, 0.1, 400],

|

| 165 |

+

|

| 166 |

+

]

|

| 167 |

+

|

| 168 |

+

title = "Neural Style Transfer 🎨"

|

| 169 |

+

description = "You can run the code on [Kaggle](https://www.kaggle.com/frozenwolf/neural-style-transfer). See the code on [GitHub](https://github.com/FrozenWolf-Cyber/Neural-Style-Transfer) for Neural Style Transfer comparison between VGG16 and AlexNet"

|

| 170 |

+

article = ""

|

| 171 |

+

|

| 172 |

+

|

| 173 |

+

content_input = gr.inputs.Image(label="Upload an image to which you want the style to be applied.",shape= (256,256))

|

| 174 |

+

style_input = gr.inputs.Image( label="Upload Style Image ",shape= (256,256), )

|

| 175 |

+

|

| 176 |

+

|

| 177 |

+

style_slider = gr.inputs.Slider(1,100000,label="Adjust Style Density" ,default=100000,)

|

| 178 |

+

content_slider = gr.inputs.Slider(1/100000,1,label="Content Sharpness" ,default=1,)

|

| 179 |

+

lr_slider = gr.inputs.Slider(0.001,1,label="Learning Rate" ,default=0.1,)

|

| 180 |

+

epoch_slider = gr.inputs.Slider(50,500,label="Epoch Slider" ,default=100,)

|

| 181 |

+

|

| 182 |

+

demo = gr.Interface(fn=predict,

|

| 183 |

+

inputs=[content_input,

|

| 184 |

+

style_input,

|

| 185 |

+

style_slider ,

|

| 186 |

+

content_slider,

|

| 187 |

+

lr_slider,

|

| 188 |

+

epoch_slider

|

| 189 |

+

# style_checkbox

|

| 190 |

+

],

|

| 191 |

+

outputs=[gr.Image(shape= (256,256),),

|

| 192 |

+

gr.Video(shape= (256,256),)],

|

| 193 |

+

examples=example_list,

|

| 194 |

+

title=title,

|

| 195 |

+

description=description,

|

| 196 |

+

article=article)

|

| 197 |

+

|

| 198 |

+

|

| 199 |

+

demo.launch(debug=False,

|

| 200 |

+

share=False)

|

| 201 |

+

|

| 202 |

+

|
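Note on the model-building loop in `predict`: each layer is named after the index of the most recent convolution, loss modules are appended right after the layers they observe, and everything after the last loss module is trimmed. The following is a minimal, framework-free sketch of that naming and trimming logic, with layers stood in for by plain strings. The helpers `name_layers` and `trim_after_last_loss` are hypothetical names for illustration and are not part of this commit.

```python
def name_layers(kinds, content_layers, style_layers):
    """Return the ordered module names produced by the naming scheme.

    `kinds` is a list of layer kinds ("conv", "relu", "pool", "bn"),
    standing in for the modules yielded by `cnn.children()`.
    """
    names = []
    conv_index = 0
    for kind in kinds:
        if kind == "conv":
            conv_index += 1  # only convolutions advance the counter
        elif kind not in ("relu", "pool", "bn"):
            raise RuntimeError("Unrecognized layer: {}".format(kind))
        name = "{}_{}".format(kind, conv_index)
        names.append(name)
        # Loss modules are appended right after the layer they observe.
        if name in content_layers:
            names.append("content_loss_{}".format(conv_index))
        if name in style_layers:
            names.append("style_loss_{}".format(conv_index))
    return names


def trim_after_last_loss(names):
    """Keep modules up to and including the last loss module."""
    last = 0
    for last in range(len(names) - 1, -1, -1):
        if "_loss_" in names[last]:
            break
    return names[: last + 1]


kinds = ["conv", "relu", "conv", "relu", "pool", "conv", "relu"]
names = trim_after_last_loss(
    name_layers(kinds, ["conv_2"], ["conv_1", "conv_2"])
)
print(names)
```

With these inputs the trailing `relu_2`, `pool_2`, `conv_3` and `relu_3` entries are dropped, since nothing after `style_loss_2` contributes to any loss.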

content/content1.jpg

ADDED

content/content2.jpg

ADDED

content/content3.jpg

ADDED

loss_functions.py

ADDED


@@ -0,0 +1,40 @@


+
+import torch
+import torch.nn as nn
+import torch.nn.functional as F
+
+class ContentLoss(nn.Module):
+    def __init__(self, target,):
+        super().__init__()
+        self.target = target.detach()
+
+    def forward(self, input):
+        self.loss = F.mse_loss(input, self.target)
+        return input
+
+
+class StyleLoss(nn.Module):
+    def __init__(self, target_feature):
+        super().__init__()
+        self.target = self.gram_matrix(target_feature).detach()
+
+    def gram_matrix(self,input):
+        a, b, c, d = input.size()
+        features = input.view(a * b, c * d)
+        G = torch.mm(features, features.t())
+        return G.div(a * b * c * d)
+
+    def forward(self, input):
+        G = self.gram_matrix(input)
+        self.loss = F.mse_loss(G, self.target)
+        return input
+
+
+class Normalization(nn.Module):
+    def __init__(self, mean, std):
+        super().__init__()
+        self.mean = torch.tensor(mean).view(-1, 1, 1)
+        self.std = torch.tensor(std).view(-1, 1, 1)
+
+    def forward(self, img):
+        return (img - self.mean) / self.std
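Note on `StyleLoss.gram_matrix`: it flattens a feature tensor of size (a, b, c, d) into an (a*b) x (c*d) matrix, multiplies that matrix by its transpose, and divides the result by a*b*c*d. The sketch below reproduces the same arithmetic for a single image (a = 1) using nested lists, so it runs without PyTorch. `gram_matrix_lists` is a hypothetical helper written for illustration and is not the commit's code.

```python
def gram_matrix_lists(feature_maps):
    """Normalized Gram matrix of `feature_maps`.

    `feature_maps` is a list of b channels, each a c x d nested list,
    i.e. one image (batch size a = 1) in the notation of `gram_matrix`.
    """
    b = len(feature_maps)
    c = len(feature_maps[0])
    d = len(feature_maps[0][0])
    # Flatten each channel to a row of length c * d (the `view` step).
    rows = [[v for line in channel for v in line] for channel in feature_maps]
    norm = 1 * b * c * d  # a * b * c * d with a = 1
    # Entry (i, j) is the dot product of channels i and j (the `mm` step),
    # divided by the normalization constant (the `div` step).
    return [
        [sum(x * y for x, y in zip(rows[i], rows[j])) / norm for j in range(b)]
        for i in range(b)
    ]


features = [
    [[1.0, 2.0], [3.0, 4.0]],  # channel 0
    [[0.0, 1.0], [0.0, 1.0]],  # channel 1
]
gram = gram_matrix_lists(features)
print(gram)  # [[3.75, 0.75], [0.75, 0.25]]
```

The result is symmetric, and its size depends only on the number of channels, not on the spatial size of the feature maps.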

output.mp4

ADDED

Binary file (714 kB)

requirements.txt

ADDED


@@ -0,0 +1,5 @@


+torch
+torchvision
+gradio
+opencv-python
+

style/curvy.jpeg

ADDED

|

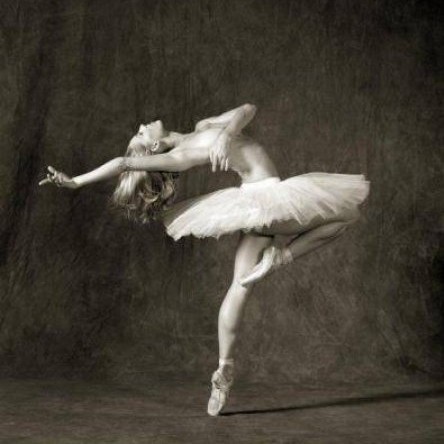

style/dancing.jpg

ADDED

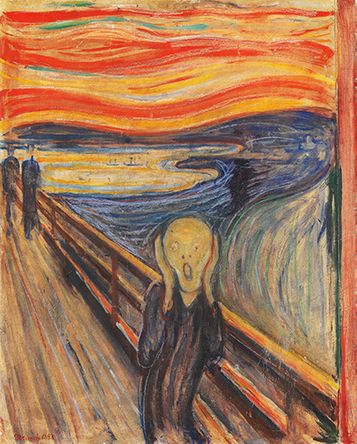

|

style/fresco.jpg

ADDED

|

style/rgb.png

ADDED

|

style/style1.jpg

ADDED

|

style/style2.jpg

ADDED

|

style/style3.jpg

ADDED

|

style/water_color.jpg

ADDED

|