Jordan Pierce

commited on

Commit

•

a1d71d0

1

Parent(s):

2fa9cbf

initial commit

Browse files- .gitattributes +1 -0

- Dockerfile +10 -0

- app.py +38 -0

- images/crab.png +3 -0

- images/eel.png +3 -0

- images/fish.png +3 -0

- images/fish_2.png +3 -0

- images/fish_3.png +3 -0

- images/fish_4.png +3 -0

- images/fish_5.png +3 -0

- images/flat_fish.png +3 -0

- images/flat_red_fish.png +3 -0

- images/jelly.png +3 -0

- images/jelly_2.png +3 -0

- images/jelly_3.png +3 -0

- images/jelly_4.png +3 -0

- images/puff.png +3 -0

- images/red_fish.png +3 -0

- images/red_fish_2.png +3 -0

- images/scene.png +3 -0

- images/scene_2.png +3 -0

- images/scene_3.png +3 -0

- images/scene_4.png +3 -0

- images/scene_5.png +3 -0

- images/scene_6.png +3 -0

- images/soft_coral.png +3 -0

- images/squid.png +3 -0

- images/starfish.png +3 -0

- images/starfish_2.png +3 -0

- inference.py +69 -0

- models/deepsea-detector.pt +3 -0

- models/results-deepsea-detector-datasetv1-trainv1/val_batch0_labels.jpg +0 -0

- models/results-deepsea-detector-datasetv1-trainv1/val_batch0_pred.jpg +0 -0

- models/results-deepsea-detector-datasetv1-trainv1/val_batch1_labels.jpg +0 -0

- models/results-deepsea-detector-datasetv1-trainv1/val_batch1_pred.jpg +0 -0

- models/results-deepsea-detector-datasetv1-trainv1/val_batch2_labels.jpg +0 -0

- models/results-deepsea-detector-datasetv1-trainv1/val_batch2_pred.jpg +0 -0

- requirements.txt +3 -0

- tator_inference.py +100 -0

.gitattributes

CHANGED

|

@@ -31,3 +31,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 31 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 32 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 31 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 32 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*.png filter=lfs diff=lfs merge=lfs -text

|

Dockerfile

ADDED

|

@@ -0,0 +1,10 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM python:3.7

|

| 2 |

+

|

| 3 |

+

RUN apt-get update \

|

| 4 |

+

&& apt-get install ffmpeg libsm6 libxext6 -y

|

| 5 |

+

|

| 6 |

+

RUN pip install yolov5 tator gradio

|

| 7 |

+

|

| 8 |

+

COPY . ./

|

| 9 |

+

|

| 10 |

+

CMD [ "python", "-u", "./tator_inference.py" ]

|

app.py

ADDED

|

@@ -0,0 +1,38 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import glob

|

| 2 |

+

import gradio as gr

|

| 3 |

+

from inference import *

|

| 4 |

+

from PIL import Image

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

def gradio_app(image_path):

|

| 8 |

+

"""A function that send the file to the inference pipeline, and filters

|

| 9 |

+

some predictions before outputting to gradio interface."""

|

| 10 |

+

|

| 11 |

+

predictions = run_inference(image_path)

|

| 12 |

+

|

| 13 |

+

out_img = Image.fromarray(predictions.render()[0])

|

| 14 |

+

|

| 15 |

+

return out_img

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

title = "UWROV Deepsea Detector"

|

| 19 |

+

description = "Gradio demo for UWROV Deepsea Detector: Developed by Peyton " \

|

| 20 |

+

"Lee, Neha Nagvekar, and Cassandra Lam as part of the " \

|

| 21 |

+

"Underwater Remotely Operated Vehicles Team (UWROV) at the " \

|

| 22 |

+

"University of Washington. Deepsea Detector is built on " \

|

| 23 |

+

"MBARI's Monterey Bay Benthic Object Detector, which can also " \

|

| 24 |

+

"be found in FathomNet's Model Zoo. The model is trained on " \

|

| 25 |

+

"data from NOAA Ocean Exploration and FathomNet, " \

|

| 26 |

+

"with assistance from WoRMS for organism classification. All " \

|

| 27 |

+

"the images and associated annotations we used can be found in " \

|

| 28 |

+

"our Roboflow project. "

|

| 29 |

+

|

| 30 |

+

examples = glob.glob("images/*.png")

|

| 31 |

+

|

| 32 |

+

gr.Interface(gradio_app,

|

| 33 |

+

inputs=[gr.inputs.Image(type="filepath")],

|

| 34 |

+

outputs=gr.outputs.Image(type="pil"),

|

| 35 |

+

enable_queue=True,

|

| 36 |

+

title=title,

|

| 37 |

+

description=description,

|

| 38 |

+

examples=examples).launch()

|

images/crab.png

ADDED

|

Git LFS Details

|

images/eel.png

ADDED

|

Git LFS Details

|

images/fish.png

ADDED

|

Git LFS Details

|

images/fish_2.png

ADDED

|

Git LFS Details

|

images/fish_3.png

ADDED

|

Git LFS Details

|

images/fish_4.png

ADDED

|

Git LFS Details

|

images/fish_5.png

ADDED

|

Git LFS Details

|

images/flat_fish.png

ADDED

|

Git LFS Details

|

images/flat_red_fish.png

ADDED

|

Git LFS Details

|

images/jelly.png

ADDED

|

Git LFS Details

|

images/jelly_2.png

ADDED

|

Git LFS Details

|

images/jelly_3.png

ADDED

|

Git LFS Details

|

images/jelly_4.png

ADDED

|

Git LFS Details

|

images/puff.png

ADDED

|

Git LFS Details

|

images/red_fish.png

ADDED

|

Git LFS Details

|

images/red_fish_2.png

ADDED

|

Git LFS Details

|

images/scene.png

ADDED

|

Git LFS Details

|

images/scene_2.png

ADDED

|

Git LFS Details

|

images/scene_3.png

ADDED

|

Git LFS Details

|

images/scene_4.png

ADDED

|

Git LFS Details

|

images/scene_5.png

ADDED

|

Git LFS Details

|

images/scene_6.png

ADDED

|

Git LFS Details

|

images/soft_coral.png

ADDED

|

Git LFS Details

|

images/squid.png

ADDED

|

Git LFS Details

|

images/starfish.png

ADDED

|

Git LFS Details

|

images/starfish_2.png

ADDED

|

Git LFS Details

|

inference.py

ADDED

|

@@ -0,0 +1,69 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import cv2

|

| 2 |

+

import glob

|

| 3 |

+

import numpy as np

|

| 4 |

+

import torch

|

| 5 |

+

import yolov5

|

| 6 |

+

from typing import Dict, Tuple, Union, List, Optional

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

# -----------------------------------------------------------------------------

|

| 10 |

+

# Configs

|

| 11 |

+

# -----------------------------------------------------------------------------

|

| 12 |

+

|

| 13 |

+

model_path = "models/deepsea-detector.pt"

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

# -----------------------------------------------------------------------------

|

| 17 |

+

# YOLOv5 class

|

| 18 |

+

# -----------------------------------------------------------------------------

|

| 19 |

+

|

| 20 |

+

class YOLO:

|

| 21 |

+

"""Wrapper class for loading and running YOLO model"""

|

| 22 |

+

|

| 23 |

+

def __init__(self, model_path: str, device: Optional[str] = None):

|

| 24 |

+

|

| 25 |

+

# load model

|

| 26 |

+

self.model = yolov5.load(model_path, device=device)

|

| 27 |

+

|

| 28 |

+

def __call__(

|

| 29 |

+

self,

|

| 30 |

+

img: Union[str, np.ndarray],

|

| 31 |

+

conf_threshold: float = 0.25,

|

| 32 |

+

iou_threshold: float = 0.45,

|

| 33 |

+

image_size: int = 720,

|

| 34 |

+

classes: Optional[List[int]] = None) -> torch.Tensor:

|

| 35 |

+

self.model.conf = conf_threshold

|

| 36 |

+

self.model.iou = iou_threshold

|

| 37 |

+

|

| 38 |

+

if classes is not None:

|

| 39 |

+

self.model.classes = classes

|

| 40 |

+

|

| 41 |

+

# pylint: disable=not-callable

|

| 42 |

+

detections = self.model(img, size=image_size)

|

| 43 |

+

|

| 44 |

+

return detections

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

def run_inference(image_path):

|

| 48 |

+

"""Helper function to execute the inference."""

|

| 49 |

+

|

| 50 |

+

predictions = model(image_path)

|

| 51 |

+

|

| 52 |

+

return predictions

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

# -----------------------------------------------------------------------------

|

| 56 |

+

# Model Creation

|

| 57 |

+

# -----------------------------------------------------------------------------

|

| 58 |

+

model = YOLO(model_path, device='cpu')

|

| 59 |

+

|

| 60 |

+

if __name__ == "__main__":

|

| 61 |

+

|

| 62 |

+

# For demo purposes: run through a couple of test

|

| 63 |

+

# images and then output the predictions in a folder.

|

| 64 |

+

test_images = glob.glob("images/*.png")

|

| 65 |

+

|

| 66 |

+

for test_image in test_images:

|

| 67 |

+

predictions = run_inference(test_image)

|

| 68 |

+

|

| 69 |

+

print("Done.")

|

models/deepsea-detector.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:14c5a32e23e068bec7849726065f02da91f5cb57eedd4d28186403bdfe4f66a8

|

| 3 |

+

size 175330621

|

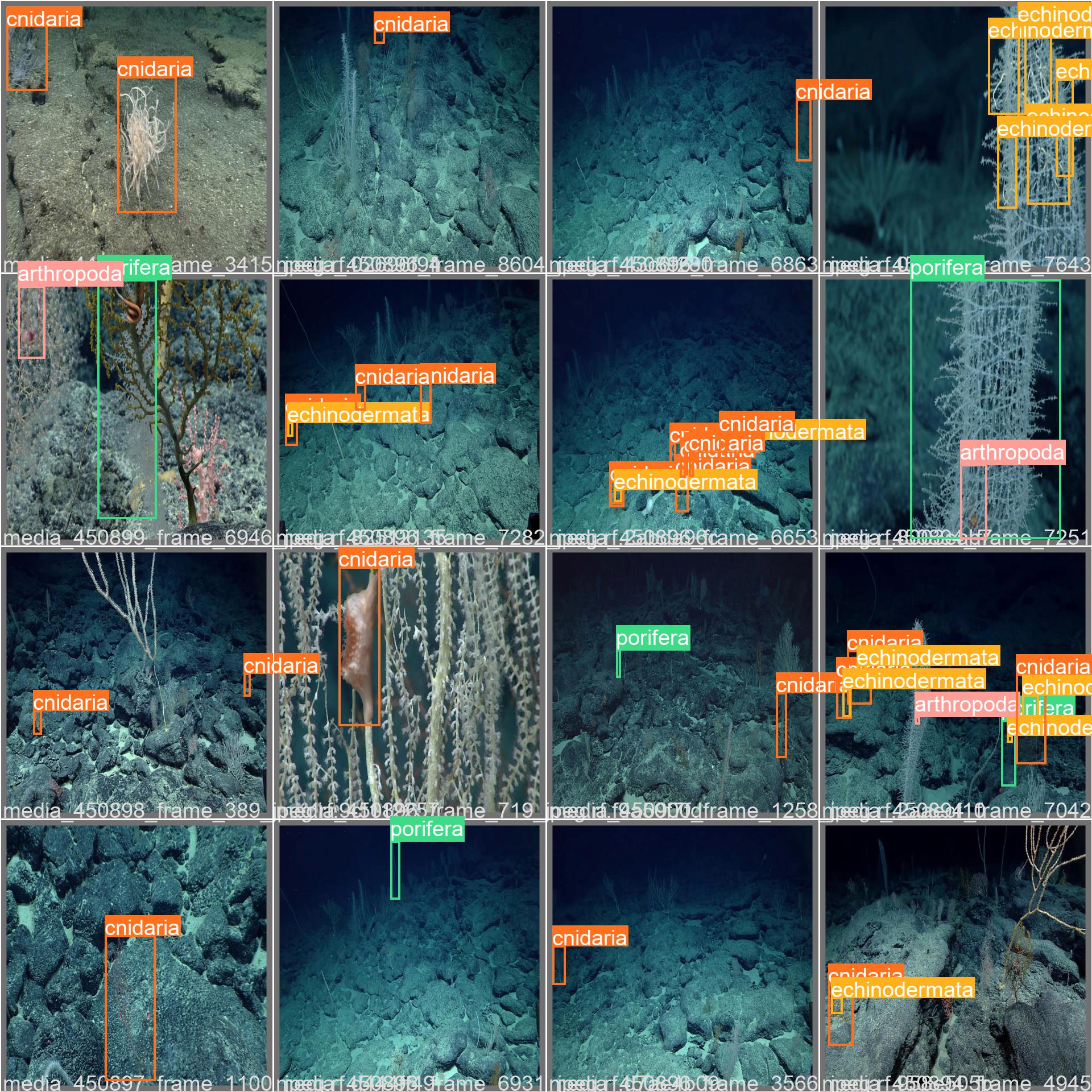

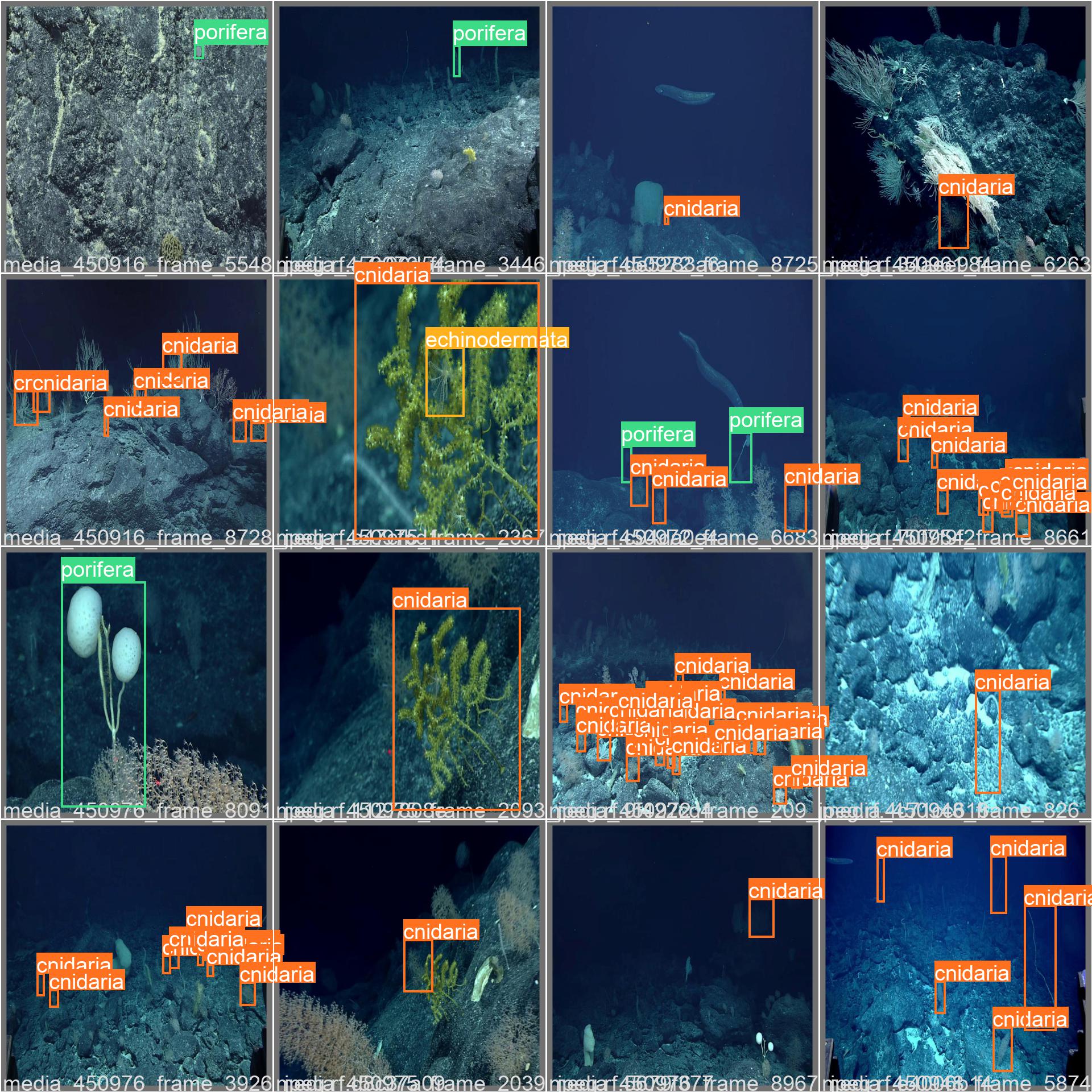

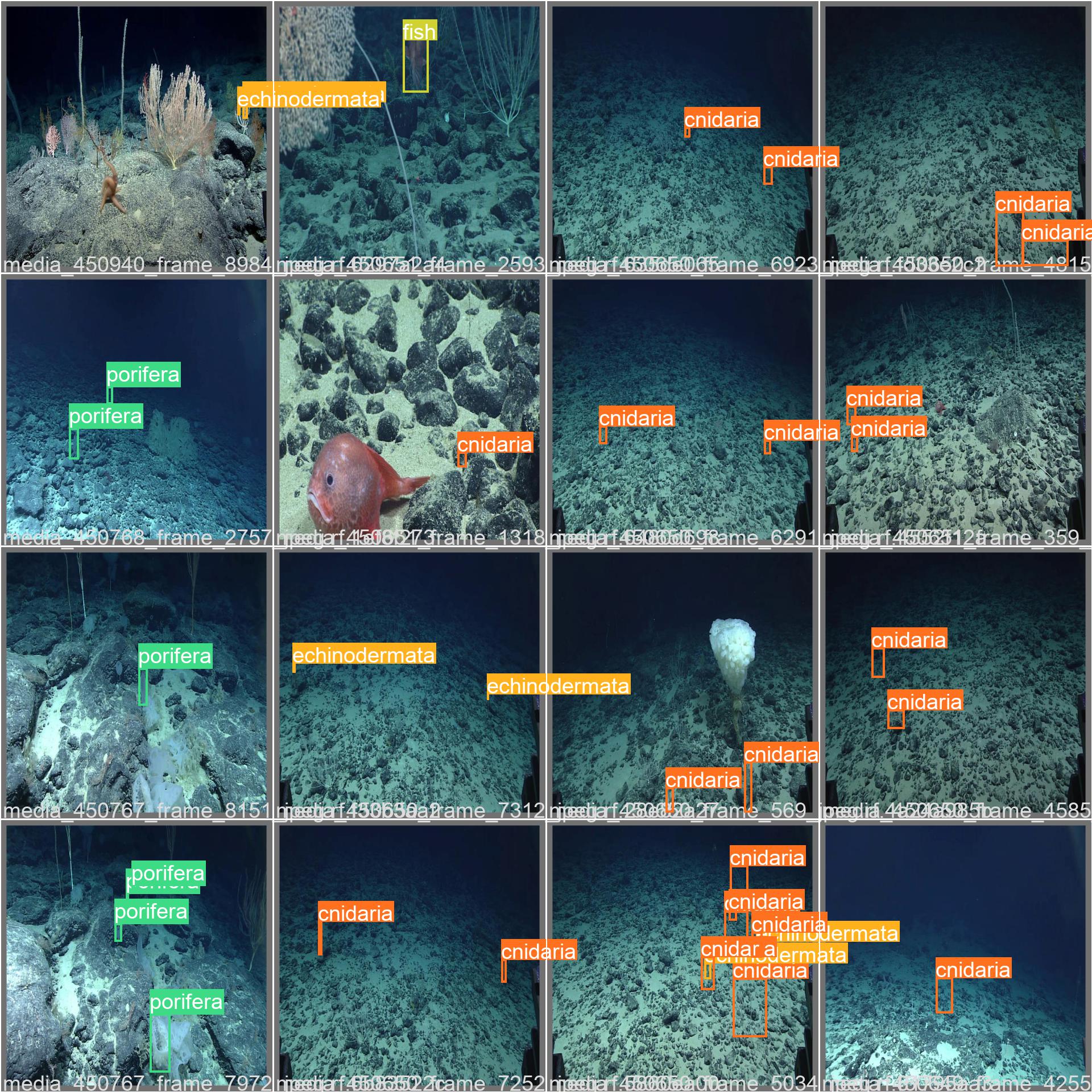

models/results-deepsea-detector-datasetv1-trainv1/val_batch0_labels.jpg

ADDED

|

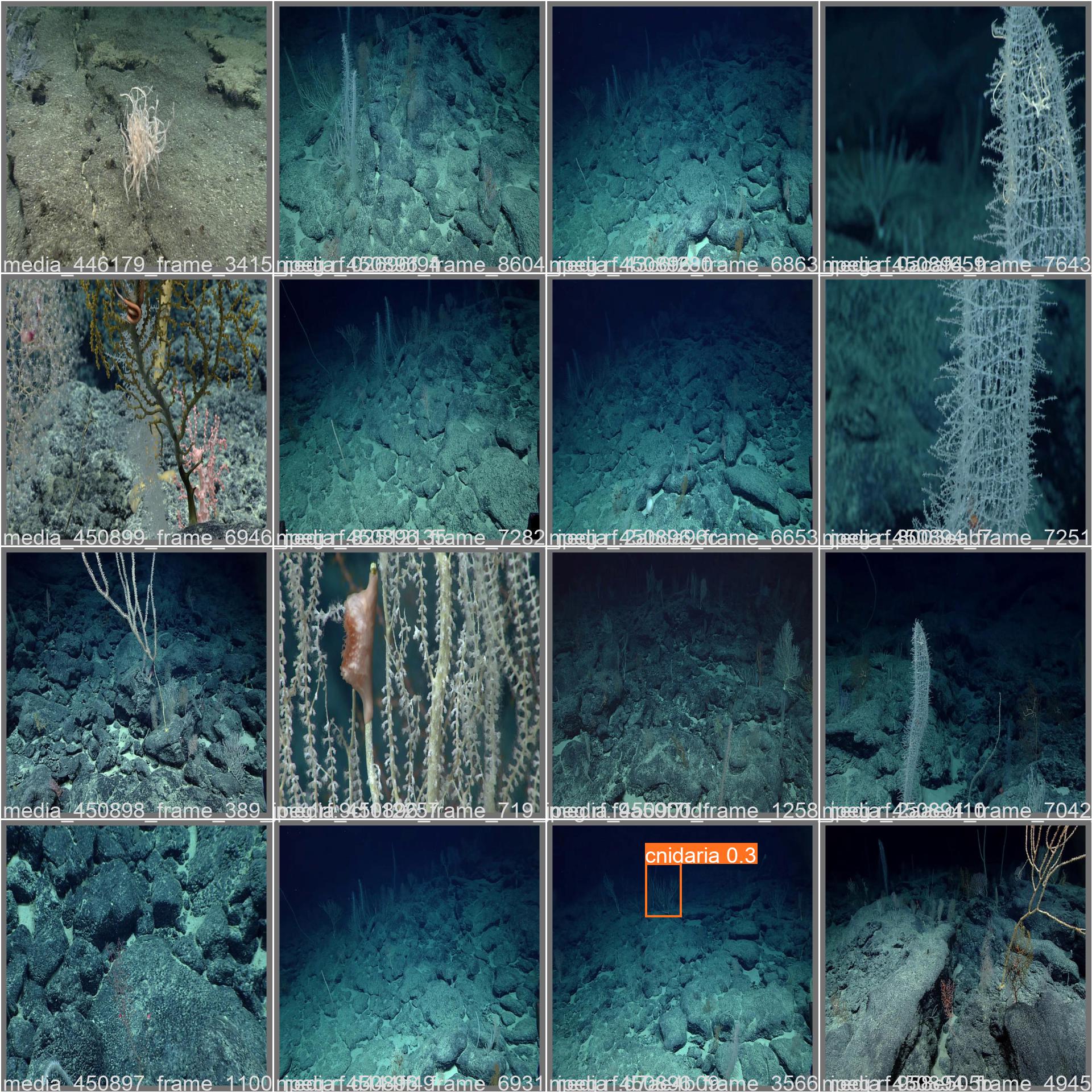

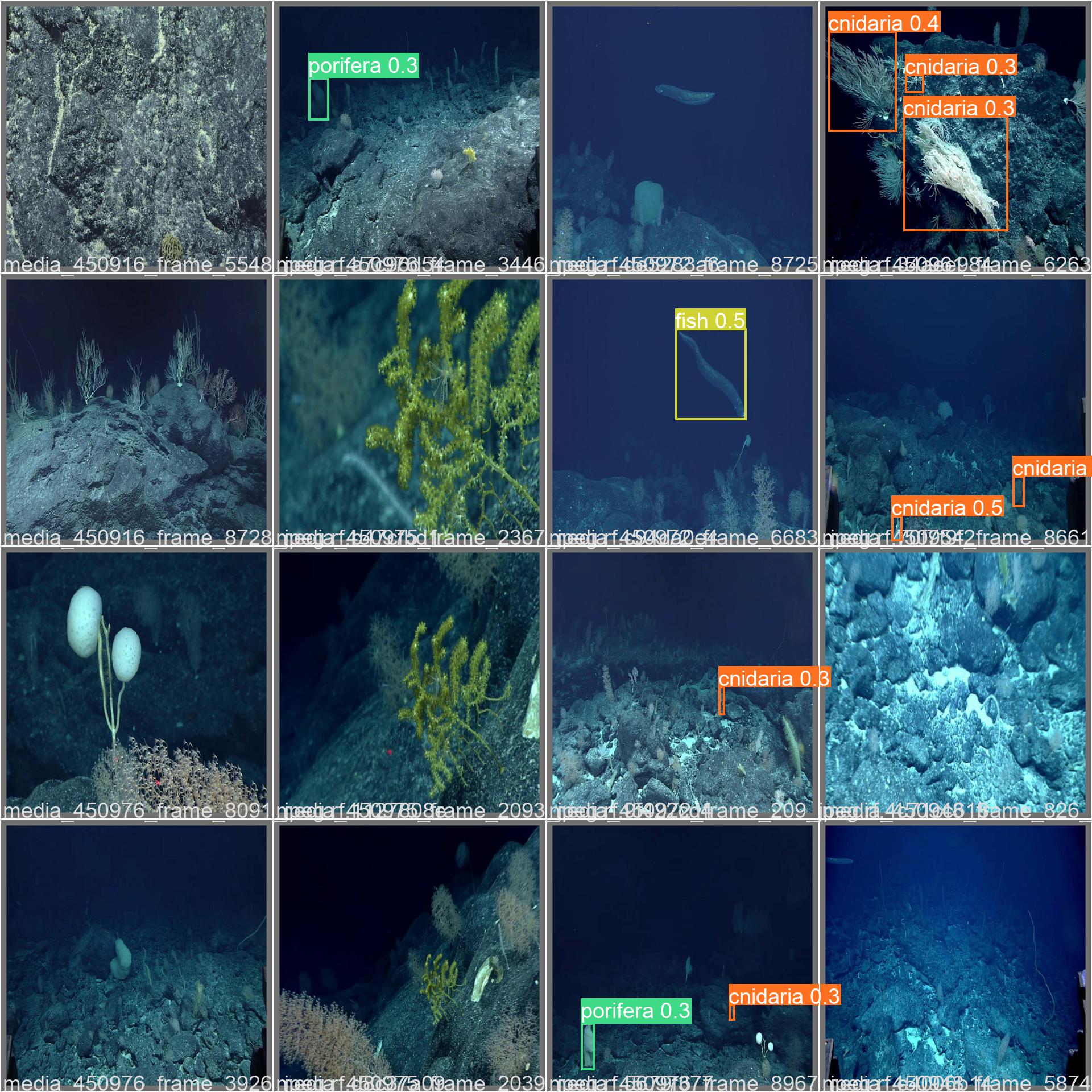

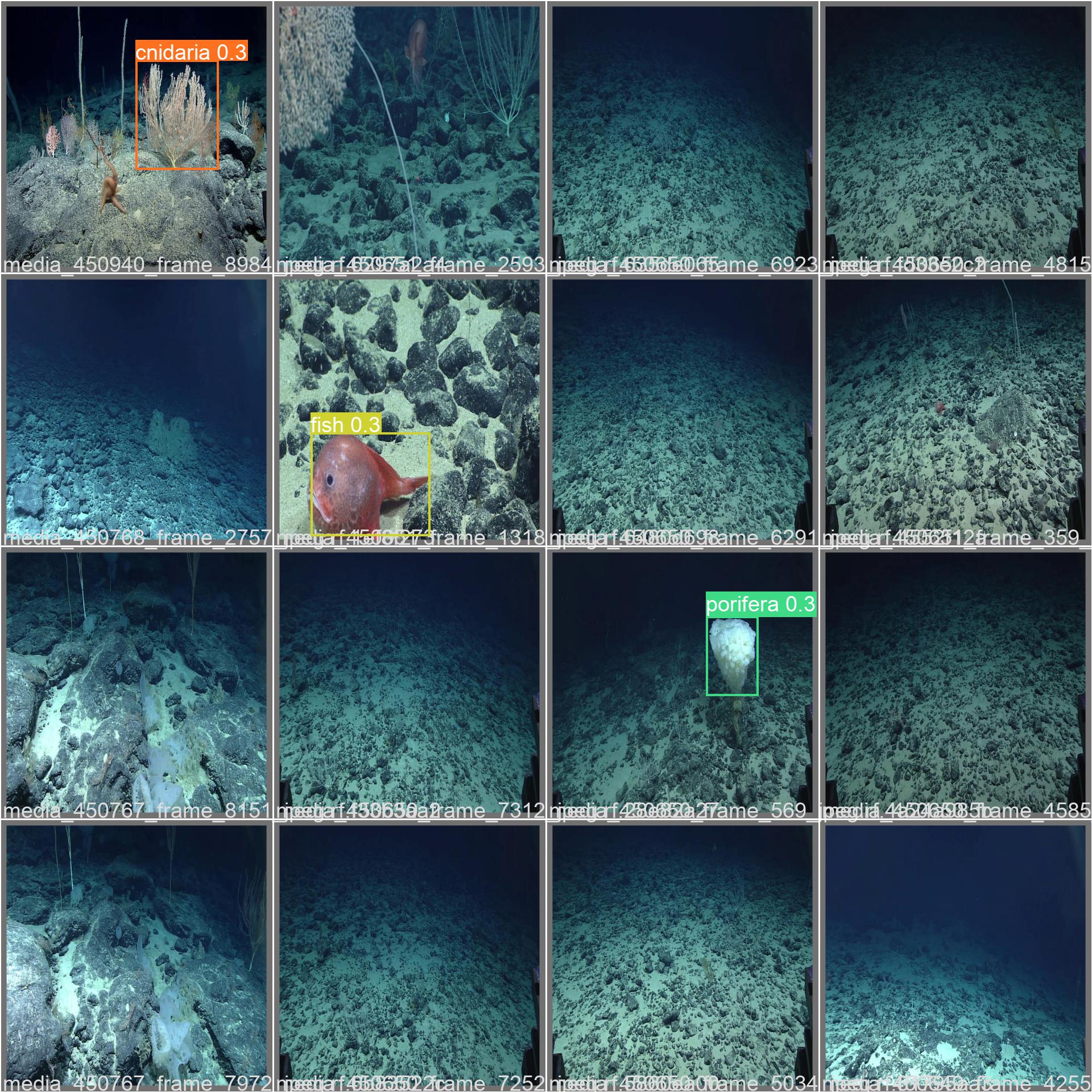

models/results-deepsea-detector-datasetv1-trainv1/val_batch0_pred.jpg

ADDED

|

models/results-deepsea-detector-datasetv1-trainv1/val_batch1_labels.jpg

ADDED

|

models/results-deepsea-detector-datasetv1-trainv1/val_batch1_pred.jpg

ADDED

|

models/results-deepsea-detector-datasetv1-trainv1/val_batch2_labels.jpg

ADDED

|

models/results-deepsea-detector-datasetv1-trainv1/val_batch2_pred.jpg

ADDED

|

requirements.txt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

yolov5

|

| 2 |

+

tator

|

| 3 |

+

gradio

|

tator_inference.py

ADDED

|

@@ -0,0 +1,100 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import logging

|

| 3 |

+

from tempfile import TemporaryFile

|

| 4 |

+

|

| 5 |

+

import cv2

|

| 6 |

+

import numpy as np

|

| 7 |

+

from PIL import Image

|

| 8 |

+

|

| 9 |

+

import tator

|

| 10 |

+

import inference

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

logger = logging.getLogger(__name__)

|

| 14 |

+

logger.setLevel(logging.INFO)

|

| 15 |

+

|

| 16 |

+

# Read environment variables that are provided from TATOR

|

| 17 |

+

host = os.getenv('HOST')

|

| 18 |

+

token = os.getenv('TOKEN')

|

| 19 |

+

project_id = int(os.getenv('PROJECT_ID'))

|

| 20 |

+

media_ids = [int(id_) for id_ in os.getenv('MEDIA_IDS').split(',')]

|

| 21 |

+

frames_per_inference = int(os.getenv('FRAMES_PER_INFERENCE', 30))

|

| 22 |

+

|

| 23 |

+

# Set up the TATOR API.

|

| 24 |

+

api = tator.get_api(host, token)

|

| 25 |

+

|

| 26 |

+

# Iterate through each video.

|

| 27 |

+

for media_id in media_ids:

|

| 28 |

+

|

| 29 |

+

# Download video.

|

| 30 |

+

media = api.get_media(media_id)

|

| 31 |

+

logger.info(f"Downloading {media.name}...")

|

| 32 |

+

out_path = f"/tmp/{media.name}"

|

| 33 |

+

for progress in tator.util.download_media(api, media, out_path):

|

| 34 |

+

logger.info(f"Download progress: {progress}%")

|

| 35 |

+

|

| 36 |

+

# Do inference on each video.

|

| 37 |

+

logger.info(f"Doing inference on {media.name}...")

|

| 38 |

+

localizations = []

|

| 39 |

+

vid = cv2.VideoCapture(out_path)

|

| 40 |

+

frame_number = 0

|

| 41 |

+

|

| 42 |

+

# Read *every* frame from the video, break when at the end.

|

| 43 |

+

while True:

|

| 44 |

+

ret, frame = vid.read()

|

| 45 |

+

if not ret:

|

| 46 |

+

break

|

| 47 |

+

|

| 48 |

+

# Create a temporary file, access the image data, save data to file.

|

| 49 |

+

framefile = TemporaryFile(suffix='.jpg')

|

| 50 |

+

im = Image.fromarray(frame)

|

| 51 |

+

im.save(framefile)

|

| 52 |

+

|

| 53 |

+

# For every N frames, make a prediction; append prediction results

|

| 54 |

+

# to a list, increase the frame count.

|

| 55 |

+

if frame_number % frames_per_inference == 0:

|

| 56 |

+

|

| 57 |

+

spec = {}

|

| 58 |

+

|

| 59 |

+

# Predictions contains all information inside pandas dataframe

|

| 60 |

+

predictions = inference.run_inference(framefile)

|

| 61 |

+

|

| 62 |

+

for i, r in predictions.pandas().xyxy[0].iterrows:

|

| 63 |

+

|

| 64 |

+

spec['media_id'] = media_id

|

| 65 |

+

spec['type'] = None # Unsure, docs not specific

|

| 66 |

+

spec['frame'] = frame_number

|

| 67 |

+

|

| 68 |

+

x, y, x2, y2 = r['xmin'], r['ymin'], r['xmax'], r['ymax']

|

| 69 |

+

w, h = x2 - x, y2 - y

|

| 70 |

+

|

| 71 |

+

spec['x'] = x

|

| 72 |

+

spec['y'] = y

|

| 73 |

+

spec['width'] = w

|

| 74 |

+

spec['height'] = h

|

| 75 |

+

spec['class_category'] = r['name']

|

| 76 |

+

spec['confidence'] = r['confidence']

|

| 77 |

+

|

| 78 |

+

localizations.append(spec)

|

| 79 |

+

|

| 80 |

+

frame_number += 1

|

| 81 |

+

|

| 82 |

+

# End interaction with video properly.

|

| 83 |

+

vid.release()

|

| 84 |

+

|

| 85 |

+

logger.info(f"Uploading object detections on {media.name}...")

|

| 86 |

+

|

| 87 |

+

# Create the localizations in the video.

|

| 88 |

+

num_created = 0

|

| 89 |

+

for response in tator.util.chunked_create(api.create_localization_list,

|

| 90 |

+

project_id,

|

| 91 |

+

localization_spec=localizations):

|

| 92 |

+

num_created += len(response.id)

|

| 93 |

+

|

| 94 |

+

# Output pretty logging information.

|

| 95 |

+

logger.info(f"Successfully created {num_created} localizations on "

|

| 96 |

+

f"{media.name}!")

|

| 97 |

+

|

| 98 |

+

logger.info("-------------------------------------------------")

|

| 99 |

+

|

| 100 |

+

logger.info(f"Completed inference on {len(media_ids)} files.")

|