Spaces:

Build error

Build error

EvanTHU

commited on

Commit

·

445d3d1

1

Parent(s):

bc6c851

update

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +14 -0

- LICENSE +9 -0

- README copy.md +133 -0

- app copy.py +661 -0

- assets/application.png +0 -0

- assets/compare.png +0 -0

- assets/highlight.png +0 -0

- assets/logo.png +0 -0

- assets/system.png +0 -0

- generate.py +199 -0

- lit_gpt/__init__.py +15 -0

- lit_gpt/adapter.py +165 -0

- lit_gpt/adapter_v2.py +197 -0

- lit_gpt/config.py +1040 -0

- lit_gpt/lora.py +671 -0

- lit_gpt/model.py +355 -0

- lit_gpt/packed_dataset.py +235 -0

- lit_gpt/rmsnorm.py +26 -0

- lit_gpt/speed_monitor.py +425 -0

- lit_gpt/tokenizer.py +103 -0

- lit_gpt/utils.py +311 -0

- lit_llama/__init__.py +2 -0

- lit_llama/adapter.py +151 -0

- lit_llama/indexed_dataset.py +588 -0

- lit_llama/lora.py +232 -0

- lit_llama/model.py +246 -0

- lit_llama/quantization.py +281 -0

- lit_llama/tokenizer.py +49 -0

- lit_llama/utils.py +244 -0

- models/__init__.py +0 -0

- models/constants.py +18 -0

- models/encdec.py +67 -0

- models/evaluator_wrapper.py +92 -0

- models/modules.py +109 -0

- models/multimodal_encoder/builder.py +49 -0

- models/multimodal_encoder/clip_encoder.py +78 -0

- models/multimodal_encoder/languagebind/__init__.py +285 -0

- models/multimodal_encoder/languagebind/audio/configuration_audio.py +430 -0

- models/multimodal_encoder/languagebind/audio/modeling_audio.py +1030 -0

- models/multimodal_encoder/languagebind/audio/processing_audio.py +190 -0

- models/multimodal_encoder/languagebind/audio/tokenization_audio.py +77 -0

- models/multimodal_encoder/languagebind/depth/configuration_depth.py +425 -0

- models/multimodal_encoder/languagebind/depth/modeling_depth.py +1030 -0

- models/multimodal_encoder/languagebind/depth/processing_depth.py +108 -0

- models/multimodal_encoder/languagebind/depth/tokenization_depth.py +77 -0

- models/multimodal_encoder/languagebind/image/configuration_image.py +423 -0

- models/multimodal_encoder/languagebind/image/modeling_image.py +1030 -0

- models/multimodal_encoder/languagebind/image/processing_image.py +82 -0

- models/multimodal_encoder/languagebind/image/tokenization_image.py +77 -0

- models/multimodal_encoder/languagebind/thermal/configuration_thermal.py +423 -0

.gitignore

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

**/*.pyc

|

| 2 |

+

**/__pycache__

|

| 3 |

+

__pycache__/

|

| 4 |

+

cache_dir/

|

| 5 |

+

checkpoints/

|

| 6 |

+

feedback/

|

| 7 |

+

temp/

|

| 8 |

+

models--LanguageBind--Video-LLaVA-7B/

|

| 9 |

+

*.jsonl

|

| 10 |

+

*.json

|

| 11 |

+

linghao

|

| 12 |

+

run.sh

|

| 13 |

+

examples/

|

| 14 |

+

assets/task.gif

|

LICENSE

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

License for Non-commercial Scientific Research Purposes

|

| 2 |

+

|

| 3 |

+

IDEA grants you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable, royalty free and limited license under IDEA’s copyright interests to reproduce, distribute, and create derivative works of the text, videos, codes solely for your non-commercial research purposes.

|

| 4 |

+

|

| 5 |

+

Any other use, in particular any use for commercial, pornographic, military, or surveillance, purposes is prohibited.

|

| 6 |

+

|

| 7 |

+

Text and visualization results are owned by International Digital Economy Academy (IDEA).

|

| 8 |

+

|

| 9 |

+

You also need to obey the original license of the dependency models/data used in this service.

|

README copy.md

ADDED

|

@@ -0,0 +1,133 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# MotionLLM: Understanding Human Behaviors from Human Motions and Videos

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

[Ling-Hao Chen](https://lhchen.top)<sup>😎 1, 3</sup>,

|

| 6 |

+

[Shunlin Lu](https://shunlinlu.github.io)<sup>😎 2, 3</sup>,

|

| 7 |

+

[Ailing Zeng](https://ailingzeng.sit)<sup>3</sup>,

|

| 8 |

+

[Hao Zhang](https://haozhang534.github.io/)<sup>3, 4</sup>,

|

| 9 |

+

[Benyou Wang](https://wabyking.github.io/old.html)<sup>2</sup>,

|

| 10 |

+

[Ruimao Zhang](http://zhangruimao.site)<sup>2</sup>,

|

| 11 |

+

[Lei Zhang](https://leizhang.org)<sup>🤗 3</sup>

|

| 12 |

+

|

| 13 |

+

<sup>😎</sup>Co-first author. Listing order is random.

|

| 14 |

+

<sup>🤗</sup>Corresponding author.

|

| 15 |

+

|

| 16 |

+

<sup>1</sup>Tsinghua University,

|

| 17 |

+

<sup>2</sup>School of Data Science, The Chinese University of Hong Kong, Shenzhen (CUHK-SZ),

|

| 18 |

+

<sup>3</sup>International Digital Economy Academy (IDEA),

|

| 19 |

+

<sup>4</sup>The Hong Kong University of Science and Technology

|

| 20 |

+

|

| 21 |

+

<p align="center">

|

| 22 |

+

<a href='https://arxiv.org/abs/2304'>

|

| 23 |

+

<img src='https://img.shields.io/badge/Arxiv-2304.tomorrow-A42C25?style=flat&logo=arXiv&logoColor=A42C25'>

|

| 24 |

+

</a>

|

| 25 |

+

<a href='https://arxiv.org/pdf/2304.pdf'>

|

| 26 |

+

<img src='https://img.shields.io/badge/Paper-PDF-yellow?style=flat&logo=arXiv&logoColor=yellow'>

|

| 27 |

+

</a>

|

| 28 |

+

<a href='https://lhchen.top/MotionLLM'>

|

| 29 |

+

<img src='https://img.shields.io/badge/Project-Page-%23df5b46?style=flat&logo=Google%20chrome&logoColor=%23df5b46'></a>

|

| 30 |

+

<a href='https://research.lhchen.top/blogpost/motionllm'>

|

| 31 |

+

<img src='https://img.shields.io/badge/Blog-post-4EABE6?style=flat&logoColor=4EABE6'></a>

|

| 32 |

+

<a href='https://github.com/IDEA-Research/MotionLLM'>

|

| 33 |

+

<img src='https://img.shields.io/badge/GitHub-Code-black?style=flat&logo=github&logoColor=white'></a>

|

| 34 |

+

<a href='LICENSE'>

|

| 35 |

+

<img src='https://img.shields.io/badge/License-IDEA-blue.svg'>

|

| 36 |

+

</a>

|

| 37 |

+

<a href="" target='_blank'>

|

| 38 |

+

<img src="https://visitor-badge.laobi.icu/badge?page_id=IDEA-Research.MotionLLM&left_color=gray&right_color=%2342b983">

|

| 39 |

+

</a>

|

| 40 |

+

</p>

|

| 41 |

+

|

| 42 |

+

# 🤩 Abstract

|

| 43 |

+

|

| 44 |

+

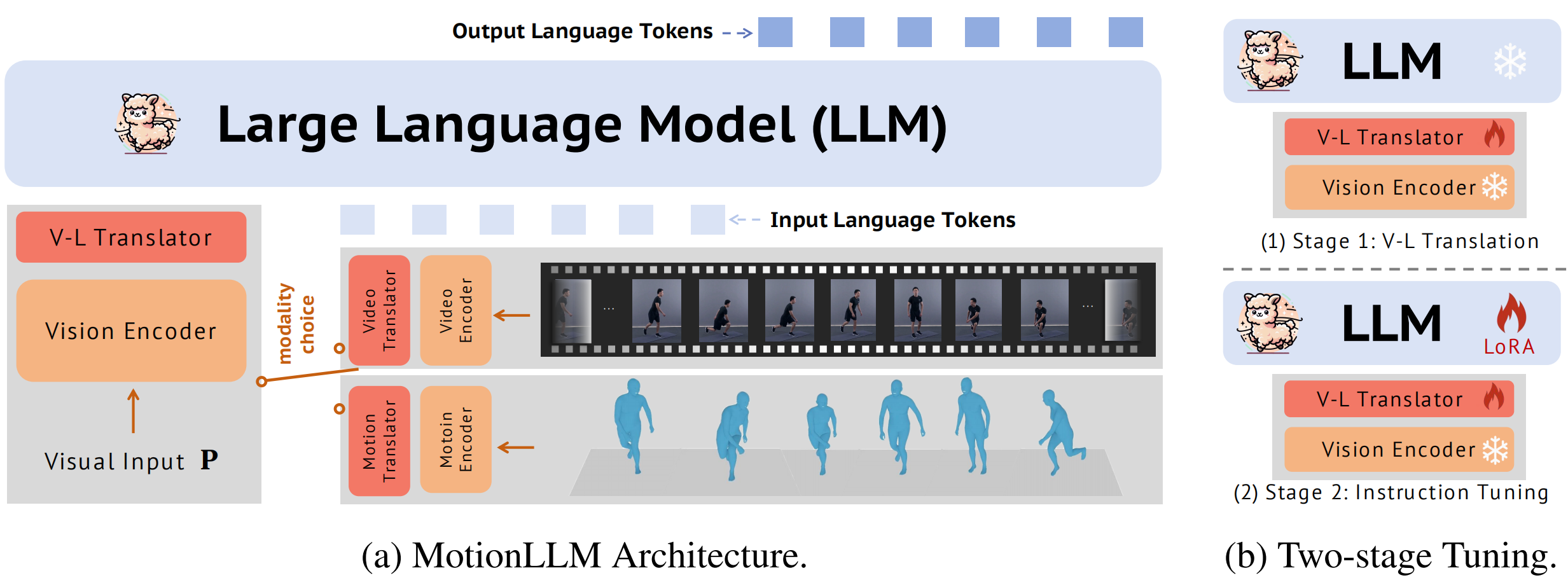

This study delves into the realm of multi-modality (i.e., video and motion modalities) human behavior understanding by leveraging the powerful capabilities of Large Language Models (LLMs). Diverging from recent LLMs designed for video-only or motion-only understanding, we argue that understanding human behavior necessitates joint modeling from both videos and motion sequences (e.g., SMPL sequences) to capture nuanced body part dynamics and semantics effectively. In light of this, we present MotionLLM, a straightforward yet effective framework for human motion understanding, captioning, and reasoning. Specifically, MotionLLM adopts a unified video-motion training strategy that leverages the complementary advantages of existing coarse video-text data and fine-grained motion-text data to glean rich spatial-temporal insights. Furthermore, we collect a substantial dataset, MoVid, comprising diverse videos, motions, captions, and instructions. Additionally, we propose the MoVid-Bench, with carefully manual annotations, for better evaluation of human behavior understanding on video and motion. Extensive experiments show the superiority of MotionLLM in the caption, spatial-temporal comprehension, and reasoning ability.

|

| 45 |

+

|

| 46 |

+

## 🤩 Highlight Applications

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

## 🔧 Technical Solution

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

## 💻 Try it

|

| 55 |

+

|

| 56 |

+

We provide a simple online [demo](https://demo.humotionx.com/) for you to try MotionLLM. Below is the guidance to deploy the demo on your local machine.

|

| 57 |

+

|

| 58 |

+

### Step 1: Set up the environment

|

| 59 |

+

|

| 60 |

+

```bash

|

| 61 |

+

pip install -r requirements.txt

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

### Step 2: Download the pre-trained model

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

<details>

|

| 68 |

+

<summary><b> 2.1 Download the LLM </b></summary>

|

| 69 |

+

|

| 70 |

+

Please follow the instruction of [Lit-GPT](https://github.com/Lightning-AI/litgpt) to prepare the LLM model (vicuna 1.5-7B). These files will be:

|

| 71 |

+

```bah

|

| 72 |

+

./checkpoints/vicuna-7b-v1.5

|

| 73 |

+

├── generation_config.json

|

| 74 |

+

├── lit_config.json

|

| 75 |

+

├── lit_model.pth

|

| 76 |

+

├── pytorch_model-00001-of-00002.bin

|

| 77 |

+

├── pytorch_model-00002-of-00002.bin

|

| 78 |

+

├── pytorch_model.bin.index.json

|

| 79 |

+

├── tokenizer_config.json

|

| 80 |

+

└── tokenizer.model

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

If you have any confusion, we will update a more detailed instruction in couple of days.

|

| 84 |

+

|

| 85 |

+

</details>

|

| 86 |

+

|

| 87 |

+

<details>

|

| 88 |

+

<summary><b> 2.2 Dowload the LoRA and the projection layer of the MotionLLM </b></summary>

|

| 89 |

+

|

| 90 |

+

We now release one versions of the MotionLLM checkpoints, namely `v1.0` (download [here](https://drive.google.com/drive/folders/1d_5vaL34Hs2z9ACcMXyPEfZNyMs36xKx?usp=sharing)). Opening for the suggestions to Ling-Hao Chen and Shunlin Lu.

|

| 91 |

+

|

| 92 |

+

```bash

|

| 93 |

+

wget xxx

|

| 94 |

+

```

|

| 95 |

+

Keep them in a folder named and remember the path (`LINEAR_V` and `LORA`).

|

| 96 |

+

|

| 97 |

+

</details>

|

| 98 |

+

|

| 99 |

+

### 2.3 Run the demo

|

| 100 |

+

|

| 101 |

+

```bash

|

| 102 |

+

GRADIO_TEMP_DIR=temp python app.py --lora_path $LORA --mlp_path $LINEAR_V

|

| 103 |

+

```

|

| 104 |

+

If you have some error in downloading the huggingface model, you can try the following command with the mirror of huggingface.

|

| 105 |

+

```bash

|

| 106 |

+

HF_ENDPOINT=https://hf-mirror.com GRADIO_TEMP_DIR=temp python app.py --lora_path $LORA --mlp_path $LINEAR_V

|

| 107 |

+

```

|

| 108 |

+

The `GRADIO_TEMP_DIR=temp` defines a temporary directory as `./temp` for the Gradio to store the data. You can change it to your own path.

|

| 109 |

+

|

| 110 |

+

After thiess, you can open the browser and visit the local host via the command line output reminder. If it is not loaded, please change the IP address as your local IP address (via command `ifconfig`).

|

| 111 |

+

|

| 112 |

+

|

| 113 |

+

## 💼 To-Do

|

| 114 |

+

|

| 115 |

+

- [x] Release the video demo of MotionLLM.

|

| 116 |

+

- [ ] Release the motion demo of MotionLLM.

|

| 117 |

+

- [ ] Release the MoVid dataset and MoVid-Bench.

|

| 118 |

+

- [ ] Release the tuning instruction of MotionLLM.

|

| 119 |

+

|

| 120 |

+

|

| 121 |

+

## 💋 Acknowledgement

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

The author team would like to deliver many thanks to many people. Qing Jiang helps a lot with some parts of manual annotation on MoVid Bench and resolves some ethics issues of MotionLLM. Jingcheng Hu provided some technical suggestions for efficient training. Shilong Liu and Bojia Zi provided some significant technical suggestions on LLM tuning. Jiale Liu, Wenhao Yang, and Chenlai Qian provided some significant suggestions for us to polish the paper. Hongyang Li helped us a lot with the figure design. Yiren Pang provided GPT API keys when our keys were temporarily out of quota. The code is on the basis of [Video-LLaVA](https://github.com/PKU-YuanGroup/Video-LLaVA), [HumanTOMATO](https://lhchen.top/HumanTOMATO/), [MotionGPT](https://github.com/qiqiApink/MotionGPT). [lit-gpt](https://github.com/Lightning-AI/litgpt), and [HumanML3D](https://github.com/EricGuo5513/HumanML3D). Thanks to all contributors!

|

| 125 |

+

|

| 126 |

+

|

| 127 |

+

## 📚 License

|

| 128 |

+

|

| 129 |

+

This code is distributed under an [IDEA LICENSE](LICENSE). Note that our code depends on other libraries and datasets which each have their own respective licenses that must also be followed.

|

| 130 |

+

|

| 131 |

+

|

| 132 |

+

If you have any question, please contact at: thu [DOT] lhchen [AT] gmail [DOT] com AND shunlinlu0803 [AT] gmail [DOT] com.

|

| 133 |

+

|

app copy.py

ADDED

|

@@ -0,0 +1,661 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import shutil

|

| 2 |

+

import subprocess

|

| 3 |

+

|

| 4 |

+

import torch

|

| 5 |

+

import gradio as gr

|

| 6 |

+

from fastapi import FastAPI

|

| 7 |

+

import os

|

| 8 |

+

from PIL import Image

|

| 9 |

+

import tempfile

|

| 10 |

+

from decord import VideoReader, cpu

|

| 11 |

+

import uvicorn

|

| 12 |

+

from transformers import TextStreamer

|

| 13 |

+

|

| 14 |

+

import hashlib

|

| 15 |

+

import os

|

| 16 |

+

import sys

|

| 17 |

+

import time

|

| 18 |

+

import warnings

|

| 19 |

+

from pathlib import Path

|

| 20 |

+

from typing import Optional

|

| 21 |

+

from typing import Dict, List, Literal, Optional, Tuple

|

| 22 |

+

from lit_gpt.lora import GPT, Block, Config, lora_filter, mark_only_lora_as_trainable

|

| 23 |

+

|

| 24 |

+

import lightning as L

|

| 25 |

+

import numpy as np

|

| 26 |

+

import torch.nn as nn

|

| 27 |

+

import torch.nn.functional as F

|

| 28 |

+

|

| 29 |

+

from generate import generate as generate_

|

| 30 |

+

from lit_llama import Tokenizer, LLaMA, LLaMAConfig

|

| 31 |

+

from lit_llama.lora import lora

|

| 32 |

+

from lit_llama.utils import EmptyInitOnDevice

|

| 33 |

+

from lit_gpt.utils import lazy_load

|

| 34 |

+

from scripts.video_dataset.prepare_video_dataset_video_llava import generate_prompt_mlp

|

| 35 |

+

from options import option

|

| 36 |

+

import imageio

|

| 37 |

+

from tqdm import tqdm

|

| 38 |

+

|

| 39 |

+

from models.multimodal_encoder.builder import build_image_tower, build_video_tower

|

| 40 |

+

from models.multimodal_projector.builder import build_vision_projector

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

title_markdown = ("""<div class="embed_hidden" style="text-align: center;">

|

| 44 |

+

<h1>MotionLLM: Understanding Human Behaviors from Human Motions and Videos</h1>

|

| 45 |

+

<h3>

|

| 46 |

+

<a href="https://lhchen.top" target="_blank" rel="noopener noreferrer">Ling-Hao Chen</a><sup>😎 1, 3</sup>,

|

| 47 |

+

<a href="https://shunlinlu.github.io" target="_blank" rel="noopener noreferrer">Shunlin Lu</a><sup>😎 2, 3</sup>,

|

| 48 |

+

<br>

|

| 49 |

+

<a href="https://ailingzeng.sit" target="_blank" rel="noopener noreferrer">Ailing Zeng</a><sup>3</sup>,

|

| 50 |

+

<a href="https://haozhang534.github.io/" target="_blank" rel="noopener noreferrer">Hao Zhang</a><sup>3, 4</sup>,

|

| 51 |

+

<a href="https://wabyking.github.io/old.html" target="_blank" rel="noopener noreferrer">Benyou Wang</a><sup>2</sup>,

|

| 52 |

+

<a href="http://zhangruimao.site" target="_blank" rel="noopener noreferrer">Ruimao Zhang</a><sup>2</sup>,

|

| 53 |

+

<a href="https://leizhang.org" target="_blank" rel="noopener noreferrer">Lei Zhang</a><sup>🤗 3</sup>

|

| 54 |

+

</h3>

|

| 55 |

+

<h3><sup>😎</sup><i>Co-first author. Listing order is random.</i>   <sup>🤗</sup><i>Corresponding author.</i></h3>

|

| 56 |

+

<h3>

|

| 57 |

+

<sup>1</sup>THU

|

| 58 |

+

<sup>2</sup>CUHK (SZ)

|

| 59 |

+

<sup>3</sup>IDEA Research

|

| 60 |

+

<sup>4</sup>HKUST

|

| 61 |

+

</h3>

|

| 62 |

+

</div>

|

| 63 |

+

<div style="display: flex; justify-content: center; align-items: center; text-align: center;">

|

| 64 |

+

<img src="https://lhchen.top/MotionLLM/assets/img/highlight.png" alt="MotionLLM" style="width:60%; height: auto; align-items: center;">

|

| 65 |

+

</div>

|

| 66 |

+

|

| 67 |

+

""")

|

| 68 |

+

|

| 69 |

+

block_css = """

|

| 70 |

+

#buttons button {

|

| 71 |

+

min-width: min(120px,100%);

|

| 72 |

+

}

|

| 73 |

+

"""

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

tos_markdown = ("""

|

| 77 |

+

*We are now working to support the motion branch of the MotionLLM model.

|

| 78 |

+

|

| 79 |

+

### Terms of use

|

| 80 |

+

By using this service, users are required to agree to the following terms:

|

| 81 |

+

The service is a research preview intended for non-commercial use only. It only provides limited safety measures and may generate offensive content.

|

| 82 |

+

It is forbidden to use the service to generate content that is illegal, harmful, violent, racist, or sexual

|

| 83 |

+

The usage of this service is subject to the IDEA License.

|

| 84 |

+

""")

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

learn_more_markdown = ("""

|

| 88 |

+

### License

|

| 89 |

+

License for Non-commercial Scientific Research Purposes

|

| 90 |

+

|

| 91 |

+

IDEA grants you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable, royalty free and limited license under IDEA’s copyright interests to reproduce, distribute, and create derivative works of the text, videos, codes solely for your non-commercial research purposes.

|

| 92 |

+

|

| 93 |

+

Any other use, in particular any use for commercial, pornographic, military, or surveillance, purposes is prohibited.

|

| 94 |

+

|

| 95 |

+

Text and visualization results are owned by International Digital Economy Academy (IDEA).

|

| 96 |

+

|

| 97 |

+

You also need to obey the original license of the dependency models/data used in this service.

|

| 98 |

+

""")

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

class LlavaMetaModel:

|

| 103 |

+

|

| 104 |

+

def __init__(self, config, pretrained_checkpoint):

|

| 105 |

+

super(LlavaMetaModel, self).__init__()

|

| 106 |

+

# import pdb; pdb.set_trace()

|

| 107 |

+

if hasattr(config, "mm_image_tower") or hasattr(config, "image_tower"):

|

| 108 |

+

self.image_tower = build_image_tower(config, delay_load=True)

|

| 109 |

+

self.mm_projector = build_vision_projector(config)

|

| 110 |

+

if hasattr(config, "mm_video_tower") or hasattr(config, "video_tower"):

|

| 111 |

+

self.video_tower = build_video_tower(config, delay_load=True)

|

| 112 |

+

self.mm_projector = build_vision_projector(config)

|

| 113 |

+

self.load_video_tower_pretrained(pretrained_checkpoint)

|

| 114 |

+

|

| 115 |

+

def get_image_tower(self):

|

| 116 |

+

image_tower = getattr(self, 'image_tower', None)

|

| 117 |

+

if type(image_tower) is list:

|

| 118 |

+

image_tower = image_tower[0]

|

| 119 |

+

return image_tower

|

| 120 |

+

|

| 121 |

+

def get_video_tower(self):

|

| 122 |

+

video_tower = getattr(self, 'video_tower', None)

|

| 123 |

+

|

| 124 |

+

if type(video_tower) is list:

|

| 125 |

+

video_tower = video_tower[0]

|

| 126 |

+

return video_tower

|

| 127 |

+

|

| 128 |

+

|

| 129 |

+

def get_all_tower(self, keys):

|

| 130 |

+

tower = {key: getattr(self, f'get_{key}_tower') for key in keys}

|

| 131 |

+

return tower

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

def load_video_tower_pretrained(self, pretrained_checkpoint):

|

| 135 |

+

self.mm_projector.load_state_dict(pretrained_checkpoint, strict=True)

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

def initialize_image_modules(self, model_args, fsdp=None):

|

| 139 |

+

image_tower = model_args.image_tower

|

| 140 |

+

mm_vision_select_layer = model_args.mm_vision_select_layer

|

| 141 |

+

mm_vision_select_feature = model_args.mm_vision_select_feature

|

| 142 |

+

pretrain_mm_mlp_adapter = model_args.pretrain_mm_mlp_adapter

|

| 143 |

+

|

| 144 |

+

self.config.mm_image_tower = image_tower

|

| 145 |

+

|

| 146 |

+

image_tower = build_image_tower(model_args)

|

| 147 |

+

|

| 148 |

+

if fsdp is not None and len(fsdp) > 0:

|

| 149 |

+

self.image_tower = [image_tower]

|

| 150 |

+

else:

|

| 151 |

+

self.image_tower = image_tower

|

| 152 |

+

|

| 153 |

+

self.config.use_mm_proj = True

|

| 154 |

+

self.config.mm_projector_type = getattr(model_args, 'mm_projector_type', 'linear')

|

| 155 |

+

self.config.mm_hidden_size = image_tower.hidden_size

|

| 156 |

+

self.config.mm_vision_select_layer = mm_vision_select_layer

|

| 157 |

+

self.config.mm_vision_select_feature = mm_vision_select_feature

|

| 158 |

+

|

| 159 |

+

self.mm_projector = build_vision_projector(self.config)

|

| 160 |

+

|

| 161 |

+

if pretrain_mm_mlp_adapter is not None:

|

| 162 |

+

mm_projector_weights = torch.load(pretrain_mm_mlp_adapter, map_location='cpu')

|

| 163 |

+

def get_w(weights, keyword):

|

| 164 |

+

return {k.split(keyword + '.')[1]: v for k, v in weights.items() if keyword in k}

|

| 165 |

+

|

| 166 |

+

self.mm_projector.load_state_dict(get_w(mm_projector_weights, 'mm_projector'))

|

| 167 |

+

|

| 168 |

+

def initialize_video_modules(self, model_args, fsdp=None):

|

| 169 |

+

video_tower = model_args.video_tower

|

| 170 |

+

mm_vision_select_layer = model_args.mm_vision_select_layer

|

| 171 |

+

mm_vision_select_feature = model_args.mm_vision_select_feature

|

| 172 |

+

pretrain_mm_mlp_adapter = model_args.pretrain_mm_mlp_adapter

|

| 173 |

+

|

| 174 |

+

self.config.mm_video_tower = video_tower

|

| 175 |

+

|

| 176 |

+

video_tower = build_video_tower(model_args)

|

| 177 |

+

|

| 178 |

+

if fsdp is not None and len(fsdp) > 0:

|

| 179 |

+

self.video_tower = [video_tower]

|

| 180 |

+

else:

|

| 181 |

+

self.video_tower = video_tower

|

| 182 |

+

|

| 183 |

+

self.config.use_mm_proj = True

|

| 184 |

+

self.config.mm_projector_type = getattr(model_args, 'mm_projector_type', 'linear')

|

| 185 |

+

self.config.mm_hidden_size = video_tower.hidden_size

|

| 186 |

+

self.config.mm_vision_select_layer = mm_vision_select_layer

|

| 187 |

+

self.config.mm_vision_select_feature = mm_vision_select_feature

|

| 188 |

+

|

| 189 |

+

self.mm_projector = build_vision_projector(self.config)

|

| 190 |

+

|

| 191 |

+

if pretrain_mm_mlp_adapter is not None:

|

| 192 |

+

mm_projector_weights = torch.load(pretrain_mm_mlp_adapter, map_location='cpu')

|

| 193 |

+

def get_w(weights, keyword):

|

| 194 |

+

return {k.split(keyword + '.')[1]: v for k, v in weights.items() if keyword in k}

|

| 195 |

+

|

| 196 |

+

self.mm_projector.load_state_dict(get_w(mm_projector_weights, 'mm_projector'))

|

| 197 |

+

|

| 198 |

+

def encode_images(self, images):

|

| 199 |

+

image_features = self.get_image_tower()(images)

|

| 200 |

+

image_features = self.mm_projector(image_features)

|

| 201 |

+

return image_features

|

| 202 |

+

|

| 203 |

+

def encode_videos(self, videos):

|

| 204 |

+

# import pdb; pdb.set_trace()

|

| 205 |

+

# videos: torch.Size([1, 3, 8, 224, 224])

|

| 206 |

+

video_features = self.get_video_tower()(videos) # torch.Size([1, 2048, 1024])

|

| 207 |

+

video_features = self.mm_projector(video_features.float()) # torch.Size([1, 2048, 4096])

|

| 208 |

+

return video_features

|

| 209 |

+

|

| 210 |

+

def get_multimodal_embeddings(self, X_modalities):

|

| 211 |

+

Xs, keys= X_modalities

|

| 212 |

+

|

| 213 |

+

X_features = getattr(self, f'encode_{keys[0]}s')(Xs) # expand to get batchsize

|

| 214 |

+

|

| 215 |

+

return X_features

|

| 216 |

+

|

| 217 |

+

|

| 218 |

+

class Projection(nn.Module):

|

| 219 |

+

def __init__(self, ):

|

| 220 |

+

super().__init__()

|

| 221 |

+

self.linear_proj = nn.Linear(512, 4096)

|

| 222 |

+

def forward(self, x):

|

| 223 |

+

return self.linear_proj(x)

|

| 224 |

+

|

| 225 |

+

|

| 226 |

+

class ProjectionNN(nn.Module):

|

| 227 |

+

def __init__(self, ):

|

| 228 |

+

super().__init__()

|

| 229 |

+

self.proj = nn.Sequential(

|

| 230 |

+

nn.Linear(512, 4096),

|

| 231 |

+

nn.GELU(),

|

| 232 |

+

nn.Linear(4096, 4096)

|

| 233 |

+

)

|

| 234 |

+

def forward(self, x):

|

| 235 |

+

return self.proj(x)

|

| 236 |

+

|

| 237 |

+

|

| 238 |

+

class Conversation():

|

| 239 |

+

def __init__(self, output=None, input_prompt=None, prompt=None):

|

| 240 |

+

if output is None:

|

| 241 |

+

self.messages = []

|

| 242 |

+

else:

|

| 243 |

+

self.messages = []

|

| 244 |

+

self.append_message(prompt, input_prompt, output)

|

| 245 |

+

|

| 246 |

+

def append_message(self, output, input_prompt, prompt, show_images):

|

| 247 |

+

# print(output)

|

| 248 |

+

# print(input_prompt)

|

| 249 |

+

# print(prompt)

|

| 250 |

+

# print(show_images)

|

| 251 |

+

self.messages.append((output, input_prompt, prompt, show_images))

|

| 252 |

+

|

| 253 |

+

def to_gradio_chatbot(self, show_images=None, output_text=None):

|

| 254 |

+

# return a list

|

| 255 |

+

if show_images is None:

|

| 256 |

+

show_images = self.messages[-1][3]

|

| 257 |

+

output_text = self.messages[-1][0]

|

| 258 |

+

return [

|

| 259 |

+

[show_images, output_text]

|

| 260 |

+

]

|

| 261 |

+

|

| 262 |

+

def get_info(self):

|

| 263 |

+

return self.messages[-1][0], self.messages[-1][1]

|

| 264 |

+

|

| 265 |

+

|

| 266 |

+

class ConversationBuffer():

|

| 267 |

+

def __init__(self, input_text):

|

| 268 |

+

self.buffer_ = []

|

| 269 |

+

self.buffer.append(input_text)

|

| 270 |

+

|

| 271 |

+

|

| 272 |

+

def init_conv():

|

| 273 |

+

conv = Conversation()

|

| 274 |

+

return conv

|

| 275 |

+

|

| 276 |

+

|

| 277 |

+

def get_processor(X, config, device, pretrained_checkpoint_tower, model_path = 'LanguageBind/MotionLLM-7B'):

|

| 278 |

+

mm_backbone_mlp_model = LlavaMetaModel(config, pretrained_checkpoint_tower)

|

| 279 |

+

|

| 280 |

+

processor = {}

|

| 281 |

+

if 'Image' in X:

|

| 282 |

+

image_tower = mm_backbone_mlp_model.get_image_tower() # LanguageBindImageTower()

|

| 283 |

+

if not image_tower.is_loaded:

|

| 284 |

+

image_tower.load_model()

|

| 285 |

+

image_tower.to(device=device, dtype=torch.float16)

|

| 286 |

+

image_processor = image_tower.image_processor

|

| 287 |

+

processor['image'] = image_processor

|

| 288 |

+

if 'Video' in X:

|

| 289 |

+

video_tower = mm_backbone_mlp_model.get_video_tower()

|

| 290 |

+

if not video_tower.is_loaded:

|

| 291 |

+

video_tower.load_model()

|

| 292 |

+

video_tower.to(device=device, dtype=torch.float16)

|

| 293 |

+

video_processor = video_tower.video_processor

|

| 294 |

+

processor['video'] = video_processor

|

| 295 |

+

|

| 296 |

+

return mm_backbone_mlp_model, processor

|

| 297 |

+

|

| 298 |

+

|

| 299 |

+

def motionllm(

|

| 300 |

+

args,

|

| 301 |

+

input_video_path: str,

|

| 302 |

+

text_en_in: str,

|

| 303 |

+

quantize: Optional[str] = None,

|

| 304 |

+

dtype: str = "float32",

|

| 305 |

+

max_new_tokens: int = 200,

|

| 306 |

+

top_k: int = 200,

|

| 307 |

+

temperature: float = 0.8,

|

| 308 |

+

accelerator: str = "auto",):

|

| 309 |

+

|

| 310 |

+

video_tensor = video_processor(input_video_path, return_tensors='pt')['pixel_values']

|

| 311 |

+

|

| 312 |

+

if type(video_tensor) is list:

|

| 313 |

+

tensor = [video.to('cuda', dtype=torch.float16) for video in video_tensor]

|

| 314 |

+

else:

|

| 315 |

+

tensor = video_tensor.to('cuda', dtype=torch.float16) # (1,3,8,224,224)

|

| 316 |

+

|

| 317 |

+

X_modalities = [tensor,['video']]

|

| 318 |

+

video_feature = mm_backbone_mlp_model.get_multimodal_embeddings(X_modalities)

|

| 319 |

+

prompt = text_en_in

|

| 320 |

+

input_prompt = prompt

|

| 321 |

+

|

| 322 |

+

sample = {"instruction": prompt, "input": input_video_path}

|

| 323 |

+

|

| 324 |

+

prefix = generate_prompt_mlp(sample)

|

| 325 |

+

pre = torch.cat((tokenizer.encode(prefix.split('INPUT_VIDEO: ')[0] + "\n", bos=True, eos=False, device=model.device).view(1, -1), tokenizer.encode("INPUT_VIDEO: ", bos=False, eos=False, device=model.device).view(1, -1)), dim=1)

|

| 326 |

+

|

| 327 |

+

prompt = (pre, ". ASSISTANT: ")

|

| 328 |

+

encoded = (prompt[0], video_feature[0], tokenizer.encode(prompt[1], bos=False, eos=False, device=model.device).view(1, -1))

|

| 329 |

+

|

| 330 |

+

t0 = time.perf_counter()

|

| 331 |

+

|

| 332 |

+

output_seq = generate_(

|

| 333 |

+

model,

|

| 334 |

+

idx=encoded,

|

| 335 |

+

max_seq_length=4096,

|

| 336 |

+

max_new_tokens=max_new_tokens,

|

| 337 |

+

temperature=temperature,

|

| 338 |

+

top_k=top_k,

|

| 339 |

+

eos_id=tokenizer.eos_id,

|

| 340 |

+

tokenizer = tokenizer,

|

| 341 |

+

)

|

| 342 |

+

outputfull = tokenizer.decode(output_seq)

|

| 343 |

+

output = outputfull.split("ASSISTANT:")[-1].strip()

|

| 344 |

+

print("================================")

|

| 345 |

+

print(output)

|

| 346 |

+

print("================================")

|

| 347 |

+

|

| 348 |

+

return output, input_prompt, prompt

|

| 349 |

+

|

| 350 |

+

|

| 351 |

+

def save_image_to_local(image):

|

| 352 |

+

filename = os.path.join('temp', next(tempfile._get_candidate_names()) + '.jpg')

|

| 353 |

+

image = Image.open(image)

|

| 354 |

+

image.save(filename)

|

| 355 |

+

# print(filename)

|

| 356 |

+

return filename

|

| 357 |

+

|

| 358 |

+

|

| 359 |

+

def save_video_to_local(video_path):

|

| 360 |

+

filename = os.path.join('temp', next(tempfile._get_candidate_names()) + '.mp4')

|

| 361 |

+

shutil.copyfile(video_path, filename)

|

| 362 |

+

return filename

|

| 363 |

+

|

| 364 |

+

|

| 365 |

+

def generate(image1, video, textbox_in, first_run, state, images_tensor):

|

| 366 |

+

flag = 1

|

| 367 |

+

|

| 368 |

+

image1 = image1 if image1 else "none"

|

| 369 |

+

video = video if video else "none"

|

| 370 |

+

|

| 371 |

+

if type(state) is not Conversation:

|

| 372 |

+

state = init_conv()

|

| 373 |

+

images_tensor = [[], []]

|

| 374 |

+

|

| 375 |

+

first_run = False if len(state.messages) > 0 else True

|

| 376 |

+

text_en_in = textbox_in.replace("picture", "image")

|

| 377 |

+

output, input_prompt, prompt = motionllm(args, video, text_en_in)

|

| 378 |

+

|

| 379 |

+

text_en_out = output

|

| 380 |

+

textbox_out = text_en_out

|

| 381 |

+

|

| 382 |

+

show_images = ""

|

| 383 |

+

if os.path.exists(image1):

|

| 384 |

+

filename = save_image_to_local(image1)

|

| 385 |

+

show_images += f'<img src="./file={filename}" style="display: inline-block;width: 250px;max-height: 400px;">'

|

| 386 |

+

|

| 387 |

+

if os.path.exists(video):

|

| 388 |

+

filename = save_video_to_local(video)

|

| 389 |

+

show_images += f'<video controls playsinline width="500" style="display: inline-block;" src="./file={filename}"></video>'

|

| 390 |

+

|

| 391 |

+

show_images = textbox_in + "\n" + show_images

|

| 392 |

+

state.append_message(output, input_prompt, prompt, show_images)

|

| 393 |

+

|

| 394 |

+

torch.cuda.empty_cache()

|

| 395 |

+

|

| 396 |

+

return (state, state.to_gradio_chatbot(show_images, output), False, gr.update(value=None, interactive=True), images_tensor, gr.update(value=image1 if os.path.exists(image1) else None, interactive=True), gr.update(value=video if os.path.exists(video) else None, interactive=True))

|

| 397 |

+

|

| 398 |

+

def regenerate(state):

|

| 399 |

+

if len(state.messages) > 0:

|

| 400 |

+

tobot = state.to_gradio_chatbot()

|

| 401 |

+

tobot[-1][1] = None

|

| 402 |

+

textbox = state.messages[-1][1]

|

| 403 |

+

state.messages.pop(-1)

|

| 404 |

+

return state, tobot, False, textbox

|

| 405 |

+

return (state, [], True)

|

| 406 |

+

|

| 407 |

+

|

| 408 |

+

def clear_history(state):

|

| 409 |

+

state = init_conv()

|

| 410 |

+

try:

|

| 411 |

+

tgt = state.to_gradio_chatbot()

|

| 412 |

+

except:

|

| 413 |

+

tgt = [None, None]

|

| 414 |

+

return (gr.update(value=None, interactive=True),

|

| 415 |

+

gr.update(value=None, interactive=True),\

|

| 416 |

+

gr.update(value=None, interactive=True),\

|

| 417 |

+

True, state, tgt, [[], []])

|

| 418 |

+

|

| 419 |

+

|

| 420 |

+

def get_md5(file_path):

|

| 421 |

+

hash_md5 = hashlib.md5()

|

| 422 |

+

with open(file_path, "rb") as f:

|

| 423 |

+

for chunk in iter(lambda: f.read(4096), b""):

|

| 424 |

+

hash_md5.update(chunk)

|

| 425 |

+

return hash_md5.hexdigest()

|

| 426 |

+

|

| 427 |

+

|

| 428 |

+

def logging_up(video, state):

|

| 429 |

+

try:

|

| 430 |

+

state.get_info()

|

| 431 |

+

except:

|

| 432 |

+

return False

|

| 433 |

+

action = "upvote"

|

| 434 |

+

# Get the current time

|

| 435 |

+

current_time = str(time.time())

|

| 436 |

+

|

| 437 |

+

# Create an md5 object

|

| 438 |

+

hash_object = hashlib.md5(current_time.encode())

|

| 439 |

+

|

| 440 |

+

# Get the hexadecimal representation of the hash

|

| 441 |

+

md5_hash = get_md5(video) + "-" + hash_object.hexdigest()

|

| 442 |

+

|

| 443 |

+

command = f"cp {video} ./feedback/{action}/mp4/{md5_hash}.mp4"

|

| 444 |

+

os.system(command)

|

| 445 |

+

with open (f"./feedback/{action}/txt/{md5_hash}.txt", "w") as f:

|

| 446 |

+

out, prp = state.get_info()

|

| 447 |

+

f.write(f"==========\nPrompt: {prp}\n==========\nOutput: {out}==========\n")

|

| 448 |

+

return True

|

| 449 |

+

|

| 450 |

+

|

| 451 |

+

def logging_down(video, state):

|

| 452 |

+

try:

|

| 453 |

+

state.get_info()

|

| 454 |

+

except:

|

| 455 |

+

return False

|

| 456 |

+

action = "downvote"

|

| 457 |

+

# Get the current time

|

| 458 |

+

current_time = str(time.time())

|

| 459 |

+

|

| 460 |

+

# Create an md5 object

|

| 461 |

+

hash_object = hashlib.md5(current_time.encode())

|

| 462 |

+

|

| 463 |

+

# Get the hexadecimal representation of the hash

|

| 464 |

+

md5_hash = get_md5(video) + "-" + hash_object.hexdigest()

|

| 465 |

+

|

| 466 |

+

command = f"cp {video} ./feedback/{action}/mp4/{md5_hash}.mp4"

|

| 467 |

+

os.system(command)

|

| 468 |

+

with open (f"./feedback/{action}/txt/{md5_hash}.txt", "w") as f:

|

| 469 |

+

out, prp = state.get_info()

|

| 470 |

+

f.write(f"==========\nPrompt: {prp}\n==========\nOutput: {out}==========\n")

|

| 471 |

+

return True

|

| 472 |

+

|

| 473 |

+

|

| 474 |

+

torch.set_float32_matmul_precision("high")

|

| 475 |

+

warnings.filterwarnings('ignore')

|

| 476 |

+

args = option.get_args_parser()

|

| 477 |

+

|

| 478 |

+

conv_mode = "llava_v1"

|

| 479 |

+

model_path = 'LanguageBind/Video-LLaVA-7B'

|

| 480 |

+

device = 'cuda'

|

| 481 |

+

load_8bit = False

|

| 482 |

+

load_4bit = True

|

| 483 |

+

dtype = torch.float16

|

| 484 |

+

|

| 485 |

+

if not os.path.exists("temp"):

|

| 486 |

+

os.makedirs("temp")

|

| 487 |

+

|

| 488 |

+

lora_path = Path(args.lora_path)

|

| 489 |

+

pretrained_llm_path = Path(f"./checkpoints/vicuna-7b-v1.5/lit_model.pth")

|

| 490 |

+

tokenizer_llm_path = Path("./checkpoints/vicuna-7b-v1.5/tokenizer.model")

|

| 491 |

+

|

| 492 |

+

# assert lora_path.is_file()

|

| 493 |

+

assert pretrained_llm_path.is_file()

|

| 494 |

+

assert tokenizer_llm_path.is_file()

|

| 495 |

+

|

| 496 |

+

accelerator = "auto"

|

| 497 |

+

fabric = L.Fabric(accelerator=accelerator, devices=1)

|

| 498 |

+

|

| 499 |

+

dtype = "float32"

|

| 500 |

+

dt = getattr(torch, dtype, None)

|

| 501 |

+

if not isinstance(dt, torch.dtype):

|

| 502 |

+

raise ValueError(f"{dtype} is not a valid dtype.")

|

| 503 |

+

dtype = dt

|

| 504 |

+

|

| 505 |

+

quantize = None

|

| 506 |

+

t0 = time.time()

|

| 507 |

+

|

| 508 |

+

with EmptyInitOnDevice(

|

| 509 |

+

device=fabric.device, dtype=dtype, quantization_mode=quantize

|

| 510 |

+

), lora(r=args.lora_r, alpha=args.lora_alpha, dropout=args.lora_dropout, enabled=True):

|

| 511 |

+

checkpoint_dir = Path("checkpoints/vicuna-7b-v1.5")

|

| 512 |

+

lora_query = True

|

| 513 |

+

lora_key = False

|

| 514 |

+

lora_value = True

|

| 515 |

+

lora_projection = False

|

| 516 |

+

lora_mlp = False

|

| 517 |

+

lora_head = False

|

| 518 |

+

config = Config.from_name(

|

| 519 |

+

name=checkpoint_dir.name,

|

| 520 |

+

r=args.lora_r,

|

| 521 |

+

alpha=args.lora_alpha,

|

| 522 |

+

dropout=args.lora_dropout,

|

| 523 |

+

to_query=lora_query,

|

| 524 |

+

to_key=lora_key,

|

| 525 |

+

to_value=lora_value,

|

| 526 |

+

to_projection=lora_projection,

|

| 527 |

+

to_mlp=lora_mlp,

|

| 528 |

+

to_head=lora_head,

|

| 529 |

+

)

|

| 530 |

+

model = GPT(config).bfloat16()

|

| 531 |

+

|

| 532 |

+

mlp_path = args.mlp_path

|

| 533 |

+

pretrained_checkpoint_mlp = torch.load(mlp_path)

|

| 534 |

+

|

| 535 |

+

X = ['Video']

|

| 536 |

+

|

| 537 |

+

mm_backbone_mlp_model, processor = get_processor(X, args, 'cuda', pretrained_checkpoint_mlp, model_path = 'LanguageBind/Video-LLaVA-7B')

|

| 538 |

+

video_processor = processor['video']

|

| 539 |

+

|

| 540 |

+

linear_proj = mm_backbone_mlp_model.mm_projector

|

| 541 |

+

|

| 542 |

+

# 1. Load the pretrained weights

|

| 543 |

+

pretrained_llm_checkpoint = lazy_load(pretrained_llm_path)

|

| 544 |

+

# 2. Load the fine-tuned LoRA weights

|

| 545 |

+

lora_checkpoint = lazy_load(lora_path)

|

| 546 |

+

# 3. merge the two checkpoints

|

| 547 |

+

model_state_dict = {**pretrained_llm_checkpoint, **lora_checkpoint}

|

| 548 |

+

model.load_state_dict(model_state_dict, strict=True)

|

| 549 |

+

print('Load llm base model from', pretrained_llm_path)

|

| 550 |

+

print('Load lora model from', lora_path)

|

| 551 |

+

|

| 552 |

+

# load mlp again, to en sure, not neccessary actually

|

| 553 |

+

linear_proj.load_state_dict(pretrained_checkpoint_mlp)

|

| 554 |

+

linear_proj = linear_proj.cuda()

|

| 555 |

+

print('Load mlp model again from', mlp_path)

|

| 556 |

+

print(f"Time to load model: {time.time() - t0:.02f} seconds.", file=sys.stderr)

|

| 557 |

+

|

| 558 |

+

model.eval()

|

| 559 |

+

model = fabric.setup_module(model)

|

| 560 |

+

linear_proj.eval()

|

| 561 |

+

|

| 562 |

+

tokenizer = Tokenizer(tokenizer_llm_path)

|

| 563 |

+

print('Load tokenizer from', tokenizer_llm_path)

|

| 564 |

+

|

| 565 |

+

print(torch.cuda.memory_allocated())

|

| 566 |

+

print(torch.cuda.max_memory_allocated())

|

| 567 |

+

|

| 568 |

+

|

| 569 |

+

app = FastAPI()

|

| 570 |

+

|

| 571 |

+

textbox = gr.Textbox(

|

| 572 |

+

show_label=False, placeholder="Enter text and press ENTER", container=False

|

| 573 |

+

)

|

| 574 |

+

|

| 575 |

+

with gr.Blocks(title='MotionLLM', theme=gr.themes.Default(), css=block_css) as demo:

|

| 576 |

+

gr.Markdown(title_markdown)

|

| 577 |

+

state = gr.State()

|

| 578 |

+

buffer_ = gr.State()

|

| 579 |

+

first_run = gr.State()

|

| 580 |

+

images_tensor = gr.State()

|

| 581 |

+

|

| 582 |

+

with gr.Row():

|

| 583 |

+

with gr.Column(scale=3):

|

| 584 |

+

image1 = gr.State()

|

| 585 |

+

video = gr.Video(label="Input Video")

|

| 586 |

+

|

| 587 |

+

cur_dir = os.path.dirname(os.path.abspath(__file__))

|

| 588 |

+

gr.Examples(

|

| 589 |

+

examples=[

|

| 590 |

+

[

|

| 591 |

+

f"{cur_dir}/examples/Play_Electric_guitar_16_clip1.mp4",

|

| 592 |

+

"why is the girl so happy",

|

| 593 |

+

],

|

| 594 |

+

[

|

| 595 |

+

f"{cur_dir}/examples/guoyoucai.mov",

|

| 596 |

+

"what is the feeling of him",

|

| 597 |

+

],

|

| 598 |

+

[

|

| 599 |

+

f"{cur_dir}/examples/sprint_run_18_clip1.mp4",

|

| 600 |

+

"Why is the man running so fast?",

|

| 601 |

+

],

|

| 602 |

+

[

|

| 603 |

+

f"{cur_dir}/examples/lift_weight.mp4",

|

| 604 |

+

"Assume you are a fitness coach, refer to the video of the professional athlete, please analyze specific action essentials in steps and give detailed instruction.",

|

| 605 |

+

],

|

| 606 |

+

[

|

| 607 |

+

f"{cur_dir}/examples/Shaolin_Kung_Fu_Wushu_Selfdefense_Sword_Form_Session_22_clip3.mp4",

|

| 608 |

+

"wow, can you teach me the motion, step by step in detail",

|

| 609 |

+

],

|

| 610 |

+

[

|

| 611 |

+

f"{cur_dir}/examples/mabaoguo.mp4",

|

| 612 |

+

"why is the video funny?",

|

| 613 |

+

],

|

| 614 |

+

[

|

| 615 |

+

f"{cur_dir}/examples/COBRA_PUSH_UPS_clip2.mp4",

|

| 616 |

+

"describe the body movement of the woman",

|

| 617 |

+

],

|

| 618 |

+

[

|

| 619 |

+

f"{cur_dir}/examples/sample_demo_1.mp4",

|

| 620 |

+

"Why is this video interesting?",

|

| 621 |

+

],

|

| 622 |

+

],

|

| 623 |

+

inputs=[video, textbox],

|

| 624 |

+

)