Upload 7 files

- .gitattributes +1 -0

- app.py +57 -0

- requirements.txt +68 -0

- resources/DF_FINAL.csv +3 -0

- resources/corpus_embeddings_rub.pth +3 -0

- resources/functions.py +40 -0

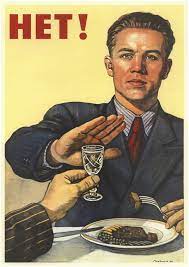

- resources/img.jpeg +0 -0

- resources/parcing.ipynb +0 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+resources/DF_FINAL.csv filter=lfs diff=lfs merge=lfs -text
```
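The added line tells Git to store `resources/DF_FINAL.csv` in Git LFS. Which attributes apply to a file is decided by matching its path against these patterns. The sketch below approximates that matching with Python's `fnmatch`. It is only an approximation of gitattributes semantics: it has no `**`, negation or per-directory handling. The helper names `matches` and `uses_lfs` are hypothetical, and the pattern list contains only the lines visible in the hunk.

```python
import fnmatch
import posixpath

# Only the patterns visible in the .gitattributes hunk above
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "resources/DF_FINAL.csv",
]


def matches(pattern: str, path: str) -> bool:
    """Rough approximation of gitattributes matching (not the full spec)."""
    if "/" not in pattern:
        # Slash-free patterns are compared with the file's basename at any depth
        return fnmatch.fnmatchcase(posixpath.basename(path), pattern)
    # Patterns containing a slash are compared with the repo-relative path
    return fnmatch.fnmatchcase(path, pattern)


def uses_lfs(path: str) -> bool:
    return any(matches(p, path) for p in LFS_PATTERNS)


print(uses_lfs("resources/DF_FINAL.csv"))      # True
print(uses_lfs("logs/events.out.tfevents.1"))  # True
print(uses_lfs("app.py"))                      # False
```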

app.py

ADDED

@@ -0,0 +1,57 @@

```python
import streamlit as st
import pandas as pd
import torch

from resources.functions import recommend

# Page title: "Dumb movie search"
st.markdown("<h1 style='text-align: center;'>Глупый поиск фильмов</h1>", unsafe_allow_html=True)

# Movie metadata and precomputed corpus embeddings (both stored in Git LFS).
# map_location='cpu' lets tensors saved on a GPU load on a CPU-only machine.
df = pd.read_csv('resources/DF_FINAL.csv')
emb = torch.load('resources/corpus_embeddings_rub.pth', map_location='cpu')

# "Number of movies available for search: N"
st.write(f'<p style="text-align: center; font-family: Arial, sans-serif; font-size: 20px; color: white;">Количество фильмов \
для поиска {len(df)}</p>', unsafe_allow_html=True)

# Genre filter (disabled). If re-enabled, note that all_genres[1:] drops an
# arbitrary element, because the order of a set is not defined.
# genre_lists = df['ganres'].apply(lambda x: x.split(', ') if isinstance(x, str) else [])
# all_genres = list(set([genre for sublist in genre_lists for genre in sublist]))
# unique_genres = sorted(all_genres[1:])

st.header(':wrench: Панель инструментов')  # "Toolbar"
# choice_g = st.multiselect("Выберите жанры", options=unique_genres)  # "Choose genres"
top_k = st.selectbox("Сколько фильмов предложить?", options=[5, 10, 15, 20])  # "How many movies to suggest?"

text = st.text_input('Что будем искать?')  # "What are we looking for?"
button = st.button('Начать поиск', type="primary")  # "Start search"

if text and button:
    # if len(choice_g) == 0:
    #     choice_g = all_genres
    # filt_ind = filter(df, choice_g)
    hits = recommend(text, emb, top_k)
    # "Total recommendations found: N"
    st.write(f'<p style="font-family: Arial, sans-serif; font-size: 24px; color: blue; font-weight: bold;"><strong>Всего подобранных \
рекомендаций {len(hits[0])}</strong></p>', unsafe_allow_html=True)
    st.write('\n')

    # Loop over the hits actually returned; range(top_k) raises IndexError
    # whenever fewer than top_k hits come back.
    for hit in hits[0]:
        idx = hit['corpus_id']  # row of the matched movie in df
        col1, col2 = st.columns([3, 4])
        with col1:
            try:
                st.image(df['poster'][idx], width=300)
            except Exception:
                # Placeholder image when the poster entry cannot be displayed (e.g. missing)
                st.image('https://cdnn11.img.sputnik.by/img/104126/36/1041263627_235:441:1472:1802_1920x0_80_0_0_fc2acc893b618b7c650d661fafe178b8.jpg', width=300)
        with col2:
            st.write(f"***Название:*** {df['title'][idx]}")  # Title
            st.write(f"***Жанр:*** {df['ganres'][idx]}")  # Genre
            st.write(f"***Описание:*** {df['description'][idx]}")  # Description
            st.write(f"***Год:*** {df['year'][idx]}")  # Year
            st.write(f"***Актерский состав:*** {df['cast'][idx]}")  # Cast
            st.write(f"***Косинусное сходство:*** {round(hit['score'], 2)}")  # Cosine similarity
            st.write(f"***Ссылка на фильм : {df['url'][idx]}***")  # Link to the movie

        # Horizontal rule between results
        st.markdown(
            "<hr style='border: 2px solid #000; margin-top: 10px; margin-bottom: 10px;'>",
            unsafe_allow_html=True
        )
```
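The genre multiselect in `app.py` is commented out. If it were re-enabled, the option list and the filter could work roughly as sketched below. This is plain Python that, like the commented code, assumes `ganres` cells are comma-separated strings or NaN. The list `cells` stands in for the DataFrame column, and the helper names are hypothetical. Instead of `sorted(all_genres[1:])`, which drops whichever element the unordered set happens to yield first, it filters out empty strings explicitly.

```python
def split_genres(value) -> list[str]:
    """Split a 'ganres' cell such as 'драма, комедия'; non-strings (e.g. NaN) give []."""
    if not isinstance(value, str):
        return []
    return [g.strip() for g in value.split(",") if g.strip()]


def unique_genres(cells) -> list[str]:
    # A set removes duplicates; sorting afterwards gives a stable option list
    return sorted({g for cell in cells for g in split_genres(cell)})


def filter_indices(cells, chosen: list[str]) -> list[int]:
    """Positions of rows sharing at least one genre with `chosen` (all rows if empty)."""
    wanted = set(chosen)
    return [i for i, cell in enumerate(cells)
            if not wanted or wanted.intersection(split_genres(cell))]


cells = ["драма, комедия", float("nan"), "комедия", "ужасы"]
print(unique_genres(cells))                # ['драма', 'комедия', 'ужасы']
print(filter_indices(cells, ["комедия"]))  # [0, 2]
```

Restricting the semantic search itself would also require work in the app. One option is to slice the embedding tensor with these positions before calling `recommend`, then map each returned `corpus_id` back through the same position list.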

requirements.txt

ADDED

@@ -0,0 +1,68 @@

```text
altair==5.2.0
attrs==23.2.0
blinker==1.7.0
cachetools==5.3.2
certifi==2024.2.2
charset-normalizer==3.3.2
click==8.1.7
DAWG-Python==0.7.2
docopt==0.6.2
filelock==3.13.1
fsspec==2024.2.0
gitdb==4.0.11
GitPython==3.1.41
huggingface-hub==0.20.3
idna==3.6
importlib-metadata==7.0.1
Jinja2==3.1.3
joblib==1.3.2
jsonschema==4.21.1
jsonschema-specifications==2023.12.1
markdown-it-py==3.0.0
MarkupSafe==2.1.5
mdurl==0.1.2
mpmath==1.3.0
networkx==3.2.1
nltk==3.8.1
numpy==1.26.4
packaging==23.2
pandas==2.2.0
pillow==10.2.0
protobuf==4.25.2
pyarrow==15.0.0
pydeck==0.8.1b0
Pygments==2.17.2
pymorphy2==0.9.1
pymorphy2-dicts-ru==2.4.417127.4579844
python-dateutil==2.8.2
pytz==2024.1
PyYAML==6.0.1
referencing==0.33.0
regex==2023.12.25
requests==2.31.0
rich==13.7.0
rpds-py==0.17.1
safetensors==0.4.2
scikit-learn==1.4.0
scipy==1.12.0
sentence-transformers==2.3.1
sentencepiece==0.1.99
six==1.16.0
smmap==5.0.1
streamlit==1.31.0
sympy==1.12
tenacity==8.2.3
threadpoolctl==3.2.0
tokenizers==0.15.1
toml==0.10.2
toolz==0.12.1
torch==2.2.0
tornado==6.4
tqdm==4.66.1
transformers==4.37.2
typing_extensions==4.9.0
tzdata==2023.4
tzlocal==5.2
urllib3==2.2.0
validators==0.22.0
zipp==3.17.0
```
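Every dependency above is pinned exactly with `==`. The small parser sketched below makes it easier to compare these pins with what is installed. It understands only plain `name==version` lines, with no extras, environment markers or `-r` includes. It normalizes names per PEP 503, so `typing_extensions` and `typing-extensions` compare equal. `parse_pins` is a hypothetical helper name.

```python
import re


def normalize(name: str) -> str:
    # PEP 503: lowercase and collapse runs of '-', '_' and '.' into '-'
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_pins(text: str) -> dict[str, str]:
    """Map normalized package name -> pinned version for `name==version` lines."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        name, sep, version = line.partition("==")
        if not sep:
            raise ValueError(f"not an exact pin: {raw!r}")
        pins[normalize(name.strip())] = version.strip()
    return pins


sample = """
DAWG-Python==0.7.2
typing_extensions==4.9.0
torch==2.2.0
"""
print(parse_pins(sample))
# {'dawg-python': '0.7.2', 'typing-extensions': '4.9.0', 'torch': '2.2.0'}
```

The standard library's `importlib.metadata.version(name)` can supply the installed version of each package for comparison against these pins.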

resources/DF_FINAL.csv

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:c028d793b0c651c6d043309a3b272946c80762dfa8eb0565927a36cc897b9032
size 118068895
```
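The commit does not contain the CSV itself. It contains a Git LFS pointer made of `key value` lines: the spec version, the SHA-256 object id, and the size in bytes (118068895 bytes, about 118 MB, here). A minimal standard-library parser is sketched below. `parse_lfs_pointer` is a hypothetical name, and the parser does not enforce every rule of the pointer spec (for example, key ordering).

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer into {'version', 'oid_algo', 'oid', 'size'}."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if fields.get("version") != "https://git-lfs.github.com/spec/v1":
        raise ValueError("not a Git LFS v1 pointer")
    algo, _, digest = fields["oid"].partition(":")
    return {"version": fields["version"], "oid_algo": algo,
            "oid": digest, "size": int(fields["size"])}


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:c028d793b0c651c6d043309a3b272946c80762dfa8eb0565927a36cc897b9032
size 118068895
"""
info = parse_lfs_pointer(pointer)
print(info["oid_algo"], info["size"])  # sha256 118068895
```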

resources/corpus_embeddings_rub.pth

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:d0b94a30ec61d3dab95c9c4f05896cbb9d298b2079535af4f61e739e1df9a703
size 56046434
```
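If the repository is cloned without Git LFS, it contains these three-line pointer files instead of the real data, and `torch.load` on the pointer fails. One way to confirm that the real `corpus_embeddings_rub.pth` (56046434 bytes, about 56 MB per its pointer) was fetched is to compare the file's size and SHA-256 digest with the pointer. The sketch below does this with `hashlib`, reading the file in chunks. `matches_pointer` is a hypothetical helper name.

```python
import hashlib
import os


def matches_pointer(path: str, oid: str, size: int, chunk_size: int = 1 << 20) -> bool:
    """True if the file at `path` has the byte size and SHA-256 recorded in an LFS pointer."""
    if os.path.getsize(path) != size:
        return False  # e.g. the small pointer file itself, or a truncated download
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == oid


# Usage with the values from the pointer above (requires the real file):
# matches_pointer("resources/corpus_embeddings_rub.pth",
#                 "d0b94a30ec61d3dab95c9c4f05896cbb9d298b2079535af4f61e739e1df9a703",
#                 56046434)
```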

resources/functions.py

ADDED

@@ -0,0 +1,40 @@

```python
import re
import string

import nltk
import pandas as pd
import pymorphy2
from nltk.corpus import stopwords
from sentence_transformers import SentenceTransformer, util

# Fetch the Russian stopword list (nltk skips the download if it is already up to date)
nltk.download('stopwords')

stop_words = set(stopwords.words('russian'))
morph = pymorphy2.MorphAnalyzer()
model = SentenceTransformer('cointegrated/rubert-tiny2')


def data_preprocessing_hard(text: str) -> str:
    """Normalize Russian text: lowercase, strip tags, keep Cyrillic, drop stopwords, lemmatize."""
    text = str(text)
    text = text.lower()
    text = re.sub('<.*?>', '', text)  # remove HTML tags
    # Keep only Cyrillic letters and whitespace; ё/Ё fall outside а-я and are removed too
    text = re.sub(r'[^а-яА-Я\s]', '', text)
    text = ''.join([c for c in text if c not in string.punctuation])  # redundant after the regex above
    text = ' '.join([word for word in text.split() if word not in stop_words])
    # text = ''.join([char for char in text if not char.isdigit()])
    text = ' '.join([morph.parse(word)[0].normal_form for word in text.split()])  # lemmatize
    return text


def filter(df: pd.DataFrame, ganre_list: list):
    """Return index labels of rows sharing at least one genre with ganre_list.

    'ganres' holds comma-separated strings, so each cell is split first;
    iterating over the raw string would compare single characters.
    Note: this name shadows the built-in filter().
    """
    genres = df['ganres'].apply(lambda x: x.split(', ') if isinstance(x, str) else [])
    filtered_df = df[genres.apply(lambda gs: any(g in ganre_list for g in gs))]
    filt_ind = filtered_df.index.to_list()
    return filt_ind


def recommend(text: str, embeddings, top_k):
    """Return semantic_search hits for one query: [[{'corpus_id': ..., 'score': ...}, ...]]."""
    query_embeddings = model.encode([data_preprocessing_hard(text)], convert_to_tensor=True)
    embeddings = embeddings.to("cpu")
    embeddings = util.normalize_embeddings(embeddings)

    query_embeddings = query_embeddings.to("cpu")
    query_embeddings = util.normalize_embeddings(query_embeddings)
    # The dot product of L2-normalized vectors equals cosine similarity.
    # Pass top_k by keyword: the third positional parameter of semantic_search
    # is query_chunk_size, so a positional top_k would leave top_k at its default of 10.
    hits = util.semantic_search(query_embeddings, embeddings, top_k=top_k, score_function=util.dot_score)
    return hits
```
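`data_preprocessing_hard` performs these steps in order: lowercase, strip tags, keep only Cyrillic letters and whitespace, drop stopwords, and lemmatize with pymorphy2. The standard-library sketch below reproduces the first steps to show one side effect of the character class `[^а-яА-Я\s]`. The letter `ё` (U+0451) lies outside the range а–я (U+0430–U+044F), so it is stripped, and `ёлку` becomes `лку`. The tiny stopword set and the helper `clean` are illustrative assumptions, and lemmatization is skipped. Whether to add `ёЁ` to the class depends on how the corpus embeddings were built. If the corpus went through the same preprocessing, query and corpus should be changed together.

```python
import re

# Illustrative stand-in for NLTK's Russian stopword list (assumption, not the real list)
STOP_WORDS = {"и", "в", "на", "про"}


def clean(text: str, keep_yo: bool = False) -> str:
    """Lowercase, strip HTML tags, keep Cyrillic letters/whitespace, drop stopwords."""
    text = str(text).lower()
    text = re.sub(r"<.*?>", "", text)  # remove HTML tags such as <b>
    letters = r"а-яА-ЯёЁ" if keep_yo else r"а-яА-Я"
    text = re.sub(rf"[^{letters}\s]", "", text)  # digits, Latin letters, punctuation go
    return " ".join(w for w in text.split() if w not in STOP_WORDS)


print(clean("<b>Фильм про ёлку</b> 2024!"))                # фильм лку
print(clean("<b>Фильм про ёлку</b> 2024!", keep_yo=True))  # фильм ёлку
```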

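`recommend` L2-normalizes the query and corpus embeddings and ranks by dot product, which for unit vectors equals cosine similarity. `util.semantic_search` returns one list per query of `{'corpus_id', 'score'}` dicts sorted by descending score, which is why the app reads `hits[0]`. The plain-Python sketch below performs the same ranking for a single query on toy 2-D vectors. The function names are hypothetical. Real `rubert-tiny2` embeddings are much longer, and the library compares them in batches as tensors.

```python
import heapq
import math


def normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else v


def top_k_cosine(query: list[float], corpus: list[list[float]], top_k: int) -> list[dict]:
    """Rank corpus vectors by cosine similarity to `query`, highest first."""
    q = normalize(query)
    scores = (
        (sum(a * b for a, b in zip(q, normalize(doc))), idx)  # dot of unit vectors = cosine
        for idx, doc in enumerate(corpus)
    )
    best = heapq.nlargest(top_k, scores)
    return [{"corpus_id": idx, "score": score} for score, idx in best]


corpus = [[0.0, 2.0], [3.0, 3.0], [5.0, 0.0]]
hits = top_k_cosine([1.0, 0.0], corpus, top_k=2)
print([h["corpus_id"] for h in hits])  # [2, 1]
```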

resources/img.jpeg

ADDED


resources/parcing.ipynb

ADDED


The diff for this file is too large to render.
