Commit ff0340e

Parent(s): eaa1b39

Update hf

This view is limited to 50 files because it contains too many changes.

- 2D_Stage/configs/infer.yaml +24 -0

- 2D_Stage/input.png +0 -0

- 2D_Stage/material/examples/1.png +0 -0

- 2D_Stage/material/examples/2.png +0 -0

- 2D_Stage/material/examples/3.png +0 -0

- 2D_Stage/material/examples/4.png +0 -0

- 2D_Stage/material/examples/5.png +0 -0

- 2D_Stage/material/examples/6.png +0 -0

- 2D_Stage/material/examples/7.png +0 -0

- 2D_Stage/material/examples/8.png +0 -0

- 2D_Stage/material/pose.json +38 -0

- 2D_Stage/material/pose0.png +0 -0

- 2D_Stage/material/pose1.png +0 -0

- 2D_Stage/material/pose2.png +0 -0

- 2D_Stage/material/pose3.png +0 -0

- 2D_Stage/tuneavideo/__pycache__/util.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/PoseGuider.py +59 -0

- 2D_Stage/tuneavideo/models/__pycache__/PoseGuider.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/__pycache__/refunet.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/__pycache__/resnet.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/__pycache__/transformer_mv2d.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/__pycache__/unet.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/__pycache__/unet_blocks.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/__pycache__/unet_mv2d_blocks.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/__pycache__/unet_mv2d_condition.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/__pycache__/unet_mv2d_ref.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/models/attention.py +344 -0

- 2D_Stage/tuneavideo/models/imageproj.py +118 -0

- 2D_Stage/tuneavideo/models/refunet.py +125 -0

- 2D_Stage/tuneavideo/models/resnet.py +210 -0

- 2D_Stage/tuneavideo/models/transformer_mv2d.py +1010 -0

- 2D_Stage/tuneavideo/models/unet.py +497 -0

- 2D_Stage/tuneavideo/models/unet_blocks.py +596 -0

- 2D_Stage/tuneavideo/models/unet_mv2d_blocks.py +926 -0

- 2D_Stage/tuneavideo/models/unet_mv2d_condition.py +1509 -0

- 2D_Stage/tuneavideo/models/unet_mv2d_ref.py +1570 -0

- 2D_Stage/tuneavideo/pipelines/__pycache__/pipeline_tuneavideo.cpython-310.pyc +0 -0

- 2D_Stage/tuneavideo/pipelines/pipeline_tuneavideo.py +585 -0

- 2D_Stage/tuneavideo/util.py +128 -0

- 2D_Stage/webui.py +323 -0

- 3D_Stage/__pycache__/refine.cpython-310.pyc +0 -0

- 3D_Stage/configs/infer.yaml +104 -0

- 3D_Stage/load/tets/128_tets.npz +3 -0

- 3D_Stage/load/tets/256_tets.npz +3 -0

- 3D_Stage/load/tets/32_tets.npz +3 -0

- 3D_Stage/load/tets/64_tets.npz +3 -0

- 3D_Stage/load/tets/generate_tets.py +58 -0

- 3D_Stage/lrm/__init__.py +29 -0

- 3D_Stage/lrm/__pycache__/__init__.cpython-310.pyc +0 -0

- 3D_Stage/lrm/models/__init__.py +0 -0

2D_Stage/configs/infer.yaml

ADDED

|

@@ -0,0 +1,24 @@


| 1 |

+

pretrained_model_path: "stabilityai/stable-diffusion-2-1"

|

| 2 |

+

image_encoder_path: "./models/image_encoder"

|

| 3 |

+

ckpt_dir: "./models/checkpoint"

|

| 4 |

+

|

| 5 |

+

validation:

|

| 6 |

+

guidance_scale: 5.0

|

| 7 |

+

use_inv_latent: False

|

| 8 |

+

video_length: 4

|

| 9 |

+

|

| 10 |

+

use_pose_guider: True

|

| 11 |

+

use_noise: False

|

| 12 |

+

use_shifted_noise: False

|

| 13 |

+

unet_condition_type: image

|

| 14 |

+

|

| 15 |

+

unet_from_pretrained_kwargs:

|

| 16 |

+

camera_embedding_type: 'e_de_da_sincos'

|

| 17 |

+

projection_class_embeddings_input_dim: 10 # modify

|

| 18 |

+

joint_attention: false # modify

|

| 19 |

+

num_views: 4

|

| 20 |

+

sample_size: 96

|

| 21 |

+

zero_init_conv_in: false

|

| 22 |

+

zero_init_camera_projection: false

|

| 23 |

+

in_channels: 4

|

| 24 |

+

use_safetensors: true

|
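The settings in `2D_Stage/configs/infer.yaml` above can be read into a nested dictionary. The sketch below is a deliberately tiny stand-in for a real YAML loader, written only with the standard library. It assumes that `validation` and `unet_from_pretrained_kwargs` each open an indented block; the indentation is not visible in this diff view, so that nesting is a guess. Only a subset of the listed keys is reproduced, and `parse_scalar` and `parse_simple_config` are hypothetical helper names.

```python
# Hypothetical excerpt of the config; the two-space nesting is an assumption.
CONFIG_TEXT = """
pretrained_model_path: "stabilityai/stable-diffusion-2-1"
validation:
  guidance_scale: 5.0
  video_length: 4
unet_from_pretrained_kwargs:
  camera_embedding_type: 'e_de_da_sincos'
  projection_class_embeddings_input_dim: 10 # modify
  joint_attention: false # modify
  num_views: 4
  sample_size: 96
"""


def parse_scalar(text):
    """Convert one 'value' field to str, bool, int, or float."""
    text = text.split(" #", 1)[0].strip()  # drop a trailing comment
    if len(text) >= 2 and text[0] == text[-1] and text[0] in "\"'":
        return text[1:-1]  # quoted string
    if text.lower() in ("true", "false"):
        return text.lower() == "true"
    for cast in (int, float):
        try:
            return cast(text)
        except ValueError:
            pass
    return text


def parse_simple_config(source):
    """Parse a two-level 'key: value' subset of YAML (not a general parser)."""
    config = {}
    section = config
    for raw in source.splitlines():
        if not raw.strip() or raw.lstrip().startswith("#"):
            continue
        key, _, value = raw.strip().partition(":")
        if raw[0] != " ":  # an unindented line returns to the top level
            section = config
        if value.strip() == "":  # "name:" opens a nested mapping
            section = config.setdefault(key, {})
        else:
            section[key] = parse_scalar(value)
    return config


cfg = parse_simple_config(CONFIG_TEXT)
print(cfg["validation"]["video_length"], cfg["unet_from_pretrained_kwargs"]["num_views"])
```

For anything beyond a quick inspection, a full YAML library is the safer choice, since this sketch ignores lists, multi-line values, and deeper nesting.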

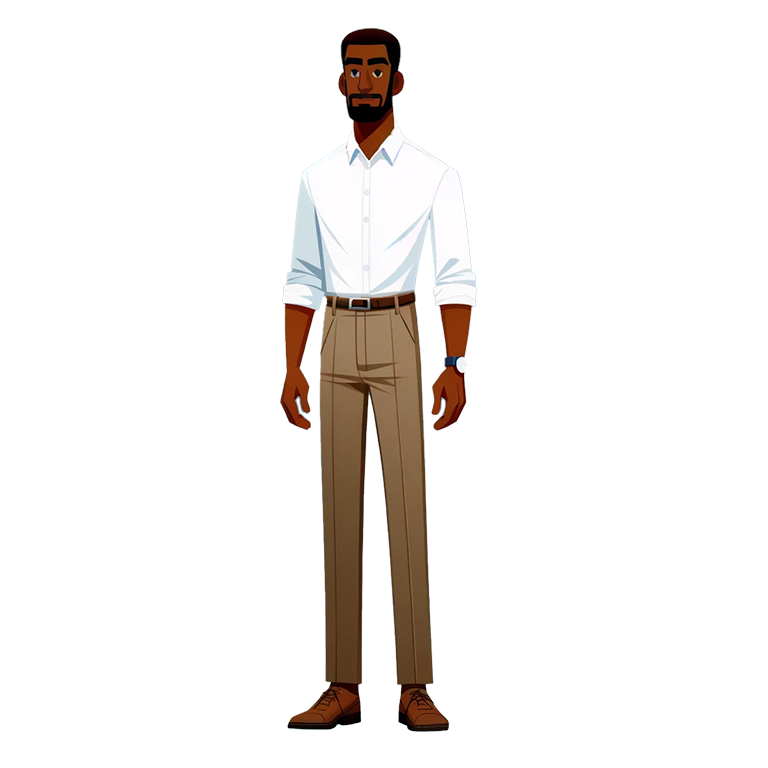

2D_Stage/input.png

ADDED

|

2D_Stage/material/examples/1.png

ADDED

|

2D_Stage/material/examples/2.png

ADDED

|

2D_Stage/material/examples/3.png

ADDED

|

2D_Stage/material/examples/4.png

ADDED

|

2D_Stage/material/examples/5.png

ADDED

|

2D_Stage/material/examples/6.png

ADDED

|

2D_Stage/material/examples/7.png

ADDED

|

2D_Stage/material/examples/8.png

ADDED

|

2D_Stage/material/pose.json

ADDED

|

@@ -0,0 +1,38 @@


| 1 |

+

[

|

| 2 |

+

[

|

| 3 |

+

[

|

| 4 |

+

0, 0, -1, 0,

|

| 5 |

+

0, 1, 0, 0,

|

| 6 |

+

1, 0, 0, 0,

|

| 7 |

+

1.5, 0, 0, 1

|

| 8 |

+

],

|

| 9 |

+

"pose0.png"

|

| 10 |

+

],

|

| 11 |

+

[

|

| 12 |

+

[

|

| 13 |

+

0, 0, 1, 0,

|

| 14 |

+

0, 1, 0, 0,

|

| 15 |

+

-1, 0, 0, 0,

|

| 16 |

+

-1.5, 0, 0, 1

|

| 17 |

+

],

|

| 18 |

+

"pose1.png"

|

| 19 |

+

],

|

| 20 |

+

[

|

| 21 |

+

[

|

| 22 |

+

0, 0, 1, 0,

|

| 23 |

+

0, 1, 0, 0,

|

| 24 |

+

-1, 0, 0, 0,

|

| 25 |

+

-1.5, 0, 0, 1

|

| 26 |

+

],

|

| 27 |

+

"pose2.png"

|

| 28 |

+

],

|

| 29 |

+

[

|

| 30 |

+

[

|

| 31 |

+

-1, 0, 0, 0,

|

| 32 |

+

0, 1, 0, 0,

|

| 33 |

+

0, 0, -1, 0,

|

| 34 |

+

0, 0, -1.5, 1

|

| 35 |

+

],

|

| 36 |

+

"pose3.png"

|

| 37 |

+

]

|

| 38 |

+

]

|
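Each entry in `pose.json` above pairs a flat list of 16 numbers with a pose image name. The sketch below reads that layout with the standard library. It assumes each list is a 4x4 matrix stored row by row with the translation in the last row; that is an interpretation of the listed values, not something the commit states. `to_rows` and `load_poses` are hypothetical helper names.

```python
import json
import math

# The four entries as listed in the diff above.
POSE_JSON = """
[
  [[0, 0, -1, 0,  0, 1, 0, 0,  1, 0, 0, 0,  1.5, 0, 0, 1], "pose0.png"],
  [[0, 0, 1, 0,  0, 1, 0, 0,  -1, 0, 0, 0,  -1.5, 0, 0, 1], "pose1.png"],
  [[0, 0, 1, 0,  0, 1, 0, 0,  -1, 0, 0, 0,  -1.5, 0, 0, 1], "pose2.png"],
  [[-1, 0, 0, 0,  0, 1, 0, 0,  0, 0, -1, 0,  0, 0, -1.5, 1], "pose3.png"]
]
"""


def to_rows(flat):
    """Split a flat list of 16 numbers into four rows of four."""
    if len(flat) != 16:
        raise ValueError(f"expected 16 values, got {len(flat)}")
    return [flat[i:i + 4] for i in range(0, 16, 4)]


def load_poses(text):
    """Return one dict per entry with the matrix rows and the assumed translation."""
    poses = []
    for flat, image_name in json.loads(text):
        rows = to_rows(flat)
        translation = rows[3][:3]  # assumption: last row holds the translation
        distance = math.sqrt(sum(v * v for v in translation))
        poses.append(
            {"image": image_name, "matrix": rows,
             "translation": translation, "distance": distance}
        )
    return poses


poses = load_poses(POSE_JSON)
for pose in poses:
    print(pose["image"], pose["translation"], pose["distance"])
```

Under that reading, every listed pose has a translation of length 1.5.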

2D_Stage/material/pose0.png

ADDED

|

2D_Stage/material/pose1.png

ADDED

|

2D_Stage/material/pose2.png

ADDED

|

2D_Stage/material/pose3.png

ADDED

|

2D_Stage/tuneavideo/__pycache__/util.cpython-310.pyc

ADDED

|

Binary file (4.36 kB).

|

2D_Stage/tuneavideo/models/PoseGuider.py

ADDED

|

@@ -0,0 +1,59 @@


| 1 |

+

import os

|

| 2 |

+

import torch

|

| 3 |

+

import torch.nn as nn

|

| 4 |

+

import torch.nn.init as init

|

| 5 |

+

from einops import rearrange

|

| 6 |

+

|

| 7 |

+

class PoseGuider(nn.Module):

|

| 8 |

+

def __init__(self, noise_latent_channels=4):

|

| 9 |

+

super(PoseGuider, self).__init__()

|

| 10 |

+

|

| 11 |

+

self.conv_layers = nn.Sequential(

|

| 12 |

+

nn.Conv2d(in_channels=3, out_channels=16, kernel_size=4, stride=2, padding=1),

|

| 13 |

+

nn.ReLU(),

|

| 14 |

+

nn.Conv2d(in_channels=16, out_channels=32, kernel_size=4, stride=2, padding=1),

|

| 15 |

+

nn.ReLU(),

|

| 16 |

+

nn.Conv2d(in_channels=32, out_channels=64, kernel_size=4, stride=2, padding=1),

|

| 17 |

+

nn.ReLU(),

|

| 18 |

+

nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),

|

| 19 |

+

nn.ReLU()

|

| 20 |

+

)

|

| 21 |

+

|

| 22 |

+

# Final projection layer

|

| 23 |

+

self.final_proj = nn.Conv2d(in_channels=128, out_channels=noise_latent_channels, kernel_size=1)

|

| 24 |

+

|

| 25 |

+

# Initialize layers

|

| 26 |

+

self._initialize_weights()

|

| 27 |

+

|

| 28 |

+

def _initialize_weights(self):

|

| 29 |

+

# Initialize weights with Gaussian distribution and zero out the final layer

|

| 30 |

+

for m in self.conv_layers:

|

| 31 |

+

if isinstance(m, nn.Conv2d):

|

| 32 |

+

init.normal_(m.weight, mean=0.0, std=0.02)

|

| 33 |

+

if m.bias is not None:

|

| 34 |

+

init.zeros_(m.bias)

|

| 35 |

+

|

| 36 |

+

init.zeros_(self.final_proj.weight)

|

| 37 |

+

if self.final_proj.bias is not None:

|

| 38 |

+

init.zeros_(self.final_proj.bias)

|

| 39 |

+

|

| 40 |

+

def forward(self, pose_image):

|

| 41 |

+

x = self.conv_layers(pose_image)

|

| 42 |

+

x = self.final_proj(x)

|

| 43 |

+

|

| 44 |

+

return x

|

| 45 |

+

|

| 46 |

+

@classmethod

|

| 47 |

+

def from_pretrained(cls, pretrained_model_path):

|

| 48 |

+

if not os.path.exists(pretrained_model_path):

|

| 49 |

+

print(f"There is no model file in {pretrained_model_path}")

|

| 50 |

+

print(f"loaded PoseGuider's pretrained weights from {pretrained_model_path} ...")

|

| 51 |

+

|

| 52 |

+

state_dict = torch.load(pretrained_model_path, map_location="cpu")

|

| 53 |

+

model = PoseGuider(noise_latent_channels=4)

|

| 54 |

+

m, u = model.load_state_dict(state_dict, strict=False)

|

| 55 |

+

print(f"### missing keys: {len(m)}; \n### unexpected keys: {len(u)};")

|

| 56 |

+

params = [p.numel() for n, p in model.named_parameters()]

|

| 57 |

+

print(f"### PoseGuider's Parameters: {sum(params) / 1e6} M")

|

| 58 |

+

|

| 59 |

+

return model

|
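The convolution stack in `PoseGuider` above determines how much the pose image shrinks before it is projected to `noise_latent_channels`. The sketch below applies the standard convolution output-size formula, `floor((size + 2 * padding - kernel) / stride) + 1`, to the kernel, stride, and padding values listed in the diff. `conv2d_out` and `pose_guider_out` are hypothetical helper names, and the 768-pixel input is an assumed example resolution that the commit does not state.

```python
def conv2d_out(size, kernel, stride, padding):
    """Spatial output size of one convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel_size, stride, padding) for each Conv2d in PoseGuider, in order:
# three stride-2 layers, one 3x3 stride-1 layer, then the 1x1 final_proj.
LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (3, 1, 1), (1, 1, 0)]


def pose_guider_out(size):
    """Propagate a spatial size through every convolution in the stack."""
    for kernel, stride, padding in LAYERS:
        size = conv2d_out(size, kernel, stride, padding)
    return size


print(pose_guider_out(768))  # assumed input resolution
```

The three stride-2 layers each halve the resolution, so the stack reduces each spatial side by a factor of 8. A 768-pixel side would come out at 96, the same number as `sample_size` in `2D_Stage/configs/infer.yaml`. Because `final_proj` is initialized with zero weights and a zero bias, the module outputs zeros until those weights are trained or loaded.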

2D_Stage/tuneavideo/models/__pycache__/PoseGuider.cpython-310.pyc

ADDED

|

Binary file (2.41 kB).

|

2D_Stage/tuneavideo/models/__pycache__/refunet.cpython-310.pyc

ADDED

|

Binary file (4.05 kB).

|

2D_Stage/tuneavideo/models/__pycache__/resnet.cpython-310.pyc

ADDED

|

Binary file (5.13 kB).

|

2D_Stage/tuneavideo/models/__pycache__/transformer_mv2d.cpython-310.pyc

ADDED

|

Binary file (23 kB).

|

2D_Stage/tuneavideo/models/__pycache__/unet.cpython-310.pyc

ADDED

|

Binary file (11.9 kB).

|

2D_Stage/tuneavideo/models/__pycache__/unet_blocks.cpython-310.pyc

ADDED

|

Binary file (10.9 kB).

|

2D_Stage/tuneavideo/models/__pycache__/unet_mv2d_blocks.cpython-310.pyc

ADDED

|

Binary file (15.2 kB).

|

2D_Stage/tuneavideo/models/__pycache__/unet_mv2d_condition.cpython-310.pyc

ADDED

|

Binary file (45.7 kB).

|

2D_Stage/tuneavideo/models/__pycache__/unet_mv2d_ref.cpython-310.pyc

ADDED

|

Binary file (48.1 kB).

|

2D_Stage/tuneavideo/models/attention.py

ADDED

|

@@ -0,0 +1,344 @@


| 1 |

+

# Adapted from https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/attention.py

|

| 2 |

+

|

| 3 |

+

from dataclasses import dataclass

|

| 4 |

+

from typing import Optional

|

| 5 |

+

|

| 6 |

+

import torch

|

| 7 |

+

import torch.nn.functional as F

|

| 8 |

+

from torch import nn

|

| 9 |

+

|

| 10 |

+

from diffusers.configuration_utils import ConfigMixin, register_to_config

|

| 11 |

+

from diffusers import ModelMixin

|

| 12 |

+

from diffusers.utils import BaseOutput

|

| 13 |

+

from diffusers.utils.import_utils import is_xformers_available

|

| 14 |

+

from diffusers.models.attention import CrossAttention, FeedForward, AdaLayerNorm

|

| 15 |

+

|

| 16 |

+

from einops import rearrange, repeat

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

@dataclass

|

| 20 |

+

class Transformer3DModelOutput(BaseOutput):

|

| 21 |

+

sample: torch.FloatTensor

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

if is_xformers_available():

|

| 25 |

+

import xformers

|

| 26 |

+

import xformers.ops

|

| 27 |

+

else:

|

| 28 |

+

xformers = None

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

class Transformer3DModel(ModelMixin, ConfigMixin):

|

| 32 |

+

@register_to_config

|

| 33 |

+

def __init__(

|

| 34 |

+

self,

|

| 35 |

+

num_attention_heads: int = 16,

|

| 36 |

+

attention_head_dim: int = 88,

|

| 37 |

+

in_channels: Optional[int] = None,

|

| 38 |

+

num_layers: int = 1,

|

| 39 |

+

dropout: float = 0.0,

|

| 40 |

+

norm_num_groups: int = 32,

|

| 41 |

+

cross_attention_dim: Optional[int] = None,

|

| 42 |

+

attention_bias: bool = False,

|

| 43 |

+

activation_fn: str = "geglu",

|

| 44 |

+

num_embeds_ada_norm: Optional[int] = None,

|

| 45 |

+

use_linear_projection: bool = False,

|

| 46 |

+

only_cross_attention: bool = False,

|

| 47 |

+

upcast_attention: bool = False,

|

| 48 |

+

use_attn_temp: bool = False,

|

| 49 |

+

):

|

| 50 |

+

super().__init__()

|

| 51 |

+

self.use_linear_projection = use_linear_projection

|

| 52 |

+

self.num_attention_heads = num_attention_heads

|

| 53 |

+

self.attention_head_dim = attention_head_dim

|

| 54 |

+

inner_dim = num_attention_heads * attention_head_dim

|

| 55 |

+

|

| 56 |

+

# Define input layers

|

| 57 |

+

self.in_channels = in_channels

|

| 58 |

+

|

| 59 |

+

self.norm = torch.nn.GroupNorm(num_groups=norm_num_groups, num_channels=in_channels, eps=1e-6, affine=True)

|

| 60 |

+

if use_linear_projection:

|

| 61 |

+

self.proj_in = nn.Linear(in_channels, inner_dim)

|

| 62 |

+

else:

|

| 63 |

+

self.proj_in = nn.Conv2d(in_channels, inner_dim, kernel_size=1, stride=1, padding=0)

|

| 64 |

+

|

| 65 |

+

# Define transformer blocks

|

| 66 |

+

self.transformer_blocks = nn.ModuleList(

|

| 67 |

+

[

|

| 68 |

+

BasicTransformerBlock(

|

| 69 |

+

inner_dim,

|

| 70 |

+

num_attention_heads,

|

| 71 |

+

attention_head_dim,

|

| 72 |

+

dropout=dropout,

|

| 73 |

+

cross_attention_dim=cross_attention_dim,

|

| 74 |

+

activation_fn=activation_fn,

|

| 75 |

+

num_embeds_ada_norm=num_embeds_ada_norm,

|

| 76 |

+

attention_bias=attention_bias,

|

| 77 |

+

only_cross_attention=only_cross_attention,

|

| 78 |

+

upcast_attention=upcast_attention,

|

| 79 |

+

use_attn_temp = use_attn_temp,

|

| 80 |

+

)

|

| 81 |

+

for d in range(num_layers)

|

| 82 |

+

]

|

| 83 |

+

)

|

| 84 |

+

|

| 85 |

+

# Define output layers

|

| 86 |

+

if use_linear_projection:

|

| 87 |

+

self.proj_out = nn.Linear(in_channels, inner_dim)

|

| 88 |

+

else:

|

| 89 |

+

self.proj_out = nn.Conv2d(inner_dim, in_channels, kernel_size=1, stride=1, padding=0)

|

| 90 |

+

|

| 91 |

+

def forward(self, hidden_states, encoder_hidden_states=None, timestep=None, return_dict: bool = True):

|

| 92 |

+

# Input

|

| 93 |

+

assert hidden_states.dim() == 5, f"Expected hidden_states to have ndim=5, but got ndim={hidden_states.dim()}."

|

| 94 |

+

video_length = hidden_states.shape[2]

|

| 95 |

+

hidden_states = rearrange(hidden_states, "b c f h w -> (b f) c h w")

|

| 96 |

+

encoder_hidden_states = repeat(encoder_hidden_states, 'b n c -> (b f) n c', f=video_length)

|

| 97 |

+

|

| 98 |

+

batch, channel, height, weight = hidden_states.shape

|

| 99 |

+

residual = hidden_states

|

| 100 |

+

|

| 101 |

+

hidden_states = self.norm(hidden_states)

|

| 102 |

+

if not self.use_linear_projection:

|

| 103 |

+

hidden_states = self.proj_in(hidden_states)

|

| 104 |

+

inner_dim = hidden_states.shape[1]

|

| 105 |

+

hidden_states = hidden_states.permute(0, 2, 3, 1).reshape(batch, height * weight, inner_dim)

|

| 106 |

+

else:

|

| 107 |

+

inner_dim = hidden_states.shape[1]

|

| 108 |

+

hidden_states = hidden_states.permute(0, 2, 3, 1).reshape(batch, height * weight, inner_dim)

|

| 109 |

+

hidden_states = self.proj_in(hidden_states)

|

| 110 |

+

|

| 111 |

+

# Blocks

|

| 112 |

+

for block in self.transformer_blocks:

|

| 113 |

+

hidden_states = block(

|

| 114 |

+

hidden_states,

|

| 115 |

+

encoder_hidden_states=encoder_hidden_states,

|

| 116 |

+

timestep=timestep,

|

| 117 |

+

video_length=video_length

|

| 118 |

+

)

|

| 119 |

+

|

| 120 |

+

# Output

|

| 121 |

+

if not self.use_linear_projection:

|

| 122 |

+

hidden_states = (

|

| 123 |

+

hidden_states.reshape(batch, height, weight, inner_dim).permute(0, 3, 1, 2).contiguous()

|

| 124 |

+

)

|

| 125 |

+

hidden_states = self.proj_out(hidden_states)

|

| 126 |

+

else:

|

| 127 |

+

hidden_states = self.proj_out(hidden_states)

|

| 128 |

+

hidden_states = (

|

| 129 |

+

hidden_states.reshape(batch, height, weight, inner_dim).permute(0, 3, 1, 2).contiguous()

|

| 130 |

+

)

|

| 131 |

+

|

| 132 |

+

output = hidden_states + residual

|

| 133 |

+

|

| 134 |

+

output = rearrange(output, "(b f) c h w -> b c f h w", f=video_length)

|

| 135 |

+

if not return_dict:

|

| 136 |

+

return (output,)

|

| 137 |

+

|

| 138 |

+

return Transformer3DModelOutput(sample=output)

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

class BasicTransformerBlock(nn.Module):

|

| 142 |

+

def __init__(

|

| 143 |

+

self,

|

| 144 |

+

dim: int,

|

| 145 |

+

num_attention_heads: int,

|

| 146 |

+

attention_head_dim: int,

|

| 147 |

+

dropout=0.0,

|

| 148 |

+

cross_attention_dim: Optional[int] = None,

|

| 149 |

+

activation_fn: str = "geglu",

|

| 150 |

+

num_embeds_ada_norm: Optional[int] = None,

|

| 151 |

+

attention_bias: bool = False,

|

| 152 |

+

only_cross_attention: bool = False,

|

| 153 |

+

upcast_attention: bool = False,

|

| 154 |

+

use_attn_temp: bool = False

|

| 155 |

+

):

|

| 156 |

+

super().__init__()

|

| 157 |

+

self.only_cross_attention = only_cross_attention

|

| 158 |

+

self.use_ada_layer_norm = num_embeds_ada_norm is not None

|

| 159 |

+

self.use_attn_temp = use_attn_temp

|

| 160 |

+

# SC-Attn

|

| 161 |

+

self.attn1 = SparseCausalAttention(

|

| 162 |

+

query_dim=dim,

|

| 163 |

+

heads=num_attention_heads,

|

| 164 |

+

dim_head=attention_head_dim,

|

| 165 |

+

dropout=dropout,

|

| 166 |

+

bias=attention_bias,

|

| 167 |

+

cross_attention_dim=cross_attention_dim if only_cross_attention else None,

|

| 168 |

+

upcast_attention=upcast_attention,

|

| 169 |

+

)

|

| 170 |

+

self.norm1 = AdaLayerNorm(dim, num_embeds_ada_norm) if self.use_ada_layer_norm else nn.LayerNorm(dim)

|

| 171 |

+

|

| 172 |

+

# Cross-Attn

|

| 173 |

+

if cross_attention_dim is not None:

|

| 174 |

+

self.attn2 = CrossAttention(

|

| 175 |

+

query_dim=dim,

|

| 176 |

+

cross_attention_dim=cross_attention_dim,

|

| 177 |

+

heads=num_attention_heads,

|

| 178 |

+

dim_head=attention_head_dim,

|

| 179 |

+

dropout=dropout,

|

| 180 |

+

bias=attention_bias,

|

| 181 |

+

upcast_attention=upcast_attention,

|

| 182 |

+

)

|

| 183 |

+

else:

|

| 184 |

+

self.attn2 = None

|

| 185 |

+

|

| 186 |

+

if cross_attention_dim is not None:

|

| 187 |

+

self.norm2 = AdaLayerNorm(dim, num_embeds_ada_norm) if self.use_ada_layer_norm else nn.LayerNorm(dim)

|

| 188 |

+

else:

|

| 189 |

+

self.norm2 = None

|

| 190 |

+

|

| 191 |

+

# Feed-forward

|

| 192 |

+

self.ff = FeedForward(dim, dropout=dropout, activation_fn=activation_fn)

|

| 193 |

+

self.norm3 = nn.LayerNorm(dim)

|

| 194 |

+

|

| 195 |

+

# Temp-Attn

|

| 196 |

+

if self.use_attn_temp:

|

| 197 |

+

self.attn_temp = CrossAttention(

|

| 198 |

+

query_dim=dim,

|

| 199 |

+

heads=num_attention_heads,

|

| 200 |

+

dim_head=attention_head_dim,

|

| 201 |

+

dropout=dropout,

|

| 202 |

+

bias=attention_bias,

|

| 203 |

+

upcast_attention=upcast_attention,

|

| 204 |

+

)

|

| 205 |

+

nn.init.zeros_(self.attn_temp.to_out[0].weight.data)

|

| 206 |

+

self.norm_temp = AdaLayerNorm(dim, num_embeds_ada_norm) if self.use_ada_layer_norm else nn.LayerNorm(dim)

|

| 207 |

+

|

| 208 |

+

def set_use_memory_efficient_attention_xformers(self, use_memory_efficient_attention_xformers: bool):

|

| 209 |

+

if not is_xformers_available():

|

| 210 |

+

print("Here is how to install it")

|

| 211 |

+

raise ModuleNotFoundError(

|

| 212 |

+

"Refer to https://github.com/facebookresearch/xformers for more information on how to install"

|

| 213 |

+

" xformers",

|

| 214 |

+

name="xformers",

|

| 215 |

+

)

|

| 216 |

+

elif not torch.cuda.is_available():

|

| 217 |

+

raise ValueError(

|

| 218 |

+

"torch.cuda.is_available() should be True but is False. xformers' memory efficient attention is only"

|

| 219 |

+

" available for GPU "

|

| 220 |

+

)

|

| 221 |

+

else:

|

| 222 |

+

try:

|

| 223 |

+

# Make sure we can run the memory efficient attention

|

| 224 |

+

_ = xformers.ops.memory_efficient_attention(

|

| 225 |

+

torch.randn((1, 2, 40), device="cuda"),

|

| 226 |

+

torch.randn((1, 2, 40), device="cuda"),

|

| 227 |

+

torch.randn((1, 2, 40), device="cuda"),

|

| 228 |

+

)

|

| 229 |

+

except Exception as e:

|

| 230 |

+

raise e

|

| 231 |

+

self.attn1._use_memory_efficient_attention_xformers = use_memory_efficient_attention_xformers

|

| 232 |

+

if self.attn2 is not None:

|

| 233 |

+

self.attn2._use_memory_efficient_attention_xformers = use_memory_efficient_attention_xformers

|

| 234 |

+

#self.attn_temp._use_memory_efficient_attention_xformers = use_memory_efficient_attention_xformers

|

| 235 |

+

|

| 236 |

+

def forward(self, hidden_states, encoder_hidden_states=None, timestep=None, attention_mask=None, video_length=None):

|

| 237 |

+

# SparseCausal-Attention

|

| 238 |

+

norm_hidden_states = (

|

| 239 |

+

self.norm1(hidden_states, timestep) if self.use_ada_layer_norm else self.norm1(hidden_states)

|

| 240 |

+

)

|

| 241 |

+

|

| 242 |

+

if self.only_cross_attention:

|

| 243 |

+

hidden_states = (

|

| 244 |

+

self.attn1(norm_hidden_states, encoder_hidden_states, attention_mask=attention_mask) + hidden_states

|

| 245 |

+

)

|

| 246 |

+

else:

|

| 247 |

+

hidden_states = self.attn1(norm_hidden_states, attention_mask=attention_mask, video_length=video_length) + hidden_states

|

| 248 |

+

|

| 249 |

+

if self.attn2 is not None:

|

| 250 |

+

# Cross-Attention

|

| 251 |

+

norm_hidden_states = (

|

| 252 |

+

self.norm2(hidden_states, timestep) if self.use_ada_layer_norm else self.norm2(hidden_states)

|

| 253 |

+

)

|

| 254 |

+

hidden_states = (

|

| 255 |

+

self.attn2(

|

| 256 |

+

norm_hidden_states, encoder_hidden_states=encoder_hidden_states, attention_mask=attention_mask

|

| 257 |

+

)

|

| 258 |

+

+ hidden_states

|

| 259 |

+

)

|

| 260 |

+

|

| 261 |

+

# Feed-forward

|

| 262 |

+

hidden_states = self.ff(self.norm3(hidden_states)) + hidden_states

|

| 263 |

+

|

| 264 |

+

# Temporal-Attention

|

| 265 |

+

if self.use_attn_temp:

|

| 266 |

+

d = hidden_states.shape[1]

|

| 267 |

+

hidden_states = rearrange(hidden_states, "(b f) d c -> (b d) f c", f=video_length)

|

| 268 |

+

norm_hidden_states = (

|

| 269 |

+

self.norm_temp(hidden_states, timestep) if self.use_ada_layer_norm else self.norm_temp(hidden_states)

|

| 270 |

+

)

|

| 271 |

+

hidden_states = self.attn_temp(norm_hidden_states) + hidden_states

|

| 272 |

+

hidden_states = rearrange(hidden_states, "(b d) f c -> (b f) d c", d=d)

|

| 273 |

+

|

| 274 |

+

return hidden_states

|

| 275 |

+

|

| 276 |

+

|

| 277 |

+

class SparseCausalAttention(CrossAttention):

|

| 278 |

+

def forward(self, hidden_states, encoder_hidden_states=None, attention_mask=None, video_length=None, use_full_attn=True):

|

| 279 |

+

batch_size, sequence_length, _ = hidden_states.shape

|

| 280 |

+

|

| 281 |

+

encoder_hidden_states = encoder_hidden_states

|

| 282 |

+

|

| 283 |

+

if self.group_norm is not None:

|

| 284 |

+

hidden_states = self.group_norm(hidden_states.transpose(1, 2)).transpose(1, 2)

|

| 285 |

+

|

| 286 |

+

query = self.to_q(hidden_states)

|

| 287 |

+

# query = rearrange(query, "(b f) d c -> b (f d) c", f=video_length)

|

| 288 |

+

dim = query.shape[-1]

|

| 289 |

+

query = self.reshape_heads_to_batch_dim(query)

|

| 290 |

+

|

| 291 |

+

if self.added_kv_proj_dim is not None:

|

| 292 |

+

raise NotImplementedError

|

| 293 |

+

|

| 294 |

+

encoder_hidden_states = encoder_hidden_states if encoder_hidden_states is not None else hidden_states

|

| 295 |

+

key = self.to_k(encoder_hidden_states)

|

| 296 |

+

value = self.to_v(encoder_hidden_states)

|

| 297 |

+

|

| 298 |

+

former_frame_index = torch.arange(video_length) - 1

|

| 299 |

+

former_frame_index[0] = 0

|

| 300 |

+

|

| 301 |

+

key = rearrange(key, "(b f) d c -> b f d c", f=video_length)

|

| 302 |

+

if not use_full_attn:

|

| 303 |

+

key = torch.cat([key[:, [0] * video_length], key[:, former_frame_index]], dim=2)

|

| 304 |

+

else:

|

| 305 |

+

# key = torch.cat([key[:, [0] * video_length], key[:, [1] * video_length], key[:, [2] * video_length], key[:, [3] * video_length]], dim=2)

|

| 306 |

+

key_video_length = [key[:, [i] * video_length] for i in range(video_length)]

|

| 307 |

+

key = torch.cat(key_video_length, dim=2)

|

| 308 |

+

key = rearrange(key, "b f d c -> (b f) d c")

|

| 309 |

+

|

| 310 |

+

value = rearrange(value, "(b f) d c -> b f d c", f=video_length)

|

| 311 |

+

if not use_full_attn:

|

| 312 |

+

value = torch.cat([value[:, [0] * video_length], value[:, former_frame_index]], dim=2)

|

| 313 |

+

else:

|

| 314 |

+

# value = torch.cat([value[:, [0] * video_length], value[:, [1] * video_length], value[:, [2] * video_length], value[:, [3] * video_length]], dim=2)

|

| 315 |

+

value_video_length = [value[:, [i] * video_length] for i in range(video_length)]

|

| 316 |

+

value = torch.cat(value_video_length, dim=2)

|

| 317 |

+

value = rearrange(value, "b f d c -> (b f) d c")

|

| 318 |

+

|

| 319 |

+

key = self.reshape_heads_to_batch_dim(key)

|

| 320 |

+

value = self.reshape_heads_to_batch_dim(value)

|

| 321 |

+

|

| 322 |

+

if attention_mask is not None:

|

| 323 |

+

if attention_mask.shape[-1] != query.shape[1]:

|

| 324 |

+

target_length = query.shape[1]

|

| 325 |

+

attention_mask = F.pad(attention_mask, (0, target_length), value=0.0)

|

| 326 |

+

attention_mask = attention_mask.repeat_interleave(self.heads, dim=0)

|

| 327 |

+

|

| 328 |

+

# attention, what we cannot get enough of

|

| 329 |

+

if self._use_memory_efficient_attention_xformers:

|

| 330 |

+

hidden_states = self._memory_efficient_attention_xformers(query, key, value, attention_mask)

|

| 331 |

+

# Some versions of xformers return output in fp32, cast it back to the dtype of the input

|

| 332 |

+

hidden_states = hidden_states.to(query.dtype)

|

| 333 |

+

else:

|

| 334 |

+

if self._slice_size is None or query.shape[0] // self._slice_size == 1:

|

| 335 |

+

hidden_states = self._attention(query, key, value, attention_mask)

|

| 336 |

+

else:

|

| 337 |

+

hidden_states = self._sliced_attention(query, key, value, sequence_length, dim, attention_mask)

|

| 338 |

+

|

| 339 |

+

# linear proj

|

| 340 |

+

hidden_states = self.to_out[0](hidden_states)

|

| 341 |

+

|

| 342 |

+

# dropout

|

| 343 |

+

hidden_states = self.to_out[1](hidden_states)

|

| 344 |

+

return hidden_states

|
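`SparseCausalAttention.forward` above builds its keys and values in one of two ways, depending on `use_full_attn`. The sketch below mimics only the frame-selection logic with plain Python lists, so it runs without PyTorch. Each frame is a list of token labels, and `former_frame_index` and `build_keys` are hypothetical names chosen for illustration. It is not the tensor code itself.

```python
def former_frame_index(video_length):
    """Index of the previous frame for each frame; frame 0 maps to itself."""
    index = [i - 1 for i in range(video_length)]
    index[0] = 0
    return index


def build_keys(frames, use_full_attn=True):
    """Return, for each frame, the tokens its queries attend to.

    frames: list of per-frame token lists, all of the same length.
    """
    video_length = len(frames)
    if use_full_attn:
        # Every frame sees the tokens of all frames, concatenated in frame order.
        all_tokens = [token for frame in frames for token in frame]
        return [list(all_tokens) for _ in range(video_length)]
    # Sparse-causal variant: first frame plus the immediately preceding frame.
    former = former_frame_index(video_length)
    return [frames[0] + frames[former[j]] for j in range(video_length)]


frames = [["a0", "a1"], ["b0", "b1"], ["c0", "c1"], ["d0", "d1"]]
full = build_keys(frames, use_full_attn=True)
sparse = build_keys(frames, use_full_attn=False)
print(len(full[0]), sparse[2])
```

With full attention the key length grows with the number of frames, while the sparse-causal branch keeps it at two frames' worth of tokens for any `video_length`.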

2D_Stage/tuneavideo/models/imageproj.py

ADDED

|

@@ -0,0 +1,118 @@


| 1 |

+

# modified from https://github.com/mlfoundations/open_flamingo/blob/main/open_flamingo/src/helpers.py

|

| 2 |

+

import math

|

| 3 |

+

|

| 4 |

+

import torch

|

| 5 |

+

import torch.nn as nn

|

| 6 |

+

|

| 7 |

+

# FFN

|

| 8 |

+

def FeedForward(dim, mult=4):

|

| 9 |

+

inner_dim = int(dim * mult)

|

| 10 |

+

return nn.Sequential(

|

| 11 |

+

nn.LayerNorm(dim),

|

| 12 |

+

nn.Linear(dim, inner_dim, bias=False),

|

| 13 |

+

nn.GELU(),

|

| 14 |

+

nn.Linear(inner_dim, dim, bias=False),

|

| 15 |

+

)

|

| 16 |

+

|

| 17 |

+

def reshape_tensor(x, heads):

|

| 18 |

+

bs, length, width = x.shape

|

| 19 |

+

#(bs, length, width) --> (bs, length, n_heads, dim_per_head)

|

| 20 |

+

x = x.view(bs, length, heads, -1)

|

| 21 |

+

# (bs, length, n_heads, dim_per_head) --> (bs, n_heads, length, dim_per_head)

|

| 22 |

+

x = x.transpose(1, 2)

|

| 23 |

+

# reshape keeps the 4D layout (bs, n_heads, length, dim_per_head); the heads are not folded into the batch axis

|

| 24 |

+

x = x.reshape(bs, heads, length, -1)

|

| 25 |

+

return x

|
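To make the index bookkeeping in `reshape_tensor` concrete, here is a minimal sketch that performs the same split on nested Python lists instead of tensors. `split_heads` is a hypothetical stand-in written for illustration only; it assumes the width divides evenly by the number of heads, as `view(bs, length, heads, -1)` also requires.

```python
def split_heads(x, heads):
    """(bs, length, width) nested list -> (bs, heads, length, width // heads)."""
    bs, length, width = len(x), len(x[0]), len(x[0][0])
    assert width % heads == 0, "width must be divisible by heads"
    d = width // heads
    # Head h receives the contiguous feature slice [h*d, (h+1)*d) of every token.
    return [
        [[x[b][t][h * d:(h + 1) * d] for t in range(length)] for h in range(heads)]
        for b in range(bs)
    ]


# One batch item, three tokens, four features; the value encodes (token, feature).
x = [[[10 * t + c for c in range(4)] for t in range(3)]]
y = split_heads(x, heads=2)
print(y[0][0])  # tokens as seen by head 0
print(y[0][1])  # tokens as seen by head 1
```

The result stays four-dimensional, with the heads on their own axis after the batch axis, which matches the final `reshape(bs, heads, length, -1)` in the diff.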

| 26 |

+

|

| 27 |

+

|

| 28 |

+

class PerceiverAttention(nn.Module):

|

| 29 |

+

def __init__(self, *, dim, dim_head=64, heads=8):

|

| 30 |

+

super().__init__()

|

| 31 |

+

self.scale = dim_head**-0.5

|

| 32 |

+

self.dim_head = dim_head

|

| 33 |

+

self.heads = heads

|

| 34 |

+

inner_dim = dim_head * heads

|

| 35 |

+

|

| 36 |

+

self.norm1 = nn.LayerNorm(dim)

|

| 37 |

+

self.norm2 = nn.LayerNorm(dim)

|

| 38 |

+

|

| 39 |

+

self.to_q = nn.Linear(dim, inner_dim, bias=False)

|

| 40 |

+

self.to_kv = nn.Linear(dim, inner_dim * 2, bias=False)

|

| 41 |

+

self.to_out = nn.Linear(inner_dim, dim, bias=False)

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

def forward(self, x, latents):

|

| 45 |

+

"""

|

| 46 |

+

Args:

|

| 47 |

+

x (torch.Tensor): image features

|

| 48 |

+

shape (b, n1, D)

|

| 49 |

+

latents (torch.Tensor): latent features

|

| 50 |

+

shape (b, n2, D)

|

| 51 |

+

"""

|

| 52 |

+

x = self.norm1(x)

|

| 53 |

+

latents = self.norm2(latents)

|

| 54 |

+

|

| 55 |

+

b, l, _ = latents.shape

|

| 56 |

+

|

| 57 |

+

q = self.to_q(latents)

|

| 58 |

+

kv_input = torch.cat((x, latents), dim=-2)

|

| 59 |

+

k, v = self.to_kv(kv_input).chunk(2, dim=-1)

|

| 60 |

+

|

| 61 |

+

q = reshape_tensor(q, self.heads)

|

| 62 |

+

k = reshape_tensor(k, self.heads)

|

| 63 |

+

v = reshape_tensor(v, self.heads)

|

| 64 |

+

|

| 65 |

+

# attention

|

| 66 |

+

scale = 1 / math.sqrt(math.sqrt(self.dim_head))

|

| 67 |

+

weight = (q * scale) @ (k * scale).transpose(-2, -1) # More stable with f16 than dividing afterwards

|

| 68 |

+

weight = torch.softmax(weight.float(), dim=-1).type(weight.dtype)

|

| 69 |

+

out = weight @ v

|

| 70 |

+

|

| 71 |

+

out = out.permute(0, 2, 1, 3).reshape(b, l, -1)

|

| 72 |

+

|

| 73 |

+

return self.to_out(out)

|
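The attention step above multiplies both `q` and `k` by `dim_head ** -0.25` instead of dividing the product by `sqrt(dim_head)`. The two are algebraically the same; the sketch below checks that numerically with plain Python floats. It is an illustration under made-up inputs, not the commit's code, and the helpers `dot` and `softmax` are hypothetical. Note also that `self.scale` from `__init__` is not used by the `forward` shown above, which computes its own local `scale`.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


dim_head = 64
# Arbitrary illustrative query and three keys.
q = [0.5 * ((i % 7) - 3) for i in range(dim_head)]
keys = [[0.25 * (((i + s) % 5) - 2) for i in range(dim_head)] for s in range(3)]

scale = 1 / math.sqrt(math.sqrt(dim_head))  # dim_head ** -0.25, as in the diff
pre_scaled = [dot([v * scale for v in q], [v * scale for v in k]) for k in keys]
post_scaled = [dot(q, k) / math.sqrt(dim_head) for k in keys]

weights = softmax(pre_scaled)
```

Scaling before the product keeps intermediate values smaller, which is the motivation given in the inline comment about f16 stability.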

| 74 |

+

|

| 75 |

+

class Resampler(nn.Module):

|

| 76 |

+

def __init__(

|

| 77 |

+

self,

|

| 78 |

+

dim=1024,

|

| 79 |

+

depth=8,

|

| 80 |

+

dim_head=64,

|

| 81 |

+

heads=16,

|

| 82 |

+

num_queries=8,

|

| 83 |

+

embedding_dim=768,

|

| 84 |

+

output_dim=1024,

|

| 85 |

+

ff_mult=4,

|

| 86 |

+

):

|

| 87 |

+

super().__init__()

|

| 88 |

+

|

| 89 |

+

self.latents = nn.Parameter(torch.randn(1, num_queries, dim) / dim**0.5)

|

| 90 |

+

|

| 91 |

+

self.proj_in = nn.Linear(embedding_dim, dim)

|

| 92 |

+

|

| 93 |

+

self.proj_out = nn.Linear(dim, output_dim)

|

| 94 |

+

self.norm_out = nn.LayerNorm(output_dim)

|

| 95 |

+

|

| 96 |

+

self.layers = nn.ModuleList([])

|

| 97 |

+

for _ in range(depth):

|

| 98 |

+

self.layers.append(

|

| 99 |

+

nn.ModuleList(

|

| 100 |

+

[

|

| 101 |

+

PerceiverAttention(dim=dim, dim_head=dim_head, heads=heads),

|

| 102 |

+

FeedForward(dim=dim, mult=ff_mult),

|

| 103 |

+

]

|

| 104 |

+

)

|

| 105 |

+

)

|

| 106 |

+

|

| 107 |

+

def forward(self, x):

|

| 108 |

+

|

| 109 |

+

latents = self.latents.repeat(x.size(0), 1, 1)

|

| 110 |

+

|

| 111 |

+

x = self.proj_in(x)

|

| 112 |

+

|

| 113 |

+

for attn, ff in self.layers:

|

| 114 |

+

latents = attn(x, latents) + latents

|

| 115 |

+

latents = ff(latents) + latents

|

| 116 |

+

|

| 117 |

+

latents = self.proj_out(latents)

|

| 118 |

+

return self.norm_out(latents)

|
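The shapes flowing through `Resampler` can be traced without running the model. The sketch below is plain shape arithmetic derived from the code above; `resampler_shapes` is a hypothetical helper, and the token count of 257 in the usage line is only an assumed example input length.

```python
def resampler_shapes(batch, n_tokens, *, dim=1024, dim_head=64, heads=16,
                     num_queries=8, embedding_dim=768, output_dim=1024):
    """Return the tensor shapes at each stage of Resampler.forward."""
    inner_dim = dim_head * heads
    kv_len = n_tokens + num_queries  # keys/values see image tokens plus latents
    return {
        "x_in": (batch, n_tokens, embedding_dim),
        "x_proj": (batch, n_tokens, dim),            # after proj_in
        "latents": (batch, num_queries, dim),        # learned queries, repeated per batch item
        "q": (batch, heads, num_queries, dim_head),
        "k": (batch, heads, kv_len, dim_head),
        "v": (batch, heads, kv_len, dim_head),
        "attn_weight": (batch, heads, num_queries, kv_len),
        "attn_merged": (batch, num_queries, inner_dim),  # before to_out maps back to dim
        "output": (batch, num_queries, output_dim),      # after proj_out and norm_out
    }


shapes = resampler_shapes(batch=2, n_tokens=257)
for name, shape in shapes.items():
    print(f"{name:12s} {shape}")
```

Whatever the input length, the output always has `num_queries` tokens, which is what lets a variable number of image features be compressed to a fixed-size conditioning sequence.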

2D_Stage/tuneavideo/models/refunet.py

ADDED

|

@@ -0,0 +1,125 @@

|


| 1 |

+

import torch

|

| 2 |

+

from einops import rearrange

|

| 3 |

+

from typing import Any, Dict, Optional

|

| 4 |

+

from diffusers.utils.import_utils import is_xformers_available

|

| 5 |

+

from tuneavideo.models.transformer_mv2d import XFormersMVAttnProcessor, MVAttnProcessor

|

| 6 |

+

class ReferenceOnlyAttnProc(torch.nn.Module):

|

| 7 |

+

def __init__(

|

| 8 |

+

self,

|

| 9 |

+

chained_proc,

|

| 10 |

+

enabled=False,

|

| 11 |

+

name=None

|

| 12 |

+

) -> None:

|

| 13 |

+

super().__init__()

|

| 14 |

+

self.enabled = enabled

|

| 15 |

+

self.chained_proc = chained_proc

|

| 16 |

+

self.name = name

|

| 17 |

+

|

| 18 |

+

def __call__(

|

| 19 |

+

self, attn, hidden_states, encoder_hidden_states=None, attention_mask=None,

|

| 20 |

+

mode="w", ref_dict: Optional[dict] = None, is_cfg_guidance=False, num_views=4,

|

| 21 |

+

multiview_attention=True,

|

| 22 |

+

cross_domain_attention=False,

|

| 23 |

+

) -> Any:

|

| 24 |

+

if encoder_hidden_states is None:

|

| 25 |

+

encoder_hidden_states = hidden_states

|

| 26 |

+

# print(self.enabled)

|

| 27 |

+

if self.enabled:

|

| 28 |

+

if mode == 'w':

|

| 29 |

+

ref_dict[self.name] = encoder_hidden_states

|

| 30 |

+

res = self.chained_proc(attn, hidden_states, encoder_hidden_states, attention_mask, num_views=1,

|

| 31 |

+

multiview_attention=False,

|

| 32 |

+

cross_domain_attention=False,)

|

| 33 |

+

elif mode == 'r':

|

| 34 |

+

encoder_hidden_states = rearrange(encoder_hidden_states, '(b t) d c-> b (t d) c', t=num_views)

|

| 35 |

+

if self.name in ref_dict:

|

| 36 |

+

encoder_hidden_states = torch.cat([encoder_hidden_states, ref_dict.pop(self.name)], dim=1).unsqueeze(1).repeat(1,num_views,1,1).flatten(0,1)

|

| 37 |

+

res = self.chained_proc(attn, hidden_states, encoder_hidden_states, attention_mask, num_views=num_views,

|

| 38 |

+

multiview_attention=False,

|

| 39 |

+

cross_domain_attention=False,)

|

| 40 |

+

elif mode == 'm':

|

| 41 |

+

encoder_hidden_states = torch.cat([encoder_hidden_states, ref_dict[self.name]], dim=1)  # NOTE: this branch never assigns res, so the final return would raise UnboundLocalError

|

| 42 |

+

elif mode == 'n':

|

| 43 |

+

encoder_hidden_states = rearrange(encoder_hidden_states, '(b t) d c-> b (t d) c', t=num_views)

|

| 44 |

+

encoder_hidden_states = torch.cat([encoder_hidden_states], dim=1).unsqueeze(1).repeat(1,num_views,1,1).flatten(0,1)

|

| 45 |

+

res = self.chained_proc(attn, hidden_states, encoder_hidden_states, attention_mask, num_views=num_views,

|

| 46 |

+

multiview_attention=False,

|

| 47 |

+

cross_domain_attention=False,)

|

| 48 |

+

else:

|

| 49 |

+

assert False, mode

|

| 50 |

+

else:

|

| 51 |

+

res = self.chained_proc(attn, hidden_states, encoder_hidden_states, attention_mask)

|

| 52 |

+

return res

|
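The write/read protocol in `ReferenceOnlyAttnProc` can be sketched without tensors. In the toy version below, string tokens stand in for hidden states and Python lists stand in for the token axis; `RefProcSketch` is a hypothetical class written for illustration, and it returns the key/value context a view would attend over rather than running attention.

```python
class RefProcSketch:
    def __init__(self, name, enabled=True):
        self.name = name
        self.enabled = enabled

    def __call__(self, views, mode, ref_dict):
        """views: list of per-view token lists. Returns the context per view."""
        if not self.enabled:
            return [list(v) for v in views]
        if mode == "w":
            # Reference pass: remember this layer's tokens under its name.
            ref_dict[self.name] = list(views[0])
            return [list(views[0])]
        if mode == "r":
            # Merge the tokens of all views (mirrors '(b t) d c -> b (t d) c').
            merged = [tok for v in views for tok in v]
            if self.name in ref_dict:
                # Append the stored reference tokens and consume the entry.
                merged = merged + ref_dict.pop(self.name)
            # Every view attends over the same merged context.
            return [list(merged) for _ in views]
        raise ValueError(f"unknown mode: {mode!r}")


proc = RefProcSketch("down.attn1.processor")
ref_dict = {}
proc([["ref0", "ref1"]], "w", ref_dict)                         # write pass
contexts = proc([["a0", "a1"], ["b0", "b1"]], "r", ref_dict)    # read pass
print(contexts[0])
```

Because the read pass uses `pop`, each stored entry is consumed exactly once, so a fresh write pass is needed before every read pass, which is how `RefOnlyNoisedUNet.forward` uses it.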

| 53 |

+

|

| 54 |

+

class RefOnlyNoisedUNet(torch.nn.Module):

|

| 55 |

+

def __init__(self, unet, train_sched, val_sched) -> None:

|

| 56 |

+

super().__init__()

|

| 57 |

+

self.unet = unet

|

| 58 |

+

self.train_sched = train_sched

|

| 59 |

+

self.val_sched = val_sched

|

| 60 |

+

|

| 61 |

+

unet_lora_attn_procs = dict()

|

| 62 |

+

for name, _ in unet.attn_processors.items():

|

| 63 |

+

if is_xformers_available():

|

| 64 |

+

default_attn_proc = XFormersMVAttnProcessor()

|

| 65 |

+

else:

|

| 66 |

+

default_attn_proc = MVAttnProcessor()

|

| 67 |

+

unet_lora_attn_procs[name] = ReferenceOnlyAttnProc(

|

| 68 |

+

default_attn_proc, enabled=name.endswith("attn1.processor"), name=name)

|

| 69 |

+

|

| 70 |

+

self.unet.set_attn_processor(unet_lora_attn_procs)

|

| 71 |

+

|

| 72 |

+

def __getattr__(self, name: str):

|

| 73 |

+

try:

|

| 74 |

+

return super().__getattr__(name)

|

| 75 |

+

except AttributeError:

|

| 76 |

+

return getattr(self.unet, name)

|

| 77 |

+

|

| 78 |

+

def forward_cond(self, noisy_cond_lat, timestep, encoder_hidden_states, class_labels, ref_dict, is_cfg_guidance, **kwargs):

|

| 79 |

+

if is_cfg_guidance:

|

| 80 |

+

encoder_hidden_states = encoder_hidden_states[1:]

|

| 81 |

+

class_labels = class_labels[1:]

|

| 82 |

+

self.unet(

|

| 83 |

+

noisy_cond_lat, timestep,

|

| 84 |

+

encoder_hidden_states=encoder_hidden_states,

|

| 85 |

+

class_labels=class_labels,

|

| 86 |

+

cross_attention_kwargs=dict(mode="w", ref_dict=ref_dict),

|

| 87 |

+

**kwargs

|

| 88 |

+

)

|

| 89 |

+

|

| 90 |

+

def forward(

|

| 91 |

+

self, sample, timestep, encoder_hidden_states, class_labels=None,

|

| 92 |

+

*args, cross_attention_kwargs,

|

| 93 |

+

down_block_res_samples=None, mid_block_res_sample=None,

|

| 94 |

+

**kwargs

|

| 95 |

+

):

|

| 96 |

+

cond_lat = cross_attention_kwargs['cond_lat']

|

| 97 |

+

is_cfg_guidance = cross_attention_kwargs.get('is_cfg_guidance', False)

|

| 98 |

+

noise = torch.randn_like(cond_lat)

|

| 99 |

+

if self.training:

|

| 100 |

+

noisy_cond_lat = self.train_sched.add_noise(cond_lat, noise, timestep)

|

| 101 |

+

noisy_cond_lat = self.train_sched.scale_model_input(noisy_cond_lat, timestep)

|

| 102 |

+

else:

|

| 103 |

+

noisy_cond_lat = self.val_sched.add_noise(cond_lat, noise, timestep.reshape(-1))

|

| 104 |

+

noisy_cond_lat = self.val_sched.scale_model_input(noisy_cond_lat, timestep.reshape(-1))

|

| 105 |

+

ref_dict = {}

|

| 106 |

+

self.forward_cond(

|

| 107 |

+

noisy_cond_lat, timestep,

|

| 108 |

+

encoder_hidden_states, class_labels,

|

| 109 |

+

ref_dict, is_cfg_guidance, **kwargs

|

| 110 |

+

)

|

| 111 |

+

weight_dtype = self.unet.dtype

|

| 112 |

+

return self.unet(

|

| 113 |

+

sample, timestep,

|

| 114 |

+

encoder_hidden_states, *args,

|

| 115 |

+

class_labels=class_labels,

|

| 116 |

+

cross_attention_kwargs=dict(mode="r", ref_dict=ref_dict, is_cfg_guidance=is_cfg_guidance),

|

| 117 |

+

down_block_additional_residuals=[

|

| 118 |

+

sample.to(dtype=weight_dtype) for sample in down_block_res_samples

|

| 119 |

+

] if down_block_res_samples is not None else None,

|

| 120 |

+

mid_block_additional_residual=(

|

| 121 |

+

mid_block_res_sample.to(dtype=weight_dtype)

|

| 122 |

+

if mid_block_res_sample is not None else None

|

| 123 |

+

),

|

| 124 |

+

**kwargs

|

| 125 |

+

)

|
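The `__getattr__` override in `RefOnlyNoisedUNet` above forwards unknown attribute lookups to the wrapped UNet. A minimal sketch of that delegation pattern with plain Python classes follows; `InnerSketch` and `WrapperSketch` are hypothetical names, and the `in_channels` value is made up. The source calls `super().__getattr__` first because `torch.nn.Module` keeps submodules and parameters outside the instance `__dict__`; a plain class does not need that step.

```python
class InnerSketch:
    def __init__(self):
        self.config = {"in_channels": 4}


class WrapperSketch:
    def __init__(self, inner):
        self.inner = inner
        self.own = "wrapper attribute"

    def __getattr__(self, name):
        # __getattr__ runs only after normal attribute lookup has failed.
        if name == "inner":
            # Guard against infinite recursion if `inner` was never set.
            raise AttributeError(name)
        return getattr(self.inner, name)


wrapped = WrapperSketch(InnerSketch())
print(wrapped.own)     # found on the wrapper itself
print(wrapped.config)  # not on the wrapper, so forwarded to the inner object
```

The effect is that callers can treat the wrapper as if it were the wrapped model for read access, while names missing from both objects still raise `AttributeError`.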

2D_Stage/tuneavideo/models/resnet.py

ADDED

|

@@ -0,0 +1,210 @@

|


| 1 |

+

# Adapted from https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/resnet.py

|

| 2 |

+

|

| 3 |

+

import torch

|

| 4 |

+

import torch.nn as nn

|

| 5 |

+

import torch.nn.functional as F

|

| 6 |

+

|

| 7 |

+

from einops import rearrange

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

class InflatedConv3d(nn.Conv2d):

|

| 11 |

+

def forward(self, x):

|

| 12 |

+

video_length = x.shape[2]

|

| 13 |

+

|

| 14 |

+

x = rearrange(x, "b c f h w -> (b f) c h w")

|

| 15 |

+

x = super().forward(x)

|

| 16 |

+

x = rearrange(x, "(b f) c h w -> b c f h w", f=video_length)

|

| 17 |

+

|

| 18 |

+

return x

|
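`InflatedConv3d` applies an ordinary 2D convolution to every frame by folding the frame axis into the batch axis and unfolding it afterwards. The index arithmetic behind the `"b c f h w -> (b f) c h w"` pattern is sketched below in plain Python; `fold_index` and `unfold_index` are hypothetical helpers, relying on the einops convention that the leftmost name in a group varies slowest.

```python
def fold_index(b, f, num_frames):
    """Position of (batch b, frame f) on the merged '(b f)' axis."""
    return b * num_frames + f


def unfold_index(n, num_frames):
    """Inverse mapping: merged index -> (batch, frame)."""
    return divmod(n, num_frames)


batch, frames = 2, 3
order = [(b, f) for b in range(batch) for f in range(frames)]
folded = [fold_index(b, f, frames) for b, f in order]
restored = [unfold_index(n, frames) for n in folded]
print(folded)
print(restored)
```

Since the frames of one sample stay contiguous on the merged axis, passing `f=video_length` to the second `rearrange` is enough to recover the original layout.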

| 19 |

+

|

| 20 |

+

|

| 21 |

+

class Upsample3D(nn.Module):

|

| 22 |

+

def __init__(self, channels, use_conv=False, use_conv_transpose=False, out_channels=None, name="conv"):

|

| 23 |

+

super().__init__()

|

| 24 |

+

self.channels = channels

|

| 25 |

+