Spaces:

Runtime error

Runtime error

Dalia Gala

commited on

Commit

·

686717d

1

Parent(s):

2f1a119

Add application file

Browse files- .DS_Store +0 -0

- .Rhistory +0 -0

- data/.DS_Store +0 -0

- data/dataframe.csv +0 -0

- images/.DS_Store +0 -0

- images/.ipynb_checkpoints/pie_charts-checkpoint.png +0 -0

- images/.ipynb_checkpoints/tests-checkpoint.png +0 -0

- images/pie_charts.png +0 -0

- images/tests.png +0 -0

- pages/.DS_Store +0 -0

- pages/.ipynb_checkpoints/1_📊_Define_Target_Variables-checkpoint.py +266 -0

- pages/.ipynb_checkpoints/2_📈_Visualize_the_Results-checkpoint.py +593 -0

- pages/.ipynb_checkpoints/3_💡_Put_the_Idea_into_Practice-checkpoint.py +47 -0

- pages/1_📊_Define_Target_Variables.py +266 -0

- pages/2_📈_Visualize_the_Results.py +593 -0

- pages/3_💡_Put_the_Idea_into_Practice.py +57 -0

- requirements.txt +20 -0

- utils.py +255 -0

- 🏠Home.py +58 -0

.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

.Rhistory

ADDED

|

File without changes

|

data/.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

data/dataframe.csv

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

images/.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

images/.ipynb_checkpoints/pie_charts-checkpoint.png

ADDED

|

images/.ipynb_checkpoints/tests-checkpoint.png

ADDED

|

images/pie_charts.png

ADDED

|

images/tests.png

ADDED

|

pages/.DS_Store

ADDED

|

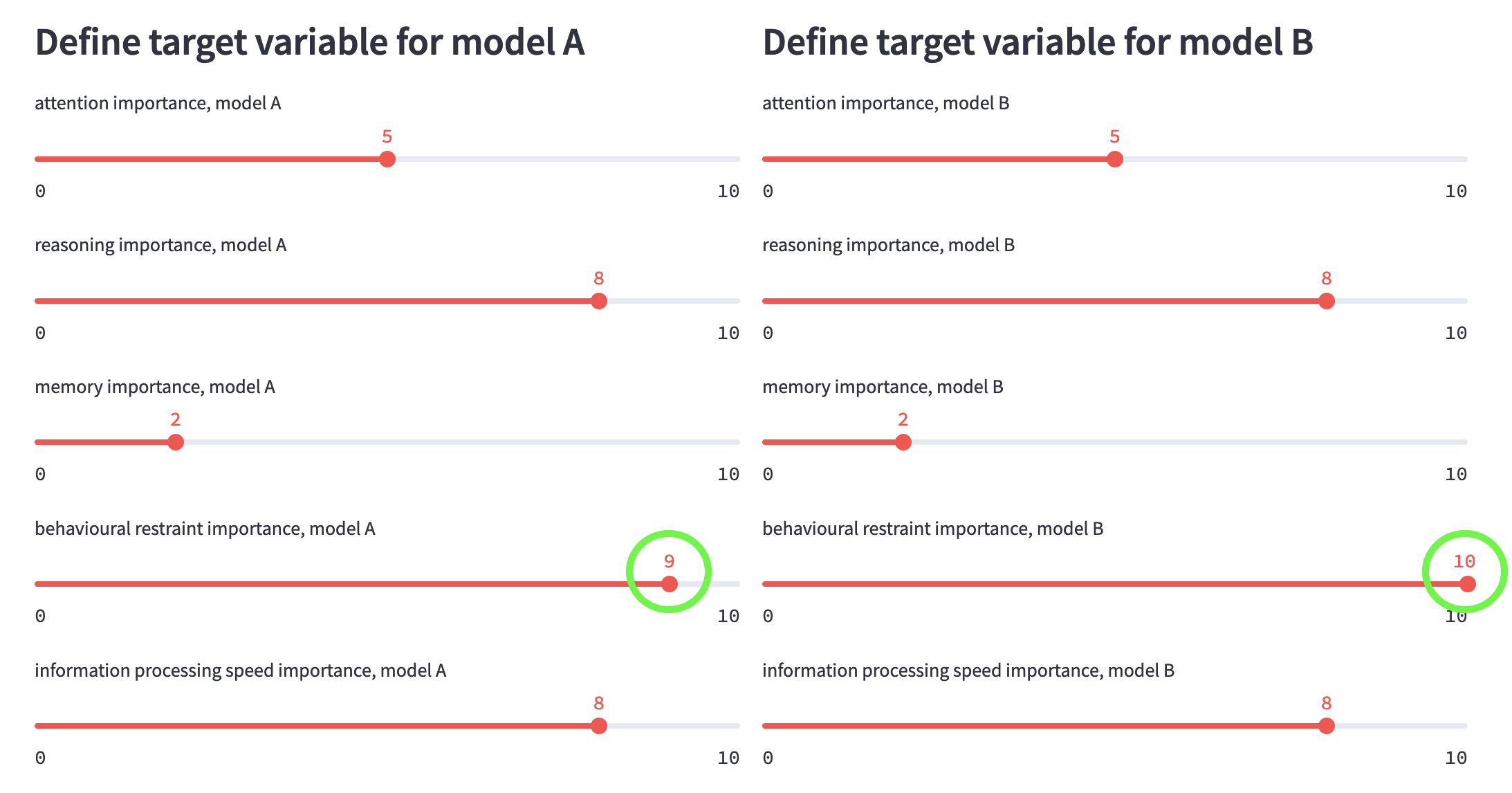

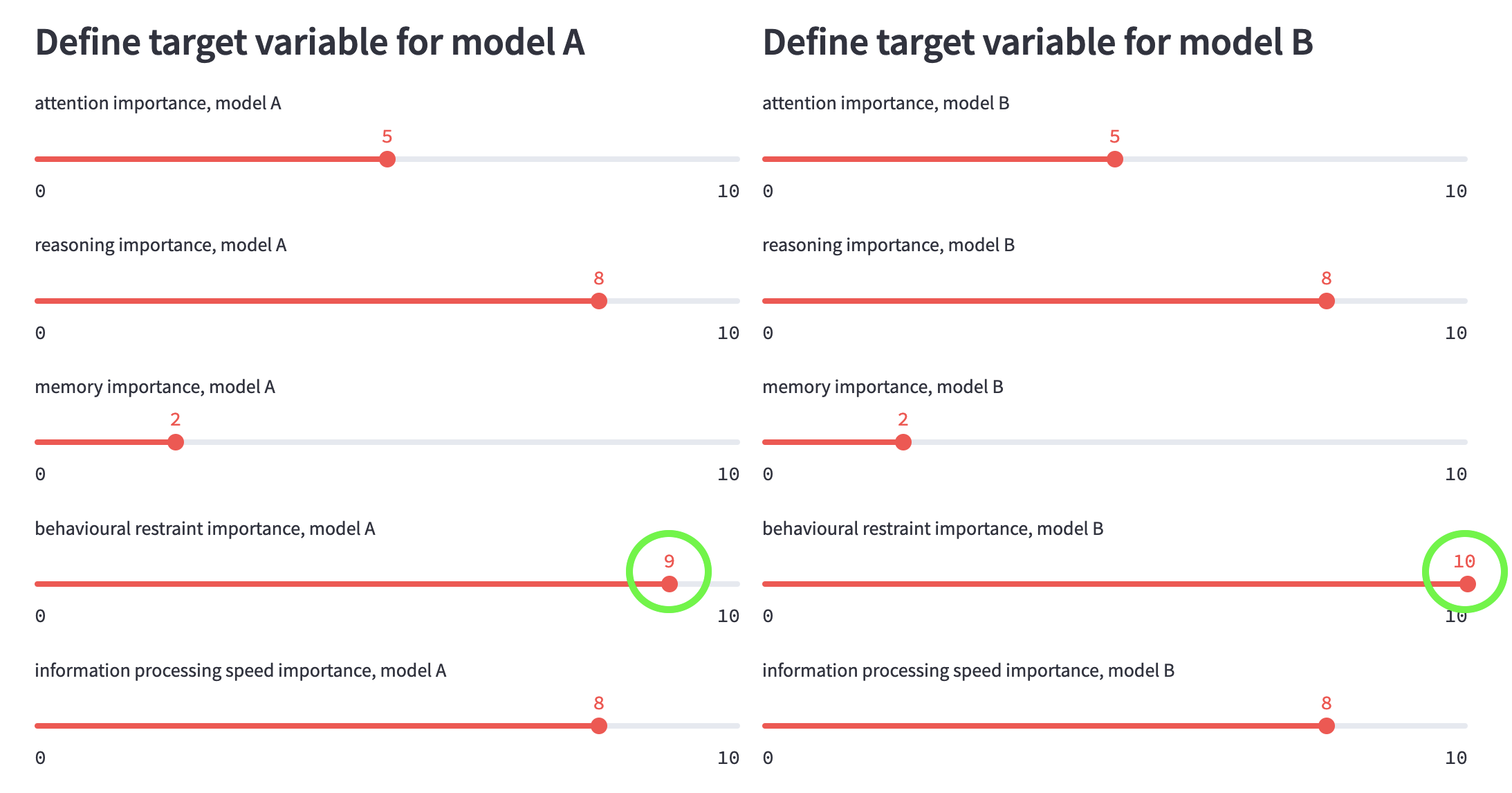

Binary file (6.15 kB). View file

|

|

|

pages/.ipynb_checkpoints/1_📊_Define_Target_Variables-checkpoint.py

ADDED

|

@@ -0,0 +1,266 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

# -*- coding: utf-8 -*-

|

| 3 |

+

"""

|

| 4 |

+

Created on Thu May 25 15:16:50 2023

|

| 5 |

+

|

| 6 |

+

@author: daliagala

|

| 7 |

+

"""

|

| 8 |

+

|

| 9 |

+

### LIBRARIES ###

|

| 10 |

+

import streamlit as st

|

| 11 |

+

import pandas as pd

|

| 12 |

+

from sklearn.model_selection import train_test_split

|

| 13 |

+

from utils import assign_labels_by_probabilities, drop_data, train_and_predict

|

| 14 |

+

|

| 15 |

+

### PAGE CONFIG ###

|

| 16 |

+

st.set_page_config(page_title='EquiVar', page_icon=':robot_face:', layout='wide')

|

| 17 |

+

|

| 18 |

+

hide_st_style = """

|

| 19 |

+

<style>

|

| 20 |

+

#GithubIcon {visibility: hidden;}

|

| 21 |

+

#MainMenu {visibility: hidden;}

|

| 22 |

+

footer {visibility: hidden;}

|

| 23 |

+

header {visibility: hidden;}

|

| 24 |

+

</style>

|

| 25 |

+

"""

|

| 26 |

+

st.markdown(hide_st_style, unsafe_allow_html=True)

|

| 27 |

+

|

| 28 |

+

### IMPORT DATA FILES ###

|

| 29 |

+

dataframe = pd.read_csv('./data/dataframe.csv')

|

| 30 |

+

dataframe = dataframe.drop(["Unnamed: 0"], axis = 1)

|

| 31 |

+

dataframe = dataframe.rename(columns={"education_level": "education level"})

|

| 32 |

+

|

| 33 |

+

### DICTIONARIES AND CONSTANTS###

|

| 34 |

+

groups = ["attention", "reasoning", "memory", "behavioural restraint", "information processing speed"]

|

| 35 |

+

|

| 36 |

+

groups_dict = {

|

| 37 |

+

'Divided Visual Attention' : 'attention',

|

| 38 |

+

'Forward Memory Span': 'memory',

|

| 39 |

+

'Arithmetic Reasoning' : 'reasoning',

|

| 40 |

+

'Grammatical Reasoning' : 'reasoning',

|

| 41 |

+

'Go/No go' : 'behavioural restraint',

|

| 42 |

+

'Reverse_Memory_Span' : 'memory',

|

| 43 |

+

'Verbal List Learning' : 'memory',

|

| 44 |

+

'Delayed Verbal List Learning' : 'memory',

|

| 45 |

+

'Digit Symbol Coding' : 'information processing speed',

|

| 46 |

+

'Trail Making Part A' : 'information processing speed',

|

| 47 |

+

'Trail Making Part B' : 'information processing speed'

|

| 48 |

+

}

|

| 49 |

+

|

| 50 |

+

education_dict = {

|

| 51 |

+

1: 'Some high school',

|

| 52 |

+

2: 'High school diploma / GED',

|

| 53 |

+

3: 'Some college',

|

| 54 |

+

4: 'College degree',

|

| 55 |

+

5: 'Professional degree',

|

| 56 |

+

6: "Master's degree",

|

| 57 |

+

7: 'Ph.D.',

|

| 58 |

+

8: "Associate's degree",

|

| 59 |

+

99: 'Other'

|

| 60 |

+

}

|

| 61 |

+

|

| 62 |

+

df_keys_dict = {

|

| 63 |

+

'Divided Visual Attention' :'divided_visual_attention',

|

| 64 |

+

'Forward Memory Span' :'forward_memory_span',

|

| 65 |

+

'Arithmetic Reasoning' : 'arithmetic_problem_solving',

|

| 66 |

+

'Grammatical Reasoning' : 'logical_reasoning',

|

| 67 |

+

'Go/No go': 'adaptive_behaviour_response_inhibition',

|

| 68 |

+

'Reverse_Memory_Span' : 'reverse_memory_span',

|

| 69 |

+

'Verbal List Learning': 'episodic_verbal_learning',

|

| 70 |

+

'Delayed Verbal List Learning': 'delayed_recall',

|

| 71 |

+

'Digit Symbol Coding': 'abstract_symbol_processing_speed',

|

| 72 |

+

'Trail Making Part A' :'numerical_info_processing_speed',

|

| 73 |

+

'Trail Making Part B': 'numerical_and_lexical_info_processing_speed'

|

| 74 |

+

}

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

### CREATE THE "TARGET VARIABLE DEFINITION" PAGE ###

|

| 78 |

+

st.title('Target variable definition')

|

| 79 |

+

|

| 80 |

+

st.markdown('''On this page, we invite you to imagine that you are hiring for a certain role. Using the sliders below, you will specify two different notions of a “good employee” for that role—two different target variables. Once you’re done, the simulator will build two models, one for each of your target variable definitions. You can visualize these datasets and models—and their effects on fairness and overall features of the models and data—in the Visualize the Results page.''')

|

| 81 |

+

|

| 82 |

+

st.markdown('''You specify the notions of different employees by assigning weights of importance to cognitive characteristics that a “good” employee would have for the role. The cognitive test data that you’ll be working with comes from real-world people, mirroring an increasing number of hiring algorithms that are based cognitive tests ([Wilson, et al. 2021](https://dl.acm.org/doi/10.1145/3442188.3445928)).''')

|

| 83 |

+

|

| 84 |

+

st.markdown('''We have pre-set the weights below to reflect two different conceptions of a “good” employee: one conception that emphasizes attentiveness and numerical skills (A) and another conception that emphasizes interpersonal skills and memory (B). If you want to, you can change the slider values as you see fit.''')

|

| 85 |

+

|

| 86 |

+

st.markdown('''After you’ve set the slider values, click :red[“Assign labels and train your models”]. The simulator will then label certain individuals as "good employees"—in other words it will assign class labels "1" for successful and "0" for not good, based on your selections. Then, the simulator will build the two models.''')

|

| 87 |

+

|

| 88 |

+

st.markdown('''To learn more about target variable definition and hiring models based in cognitive tests, :green["See explanation"] below.''')

|

| 89 |

+

|

| 90 |

+

with st.expander("See explanation"):

|

| 91 |

+

|

| 92 |

+

st.markdown('''The models that the simulator builds are of a kind increasingly used in hiring software: companies will have applicants play games that test for different kinds of cognitive ability (like reasoning or memory). Then hiring software will be built to predict which applicants will be successful based on which cognitive characteristics they have. What cognitive characteristics make for a successful employee? This will depend on what role is being hired. And it will also depend on how one defines “successful employee.”''')

|

| 93 |

+

|

| 94 |

+

st.markdown('''In the real world, “successful employee” is defined for these kinds of hiring models in the following way. Managers select a group of current employees that they consider to be successful; this group of employees plays the cognitive test games. A model is then trained to identify applicants who share cognitive characteristics with the current employees that are considered successful. The target variable of “successful employee” is thus defined in terms of comparison to certain people who are deemed successful. ''')

|

| 95 |

+

|

| 96 |

+

st.markdown('''One will get different target variables if one deems different current employees as the successful ones. And, as we discussed in the Home page (and as we explain more in the Putting the Idea into Practice page), there will likely be disagreement between managers about which employees are successful. For instance, a manager who values attentiveness and numerical skills will deem different employees “successful” than a manager who values interpersonal skills and memory. Even when different managers roughly share their sensibilities in what characteristics make for a successful employee, there may still be different, equally good ways to “weight” the importance of the various characteristics.''')

|

| 97 |

+

|

| 98 |

+

st.markdown('''In the real world, the cognitive characteristics shared by those considered successful employees is implicit. Companies do not first identify the cognitive characteristics that make for a successful employee; rather, they identify employees who they consider successful, and then the hiring model works backwards to identify what characteristics these employees share.''')

|

| 99 |

+

|

| 100 |

+

st.markdown('''In our simulator, the cognitive characteristics shared by “good” employees are explicit. You assign different weights—using the sliders—to the cognitive characteristics you think are more or less important in a good employee (for the role you’re considering). To illustrate how different target variables have different effects on fairness and overall model attributes, you’ll define “good” employee in two ways. (We’ve made the cognitive characteristics explicit both so you can see the point of different target variable definitions more clearly, and because of limitations of the data that we’re working with.)''')

|

| 101 |

+

|

| 102 |

+

st.markdown('''The cognitive characteristics that the simulator works with are from one of datasets of the [NeuroCognitive Performance Test](https://www.nature.com/articles/s41597-022-01872-8). This dataset has eleven different tests which we have grouped into five categories: ''')

|

| 103 |

+

|

| 104 |

+

st.markdown(

|

| 105 |

+

"""

|

| 106 |

+

- **Memory**: Forward Memory Span, Reverse Memory Span, Verbal List Learning, Delayed Verbal List Learning

|

| 107 |

+

- **Information Processing Speed**: Digit Symbol Coding, Trail Making Part A, Trail Making Part B

|

| 108 |

+

- **Reasoning**: Arithmetic Reasoning, Grammatical Reasoning

|

| 109 |

+

- **Attention**: Divided Visual Attention

|

| 110 |

+

- **Behavioral Restraint**: Go/No go

|

| 111 |

+

""")

|

| 112 |

+

|

| 113 |

+

st.markdown('''After you’ve set the weights to these five characteristics using the sliders, you can see which weights are assigned to each test (e.g. Forward Memory Span or Digit Symbol Coding) by ticking the checkbox beneath the sliders. ''')

|

| 114 |

+

|

| 115 |

+

col1, col2 = st.columns(2)

|

| 116 |

+

|

| 117 |

+

#Initialise slider values

|

| 118 |

+

list_values_A = (9, 10, 2, 1, 5)

|

| 119 |

+

list_values_B = (1, 2, 10, 9, 3)

|

| 120 |

+

|

| 121 |

+

selectionsA = {}

|

| 122 |

+

selectionsB = {}

|

| 123 |

+

results_dict_A = groups_dict

|

| 124 |

+

results_dict_B = groups_dict

|

| 125 |

+

|

| 126 |

+

with col1:

|

| 127 |

+

st.subheader("Define target variable for model A ")

|

| 128 |

+

|

| 129 |

+

if "slider_values_A" not in st.session_state:

|

| 130 |

+

for count, value in enumerate(groups):

|

| 131 |

+

selectionsA[value] = list_values_A[count]

|

| 132 |

+

st.session_state["slider_values_A"] = selectionsA

|

| 133 |

+

else:

|

| 134 |

+

selectionsA = st.session_state["slider_values_A"]

|

| 135 |

+

|

| 136 |

+

for i in groups:

|

| 137 |

+

nameA = f"{i} importance, model A"

|

| 138 |

+

value = selectionsA[i]

|

| 139 |

+

slider = st.slider(nameA, min_value=0, max_value=10, value = value)

|

| 140 |

+

selectionsA[i] = slider

|

| 141 |

+

|

| 142 |

+

results_dict_A = {k: selectionsA.get(v, v) for k, v in results_dict_A.items()}

|

| 143 |

+

total = sum(results_dict_A.values())

|

| 144 |

+

for (key, u) in results_dict_A.items():

|

| 145 |

+

if total != 0:

|

| 146 |

+

w = (u/total)

|

| 147 |

+

results_dict_A[key] = w

|

| 148 |

+

|

| 149 |

+

if st.checkbox("Show target variable A weights per subtest", key="A"):

|

| 150 |

+

for (key, u) in results_dict_A.items():

|

| 151 |

+

txt = key.replace("_", " ")

|

| 152 |

+

st.markdown("- " + txt + " : " + f":green[{str(round((u*100), 2))}]")

|

| 153 |

+

|

| 154 |

+

|

| 155 |

+

st.session_state["slider_values_A"] = selectionsA

|

| 156 |

+

|

| 157 |

+

|

| 158 |

+

with col2:

|

| 159 |

+

st.subheader("Define target variable for model B ")

|

| 160 |

+

|

| 161 |

+

if "slider_values_B" not in st.session_state:

|

| 162 |

+

for count, value in enumerate(groups):

|

| 163 |

+

selectionsB[value] = list_values_B[count]

|

| 164 |

+

st.session_state["slider_values_B"] = selectionsB

|

| 165 |

+

else:

|

| 166 |

+

selectionsB = st.session_state["slider_values_B"]

|

| 167 |

+

|

| 168 |

+

for i in groups:

|

| 169 |

+

nameB = f"{i} importance, model B"

|

| 170 |

+

value = selectionsB[i]

|

| 171 |

+

slider = st.slider(nameB, min_value=0, max_value=10, value = value)

|

| 172 |

+

selectionsB[i] = slider

|

| 173 |

+

|

| 174 |

+

results_dict_B = {k: selectionsB.get(v, v) for k, v in results_dict_B.items()}

|

| 175 |

+

total = sum(results_dict_B.values())

|

| 176 |

+

for (key, u) in results_dict_B.items():

|

| 177 |

+

if total != 0:

|

| 178 |

+

w = ((u/total))

|

| 179 |

+

results_dict_B[key] = w

|

| 180 |

+

|

| 181 |

+

if st.checkbox("Show target variable B weights per subtest", key = "B"):

|

| 182 |

+

for (key, u) in results_dict_B.items():

|

| 183 |

+

txt = key.replace("_", " ")

|

| 184 |

+

st.markdown("- " + txt + " : " + f":green[{str(round((u*100), 2))}]")

|

| 185 |

+

|

| 186 |

+

st.session_state["slider_values_B"] = selectionsB

|

| 187 |

+

|

| 188 |

+

if st.button("Assign labels and train your models", type = "primary", use_container_width = True):

|

| 189 |

+

if 'complete_df' in st.session_state:

|

| 190 |

+

del st.session_state['complete_df']

|

| 191 |

+

if 'clean_df' in st.session_state:

|

| 192 |

+

del st.session_state['clean_df']

|

| 193 |

+

if 'cm_A' in st.session_state:

|

| 194 |

+

del st.session_state['cm_A']

|

| 195 |

+

if 'cm_B' in st.session_state:

|

| 196 |

+

del st.session_state['cm_B']

|

| 197 |

+

scoreA = pd.DataFrame()

|

| 198 |

+

scoreB = pd.DataFrame()

|

| 199 |

+

test1 = all(value == 0 for value in results_dict_A.values())

|

| 200 |

+

test2 = all(value == 0 for value in results_dict_B.values())

|

| 201 |

+

if test1 == True or test2 == True:

|

| 202 |

+

st.error('Cannot train the models if you do not define the target variables. Make your selections for both models first!', icon="🚨")

|

| 203 |

+

else:

|

| 204 |

+

for (key, u) in results_dict_A.items():

|

| 205 |

+

scoreA[df_keys_dict[key]] = u * dataframe[df_keys_dict[key]]

|

| 206 |

+

scoresA = scoreA.sum(axis=1)

|

| 207 |

+

dataframe['model_A_scores'] = scoresA

|

| 208 |

+

for (key, u) in results_dict_B.items():

|

| 209 |

+

scoreB[df_keys_dict[key]] = u * dataframe[df_keys_dict[key]]

|

| 210 |

+

scoresB = scoreB.sum(axis=1)

|

| 211 |

+

dataframe['model_B_scores'] = scoresB

|

| 212 |

+

|

| 213 |

+

new_annotated = assign_labels_by_probabilities(dataframe, "model_A_scores", "Model_A_label", "Model_A_probabilities", quantile=0.85, num_samples=100)

|

| 214 |

+

new_annotated = assign_labels_by_probabilities(new_annotated, "model_B_scores", "Model_B_label", "Model_B_probabilities", quantile=0.85, num_samples=100)

|

| 215 |

+

new_annotated = new_annotated.reset_index()

|

| 216 |

+

|

| 217 |

+

|

| 218 |

+

clean_data = drop_data(new_annotated)

|

| 219 |

+

# specify the columns of interest

|

| 220 |

+

selected_cols = ['Model_A_label', 'Model_B_label']

|

| 221 |

+

|

| 222 |

+

# count the number of rows where all three selected columns have a value of 1

|

| 223 |

+

num_rows_with_all_flags_1 = len(new_annotated[new_annotated[selected_cols].sum(axis=1) == len(selected_cols)])

|

| 224 |

+

|

| 225 |

+

# print the result

|

| 226 |

+

st.write(f"Shared candidates between your target variables: :green[{num_rows_with_all_flags_1}].")

|

| 227 |

+

with st.spinner('Please wait... The models will be trained now.'):

|

| 228 |

+

|

| 229 |

+

X_data, Y_data_A, Y_data_B = clean_data.iloc[:, :-2], clean_data.iloc[:, [-2]], clean_data.iloc[:, [-1]]

|

| 230 |

+

X_data = X_data.drop(["index"], axis = 1)

|

| 231 |

+

Y_data_B = Y_data_B.reset_index()

|

| 232 |

+

X_train, X_test, y_train_A, y_test_A = train_test_split(X_data, Y_data_A, test_size=0.2)

|

| 233 |

+

y_train_A = y_train_A.reset_index()

|

| 234 |

+

y_test_A = y_test_A.reset_index()

|

| 235 |

+

y_train_B = pd.merge(y_train_A,Y_data_B[['index', 'Model_B_label']],on='index', how='left')

|

| 236 |

+

y_test_B = pd.merge(y_test_A,Y_data_B[['index', 'Model_B_label']],on='index', how='left')

|

| 237 |

+

y_train_B = y_train_B.drop(labels='Model_A_label', axis = 1)

|

| 238 |

+

y_test_B = y_test_B.drop(labels='Model_A_label', axis = 1)

|

| 239 |

+

y_train_A = y_train_A.set_index("index")

|

| 240 |

+

y_train_B = y_train_B.set_index("index")

|

| 241 |

+

y_test_A = y_test_A.set_index("index")

|

| 242 |

+

y_test_B = y_test_B.set_index("index")

|

| 243 |

+

|

| 244 |

+

accuracy_A, precision_A, recall_A, X_full_A, cm_A, baseline_accuracy_A = train_and_predict("A", X_train, X_test, y_train_A, y_test_A)

|

| 245 |

+

accuracy_B, precision_B, recall_B, X_full_B, cm_B, baseline_accuracy_B = train_and_predict("B", X_train, X_test, y_train_B, y_test_B)

|

| 246 |

+

full = pd.merge(X_full_A,X_full_B[['index','Predicted_B', 'Prob_0_B', "Prob_1_B"]],on='index', how='left')

|

| 247 |

+

complete = pd.merge(full,new_annotated[['index', 'age', 'gender', 'education level', 'country', 'Model_A_label', 'Model_B_label', 'model_A_scores', 'model_B_scores']],on='index', how='left')

|

| 248 |

+

complete=complete.replace({"education level": education_dict})

|

| 249 |

+

complete = complete.rename(columns={"index": "Candidate ID"})

|

| 250 |

+

|

| 251 |

+

if 'complete_df' not in st.session_state:

|

| 252 |

+

st.session_state['complete_df'] = complete

|

| 253 |

+

if 'clean_df' not in st.session_state:

|

| 254 |

+

st.session_state['clean_df'] = clean_data

|

| 255 |

+

if 'cm_A' not in st.session_state:

|

| 256 |

+

st.session_state['cm_A'] = cm_A

|

| 257 |

+

if 'cm_B' not in st.session_state:

|

| 258 |

+

st.session_state['cm_B'] = cm_B

|

| 259 |

+

|

| 260 |

+

row1_space1, row1_1, row1_space2, row1_2, row1_space3 = st.columns((0.1, 3, 0.1, 3, 0.1))

|

| 261 |

+

with row1_1:

|

| 262 |

+

st.write(f"Model A accuracy: :green[{baseline_accuracy_A}].")

|

| 263 |

+

with row1_2:

|

| 264 |

+

st.write(f"Model B accuracy: :green[{baseline_accuracy_B}].")

|

| 265 |

+

|

| 266 |

+

st.success('''Success! You have defined the target variables and trained your models. Head to "Visualise the Results" in the sidebar.''')

|

pages/.ipynb_checkpoints/2_📈_Visualize_the_Results-checkpoint.py

ADDED

|

@@ -0,0 +1,593 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

# -*- coding: utf-8 -*-

|

| 3 |

+

"""

|

| 4 |

+

Created on Thu May 25 15:30:56 2023

|

| 5 |

+

|

| 6 |

+

@author: daliagala

|

| 7 |

+

"""

|

| 8 |

+

|

| 9 |

+

### LIBRARIES ###

|

| 10 |

+

import streamlit as st

|

| 11 |

+

import numpy as np

|

| 12 |

+

import pandas as pd

|

| 13 |

+

import matplotlib.pyplot as plt

|

| 14 |

+

import plotly.express as px

|

| 15 |

+

import plotly.graph_objects as go

|

| 16 |

+

from matplotlib_venn import venn2

|

| 17 |

+

from sklearn.metrics import confusion_matrix

|

| 18 |

+

from utils import display_proportional, plot_data, run_PCA, create_confusion_matrix_heatmap, plot_conf_rates

|

| 19 |

+

|

| 20 |

+

### PAGE CONFIG ###

|

| 21 |

+

st.set_page_config(page_title='EquiVar', page_icon=':robot_face:', layout='wide')

|

| 22 |

+

|

| 23 |

+

hide_st_style = """

|

| 24 |

+

<style>

|

| 25 |

+

#GithubIcon {visibility: hidden;}

|

| 26 |

+

#MainMenu {visibility: hidden;}

|

| 27 |

+

footer {visibility: hidden;}

|

| 28 |

+

header {visibility: hidden;}

|

| 29 |

+

</style>

|

| 30 |

+

"""

|

| 31 |

+

st.markdown(hide_st_style, unsafe_allow_html=True)

|

| 32 |

+

|

| 33 |

+

### DICTIONARIES AND CONSTANTS###

|

| 34 |

+

|

| 35 |

+

colours_education = {

|

| 36 |

+

'Some high school' : 'indigo',

|

| 37 |

+

'High school diploma / GED' : '#7ae99e',

|

| 38 |

+

'Some college' : '#0a68c9',

|

| 39 |

+

'College degree': '#80c4fa',

|

| 40 |

+

'Professional degree': '#f48508',

|

| 41 |

+

"Master's degree" : '#2fa493',

|

| 42 |

+

'Ph.D.' : '#f2a3a1',

|

| 43 |

+

"Associate's degree" : '#fbcc66',

|

| 44 |

+

'Other' : '#fa322f'

|

| 45 |

+

}

|

| 46 |

+

|

| 47 |

+

colours_country = {

|

| 48 |

+

'AU' : '#7ae99e',

|

| 49 |

+

'US': '#80c4fa',

|

| 50 |

+

'NZ': '#2fa493',

|

| 51 |

+

'CA' : '#fbcc66'

|

| 52 |

+

}

|

| 53 |

+

|

| 54 |

+

colours_gender = {

|

| 55 |

+

'f' : '#83C9FF',

|

| 56 |

+

'm': '#0067C9'

|

| 57 |

+

}

|

| 58 |

+

|

| 59 |

+

characteristic_dict = {

|

| 60 |

+

'gender' : colours_gender,

|

| 61 |

+

'education level' : colours_education,

|

| 62 |

+

'country' : colours_country,

|

| 63 |

+

'age' : 'indigo'

|

| 64 |

+

}

|

| 65 |

+

|

| 66 |

+

pred_dict = {

|

| 67 |

+

'Model A' : 'Predicted_A',

|

| 68 |

+

'Model B' : 'Predicted_B'

|

| 69 |

+

}

|

| 70 |

+

|

| 71 |

+

prob_dict = {

|

| 72 |

+

'Model A' : 'Prob_1_A',

|

| 73 |

+

'Model B' : 'Prob_1_B'

|

| 74 |

+

}

|

| 75 |

+

|

| 76 |

+

model_dict = {

|

| 77 |

+

'Model A' : 'Model_A_label',

|

| 78 |

+

'Model B' : 'Model_B_label'

|

| 79 |

+

}

|

| 80 |

+

|

| 81 |

+

df_keys_dict = {

|

| 82 |

+

'Divided Visual Attention' :'divided_visual_attention',

|

| 83 |

+

'Forward Memory Span' :'forward_memory_span',

|

| 84 |

+

'Arithmetic Reasoning' : 'arithmetic_problem_solving',

|

| 85 |

+

'Grammatical Reasoning' : 'logical_reasoning',

|

| 86 |

+

'Go/No go': 'adaptive_behaviour_response_inhibition',

|

| 87 |

+

'Reverse_Memory_Span' : 'reverse_memory_span',

|

| 88 |

+

'Verbal List Learning': 'episodic_verbal_learning',

|

| 89 |

+

'Delayed Verbal List Learning': 'delayed_recall',

|

| 90 |

+

'Digit Symbol Coding': 'abstract_symbol_processing_speed',

|

| 91 |

+

'Trail Making Part A' :'numerical_info_processing_speed',

|

| 92 |

+

'Trail Making Part B': 'numerical_and_lexical_info_processing_speed'

|

| 93 |

+

}

|

| 94 |

+

|

| 95 |

+

### DEFINE ALL SUB-PAGES AS FUNCTIONS TO CALL ###

|

| 96 |

+

|

| 97 |

+

def mod_prop(cmA, cmB):

|

| 98 |

+

st.markdown('''This section contains model confusion matrices, which give us a lot of information about how good the models which we produced really are. We obtain the confusion matrices by plotting "Actual" labels on one axis, and "Predicted" labels on the other. For both models, in each square of each confusion matrix, we can see which group of candidates it represents, the number of candidates in this group, and what percentage of all candidates assessed they represent.''')

|

| 99 |

+

st.markdown('''

|

| 100 |

+

- **True Negative (TN)**: candidates predicted to have label "0" whose actual label was "0".

|

| 101 |

+

- **False Positive (FP)**: candidates predicted to have label "1" whose actual label was "0".

|

| 102 |

+

- **False Negative (FN)** candidates predicted to have label "0" whose actual label was "1".

|

| 103 |

+

- **True Positive (TP)**: candidates predicted to have label "1" whose actual label was "1".

|

| 104 |

+

''')

|

| 105 |

+

|

| 106 |

+

row1_space1, row1_1, row1_space2, row1_2, row1_space3 = st.columns((0.1, 3, 0.1, 3, 0.1))

|

| 107 |

+

with row1_1:

|

| 108 |

+

create_confusion_matrix_heatmap(cmB, "Model A")

|

| 109 |

+

|

| 110 |

+

with row1_2:

|

| 111 |

+

model = "B"

|

| 112 |

+

create_confusion_matrix_heatmap(cmB, "Model B")

|

| 113 |

+

|

| 114 |

+

st.subheader("Model Accuracy Rates")

|

| 115 |

+

st.markdown('''We can also represent each model in terms of its accuracy rates, calculated using the numbers of candidates in each group shown in the confusion matrix:''')

|

| 116 |

+

st.markdown('''

|

| 117 |

+

- True Positive Rate (TPR), also called recall, sensitivity or hit rate, is the probability of a positive test result when the result is indeed positive.

|

| 118 |

+

- True Negative Rate (TNR), or specificity/selectivity, is the probability of a negative test result when the test is indeed negative.

|

| 119 |

+

- Positive Predictive Value (PPV) or Precision is the ratio of truly positive results to all positive results.

|

| 120 |

+

- Negative Predictive Value (NPR) is the ratio of truly negative results to all negative results.

|

| 121 |

+

- False Positive Rate (FPR) or fall-out is the probability of assigning a falsely positive result when the test is negative.

|

| 122 |

+

- False Negative Rate (FNR) or miss rate is the probability that a test with a positive result will falsely be assigned a negative result.

|

| 123 |

+

- False Discovery Rate (FDR) it the ratio of false positive results to total number of positive results.

|

| 124 |

+

''')

|

| 125 |

+

measures_A = plot_conf_rates(cmA)

|

| 126 |

+

measures_B = plot_conf_rates(cmB)

|

| 127 |

+

fig = go.Figure()

|

| 128 |

+

fig.add_trace(go.Bar(

|

| 129 |

+

x=measures_A["Measure"],

|

| 130 |

+

y=measures_A["Score"],

|

| 131 |

+

name='Model A rates',

|

| 132 |

+

marker_color='rgb(55, 83, 109)'

|

| 133 |

+

))

|

| 134 |

+

fig.add_trace(go.Bar(

|

| 135 |

+

x=measures_B["Measure"],

|

| 136 |

+

y=measures_B["Score"],

|

| 137 |

+

name='Model B rates',

|

| 138 |

+

marker_color='rgb(26, 118, 255)'

|

| 139 |

+

))

|

| 140 |

+

fig.update_layout(

|

| 141 |

+

title='Model measures comparison',

|

| 142 |

+

xaxis_tickfont_size=14,

|

| 143 |

+

xaxis=dict(

|

| 144 |

+

title='Measure'),

|

| 145 |

+

yaxis=dict(

|

| 146 |

+

title='Score',

|

| 147 |

+

titlefont_size=16,

|

| 148 |

+

tickfont_size=14,

|

| 149 |

+

),

|

| 150 |

+

legend=dict(

|

| 151 |

+

bgcolor='rgba(255, 255, 255, 0)',

|

| 152 |

+

bordercolor='rgba(255, 255, 255, 0)'

|

| 153 |

+

),

|

| 154 |

+

barmode='group',

|

| 155 |

+

bargap=0.15, # gap between bars of adjacent location coordinates.

|

| 156 |

+

bargroupgap=0.1 # gap between bars of the same location coordinate.

|

| 157 |

+

)

|

| 158 |

+

st.plotly_chart(fig, use_container_width = True)

|

| 159 |

+

if st.checkbox("Show tables"):

|

| 160 |

+

row1_space1, row1_1, row1_space2, row1_2, row1_space3 = st.columns((0.1, 3, 0.1, 3, 0.1))

|

| 161 |

+

# CSS to inject contained in a string

|

| 162 |

+

hide_table_row_index = """

|

| 163 |

+

<style>

|

| 164 |

+

thead tr th:first-child {display:none}

|

| 165 |

+

tbody th {display:none}

|

| 166 |

+

</style> """

|

| 167 |

+

|

| 168 |

+

# Inject CSS with Markdown

|

| 169 |

+

st.markdown(hide_table_row_index, unsafe_allow_html=True)

|

| 170 |

+

with row1_1:

|

| 171 |

+

st.markdown("Accuracy rates for model A")

|

| 172 |

+

st.table(measures_A)

|

| 173 |

+

with row1_2:

|

| 174 |

+

st.markdown("Accuracy rates for model B")

|

| 175 |

+

st.table(measures_B)

|

| 176 |

+

|

| 177 |

+

def model_scores(dataframe):

|

| 178 |

+

st.markdown('''This section displays the distribution of scores assigned to each hypothetical employee, according to the values you set on the sliders, compared with the distribution of protected characteristics. These scores are then used to assign labels to the hypothetical employees, creating two distinct datasets to train the models. In essence, these scores explicitly and numerically mimic the viewpoints of hypothetical hiring managers A and B when deciding who to label as "top employees."''')

|

| 179 |

+

st.markdown('''Just as in the original NCPT dataset analysis, the scores obtained by participants in the cognitive games decline with age. This observation provides insight into the potential issue of ageism inherent in the use of gamified hiring processes.''')

|

| 180 |

+

# Create a selectbox to choose a protected characteristic to explore

|

| 181 |

+

plot_radio = st.selectbox('Characteristic to explore', characteristic_dict.keys())

|

| 182 |

+

row2_space1, row2_1, row2_space2 = st.columns((0.1, 5, 0.1))

|

| 183 |

+

|

| 184 |

+

with row2_1:

|

| 185 |

+

data = dataframe[["model_A_scores", "model_B_scores", plot_radio]]

|

| 186 |

+

|

| 187 |

+

if plot_radio == "age":

|

| 188 |

+

bins= [18,20,30,40,50,60,70,80,90]

|

| 189 |

+

labels = ['18-20','21-30','31-40','41-50','51-60','61-70','71-80','81-90']

|

| 190 |

+

data['age_bins'] = pd.cut(data['age'], bins=bins, labels=labels, right=False)

|

| 191 |

+

plot_radio = 'age_bins'

|

| 192 |

+

colours = ['rgba(93, 164, 214, 0.5)', 'rgba(255, 144, 14, 0.5)']

|

| 193 |

+

c1, c2, c3, c4, c5 = st.columns((0.1, 3, 0.1, 3, 0.1))

|

| 194 |

+

with c2:

|

| 195 |

+

fig = px.box(data, x = plot_radio, y="model_A_scores", labels={"model_A_scores":"Dataset A Input Scores", 'age_bins':"Age"}, title="Input scores in dataset A")

|

| 196 |

+

fig.update_layout(showlegend=False)

|

| 197 |

+

fig.update_traces(marker_color='rgba(93, 164, 214, 0.5)')

|

| 198 |

+

st.plotly_chart(fig, use_container_width=True)

|

| 199 |

+

with c4:

|

| 200 |

+

fig = px.box(data, x = plot_radio, y="model_B_scores", labels={"model_B_scores":"Dataset B Input Scores", 'age_bins':"Age"}, title="Input scores in dataset B")

|

| 201 |

+

fig.update_traces(marker_color = 'rgba(255, 144, 14, 0.5)')

|

| 202 |

+

fig.update_layout(showlegend=False)

|

| 203 |

+

st.plotly_chart(fig, use_container_width=True)

|

| 204 |

+

|

| 205 |

+

def PCA_general(full_df, dataframe_PCA):

|

| 206 |

+

st.markdown('''On this page, you can see the distribution of the dataset labels which were assigned based on the scores calculated from the slider values you selected previously. Principal Components Analysis, or PCA, is a technique often used to analyse and subsequently visualize datasets where there are many features per single example. This is the case with the NCPT dataset used in our simulator. Specifically, the battery which we used has 11 features per single example, the example being the player of cognitive games, and, in our metaphor, a hypothetical employee or job candidate. It is impossible to plot 11 dimensions, and PCA allows for the visualisation of multidimensional data, while also preserving as much information as possible.''')

|

| 207 |

+

choice = st.radio("What would you like to explore?", ("PCAs", "Components loading"), horizontal = True)

|

| 208 |

+

pcaA, dfA, labelsA, coeffA, componentsA = run_PCA(dataframe_PCA, 'Model_B_label', 'Model_A_label', 2)

|

| 209 |

+

pcaB, dfB, labelsB, coeffB, componentsB = run_PCA(dataframe_PCA, 'Model_A_label', 'Model_B_label', 2)

|

| 210 |

+

loadings = pcaB.components_.T * np.sqrt(pcaB.explained_variance_)

|

| 211 |

+

total_var = pcaA.explained_variance_ratio_.sum() * 100

|

| 212 |

+

dfA = dfA.rename(columns={'target': 'Dataset A'}).reset_index()

|

| 213 |

+

dfB = dfB.rename(columns={'target': 'Dataset B'}).reset_index()

|

| 214 |

+

df_all = pd.merge(dfA, dfB[['index', 'Dataset B']], on='index', how='left')

|

| 215 |

+

|

| 216 |

+

conditions = [

|

| 217 |

+

(df_all['Dataset A'] == 1) & (df_all['Dataset B'] == 0),

|

| 218 |

+

(df_all['Dataset B'] == 1) & (df_all['Dataset A'] == 0),

|

| 219 |

+

(df_all['Dataset A'] == 1) & (df_all['Dataset B'] == 1),

|

| 220 |

+

(df_all['Dataset A'] == 0) & (df_all['Dataset B'] == 0)]

|

| 221 |

+

|

| 222 |

+

values = ['Selected A', 'Selected B', 'Selected both', 'Not selected']

|

| 223 |

+

df_all['All'] = np.select(conditions, values)

|

| 224 |

+

|

| 225 |

+

df_all = df_all.drop(["index"], axis = 1)

|

| 226 |

+

df_all.All=pd.Categorical(df_all.All,categories=['Not selected', 'Selected A', 'Selected B', 'Selected both'])

|

| 227 |

+

df_all=df_all.sort_values('All')

|

| 228 |

+

|

| 229 |

+

selections_dict = {0: 'Not selected', 1: 'Selected'}

|

| 230 |

+

df_all = df_all.replace({"Dataset A": selections_dict, "Dataset B": selections_dict})

|

| 231 |

+

|

| 232 |

+

color_dict_sel = {'Not selected': '#3366CC', 'Selected': 'grey'}

|

| 233 |

+

|

| 234 |

+

if "pca_df" not in st.session_state:

|

| 235 |

+

st.session_state.pca_df = df_all

|

| 236 |

+

|

| 237 |

+

if choice == "PCAs":

|

| 238 |

+

c1, c2 = st.columns(2)

|

| 239 |

+

with c1:

|

| 240 |

+

fig = px.scatter(st.session_state.pca_df,

|

| 241 |

+

x=st.session_state.pca_df['principal component 1'].astype(str),

|

| 242 |

+

y=st.session_state.pca_df['principal component 2'].astype(str),

|

| 243 |

+

title='Dataset A PCA',

|

| 244 |

+

labels={"x": 'PC 1', "y": 'PC 2'},

|

| 245 |

+

color=st.session_state.pca_df['Dataset A'],

|

| 246 |

+

color_discrete_map=color_dict_sel)

|

| 247 |

+

fig.update_traces(marker_size = 8)

|

| 248 |

+

st.plotly_chart(fig, use_container_width=True)

|

| 249 |

+

with c2:

|

| 250 |

+

fig = px.scatter(st.session_state.pca_df,

|

| 251 |

+

x=st.session_state.pca_df['principal component 1'].astype(str),

|

| 252 |

+

y=st.session_state.pca_df['principal component 2'].astype(str),

|

| 253 |

+

title='Dataset B PCA',

|

| 254 |

+

labels={"x": 'PC 1', "y": 'PC 2'},

|

| 255 |

+

color=st.session_state.pca_df['Dataset B'],

|

| 256 |

+

color_discrete_map=color_dict_sel)

|

| 257 |

+

fig.update_traces(marker_size = 8)

|

| 258 |

+

st.plotly_chart(fig, use_container_width=True)

|

| 259 |

+

|

| 260 |

+

st.markdown(f'''These plots show the reduction of 11 dimensions (11 subtest results) to 2 dimensions. Total Variance for the data is {total_var:.2f}%. Both of the datasets have the same features, therefore they both have the same total variance. Total variance value indicates what percentage of information has been preserved when the dimensionality was reduced. Note that for both datasets, A and B, different points are labelled "1" or "0". This shows that the two datasets represent the two different target variable definitions which were created by you previously. The plots are interactive - zoom in to explore in detail.''')

|

| 261 |

+

|

| 262 |

+

pcaA, dfA, labelsA, coeffA, componentsA = run_PCA(dataframe_PCA, 'Model_B_label', 'Model_A_label', 2)

|

| 263 |

+

pcaB, dfB, labelsB, coeffB, componentsB = run_PCA(dataframe_PCA, 'Model_A_label', 'Model_B_label', 2)

|

| 264 |

+

loadings = pcaB.components_.T * np.sqrt(pcaB.explained_variance_)

|

| 265 |

+

total_var = pcaA.explained_variance_ratio_.sum() * 100

|

| 266 |

+

dfA = dfA.rename(columns={'target': 'Dataset A'}).reset_index()

|

| 267 |

+

dfB = dfB.rename(columns={'target': 'Dataset B'}).reset_index()

|

| 268 |

+

df_all = pd.merge(dfA, dfB[['index', 'Dataset B']], on='index', how='left')

|

| 269 |

+

|

| 270 |

+

conditions = [

|

| 271 |

+

(df_all['Dataset A'] == 1) & (df_all['Dataset B'] == 0),

|

| 272 |

+

(df_all['Dataset B'] == 1) & (df_all['Dataset A'] == 0),

|

| 273 |

+

(df_all['Dataset A'] == 1) & (df_all['Dataset B'] == 1),

|

| 274 |

+

(df_all['Dataset A'] == 0) & (df_all['Dataset B'] == 0)]

|

| 275 |

+

|

| 276 |

+

values = ['Selected A', 'Selected B', 'Selected both', 'Not selected']

|

| 277 |

+

df_all['All'] = np.select(conditions, values)

|

| 278 |

+

|

| 279 |

+

df_all = df_all.drop(["index"], axis = 1)

|

| 280 |

+

df_all.All=pd.Categorical(df_all.All,categories=['Not selected', 'Selected A', 'Selected B', 'Selected both'])

|

| 281 |

+

df_all=df_all.sort_values('All')

|

| 282 |

+

|

| 283 |

+

selections_dict = {0: 'Not selected', 1: 'Selected'}

|

| 284 |

+

df_all = df_all.replace({"Dataset A": selections_dict, "Dataset B": selections_dict})

|

| 285 |

+

|

| 286 |

+

if "pca_df" not in st.session_state:

|

| 287 |

+

st.session_state.pca_df = df_all

|

| 288 |

+

|

| 289 |

+

fig = px.scatter(st.session_state.pca_df,

|

| 290 |

+

x=st.session_state.pca_df['principal component 1'],

|

| 291 |

+

y=st.session_state.pca_df['principal component 2'],

|

| 292 |

+

title="PCA with labelled groups",

|

| 293 |

+

color=st.session_state.pca_df["All"],

|

| 294 |

+

width = 800, height = 800,

|

| 295 |

+

color_discrete_sequence=px.colors.qualitative.Safe,

|

| 296 |

+

opacity = 0.95)

|

| 297 |

+

|

| 298 |

+

fig.update_yaxes(

|

| 299 |

+

scaleanchor="x",

|

| 300 |

+

scaleratio=1,

|

| 301 |

+

)

|

| 302 |

+

fig.update_traces(marker_size = 10)

|

| 303 |

+

st.plotly_chart(fig)

|

| 304 |

+

|

| 305 |

+

if choice == "Components loading":

|

| 306 |

+

c1,c2 = st.columns(2)

|

| 307 |

+

loadings_df = pd.DataFrame(loadings, columns = ["PC1", "PC2"])

|

| 308 |

+

labels_A_proper = { v:k for k,v in df_keys_dict.items()}

|

| 309 |

+

loadings_df["Features"] = labels_A_proper.values()

|

| 310 |

+

with c1:

|

| 311 |

+

fig = px.bar(loadings_df, x="PC1", y="Features", orientation = 'h')

|

| 312 |

+

st.plotly_chart(fig, use_container_width = True)

|

| 313 |

+

with c2:

|

| 314 |

+

fig = px.bar(loadings_df, x="PC2", y="Features", orientation = 'h')

|

| 315 |

+

st.plotly_chart(fig, use_container_width = True)

|

| 316 |

+

|

| 317 |

+

# fig = go.Figure()

|

| 318 |

+

# fig.add_trace(go.Bar(

|

| 319 |

+

# x=loadings_df["PC1"],

|

| 320 |

+

# y=loadings_df["Features"],

|

| 321 |

+

# name='Principal Component 1',

|

| 322 |

+

# marker_color='rgb(55, 83, 109)',

|

| 323 |

+

# orientation='h'

|

| 324 |

+

# ))

|

| 325 |

+

# fig.add_trace(go.Bar(

|

| 326 |

+

# x=loadings_df["PC2"],

|

| 327 |

+

# y=loadings_df["Features"],

|

| 328 |

+

# name='Principal Component 2',

|

| 329 |

+

# marker_color='rgb(26, 118, 255)',

|

| 330 |

+

# orientation='h'

|

| 331 |

+

# ))

|

| 332 |

+

# fig.update_layout(

|

| 333 |

+

# title='Component loadings',

|

| 334 |

+

# xaxis_tickfont_size=14,

|

| 335 |

+

# xaxis=dict(

|

| 336 |

+

# title='Loading value'),

|

| 337 |

+

# yaxis=dict(

|

| 338 |

+

# title='Feature',

|

| 339 |

+

# titlefont_size=16,

|

| 340 |

+

# tickfont_size=14,

|

| 341 |

+

# ),

|

| 342 |

+

# legend=dict(

|

| 343 |

+

# bgcolor='rgba(255, 255, 255, 0)',

|

| 344 |

+

# bordercolor='rgba(255, 255, 255, 0)'

|

| 345 |

+

# ),

|

| 346 |

+

# barmode='group',

|

| 347 |

+

# bargap=0.15, # gap between bars of adjacent location coordinates.

|

| 348 |

+

# bargroupgap=0.1 # gap between bars of the same location coordinate.

|

| 349 |

+

# )

|

| 350 |

+

# st.plotly_chart(fig, use_container_width = True)

|

| 351 |

+

|

| 352 |

+

st.markdown('''On this plot, PCA component loadings can be explored. These facilitate the understanding of how much each variable (which there are 11 of) contributes to a particular principal component. Here, the 11 variables were reduced to 2 components, which are labelled PC1 and PC2. The magnitude of the loading (here displayed as the size of the bar in the bar chart) indicates how strong the relationship between the variable and the component is. Therefore, the higher the bar, the stronger the relationship between that component and that variable. The loading's sign can be positive or negative. This indicates whether the principal component and that variable are positively or negatively correlated. We can see that multiple variables are positively correlated with PC2. Two variables, episodic verbal learning and delayed recall are negatively correlated with both of the components.''')

|

| 353 |

+

|

| 354 |

+

|

| 355 |

+

def model_out(full_df):

|

| 356 |

+

st.markdown('''This section highlights the discrepancies between your two models when presented with the same pool of new, previously unseen candidates to label. Specifically, you'll be investigating the candidates assigned a "1" label by both models. These individuals would be those considered for a job interview or chosen for the role, according to your defined target variable.''')

|

| 357 |

+

# Create a selectbox to choose a protected characteristic to explore

|

| 358 |

+

selectbox = st.selectbox('Characteristic to explore', characteristic_dict.keys())

|

| 359 |

+

representation = st.selectbox("Representation", ("absolute", "proportional"))

|

| 360 |

+

row1_space1, row1_1, row1_space2, row1_2, row1_space3 = st.columns((0.1, 3, 0.1, 3, 0.1))

|

| 361 |

+

with row1_1:

|

| 362 |

+

st.subheader("Candidates selected by model A")

|

| 363 |

+

|

| 364 |

+

if representation == "absolute":

|

| 365 |

+

# Select predicted data ==1

|

| 366 |

+

data = full_df.loc[full_df['Predicted_A'] == 1]

|

| 367 |

+

|

| 368 |

+

# Use function plot_data to plot selected data

|

| 369 |

+

plot_data(data, selectbox, characteristic_dict[selectbox])

|

| 370 |

+

else:

|

| 371 |

+

display_proportional(full_df, selectbox, 'Predicted_A')

|

| 372 |

+

|

| 373 |

+

with row1_2:

|

| 374 |

+

st.subheader("Candidates selected by model B")

|

| 375 |

+

|

| 376 |

+

if representation == "absolute":

|

| 377 |

+

# Select predicted data ==1

|

| 378 |

+

data = full_df.loc[full_df['Predicted_B'] == 1]

|

| 379 |

+

|