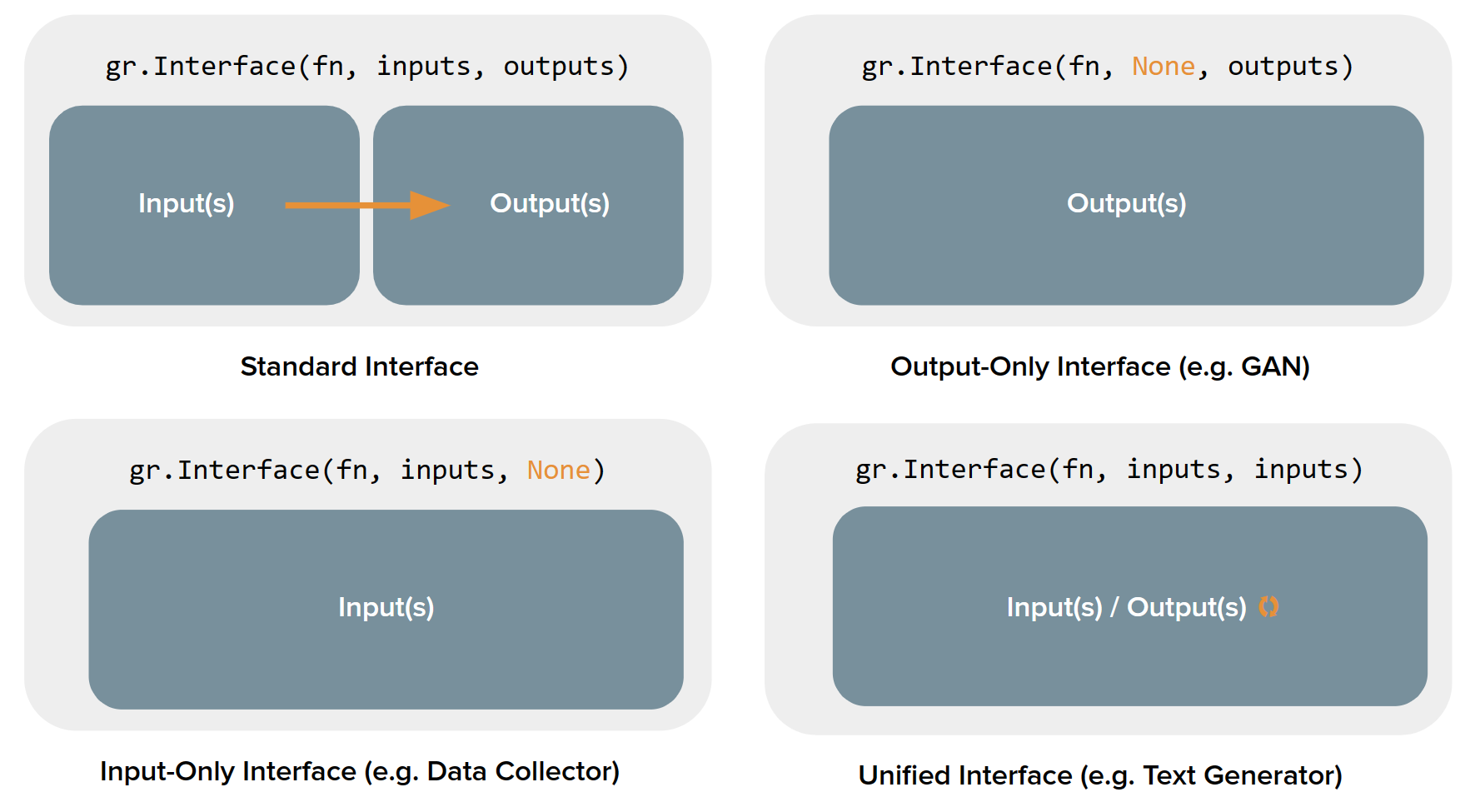

| {"guide": {"name": "four-kinds-of-interfaces", "category": "building-interfaces", "pretty_category": "Building Interfaces", "guide_index": 5, "absolute_index": 6, "pretty_name": "Four Kinds Of Interfaces", "content": "# The 4 Kinds of Gradio Interfaces\n\nSo far, we've always assumed that in order to build a Gradio demo, you need both inputs and outputs. But this isn't always the case for machine learning demos: for example, _unconditional image generation models_ don't take any input but produce an image as the output.\n\nIt turns out that the `gradio.Interface` class can actually handle 4 different kinds of demos:\n\n1. **Standard demos**: which have separate inputs and outputs (e.g. an image classifier or speech-to-text model)\n2. **Output-only demos**: which don't take any input but produce an output (e.g. an unconditional image generation model)\n3. **Input-only demos**: which don't produce any output but do take in some sort of input (e.g. a demo that saves images that you upload to a persistent external database)\n4. **Unified demos**: which have both input and output components, but the input and output components _are the same_. This means that the output produced overwrites the input (e.g. a text autocomplete model)\n\nDepending on the kind of demo, the user interface (UI) looks slightly different:\n\n\n\nLet's see how to build each kind of demo using the `Interface` class, along with examples:\n\n## Standard demos\n\nTo create a demo that has both input and output components, you simply need to set the values of the `inputs` and `outputs` parameters in `Interface()`. 
Here's an example demo of a simple image filter:\n\n```python\nimport numpy as np\nimport gradio as gr\n\ndef sepia(input_img):\n    # Color matrix that mixes each pixel's RGB values into sepia tones\n    sepia_filter = np.array([\n        [0.393, 0.769, 0.189],\n        [0.349, 0.686, 0.168],\n        [0.272, 0.534, 0.131]\n    ])\n    sepia_img = input_img.dot(sepia_filter.T)\n    # Normalize so the brightest value is 1.0\n    sepia_img /= sepia_img.max()\n    return sepia_img\n\ndemo = gr.Interface(sepia, gr.Image(), \"image\")\ndemo.launch()\n\n```\n<gradio-app space='gradio/sepia_filter'></gradio-app>\n\n## Output-only demos\n\nWhat about demos that only contain outputs? To build such a demo, you simply set the value of the `inputs` parameter in `Interface()` to `None`. Here's an example demo of a mock image generation model:\n\n```python\nimport time\n\nimport gradio as gr\n\ndef fake_gan():\n    # Simulate the time a real model would take to generate images\n    time.sleep(1)\n    images = [\n        \"https://images.unsplash.com/photo-1507003211169-0a1dd7228f2d?ixlib=rb-1.2.1&ixid=MnwxMjA3fDB8MHxwaG90by1wYWdlfHx8fGVufDB8fHx8&auto=format&fit=crop&w=387&q=80\",\n        \"https://images.unsplash.com/photo-1554151228-14d9def656e4?ixlib=rb-1.2.1&ixid=MnwxMjA3fDB8MHxwaG90by1wYWdlfHx8fGVufDB8fHx8&auto=format&fit=crop&w=386&q=80\",\n        \"https://images.unsplash.com/photo-1542909168-82c3e7fdca5c?ixlib=rb-1.2.1&ixid=MnwxMjA3fDB8MHxzZWFyY2h8MXx8aHVtYW4lMjBmYWNlfGVufDB8fDB8fA%3D%3D&w=1000&q=80\",\n    ]\n    return images\n\ndemo = gr.Interface(\n    fn=fake_gan,\n    inputs=None,\n    outputs=gr.Gallery(label=\"Generated Images\", columns=[2]),\n    title=\"FD-GAN\",\n    description=\"This is a fake demo of a GAN. In reality, the images are randomly chosen from Unsplash.\",\n)\n\ndemo.launch()\n\n```\n<gradio-app space='gradio/fake_gan_no_input'></gradio-app>\n\n## Input-only demos\n\nSimilarly, to create a demo that only contains inputs, set the value of the `outputs` parameter in `Interface()` to `None`. 
Here's an example demo that saves any uploaded image to disk:\n\n```python\nimport random\nimport string\nimport gradio as gr\n\ndef save_image_random_name(image):\n    # Build a random 20-letter filename with a .png extension\n    random_string = ''.join(random.choices(string.ascii_letters, k=20)) + '.png'\n    image.save(random_string)\n    print(f\"Saved image to {random_string}!\")\n\ndemo = gr.Interface(\n    fn=save_image_random_name,\n    inputs=gr.Image(type=\"pil\"),\n    outputs=None,\n)\ndemo.launch()\n\n```\n<gradio-app space='gradio/save_file_no_output'></gradio-app>\n\n## Unified demos\n\nA unified demo uses a single component as both the input and the output. To create one, pass the same component as both the `inputs` and `outputs` parameters. Here's an example demo of a text generation model:\n\n```python\nimport gradio as gr\nfrom transformers import pipeline\n\ngenerator = pipeline('text-generation', model='gpt2')\n\ndef generate_text(text_prompt):\n    response = generator(text_prompt, max_length=30, num_return_sequences=5)\n    # Only the first of the returned sequences is shown\n    return response[0]['generated_text']\n\ntextbox = gr.Textbox()\n\ndemo = gr.Interface(generate_text, textbox, textbox)\n\ndemo.launch()\n\n```\n<gradio-app space='gradio/unified_demo_text_generation'></gradio-app>\n\nIt may be that none of these 4 kinds of demos fits your exact needs. In that case, you need to use the `gr.Blocks()` approach!", "tags": [], "spaces": [], "url": "/guides/four-kinds-of-interfaces/", "contributor": null}} |