Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .gitattributes +1 -0

- .github/PULL_REQUEST_TEMPLATE.md +17 -0

- .github/workflows/python-package.yml +30 -0

- .gitignore +31 -0

- .pylintrc +449 -0

- 2024-02-26-conv.json +23 -0

- 2024-02-27-conv.json +0 -0

- 2024-02-28-conv.json +0 -0

- 2024-02-29-conv.json +33 -0

- 2024-03-01-conv.json +8 -0

- LICENSE +201 -0

- README.md +353 -7

- assets/demo_narrow.gif +3 -0

- assets/qa_browser.png +0 -0

- assets/screenshot_cli.png +0 -0

- assets/screenshot_gui.png +0 -0

- assets/server_arch.png +0 -0

- assets/vicuna_logo.jpeg +0 -0

- controller.log +0 -0

- controller.log.2024-02-26 +0 -0

- controller.log.2024-02-27 +0 -0

- controller.log.2024-02-28 +0 -0

- data/dummy_conversation.json +0 -0

- docker/Dockerfile +7 -0

- docker/docker-compose.yml +36 -0

- docs/arena.md +15 -0

- docs/awq.md +71 -0

- docs/commands/conv_release.md +38 -0

- docs/commands/data_cleaning.md +19 -0

- docs/commands/leaderboard.md +37 -0

- docs/commands/local_cluster.md +38 -0

- docs/commands/pypi.md +11 -0

- docs/commands/webserver.md +94 -0

- docs/dataset_release.md +6 -0

- docs/exllama_v2.md +63 -0

- docs/gptq.md +59 -0

- docs/langchain_integration.md +90 -0

- docs/lightllm_integration.md +18 -0

- docs/mlx_integration.md +23 -0

- docs/model_support.md +130 -0

- docs/openai_api.md +152 -0

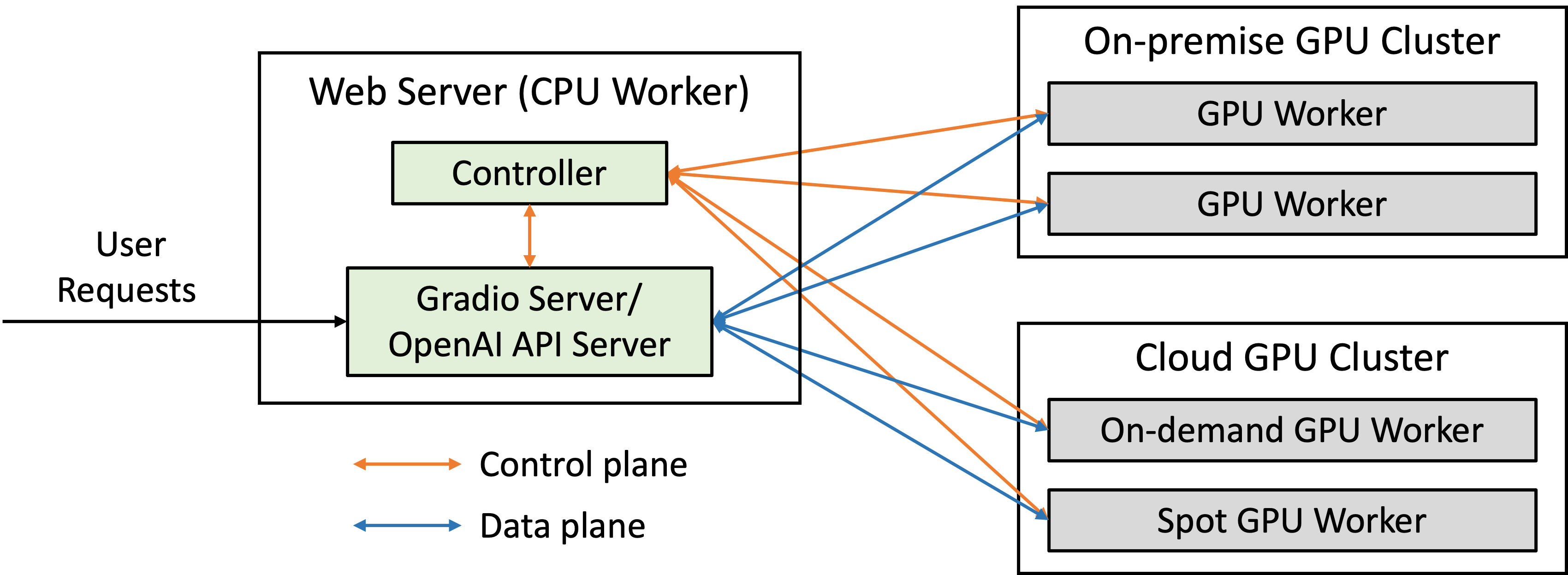

- docs/server_arch.md +2 -0

- docs/third_party_ui.md +24 -0

- docs/training.md +118 -0

- docs/vicuna_weights_version.md +97 -0

- docs/vllm_integration.md +25 -0

- docs/xFasterTransformer.md +90 -0

- fastchat/__init__.py +1 -0

- fastchat/__pycache__/__init__.cpython-310.pyc +0 -0

- fastchat/__pycache__/__init__.cpython-311.pyc +0 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/demo_narrow.gif filter=lfs diff=lfs merge=lfs -text

.github/PULL_REQUEST_TEMPLATE.md

ADDED

@@ -0,0 +1,17 @@

<!-- Thank you for your contribution! -->

<!-- Please add a reviewer to the assignee section when you create a PR. If you don't have the access to it, we will shortly find a reviewer and assign them to your PR. -->

## Why are these changes needed?

<!-- Please give a short summary of the change and the problem this solves. -->

## Related issue number (if applicable)

<!-- For example: "Closes #1234" -->

## Checks

- [ ] I've run `format.sh` to lint the changes in this PR.
- [ ] I've included any doc changes needed.
- [ ] I've made sure the relevant tests are passing (if applicable).

.github/workflows/python-package.yml

ADDED

@@ -0,0 +1,30 @@

name: Python package

on: [push, pull_request]

jobs:
  build:

    runs-on: ubuntu-latest
    strategy:
      fail-fast: false
      matrix:
        python-version: ["3.10"]

    steps:
    - uses: actions/checkout@v3
    - name: Set up Python ${{ matrix.python-version }}
      uses: actions/setup-python@v4
      with:
        python-version: ${{ matrix.python-version }}
        cache: 'pip'
    - name: Install dependencies
      run: |
        python -m pip install --upgrade pip
        python -m pip install -e '.[dev]'
    - name: Run linter
      run: |
        pylint -d all -e E0602 ./fastchat/
    - name: Check formatting
      run: |
        black --check .

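The last two workflow steps can be reproduced locally before pushing. This is a minimal sketch, not part of the repository: it assumes `pylint` and `black` are on `PATH` (the workflow installs them through the package's `dev` extra, whose contents are not shown in this diff), and the helper names `ci_commands` and `run_checks` are hypothetical. `-d all -e E0602` disables every pylint message and then re-enables only E0602 (`undefined-variable`), so the lint step fails only on names that are used without being defined.

```python
import shutil
import subprocess
import sys
from typing import List


def ci_commands(package_dir: str = "./fastchat/") -> List[List[str]]:
    """Return the workflow's lint and format-check commands as argv lists."""
    return [
        # Disable all messages, then re-enable only E0602 (undefined-variable).
        ["pylint", "-d", "all", "-e", "E0602", package_dir],
        # Report files that black would reformat, without modifying them.
        ["black", "--check", "."],
    ]


def run_checks(commands: List[List[str]]) -> int:
    """Run commands in order; return the first non-zero exit code, or 0 if all pass."""
    for argv in commands:
        if shutil.which(argv[0]) is None:
            print(f"{argv[0]}: not found on PATH", file=sys.stderr)
            return 127  # assumed convention: shell-style "command not found"
        result = subprocess.run(argv, check=False)
        if result.returncode != 0:
            return result.returncode
    return 0
```

Calling `run_checks(ci_commands())` from the repository root runs both checks in the workflow's order and stops at the first failure.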

.gitignore

ADDED

@@ -0,0 +1,31 @@

# Python
__pycache__
*.pyc
*.egg-info
dist
.venv

# Log
*.log
*.log.*
*.json
!playground/deepspeed_config_s2.json
!playground/deepspeed_config_s3.json

# Editor
.idea
*.swp

# Other
.DS_Store
wandb
output
checkpoints_flant5_3b

# Data
*.pkl
*.csv
tests/state_of_the_union.txt

# Build
build

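The `*.json` rule followed by two `!` rules relies on gitignore's "last matching pattern wins" precedence. The Python sketch below illustrates that precedence with a deliberately simplified matcher (hypothetical helpers, not git's implementation): it uses `fnmatch` globs, treats a pattern containing a slash as anchored at the repository root and any other pattern as matching a single path component, and ignores `**`, trailing-slash directory patterns, escapes, and git's rule that a file cannot be re-included when a parent directory is excluded. `git check-ignore -v <path>` remains the authoritative check.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath
from typing import Sequence

# Non-comment, non-blank lines of the .gitignore above, in file order.
PATTERNS = [
    "__pycache__", "*.pyc", "*.egg-info", "dist", ".venv",
    "*.log", "*.log.*", "*.json",
    "!playground/deepspeed_config_s2.json",
    "!playground/deepspeed_config_s3.json",
    ".idea", "*.swp",
    ".DS_Store", "wandb", "output", "checkpoints_flant5_3b",
    "*.pkl", "*.csv", "tests/state_of_the_union.txt",
    "build",
]


def _matches(pattern: str, path: str) -> bool:
    """Simplified match: no '**', no trailing '/', no escaping."""
    if "/" in pattern:
        # A slash anchors the pattern at the root (fnmatch's '*' also crosses '/').
        return fnmatchcase(path, pattern.lstrip("/"))
    # Otherwise the pattern may match any single file or directory name.
    return any(fnmatchcase(part, pattern) for part in PurePosixPath(path).parts)


def is_ignored(path: str, patterns: Sequence[str] = PATTERNS) -> bool:
    """Return True if `path` ends up ignored; later patterns override earlier ones."""
    ignored = False
    for raw in patterns:
        negate = raw.startswith("!")
        pattern = raw[1:] if negate else raw
        if _matches(pattern, path):
            ignored = not negate
    return ignored
```

For example, `is_ignored("2024-02-26-conv.json")` is `True` because of `*.json`, while `playground/deepspeed_config_s2.json` is re-included by its later `!` rule.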

.pylintrc

ADDED

@@ -0,0 +1,449 @@

# This Pylint rcfile contains a best-effort configuration to uphold the
# best-practices and style described in the Google Python style guide:
# https://google.github.io/styleguide/pyguide.html
#
# Its canonical open-source location is:
# https://google.github.io/styleguide/pylintrc

[MASTER]

# Files or directories to be skipped. They should be base names, not paths.
ignore=third_party,ray_patches,providers

# Files or directories matching the regex patterns are skipped. The regex
# matches against base names, not paths.
ignore-patterns=

# Pickle collected data for later comparisons.
persistent=no

# List of plugins (as comma separated values of python modules names) to load,
# usually to register additional checkers.
load-plugins=

# Use multiple processes to speed up Pylint.
jobs=4

# Allow loading of arbitrary C extensions. Extensions are imported into the
# active Python interpreter and may run arbitrary code.
unsafe-load-any-extension=no


[MESSAGES CONTROL]

# Only show warnings with the listed confidence levels. Leave empty to show
# all. Valid levels: HIGH, INFERENCE, INFERENCE_FAILURE, UNDEFINED
confidence=

# Enable the message, report, category or checker with the given id(s). You can
# either give multiple identifier separated by comma (,) or put this option
# multiple time (only on the command line, not in the configuration file where
# it should appear only once). See also the "--disable" option for examples.
#enable=

# Disable the message, report, category or checker with the given id(s). You
# can either give multiple identifiers separated by comma (,) or put this
# option multiple times (only on the command line, not in the configuration
# file where it should appear only once).You can also use "--disable=all" to
# disable everything first and then reenable specific checks. For example, if
# you want to run only the similarities checker, you can use "--disable=all
# --enable=similarities". If you want to run only the classes checker, but have
# no Warning level messages displayed, use"--disable=all --enable=classes
# --disable=W"
disable=abstract-method,
        apply-builtin,
        arguments-differ,
        attribute-defined-outside-init,
        backtick,
        bad-option-value,
        basestring-builtin,
        buffer-builtin,
        c-extension-no-member,
        consider-using-enumerate,
        cmp-builtin,
        cmp-method,
        coerce-builtin,
        coerce-method,
        delslice-method,
        div-method,
        duplicate-code,
        eq-without-hash,
        execfile-builtin,
        file-builtin,
        filter-builtin-not-iterating,
        fixme,
        getslice-method,
        global-statement,
        hex-method,
        idiv-method,
        implicit-str-concat-in-sequence,
        import-error,
        import-self,
        import-star-module-level,
        inconsistent-return-statements,
        input-builtin,
        intern-builtin,
        invalid-str-codec,
        locally-disabled,
        logging-format-interpolation, # FIXME(sky): make pass.
        logging-fstring-interpolation, # FIXME(sky): make pass.
        long-builtin,
        long-suffix,
        map-builtin-not-iterating,
        misplaced-comparison-constant,
        missing-function-docstring,
        metaclass-assignment,
        next-method-called,
        next-method-defined,
        no-absolute-import,
        no-else-break,
        no-else-continue,
        no-else-raise,
        no-else-return,
        no-init, # added
        no-member,
        no-name-in-module,
        no-self-use,
        nonzero-method,
        oct-method,
        old-division,
        old-ne-operator,
        old-octal-literal,
        old-raise-syntax,
        parameter-unpacking,
        print-statement,
        raising-string,
        range-builtin-not-iterating,
        raw_input-builtin,
        rdiv-method,
        reduce-builtin,
        relative-import,
        reload-builtin,
        round-builtin,
        setslice-method,
        signature-differs,
        standarderror-builtin,
        suppressed-message,
        sys-max-int,
        too-few-public-methods,
        too-many-ancestors,
        too-many-arguments,
        too-many-boolean-expressions,
        too-many-branches,
        too-many-instance-attributes,
        too-many-locals,
        too-many-nested-blocks,
        too-many-public-methods,
        too-many-return-statements,
        too-many-statements,
        trailing-newlines,
        unichr-builtin,
        unicode-builtin,
        unnecessary-pass,
        unpacking-in-except,
        useless-else-on-loop,
        useless-object-inheritance,
        useless-suppression,
        using-cmp-argument,
        wrong-import-order,
        xrange-builtin,
        zip-builtin-not-iterating,


[REPORTS]

# Set the output format. Available formats are text, parseable, colorized, msvs
# (visual studio) and html. You can also give a reporter class, eg
# mypackage.mymodule.MyReporterClass.
output-format=text

# Put messages in a separate file for each module / package specified on the
# command line instead of printing them on stdout. Reports (if any) will be
# written in a file name "pylint_global.[txt|html]". This option is deprecated
# and it will be removed in Pylint 2.0.
files-output=no

# Tells whether to display a full report or only the messages
reports=no

# Python expression which should return a note less than 10 (10 is the highest
# note). You have access to the variables errors warning, statement which
# respectively contain the number of errors / warnings messages and the total
# number of statements analyzed. This is used by the global evaluation report
# (RP0004).
evaluation=10.0 - ((float(5 * error + warning + refactor + convention) / statement) * 10)

# Template used to display messages. This is a python new-style format string
# used to format the message information. See doc for all details
#msg-template=


[BASIC]

# Good variable names which should always be accepted, separated by a comma
good-names=main,_

# Bad variable names which should always be refused, separated by a comma
bad-names=

# Colon-delimited sets of names that determine each other's naming style when
# the name regexes allow several styles.
name-group=

# Include a hint for the correct naming format with invalid-name
include-naming-hint=no

# List of decorators that produce properties, such as abc.abstractproperty. Add
# to this list to register other decorators that produce valid properties.
property-classes=abc.abstractproperty,cached_property.cached_property,cached_property.threaded_cached_property,cached_property.cached_property_with_ttl,cached_property.threaded_cached_property_with_ttl

# Regular expression matching correct function names
function-rgx=^(?:(?P<exempt>setUp|tearDown|setUpModule|tearDownModule)|(?P<camel_case>_?[A-Z][a-zA-Z0-9]*)|(?P<snake_case>_?[a-z][a-z0-9_]*))$

# Regular expression matching correct variable names
variable-rgx=^[a-z][a-z0-9_]*$

# Regular expression matching correct constant names
const-rgx=^(_?[A-Z][A-Z0-9_]*|__[a-z0-9_]+__|_?[a-z][a-z0-9_]*)$

# Regular expression matching correct attribute names
attr-rgx=^_{0,2}[a-z][a-z0-9_]*$

# Regular expression matching correct argument names
argument-rgx=^[a-z][a-z0-9_]*$

# Regular expression matching correct class attribute names
class-attribute-rgx=^(_?[A-Z][A-Z0-9_]*|__[a-z0-9_]+__|_?[a-z][a-z0-9_]*)$

# Regular expression matching correct inline iteration names
inlinevar-rgx=^[a-z][a-z0-9_]*$

# Regular expression matching correct class names
class-rgx=^_?[A-Z][a-zA-Z0-9]*$

# Regular expression matching correct module names
module-rgx=^(_?[a-z][a-z0-9_]*|__init__)$

# Regular expression matching correct method names
method-rgx=(?x)^(?:(?P<exempt>_[a-z0-9_]+__|runTest|setUp|tearDown|setUpTestCase|tearDownTestCase|setupSelf|tearDownClass|setUpClass|(test|assert)_*[A-Z0-9][a-zA-Z0-9_]*|next)|(?P<camel_case>_{0,2}[A-Z][a-zA-Z0-9_]*)|(?P<snake_case>_{0,2}[a-z][a-z0-9_]*))$

# Regular expression which should only match function or class names that do
# not require a docstring.
no-docstring-rgx=(__.*__|main|test.*|.*test|.*Test)$

# Minimum line length for functions/classes that require docstrings, shorter
# ones are exempt.
docstring-min-length=10


[TYPECHECK]

# List of decorators that produce context managers, such as
# contextlib.contextmanager. Add to this list to register other decorators that
# produce valid context managers.
contextmanager-decorators=contextlib.contextmanager,contextlib2.contextmanager

# Tells whether missing members accessed in mixin class should be ignored. A
# mixin class is detected if its name ends with "mixin" (case insensitive).
ignore-mixin-members=yes

# List of module names for which member attributes should not be checked
# (useful for modules/projects where namespaces are manipulated during runtime
# and thus existing member attributes cannot be deduced by static analysis. It
# supports qualified module names, as well as Unix pattern matching.
ignored-modules=

# List of class names for which member attributes should not be checked (useful
# for classes with dynamically set attributes). This supports the use of
# qualified names.
ignored-classes=optparse.Values,thread._local,_thread._local

# List of members which are set dynamically and missed by pylint inference
# system, and so shouldn't trigger E1101 when accessed. Python regular
# expressions are accepted.
generated-members=


[FORMAT]

# Maximum number of characters on a single line.
max-line-length=100

# TODO(https://github.com/PyCQA/pylint/issues/3352): Direct pylint to exempt
# lines made too long by directives to pytype.

# Regexp for a line that is allowed to be longer than the limit.
ignore-long-lines=(?x)(
  ^\s*(\#\ )?<?https?://\S+>?$|
  ^\s*(from\s+\S+\s+)?import\s+.+$)

# Allow the body of an if to be on the same line as the test if there is no
# else.
single-line-if-stmt=yes

# List of optional constructs for which whitespace checking is disabled. `dict-
# separator` is used to allow tabulation in dicts, etc.: {1 : 1,\n222: 2}.
# `trailing-comma` allows a space between comma and closing bracket: (a, ).
# `empty-line` allows space-only lines.
no-space-check=

# Maximum number of lines in a module
max-module-lines=99999

# String used as indentation unit. The internal Google style guide mandates 2
# spaces. Google's externaly-published style guide says 4, consistent with
# PEP 8. Here we use 4 spaces.
indent-string='    '

# Number of spaces of indent required inside a hanging or continued line.
indent-after-paren=4

# Expected format of line ending, e.g. empty (any line ending), LF or CRLF.
expected-line-ending-format=


[MISCELLANEOUS]

# List of note tags to take in consideration, separated by a comma.
notes=TODO


[STRING]

# This flag controls whether inconsistent-quotes generates a warning when the
# character used as a quote delimiter is used inconsistently within a module.
check-quote-consistency=yes


[VARIABLES]

# Tells whether we should check for unused import in __init__ files.
init-import=no

# A regular expression matching the name of dummy variables (i.e. expectedly
# not used).
dummy-variables-rgx=^\*{0,2}(_$|unused_|dummy_)

# List of additional names supposed to be defined in builtins. Remember that
# you should avoid to define new builtins when possible.
additional-builtins=

# List of strings which can identify a callback function by name. A callback
# name must start or end with one of those strings.
callbacks=cb_,_cb

# List of qualified module names which can have objects that can redefine
# builtins.
redefining-builtins-modules=six,six.moves,past.builtins,future.builtins,functools


[LOGGING]

# Logging modules to check that the string format arguments are in logging
# function parameter format
logging-modules=logging,absl.logging,tensorflow.io.logging


[SIMILARITIES]

# Minimum lines number of a similarity.
min-similarity-lines=4

# Ignore comments when computing similarities.
ignore-comments=yes

# Ignore docstrings when computing similarities.
ignore-docstrings=yes

# Ignore imports when computing similarities.
ignore-imports=no


[SPELLING]

# Spelling dictionary name. Available dictionaries: none. To make it working
# install python-enchant package.
spelling-dict=

# List of comma separated words that should not be checked.
spelling-ignore-words=

# A path to a file that contains private dictionary; one word per line.
spelling-private-dict-file=

# Tells whether to store unknown words to indicated private dictionary in
# --spelling-private-dict-file option instead of raising a message.
spelling-store-unknown-words=no


[IMPORTS]

# Deprecated modules which should not be used, separated by a comma
deprecated-modules=regsub,
                   TERMIOS,
                   Bastion,
                   rexec,
                   sets

# Create a graph of every (i.e. internal and external) dependencies in the
# given file (report RP0402 must not be disabled)
import-graph=

# Create a graph of external dependencies in the given file (report RP0402 must
# not be disabled)
ext-import-graph=

# Create a graph of internal dependencies in the given file (report RP0402 must
# not be disabled)
int-import-graph=

# Force import order to recognize a module as part of the standard
# compatibility libraries.
known-standard-library=

# Force import order to recognize a module as part of a third party library.
known-third-party=enchant, absl

# Analyse import fallback blocks. This can be used to support both Python 2 and
# 3 compatible code, which means that the block might have code that exists
# only in one or another interpreter, leading to false positives when analysed.
analyse-fallback-blocks=no


[CLASSES]

# List of method names used to declare (i.e. assign) instance attributes.
defining-attr-methods=__init__,
                      __new__,
                      setUp

# List of member names, which should be excluded from the protected access
# warning.
exclude-protected=_asdict,
                  _fields,
                  _replace,
                  _source,
                  _make

# List of valid names for the first argument in a class method.
valid-classmethod-first-arg=cls,
                            class_

# List of valid names for the first argument in a metaclass class method.
valid-metaclass-classmethod-first-arg=mcs


[EXCEPTIONS]

# Exceptions that will emit a warning when being caught. Defaults to
# "Exception"
overgeneral-exceptions=StandardError,
                       Exception,
                       BaseException

#######

# https://github.com/edaniszewski/pylint-quotes#configuration
string-quote=single
triple-quote=double
docstring-quote=double

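Several options in this `.pylintrc` (`function-rgx`, `const-rgx` and `class-rgx` in `[BASIC]`, `ignore-long-lines` with `max-line-length` in `[FORMAT]`, and `dummy-variables-rgx` in `[VARIABLES]`) are ordinary Python regular expressions, so their effect on a given name or line can be previewed with the standard `re` module. This is a rough sketch, assuming each pattern is applied with `re.match` to the bare name or the raw source line; pylint's real checkers add further rules (for example `good-names` and scope-dependent name types), and the helper names `function_style` and `is_too_long` are hypothetical.

```python
import re
from typing import Optional

# Patterns copied verbatim from the .pylintrc above.
FUNCTION_RGX = re.compile(
    r"^(?:(?P<exempt>setUp|tearDown|setUpModule|tearDownModule)"
    r"|(?P<camel_case>_?[A-Z][a-zA-Z0-9]*)"
    r"|(?P<snake_case>_?[a-z][a-z0-9_]*))$"
)
CONST_RGX = re.compile(r"^(_?[A-Z][A-Z0-9_]*|__[a-z0-9_]+__|_?[a-z][a-z0-9_]*)$")
CLASS_RGX = re.compile(r"^_?[A-Z][a-zA-Z0-9]*$")
DUMMY_VARIABLES_RGX = re.compile(r"^\*{0,2}(_$|unused_|dummy_)")

# ignore-long-lines spans several INI lines; the (?x) verbose flag makes the
# newlines and indentation insignificant, while `\#` and `\ ` keep a literal "# ".
IGNORE_LONG_LINES = re.compile(
    r"""(?x)(
  ^\s*(\#\ )?<?https?://\S+>?$|
  ^\s*(from\s+\S+\s+)?import\s+.+$)"""
)
MAX_LINE_LENGTH = 100


def function_style(name: str) -> Optional[str]:
    """Return the named group of function-rgx that accepts `name`, or None."""
    match = FUNCTION_RGX.match(name)
    return match.lastgroup if match else None


def is_too_long(line: str) -> bool:
    """True if `line` exceeds max-line-length and ignore-long-lines does not exempt it."""
    return len(line) > MAX_LINE_LENGTH and IGNORE_LONG_LINES.match(line) is None
```

Under these patterns `load_model` is accepted as snake case and `loadModel` is rejected, while an over-long line is tolerated only if it is a (possibly commented) URL or an import statement.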

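The `evaluation` option in `[REPORTS]` is a plain Python expression over message counts. Transcribed into a function (the name `pylint_score` is hypothetical), it shows how the score is weighted: each error costs five times as much as a warning, refactor, or convention message, and the weighted count is normalized by the number of statements analyzed. Because this expression is not clamped, enough messages push the score below zero.

```python
def pylint_score(error: int, warning: int, refactor: int, convention: int,
                 statement: int) -> float:
    """Evaluate the `evaluation=` expression from the [REPORTS] section above."""
    if statement <= 0:
        raise ValueError("statement must be positive; the expression divides by it")
    # Errors are weighted 5x; the penalty is weighted messages per statement, times 10.
    return 10.0 - ((float(5 * error + warning + refactor + convention) / statement) * 10)
```

For example, 1 error, 4 warnings, 2 refactor and 4 convention messages over 200 statements give a weighted count of 15 and a score of 10 - (15 / 200) * 10 = 9.25, while 3 errors in a 10-statement module already yield -5.0.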

2024-02-26-conv.json

ADDED

@@ -0,0 +1,23 @@

{"tstamp": 1708979292.1046, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708979275.4427, "finish": 1708979292.1046, "state": {"template_name": "billa", "system_message": "", "roles": ["Human", "Assistant"], "messages": [["Human", "Hello "], ["Assistant", "Hello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello 
\n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello \n\nHello"]], "offset": 0, "conv_id": "0ea82788ded24b3e92860dc5b47febc0", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.50.130"}

{"tstamp": 1708979428.0297, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708979427.7589, "finish": 1708979428.0297, "state": {"template_name": "billa", "system_message": "", "roles": ["Human", "Assistant"], "messages": [["Human", "Hello how are you?"], ["Assistant", "Hello, how are you?"]], "offset": 0, "conv_id": "49f3fb8a82584c788578dda8e359e9d7", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.37.241"}

{"tstamp": 1708979651.6512, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708979651.3346, "finish": 1708979651.6512, "state": {"template_name": "billa", "system_message": "", "roles": ["Human", "Assistant"], "messages": [["Human", "Hello How are you?"], ["Assistant", "Hi, how are you doing?"]], "offset": 0, "conv_id": "1cb4f039ccde4e9abf3d237b47bc39d3", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.25.123"}

{"tstamp": 1708979958.428, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708979958.1858, "finish": 1708979958.428, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "Hello how are you?"], ["Assistant", "Hello, how are you?"]], "offset": 2, "conv_id": "4e4c5de905f64b64814562ce6cb7ca92", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.37.241"}

{"tstamp": 1708980099.492, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708980099.1622, "finish": 1708980099.492, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "Hello how are you?"], ["Assistant", "I am good thank you. How can I assist you today?"]], "offset": 2, "conv_id": "7a2f85c78ad244cfadbe214712847864", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.37.241"}

{"tstamp": 1708980395.3758, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708980394.8771, "finish": 1708980395.3758, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "Hello How are you?"], ["Assistant", "Hello! How can I help you?"]], "offset": 2, "conv_id": "dcd8306a9bb84462afa55336f94182da", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.37.241"}

{"tstamp": 1708980429.3817, "type": "chat", "model": "MobiLlama-1B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708980425.9794, "finish": 1708980429.3817, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "Generate a C code snippet that implements a function to calculate the Fibonacci sequence using recursion."], ["Assistant", "```c\n#include <stdio.h>\nint fib(int n);\nint fib(int n) {\n if (n <= 1) {\n return n;\n }\n else {\n return fib(n-1) + fib(n-2);\n }\n}\nint main() {\n int n;\n printf(\"Enter the value of n: \");\n scanf(\"%d\", &n);\n printf(\"The Fibonacci sequence is: \\n\");\n for (int i = 0; i < n; i++) {\n printf(\"%d \", fib(i));\n }\n return 0;\n}\n```\nThis C code implementation uses a recursive function `fib` to calculate the Fibonacci sequence. The function takes an integer `n` as input and returns the value of the `n`th Fibonacci"]], "offset": 2, "conv_id": "f250c5ef3c3d427582fe19e75839e236", "model_name": "MobiLlama-1B-Chat"}, "ip": "172.31.37.241"}

{"tstamp": 1708980445.7322, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708980442.5778, "finish": 1708980445.7322, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "Generate a C code snippet that implements a function to calculate the Fibonacci sequence using recursion."], ["Assistant", "```c\r\n#include <stdio.h>\r\n#include <stdlib.h>\r\n#include <math.h>\r\nint fibonacci(int n) {\r\n if (n <= 1) {\r\n return n;\r\n }\r\n else {\r\n return fibonacci(n - 1) + fibonacci(n - 2);\r\n }\r\n}\r\nint main() {\r\n int n;\r\n printf(\"Enter the first number: \");\r\n scanf(\"%d\", &n);\r\n printf(\"Enter the second number: \");\r\n scanf(\"%d\", &n);\r\n printf(\"The Fibonacci sequence is: %d %d %d\\n\", fibonacci(n), fibonacci(n-1), fibonacci(n-2));\r\n return 0;\r\n}\r\n```\r\nThis function takes two parameters"]], "offset": 2, "conv_id": "e1f77bf7e30940db9addb35c3dd38294", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.12.31"}

{"tstamp": 1708981892.4992, "type": "chat", "model": "MobiLlama-05B", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708981891.9426, "finish": 1708981892.4992, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "I was walking in the garden when "], ["Assistant", "Then I saw your scissors, I cut them."]], "offset": 2, "conv_id": "573e0b8ebc9a419e814a0f24e4151e60", "model_name": "MobiLlama-05B"}, "ip": "172.31.50.130"}

{"tstamp": 1708981911.5711, "type": "chat", "model": "MobiLlama-05B", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708981911.2039, "finish": 1708981911.5711, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "How to write a python code to calculate factorial of a number"], ["Assistant", "How to write a python code to calculate factorial of a number"]], "offset": 2, "conv_id": "573e0b8ebc9a419e814a0f24e4151e60", "model_name": "MobiLlama-05B"}, "ip": "172.31.50.130"}

{"tstamp": 1708981935.556, "type": "chat", "model": "MobiLlama-05B", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708981935.3194, "finish": 1708981935.556, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "On a bright sunny day I was "], ["Assistant", "I'm a"]], "offset": 2, "conv_id": "573e0b8ebc9a419e814a0f24e4151e60", "model_name": "MobiLlama-05B"}, "ip": "172.31.12.31"}

{"tstamp": 1708982111.1212, "type": "chat", "model": "MobiLlama-05B", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708982110.8392, "finish": 1708982111.1212, "state": {"template_name": "zero_shot", "system_message": "", "roles": ["Human", "Assistant"], "messages": [["Human", "On a bright sunny day I was "], ["Assistant", "On the first day of an important"]], "offset": 0, "conv_id": "672719a556364698b8c1b5a9c7063529", "model_name": "MobiLlama-05B"}, "ip": "172.31.37.241"}

{"tstamp": 1708982323.6584, "type": "chat", "model": "MobiLlama-05B", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708982323.5031, "finish": 1708982323.6584, "state": {"template_name": "zero_shot", "system_message": "", "roles": ["Human", "Assistant"], "messages": [["Human", "On a bright sunny day I was "], ["Assistant", ""]], "offset": 0, "conv_id": "da84b42618f34b7bbaf4364a08c25dda", "model_name": "MobiLlama-05B"}, "ip": "172.31.37.241"}

{"tstamp": 1708982336.4937, "type": "chat", "model": "MobiLlama-05B", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708982336.3268, "finish": 1708982336.4937, "state": {"template_name": "zero_shot", "system_message": "", "roles": ["Human", "Assistant"], "messages": [["Human", "On a bright sunny day I was "], ["Assistant", ""]], "offset": 0, "conv_id": "da84b42618f34b7bbaf4364a08c25dda", "model_name": "MobiLlama-05B"}, "ip": "172.31.37.241"}

{"tstamp": 1708982496.4846, "type": "chat", "model": "MobiLlama-05B", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708982496.3086, "finish": 1708982496.4846, "state": {"template_name": "zero_shot", "system_message": "", "roles": ["Human", "Assistant"], "messages": [["Human", "On a bright sunny day I was "], ["Assistant", ""]], "offset": 0, "conv_id": "da84b42618f34b7bbaf4364a08c25dda", "model_name": "MobiLlama-05B"}, "ip": "172.31.25.123"}

{"tstamp": 1708982505.7526, "type": "chat", "model": "MobiLlama-05B", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708982505.6079, "finish": 1708982505.7526, "state": {"template_name": "zero_shot", "system_message": "", "roles": ["Human", "Assistant"], "messages": [["Human", "On a bright sunny day I was "], ["Assistant", ""]], "offset": 0, "conv_id": "da84b42618f34b7bbaf4364a08c25dda", "model_name": "MobiLlama-05B"}, "ip": "172.31.25.123"}

{"tstamp": 1708983764.1363, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708983763.3268, "finish": 1708983764.1363, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "Hi how are you?"], ["Assistant", "Hello! How can I help you today please \ud83d\ude0a"]], "offset": 2, "conv_id": "2325cc89da09435fa365959702fa2b56", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.37.241"}

{"tstamp": 1708983783.8594, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708983781.9502, "finish": 1708983783.8594, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "Write a python code to print a table of a number"], ["Assistant", "Sure! Here is one way you can do it using Python with NumPy arrays as data sources.\r\n```python\r\nimport numpy as np \r\nnumbers = [1024968753., // ASCII value for \"Happy\"\r\n ---------------------// Countries in this alphabet are listed here too if desired (not shown)\r\n ]) # Define array containing numbers from 'input_file', where file path given on command line argument or loaded into variable `numbs` similarily according specification provided by user below during execution time step"]], "offset": 2, "conv_id": "2325cc89da09435fa365959702fa2b56", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.37.241"}

{"tstamp": 1708983818.622, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708983816.9916, "finish": 1708983818.622, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "write a python code to print the factorial of a number"], ["Assistant", "here is one possible solution that could work for your problem without any additional libraries or dependencies on other platforms (Python 3 only):\r\n```python \r\ndef fact(n) : if n <=0 else 'factor_of {}'.format((int)(fact))[1] + str(*list())[:-2][:-4].join() # this function uses `str` instead of regular expressions as it works with integers.\r\nprint(\"Fact value = \", end=\"\")"]], "offset": 2, "conv_id": "2325cc89da09435fa365959702fa2b56", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.12.31"}

{"tstamp": 1708983829.2042, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708983826.7386, "finish": 1708983829.2042, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "write a python code to print the factorial of a number"], ["Assistant", "Sure! Here is Python Code that can help you with your problem.\r\n```python\r\nfactor_factors = [1 ** i for i in range(2)] # initialize list containing factors from 0 upwards (starting at all numbers) \r\nprint(\"The Factorial of %d\"%num)\r\nfor num in factoars[::-1]: if sum([f**i * f^k - k*sum()/j->p == j+len($elems)-4] > len('.')): return True break else : continue end except StopIteration as e:`No such element found` or `None`: raise ValueError(\"%r not positive integer\")"]], "offset": 2, "conv_id": "2325cc89da09435fa365959702fa2b56", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.12.31"}

{"tstamp": 1708983854.4101, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708983851.2397, "finish": 1708983854.4101, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "write a python code to calculate the factorial of a number"], ["Assistant", "Sure! Here is one way you can do it using Python as follows : \r\n\r\n```python # Assume we have already defined `factors` variable containing all possible factors up until n-1.\r\ndef fact(n): return [factor for i in range(2**i) if (4*5 - sum([x * y + z] == s/d[j]) <= d[-3]*b))[:len(f)*6:-8].count((0).flatten())+[(a-.7)+(-9)-sum({_:[m:][\"identity\"]} < _ / g)[::-(g//k)]>= (\"Factoring\" not \"not algebraic\").strftime('%.%')]+pow(.|_.split(\".\")(int(_))))/(abs(__)!._!)*(reverse(*range(``.__list()))[_?<->](_) | reverse(\".\")>().index(!_(.)!(dig"]], "offset": 2, "conv_id": "2325cc89da09435fa365959702fa2b56", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.12.31"}

{"tstamp": 1708983875.5177, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708983872.355, "finish": 1708983875.5177, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "write a code in C language to find the factorial of a number"], ["Assistant", "Sure! Here is one possible implementation using recursion as shown above with some modifications based on your requirements.\r\n```c\r\nint fact(char c){ // define function call for finding faktor (factor) by character value x = 0; \r\n if ((x == '1') && (!isdigit((unsigned char)(p))) ||!('\\n')){\r\n printf(\"%d\\t\", p); exit();\r\n }\r\n\tif (*--v!= '\\b'+'\\f');\r\n else {\r\n *++q++;;\r\n while(*y > v-k+2&&*j >= k)\r\n j -= q - y + gpp(-4);\r\n do{printf(\"-> %e%a:%u -> \", i++, n++){*(i++) += d[g];}\r\n ++m,\r\n 5<=(39-(&putl()))?cout<<\"Shift left \":\""]], "offset": 2, "conv_id": "2325cc89da09435fa365959702fa2b56", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.12.31"}

{"tstamp": 1708983904.2687, "type": "chat", "model": "MobiLlama-1B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1708983901.9497, "finish": 1708983904.2687, "state": {"template_name": "one_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "write a C language code to find the factorial of a number"], ["Assistant", "Here is one way you can implement this in Python using recursion: \n```python\ndef factorial(n):\n if n <=1: # Base case for when n=0 or 1\n return 1\n else: # Recursive call with n-1 as argument\n result = n * factorial(n - 1)\n print(\"The factorial of\", n,\"is\",result)\n \nfactorial(5) \nprint() # This prints \"24\" since it returns 6 x 3! (which equals 72) multiplied by itself three times\n```"]], "offset": 2, "conv_id": "7db4fc8937364fd881dc9cf144dd1467", "model_name": "MobiLlama-1B-Chat"}, "ip": "172.31.50.130"}

2024-02-27-conv.json

ADDED

The diff for this file is too large to render.

2024-02-28-conv.json

ADDED

The diff for this file is too large to render.

2024-02-29-conv.json

ADDED

@@ -0,0 +1,33 @@

{"tstamp": 1709173113.8235, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1709173113.5155, "finish": 1709173113.8235, "state": {"template_name": "zero_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "who are you?"], ["Assistant", "I am an AI language model that helps people find information."]], "offset": 0, "conv_id": "74253ff2f9744483bdb9517b98bc58a3", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.12.31"}

{"tstamp": 1709173839.3768, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1709173838.7165, "finish": 1709173839.3768, "state": {"template_name": "zero_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "What you know about Pakistan cricket?"], ["Assistant", "I'm an AI language model and don't have any knowledge about Pakistan cricket. However, I can provide you with some information about Pakistan cricket."]], "offset": 0, "conv_id": "3b298402f6474d0e872d1e7131b7e642", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.38.44"}

{"tstamp": 1709173851.8231, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1709173851.2365, "finish": 1709173851.8231, "state": {"template_name": "zero_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "Yes sure."], ["Assistant", "As an AI language model, I don't have a physical body, but I can provide you with the information you are looking for."]], "offset": 0, "conv_id": "3b298402f6474d0e872d1e7131b7e642", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.38.44"}

{"tstamp": 1709174172.9003, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1709174172.6649, "finish": 1709174172.9003, "state": {"template_name": "zero_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "Hii how are you "], ["Assistant", "Hi, how are you?"]], "offset": 0, "conv_id": "a1a8982247434c678f7f8e877dd0c20b", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.57.119"}

{"tstamp": 1709174184.9852, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1709174184.4893, "finish": 1709174184.9852, "state": {"template_name": "zero_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "I'm fine "], ["Assistant", "Thanks for letting me know. I'm glad to hear that you're okay. Have you been feeling better lately?"]], "offset": 0, "conv_id": "a1a8982247434c678f7f8e877dd0c20b", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.57.119"}

{"tstamp": 1709174203.8211, "type": "chat", "model": "MobiLlama-05B-Chat", "gen_params": {"temperature": 0.7, "top_p": 1.0, "max_new_tokens": 1024}, "start": 1709174202.7726, "finish": 1709174203.8211, "state": {"template_name": "zero_shot", "system_message": "A chat between a curious human and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the human's questions.", "roles": ["Human", "Assistant"], "messages": [["Human", "What can you do for me?"], ["Assistant", "As an AI language model, I don't have a physical body, but I can provide you with information and help you with your queries. I can help you with your queries related to technology, finance, and any other topic you can think of. Please feel free to ask me anything you want."]], "offset": 0, "conv_id": "a1a8982247434c678f7f8e877dd0c20b", "model_name": "MobiLlama-05B-Chat"}, "ip": "172.31.57.119"}
