first commit

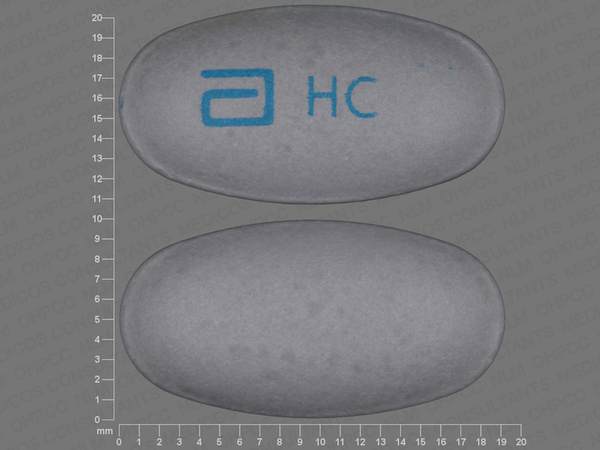

Browse files- RXBASE-600_00071-1014-68_NLMIMAGE10_5715ABFD.jpg +0 -0

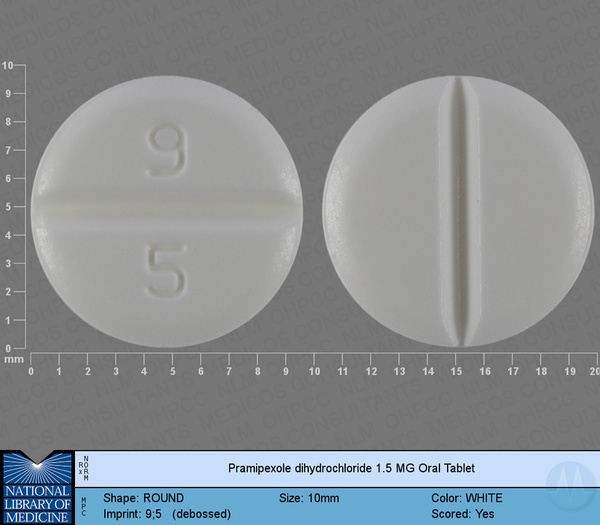

- RXBASE-600_00074-7126-13_NLMIMAGE10_C003606B.jpg +0 -0

- RXNAV-600_13668-0095-90_RXNAVIMAGE10_D145E8EF.jpg +0 -0

- app.py +168 -0

- drug_yolov10.pt +3 -0

- image_class.csv +0 -0

- requirements.txt +4 -0

RXBASE-600_00071-1014-68_NLMIMAGE10_5715ABFD.jpg

ADDED

RXBASE-600_00074-7126-13_NLMIMAGE10_C003606B.jpg

ADDED

RXNAV-600_13668-0095-90_RXNAVIMAGE10_D145E8EF.jpg

ADDED

app.py

ADDED

```python
import gradio as gr
import cv2
import tempfile
from ultralytics import YOLOv10
import pandas as pd

df = pd.read_csv('image_class.csv')
df = df[['name', 'class']]
df.drop_duplicates(inplace=True)
# print(df)

def yolov10_inference(image, video, image_size, conf_threshold, iou_threshold):
    model = YOLOv10('./drug_yolov10.pt')
    # model = YOLOv10('./pills_yolov10.pt')
    if image:
        results = model.predict(source=image, imgsz=image_size, conf=conf_threshold, iou=iou_threshold)
        annotated_image = results[0].plot()
        # Collect the detected objects' class ids, confidences, and drug names
        box = results[0].boxes
        cls = [int(c) for c in box.cls.tolist()]
        cnf = [round(f, 2) for f in box.conf.tolist()]
        name = [df[df['class'] == n]['name'].item() for n in cls]
        # print(cls)
        # print(name)
        # print(list(zip(cls, cnf)))
        # print("Object type:", box.cls)
        # print("Coordinates:", box.xyxy)
        # print("Probability:", box.conf)
        # print('box.class data type', type(box.cls.tolist()))
        return annotated_image[:, :, ::-1], None, list(zip(cls, cnf)), name  # plot() returns BGR; flip to RGB
    else:
        video_path = tempfile.mktemp(suffix=".webm")
        with open(video_path, "wb") as f:
            with open(video, "rb") as g:
                f.write(g.read())

        cap = cv2.VideoCapture(video_path)
        fps = cap.get(cv2.CAP_PROP_FPS)
        frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

        output_video_path = tempfile.mktemp(suffix=".webm")
        out = cv2.VideoWriter(output_video_path, cv2.VideoWriter_fourcc(*'vp80'), fps, (frame_width, frame_height))  # VP8 for .webm

        while cap.isOpened():
            ret, frame = cap.read()
            if not ret:
                break

            results = model.predict(source=frame, imgsz=image_size, conf=conf_threshold, iou=iou_threshold)
            annotated_frame = results[0].plot()
            out.write(annotated_frame)

        cap.release()
        out.release()

        return None, output_video_path, None, None  # one value per output: image, video, class, name


def yolov10_inference_for_examples(image, image_size, conf_threshold, iou_threshold):
    annotated_image, _, output_class, output_name = yolov10_inference(image, None, image_size, conf_threshold, iou_threshold)
    return annotated_image, None, output_class, output_name

def app():
    with gr.Blocks():
        with gr.Row():
            with gr.Column():
                image = gr.Image(type="pil", label="Image", visible=True)
                video = gr.Video(label="Video", visible=False)
                input_type = gr.Radio(
                    choices=["Image", "Video"],
                    value="Image",
                    label="Input Type",
                )
                image_size = gr.Slider(
                    label="Image Size",
                    minimum=0,
                    maximum=1280,
                    step=10,
                    value=640,
                )
                conf_threshold = gr.Slider(
                    label="Confidence Threshold",
                    minimum=0.0,
                    maximum=1.0,
                    step=0.05,
                    value=0.25,
                )
                iou_threshold = gr.Slider(
                    label="IOU Threshold",
                    minimum=0,
                    maximum=1,
                    step=0.1,
                    value=0.6,
                )
                yolov10_infer = gr.Button(value="Detect Objects")

            with gr.Column():
                output_image = gr.Image(type="numpy", label="Annotated Image", visible=True)
                output_video = gr.Video(label="Annotated Video", visible=False)
                output_name = gr.Textbox(label='Predicted Drug Name')
                output_name.change(outputs=output_name)
                output_class = gr.Textbox(label='Predicted Class')
                output_class.change(outputs=output_class)

        def update_visibility(input_type):
            image = gr.update(visible=True) if input_type == "Image" else gr.update(visible=False)
            video = gr.update(visible=False) if input_type == "Image" else gr.update(visible=True)
            output_image = gr.update(visible=True) if input_type == "Image" else gr.update(visible=False)
            output_video = gr.update(visible=False) if input_type == "Image" else gr.update(visible=True)

            return image, video, output_image, output_video

        input_type.change(
            fn=update_visibility,
            inputs=[input_type],
            outputs=[image, video, output_image, output_video],
        )

        def run_inference(image, video, image_size, conf_threshold, iou_threshold, input_type):
            if input_type == "Image":
                return yolov10_inference(image, None, image_size, conf_threshold, iou_threshold)
            else:
                return yolov10_inference(None, video, image_size, conf_threshold, iou_threshold)


        yolov10_infer.click(
            fn=run_inference,
            inputs=[image, video, image_size, conf_threshold, iou_threshold, input_type],
            outputs=[output_image, output_video, output_class, output_name],
        )

        gr.Examples(
            examples=[
                ['./RXBASE-600_00071-1014-68_NLMIMAGE10_5715ABFD.jpg', 280, 0.2, 0.6],
                ['./RXNAV-600_13668-0095-90_RXNAVIMAGE10_D145E8EF.jpg', 640, 0.2, 0.7],
                ['./RXBASE-600_00074-7126-13_NLMIMAGE10_C003606B.jpg', 640, 0.2, 0.8],
            ],
            fn=yolov10_inference_for_examples,
            inputs=[
                image,
                image_size,
                conf_threshold,
                iou_threshold,
            ],
            outputs=[output_image, output_video, output_class, output_name],
            cache_examples='lazy',
        )

gradio_app = gr.Blocks()
with gradio_app:
    gr.HTML(
        """
    <h1 style='text-align: center'>
    YOLOv10: Real-Time End-to-End Object Detection
    </h1>
    """)
    gr.HTML(
        """
        <h3 style='text-align: center'>
        <a href='https://arxiv.org/abs/2405.14458' target='_blank'>arXiv</a> | <a href='https://github.com/THU-MIG/yolov10' target='_blank'>github</a>
        </h3>
        """)
    with gr.Row():
        with gr.Column():
            app()
if __name__ == '__main__':
    gradio_app.launch()
```
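In the image branch of `yolov10_inference`, each detected class index is turned into a drug name with `df[df['class']==n]['name'].item()`. pandas raises `ValueError` from `.item()` whenever that filter matches zero rows or more than one row, which can happen if a class id is missing from `image_class.csv` or still maps to several names after `drop_duplicates`. The sketch below does the same lookup once, up front, using only the standard library, and reports ambiguous ids instead of failing mid-request. It assumes the CSV has the `name` and `class` columns the app reads, with integer class ids; the sample rows and the helper names `build_class_to_name` and `describe_detections` are hypothetical, since the real CSV is not rendered in this diff.

```python
import csv
import io
from collections import defaultdict


def build_class_to_name(csv_text):
    """Map integer class id -> drug name from CSV text with 'name' and 'class' columns.

    Returns (mapping, ambiguous): `ambiguous` lists class ids that map to more than
    one distinct name, the case where pandas' `.item()` would raise ValueError.
    """
    names_per_class = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        names_per_class[int(row["class"])].add(row["name"])
    mapping = {c: next(iter(names)) for c, names in names_per_class.items() if len(names) == 1}
    ambiguous = sorted(c for c, names in names_per_class.items() if len(names) > 1)
    return mapping, ambiguous


def describe_detections(cls, cnf, mapping):
    """Pair each class id with its confidence rounded to 2 places, plus a name (None if unknown)."""
    pairs = list(zip(cls, [round(f, 2) for f in cnf]))
    names = [mapping.get(c) for c in cls]
    return pairs, names


# Hypothetical rows for illustration only; duplicates mimic what drop_duplicates removes.
sample = "name,class\nDrugA 10mg,0\nDrugA 10mg,0\nDrugB 5mg,1\nDrugC,2\nDrugC XR,2\n"
mapping, ambiguous = build_class_to_name(sample)
print(mapping)    # {0: 'DrugA 10mg', 1: 'DrugB 5mg'}
print(ambiguous)  # [2]
```

In the app, such a mapping could be built once at import time and `describe_detections(cls, box.conf.tolist(), mapping)` used in place of the per-detection DataFrame filter, so an unknown id comes back as `None` rather than raising.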

drug_yolov10.pt

ADDED

```
version https://git-lfs.github.com/spec/v1
oid sha256:d953e3db8e56519197a3e6d74d2b078226f7f1cf1de07064631003acb4a493f3
size 33211887
```
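`drug_yolov10.pt` is stored as a Git LFS pointer, so the diff shows three `key value` lines instead of the model weights: the pointer spec version, the object id as `sha256:<hex digest>`, and the size of the real file in bytes (33211887 bytes, about 31.7 MiB). A minimal sketch that reads such a pointer, assuming only the layout shown above; `parse_lfs_pointer` is a hypothetical helper, not part of Git LFS.

```python
def parse_lfs_pointer(text):
    """Parse a Git LFS pointer (one 'key value' pair per line) into a small dict."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid value has the form "<algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "oid_algorithm": algo,
        "oid": digest,
        "size_bytes": int(fields["size"]),
    }


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:d953e3db8e56519197a3e6d74d2b078226f7f1cf1de07064631003acb4a493f3
size 33211887
"""
info = parse_lfs_pointer(pointer)
print(info["oid_algorithm"], info["size_bytes"], round(info["size_bytes"] / 2**20, 1))
# sha256 33211887 31.7
```

A clone made without the LFS objects (for example with `GIT_LFS_SKIP_SMUDGE=1`) leaves this short text file at `./drug_yolov10.pt`, which `YOLOv10('./drug_yolov10.pt')` cannot load as weights; `git lfs pull` fetches the real file.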

image_class.csv

ADDED

The diff for this file is too large to render.

requirements.txt

ADDED

```
gradio==4.32.1
opencv_python==4.8.1.78
opencv_python_headless==4.8.0.74
git+https://github.com/THU-MIG/yolov10
```
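These requirements pin both `opencv_python` and `opencv_python_headless`, at different versions. The opencv-python packaging notes say to install only one of its variants per environment, because they all provide the same `cv2` module. A minimal sketch that flags this from the requirement lines; the `OPENCV_VARIANTS` set and the helpers `normalize` and `find_opencv_conflicts` are assumptions for illustration, and only simple `name==version` pins are handled.

```python
import re

# Assumed set of PyPI distributions that all install the same `cv2` package.
OPENCV_VARIANTS = {
    "opencv-python",
    "opencv-python-headless",
    "opencv-contrib-python",
    "opencv-contrib-python-headless",
}


def normalize(name):
    """PEP 503 name normalization: runs of '-', '_', '.' become '-', then lowercase."""
    return re.sub(r"[-_.]+", "-", name).lower()


def find_opencv_conflicts(lines):
    """Return {normalized_name: version or None} for every OpenCV variant in `lines`."""
    found = {}
    for line in lines:
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith(("git+", "-")):
            continue  # skip blank lines, VCS URLs and pip options
        name, sep, version = line.partition("==")
        name = normalize(name.strip())
        if name in OPENCV_VARIANTS:
            found[name] = version.strip() if sep else None
    return found


requirements = """gradio==4.32.1
opencv_python==4.8.1.78
opencv_python_headless==4.8.0.74
git+https://github.com/THU-MIG/yolov10
"""
conflicts = find_opencv_conflicts(requirements.splitlines())
if len(conflicts) > 1:
    print("More than one OpenCV variant pinned:", conflicts)
```

Keeping a single OpenCV line (the headless build is the usual choice on a server without a display) avoids the overlap.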