
Configuration error

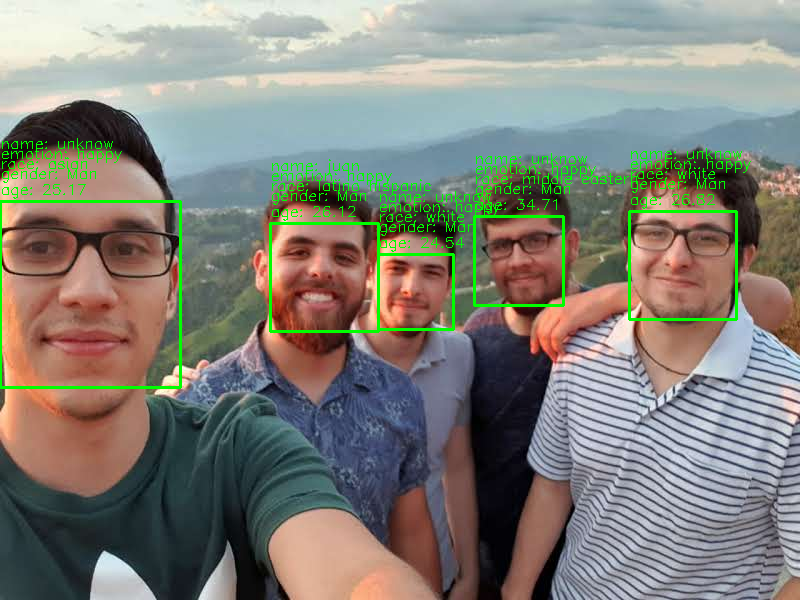

Configuration error

Upload 25 files

- .gitattributes +2 -0

- .gitignore +129 -0

- Face_info.py +45 -0

- LICENSE +21 -0

- README.md +31 -12

- __pycache__/f_Face_info.cpython-310.pyc +0 -0

- __pycache__/f_Face_info.cpython-39.pyc +0 -0

- age_detection/f_my_age.py +70 -0

- config.py +12 -0

- data_test/0.jpg +0 -0

- data_test/1.jpg +0 -0

- data_test/friends.jpg +0 -0

- deep_face.py +9 -0

- emotion_detection/Modelos/model_dropout.hdf5 +3 -0

- emotion_detection/f_emotion_detection.py +37 -0

- f_Face_info.py +112 -0

- gender_detection/f_my_gender.py +57 -0

- images_db/juan.jpg +0 -0

- images_db/karo.jpg +0 -0

- my_face_recognition/f_face_recognition.py +47 -0

- my_face_recognition/f_main.py +107 -0

- my_face_recognition/f_storage.py +57 -0

- race_detection/f_my_race.py +67 -0

- requirements.txt +82 -0

- results/Face_ID.gif +3 -0

- results/result.png +0 -0

.gitattributes

CHANGED

@@ -32,3 +32,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

     *.zip filter=lfs diff=lfs merge=lfs -text
     *.zst filter=lfs diff=lfs merge=lfs -text
     *tfevents* filter=lfs diff=lfs merge=lfs -text
    +emotion_detection/Modelos/model_dropout.hdf5 filter=lfs diff=lfs merge=lfs -text
    +results/Face_ID.gif filter=lfs diff=lfs merge=lfs -text

.gitignore

ADDED

@@ -0,0 +1,129 @@

    # Byte-compiled / optimized / DLL files
    __pycache__/
    *.py[cod]
    *$py.class

    # C extensions
    *.so

    # Distribution / packaging
    .Python
    build/
    develop-eggs/
    dist/
    downloads/
    eggs/
    .eggs/
    lib/
    lib64/
    parts/
    sdist/
    var/
    wheels/
    pip-wheel-metadata/
    share/python-wheels/
    *.egg-info/
    .installed.cfg
    *.egg
    MANIFEST

    # PyInstaller
    # Usually these files are written by a python script from a template
    # before PyInstaller builds the exe, so as to inject date/other infos into it.
    *.manifest
    *.spec

    # Installer logs
    pip-log.txt
    pip-delete-this-directory.txt

    # Unit test / coverage reports
    htmlcov/
    .tox/
    .nox/
    .coverage
    .coverage.*
    .cache
    nosetests.xml
    coverage.xml
    *.cover
    *.py,cover
    .hypothesis/
    .pytest_cache/

    # Translations
    *.mo
    *.pot

    # Django stuff:
    *.log
    local_settings.py
    db.sqlite3
    db.sqlite3-journal

    # Flask stuff:
    instance/
    .webassets-cache

    # Scrapy stuff:
    .scrapy

    # Sphinx documentation
    docs/_build/

    # PyBuilder
    target/

    # Jupyter Notebook
    .ipynb_checkpoints

    # IPython
    profile_default/
    ipython_config.py

    # pyenv
    .python-version

    # pipenv
    # According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
    # However, in case of collaboration, if having platform-specific dependencies or dependencies
    # having no cross-platform support, pipenv may install dependencies that don't work, or not
    # install all needed dependencies.
    #Pipfile.lock

    # PEP 582; used by e.g. github.com/David-OConnor/pyflow
    __pypackages__/

    # Celery stuff
    celerybeat-schedule
    celerybeat.pid

    # SageMath parsed files
    *.sage.py

    # Environments
    .env
    .venv
    env/
    venv/
    ENV/
    env.bak/
    venv.bak/

    # Spyder project settings
    .spyderproject
    .spyproject

    # Rope project settings
    .ropeproject

    # mkdocs documentation
    /site

    # mypy
    .mypy_cache/
    .dmypy.json
    dmypy.json

    # Pyre type checker
    .pyre/

Face_info.py

ADDED

@@ -0,0 +1,45 @@

    import argparse
    import time

    import cv2
    import imutils

    import f_Face_info

    parser = argparse.ArgumentParser(description="Face Info")
    parser.add_argument('--input', type=str, default='webcam', choices=['webcam', 'image'],
                        help="input source: webcam or image")
    parser.add_argument('--path_im', type=str,
                        help="path of the image (required with --input image)")
    args = vars(parser.parse_args())

    type_input = args['input']
    if type_input == 'image':
        # ----------------------------- image -----------------------------
        if not args['path_im']:
            parser.error("--path_im is required when --input is 'image'")
        # load the input image
        frame = cv2.imread(args['path_im'])
        if frame is None:
            raise SystemExit(f"Could not read image: {args['path_im']}")
        # get the face info for the frame
        out = f_Face_info.get_face_info(frame)
        # draw the results on the image
        res_img = f_Face_info.bounding_box(out, frame)
        cv2.imshow('Face info', res_img)
        cv2.waitKey(0)
        cv2.destroyAllWindows()

    if type_input == 'webcam':
        # ----------------------------- webcam -----------------------------
        cv2.namedWindow("Face info")
        cam = cv2.VideoCapture(0)
        while True:
            start_time = time.time()
            ret, frame = cam.read()
            if not ret:
                # no frame available (camera busy or disconnected)
                break
            frame = imutils.resize(frame, width=720)

            # get the face info for the frame
            out = f_Face_info.get_face_info(frame)
            # draw the results on the frame
            res_img = f_Face_info.bounding_box(out, frame)

            end_time = time.time() - start_time
            FPS = 1 / end_time
            cv2.putText(res_img, f"FPS: {round(FPS, 3)}", (10, 50), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 0, 255), 2)
            cv2.imshow('Face info', res_img)
            # press 'q' to quit
            if cv2.waitKey(1) & 0xFF == ord('q'):
                break
        cam.release()
        cv2.destroyAllWindows()
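The webcam loop computes FPS from the processing time of a single frame, so the overlay value jumps from frame to frame. A minimal sketch of an exponentially smoothed FPS counter that could replace the `1 / end_time` computation; `FPSMeter` and its `alpha` parameter are hypothetical, not part of the repository:

```python
class FPSMeter:
    """Hypothetical helper: exponentially smoothed frames-per-second estimate."""

    def __init__(self, alpha=0.1):
        # alpha in (0, 1]: higher values react faster, lower values smooth more
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.fps = None

    def update(self, elapsed_seconds):
        """Feed the processing time of one frame and return the smoothed FPS."""
        if elapsed_seconds <= 0:
            # ignore zero or negative timings instead of dividing by zero
            return self.fps
        instant = 1.0 / elapsed_seconds
        if self.fps is None:
            self.fps = instant
        else:
            self.fps = self.alpha * instant + (1 - self.alpha) * self.fps
        return self.fps


if __name__ == "__main__":
    meter = FPSMeter(alpha=0.5)
    for elapsed in (0.1, 0.05, 0.1):
        print(round(meter.update(elapsed), 3))  # 10.0, 15.0, 12.5
```

Inside the loop, the elapsed time per frame would be passed to `meter.update(...)` and its return value drawn with `cv2.putText`; a smaller `alpha` gives a steadier but slower-reacting reading.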

LICENSE

ADDED

@@ -0,0 +1,21 @@

    MIT License

    Copyright (c) 2020 Juan Camilo López Montes

    Permission is hereby granted, free of charge, to any person obtaining a copy
    of this software and associated documentation files (the "Software"), to deal
    in the Software without restriction, including without limitation the rights
    to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
    copies of the Software, and to permit persons to whom the Software is
    furnished to do so, subject to the following conditions:

    The above copyright notice and this permission notice shall be included in all
    copies or substantial portions of the Software.

    THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
    IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
    FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
    AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
    LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
    OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
    SOFTWARE.

README.md

CHANGED

@@ -1,12 +1,31 @@

    # Face Info
    Face Info is a Python implementation of facial recognition and facial attribute detection (age, gender, emotion, and race).
    The repository provides a script that runs Face Info on a webcam stream or on an image given by its path.
    This implementation can recognize multiple faces and register new users for facial recognition.

    # How to install:
    <pre><code>pip install -r requirements.txt</code></pre>

    # How to run:
    The code was tested with Python 3.7.8 on macOS Catalina.
    <pre><code>python Face_info.py --input webcam</code></pre>

    Running on an image:
    <pre><code>python Face_info.py --input image --path_im data_test/friends.jpg</code></pre>

    # Add new faces to the database (facial recognition)
    You can add new users to the face database simply by adding the person's photo to the **images_db** folder. For the registration to work correctly, only the person of interest should appear in the photo.

    # References

    - **Emotion detection:** https://github.com/juan-csv/emotion_detection
    - **Face Recognition:** https://github.com/juan-csv/face-recognition
    - **Age detection:** https://github.com/serengil/deepface
    - **Gender detection:** https://github.com/serengil/deepface
    - **Race detection:** https://github.com/serengil/deepface
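The README registers a user by dropping a photo into `images_db`. How `f_storage.py` turns a file into a user name is not shown in this diff; a plausible sketch, assuming the name is simply the file name without its extension (so `juan.jpg` becomes `juan`). `list_registered_users` and `IMAGE_SUFFIXES` are hypothetical names:

```python
import tempfile
from pathlib import Path

# Hypothetical set of accepted image extensions
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_registered_users(folder="images_db"):
    """Map user name (file name without extension) -> image path."""
    users = {}
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            users[path.stem] = path
    return users


if __name__ == "__main__":
    # demo on a temporary folder that mimics images_db
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("juan.jpg", "karo.jpg", "notes.txt"):
            (Path(tmp) / name).write_bytes(b"")
        print(sorted(list_registered_users(tmp)))  # ['juan', 'karo']
```

Under this assumption the file name doubles as the label drawn on screen, which is why each photo should contain only the person being registered.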

__pycache__/f_Face_info.cpython-310.pyc

ADDED

Binary file (2.24 kB)

__pycache__/f_Face_info.cpython-39.pyc

ADDED

Binary file (2.26 kB)

age_detection/f_my_age.py

ADDED

@@ -0,0 +1,70 @@

    """
    How to use:
    1. Install the deepface library:
        pip install deepface
    2. Instantiate the model:
        age_model = f_my_age.Age_Model()
    3. Pass an image in which only one face is visible (use a face detection
       model to extract a crop containing only the face):
        age_model.predict_age(face_image)
    """

    #from basemodels import VGGFace
    from deepface.basemodels import VGGFace
    import os
    from pathlib import Path
    import gdown
    import numpy as np
    from keras.models import Model
    from keras.layers import Convolution2D, Flatten, Activation
    from keras.preprocessing import image
    import cv2


    class Age_Model():
        def __init__(self):
            self.model = self.loadModel()
            # one output class per age, from 0 to 100
            self.output_indexes = np.arange(101)

        def predict_age(self, face_image):
            image_preprocesing = self.transform_face_array2age_face(face_image)
            age_predictions = self.model.predict(image_preprocesing)[0, :]
            result_age = self.findApparentAge(age_predictions)
            return result_age

        def loadModel(self):
            model = VGGFace.baseModel()
            #--------------------------
            # attach a 101-class softmax head (ages 0-100) to the VGG-Face base model
            classes = 101
            base_model_output = Convolution2D(classes, (1, 1), name='predictions')(model.layers[-4].output)
            base_model_output = Flatten()(base_model_output)
            base_model_output = Activation('softmax')(base_model_output)
            #--------------------------
            age_model = Model(inputs=model.input, outputs=base_model_output)
            #--------------------------
            # load weights (downloaded once and cached in ~/.deepface/weights)
            home = str(Path.home())
            weights_dir = os.path.join(home, '.deepface', 'weights')
            weights_file = os.path.join(weights_dir, 'age_model_weights.h5')
            if not os.path.isfile(weights_file):
                os.makedirs(weights_dir, exist_ok=True)
                print("age_model_weights.h5 will be downloaded...")
                url = 'https://drive.google.com/uc?id=1YCox_4kJ-BYeXq27uUbasu--yz28zUMV'
                gdown.download(url, weights_file, quiet=False)
            age_model.load_weights(weights_file)
            return age_model

        def findApparentAge(self, age_predictions):
            # expected value of the predicted age distribution
            apparent_age = np.sum(age_predictions * self.output_indexes)
            return apparent_age

        def transform_face_array2age_face(self, face_array, grayscale=False, target_size=(224, 224)):
            detected_face = face_array
            if grayscale:
                detected_face = cv2.cvtColor(detected_face, cv2.COLOR_BGR2GRAY)
            detected_face = cv2.resize(detected_face, target_size)
            img_pixels = image.img_to_array(detected_face)
            img_pixels = np.expand_dims(img_pixels, axis=0)
            # normalize input in [0, 1]
            img_pixels /= 255
            return img_pixels
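`findApparentAge` treats the 101 softmax outputs as a probability distribution over ages 0 to 100 and returns its expected value rather than the single most likely class. A NumPy-only sketch of the same computation, independent of Keras; the prediction vector is made up for illustration:

```python
import numpy as np


def apparent_age(age_predictions):
    """Expected age under a softmax distribution over the ages 0..N-1."""
    probs = np.asarray(age_predictions, dtype=float)
    ages = np.arange(probs.shape[-1])  # same role as Age_Model.output_indexes
    return float(np.sum(probs * ages))


# Illustrative distribution: half the mass on age 20, half on age 30.
preds = np.zeros(101)
preds[20] = 0.5
preds[30] = 0.5
print(apparent_age(preds))  # 25.0
```

Taking the expected value yields a continuous estimate (25.0 here) that falls between the two peaks, whereas an argmax would snap to one of them.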

config.py

ADDED

@@ -0,0 +1,12 @@

    # -------------------------------------- emotion_detection ---------------------------------------
    # emotion detection model
    path_model = 'emotion_detection/Modelos/model_dropout.hdf5'
    # Model parameters: the face image must be converted to 48x48 grayscale
    w, h = 48, 48
    rgb = False
    labels = ['angry', 'disgust', 'fear', 'happy', 'neutral', 'sad', 'surprise']

    # -------------------------------------- face_recognition ---------------------------------------
    # path of the images folder
    path_images = "images_db"
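The emotion model returns one score per entry of `labels`, and the predicted emotion is the label at the index of the highest score. A small sketch of that mapping; returning the score alongside the label is an addition not made by the repository, and the score vector is fabricated:

```python
import numpy as np

# Same label order as config.labels
LABELS = ['angry', 'disgust', 'fear', 'happy', 'neutral', 'sad', 'surprise']


def decode_emotion(prediction, labels=LABELS):
    """Map a (1, n_labels) or (n_labels,) score array to (label, score)."""
    scores = np.asarray(prediction, dtype=float).ravel()
    if scores.size != len(labels):
        raise ValueError(f"expected {len(labels)} scores, got {scores.size}")
    idx = int(scores.argmax())
    return labels[idx], float(scores[idx])


label, score = decode_emotion([[0.05, 0.0, 0.05, 0.7, 0.1, 0.05, 0.05]])
print(label, score)  # happy 0.7
```

The order of `labels` must match the order of the model's output units; reordering the list silently relabels every prediction.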

data_test/0.jpg

ADDED


data_test/1.jpg

ADDED


data_test/friends.jpg

ADDED


deep_face.py

ADDED

@@ -0,0 +1,9 @@

    from deepface import DeepFace

    # the sample photo lives in images_db/ (run from the repository root)
    demography = DeepFace.analyze("images_db/juan.jpg", actions=['age', 'gender', 'race', 'emotion'])
    #demographies = DeepFace.analyze(["img1.jpg", "img2.jpg", "img3.jpg"]) #analyzing multiple faces at the same time
    print("Age: ", demography["age"])
    print("Gender: ", demography["gender"])
    print("Emotion: ", demography["dominant_emotion"])
    print("Race: ", demography["dominant_race"])
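Depending on the installed `deepface` version, `DeepFace.analyze` returns either a single dict or a list with one dict per detected face, so indexing the result directly with `demography["age"]` may fail. A defensive sketch that accepts both shapes; `first_face` is a hypothetical helper and the sample result is fabricated, mirroring only a key the script prints:

```python
def first_face(result):
    """Return the analysis dict of the first face in a DeepFace.analyze result.

    Accepts either a dict (older releases) or a list of dicts (newer releases).
    """
    if isinstance(result, list):
        if not result:
            raise ValueError("no face analysis in result")
        return result[0]
    return result


sample = [{"age": 31, "dominant_emotion": "happy"}]
face = first_face(sample)
print("Age: ", face["age"])
```

With this helper the prints in `deep_face.py` would read from `face = first_face(demography)` regardless of which return shape the library uses.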

emotion_detection/Modelos/model_dropout.hdf5

ADDED

@@ -0,0 +1,3 @@

    version https://git-lfs.github.com/spec/v1
    oid sha256:11b4f80613fe377a57ee0a09046bcfe87d3ccb2a21f9e63dda05bad384981c55
    size 53819464
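These three lines are a Git LFS pointer: the repository stores this small text stub, while the 53,819,464-byte model lives in LFS storage, matching the `.gitattributes` rule for `emotion_detection/Modelos/model_dropout.hdf5`. A sketch of reading such a pointer, assuming only the `key value` line layout shown here; `parse_lfs_pointer` is a hypothetical name:

```python
def parse_lfs_pointer(text):
    """Parse a Git LFS pointer file into its version, oid and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if not fields.get("version", "").startswith("https://git-lfs.github.com/spec/"):
        raise ValueError("not a Git LFS pointer")
    algo, _, digest = fields["oid"].partition(":")
    return {"version": fields["version"], "oid_algo": algo,
            "oid": digest, "size": int(fields["size"])}


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:11b4f80613fe377a57ee0a09046bcfe87d3ccb2a21f9e63dda05bad384981c55
size 53819464
"""
info = parse_lfs_pointer(pointer)
print(info["size"])  # 53819464
```

If `model_dropout.hdf5` on disk still parses as a pointer, the real weights were never fetched (for example, `git lfs pull` was not run), and `load_model(cfg.path_model)` will fail.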

emotion_detection/f_emotion_detection.py

ADDED

@@ -0,0 +1,37 @@

    import config as cfg
    import cv2
    import numpy as np
    from keras.models import load_model
    from keras.preprocessing.image import img_to_array

    class predict_emotions():
        def __init__(self):
            # load the emotion detection model
            self.model = load_model(cfg.path_model)

        def preprocess_img(self, face_image, rgb=True, w=48, h=48):
            face_image = cv2.resize(face_image, (w, h))
            if not rgb:
                face_image = cv2.cvtColor(face_image, cv2.COLOR_BGR2GRAY)
            face_image = face_image.astype("float") / 255.0
            face_image = img_to_array(face_image)
            face_image = np.expand_dims(face_image, axis=0)
            return face_image

        def get_emotion(self, img, boxes_face):
            """boxes_face: list of (left, top, right, bottom) boxes."""
            emotions = []
            if len(boxes_face) != 0:
                for box in boxes_face:
                    left, top, right, bottom = box
                    face_image = img[top:bottom, left:right]
                    # preprocess the data
                    face_image = self.preprocess_img(face_image, cfg.rgb, cfg.w, cfg.h)
                    # predict the emotion
                    prediction = self.model.predict(face_image)
                    emotion = cfg.labels[prediction.argmax()]
                    emotions.append(emotion)
            else:
                emotions = []
                boxes_face = []
            return boxes_face, emotions
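`preprocess_img` resizes the crop to 48x48, converts it to grayscale when `cfg.rgb` is `False`, scales it to [0, 1] and adds channel and batch axes, so the model receives a `(1, 48, 48, 1)` array. A NumPy-only sketch of the steps after resizing, assuming a crop that is already 48x48 and BGR-ordered as OpenCV delivers it; the grayscale weights are the BT.601 coefficients OpenCV documents for `COLOR_BGR2GRAY` (OpenCV's integer arithmetic may differ by rounding):

```python
import numpy as np


def to_model_input(face_bgr):
    """Turn an H x W x 3 BGR uint8 crop into a (1, H, W, 1) float array in [0, 1]."""
    face = np.asarray(face_bgr, dtype=np.float64)
    b, g, r = face[..., 0], face[..., 1], face[..., 2]
    gray = 0.299 * r + 0.587 * g + 0.114 * b  # BT.601 luma weights
    gray = gray / 255.0
    # add the channel axis (what img_to_array does for 2-D input) and the batch axis
    return gray[np.newaxis, :, :, np.newaxis]


crop = np.full((48, 48, 3), 255, dtype=np.uint8)  # a white test crop
x = to_model_input(crop)
print(x.shape)  # (1, 48, 48, 1)
```

The trailing channel axis matters: a model trained on grayscale 48x48 input expects exactly one channel, so passing the 3-channel crop unconverted would fail with a shape mismatch.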

f_Face_info.py

ADDED

@@ -0,0 +1,112 @@

    import cv2
    import numpy as np
    import face_recognition
    from age_detection import f_my_age
    from gender_detection import f_my_gender
    from race_detection import f_my_race
    from emotion_detection import f_emotion_detection
    from my_face_recognition import f_main


    # instantiate the detectors (models are loaded once, at import time)
    age_detector = f_my_age.Age_Model()
    gender_detector = f_my_gender.Gender_Model()
    race_detector = f_my_race.Race_Model()
    emotion_detector = f_emotion_detection.predict_emotions()
    rec_face = f_main.rec()
    #----------------------------------------------


    def get_face_info(im):
        # face detection: each box is (top, right, bottom, left)
        boxes_face = face_recognition.face_locations(im)
        out = []
        if len(boxes_face) != 0:
            for box_face_fc in boxes_face:
                # face segment: convert the box to (left, top, right, bottom)
                top, right, bottom, left = box_face_fc
                box_face = np.array([left, top, right, bottom])
                face_features = {
                    "name": [],
                    "age": [],
                    "gender": [],
                    "race": [],
                    "emotion": [],
                    "bbx_frontal_face": box_face
                }

                face_image = im[top:bottom, left:right]

                # -------------------------------------- face_recognition ---------------------------------------
                face_features["name"] = rec_face.recognize_face2(im, [box_face_fc])[0]

                # -------------------------------------- age_detection ---------------------------------------
                age = age_detector.predict_age(face_image)
                face_features["age"] = str(round(age, 2))

                # -------------------------------------- gender_detection ---------------------------------------
                face_features["gender"] = gender_detector.predict_gender(face_image)

                # -------------------------------------- race_detection ---------------------------------------
                face_features["race"] = race_detector.predict_race(face_image)

                # -------------------------------------- emotion_detection ---------------------------------------
                _, emotion = emotion_detector.get_emotion(im, [box_face])
                face_features["emotion"] = emotion[0]

                # -------------------------------------- out ---------------------------------------
                out.append(face_features)
        else:
            # no face found: return a single empty record
            face_features = {
                "name": [],
                "age": [],
                "gender": [],
                "race": [],
                "emotion": [],
                "bbx_frontal_face": []
            }
            out.append(face_features)
        return out


    def bounding_box(out, img):
        for data_face in out:
            box = data_face["bbx_frontal_face"]
            if len(box) == 0:
                continue
            # box is (left, top, right, bottom); OpenCV expects plain ints
            x0, y0, x1, y1 = (int(v) for v in box)
            img = cv2.rectangle(img, (x0, y0), (x1, y1), (0, 255, 0), 2)
            thickness = 1
            fontSize = 0.5
            step = 13

            # one text line per attribute, stacked above the box
            label_rows = [("age", y0 - 7),
                          ("gender", y0 - step - 10 * 1),
                          ("race", y0 - step - 10 * 2),
                          ("emotion", y0 - step - 10 * 3),
                          ("name", y0 - step - 10 * 4)]
            for key, y in label_rows:
                try:
                    cv2.putText(img, key + ": " + data_face[key], (x0, y),
                                cv2.FONT_HERSHEY_SIMPLEX, fontSize, (0, 255, 0), thickness)
                except (KeyError, TypeError, cv2.error):
                    # missing field or non-string value (e.g. an empty list): skip it
                    pass
        return img
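`face_recognition.face_locations` returns boxes as `(top, right, bottom, left)`, while `bounding_box` and the emotion detector work with `(left, top, right, bottom)`, and the face crop is `im[top:bottom, left:right]` because NumPy images are indexed row (y) first. A standalone sketch of that conversion and crop on a synthetic image; `css_to_ltrb` and `crop_face` are hypothetical names:

```python
import numpy as np


def css_to_ltrb(box):
    """Convert a face_recognition (top, right, bottom, left) box to (left, top, right, bottom)."""
    top, right, bottom, left = box
    return left, top, right, bottom


def crop_face(image, box):
    """Crop a (top, right, bottom, left) box from an H x W x C image."""
    top, right, bottom, left = box
    return image[top:bottom, left:right]


img = np.zeros((100, 200, 3), dtype=np.uint8)  # height 100, width 200
box = (10, 150, 60, 50)                        # top, right, bottom, left
print(css_to_ltrb(box))           # (50, 10, 150, 60)
print(crop_face(img, box).shape)  # (50, 100, 3)
```

Mixing up the two orders typically still produces a crop without raising an error, just of the wrong region, which is why naming the four coordinates explicitly is worth the extra line.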

gender_detection/f_my_gender.py

ADDED

@@ -0,0 +1,57 @@

    #from basemodels import VGGFace
    from deepface.basemodels import VGGFace
    import os
    from pathlib import Path
    import gdown
    import numpy as np
    from keras.models import Model
    from keras.layers import Convolution2D, Flatten, Activation
    from keras.preprocessing import image
    import cv2


    class Gender_Model():
        def __init__(self):
            self.model = self.loadModel()

        def predict_gender(self, face_image):
            image_preprocesing = self.transform_face_array2gender_face(face_image)
            gender_predictions = self.model.predict(image_preprocesing)[0, :]
            # class 0 -> "Woman", class 1 -> "Man"
            if np.argmax(gender_predictions) == 0:
                result_gender = "Woman"
            else:
                result_gender = "Man"
            return result_gender

        def loadModel(self):
            model = VGGFace.baseModel()
            #--------------------------
            # attach a 2-class softmax head to the VGG-Face base model
            classes = 2
            base_model_output = Convolution2D(classes, (1, 1), name='predictions')(model.layers[-4].output)
            base_model_output = Flatten()(base_model_output)
            base_model_output = Activation('softmax')(base_model_output)
            #--------------------------
            gender_model = Model(inputs=model.input, outputs=base_model_output)
            #--------------------------
            # load weights (downloaded once and cached in ~/.deepface/weights)
            home = str(Path.home())
            weights_dir = os.path.join(home, '.deepface', 'weights')
            weights_file = os.path.join(weights_dir, 'gender_model_weights.h5')
            if not os.path.isfile(weights_file):
                os.makedirs(weights_dir, exist_ok=True)
                print("gender_model_weights.h5 will be downloaded...")
                url = 'https://drive.google.com/uc?id=1wUXRVlbsni2FN9-jkS_f4UTUrm1bRLyk'
                gdown.download(url, weights_file, quiet=False)
            gender_model.load_weights(weights_file)
            return gender_model

        def transform_face_array2gender_face(self, face_array, grayscale=False, target_size=(224, 224)):
            detected_face = face_array
            if grayscale:
                detected_face = cv2.cvtColor(detected_face, cv2.COLOR_BGR2GRAY)
            detected_face = cv2.resize(detected_face, target_size)
            img_pixels = image.img_to_array(detected_face)
            img_pixels = np.expand_dims(img_pixels, axis=0)
            # normalize input in [0, 1]
            img_pixels /= 255
            return img_pixels
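`Age_Model.loadModel` and `Gender_Model.loadModel` share one pattern: look for the weights under `~/.deepface/weights/`, download them with `gdown` if missing, then load them. A sketch of the path-handling part with `pathlib`, using hypothetical helpers `weights_path` and `needs_download` (not in the repository) that also guarantee the cache folder exists before the first download; the download itself is left to the caller:

```python
import tempfile
from pathlib import Path


def weights_path(filename, home=None):
    """Return <home>/.deepface/weights/<filename>, creating the folder if needed.

    `home` defaults to the current user's home directory (Path.home()).
    """
    base = Path(home) if home is not None else Path.home()
    folder = base / ".deepface" / "weights"
    folder.mkdir(parents=True, exist_ok=True)
    return folder / filename


def needs_download(filename, home=None):
    """True when the weights file is not cached yet."""
    return not weights_path(filename, home).is_file()


if __name__ == "__main__":
    # demo against a throw-away directory instead of the real home folder
    with tempfile.TemporaryDirectory() as tmp:
        target = weights_path("gender_model_weights.h5", home=tmp)
        print(target, needs_download("gender_model_weights.h5", home=tmp))
```

In `loadModel`, the caller would then run `gdown.download(url, str(target), quiet=False)` only when `needs_download(...)` is true, and pass `str(target)` to `load_weights`.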

images_db/juan.jpg

ADDED


images_db/karo.jpg

ADDED


my_face_recognition/f_face_recognition.py

ADDED

```python
import face_recognition
import numpy as np

def detect_face(image):
    '''
    Input: image as numpy.ndarray, shape=(H,W,3)
    Output: [(y0,x1,y1,x0),(y0,x1,y1,x0),...,(y0,x1,y1,x0)], each tuple is one detected face
            (face_recognition order: top, right, bottom, left)
    If nothing is detected --> Output: []
    '''
    Output = face_recognition.face_locations(image)
    return Output

def get_features(img,box):
    '''
    Input:
    -img: image as numpy.ndarray, shape=(H,W,3)
    -box: [(y0,x1,y1,x0),(y0,x1,y1,x0),...,(y0,x1,y1,x0)], each tuple is one detected face
    Output:
    -features: [array,array,...,array], each array holds the features of one face
    '''
    features = face_recognition.face_encodings(img,box)
    return features

def compare_faces(face_encodings,db_features,db_names):
    '''
    Input:
    face_encodings = [array,array,...,array], features of the faces to identify
    db_features = array(array,array,...,array), each row holds the features of one user
    db_names = [name1,name2,...,namen], list of strings, one per row of db_features
    Output:
    -match_name: ['name', 'unknow'], list with the names that matched
    if a face is present but matches nobody, 'unknow' is returned for it
    '''
    match_name = []
    # a database with a single user is stored as a 1-D array; promote it to 2-D
    # so face_distance always receives a list of encodings
    Feats_temp = np.atleast_2d(db_features)

    for face_encoding in face_encodings:
        # Euclidean distance from this face to every stored face
        dist = face_recognition.face_distance(Feats_temp,face_encoding)
        index = np.argmin(dist)
        # 0.6 is also the default tolerance of face_recognition.compare_faces
        if dist[index] <= 0.6:
            match_name.append(db_names[index])
        else:
            match_name.append("unknow")
    return match_name
```
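The matching rule in `compare_faces` is a nearest-neighbour search under Euclidean distance with a fixed 0.6 cut-off. The sketch below reproduces that rule with NumPy alone so it can be tried without `face_recognition`; the helper name `match_by_distance` is hypothetical, and the 3-D toy vectors stand in for the 128-D encodings that `face_recognition.face_encodings` produces.

```python
import numpy as np


def match_by_distance(queries, db_features, db_names, threshold=0.6):
    """Hypothetical helper mirroring compare_faces.

    For every query encoding, find the closest stored encoding (Euclidean
    distance) and return its name if it lies within `threshold`,
    otherwise the literal "unknow" used by the project.
    """
    # a single stored user may be a 1-D vector; make it a (1, dim) matrix
    db = np.atleast_2d(np.asarray(db_features, dtype=np.float64))
    names = []
    for q in queries:
        # distance from this query to every row of the database
        dist = np.linalg.norm(db - np.asarray(q, dtype=np.float64), axis=1)
        best = int(np.argmin(dist))
        names.append(db_names[best] if dist[best] <= threshold else "unknow")
    return names


db = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
print(match_by_distance([[0.1, 0.0, 0.0], [5.0, 5.0, 5.0]], db, ["juan", "karo"]))
# ['juan', 'unknow']
```

Only the closest stored face is considered, so two users whose encodings both fall under 0.6 never produce an ambiguous answer; the threshold alone decides between "known" and "unknow".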

my_face_recognition/f_main.py

ADDED

```python
from my_face_recognition import f_face_recognition as rec_face
from my_face_recognition import f_storage as st
import traceback
import numpy as np
import cv2

#------------------------ Main flow starts here ----------------------------
class rec():
    def __init__(self):
        '''
        -db_names: [name1,name2,...,namen] list of strings
        -db_features: array(array,array,...,array), each array holds the features of one user
        '''
        self.db_names, self.db_features = st.load_images_to_database()

    def recognize_face(self,im):
        '''
        Input:
        -im: image (numpy.ndarray)
        Output:
        res:{'status': 'ok' if everything goes well, otherwise the error that was raised,
             'faces': [(y0,x1,y1,x0),(y0,x1,y1,x0),...,(y0,x1,y1,x0)], each tuple is one detected face,
             'names': ['name', 'unknow'] list with the names that matched}
        '''
        try:
            # detect faces
            box_faces = rec_face.detect_face(im)
            # case where no face is detected
            if not box_faces:
                res = {
                    'status':'ok',
                    'faces':[],
                    'names':[]}
                return res
            else:
                # empty database: every detected face is unknown
                if not self.db_names:
                    res = {
                        'status':'ok',
                        'faces':box_faces,
                        'names':['unknow']*len(box_faces)}
                    return res
                else:
                    # (continued) extract features
                    actual_features = rec_face.get_features(im,box_faces)
                    # compare actual_features with the ones stored in the database
                    match_names = rec_face.compare_faces(actual_features,self.db_features,self.db_names)
                    # build the result
                    res = {
                        'status':'ok',
                        'faces':box_faces,
                        'names':match_names}
                    return res
        except Exception as ex:
            # positional arguments: the etype= keyword no longer exists in Python 3.10+
            error = ''.join(traceback.format_exception(type(ex), ex, ex.__traceback__))
            res = {
                'status':'error: ' + str(error),
                'faces':[],
                'names':[]}
            return res

    def recognize_face2(self,im,box_faces):
        '''
        Like recognize_face, but for faces that were already detected:
        returns one name per box in box_faces ('unknow' when there is no match).
        '''
        try:
            if not self.db_names:
                # empty database: return a list, consistent with the other branches
                res = ['unknow']*len(box_faces)
                return res
            else:
                # (continued) extract features
                actual_features = rec_face.get_features(im,box_faces)
                # compare actual_features with the ones stored in the database
                match_names = rec_face.compare_faces(actual_features,self.db_features,self.db_names)
                # build the result
                res = match_names
                return res
        except Exception:
            res = []
            return res


def bounding_box(img,box,match_name=None):
    for i in range(len(box)):
        # face_recognition boxes are (top, right, bottom, left) = (y0,x1,y1,x0)
        y0,x1,y1,x0 = box[i]
        img = cv2.rectangle(img,
                            (x0,y0),
                            (x1,y1),
                            (0,255,0),3)
        if not match_name:
            continue
        else:
            cv2.putText(img, match_name[i], (x0, y1-10), cv2.FONT_HERSHEY_SIMPLEX, 0.9, (0,255,0), 2)
    return img

if __name__ == "__main__":
    import argparse

    parse = argparse.ArgumentParser()
    parse.add_argument("-im","--path_im",help="path to the image")
    parse = parse.parse_args()

    path_im = parse.path_im
    im = cv2.imread(path_im)
    # instantiate the recognizer
    recognizer = rec()
    res = recognizer.recognize_face(im)
    im = bounding_box(im,res["faces"],res["names"])
    cv2.imshow("face recognition", im)
    cv2.waitKey(0)
    print(res)
```
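`bounding_box` has to translate between two coordinate conventions: `face_recognition` reports a face as `(top, right, bottom, left)`, while `cv2.rectangle` takes two `(x, y)` corner points. The sketch below isolates that conversion in plain Python; the helper names `to_cv2_corners` and `label_origin` are hypothetical, and the 10-pixel label offset simply mirrors the `y1-10` used in `bounding_box`.

```python
def to_cv2_corners(box):
    """Convert a face_recognition box (top, right, bottom, left)
    into the two (x, y) corner points that cv2.rectangle expects."""
    top, right, bottom, left = box
    return (left, top), (right, bottom)


def label_origin(box, offset=10):
    """Text anchor as placed by bounding_box: left edge, `offset` px above the bottom edge."""
    top, right, bottom, left = box
    return (left, bottom - offset)


box = (40, 180, 160, 60)  # top=40, right=180, bottom=160, left=60
print(to_cv2_corners(box))  # ((60, 40), (180, 160))
print(label_origin(box))    # (60, 150)
```

Unpacking the tuple in the wrong order does not raise an error in OpenCV; it just draws a rectangle in the wrong place, which is why naming the four values explicitly is worth the extra line.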

my_face_recognition/f_storage.py

ADDED

```python
'''
Loads the face images stored in the folder cfg.path_images
'''
import config as cfg
import os
# import the detection helpers directly; f_main itself imports this module
from my_face_recognition import f_face_recognition
import cv2
import numpy as np
import traceback


def load_images_to_database():
    list_images = os.listdir(cfg.path_images)
    # drop files that are not JPEG images (case-insensitive)
    list_images = [File for File in list_images if File.lower().endswith(('.jpg','.jpeg'))]

    # initialize variables
    name = []
    Feats = []

    # image ingestion
    for file_name in list_images:
        im = cv2.imread(cfg.path_images+os.sep+file_name)
        if im is None:
            # unreadable or corrupt file
            continue

        # extract the face features
        box_face = f_face_recognition.detect_face(im)
        feat = f_face_recognition.get_features(im,box_face)
        if len(feat)!=1:
            '''
            this means there is no face, or more than one face, in the image
            '''
            continue
        else:
            # insert the new features into the database
            new_name = file_name.split(".")[0]
            if new_name == "":
                continue
            name.append(new_name)
            if len(Feats)==0:
                Feats = np.frombuffer(feat[0], dtype=np.float64)
            else:
                Feats = np.vstack((Feats,np.frombuffer(feat[0], dtype=np.float64)))
    return name, Feats

def insert_new_user(rec_face,name,feat,im):
    # rec_face: an f_main.rec instance whose in-memory database is updated
    try:
        rec_face.db_names.append(name)
        if len(rec_face.db_features)==0:
            rec_face.db_features = np.frombuffer(feat[0], dtype=np.float64)
        else:
            rec_face.db_features = np.vstack((rec_face.db_features,np.frombuffer(feat[0], dtype=np.float64)))
        # save the image
        cv2.imwrite(cfg.path_images+os.sep+name+".jpg", im)
        return 'ok'
    except Exception as ex:
        # positional arguments: the etype= keyword no longer exists in Python 3.10+
        error = ''.join(traceback.format_exception(type(ex), ex, ex.__traceback__))
        return error
```
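`load_images_to_database` applies three filters (JPEG extension, exactly one face, non-empty name) and grows the feature matrix one row at a time, which leaves a one-user database as a 1-D vector. The sketch below applies the same filters but stacks once at the end so the result is always `(n_users, dim)`. It is a hypothetical stand-in: `build_database` and its input dictionary (file name mapped to the encodings that `detect_face` + `get_features` would return) are assumptions made so the logic runs without images, and the keys are sorted only to make the output deterministic, whereas the original iterates in `os.listdir` order.

```python
import numpy as np


def build_database(encodings_by_file):
    """Hypothetical stand-in for load_images_to_database.

    `encodings_by_file` maps a file name to the list of face encodings
    found in that image. Returns (names, features) where features is
    always a 2-D array of shape (n_users, dim).
    """
    names, rows = [], []
    for file_name, feats in sorted(encodings_by_file.items()):
        # same filters as the original: JPEG files only, exactly one face
        if not file_name.lower().endswith((".jpg", ".jpeg")):
            continue
        if len(feats) != 1:
            continue
        name = file_name.split(".")[0]
        if name == "":
            continue
        names.append(name)
        rows.append(np.asarray(feats[0], dtype=np.float64))
    # stacking once keeps a single user as shape (1, dim) instead of (dim,)
    features = np.vstack(rows) if rows else np.empty((0, 0))
    return names, features


names, feats = build_database({
    "juan.jpg": [[0.1, 0.2, 0.3]],
    "karo.jpg": [[0.4, 0.5, 0.6]],
    "group.jpg": [[0.0] * 3, [1.0] * 3],  # two faces: skipped
    "notes.txt": [],                      # not an image: skipped
})
print(names, feats.shape)  # ['juan', 'karo'] (2, 3)
```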

race_detection/f_my_race.py

ADDED

```python
"""
How to use:
1. Instantiate the model
    race_model = f_my_race.Race_Model()
2. Pass in an image showing only one face (use a face detection model to crop an image that contains only the face)
    race_model.predict_race(face_image)
"""

#from basemodels import VGGFace
from deepface.basemodels import VGGFace
import os
from pathlib import Path
import gdown
import numpy as np
from keras.models import Model
from keras.layers import Convolution2D, Flatten, Activation
import zipfile
from keras.preprocessing import image
import cv2


class Race_Model():
    def __init__(self):
        self.model = self.loadModel()
        self.race_labels = ['asian', 'indian', 'black', 'white', 'middle eastern', 'latino hispanic']

    def predict_race(self,face_image):
        image_preprocesing = self.transform_face_array2race_face(face_image)
        race_predictions = self.model.predict(image_preprocesing)[0,:]
        result_race = self.race_labels[np.argmax(race_predictions)]
        return result_race

    def loadModel(self):
        model = VGGFace.baseModel()
        #--------------------------
        # replace the VGG-Face head with a 6-class softmax classifier
        classes = 6
        base_model_output = Convolution2D(classes, (1, 1), name='predictions')(model.layers[-4].output)
        base_model_output = Flatten()(base_model_output)
        base_model_output = Activation('softmax')(base_model_output)
        #--------------------------
        race_model = Model(inputs=model.input, outputs=base_model_output)
        #--------------------------
        #load weights
        home = str(Path.home())
        weights_dir = home+'/.deepface/weights/'
        if not os.path.isfile(weights_dir+'race_model_single_batch.h5'):
            print("race_model_single_batch.h5 will be downloaded...")
            # make sure the target folder exists before downloading into it
            os.makedirs(weights_dir, exist_ok=True)
            #zip
            url = 'https://drive.google.com/uc?id=1nz-WDhghGQBC4biwShQ9kYjvQMpO6smj'
            output = weights_dir+'race_model_single_batch.zip'
            gdown.download(url, output, quiet=False)
            #unzip race_model_single_batch.zip
            with zipfile.ZipFile(output, 'r') as zip_ref:
                zip_ref.extractall(weights_dir)
        race_model.load_weights(weights_dir+'race_model_single_batch.h5')
        return race_model
    #--------------------------
    def transform_face_array2race_face(self,face_array,grayscale=False,target_size = (224, 224)):
        detected_face = face_array
        if grayscale:
            detected_face = cv2.cvtColor(detected_face, cv2.COLOR_BGR2GRAY)
        detected_face = cv2.resize(detected_face, target_size)
        img_pixels = image.img_to_array(detected_face)
        img_pixels = np.expand_dims(img_pixels, axis = 0)
        #normalize input in [0, 1]
        img_pixels /= 255
        return img_pixels
```
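`predict_race` boils down to two array steps around the Keras call: turn a face crop into a normalized batch of one, then map the softmax output back to a label with `argmax`. The NumPy-only sketch below shows both steps; it assumes the crop has already been resized to 224x224 (the original does that with `cv2.resize`), and the helper names `to_model_batch` and `decode_race` are hypothetical.

```python
import numpy as np

RACE_LABELS = ['asian', 'indian', 'black', 'white', 'middle eastern', 'latino hispanic']


def to_model_batch(face):
    """Mimic transform_face_array2race_face after resizing:
    float32 pixels, a leading batch axis, values scaled to [0, 1]."""
    pixels = np.asarray(face, dtype=np.float32)
    batch = np.expand_dims(pixels, axis=0)
    return batch / 255.0


def decode_race(probabilities):
    """Pick the label with the highest softmax score, as predict_race does."""
    return RACE_LABELS[int(np.argmax(probabilities))]


face = np.full((224, 224, 3), 255, dtype=np.uint8)  # dummy all-white face crop
batch = to_model_batch(face)
print(batch.shape, batch.max())                        # (1, 224, 224, 3) 1.0
print(decode_race([0.05, 0.1, 0.05, 0.6, 0.1, 0.1]))  # white
```

The order of `RACE_LABELS` must match the order of the six output units of the `predictions` layer; the list here is copied from `Race_Model.__init__`.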

requirements.txt

ADDED
