Upload 15 files

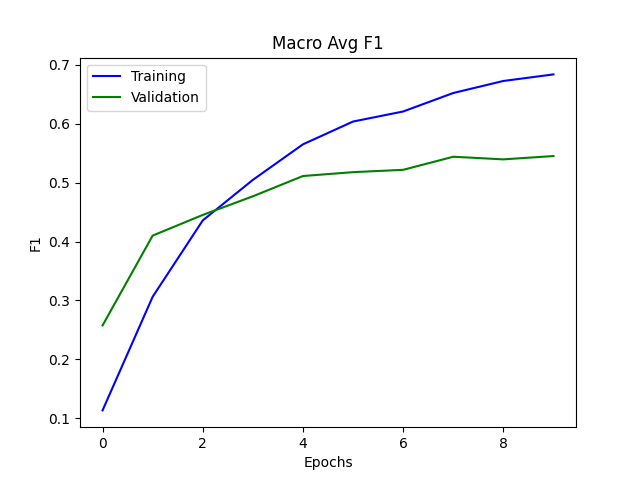

- results/f1_macro.png +0 -0

- results/f1_micro.png +0 -0

- results/f1_weighted.png +0 -0

- results/loss.png +0 -0

- results/precision_macro.png +0 -0

- results/precision_micro.png +0 -0

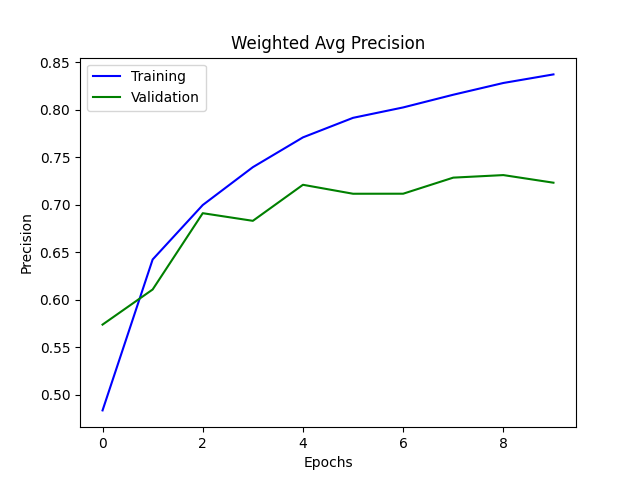

- results/precision_weighted.png +0 -0

- results/recall_macro.png +0 -0

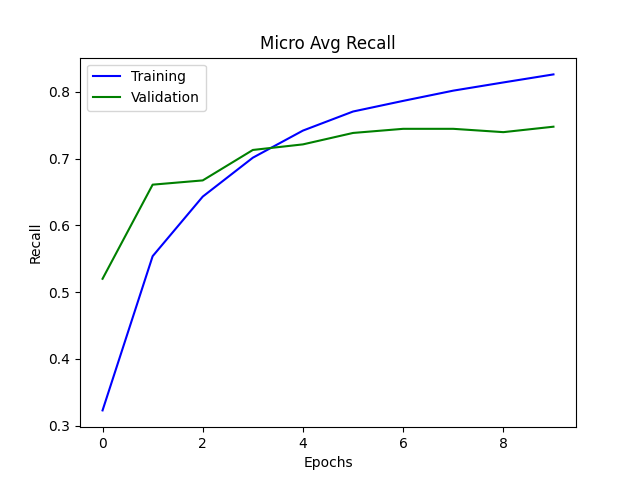

- results/recall_micro.png +0 -0

- results/recall_weighted.png +0 -0

- results/results_E6.txt +45 -0

- results/results_E7.txt +45 -0

- results/results_E8.txt +45 -0

- results/results_E9.txt +45 -0

- results/syngnn_main.log +352 -0

- results/f1_macro.png: ADDED (binary image)
- results/f1_micro.png: ADDED (binary image)
- results/f1_weighted.png: ADDED (binary image)
- results/loss.png: ADDED (binary image)
- results/precision_macro.png: ADDED (binary image)
- results/precision_micro.png: ADDED (binary image)
- results/precision_weighted.png: ADDED (binary image)
- results/recall_macro.png: ADDED (binary image)
- results/recall_micro.png: ADDED (binary image)
- results/recall_weighted.png: ADDED (binary image)

results/results_E6.txt

ADDED

@@ -0,0 +1,45 @@

```
***** Test results *****
Thu Sep 22 06:41:21 2022
Task: ner
Model path: bert-base-uncased
Data path: ./data/ud/
Tokenizer: bert-base-uncased
Batch size: 32
Epoch: 6
Learning rate: 2e-05
LR Decay End Factor: 0.3LR Decay End Epoch: 5Sequence length: 96
Training: True
Num Threads: 24
Num Sentences: 0
Max Grad Norm: 0.0
Use GNN: False
Syntax graph style: dep
Use label weights: False
Clip value: 50
              precision    recall  f1-score   support

    CARDINAL     0.7133    0.6503    0.6803       612
        DATE     0.6922    0.7254    0.7084      1045
       EVENT     0.4429    0.3875    0.4133        80
         FAC     0.3390    0.3974    0.3659       151
         GPE     0.8456    0.8714    0.8583      1936
    LANGUAGE     0.5135    0.2468    0.3333        77
         LAW     0.4130    0.3333    0.3689        57
         LOC     0.5934    0.4977    0.5414       217
       MONEY     0.5370    0.4754    0.5043        61
        NORP     0.6211    0.7536    0.6809       422
     ORDINAL     0.8208    0.8304    0.8256       171
         ORG     0.5289    0.5869    0.5564       857
     PERCENT     0.3333    0.4722    0.3908        36
      PERSON     0.7192    0.7885    0.7523      1371
     PRODUCT     0.2705    0.3367    0.3000        98
    QUANTITY     0.3485    0.4340    0.3866        53
        SEP]     0.0000    0.0000    0.0000         0
        TIME     0.6071    0.6355    0.6210       214
 WORK_OF_ART     0.3000    0.2538    0.2750       130

   micro avg     0.6487    0.7110    0.6784      7588
   macro avg     0.5073    0.5093    0.5033      7588
weighted avg     0.6821    0.7110    0.6946      7588

Special token predictions: 0
```
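The macro and weighted averages in this report can be recomputed from the per-class rows. The minimal Python sketch below uses the E6 F1 scores and supports copied from the table. It shows that the zero-support `SEP]` row is counted in the macro average and pulls it down from about 0.531 to the reported 0.5033. The weighted average is unaffected because that row's weight is 0. The helper names are illustrative only.

```python
# Per-class (label, f1, support) rows copied from results_E6.txt.
E6_ROWS = [
    ("CARDINAL", 0.6803, 612), ("DATE", 0.7084, 1045), ("EVENT", 0.4133, 80),
    ("FAC", 0.3659, 151), ("GPE", 0.8583, 1936), ("LANGUAGE", 0.3333, 77),
    ("LAW", 0.3689, 57), ("LOC", 0.5414, 217), ("MONEY", 0.5043, 61),
    ("NORP", 0.6809, 422), ("ORDINAL", 0.8256, 171), ("ORG", 0.5564, 857),
    ("PERCENT", 0.3908, 36), ("PERSON", 0.7523, 1371), ("PRODUCT", 0.3000, 98),
    ("QUANTITY", 0.3866, 53), ("SEP]", 0.0000, 0), ("TIME", 0.6210, 214),
    ("WORK_OF_ART", 0.2750, 130),
]


def macro_f1(rows, skip=()):
    """Unweighted mean of per-class F1, optionally ignoring some labels."""
    kept = [f1 for label, f1, _ in rows if label not in skip]
    return sum(kept) / len(kept)


def weighted_f1(rows):
    """Support-weighted mean of per-class F1."""
    total = sum(support for _, _, support in rows)
    return sum(f1 * support for _, f1, support in rows) / total


print(f"macro F1, all 19 rows:  {macro_f1(E6_ROWS):.4f}")
print(f"macro F1, without SEP]: {macro_f1(E6_ROWS, skip={'SEP]'}):.4f}")
print(f"weighted F1:            {weighted_f1(E6_ROWS):.4f}")
```

Run as-is, this prints 0.5033, 0.5313 and 0.6946, matching the report's macro and weighted rows to four decimals.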

results/results_E7.txt

ADDED

@@ -0,0 +1,45 @@

```
***** Test results *****
Thu Sep 22 07:44:16 2022
Task: ner
Model path: bert-base-uncased
Data path: ./data/ud/
Tokenizer: bert-base-uncased
Batch size: 32
Epoch: 7
Learning rate: 2e-05
LR Decay End Factor: 0.3LR Decay End Epoch: 5Sequence length: 96
Training: True
Num Threads: 24
Num Sentences: 0
Max Grad Norm: 0.0
Use GNN: False
Syntax graph style: dep
Use label weights: False
Clip value: 50
              precision    recall  f1-score   support

    CARDINAL     0.7030    0.6225    0.6603       612
        DATE     0.7036    0.7177    0.7106      1045
       EVENT     0.4133    0.3875    0.4000        80
         FAC     0.3661    0.4437    0.4012       151
         GPE     0.8721    0.8667    0.8694      1936
    LANGUAGE     0.5758    0.2468    0.3455        77
         LAW     0.3621    0.3684    0.3652        57
         LOC     0.4978    0.5115    0.5045       217
       MONEY     0.5849    0.5082    0.5439        61
        NORP     0.6927    0.7156    0.7040       422
     ORDINAL     0.8035    0.8129    0.8081       171
         ORG     0.5158    0.5893    0.5501       857
     PERCENT     0.3878    0.5278    0.4471        36
      PERSON     0.7476    0.7994    0.7726      1371
     PRODUCT     0.2742    0.3469    0.3063        98
    QUANTITY     0.3443    0.3962    0.3684        53
        SEP]     0.0000    0.0000    0.0000         0
        TIME     0.5816    0.6495    0.6137       214
 WORK_OF_ART     0.3544    0.2154    0.2679       130

   micro avg     0.6680    0.7080    0.6874      7588
   macro avg     0.5148    0.5119    0.5073      7588
weighted avg     0.6955    0.7080    0.6998      7588

Special token predictions: 0
```
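Micro-averaged recall pools true positives over all classes. That makes it identical to the support-weighted mean of per-class recall, and both read 0.7080 in E7. The check below backs out the implied per-class true-positive counts as `recall * support`. This is an approximation, assuming standard recall definitions, because the report rounds to four decimals.

```python
# (label, recall, support) rows copied from results_E7.txt.
E7_RECALL = [
    ("CARDINAL", 0.6225, 612), ("DATE", 0.7177, 1045), ("EVENT", 0.3875, 80),
    ("FAC", 0.4437, 151), ("GPE", 0.8667, 1936), ("LANGUAGE", 0.2468, 77),
    ("LAW", 0.3684, 57), ("LOC", 0.5115, 217), ("MONEY", 0.5082, 61),
    ("NORP", 0.7156, 422), ("ORDINAL", 0.8129, 171), ("ORG", 0.5893, 857),
    ("PERCENT", 0.5278, 36), ("PERSON", 0.7994, 1371), ("PRODUCT", 0.3469, 98),
    ("QUANTITY", 0.3962, 53), ("SEP]", 0.0, 0), ("TIME", 0.6495, 214),
    ("WORK_OF_ART", 0.2154, 130),
]

# recall_i = TP_i / support_i, so TP_i is recoverable (up to the report's
# 4-decimal rounding) as recall_i * support_i, rounded to an integer.
tp = {label: round(recall * support) for label, recall, support in E7_RECALL}
total_support = sum(support for _, _, support in E7_RECALL)

# Micro recall pools the counts: sum(TP) / sum(support).
micro_recall = sum(tp.values()) / total_support
print(sum(tp.values()), total_support, f"{micro_recall:.4f}")
```

This prints `5372 7588 0.7080`, which agrees with both the `micro avg` and `weighted avg` recall columns.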

results/results_E8.txt

ADDED

@@ -0,0 +1,45 @@

```
***** Test results *****
Thu Sep 22 08:47:08 2022
Task: ner
Model path: bert-base-uncased
Data path: ./data/ud/
Tokenizer: bert-base-uncased
Batch size: 32
Epoch: 8
Learning rate: 2e-05
LR Decay End Factor: 0.3LR Decay End Epoch: 5Sequence length: 96
Training: True
Num Threads: 24
Num Sentences: 0
Max Grad Norm: 0.0
Use GNN: False
Syntax graph style: dep
Use label weights: False
Clip value: 50
              precision    recall  f1-score   support

    CARDINAL     0.7269    0.6307    0.6754       612
        DATE     0.6856    0.7053    0.6953      1045
       EVENT     0.4286    0.4500    0.4390        80
         FAC     0.3454    0.4437    0.3884       151
         GPE     0.8709    0.8574    0.8641      1936
    LANGUAGE     0.5758    0.2468    0.3455        77
         LAW     0.4314    0.3860    0.4074        57
         LOC     0.5829    0.4700    0.5204       217
       MONEY     0.5085    0.4918    0.5000        61
        NORP     0.7023    0.7156    0.7089       422
     ORDINAL     0.8258    0.8596    0.8424       171
         ORG     0.5300    0.5776    0.5528       857
     PERCENT     0.4255    0.5556    0.4819        36
      PERSON     0.7398    0.7841    0.7613      1371
     PRODUCT     0.2975    0.3673    0.3288        98
    QUANTITY     0.3284    0.4151    0.3667        53
        SEP]     0.0000    0.0000    0.0000         0
        TIME     0.5586    0.6682    0.6085       214
 WORK_OF_ART     0.3010    0.2385    0.2661       130

   micro avg     0.6607    0.7024    0.6809      7588
   macro avg     0.5192    0.5191    0.5133      7588
weighted avg     0.6968    0.7024    0.6977      7588

Special token predictions: 0
```

results/results_E9.txt

ADDED

@@ -0,0 +1,45 @@

```
***** Test results *****
Thu Sep 22 09:50:02 2022
Task: ner
Model path: bert-base-uncased
Data path: ./data/ud/
Tokenizer: bert-base-uncased
Batch size: 32
Epoch: 9
Learning rate: 2e-05
LR Decay End Factor: 0.3LR Decay End Epoch: 5Sequence length: 96
Training: True
Num Threads: 24
Num Sentences: 0
Max Grad Norm: 0.0
Use GNN: False
Syntax graph style: dep
Use label weights: False
Clip value: 50
              precision    recall  f1-score   support

    CARDINAL     0.6809    0.6520    0.6661       612
        DATE     0.6870    0.7225    0.7043      1045
       EVENT     0.3977    0.4375    0.4167        80
         FAC     0.3404    0.4238    0.3776       151
         GPE     0.8799    0.8549    0.8672      1936
    LANGUAGE     0.4906    0.3377    0.4000        77
         LAW     0.4062    0.4561    0.4298        57
         LOC     0.5000    0.5023    0.5011       217
       MONEY     0.5161    0.5246    0.5203        61
        NORP     0.6817    0.7512    0.7148       422
     ORDINAL     0.8276    0.8421    0.8348       171
         ORG     0.5455    0.5741    0.5594       857
     PERCENT     0.5476    0.6389    0.5897        36
      PERSON     0.7531    0.7943    0.7732      1371
     PRODUCT     0.2937    0.4286    0.3485        98
    QUANTITY     0.3492    0.4151    0.3793        53
        SEP]     0.0000    0.0000    0.0000         0
        TIME     0.5748    0.6822    0.6239       214
 WORK_OF_ART     0.2963    0.2462    0.2689       130

   micro avg     0.6736    0.7127    0.6926      7588
   macro avg     0.5141    0.5413    0.5250      7588
weighted avg     0.6958    0.7127    0.7033      7588

Special token predictions: 0
```
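The four result files differ only in the `Epoch:` value and the scores, so comparing runs mostly comes down to pulling out the three averaged rows. The sketch below is a small parser for that. It assumes the file name suffix `E<n>` matches the `Epoch:` field, which holds for the four files in this commit. `collect` and `results_dir` are hypothetical helpers, not part of the repository.

```python
import re
from pathlib import Path

# Matches the averaged rows of the report, e.g.
# "   micro avg     0.6736    0.7127    0.6926      7588"
AVG_LINE = re.compile(
    r"^\s*(micro|macro|weighted) avg\s+([\d.]+)\s+([\d.]+)\s+([\d.]+)\s+(\d+)\s*$"
)


def parse_averages(text):
    """Return {'micro': (p, r, f1, support), ...} from a results file body."""
    averages = {}
    for line in text.splitlines():
        match = AVG_LINE.match(line)
        if match:
            kind, p, r, f1, support = match.groups()
            averages[kind] = (float(p), float(r), float(f1), int(support))
    return averages


def collect(results_dir="results"):
    """Map epoch number -> averages for every results_E*.txt in results_dir."""
    scores = {}
    for path in sorted(Path(results_dir).glob("results_E*.txt")):
        epoch = int(path.stem.split("_E")[1])  # "results_E9" -> 9
        scores[epoch] = parse_averages(path.read_text())
    return scores


E9_TAIL = """\
   micro avg     0.6736    0.7127    0.6926      7588
   macro avg     0.5141    0.5413    0.5250      7588
weighted avg     0.6958    0.7127    0.7033      7588
"""
e9 = parse_averages(E9_TAIL)
print(e9["micro"])

# Micro F1 per epoch, copied from the four files in this commit.
micro_f1 = {6: 0.6784, 7: 0.6874, 8: 0.6809, 9: 0.6926}
best_epoch = max(micro_f1, key=micro_f1.get)
print("best epoch by micro F1:", best_epoch)
```

By micro F1, E9 (0.6926) is the best of the four runs. E8 dips below E7, so the trend across epochs is not monotonic.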

results/syngnn_main.log

ADDED

@@ -0,0 +1,352 @@

```
Loading model from path bert-base-uncased
Task: ner
Model path: bert-base-uncased
Data path: ./data/ud/
Tokenizer: bert-base-uncased
Batch size: 32
Epochs: 10
Learning rate: 2e-05
LR Decay: 0.3
LR Decay End Epoch: 5
Sequence length: 96
Training: True
Num Threads: 24
Num Sentences: 0
Max Norm: 0.0
Use GNN: False
Use label weights: False
PID: 3523179, PGID: 3523174
ATen/Parallel:
at::get_num_threads() : 24
at::get_num_interop_threads() : 36
OpenMP 201511 (a.k.a. OpenMP 4.5)
omp_get_max_threads() : 24
Intel(R) Math Kernel Library Version 2020.0.0 Product Build 20191122 for Intel(R) 64 architecture applications
mkl_get_max_threads() : 24
Intel(R) MKL-DNN v2.6.0 (Git Hash 52b5f107dd9cf10910aaa19cb47f3abf9b349815)
std::thread::hardware_concurrency() : 72
Environment variables:
OMP_NUM_THREADS : 24
MKL_NUM_THREADS : 24
ATen parallel backend: OpenMP
```

```
Training model
Loading Training Data
Loading NER labels from ./data/ud/**/*-train-orig.ner
en_atis-ud-train-orig.ner
num sentences: 4274
en_cesl-ud-train-orig.ner
num sentences: 4124
en_ewt-ud-train-orig.ner
num sentences: 11649
en_gum-ud-train-orig.ner
num sentences: 5344
en_lines-ud-train-orig.ner
num sentences: 3010
en_partut-ud-train-orig.ner
num sentences: 1739
Example of NER labels: [[['what', 'O'], ['is', 'O'], ['the', 'O'], ['cost', 'O'], ['of', 'O'], ['a', 'O'], ['round', 'O'], ['trip', 'O'], ['flight', 'O'], ['from', 'O'], ['pittsburgh', 'S-GPE'], ['to', 'O'], ['atlanta', 'S-GPE'], ['beginning', 'O'], ['on', 'O'], ['april', 'B-DATE'], ['twenty', 'I-DATE'], ['fifth', 'E-DATE'], ['and', 'O'], ['returning', 'O'], ['on', 'O'], ['may', 'B-DATE'], ['sixth', 'E-DATE']], [['now', 'O'], ['i', 'O'], ['need', 'O'], ['a', 'O'], ['flight', 'O'], ['leaving', 'O'], ['fort', 'B-GPE'], ['worth', 'E-GPE'], ['and', 'O'], ['arriving', 'O'], ['in', 'O'], ['denver', 'S-GPE'], ['no', 'O'], ['later', 'O'], ['than', 'O'], ['2', 'B-TIME'], ['pm', 'E-TIME'], ['next', 'B-DATE'], ['monday', 'E-DATE']]]
30140 sentences, 942 batches of size 32

Control example of InputFeatures
Input Ids: [101, 2085, 1045, 2342, 1037, 3462, 2975, 3481, 4276, 1998, 7194, 1999, 7573, 2053, 2101, 2084, 1016, 7610, 2279, 6928, 102, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Input Mask: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Label Ids: [77, 1, 1, 1, 1, 1, 1, 31, 32, 1, 1, 1, 16, 1, 1, 1, 28, 30, 17, 19, 78, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Valid Ids: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]
Label Mask: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Segment Ids: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
```
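The control example can be reproduced from its word-level tags. The minimal sketch below rebuilds `Label Ids`, `Input Mask` and `Label Mask` for the second training sentence, padded to the sequence length of 96.

It rests on two assumptions. First, the label-to-id map is only the partial subset that can be read off this log by lining up `Label Ids` with the tags: O=1, S-GPE=16, B-DATE=17, E-DATE=19, B-TIME=28, E-TIME=30, B-GPE=31, E-GPE=32, [CLS]=77, [SEP]=78, padding=0. The full map and the project's real feature-conversion code are not in this commit. Second, it assumes every word is a single WordPiece. That holds here, since the 19 words yield 21 input ids including [CLS] (101) and [SEP] (102), but it is not true in general.

```python
MAX_SEQ_LEN = 96  # "Sequence length: 96" in the log

# Partial label map inferred from the logged control example (assumption).
LABEL_MAP = {
    "O": 1, "S-GPE": 16, "B-DATE": 17, "E-DATE": 19, "B-TIME": 28,
    "E-TIME": 30, "B-GPE": 31, "E-GPE": 32, "[CLS]": 77, "[SEP]": 78,
}
PAD_LABEL_ID = 0


def build_label_features(word_tags, max_len=MAX_SEQ_LEN):
    """Return (label_ids, input_mask, label_mask) for one sentence.

    Assumes one WordPiece per word, so no sub-word alignment is done.
    """
    labels = ["[CLS]"] + [tag for _, tag in word_tags] + ["[SEP]"]
    label_ids = [LABEL_MAP[t] for t in labels]
    n = len(label_ids)
    if n > max_len:
        raise ValueError("sentence longer than max_len; truncation not sketched")
    pad = max_len - n
    input_mask = [1] * n + [0] * pad  # attend to real tokens only
    label_mask = [1] * n + [0] * pad  # score real positions only
    return label_ids + [PAD_LABEL_ID] * pad, input_mask, label_mask


EXAMPLE = [
    ("now", "O"), ("i", "O"), ("need", "O"), ("a", "O"), ("flight", "O"),
    ("leaving", "O"), ("fort", "B-GPE"), ("worth", "E-GPE"), ("and", "O"),
    ("arriving", "O"), ("in", "O"), ("denver", "S-GPE"), ("no", "O"),
    ("later", "O"), ("than", "O"), ("2", "B-TIME"), ("pm", "E-TIME"),
    ("next", "B-DATE"), ("monday", "E-DATE"),
]

label_ids, input_mask, label_mask = build_label_features(EXAMPLE)
print(label_ids[:21])
```

The printed prefix matches the non-padding part of the logged `Label Ids`. The `Valid Ids` and `Segment Ids` rows (all 1s and all 0s here) are not modelled.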

```
Loading Validation Data
Loading NER labels from ./data/ud/**/*-dev-orig.ner
en_atis-ud-dev-orig.ner
num sentences: 572
en_cesl-ud-dev-orig.ner
num sentences: 500
en_ewt-ud-dev-orig.ner
num sentences: 1875
en_gum-ud-dev-orig.ner
num sentences: 788
en_lines-ud-dev-orig.ner
num sentences: 986
en_partut-ud-dev-orig.ner
num sentences: 149
Example of NER labels: [[['i', 'O'], ['would', 'O'], ['like', 'O'], ['the', 'O'], ['cheapest', 'O'], ['flight', 'O'], ['from', 'O'], ['pittsburgh', 'S-GPE'], ['to', 'O'], ['atlanta', 'S-GPE'], ['leaving', 'O'], ['april', 'B-DATE'], ['twenty', 'I-DATE'], ['fifth', 'E-DATE'], ['and', 'O'], ['returning', 'O'], ['may', 'B-DATE'], ['sixth', 'E-DATE']], [['i', 'O'], ['want', 'O'], ['a', 'O'], ['flight', 'O'], ['from', 'O'], ['memphis', 'S-LOC'], ['to', 'O'], ['seattle', 'S-FAC'], ['that', 'O'], ['arrives', 'O'], ['no', 'O'], ['later', 'O'], ['than', 'O'], ['3', 'B-TIME'], ['pm', 'E-TIME']]]
4870 sentences, 153 batches of size 32

Control example of InputFeatures
Input Ids: [101, 1045, 2215, 1037, 3462, 2013, 9774, 2000, 5862, 2008, 8480, 2053, 2101, 2084, 1017, 7610, 102, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Input Mask: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Label Ids: [77, 1, 1, 1, 1, 1, 59, 1, 60, 1, 1, 1, 1, 1, 28, 30, 78, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Valid Ids: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]
Label Mask: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Segment Ids: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
```
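The NER labels use the BIOES scheme: B-egin, I-nside, E-nd, S-ingle and O-utside. For example, `april/B-DATE twenty/I-DATE fifth/E-DATE` spans one DATE entity, and `pittsburgh/S-GPE` is a one-word GPE. The sketch below decodes such tags into entity spans and applies it to the first validation sentence. It is a simplified decoder written for illustration, not seqeval's chunking logic: ill-formed runs are dropped rather than repaired.

```python
def bioes_spans(tags):
    """Decode BIOES tags into (entity_type, start, end) spans, end exclusive.

    Simplification: an ill-formed run (e.g. I- or E- without a matching B-)
    is dropped rather than repaired.
    """
    spans = []
    start, current = None, None
    for i, tag in enumerate(tags):
        prefix, _, etype = tag.partition("-")
        if prefix == "S":
            spans.append((etype, i, i + 1))
            start = None
        elif prefix == "B":
            start, current = i, etype
        elif prefix == "I" and start is not None and etype == current:
            continue  # still inside the open entity
        elif prefix == "E" and start is not None and etype == current:
            spans.append((etype, start, i + 1))
            start = None
        else:
            start = None  # "O" or an ill-formed tag closes any open entity
    return spans


# First validation sentence from the log above.
sentence = [
    ("i", "O"), ("would", "O"), ("like", "O"), ("the", "O"), ("cheapest", "O"),
    ("flight", "O"), ("from", "O"), ("pittsburgh", "S-GPE"), ("to", "O"),
    ("atlanta", "S-GPE"), ("leaving", "O"), ("april", "B-DATE"),
    ("twenty", "I-DATE"), ("fifth", "E-DATE"), ("and", "O"),
    ("returning", "O"), ("may", "B-DATE"), ("sixth", "E-DATE"),
]
words = [w for w, _ in sentence]
spans = bioes_spans([t for _, t in sentence])
entities = [(etype, " ".join(words[s:e])) for etype, s, e in spans]
print(entities)
```

The output lists two GPE entities (`pittsburgh`, `atlanta`) and two DATE entities (`april twenty fifth`, `may sixth`).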

```
Test Data
Loading NER labels from ./data/ud/**/*-test-orig.ner
en_atis-ud-test-orig.ner
num sentences: 586
en_cesl-ud-test-orig.ner
num sentences: 500
en_ewt-ud-test-orig.ner
num sentences: 1955
en_gum-ud-test-orig.ner
num sentences: 851
en_lines-ud-test-orig.ner
num sentences: 988
en_pud-ud-test-orig.ner
num sentences: 973
en_partut-ud-test-orig.ner
num sentences: 149
en_pronouns-ud-test-orig.ner
Some weights of the model checkpoint at bert-base-uncased were not used when initializing BertForNer: ['cls.predictions.transform.dense.weight', 'cls.seq_relationship.bias', 'cls.predictions.transform.LayerNorm.bias', 'cls.seq_relationship.weight', 'cls.predictions.bias', 'cls.predictions.transform.LayerNorm.weight', 'cls.predictions.transform.dense.bias', 'cls.predictions.decoder.weight']
- This IS expected if you are initializing BertForNer from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).
- This IS NOT expected if you are initializing BertForNer from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).
Some weights of BertForNer were not initialized from the model checkpoint at bert-base-uncased and are newly initialized: ['classifier.weight', 'classifier.bias']
You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.
num sentences: 265
Example of NER labels: [[['what', 'O'], ['are', 'O'], ['the', 'O'], ['coach', 'O'], ['flights', 'O'], ['between', 'O'], ['dallas', 'S-GPE'], ['and', 'O'], ['baltimore', 'S-GPE'], ['leaving', 'O'], ['august', 'B-DATE'], ['tenth', 'E-DATE'], ['and', 'O'], ['returning', 'O'], ['august', 'B-DATE'], ['twelve', 'E-DATE']], [['i', 'O'], ['want', 'O'], ['a', 'O'], ['flight', 'O'], ['from', 'O'], ['nashville', 'S-GPE'], ['to', 'O'], ['seattle', 'S-GPE'], ['that', 'O'], ['arrives', 'O'], ['no', 'O'], ['later', 'O'], ['than', 'O'], ['3', 'B-TIME'], ['pm', 'E-TIME']]]
6267 sentences, 196 batches of size 32

Control example of InputFeatures
Input Ids: [101, 1045, 2215, 1037, 3462, 2013, 8423, 2000, 5862, 2008, 8480, 2053, 2101, 2084, 1017, 7610, 102, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Input Mask: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Label Ids: [77, 1, 1, 1, 1, 1, 16, 1, 16, 1, 1, 1, 1, 1, 28, 30, 78, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Valid Ids: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]
Label Mask: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Segment Ids: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Adjusting learning rate of group 0 to 2.0000e-05.
```
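The per-treebank sentence counts in the log add up to the logged split totals. The batch counts equal a ceiling division by the batch size of 32, meaning the final partial batch is kept. These are also the totals shown by the progress bars: 942 training, 153 validation and 196 test batches. Note that the `en_pronouns` count (265) is printed after the checkpoint-loading warnings, apparently because stdout and stderr output are interleaved in this log. A quick arithmetic check:

```python
import math

BATCH_SIZE = 32

# Sentence counts per treebank, copied from the log above.
SPLITS = {
    "train": {"atis": 4274, "cesl": 4124, "ewt": 11649, "gum": 5344,
              "lines": 3010, "partut": 1739},
    "dev": {"atis": 572, "cesl": 500, "ewt": 1875, "gum": 788,
            "lines": 986, "partut": 149},
    "test": {"atis": 586, "cesl": 500, "ewt": 1955, "gum": 851,
             "lines": 988, "pud": 973, "partut": 149, "pronouns": 265},
}

summary = {}
for split, counts in SPLITS.items():
    total = sum(counts.values())
    batches = math.ceil(total / BATCH_SIZE)  # last partial batch is kept
    summary[split] = (total, batches)
    print(f"{split}: {total} sentences, {batches} batches of size {BATCH_SIZE}")
```

This prints 30140/942, 4870/153 and 6267/196, the same figures the log reports.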

```
0%| | 0/942 [00:00<?, ?it/s]
/home/9_QuAnTuM_6/cdaniel/venv_syntrans/lib/python3.8/site-packages/seqeval/metrics/sequence_labeling.py:171: UserWarning: [CLS] seems not to be NE tag.
  warnings.warn('{} seems not to be NE tag.'.format(chunk))
/home/9_QuAnTuM_6/cdaniel/venv_syntrans/lib/python3.8/site-packages/seqeval/metrics/sequence_labeling.py:171: UserWarning: <unk> seems not to be NE tag.
  warnings.warn('{} seems not to be NE tag.'.format(chunk))
/home/9_QuAnTuM_6/cdaniel/venv_syntrans/lib/python3.8/site-packages/seqeval/metrics/sequence_labeling.py:171: UserWarning: <START> seems not to be NE tag.
  warnings.warn('{} seems not to be NE tag.'.format(chunk))
/home/9_QuAnTuM_6/cdaniel/venv_syntrans/lib/python3.8/site-packages/seqeval/metrics/sequence_labeling.py:171: UserWarning: X seems not to be NE tag.
  warnings.warn('{} seems not to be NE tag.'.format(chunk))
/home/9_QuAnTuM_6/cdaniel/venv_syntrans/lib/python3.8/site-packages/seqeval/metrics/sequence_labeling.py:171: UserWarning: [SEP] seems not to be NE tag.
  warnings.warn('{} seems not to be NE tag.'.format(chunk))
/home/9_QuAnTuM_6/cdaniel/venv_syntrans/lib/python3.8/site-packages/seqeval/metrics/sequence_labeling.py:171: UserWarning: <STOP> seems not to be NE tag.
  warnings.warn('{} seems not to be NE tag.'.format(chunk))
O Token Predictions: 471383, NER token predictions: 32921
loss: 0.4831624427086608 w prec: 0.48333733331137896 w recall: 0.32263332619404583 w f1: 0.3743943894083346

0%| | 0/153 [00:00<?, ?it/s]
O Token Predictions: 68594, NER token predictions: 6306
loss: 0.24858878246125052 w prec: 0.5736847431314509 w recall: 0.5198167695678152 w f1: 0.5394522935165895
Adjusting learning rate of group 0 to 1.7200e-05.

0%| | 0/942 [00:00<?, ?it/s]
O Token Predictions: 457692, NER token predictions: 46666
loss: 0.23743267873828072 w prec: 0.642246843284797 w recall: 0.5536785178839152 w f1: 0.5869654887673703

0%| | 0/153 [00:00<?, ?it/s]
O Token Predictions: 67004, NER token predictions: 7896
loss: 0.19031139838150124 w prec: 0.6106517236837183 w recall: 0.6608245369448317 w f1: 0.6317247629054747
Adjusting learning rate of group 0 to 1.4400e-05.

0%| | 0/942 [00:00<?, ?it/s]
O Token Predictions: 452542, NER token predictions: 51816
loss: 0.17240141229723796 w prec: 0.6995943496786741 w recall: 0.6429374598415079 w f1: 0.6678698690045989

0%| | 0/153 [00:00<?, ?it/s]
O Token Predictions: 67555, NER token predictions: 7345
loss: 0.16576223912971472 w prec: 0.6909975902827813 w recall: 0.6671977693686517 w f1: 0.6759967434078691
Adjusting learning rate of group 0 to 1.1600e-05.

0%| | 0/942 [00:00<?, ?it/s]
O Token Predictions: 450026, NER token predictions: 54332
loss: 0.13777431711601984 w prec: 0.7394564960771575 w recall: 0.7012208181623474 w f1: 0.718659603222537

0%| | 0/153 [00:00<?, ?it/s]
O Token Predictions: 67201, NER token predictions: 7699
loss: 0.16132921111934326 w prec: 0.6829530578688375 w recall: 0.7128062139016133 w f1: 0.6956742392256572
Adjusting learning rate of group 0 to 8.8000e-06.

0%| | 0/942 [00:00<?, ?it/s]
O Token Predictions: 448629, NER token predictions: 55729
loss: 0.11438079141950405 w prec: 0.770823616445863 w recall: 0.7418344399228957 w f1: 0.7551456680915405

0%| | 0/153 [00:00<?, ?it/s]
O Token Predictions: 67145, NER token predictions: 7755
loss: 0.15573614080941756 w prec: 0.720899538634842 w recall: 0.7211710814578769 w f1: 0.7191736846769018
Adjusting learning rate of group 0 to 6.0000e-06.

0%| | 0/942 [00:00<?, ?it/s]
O Token Predictions: 447725, NER token predictions: 56633
loss: 0.09880779274579161 w prec: 0.7914035913278309 w recall: 0.7704005140286999 w f1: 0.780219783818005

0%| | 0/153 [00:00<?, ?it/s]
O Token Predictions: 66994, NER token predictions: 7906
loss: 0.15097037561578688 w prec: 0.7114692952948101 w recall: 0.7382991435968931 w f1: 0.7234172615803383
Adjusting learning rate of group 0 to 6.0000e-06.

0%| | 0/942 [00:00<?, ?it/s]
O Token Predictions: 447405, NER token predictions: 56953
loss: 0.0908111283029657 w prec: 0.8023543679818423 w recall: 0.7863300492610837 w f1: 0.7937841209593911

0%| | 0/153 [00:00<?, ?it/s]
loss: 0.15317525608112026 w prec: 0.7115102285753689 w recall: 0.7444732125074687 w f1: 0.7263377157668733
Adjusting learning rate of group 0 to 6.0000e-06.
```

| 190 |

+

Model evaluation

|

| 191 |

+

|

| 192 |

+

|

| 193 |

+

|

| 194 |

0%| | 0/196 [00:00<?, ?it/s]

|

| 195 |

+

/home/9_QuAnTuM_6/cdaniel/venv_syntrans/lib/python3.8/site-packages/seqeval/metrics/v1.py:57: UndefinedMetricWarning: Recall and F-score are ill-defined and being set to 0.0 in labels with no true samples. Use `zero_division` parameter to control this behavior.

|

| 196 |

+

_warn_prf(average, modifier, msg_start, len(result))

|

| 197 |

+

***** Test results *****

|

| 198 |

+

precision recall f1-score support

|

| 199 |

+

|

| 200 |

+

CARDINAL 0.7133 0.6503 0.6803 612

|

| 201 |

+

DATE 0.6922 0.7254 0.7084 1045

|

| 202 |

+

EVENT 0.4429 0.3875 0.4133 80

|

| 203 |

+

FAC 0.3390 0.3974 0.3659 151

|

| 204 |

+

GPE 0.8456 0.8714 0.8583 1936

|

| 205 |

+

LANGUAGE 0.5135 0.2468 0.3333 77

|

| 206 |

+

LAW 0.4130 0.3333 0.3689 57

|

| 207 |

+

LOC 0.5934 0.4977 0.5414 217

|

| 208 |

+

MONEY 0.5370 0.4754 0.5043 61

|

| 209 |

+

NORP 0.6211 0.7536 0.6809 422

|

| 210 |

+

ORDINAL 0.8208 0.8304 0.8256 171

|

| 211 |

+

ORG 0.5289 0.5869 0.5564 857

|

| 212 |

+

PERCENT 0.3333 0.4722 0.3908 36

|

| 213 |

+

PERSON 0.7192 0.7885 0.7523 1371

|

| 214 |

+

PRODUCT 0.2705 0.3367 0.3000 98

|

| 215 |

+

QUANTITY 0.3485 0.4340 0.3866 53

|

| 216 |

+

SEP] 0.0000 0.0000 0.0000 0

|

| 217 |

+

TIME 0.6071 0.6355 0.6210 214

|

| 218 |

+

WORK_OF_ART 0.3000 0.2538 0.2750 130

|

| 219 |

+

|

| 220 |

+

micro avg 0.6487 0.7110 0.6784 7588

|

| 221 |

+

macro avg 0.5073 0.5093 0.5033 7588

|

| 222 |

+

weighted avg 0.6821 0.7110 0.6946 7588

|

| 223 |

+

|

| 224 |

+

Special token predictions: 0
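The three summary rows of a seqeval report can be re-derived from the per-label rows.

* **Macro average:** the unweighted mean over all 19 label rows, including the zero-support `SEP]` row. That row is presumably derived from the [SEP] special token, and it is the one the UndefinedMetricWarning refers to. It pulls the macro scores down.
* **Weighted average:** weights each label by its support.
* **Micro-average F1:** the harmonic mean of micro precision and recall.

Micro and weighted recall are necessarily equal (0.7110 in this report). Weighting each label's recall by its support reduces to total true positives over total support.

The sketch below recomputes the first report. Its inputs are the table's rounded 4-digit values, so agreement is only to about 1e-4. The helper names are hypothetical.

```python
# Per-label (precision, recall, f1, support) rows of the first test report above.
REPORT = {
    "CARDINAL": (0.7133, 0.6503, 0.6803, 612),
    "DATE": (0.6922, 0.7254, 0.7084, 1045),
    "EVENT": (0.4429, 0.3875, 0.4133, 80),
    "FAC": (0.3390, 0.3974, 0.3659, 151),
    "GPE": (0.8456, 0.8714, 0.8583, 1936),
    "LANGUAGE": (0.5135, 0.2468, 0.3333, 77),
    "LAW": (0.4130, 0.3333, 0.3689, 57),
    "LOC": (0.5934, 0.4977, 0.5414, 217),
    "MONEY": (0.5370, 0.4754, 0.5043, 61),
    "NORP": (0.6211, 0.7536, 0.6809, 422),
    "ORDINAL": (0.8208, 0.8304, 0.8256, 171),
    "ORG": (0.5289, 0.5869, 0.5564, 857),
    "PERCENT": (0.3333, 0.4722, 0.3908, 36),
    "PERSON": (0.7192, 0.7885, 0.7523, 1371),
    "PRODUCT": (0.2705, 0.3367, 0.3000, 98),
    "QUANTITY": (0.3485, 0.4340, 0.3866, 53),
    "SEP]": (0.0, 0.0, 0.0, 0),
    "TIME": (0.6071, 0.6355, 0.6210, 214),
    "WORK_OF_ART": (0.3000, 0.2538, 0.2750, 130),
}
PRECISION, RECALL, F1, SUPPORT = range(4)


def macro_avg(report, col):
    """Unweighted mean over every label row, zero-support rows included."""
    return sum(row[col] for row in report.values()) / len(report)


def weighted_avg(report, col):
    """Mean of a metric weighted by each label's support."""
    total = sum(row[SUPPORT] for row in report.values())
    return sum(row[col] * row[SUPPORT] for row in report.values()) / total


def harmonic_f1(precision, recall):
    """F1 as the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


without_sep = {name: row for name, row in REPORT.items() if name != "SEP]"}
print(f"total support:         {sum(r[SUPPORT] for r in REPORT.values())}")
print(f"macro F1:              {macro_avg(REPORT, F1):.4f}")
print(f"macro F1 without SEP]: {macro_avg(without_sep, F1):.4f}")
print(f"weighted precision:    {weighted_avg(REPORT, PRECISION):.4f}")
print(f"weighted recall:       {weighted_avg(REPORT, RECALL):.4f}")
print(f"weighted F1:           {weighted_avg(REPORT, F1):.4f}")
print(f"micro F1 (from P, R):  {harmonic_f1(0.6487, 0.7110):.4f}")
```

This prints 0.5033 for the macro F1 and 0.5313 once the empty `SEP]` row is dropped. It also prints 0.6821, 0.7110, 0.6946 and 0.6784 for the weighted precision, weighted recall, weighted F1 and micro F1, matching the report's summary rows.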

0%| | 0/942 [00:00<?, ?it/s]
O Token Predictions: 447026, NER token predictions: 57332
loss: 0.0834173557759834 w prec: 0.815729338081138 w recall: 0.8016705932747912 w f1: 0.8082084594984448

0%| | 0/153 [00:00<?, ?it/s]
loss: 0.15407624415249802 w prec: 0.7284351035722969 w recall: 0.7444732125074687 w f1: 0.7346851885936531
Adjusting learning rate of group 0 to 6.0000e-06.
Model evaluation

0%| | 0/196 [00:00<?, ?it/s]
***** Test results *****
              precision    recall  f1-score   support

    CARDINAL     0.7030    0.6225    0.6603       612
        DATE     0.7036    0.7177    0.7106      1045
       EVENT     0.4133    0.3875    0.4000        80
         FAC     0.3661    0.4437    0.4012       151
         GPE     0.8721    0.8667    0.8694      1936
    LANGUAGE     0.5758    0.2468    0.3455        77
         LAW     0.3621    0.3684    0.3652        57
         LOC     0.4978    0.5115    0.5045       217
       MONEY     0.5849    0.5082    0.5439        61
        NORP     0.6927    0.7156    0.7040       422
     ORDINAL     0.8035    0.8129    0.8081       171
         ORG     0.5158    0.5893    0.5501       857
     PERCENT     0.3878    0.5278    0.4471        36
      PERSON     0.7476    0.7994    0.7726      1371
     PRODUCT     0.2742    0.3469    0.3063        98
    QUANTITY     0.3443    0.3962    0.3684        53
        SEP]     0.0000    0.0000    0.0000         0
        TIME     0.5816    0.6495    0.6137       214
 WORK_OF_ART     0.3544    0.2154    0.2679       130

   micro avg     0.6680    0.7080    0.6874      7588
   macro avg     0.5148    0.5119    0.5073      7588
weighted avg     0.6955    0.7080    0.6998      7588

Special token predictions: 0

0%| | 0/942 [00:00<?, ?it/s]
O Token Predictions: 446823, NER token predictions: 57535
loss: 0.07700805802633807 w prec: 0.8281029278359268 w recall: 0.8139590918826302 w f1: 0.8206010130635916

0%| | 0/153 [00:00<?, ?it/s]
loss: 0.1566143021888398 w prec: 0.7311165058086735 w recall: 0.7394941246763593 w f1: 0.7335244139037927
Adjusting learning rate of group 0 to 6.0000e-06.
Model evaluation

0%| | 0/196 [00:00<?, ?it/s]
***** Test results *****
              precision    recall  f1-score   support

    CARDINAL     0.7269    0.6307    0.6754       612
        DATE     0.6856    0.7053    0.6953      1045
       EVENT     0.4286    0.4500    0.4390        80
         FAC     0.3454    0.4437    0.3884       151
         GPE     0.8709    0.8574    0.8641      1936
    LANGUAGE     0.5758    0.2468    0.3455        77
         LAW     0.4314    0.3860    0.4074        57
         LOC     0.5829    0.4700    0.5204       217
       MONEY     0.5085    0.4918    0.5000        61
        NORP     0.7023    0.7156    0.7089       422
     ORDINAL     0.8258    0.8596    0.8424       171
         ORG     0.5300    0.5776    0.5528       857
     PERCENT     0.4255    0.5556    0.4819        36
      PERSON     0.7398    0.7841    0.7613      1371
     PRODUCT     0.2975    0.3673    0.3288        98
    QUANTITY     0.3284    0.4151    0.3667        53
        SEP]     0.0000    0.0000    0.0000         0
        TIME     0.5586    0.6682    0.6085       214
 WORK_OF_ART     0.3010    0.2385    0.2661       130

   micro avg     0.6607    0.7024    0.6809      7588
   macro avg     0.5192    0.5191    0.5133      7588
weighted avg     0.6968    0.7024    0.6977      7588

Special token predictions: 0

0%| | 0/942 [00:00<?, ?it/s]
O Token Predictions: 446643, NER token predictions: 57715
loss: 0.07239252862554131 w prec: 0.8371405381680805 w recall: 0.8260066395373742 w f1: 0.8311608710414287

0%| | 0/153 [00:00<?, ?it/s]
loss: 0.15688133854540734 w prec: 0.7230917519740736 w recall: 0.7476598287193786 w f1: 0.7338068025133403
Adjusting learning rate of group 0 to 6.0000e-06.
Model evaluation

0%| | 0/196 [00:00<?, ?it/s]
              precision    recall  f1-score   support

    CARDINAL     0.6809    0.6520    0.6661       612
        DATE     0.6870    0.7225    0.7043      1045
       EVENT     0.3977    0.4375    0.4167        80
         FAC     0.3404    0.4238    0.3776       151
         GPE     0.8799    0.8549    0.8672      1936
    LANGUAGE     0.4906    0.3377    0.4000        77
         LAW     0.4062    0.4561    0.4298        57
         LOC     0.5000    0.5023    0.5011       217
       MONEY     0.5161    0.5246    0.5203        61
        NORP     0.6817    0.7512    0.7148       422
     ORDINAL     0.8276    0.8421    0.8348       171
         ORG     0.5455    0.5741    0.5594       857
     PERCENT     0.5476    0.6389    0.5897        36
      PERSON     0.7531    0.7943    0.7732      1371
     PRODUCT     0.2937    0.4286    0.3485        98
    QUANTITY     0.3492    0.4151    0.3793        53
        SEP]     0.0000    0.0000    0.0000         0
        TIME     0.5748    0.6822    0.6239       214
 WORK_OF_ART     0.2963    0.2462    0.2689       130

   micro avg     0.6736    0.7127    0.6926      7588
   macro avg     0.5141    0.5413    0.5250      7588
weighted avg     0.6958    0.7127    0.7033      7588

Special token predictions: 0
Test Data
Loading NER labels from ./data/ud/**/*-test-orig.ner
en_atis-ud-test-orig.ner
num sentences: 586
en_cesl-ud-test-orig.ner
num sentences: 500
en_ewt-ud-test-orig.ner
num sentences: 1955
en_gum-ud-test-orig.ner
num sentences: 851
en_lines-ud-test-orig.ner
num sentences: 988
en_pud-ud-test-orig.ner
num sentences: 973
en_partut-ud-test-orig.ner
num sentences: 149
en_pronouns-ud-test-orig.ner

num sentences: 265
Example of NER labels: [[['what', 'O'], ['are', 'O'], ['the', 'O'], ['coach', 'O'], ['flights', 'O'], ['between', 'O'], ['dallas', 'S-GPE'], ['and', 'O'], ['baltimore', 'S-GPE'], ['leaving', 'O'], ['august', 'B-DATE'], ['tenth', 'E-DATE'], ['and', 'O'], ['returning', 'O'], ['august', 'B-DATE'], ['twelve', 'E-DATE']], [['i', 'O'], ['want', 'O'], ['a', 'O'], ['flight', 'O'], ['from', 'O'], ['nashville', 'S-GPE'], ['to', 'O'], ['seattle', 'S-GPE'], ['that', 'O'], ['arrives', 'O'], ['no', 'O'], ['later', 'O'], ['than', 'O'], ['3', 'B-TIME'], ['pm', 'E-TIME']]]
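The example labels appear to follow a BIOES-style scheme. S- marks a one-word entity, B- and E- mark the first and last words of a multi-word entity, and O marks words outside any entity; in this scheme an I- tag would mark interior words. A minimal decoder that turns such word/tag pairs back into entity spans is sketched below. `bioes_spans` is a hypothetical helper, not the project's loader, and it simply drops malformed sequences such as an E- without a matching B-.

```python
def bioes_spans(tagged):
    """Return (entity_type, text) spans from [[word, tag], ...] pairs in BIOES style.

    Hypothetical helper: S- is a one-word entity; B-/I-/E- open, continue and
    close a multi-word entity; O or any inconsistent tag discards an open span.
    """
    spans = []
    start, open_type = None, None
    for i, (word, tag) in enumerate(tagged):
        prefix, _, entity_type = tag.partition("-")
        if prefix == "S":
            spans.append((entity_type, word))
            start, open_type = None, None
        elif prefix == "B":
            start, open_type = i, entity_type
        elif prefix == "I" and open_type == entity_type:
            continue  # interior word of the open span
        elif prefix == "E" and open_type == entity_type:
            words = [w for w, _ in tagged[start:i + 1]]
            spans.append((entity_type, " ".join(words)))
            start, open_type = None, None
        else:  # "O", or an I-/E- tag that does not continue the open span
            start, open_type = None, None
    return spans


# The two ATIS sentences printed under "Example of NER labels" above.
EXAMPLES = [
    [["what", "O"], ["are", "O"], ["the", "O"], ["coach", "O"], ["flights", "O"],
     ["between", "O"], ["dallas", "S-GPE"], ["and", "O"], ["baltimore", "S-GPE"],
     ["leaving", "O"], ["august", "B-DATE"], ["tenth", "E-DATE"], ["and", "O"],
     ["returning", "O"], ["august", "B-DATE"], ["twelve", "E-DATE"]],
    [["i", "O"], ["want", "O"], ["a", "O"], ["flight", "O"], ["from", "O"],
     ["nashville", "S-GPE"], ["to", "O"], ["seattle", "S-GPE"], ["that", "O"],
     ["arrives", "O"], ["no", "O"], ["later", "O"], ["than", "O"],
     ["3", "B-TIME"], ["pm", "E-TIME"]],
]
for sentence in EXAMPLES:
    print(bioes_spans(sentence))
```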
6267 sentences, 196 batches of size 32
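The per-file counts above sum to the 6267 test sentences. At a batch size of 32 that gives ceil(6267 / 32) = 196 batches, with the last one holding 27 sentences (6267 = 195 × 32 + 27). This is consistent with the 0/196 progress bars of the evaluation passes. A quick arithmetic check, with the counts copied from the log:

```python
import math

# Sentence counts per UD test file, copied from the log above.
TEST_COUNTS = {
    "en_atis": 586, "en_cesl": 500, "en_ewt": 1955, "en_gum": 851,
    "en_lines": 988, "en_pud": 973, "en_partut": 149, "en_pronouns": 265,
}
BATCH_SIZE = 32

total = sum(TEST_COUNTS.values())
num_batches = math.ceil(total / BATCH_SIZE)          # a partial final batch still counts
last_batch = total - (num_batches - 1) * BATCH_SIZE  # sentences in that final batch
print(total, num_batches, last_batch)
```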

Control example of InputFeatures
Input Ids: [101, 1045, 2215, 1037, 3462, 2013, 8423, 2000, 5862, 2008, 8480, 2053, 2101, 2084, 1017, 7610, 102, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Input Mask: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Label Ids: [77, 1, 1, 1, 1, 1, 16, 1, 16, 1, 1, 1, 1, 1, 28, 30, 78, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Valid Ids: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1]
Label Mask: [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
Segment Ids: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
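The control example shows the padded InputFeatures for the second ATIS sentence in the Example of NER labels line. Each of its 15 words maps to a single WordPiece id. The 17 unpadded positions are therefore [CLS] (id 101 in the bert-base-uncased vocabulary), the 15 words, and [SEP] (id 102), followed by zeros out to 96 positions.

Lining up Label Ids with the word labels suggests this mapping:

* O = 1
* S-GPE = 16
* B-TIME = 28
* E-TIME = 30
* 77 and 78 on the [CLS] and [SEP] positions

That mapping is inferred from this one example, not read from the code. The sketch below rebuilds the logged arrays under those assumptions. `build_features` is hypothetical and only handles the one-WordPiece-per-word case. It leaves out Valid Ids, which are all 1 here.

```python
MAX_SEQ_LEN = 96                      # padded length seen in the logged arrays
CLS_ID, SEP_ID, PAD_ID = 101, 102, 0  # bert-base-uncased [CLS], [SEP], [PAD]


def build_features(piece_ids, label_ids, cls_label, sep_label, max_len=MAX_SEQ_LEN):
    """Hypothetical helper: wrap one sentence in [CLS]/[SEP] and zero-pad it to max_len.

    Assumes exactly one WordPiece (and one label) per word, as in the control example.
    """
    if len(piece_ids) != len(label_ids):
        raise ValueError("expected one label per WordPiece id")
    if len(piece_ids) + 2 > max_len:
        raise ValueError("sentence does not fit in max_len positions")
    ids = [CLS_ID, *piece_ids, SEP_ID]
    labels = [cls_label, *label_ids, sep_label]
    n_pad = max_len - len(ids)
    mask = [1] * len(ids) + [0] * n_pad
    return {
        "input_ids": ids + [PAD_ID] * n_pad,
        "input_mask": mask,
        "label_ids": labels + [0] * n_pad,
        "label_mask": list(mask),      # identical to the input mask in this example
        "segment_ids": [0] * max_len,  # single-sentence input
    }


# WordPiece ids of "i want a flight from nashville to seattle that arrives no later
# than 3 pm", copied from the Input Ids line above.
PIECES = [1045, 2215, 1037, 3462, 2013, 8423, 2000, 5862, 2008, 8480, 2053, 2101, 2084, 1017, 7610]
# Word labels as ids, read off the Label Ids line (assumed O=1, S-GPE=16, B-TIME=28, E-TIME=30).
LABELS = [1, 1, 1, 1, 1, 16, 1, 16, 1, 1, 1, 1, 1, 28, 30]

feats = build_features(PIECES, LABELS, cls_label=77, sep_label=78)
print(feats["input_ids"][:17], sum(feats["input_mask"]))
```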

0%| | 0/196 [00:00<?, ?it/s]