Upload . with huggingface_hub

- README.md +434 -0
- config.json +21 -0
- confusion_matrix.png +0 -0
- model.pkl +3 -0

README.md

ADDED

@@ -0,0 +1,434 @@

---
library_name: sklearn
tags:
- sklearn
- skops
- text-classification
---

# Model description

This is a DistilBERT sequence classifier (`distilbert-base-uncased`) wrapped in a skorch `NeuralNetClassifier` and chained with a Hugging Face tokenizer in a scikit-learn `Pipeline`, trained on the 20 Newsgroups dataset.
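To give a sense of the model's size, the layer shapes that this README prints for the DistilBERT module (vocabulary 30522, hidden size 768, feed-forward size 3072, six transformer blocks, a 20-way classifier head) can be tallied into a parameter count. The sketch below is plain arithmetic, not output from the training run; the helper names are made up for illustration, and it assumes that every `Linear` and `LayerNorm` carries a weight and a bias and that embeddings, dropout, and GELU add nothing beyond what is shown.

```python
# Parameter tally from the printed module shapes (illustrative helpers).

def linear(n_in, n_out):
    """Weights plus bias of a Linear(in_features=n_in, out_features=n_out, bias=True)."""
    return n_in * n_out + n_out

def layer_norm(dim):
    """Scale plus shift of a LayerNorm((dim,), elementwise_affine=True)."""
    return 2 * dim

VOCAB, MAX_POS, HIDDEN, FFN_DIM = 30522, 512, 768, 3072
N_BLOCKS, N_LABELS = 6, 20

# Embeddings: word table + position table + LayerNorm.
embeddings = VOCAB * HIDDEN + MAX_POS * HIDDEN + layer_norm(HIDDEN)

# One TransformerBlock: q/k/v/out projections, two LayerNorms, two FFN layers.
attention = 4 * linear(HIDDEN, HIDDEN)
ffn = linear(HIDDEN, FFN_DIM) + linear(FFN_DIM, HIDDEN)
block = attention + layer_norm(HIDDEN) + ffn + layer_norm(HIDDEN)

encoder = embeddings + N_BLOCKS * block

# Classification head: pre_classifier + classifier.
head = linear(HIDDEN, HIDDEN) + linear(HIDDEN, N_LABELS)

total = encoder + head
print(f"per block: {block:,}")   # per block: 7,087,872
print(f"encoder:   {encoder:,}") # encoder:   66,362,880
print(f"total:     {total:,}")   # total:     66,968,852
```

Almost all of the roughly 67 million parameters sit in the pretrained encoder; the 20-class output layer itself contributes only 15,380.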

## Intended uses & limitations

This model was trained for a tutorial and is not ready for production use.

## Training Procedure

### Hyperparameters

The model was trained with the hyperparameters below.

<details>
<summary> Click to expand </summary>

| Hyperparameter | Value |
|------------------------------------------------|-----------------------------------------------------------------------------------------------------------------------------------------|
| memory | |
| steps | [('tokenizer', HuggingfacePretrainedTokenizer(tokenizer='distilbert-base-uncased')), ('net', <class 'skorch.classifier.NeuralNetClassifier'>[initialized](
  module_=BertModule(
    (bert): DistilBertForSequenceClassification(
      (distilbert): DistilBertModel(
        (embeddings): Embeddings(
          (word_embeddings): Embedding(30522, 768, padding_idx=0)
          (position_embeddings): Embedding(512, 768)
          (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (dropout): Dropout(p=0.1, inplace=False)
        )
        (transformer): Transformer(
          (layer): ModuleList(
            (0): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (1): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (2): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (3): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (4): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (5): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
          )
        )
      )
      (pre_classifier): Linear(in_features=768, out_features=768, bias=True)
      (classifier): Linear(in_features=768, out_features=20, bias=True)
      (dropout): Dropout(p=0.2, inplace=False)
    )
  ),
))] |
| verbose | False |
| tokenizer | HuggingfacePretrainedTokenizer(tokenizer='distilbert-base-uncased') |
| net | <class 'skorch.classifier.NeuralNetClassifier'>[initialized](
  module_=BertModule(
    (bert): DistilBertForSequenceClassification(
      (distilbert): DistilBertModel(
        (embeddings): Embeddings(
          (word_embeddings): Embedding(30522, 768, padding_idx=0)
          (position_embeddings): Embedding(512, 768)
          (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (dropout): Dropout(p=0.1, inplace=False)
        )
        (transformer): Transformer(
          (layer): ModuleList(
            (0): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (1): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (2): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (3): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (4): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
            (5): TransformerBlock(
              (attention): MultiHeadSelfAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
          )
        )
      )
      (pre_classifier): Linear(in_features=768, out_features=768, bias=True)
      (classifier): Linear(in_features=768, out_features=20, bias=True)
      (dropout): Dropout(p=0.2, inplace=False)
    )
  ),
) |
| tokenizer__max_length | 256 |
| tokenizer__return_attention_mask | True |
| tokenizer__return_length | False |
| tokenizer__return_tensors | pt |
| tokenizer__return_token_type_ids | False |
| tokenizer__tokenizer | distilbert-base-uncased |
| tokenizer__train | False |
| tokenizer__verbose | 0 |
| tokenizer__vocab_size | |
| net__module | <class '__main__.BertModule'> |
| net__criterion | <class 'torch.nn.modules.loss.CrossEntropyLoss'> |
| net__optimizer | <class 'torch.optim.adamw.AdamW'> |
| net__lr | 5e-05 |
| net__max_epochs | 3 |
| net__batch_size | 8 |
| net__iterator_train | <class 'torch.utils.data.dataloader.DataLoader'> |
| net__iterator_valid | <class 'torch.utils.data.dataloader.DataLoader'> |
| net__dataset | <class 'skorch.dataset.Dataset'> |
| net__train_split | <skorch.dataset.ValidSplit object at 0x7f9945e18c90> |
| net__callbacks | [<skorch.callbacks.lr_scheduler.LRScheduler object at 0x7f9945da85d0>, <skorch.callbacks.logging.ProgressBar object at 0x7f9945da8250>] |
| net__predict_nonlinearity | auto |
| net__warm_start | False |
| net__verbose | 1 |
| net__device | cuda |
| net___params_to_validate | {'module__num_labels', 'module__name', 'iterator_train__shuffle'} |
| net__module__name | distilbert-base-uncased |
| net__module__num_labels | 20 |
| net__iterator_train__shuffle | True |
| net__classes | |
| net__callbacks__epoch_timer | <skorch.callbacks.logging.EpochTimer object at 0x7f993cb300d0> |
| net__callbacks__train_loss | <skorch.callbacks.scoring.PassthroughScoring object at 0x7f993cb306d0> |
| net__callbacks__train_loss__name | train_loss |
| net__callbacks__train_loss__lower_is_better | True |
| net__callbacks__train_loss__on_train | True |
| net__callbacks__valid_loss | <skorch.callbacks.scoring.PassthroughScoring object at 0x7f993cb30ed0> |
| net__callbacks__valid_loss__name | valid_loss |
| net__callbacks__valid_loss__lower_is_better | True |
| net__callbacks__valid_loss__on_train | False |
| net__callbacks__valid_acc | <skorch.callbacks.scoring.EpochScoring object at 0x7f993cb30410> |
| net__callbacks__valid_acc__scoring | accuracy |
| net__callbacks__valid_acc__lower_is_better | False |
| net__callbacks__valid_acc__on_train | False |
| net__callbacks__valid_acc__name | valid_acc |
| net__callbacks__valid_acc__target_extractor | <function to_numpy at 0x7f9945e46a70> |
| net__callbacks__valid_acc__use_caching | True |
| net__callbacks__LRScheduler | <skorch.callbacks.lr_scheduler.LRScheduler object at 0x7f9945da85d0> |
| net__callbacks__LRScheduler__policy | <class 'torch.optim.lr_scheduler.LambdaLR'> |
| net__callbacks__LRScheduler__monitor | train_loss |
| net__callbacks__LRScheduler__event_name | event_lr |
| net__callbacks__LRScheduler__step_every | batch |
| net__callbacks__LRScheduler__lr_lambda | <function lr_schedule at 0x7f9945d9c440> |
| net__callbacks__ProgressBar | <skorch.callbacks.logging.ProgressBar object at 0x7f9945da8250> |
| net__callbacks__ProgressBar__batches_per_epoch | auto |
| net__callbacks__ProgressBar__detect_notebook | True |
| net__callbacks__ProgressBar__postfix_keys | ['train_loss', 'valid_loss'] |
| net__callbacks__print_log | <skorch.callbacks.logging.PrintLog object at 0x7f993cb30dd0> |
| net__callbacks__print_log__keys_ignored | |
| net__callbacks__print_log__sink | <built-in function print> |
| net__callbacks__print_log__tablefmt | simple |
| net__callbacks__print_log__floatfmt | .4f |
| net__callbacks__print_log__stralign | right |

</details>
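The hyperparameter names in the table follow scikit-learn's `get_params()` convention, where a double underscore separates a component from its parameter: `net__lr` is the `lr` of the pipeline step called `net`, and `net__module__num_labels` goes one level deeper. As a reading aid, the sketch below regroups a few of the table's entries by component. `nest_params` is a hypothetical helper written for this illustration, not part of scikit-learn or skorch, and it only handles names whose prefixes are not themselves listed as values.

```python
# Regroup flat "<component>__<parameter>" names into a nested dict
# (illustrative helper, not a scikit-learn API).

def nest_params(flat):
    nested = {}
    for name, value in flat.items():
        *parents, leaf = name.split("__")
        node = nested
        for part in parents:
            # Descend into (or create) the dict for each enclosing component.
            node = node.setdefault(part, {})
        node[leaf] = value
    return nested

# A handful of values copied from the hyperparameter table.
flat = {
    "tokenizer__max_length": 256,
    "tokenizer__tokenizer": "distilbert-base-uncased",
    "net__lr": 5e-05,
    "net__max_epochs": 3,
    "net__batch_size": 8,
    "net__module__num_labels": 20,
    "net__callbacks__valid_acc__scoring": "accuracy",
}

nested = nest_params(flat)
print(nested["tokenizer"])
print(nested["net"]["module"])
print(nested["net"]["callbacks"]["valid_acc"])
```

Read this way, the table splits into two groups: tokenizer settings (sequence length 256, PyTorch tensors) and skorch training settings (AdamW, learning rate 5e-05, 3 epochs, batch size 8).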

### Model Plot

The model plot is below.

<style>#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb {color: black;background-color: white;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb pre{padding: 0;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-toggleable {background-color: white;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb label.sk-toggleable__label {cursor: pointer;display: block;width: 100%;margin-bottom: 0;padding: 0.3em;box-sizing: border-box;text-align: center;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb label.sk-toggleable__label-arrow:before {content: "▸";float: left;margin-right: 0.25em;color: #696969;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb label.sk-toggleable__label-arrow:hover:before {color: black;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-estimator:hover label.sk-toggleable__label-arrow:before {color: black;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-toggleable__content {max-height: 0;max-width: 0;overflow: hidden;text-align: left;background-color: #f0f8ff;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-toggleable__content pre {margin: 0.2em;color: black;border-radius: 0.25em;background-color: #f0f8ff;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb input.sk-toggleable__control:checked~div.sk-toggleable__content {max-height: 200px;max-width: 100%;overflow: auto;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb input.sk-toggleable__control:checked~label.sk-toggleable__label-arrow:before {content: "▾";}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-estimator input.sk-toggleable__control:checked~label.sk-toggleable__label {background-color: #d4ebff;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-label input.sk-toggleable__control:checked~label.sk-toggleable__label {background-color: #d4ebff;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb input.sk-hidden--visually {border: 0;clip: rect(1px 1px 1px 1px);clip: rect(1px, 1px, 1px, 1px);height: 1px;margin: -1px;overflow: hidden;padding: 0;position: absolute;width: 1px;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-estimator {font-family: monospace;background-color: 
#f0f8ff;border: 1px dotted black;border-radius: 0.25em;box-sizing: border-box;margin-bottom: 0.5em;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-estimator:hover {background-color: #d4ebff;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-parallel-item::after {content: "";width: 100%;border-bottom: 1px solid gray;flex-grow: 1;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-label:hover label.sk-toggleable__label {background-color: #d4ebff;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-serial::before {content: "";position: absolute;border-left: 1px solid gray;box-sizing: border-box;top: 2em;bottom: 0;left: 50%;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-serial {display: flex;flex-direction: column;align-items: center;background-color: white;padding-right: 0.2em;padding-left: 0.2em;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-item {z-index: 1;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-parallel {display: flex;align-items: stretch;justify-content: center;background-color: white;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-parallel::before {content: "";position: absolute;border-left: 1px solid gray;box-sizing: border-box;top: 2em;bottom: 0;left: 50%;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-parallel-item {display: flex;flex-direction: column;position: relative;background-color: white;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-parallel-item:first-child::after {align-self: flex-end;width: 50%;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-parallel-item:last-child::after {align-self: flex-start;width: 50%;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-parallel-item:only-child::after {width: 0;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-dashed-wrapped {border: 1px dashed gray;margin: 0 0.4em 0.5em 0.4em;box-sizing: border-box;padding-bottom: 0.4em;background-color: white;position: relative;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-label label {font-family: monospace;font-weight: bold;background-color: white;display: 
inline-block;line-height: 1.2em;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-label-container {position: relative;z-index: 2;text-align: center;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-container {/* jupyter's `normalize.less` sets `[hidden] { display: none; }` but bootstrap.min.css set `[hidden] { display: none !important; }` so we also need the `!important` here to be able to override the default hidden behavior on the sphinx rendered scikit-learn.org. See: https://github.com/scikit-learn/scikit-learn/issues/21755 */display: inline-block !important;position: relative;}#sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb div.sk-text-repr-fallback {display: none;}</style><div id="sk-4e25a02e-dd88-4cf5-9fc1-aa5db6749fbb" class="sk-top-container"><div class="sk-text-repr-fallback"><pre>Pipeline(steps=[('tokenizer',HuggingfacePretrainedTokenizer(tokenizer='distilbert-base-uncased')),('net',<class 'skorch.classifier.NeuralNetClassifier'>[initialized](module_=BertModule((bert): DistilBertForSequenceClassification((distilbert): DistilBertModel((embeddings): Embeddings((word_embeddings): Embedding(30522, 768, padding_idx=0)(position_embeddin...(lin1): Linear(in_features=768, out_features=3072, bias=True)(lin2): Linear(in_features=3072, out_features=768, bias=True)(activation): GELUActivation())(output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)))))(pre_classifier): Linear(in_features=768, out_features=768, bias=True)(classifier): Linear(in_features=768, out_features=20, bias=True)(dropout): Dropout(p=0.2, inplace=False))),

))])</pre><b>Please rerun this cell to show the HTML repr or trust the notebook.</b></div><div class="sk-container" hidden><div class="sk-item sk-dashed-wrapped"><div class="sk-label-container"><div class="sk-label sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="4905268f-3ec2-45fc-8bc7-80d9200ae5a5" type="checkbox" ><label for="4905268f-3ec2-45fc-8bc7-80d9200ae5a5" class="sk-toggleable__label sk-toggleable__label-arrow">Pipeline</label><div class="sk-toggleable__content"><pre>Pipeline(steps=[('tokenizer',HuggingfacePretrainedTokenizer(tokenizer='distilbert-base-uncased')),('net',<class 'skorch.classifier.NeuralNetClassifier'>[initialized](module_=BertModule((bert): DistilBertForSequenceClassification((distilbert): DistilBertModel((embeddings): Embeddings((word_embeddings): Embedding(30522, 768, padding_idx=0)(position_embeddin...(lin1): Linear(in_features=768, out_features=3072, bias=True)(lin2): Linear(in_features=3072, out_features=768, bias=True)(activation): GELUActivation())(output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)))))(pre_classifier): Linear(in_features=768, out_features=768, bias=True)(classifier): Linear(in_features=768, out_features=20, bias=True)(dropout): Dropout(p=0.2, inplace=False))),

))])</pre></div></div></div><div class="sk-serial"><div class="sk-item"><div class="sk-estimator sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="4c9a801f-37d5-4fdb-9892-222c86b927bf" type="checkbox" ><label for="4c9a801f-37d5-4fdb-9892-222c86b927bf" class="sk-toggleable__label sk-toggleable__label-arrow">HuggingfacePretrainedTokenizer</label><div class="sk-toggleable__content"><pre>HuggingfacePretrainedTokenizer(tokenizer='distilbert-base-uncased')</pre></div></div></div><div class="sk-item"><div class="sk-estimator sk-toggleable"><input class="sk-toggleable__control sk-hidden--visually" id="062dd9ff-2b54-4166-90fc-fa3276cd482a" type="checkbox" ><label for="062dd9ff-2b54-4166-90fc-fa3276cd482a" class="sk-toggleable__label sk-toggleable__label-arrow">NeuralNetClassifier</label><div class="sk-toggleable__content"><pre><class 'skorch.classifier.NeuralNetClassifier'>[initialized](module_=BertModule((bert): DistilBertForSequenceClassification((distilbert): DistilBertModel((embeddings): Embeddings((word_embeddings): Embedding(30522, 768, padding_idx=0)(position_embeddings): Embedding(512, 768)(LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)(dropout): Dropout(p=0.1, inplace=False))(transformer): Transformer((layer): ModuleList((0): TransformerBlock((attention): MultiHeadSelfAttention((dropout): Dropout(p=0.1, inplace=False)(q_lin): Linear(in_features=768, out_features=768, bias=True)(k_lin): Linear(in_features=768, out_features=768, bias=True)(v_lin): Linear(in_features=768, out_features=768, bias=True)(out_lin): Linear(in_features=768, out_features=768, bias=True))(sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)(ffn): FFN((dropout): Dropout(p=0.1, inplace=False)(lin1): Linear(in_features=768, out_features=3072, bias=True)(lin2): Linear(in_features=3072, out_features=768, bias=True)(activation): GELUActivation())(output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True))(1): 
TransformerBlock((attention): MultiHeadSelfAttention((dropout): Dropout(p=0.1, inplace=False)(q_lin): Linear(in_features=768, out_features=768, bias=True)(k_lin): Linear(in_features=768, out_features=768, bias=True)(v_lin): Linear(in_features=768, out_features=768, bias=True)(out_lin): Linear(in_features=768, out_features=768, bias=True))(sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)(ffn): FFN((dropout): Dropout(p=0.1, inplace=False)(lin1): Linear(in_features=768, out_features=3072, bias=True)(lin2): Linear(in_features=3072, out_features=768, bias=True)(activation): GELUActivation())(output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True))(2): TransformerBlock((attention): MultiHeadSelfAttention((dropout): Dropout(p=0.1, inplace=False)(q_lin): Linear(in_features=768, out_features=768, bias=True)(k_lin): Linear(in_features=768, out_features=768, bias=True)(v_lin): Linear(in_features=768, out_features=768, bias=True)(out_lin): Linear(in_features=768, out_features=768, bias=True))(sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)(ffn): FFN((dropout): Dropout(p=0.1, inplace=False)(lin1): Linear(in_features=768, out_features=3072, bias=True)(lin2): Linear(in_features=3072, out_features=768, bias=True)(activation): GELUActivation())(output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True))(3): TransformerBlock((attention): MultiHeadSelfAttention((dropout): Dropout(p=0.1, inplace=False)(q_lin): Linear(in_features=768, out_features=768, bias=True)(k_lin): Linear(in_features=768, out_features=768, bias=True)(v_lin): Linear(in_features=768, out_features=768, bias=True)(out_lin): Linear(in_features=768, out_features=768, bias=True))(sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)(ffn): FFN((dropout): Dropout(p=0.1, inplace=False)(lin1): Linear(in_features=768, out_features=3072, bias=True)(lin2): Linear(in_features=3072, out_features=768, bias=True)(activation): 
GELUActivation())(output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True))(4): TransformerBlock((attention): MultiHeadSelfAttention((dropout): Dropout(p=0.1, inplace=False)(q_lin): Linear(in_features=768, out_features=768, bias=True)(k_lin): Linear(in_features=768, out_features=768, bias=True)(v_lin): Linear(in_features=768, out_features=768, bias=True)(out_lin): Linear(in_features=768, out_features=768, bias=True))(sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)(ffn): FFN((dropout): Dropout(p=0.1, inplace=False)(lin1): Linear(in_features=768, out_features=3072, bias=True)(lin2): Linear(in_features=3072, out_features=768, bias=True)(activation): GELUActivation())(output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True))(5): TransformerBlock((attention): MultiHeadSelfAttention((dropout): Dropout(p=0.1, inplace=False)(q_lin): Linear(in_features=768, out_features=768, bias=True)(k_lin): Linear(in_features=768, out_features=768, bias=True)(v_lin): Linear(in_features=768, out_features=768, bias=True)(out_lin): Linear(in_features=768, out_features=768, bias=True))(sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)(ffn): FFN((dropout): Dropout(p=0.1, inplace=False)(lin1): Linear(in_features=768, out_features=3072, bias=True)(lin2): Linear(in_features=3072, out_features=768, bias=True)(activation): GELUActivation())(output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)))))(pre_classifier): Linear(in_features=768, out_features=768, bias=True)(classifier): Linear(in_features=768, out_features=20, bias=True)(dropout): Dropout(p=0.2, inplace=False))),


)</pre></div></div></div></div></div></div></div>


## Evaluation Results

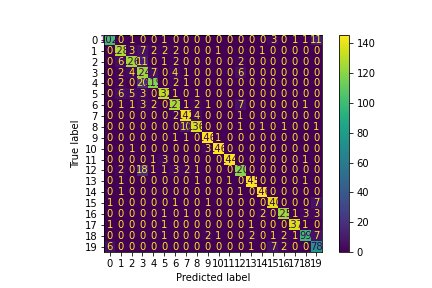


You can find the details of the evaluation process and the evaluation results below.


| Metric   | Value   |
|----------|---------|
| accuracy | 0.90562 |
| f1 score | 0.90562 |


# How to Get Started with the Model


Use the code below to get started with the model.


<details>
<summary> Click to expand </summary>

```python
[More Information Needed]
```

</details>
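Until the snippet above is filled in, the following is a minimal sketch of one way the pipeline might be loaded. It assumes the repository has been downloaded to a local directory (including the real `model.pkl`, not only its Git LFS pointer), that the file was written with Python's standard `pickle` module, and that the packages listed under `environment` in `config.json` are installed. The helper names `read_repo_config`, `load_pipeline`, and `predict_examples` are hypothetical and are not part of any library.

```python
import json
import pickle
from pathlib import Path


def read_repo_config(repo_dir):
    """Return (model_path, example_texts) as described by config.json.

    The key layout ("sklearn" -> "model" -> "file", and
    "sklearn" -> "example_input" -> "data") follows the config.json
    shipped with this repository.
    """
    repo_dir = Path(repo_dir)
    with open(repo_dir / "config.json", encoding="utf-8") as f:
        config = json.load(f)["sklearn"]
    model_path = repo_dir / config["model"]["file"]
    example_texts = config["example_input"]["data"]
    return model_path, example_texts


def load_pipeline(model_path):
    """Unpickle the fitted pipeline.

    Assumption: the file is a plain pickle. Unpickling can execute
    arbitrary code, so only load files from a source you trust.
    """
    with open(model_path, "rb") as f:
        return pickle.load(f)


def predict_examples(repo_dir):
    """Run the pipeline on the example inputs stored in config.json."""
    model_path, example_texts = read_repo_config(repo_dir)
    pipeline = load_pipeline(model_path)
    # The pipeline takes raw strings; tokenization happens inside it.
    return pipeline.predict(example_texts)
```

With a local copy in, say, `./repo`, calling `predict_examples("./repo")` would run the three example posts from `config.json` through the pipeline. If the file was saved with `joblib` rather than `pickle`, `joblib.load` would be the matching loader.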


# Model Card Authors


This model card was written by the following authors:


[More Information Needed]


# Model Card Contact


You can contact the model card authors through the following channels:


[More Information Needed]


# Citation


Below you can find information related to citation.


**BibTeX:**

```
[More Information Needed]
```


# Additional Content


## Confusion matrix


## Classification Report


<details>
<summary> Click to expand </summary>

| index                    |   precision |   recall |   f1-score |   support |
|--------------------------|-------------|----------|------------|-----------|
| alt.atheism              |    0.927273 | 0.85     |   0.886957 |       120 |
| comp.graphics            |    0.85906  | 0.876712 |   0.867797 |       146 |
| comp.os.ms-windows.misc  |    0.893617 | 0.851351 |   0.871972 |       148 |
| comp.sys.ibm.pc.hardware |    0.666667 | 0.837838 |   0.742515 |       148 |
| comp.sys.mac.hardware    |    0.901515 | 0.826389 |   0.862319 |       144 |
| comp.windows.x           |    0.923077 | 0.891892 |   0.907216 |       148 |
| misc.forsale             |    0.875862 | 0.869863 |   0.872852 |       146 |
| rec.autos                |    0.893082 | 0.95302  |   0.922078 |       149 |
| rec.motorcycles          |    0.937931 | 0.906667 |   0.922034 |       150 |
| rec.sport.baseball       |    0.954248 | 0.979866 |   0.966887 |       149 |
| rec.sport.hockey         |    0.979866 | 0.973333 |   0.976589 |       150 |
| sci.crypt                |    0.993103 | 0.966443 |   0.979592 |       149 |
| sci.electronics          |    0.869565 | 0.810811 |   0.839161 |       148 |
| sci.med                  |    0.973154 | 0.973154 |   0.973154 |       149 |
| sci.space                |    0.973333 | 0.986486 |   0.979866 |       148 |
| soc.religion.christian   |    0.927152 | 0.933333 |   0.930233 |       150 |
| talk.politics.guns       |    0.961538 | 0.919118 |   0.93985  |       136 |
| talk.politics.mideast    |    0.978571 | 0.971631 |   0.975089 |       141 |
| talk.politics.misc       |    0.925234 | 0.853448 |   0.887892 |       116 |
| talk.religion.misc       |    0.728972 | 0.829787 |   0.776119 |        94 |
| macro avg                |    0.907141 | 0.903057 |   0.904009 |      2829 |
| weighted avg             |    0.909947 | 0.90562  |   0.906742 |      2829 |

</details>
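The two summary rows of the report can be re-derived from the per-class rows. As a sketch using only the recall and support columns above: the macro average is the unweighted mean over the 20 classes, while the weighted average weights each class by its support. For single-label classification the support-weighted recall equals overall accuracy, which is consistent with the accuracy of 0.90562 reported under Evaluation Results. The names `report`, `macro_average`, and `weighted_average` are illustrative only.

```python
# (recall, support) per class, copied from the classification report.
report = {
    "alt.atheism": (0.85, 120),
    "comp.graphics": (0.876712, 146),
    "comp.os.ms-windows.misc": (0.851351, 148),
    "comp.sys.ibm.pc.hardware": (0.837838, 148),
    "comp.sys.mac.hardware": (0.826389, 144),
    "comp.windows.x": (0.891892, 148),
    "misc.forsale": (0.869863, 146),
    "rec.autos": (0.95302, 149),
    "rec.motorcycles": (0.906667, 150),
    "rec.sport.baseball": (0.979866, 149),
    "rec.sport.hockey": (0.973333, 150),
    "sci.crypt": (0.966443, 149),
    "sci.electronics": (0.810811, 148),
    "sci.med": (0.973154, 149),
    "sci.space": (0.986486, 148),
    "soc.religion.christian": (0.933333, 150),
    "talk.politics.guns": (0.919118, 136),
    "talk.politics.mideast": (0.971631, 141),
    "talk.politics.misc": (0.853448, 116),
    "talk.religion.misc": (0.829787, 94),
}


def macro_average(rows):
    """Unweighted mean of the metric over all classes."""
    return sum(value for value, _ in rows) / len(rows)


def weighted_average(rows):
    """Mean of the metric weighted by each class's support."""
    total = sum(support for _, support in rows)
    return sum(value * support for value, support in rows) / total


rows = list(report.values())
total_support = sum(support for _, support in rows)
macro_recall = macro_average(rows)
weighted_recall = weighted_average(rows)

print(f"total support:   {total_support}")
print(f"macro recall:    {macro_recall:.6f}")
print(f"weighted recall: {weighted_recall:.6f}")
```

The `f1 score` in the Evaluation Results table is identical to accuracy, which is what a micro-averaged F1 yields in single-label multiclass problems. The card does not state the averaging mode, so this reading is an inference rather than a documented fact.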


config.json

ADDED


@@ -0,0 +1,21 @@


{
    "sklearn": {
        "environment": [
            "scikit-learn=1.0.2",
            "transformers=4.22.2",
            "torch=1.12.1+cu113",
            "skorch=0.11.1.dev0"
        ],
        "example_input": {
            "data": [

"From: abou@dam.cee.clarkson.edu\nSubject: Re: computer books for sale (UPDATED LIST)\nArticle-I.D.: news.1993Apr6.013433.16103\nOrganization: Clarkson University\nLines: 76\nNntp-Posting-Host: dam.cee.clarkson.edu\n\n\n UPDATED LIST\nHi everybody\n I have the following books for sale. Some of these books are brand new.\nIf you find any book you like and need more information about it, please\nfeel free to send me an E-Mail. The buyers pays the shipping fees.\n Thanks.\nabou@sun.soe.clarkson.edu\n\n========================================================================\nTITLE : Windows Programming: An Introduction \nAUTHOR : William H. Murray, III & Chris H. Pappas\nPUBLISH.: Osborne McGraw-Hill\npp. : 650\nCOVER : Soft\nNOTE : Covers up to Windows 3.0\nASKING : $15\n======================================================================\nTITLE : Harvard Graphics: The Complete Reference\nAUTHOR : Cary Jensen & Loy Anderson\nPUBLISH.: Osborne McGraw-Hill\npp. : 1073\nCOVER : Soft\nNOTE : Covers Releases Through 2.3 & Draw Partner\nASKING : $15\n=======================================================================\nTITLE : High Performance Interactive Graphics: Modeling, Rendering, \n and Animating\nAUTHOR : Lee Adams\nPUBLISH.:Windcrest\npp. : 402\nCOVER : Soft\nNOTE : Full of examples programs in BASIC\nASKING :$15\n========================================================================\nTITLE : Science and Engineering Applications on the IBM PC\nAUTHOR : R. Severin\nPUBLISH.: Abacus\npp. : 262\nCOVER : Soft\nNOTE : A lot of Examples in BASIC\nASKING :$ 10\n=========================================================================\nTITLE : Graphics for the Dot-Matrix Printer: How to Get Your Printer \n to Perform Miracles\nAUTHOR : John W. Davenport\nPUBLISH.: Simon & Schuster\npp. 
: 461\nCOVER : Soft\nNOTE : Full of examples Programs in BASIC\nASKING : $10\n==========================================================================\nTITLE : Programming With TURBO C\nAUTHOR : S. Scott Zimmerman & Beverly B. Zimmerman\nPUBLISH.: Scott, Foresman and Co.\npp. : 637\nCOVER : Soft\nNOTE : Some of the pages are highlighted\nASKING : $10\n==========================================================================\nTITLE : Introduction to Computer Graphics\nAUTHOR : John Demel & Michael Miller\nPUBLISH.: Brooks/Cole Engineering Division\npp. : 427\nCOVER : Soft\nNOTE : Example Programs in BASIC and Fortran\nASKING : $10\n==========================================================================\nTITLE : Hard Disk Mangement: The Pocket Reference\nAUTHOR : Kris Jamsa\nPUBLISH.: Osborne McGraw-Hill\npp. : 128\nCOVER : Soft\nNOTE : Pocket Size\nASKING : $ 4\n==========================================================================\n",


"From: dzk@cs.brown.edu (Danny Keren)\nSubject: Suicide Bomber Attack in the Territories \nOrganization: Brown University Department of Computer Science\nLines: 22\n\n Attention Israel Line Recipients\n \n Friday, April 16, 1993\n \n \nTwo Arabs Killed and Eight IDF Soldiers Wounded in West Bank Car\nBomb Explosion\n \nIsrael Defense Forces Radio, GALEI ZAHAL, reports today that a car\nbomb explosion in the West Bank today killed two Palestinians and\nwounded eight IDF soldiers. The blast is believed to be the work of\na suicide bomber. Radio reports said a car packed with butane gas\nexploded between two parked buses, one belonging to the IDF and the\nother civilian. Both busses went up in flames. The blast killed an\nArab man who worked at a nearby snack bar in the Mehola settlement.\nAn Israel Radio report stated that the other man who was killed may\nhave been the one who set off the bomb. According to officials at\nthe Haemek Hospital in Afula, the eight IDF soldiers injured in the\nblast suffered light to moderate injuries.\n \n\n-Danny Keren\n",


"From: korenek@ferranti.com (gary korenek)\nSubject: Re: 80486DX-50 vs 80486DX2-50\nOrganization: Network Management Technology Inc.\nLines: 26\n\nIn article <1qd5bcINNmep@golem.wcc.govt.nz> hamilton@golem.wcc.govt.nz (Michael Hamilton) writes:\n>I have definitly seen a\n>mother board with 2 local bus slots which claimed to be able to\n>support any CPU, including the DX2/66 and DX50. Can someone throw\n>some more informed light on this issue?\n>[...]\n>Michael Hamilton\n\nSome motherboards support VL bus and 50-DX CPU. There is an option\n(BIOS I think) where additional wait(s) can be added with regard to\nCPU/VL bus transactions. This slows the CPU down to a rate that gives\nthe VL bus device(s) time to 'do their thing'. These particular wait(s)\nare applied when the CPU transacts with VL bus device(s). You want to\nenable these wait(s) only if you are using a 50-DX with VL bus devices.\n\nThis is from reading my motherboard manual, and these are my interpre-\ntations. Your mileage may vary.\n\nStrictly speaking, VL and 50mhz are not compatable. And, there is at\nleast one 'fudge' mechanism to physically allow it to work.\n\n-- \nGary Korenek (korenek@ferranti.com)\nNetwork Management Technology Incorporated\n(formerly Ferranti International Controls Corp.)\nSugar Land, Texas (713)274-5357\n"

            ]
        },
        "model": {
            "file": "model.pkl"
        },
        "task": "text-classification"
    }
}


confusion_matrix.png

ADDED


model.pkl

ADDED


@@ -0,0 +1,3 @@


version https://git-lfs.github.com/spec/v1
oid sha256:1eb771544f23962f6f8358ec5823872f383a2e55e7e462721e611559ceb1a705
size 1608239120