Commit

•

48b8703

1

Parent(s):

212a407

updated README.md

README.md

CHANGED

|

@@ -4,10 +4,15 @@ license: creativeml-openrail-m

|

|

| 4 |

tags:

|

| 5 |

- stable-diffusion

|

| 6 |

- text-to-image

|

|

|

|

|

|

|

| 7 |

---

|

| 8 |

# Ukeiyo-style Diffusion

|

| 9 |

-

|

| 10 |

-

|

|

|

|

|

|

|

|

|

|

| 11 |

|

| 12 |

### 🧨 Diffusers

|

| 13 |

|

|

@@ -27,3 +32,42 @@ prompt = "illustration of ukeiyoddim style landscape"

|

|

| 27 |

image = pipe(prompt).images[0]

|

| 28 |

image.save("./ukeiyo_landscape.png")

|

| 29 |

```


| 4 |

tags:

|

| 5 |

- stable-diffusion

|

| 6 |

- text-to-image

|

| 7 |

+

datasets:

|

| 8 |

+

- ProGamerGov/StableDiffusion-v1-5-Regularization-Images

|

| 9 |

---

|

| 10 |

# Ukeiyo-style Diffusion

|

| 11 |

+

|

| 12 |

+

This is a fine-tuned Stable Diffusion model trained on traditional Japanese Ukeiyo-style images.

|

| 13 |

+

Use the tokens **_ukeiyoddim style_** in your prompts for the effect.

|

| 14 |

+

The model repo also contains a ckpt file, so you can use the model with your own implementation of

|

| 15 |

+

Stable Diffusion.

|

| 16 |

|

| 17 |

### 🧨 Diffusers

|

| 18 |

|

|

|

|

| 32 |

image = pipe(prompt).images[0]

|

| 33 |

image.save("./ukeiyo_landscape.png")

|

| 34 |

```

|

| 35 |

+

|

| 36 |

+

## Training procedure and data

|

| 37 |

+

|

| 38 |

+

This model was trained on an RTX 3090. Training took 28 minutes for a total of 2000 steps. A total of 33 instance images (images of the style I was aiming for) and 1,000 regularization images were used. The regularization images come from the dataset by [ProGamerGov](https://huggingface.co/datasets/ProGamerGov/StableDiffusion-v1-5-Regularization-Images).
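As a rough sanity check on the figures above, the per-step cost and the number of times each instance image is seen can be worked out directly. This is a minimal sketch: the function names are hypothetical, and the second figure assumes every step draws `batch_size` instance images, which the README does not state.

```python
# Figures quoted in the training paragraph.
TRAINING_MINUTES = 28
TOTAL_STEPS = 2000
INSTANCE_IMAGES = 33


def seconds_per_step(minutes: float, steps: int) -> float:
    """Average wall-clock seconds spent on one training step."""
    return minutes * 60 / steps


def passes_per_instance_image(steps: int, images: int, batch_size: int = 1) -> float:
    """Approximate number of times each instance image is seen.

    Assumption: each step consumes `batch_size` instance images.
    """
    return steps * batch_size / images


step_time = seconds_per_step(TRAINING_MINUTES, TOTAL_STEPS)
passes = passes_per_instance_image(TOTAL_STEPS, INSTANCE_IMAGES)
print(f"{step_time:.2f} s/step, about {passes:.1f} passes per instance image")
```

Under these assumptions the run averages a little under one second per step, and each of the 33 instance images is revisited roughly sixty times.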

|

| 39 |

+

|

| 40 |

+

The training notebook is by [Shivam Shrirao](https://colab.research.google.com/github/ShivamShrirao/diffusers/blob/main/examples/dreambooth/DreamBooth_Stable_Diffusion.ipynb).

|

| 41 |

+

|

| 42 |

+

### Training hyperparameters

|

| 43 |

+

|

| 44 |

+

The following hyperparameters were used during training:

|

| 45 |

+

- number of steps: 2000

|

| 46 |

+

- learning_rate: 1e-6

|

| 47 |

+

- train_batch_size: 1

|

| 48 |

+

- scheduler_type: DDIM

|

| 49 |

+

- number of instance images: 33

|

| 50 |

+

- number of regularization images: 1000

|

| 51 |

+

- lr_scheduler: constant

|

| 52 |

+

- gradient_checkpointing
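The hyperparameters listed above can be kept together in a small config object so that a run is easy to record and reproduce. This is only a sketch: the class and field names are hypothetical (chosen to mirror the list), they are not tied to any particular training script's arguments, and `gradient_checkpointing` is assumed to mean "enabled" because the list gives it no value.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical container mirroring the hyperparameter list."""

    num_steps: int = 2000
    learning_rate: float = 1e-6
    train_batch_size: int = 1
    scheduler_type: str = "DDIM"
    num_instance_images: int = 33
    num_regularization_images: int = 1000
    lr_scheduler: str = "constant"
    # Listed without a value in the README; assumed to be switched on.
    gradient_checkpointing: bool = True


config = TrainingConfig()
settings = asdict(config)  # plain dict, convenient for logging or saving as JSON

for name, value in settings.items():
    print(f"{name}: {value}")
```

Freezing the dataclass keeps the recorded settings from being changed by accident after a run has started.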

|

| 53 |

+

|

| 54 |

+

### Results

|

| 55 |

+

|

| 56 |

+

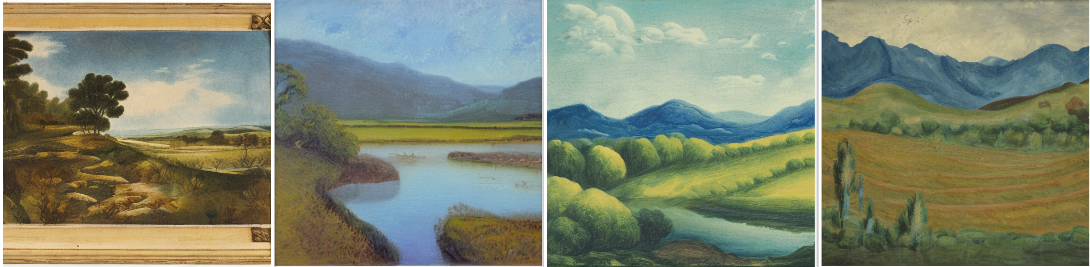

Below are the sample results for different training steps:

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

### Sample images from the model trained for 2000 steps:

|

| 60 |

+

|

| 61 |

+

prompt = "landscape"

|

| 62 |

+

|

| 63 |

+

prompt = "ukeiyoddim style landscape"

|

| 64 |

+

|

| 65 |

+

prompt = "illustration of ukeiyoddim style landscape"
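The three prompts above differ only in whether the style token and an "illustration of" prefix are present. A small helper can generate such variants for side-by-side comparison; `build_prompt` and its parameters are hypothetical names used for illustration, not part of the model repo.

```python
# Style token from the README; the helper below is an illustrative assumption.
STYLE_TOKEN = "ukeiyoddim style"


def build_prompt(subject: str, use_style: bool = True, prefix: str = "") -> str:
    """Join an optional prefix, the optional style token, and the subject."""
    parts = []
    if prefix:
        parts.append(prefix)
    if use_style:
        parts.append(STYLE_TOKEN)
    parts.append(subject)
    return " ".join(parts)


prompts = [
    build_prompt("landscape", use_style=False),
    build_prompt("landscape"),
    build_prompt("landscape", prefix="illustration of"),
]

for prompt in prompts:
    # Each prompt can be passed to the pipeline from the Diffusers snippet,
    # e.g. image = pipe(prompt).images[0]
    print(prompt)
```

Comparing the first prompt against the other two shows how much of the output style comes from the token rather than from the base model.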

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

### Acknowledgement

|

| 71 |

+

|

| 72 |

+

Many thanks to [nitrosocke](https://huggingface.co/nitrosocke) for the inspiration and for the [guide](https://github.com/nitrosocke/dreambooth-training-guide). Thanks also to all the amazing people making Stable Diffusion easily accessible to everyone.

|

| 73 |

+

|