update readme and plots

- README.md +51 -27
- train_loss.png +0 -0
- val_jaccard_index.png +0 -0
- val_loss.png +0 -0
- val_multiclassaccuracy_tree.png +0 -0
- val_multiclassf1score_tree.png +0 -0

README.md CHANGED

@@ -10,13 +10,20 @@ pipeline_tag: image-segmentation

widget:
- src: samples/610160855a90f10006fd303e_10_00418.tif
  example_title: Urban scene
license: cc
metrics:
- accuracy
- f1
- iou
---

# Model Card for Restor's SegFormer-based TCD models

This is a semantic segmentation model that can delineate tree cover in high-resolution (10 cm/px) aerial images.

This model card is mostly the same for all similar models uploaded to Hugging Face. The model name refers to the specific architecture variant (e.g. nvidia-mit-b0 to nvidia-mit-b5), but the broad details for training and evaluation are identical.

This repository is for `tcd-segformer-mit-b0`.

## Model Details

@@ -27,13 +34,15 @@ This semantic segmentation model was trained on global aerial imagery and is abl

- **Developed by:** [Restor](https://restor.eco) / [ETH Zurich](https://ethz.ch)
- **Funded by:** This project was made possible via a [Google.org impact grant](https://blog.google/outreach-initiatives/sustainability/restor-helps-anyone-be-part-ecological-restoration/)
- **Model type:** Semantic segmentation (binary class)
- **License:** Model training code is provided under an Apache-2.0 license. NVIDIA has released SegFormer under its own research license; users should check the terms of this license before deploying. This model was trained on CC BY-NC imagery.
- **Finetuned from model:** SegFormer family

SegFormer is a variant of the Pyramid Vision Transformer v2 model, with many identical structural features and a semantic segmentation decode head. Functionally, the architecture is quite similar to a Feature Pyramid Network (FPN), as the output predictions are based on combining features from different stages of the network at different spatial resolutions.
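The NumPy sketch below makes the FPN analogy concrete by mimicking the data flow of a SegFormer-style all-MLP decode head. Each of four encoder stages (at strides 4, 8, 16 and 32) is projected to a common channel width and upsampled to 1/4 of the input resolution. The results are then concatenated, fused and classified per pixel. This is a simplified illustration, not the `transformers` implementation, and it rests on several assumptions:

- Weights are random.
- There is no normalisation layer.
- Nearest-neighbour upsampling stands in for bilinear interpolation.
- The stage widths (32, 64, 160, 256) are mit-b0-like values.
- The output has two logits (background/tree).

```python
import numpy as np

rng = np.random.default_rng(0)

def pointwise_linear(weight: np.ndarray, x: np.ndarray) -> np.ndarray:
    """1x1 'linear' layer: (out, in) weights applied to (in, H, W) features."""
    return np.tensordot(weight, x, axes=(1, 0))

def upsample_nearest(x: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a (C, H, W) array by an integer factor."""
    return x.repeat(factor, axis=1).repeat(factor, axis=2)

def random_weight(out_dim: int, in_dim: int) -> np.ndarray:
    """Random stand-in for learned weights (illustration only)."""
    return rng.standard_normal((out_dim, in_dim)) / np.sqrt(in_dim)

def decode_head(features, embed_dim: int = 256, num_classes: int = 2) -> np.ndarray:
    """Fuse multi-scale (C_i, H_i, W_i) features, finest stage first.

    Each stage is projected to `embed_dim` channels and upsampled to the finest
    resolution (1/4 of the input); the results are concatenated, fused with a
    ReLU and classified per pixel. Weights are random: data flow only.
    """
    target = features[0].shape[1]
    projected = [
        upsample_nearest(pointwise_linear(random_weight(embed_dim, f.shape[0]), f),
                         target // f.shape[1])
        for f in features
    ]
    stacked = np.concatenate(projected, axis=0)  # (4 * embed_dim, H/4, W/4)
    fused = np.maximum(
        pointwise_linear(random_weight(embed_dim, stacked.shape[0]), stacked), 0.0)
    # Per-pixel classifier: (num_classes, H/4, W/4) logits.
    return pointwise_linear(random_weight(num_classes, embed_dim), fused)

# Hypothetical encoder outputs for a 256 x 256 input, using mit-b0-like widths.
size = 256
widths, strides = [32, 64, 160, 256], [4, 8, 16, 32]
feats = [rng.standard_normal((c, size // s, size // s)) for c, s in zip(widths, strides)]
logits = decode_head(feats)
print(logits.shape)  # (2, 64, 64)
```

The logits come out at a quarter of the input resolution. In practice they are upsampled (typically bilinearly) to the input size before taking the per-pixel argmax.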

### Model Sources

- **Repository:** https://github.com/restor-foundation/tcd
- **Paper:** We will release a preprint shortly.

## Uses

@@ -67,22 +76,28 @@ There is no substitute for trying the model on your own data and performing your

## How to Get Started with the Model

You can see a brief example of inference in [this Colab notebook](https://colab.research.google.com/drive/1N_rWko6jzGji3j_ayDR7ngT5lf4P8at_).

For end-to-end usage, we direct users to our prediction and training [pipeline](https://github.com/restor-foundation/tcd), which also supports tiled prediction over arbitrarily large images, reporting outputs, etc.
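Tiled prediction over an image of arbitrary size starts with choosing fixed-size windows that cover the whole raster. The sketch below is a hypothetical helper, `tile_windows`; it is not part of the `tcd` pipeline's API. It steps a square window across the image with an optional overlap, then shifts the last row and column back so every window stays inside the image. Images smaller than a tile get a single clipped window.

```python
from typing import Iterator, List, Tuple

def _starts(length: int, tile: int, stride: int) -> List[int]:
    """Window start offsets along one axis; the last window is flush with the edge."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)  # final window ends exactly at the image edge
    return starts

def tile_windows(height: int, width: int, tile: int = 1024,
                 overlap: int = 0) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (row, col, tile_h, tile_w) windows covering a height x width image."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    stride = tile - overlap
    for r in _starts(height, tile, stride):
        for c in _starts(width, tile, stride):
            yield r, c, min(tile, height), min(tile, width)

# A hypothetical 2500 x 3000 px orthomosaic, 1024 px tiles with 128 px overlap.
windows = list(tile_windows(2500, 3000, tile=1024, overlap=128))
print(len(windows))  # 12 (3 rows x 4 columns)
```

Overlapping windows let a pipeline discard or blend predictions near tile borders, where context is weakest. How the `tcd` pipeline actually merges overlaps is not shown here.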

## Training Details

### Training Data

The training dataset may be found [here](https://huggingface.co/datasets/restor/tcd), where you can find more details about the collection and annotation procedure. Our image labels are largely released under a CC BY 4.0 license, with smaller subsets of CC BY-NC and CC BY-SA imagery.

### Training Procedure

We used a 5-fold cross-validation process to adjust hyperparameters during training, before training on the "full" training set and evaluating on a holdout set of images. The model in the `main` branch of this repository should be considered the release version.
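The following sketch illustrates the protocol with a generic 5-fold split over image identifiers, using only the standard library. It is not necessarily the fold assignment used for OAM-TCD. `kfold_splits` and the image IDs are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

def kfold_splits(ids: Sequence[str], k: int = 5,
                 seed: int = 42) -> List[Tuple[List[str], List[str]]]:
    """Shuffle ids once, cut them into k near-equal folds and return
    (train_ids, val_ids) for each fold in turn."""
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        val = folds[i]
        # Every image not in fold i is used for training in this round.
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        splits.append((train, val))
    return splits

image_ids = [f"tile_{n:04d}" for n in range(23)]  # hypothetical IDs
for fold, (train, val) in enumerate(kfold_splits(image_ids)):
    print(fold, len(train), len(val))
```

Each image appears in exactly one validation fold. Hyperparameter settings can then be compared on validation metrics across the five rounds before retraining on the full training set.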

We used [PyTorch Lightning](https://lightning.ai/) as our training framework with hyperparameters listed below. The training procedure is straightforward and should be familiar to anyone with experience training deep neural networks.

A typical training command using our pipeline for this model:

```bash
tcd-train semantic segformer-mit-b0 data.output= ... data.root=/mnt/data/tcd/dataset/holdout data.tile_size=1024
```

#### Preprocessing

This repository contains a preprocessor configuration that can be used with the model, assuming you use the `transformers` library.
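Conceptually, such a preprocessor converts an RGB tile to a normalised float tensor. The sketch below shows that step in NumPy under two assumptions:

- It uses the ImageNet channel mean and standard deviation, the default for `SegformerImageProcessor` in `transformers`. These may differ from the values in this repository's configuration file.
- It skips resizing, in line with the card's note that input images are not resized, so each pixel keeps its ground scale.

`normalize_tile` is hypothetical. In practice you would load the shipped configuration, e.g. with `AutoImageProcessor.from_pretrained`.

```python
import numpy as np

# Assumed values: ImageNet statistics (the SegformerImageProcessor default).
# Check the preprocessor configuration shipped with the model before relying on them.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def normalize_tile(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) uint8 RGB tile to a (3, H, W) float32 array.

    No resizing is applied, so each output pixel still covers the same ground
    area as the input pixel (e.g. 10 cm/px).
    """
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an (H, W, 3) RGB array")
    x = rgb.astype(np.float32) / 255.0       # rescale to [0, 1]
    x = (x - IMAGENET_MEAN) / IMAGENET_STD    # per-channel standardisation
    return x.transpose(2, 0, 1)               # channels-first for the model

tile = np.full((1024, 1024, 3), 128, dtype=np.uint8)  # dummy grey tile
batch = normalize_tile(tile)[None]                     # add a batch dimension
print(batch.shape, batch.dtype)
```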

@@ -110,27 +125,32 @@ Note that we do not resize input images (so that the geospatial scale of the sou

You should be able to evaluate the model on a CPU (even up to mit-b5); however, you will need a lot of available RAM if you try to infer large tile sizes. In general, we find that 1024 px inputs are as large as you want to go, given the fixed size of the output segmentation masks (i.e. it is probably better to perform inference in batched mode at 1024×1024 px than to try to predict a single 2048×2048 px image).
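Here is a minimal sketch of that batched approach. It assumes a model callable that maps an `(N, 3, tile, tile)` batch to per-pixel tree probabilities of shape `(N, tile, tile)`. The steps are:

1. Zero-pad the image to a multiple of the tile size.
2. Cut it into non-overlapping tiles.
3. Push the tiles through the model a few at a time.
4. Stitch the predictions back together.

`predict_large_image` and `dummy_model` are hypothetical, and the `tcd` pipeline's own tiled prediction may treat edges and overlaps differently.

```python
from typing import Callable

import numpy as np

def predict_large_image(image: np.ndarray,
                        model: Callable[[np.ndarray], np.ndarray],
                        tile: int = 1024, batch_size: int = 4) -> np.ndarray:
    """Run `model` over a (3, H, W) image in batches of tile x tile crops.

    Returns an (H, W) probability map. Edge tiles are zero-padded.
    """
    _, h, w = image.shape
    ph, pw = -h % tile, -w % tile                     # padding to a tile multiple
    padded = np.pad(image, ((0, 0), (0, ph), (0, pw)))
    H, W = padded.shape[1:]
    origins = [(r, c) for r in range(0, H, tile) for c in range(0, W, tile)]
    out = np.zeros((H, W), dtype=np.float32)
    for i in range(0, len(origins), batch_size):
        chunk = origins[i:i + batch_size]
        batch = np.stack([padded[:, r:r + tile, c:c + tile] for r, c in chunk])
        probs = model(batch)                          # (n, tile, tile)
        for (r, c), p in zip(chunk, probs):
            out[r:r + tile, c:c + tile] = p
    return out[:h, :w]                                # drop the padding

def dummy_model(batch: np.ndarray) -> np.ndarray:
    """Stand-in for a real network: 'tree probability' = mean channel value."""
    return batch.mean(axis=1)

img = np.random.default_rng(0).random((3, 2048, 2048), dtype=np.float32)
prob = predict_large_image(img, dummy_model, tile=1024, batch_size=4)
print(prob.shape)  # (2048, 2048)
```

With this structure, peak memory for the model's forward pass scales with `batch_size` times the area of one 1024 px tile, rather than with the area of the whole image.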

All models were trained on a single GPU with 24 GB VRAM (NVIDIA RTX 3090) attached to a 32-core machine with 64 GB RAM. All but the largest models can be trained in under a day on a machine of this specification. The smallest models take under half a day, while the largest models take just over a day to train.

Feedback we have received from users in the field is that landowners are often interested in seeing the results of aerial surveys, but data bandwidth is often a limiting factor in remote areas. One of our goals was to support this kind of in-field usage, so that users who fly a survey can process results offline and in a reasonable amount of time (i.e. on the order of an hour).

## Evaluation

We report evaluation results on the OAM-TCD holdout split.

### Testing Data

The dataset, including the `test` split, may be found [here](https://huggingface.co/datasets/restor/tcd).

This model (`main` branch) was trained on all `train` images and tested on the `test` (holdout) images.

|

| 141 |

|

| 142 |

+

|

| 143 |

+

|

| 144 |

+

### Metrics

|

| 145 |

|

| 146 |

+

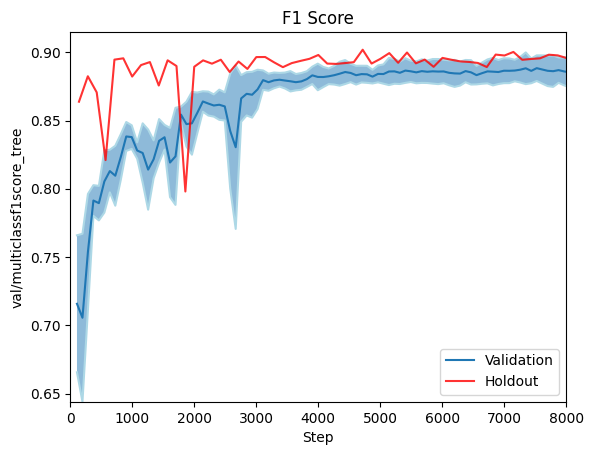

We report F1, Accuracy and IoU on the holdout dataset, as well as results on a 5-fold cross validation split. Cross validtion is visualised as min/max error bars on the plots below.

### Results

## Environmental Impact

@@ -146,21 +166,25 @@ This estimate does not take into account time require for experimentation, faile

Efficient inference on CPU is possible for field work, at the expense of inference latency. A typical single-battery drone flight can be processed in minutes.
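A quick tile count shows whether "minutes" is plausible. At the model's nominal 10 cm/px, a square survey with side length L metres becomes an orthomosaic of 10·L pixels per side, which is then split into 1024 px tiles. The sketch below does this arithmetic. The 1 km² survey is a hypothetical input, not a figure from our benchmarks. `tiles_for_survey` is also hypothetical.

```python
import math

def tiles_for_survey(area_m2: float, gsd_m: float = 0.10, tile_px: int = 1024) -> int:
    """Number of tile_px x tile_px tiles needed to cover a square survey area."""
    side_px = math.sqrt(area_m2) / gsd_m      # orthomosaic side length in pixels
    per_side = math.ceil(side_px / tile_px)   # partial tiles count as whole tiles
    return per_side * per_side

# Hypothetical example: a 1 km x 1 km survey (1e6 m^2) at 10 cm/px.
n = tiles_for_survey(1_000_000)
print(n)  # 100 (10 x 10 tiles)
```

Multiplying the tile count by a per-tile latency measured on your own CPU gives an estimate of the total processing time.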

## Citation

We will provide a preprint version of our paper shortly. In the meantime, please cite as:

**BibTeX:**

```bibtex
@unpublished{restortcd,
  author = "Veitch-Michaelis, Josh and Cottam, Andrew and Schweizer, Daniella and Broadbent, Eben N. and Dao, David and Zhang, Ce and Almeyda Zambrano, Angelica and Max, Simeon",
  title  = "OAM-TCD: A globally diverse dataset of high-resolution tree cover maps",
  note   = "In prep.",
  month  = "06",
  year   = "2024"
}
```

## Model Card Authors

Josh Veitch-Michaelis, 2024; on behalf of the dataset authors.

## Model Card Contact

Please contact josh [at] restor.eco for questions or further information.

train_loss.png ADDED

val_jaccard_index.png ADDED

val_loss.png ADDED

val_multiclassaccuracy_tree.png ADDED

val_multiclassf1score_tree.png ADDED