Commit f4f2928

Parent(s): 5518cd2

Upload folder using huggingface_hub

- events.out.tfevents.1731678675.hsuvaspc.509458.1 +3 -0

- events.out.tfevents.1731678732.hsuvaspc.511785.0 +3 -0

- model.safetensors +1 -1

- roberta-large_classification_report_with_val.csv +8 -8

- roberta-large_confusion_matrix_with_val.png +0 -0

- roberta-large_predictions_with_val.csv +0 -0

- roberta-large_wikiquote_label_removed_metrics.json +1 -1

- tokenizer.json +2 -16

- training_args.bin +1 -1

events.out.tfevents.1731678675.hsuvaspc.509458.1

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:f41d8695dd69d442e4ef298166911c35068ba9150c518b7b2dca550afc769236
+size 1524
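The three added lines are a Git LFS pointer: the repository stores this small text stub, while the actual file lives in LFS storage and is identified by its SHA-256 digest and byte size. A minimal sketch of reading such a pointer follows; the `LfsPointer` class and `parse_lfs_pointer` function are hypothetical names introduced for illustration, not part of any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LfsPointer:
    """Fields of a Git LFS pointer file (hypothetical helper type)."""
    version: str
    oid_algo: str
    oid: str
    size: int


def parse_lfs_pointer(text: str) -> LfsPointer:
    """Parse the 'key value' lines of a pointer file into an LfsPointer."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid line has the form "<algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return LfsPointer(fields["version"], algo, digest, int(fields["size"]))


pointer = parse_lfs_pointer(
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:f41d8695dd69d442e4ef298166911c35068ba9150c518b7b2dca550afc769236\n"
    "size 1524\n"
)
print(pointer.oid_algo, pointer.size)
```

The same parser applies to the `model.safetensors` and `training_args.bin` pointers in this commit, where only the `oid` line changes and the size stays the same.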

events.out.tfevents.1731678732.hsuvaspc.511785.0

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a04f39403688d82dcf32a78118b92c8e373e26e7d7f1edd432d0fb4b0994abc3
+size 65390
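The two event-file names look like TensorBoard's usual pattern, `events.out.tfevents.<unix time>.<hostname>.<pid>.<counter>`. That reading is an assumption, since the commit itself does not document the naming. Under that assumption, a small sketch can recover when and where each log was written; `parse_tfevents_name` is a hypothetical helper.

```python
from datetime import datetime, timezone

PREFIX = "events.out.tfevents."


def parse_tfevents_name(name: str) -> dict:
    """Split an event-file name into its assumed components."""
    if not name.startswith(PREFIX):
        raise ValueError(f"not a tfevents file name: {name!r}")
    timestamp, rest = name[len(PREFIX):].split(".", 1)
    # Hostnames may contain dots, so take the last two fields from the right.
    hostname, pid, counter = rest.rsplit(".", 2)
    return {
        "created": datetime.fromtimestamp(int(timestamp), tz=timezone.utc),
        "hostname": hostname,
        "pid": int(pid),
        "counter": int(counter),
    }


for file_name in (
    "events.out.tfevents.1731678675.hsuvaspc.509458.1",
    "events.out.tfevents.1731678732.hsuvaspc.511785.0",
):
    info = parse_tfevents_name(file_name)
    print(info["created"].isoformat(), info["hostname"], info["pid"])
```

Read this way, both logs come from the same host and were created about a minute apart by different processes.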

model.safetensors

CHANGED

@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
+oid sha256:c722d7dea3c71ae4d7645349bd680cf5299032e25786cf49d443f2e540495471
 size 1421507716

roberta-large_classification_report_with_val.csv

CHANGED

@@ -1,9 +1,9 @@
 ,precision,recall,f1-score,support
-merge,0.
-keep,
-no_consensus,0.
-redirect,0.
-delete,0.
-accuracy,0.
-macro avg,0.
-weighted avg,0.
+merge,0.5,0.5,0.5,2.0
+keep,1.0,0.8571428571428571,0.923076923076923,14.0
+no_consensus,0.5,0.25,0.3333333333333333,4.0
+redirect,0.6666666666666666,1.0,0.8,6.0
+delete,0.9829059829059829,0.9913793103448276,0.9871244635193134,116.0
+accuracy,0.9507042253521126,0.9507042253521126,0.9507042253521126,0.9507042253521126
+macro avg,0.7299145299145299,0.719704433497537,0.7087069439859139,142.0
+weighted avg,0.950824605754183,0.9507042253521126,0.9476256903144409,142.0
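The summary rows of the new report can be rederived from the five per-class rows. The macro average is the unweighted mean over classes, the weighted average weights each class by its support, and accuracy equals the support-weighted recall. The sketch below recomputes them from the values in the diff; the variable names are illustrative only.

```python
import csv
import io

# Per-class rows copied from the new version of the report.
REPORT = """\
,precision,recall,f1-score,support
merge,0.5,0.5,0.5,2.0
keep,1.0,0.8571428571428571,0.923076923076923,14.0
no_consensus,0.5,0.25,0.3333333333333333,4.0
redirect,0.6666666666666666,1.0,0.8,6.0
delete,0.9829059829059829,0.9913793103448276,0.9871244635193134,116.0
"""

rows = list(csv.DictReader(io.StringIO(REPORT)))
support = [float(r["support"]) for r in rows]
total = sum(support)


def macro(metric: str) -> float:
    """Unweighted mean of a metric over classes."""
    return sum(float(r[metric]) for r in rows) / len(rows)


def weighted(metric: str) -> float:
    """Support-weighted mean of a metric over classes."""
    return sum(float(r[metric]) * s for r, s in zip(rows, support)) / total


# recall * support is the number of correctly classified examples per class.
correct = sum(round(float(r["recall"]) * s) for r, s in zip(rows, support))
accuracy = correct / total

print("macro avg   ", macro("precision"), macro("recall"), macro("f1-score"))
print("weighted avg", weighted("precision"), weighted("recall"), weighted("f1-score"))
print("accuracy    ", accuracy)
```

The gap between the macro and weighted rows reflects the class imbalance: `delete` accounts for 116 of the 142 examples, so the weak `merge` and `no_consensus` scores pull the macro average down far more than the weighted one.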

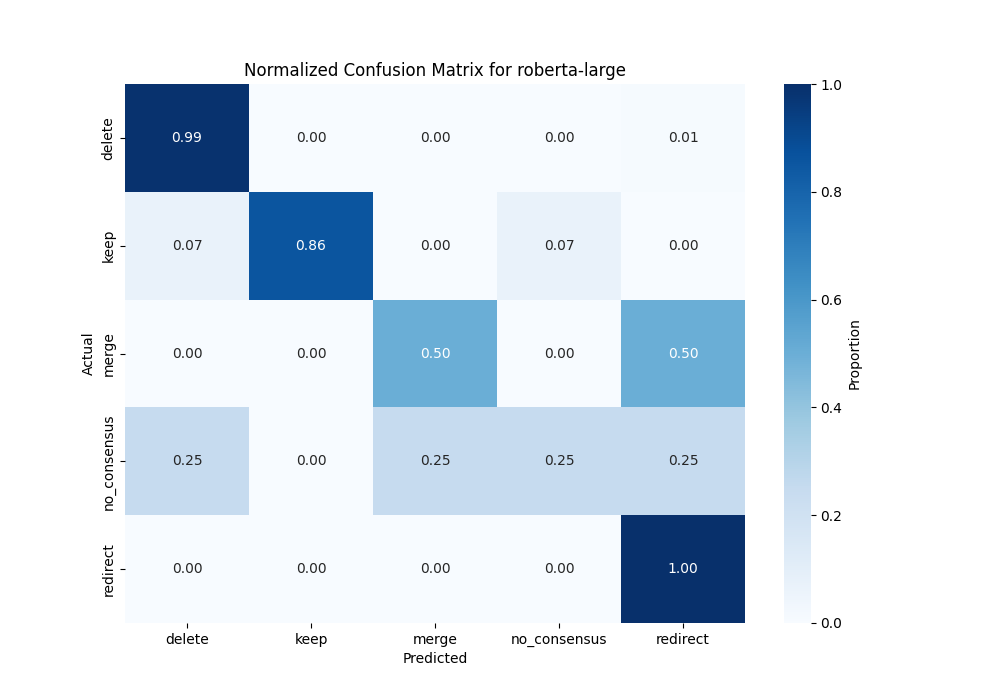

roberta-large_confusion_matrix_with_val.png

CHANGED


roberta-large_predictions_with_val.csv

CHANGED

The diff for this file is too large to render.

roberta-large_wikiquote_label_removed_metrics.json

CHANGED

@@ -1 +1 @@
-{"accuracy": 0.
+{"accuracy": 0.9507042253521126, "precision": 0.7299145299145299, "recall": 0.719704433497537, "f1": 0.7087069439859139}

tokenizer.json

CHANGED

@@ -1,21 +1,7 @@
 {
   "version": "1.0",
-  "truncation":
-
-    "max_length": 512,
-    "strategy": "LongestFirst",
-    "stride": 0
-  },
-  "padding": {
-    "strategy": {
-      "Fixed": 512
-    },
-    "direction": "Right",
-    "pad_to_multiple_of": null,
-    "pad_id": 1,
-    "pad_type_id": 0,
-    "pad_token": "<pad>"
-  },
+  "truncation": null,
+  "padding": null,
   "added_tokens": [
     {
       "id": 0,
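The removed settings fixed every encoding at 512 tokens: sequences were truncated to `max_length` 512 and right-padded to a fixed length of 512 with `pad_id` 1. With both fields set to `null`, the serialized tokenizer carries no such defaults, so the caller presumably has to request truncation and padding at encode time. The sketch below imitates the removed behaviour in plain Python for a single sequence of token ids. It is a simplification and does not call the tokenizers library: a real tokenizer also accounts for special tokens and sequence pairs, and `truncate_and_pad` is a hypothetical name.

```python
MAX_LENGTH = 512  # "max_length" and "Fixed" in the removed config
PAD_ID = 1        # "pad_id" of the "<pad>" token in the removed config


def truncate_and_pad(ids, max_length=MAX_LENGTH, pad_id=PAD_ID):
    """Cut ids to max_length, then right-pad to exactly max_length.

    Returns (padded_ids, attention_mask), where the mask is 1 for real
    tokens and 0 for padding.
    """
    kept = list(ids[:max_length])
    n_pad = max_length - len(kept)
    attention_mask = [1] * len(kept) + [0] * n_pad
    return kept + [pad_id] * n_pad, attention_mask


short_ids, short_mask = truncate_and_pad([0, 100, 2])
long_ids, long_mask = truncate_and_pad(list(range(600)))
print(len(short_ids), sum(short_mask))
print(len(long_ids), sum(long_mask))
```

Padding every input to 512 tokens is simple but wasteful for short texts, which may be one reason to leave these fields unset and pad per batch instead.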

training_args.bin

CHANGED

@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
+oid sha256:d204efb06f3fbca17907399ddaa5e7d89db9f65fa2c4fa091b0548c013d9e919
 size 5176