add model files

- README.md +797 -0

- data/en_token_list/bpe_unigram500/bpe.model +3 -0

- exp/asr_stats_raw_en_bpe500_sp/train/feats_stats.npz +3 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/RESULTS.md +32 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/config.yaml +694 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/acc.png +0 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/backward_time.png +0 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/cer.png +0 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/cer_ctc.png +0 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/forward_time.png +0 -0

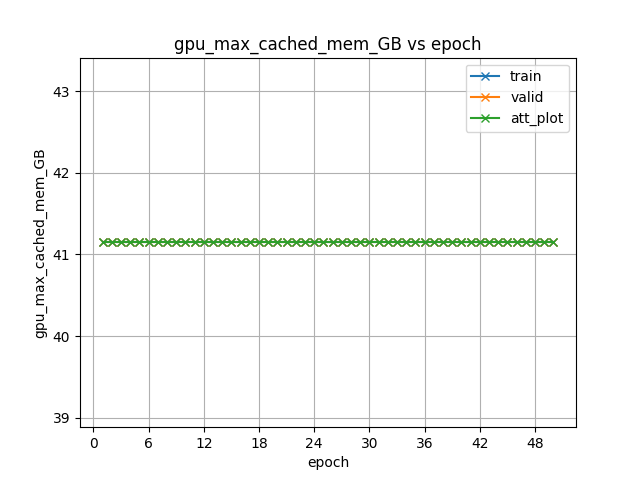

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/gpu_max_cached_mem_GB.png +0 -0

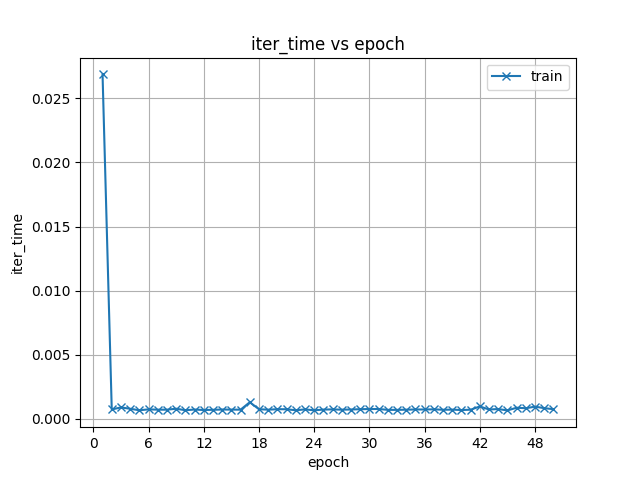

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/iter_time.png +0 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/loss.png +0 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/loss_att.png +0 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/loss_ctc.png +0 -0

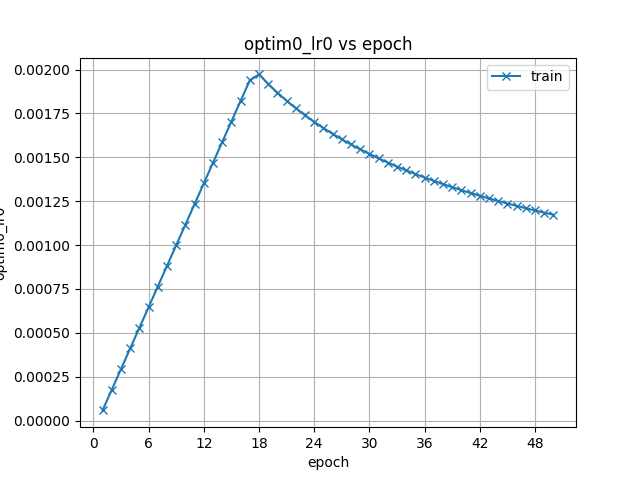

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/optim0_lr0.png +0 -0

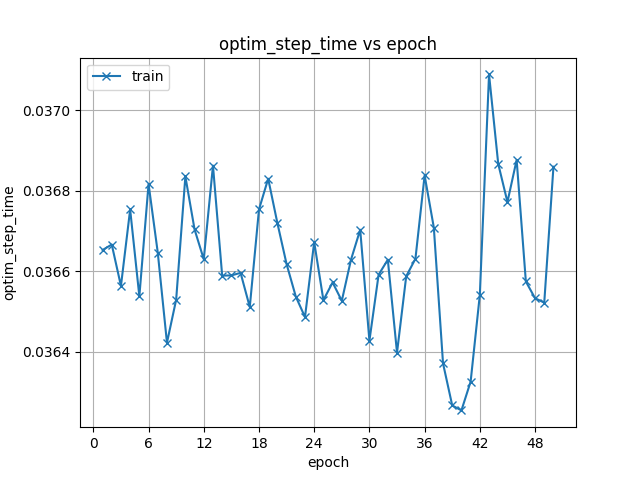

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/optim_step_time.png +0 -0

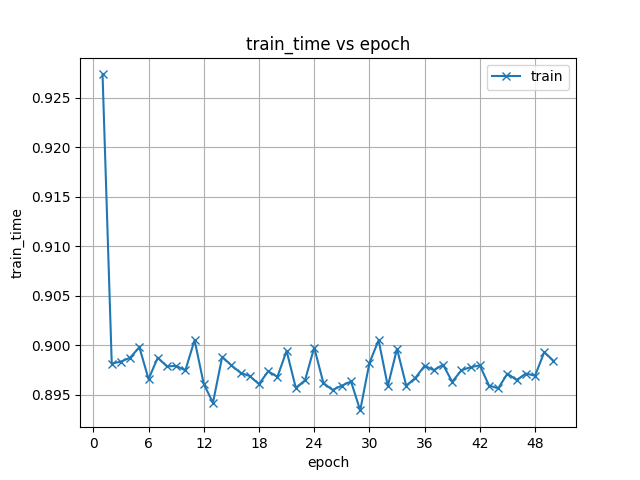

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/train_time.png +0 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/images/wer.png +0 -0

- exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/valid.cer_ctc.ave_10best.pth +3 -0

- meta.yaml +8 -0
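
Judging by its file name, the checkpoint `valid.cer_ctc.ave_10best.pth` is a parameter-wise average of the ten checkpoints with the lowest validation CTC character error rate. The training config in the README below points the same way (`best_model_criterion: valid / cer_ctc / min`, `keep_nbest_models: 10`). The sketch below shows the averaging idea on plain Python dicts that stand in for PyTorch state dicts. `select_nbest` and `average_checkpoints` are hypothetical helpers, not ESPnet's own averaging code, and they ignore details such as integer buffers.

```python
from typing import Dict, List


def select_nbest(scores: Dict[int, float], n: int) -> List[int]:
    """Return the epochs with the n lowest validation scores (lower CER is better)."""
    return sorted(scores, key=scores.get)[:n]


def average_checkpoints(state_dicts: List[Dict[str, List[float]]]) -> Dict[str, List[float]]:
    """Element-wise mean of parameters that share the same names and shapes."""
    if not state_dicts:
        raise ValueError("need at least one checkpoint")
    n = len(state_dicts)
    averaged = {}
    for key in state_dicts[0]:
        # Line up the same parameter from every checkpoint and average per element.
        columns = zip(*(sd[key] for sd in state_dicts))
        averaged[key] = [sum(values) / n for values in columns]
    return averaged


if __name__ == "__main__":
    # Hypothetical per-epoch validation CER values, for illustration only.
    valid_cer = {1: 0.30, 2: 0.12, 3: 0.10, 4: 0.11}
    best = select_nbest(valid_cer, n=2)
    print(best)  # [3, 4]
    checkpoints = {3: {"w": [1.0, 2.0]}, 4: {"w": [3.0, 4.0]}}
    print(average_checkpoints([checkpoints[e] for e in best]))  # {'w': [2.0, 3.0]}
```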

README.md

ADDED

@@ -0,0 +1,797 @@

---
tags:
- espnet
- audio
- automatic-speech-recognition
language: en
datasets:
- tedlium2
license: cc-by-4.0
---

## ESPnet2 ASR model

### `pyf98/tedlium2_ctc_conformer_e15_linear1024`

This model was trained by Yifan Peng using the tedlium2 recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

Follow the [ESPnet installation instructions](https://espnet.github.io/espnet/installation.html)
if you haven't done that already.

```bash
cd espnet
git checkout e62de171f1d11015cb856f83780c61bd5ca7fa8f
pip install -e .
cd egs2/tedlium2/asr1
./run.sh --skip_data_prep false --skip_train true --download_model pyf98/tedlium2_ctc_conformer_e15_linear1024
```
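
This model is trained with `ctc_weight: 1.0`, and its result directories are named `decode_asr_ctc_...`, which suggests CTC-only decoding. The sketch below roughly illustrates how frame-level CTC output becomes text, using best-path (greedy) decoding over a toy slice of the token list:

1. Pick the most likely token for each frame.
2. Merge repeated tokens.
3. Drop `<blank>`, which is index 0 in `token_list`.
4. Join the SentencePiece pieces and turn the `▁` word-boundary marker into spaces.

This card does not show the decoding configuration behind the reported results, and it may use beam search rather than greedy decoding. The per-frame scores below are made up, and `peaked` is a hypothetical helper.

```python
from typing import List, Sequence

# The first entries of this model's token_list; index 0 is the CTC blank.
TOKENS = ["<blank>", "<unk>", "s", "▁the", "t", "▁a", "▁and", "▁to"]
BLANK_ID = 0


def ctc_greedy_ids(frame_scores: Sequence[Sequence[float]]) -> List[int]:
    """Best-path CTC: argmax per frame, collapse repeats, remove blanks."""
    best = [max(range(len(row)), key=row.__getitem__) for row in frame_scores]
    ids: List[int] = []
    prev = None
    for idx in best:
        if idx != prev and idx != BLANK_ID:
            ids.append(idx)
        prev = idx
    return ids


def detokenize(pieces: List[str]) -> str:
    """Join SentencePiece pieces; '▁' marks the start of a word."""
    return "".join(pieces).replace("▁", " ").strip()


def peaked(best_id: int, size: int = len(TOKENS)) -> List[float]:
    """Toy frame distribution with most of its mass on best_id."""
    return [0.9 if i == best_id else 0.1 / (size - 1) for i in range(size)]


if __name__ == "__main__":
    # Frames: ▁a, ▁a (repeat, merged), t, <blank>, ▁the
    scores = [peaked(5), peaked(5), peaked(4), peaked(0), peaked(3)]
    ids = ctc_greedy_ids(scores)
    print(ids)  # [5, 4, 3]
    print(detokenize([TOKENS[i] for i in ids]))  # at the
```

A blank between two identical tokens keeps both of them, which is how CTC represents genuinely repeated labels.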

<!-- Generated by scripts/utils/show_asr_result.sh -->
# RESULTS
## Environments
- date: `Fri Dec 30 08:37:09 CST 2022`
- python version: `3.9.15 (main, Nov 24 2022, 14:31:59) [GCC 11.2.0]`
- espnet version: `espnet 202211`
- pytorch version: `pytorch 1.12.1`
- Git hash: `e62de171f1d11015cb856f83780c61bd5ca7fa8f`
- Commit date: `Thu Dec 29 14:18:44 2022 -0500`

## asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp
### WER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|
|---|---|---|---|---|---|---|---|---|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/dev|466|14671|92.2|5.6|2.2|1.2|9.1|75.3|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/test|1155|27500|92.1|5.4|2.5|1.1|9.0|72.8|
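
The `Sub`, `Del` and `Ins` columns come from a minimum-edit-distance alignment between reference and hypothesis. `Err` is their sum as a percentage of the reference length (`Wrd`), and `Corr` is the share of reference tokens matched exactly. The sketch below is a plain dynamic-programming Levenshtein alignment that returns those four counts. It illustrates the metric only; it is not the scoring tool ESPnet uses, and its tie-breaking between equally cheap alignments may differ. The example sentences are made up.

```python
from typing import Dict, Sequence


def align_counts(ref: Sequence[str], hyp: Sequence[str]) -> Dict[str, int]:
    """Count correct, substituted, deleted and inserted tokens via Levenshtein alignment."""
    n, m = len(ref), len(hyp)
    # cost[i][j] = edit distance between ref[:i] and hyp[:j]
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = i
    for j in range(1, m + 1):
        cost[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            diag = cost[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            cost[i][j] = min(diag, cost[i - 1][j] + 1, cost[i][j - 1] + 1)
    # Backtrace one optimal path and tally the operation types.
    counts = {"corr": 0, "sub": 0, "del": 0, "ins": 0}
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and cost[i][j] == cost[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1]):
            counts["corr" if ref[i - 1] == hyp[j - 1] else "sub"] += 1
            i, j = i - 1, j - 1
        elif i > 0 and cost[i][j] == cost[i - 1][j] + 1:
            counts["del"] += 1
            i -= 1
        else:
            counts["ins"] += 1
            j -= 1
    return counts


def error_rate(ref: Sequence[str], hyp: Sequence[str]) -> float:
    """(S + D + I) / N, in percent."""
    c = align_counts(ref, hyp)
    return 100.0 * (c["sub"] + c["del"] + c["ins"]) / len(ref)


if __name__ == "__main__":
    ref = "we want to build a new system".split()
    hyp = "we want build the new system today".split()
    print(align_counts(ref, hyp))  # {'corr': 5, 'sub': 1, 'del': 1, 'ins': 1}
    print(round(error_rate(ref, hyp), 1))  # 42.9
```

Applied to the dev row above, 9.1% of 14,671 reference words is roughly 1,335 word errors. Running the same alignment over characters or BPE tokens instead of words gives the CER and TER variants.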

### CER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|
|---|---|---|---|---|---|---|---|---|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/dev|466|78259|97.0|0.9|2.1|1.2|4.2|75.3|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/test|1155|145066|96.9|0.9|2.2|1.2|4.3|72.8|

### TER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|
|---|---|---|---|---|---|---|---|---|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/dev|466|28296|94.5|3.1|2.4|1.2|6.7|75.3|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/test|1155|52113|94.6|2.9|2.5|1.2|6.5|72.8|
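
Within each row, the percentages should satisfy two identities, up to rounding of the individual columns:

- `Corr + Sub + Del ≈ 100`, because every reference token is either correct, substituted or deleted.
- `Err ≈ Sub + Del + Ins`.

The sketch below re-checks the six rows reported above, with a tolerance of 0.15 points to absorb rounding. For example, the dev WER row gives `5.6 + 2.2 + 1.2 = 9.0` against a reported `Err` of 9.1. `check_row` is a hypothetical helper.

```python
from typing import Dict, Tuple

# (Corr, Sub, Del, Ins, Err) copied from the WER/CER/TER tables above.
ROWS: Dict[Tuple[str, str], Tuple[float, float, float, float, float]] = {
    ("WER", "dev"): (92.2, 5.6, 2.2, 1.2, 9.1),
    ("WER", "test"): (92.1, 5.4, 2.5, 1.1, 9.0),
    ("CER", "dev"): (97.0, 0.9, 2.1, 1.2, 4.2),
    ("CER", "test"): (96.9, 0.9, 2.2, 1.2, 4.3),
    ("TER", "dev"): (94.5, 3.1, 2.4, 1.2, 6.7),
    ("TER", "test"): (94.6, 2.9, 2.5, 1.2, 6.5),
}

# Columns are rounded to one decimal, so small mismatches are expected.
TOLERANCE = 0.15


def check_row(corr: float, sub: float, dele: float, ins: float, err: float) -> bool:
    """True if the row is internally consistent up to rounding."""
    covers_reference = abs(corr + sub + dele - 100.0) <= TOLERANCE
    err_is_sum = abs(sub + dele + ins - err) <= TOLERANCE
    return covers_reference and err_is_sum


if __name__ == "__main__":
    for (metric, split), row in ROWS.items():
        print(metric, split, "ok" if check_row(*row) else "MISMATCH")
```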

## ASR config

<details><summary>expand</summary>

```
config: conf/tuning/train_asr_ctc_conformer_e15_linear1024.yaml
print_config: false
log_level: INFO
dry_run: false
iterator_type: sequence
output_dir: exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp
ngpu: 1
seed: 2022
num_workers: 4
num_att_plot: 3
dist_backend: nccl
dist_init_method: env://
dist_world_size: 2
dist_rank: 0
local_rank: 0
dist_master_addr: localhost
dist_master_port: 53439
dist_launcher: null
multiprocessing_distributed: true
unused_parameters: false
sharded_ddp: false
cudnn_enabled: true
cudnn_benchmark: false
cudnn_deterministic: true
collect_stats: false
write_collected_feats: false
max_epoch: 50
patience: null
val_scheduler_criterion:
- valid
- loss
early_stopping_criterion:
- valid
- loss
- min
best_model_criterion:
-   - valid
    - cer_ctc
    - min
keep_nbest_models: 10
nbest_averaging_interval: 0
grad_clip: 5.0
grad_clip_type: 2.0
grad_noise: false
accum_grad: 1
no_forward_run: false
resume: true
train_dtype: float32
use_amp: true
log_interval: null
use_matplotlib: true
use_tensorboard: true
create_graph_in_tensorboard: false
use_wandb: false
wandb_project: null
wandb_id: null
wandb_entity: null
wandb_name: null
wandb_model_log_interval: -1
detect_anomaly: false
pretrain_path: null
init_param: []
ignore_init_mismatch: false
freeze_param: []
num_iters_per_epoch: null
batch_size: 20
valid_batch_size: null
batch_bins: 50000000
valid_batch_bins: null
train_shape_file:
- exp/asr_stats_raw_en_bpe500_sp/train/speech_shape
- exp/asr_stats_raw_en_bpe500_sp/train/text_shape.bpe
valid_shape_file:
- exp/asr_stats_raw_en_bpe500_sp/valid/speech_shape
- exp/asr_stats_raw_en_bpe500_sp/valid/text_shape.bpe
batch_type: numel
valid_batch_type: null
fold_length:
- 80000
- 150
sort_in_batch: descending
sort_batch: descending
multiple_iterator: false
chunk_length: 500
chunk_shift_ratio: 0.5
num_cache_chunks: 1024
train_data_path_and_name_and_type:
-   - dump/raw/train_sp/wav.scp
    - speech
    - kaldi_ark
-   - dump/raw/train_sp/text
    - text
    - text
valid_data_path_and_name_and_type:
-   - dump/raw/dev/wav.scp
    - speech
    - kaldi_ark
-   - dump/raw/dev/text
    - text
    - text
allow_variable_data_keys: false
max_cache_size: 0.0
max_cache_fd: 32
valid_max_cache_size: null
optim: adam
optim_conf:
    lr: 0.002
    weight_decay: 1.0e-06
scheduler: warmuplr
scheduler_conf:
    warmup_steps: 15000
token_list:
- <blank>
- <unk>
- s
- ▁the
- t
- ▁a
- ▁and
- ▁to
- d
- e
- ▁of
- ''''
- n
- ing
- ▁in
- ▁i
- ▁that
- i
- a
- l
- p
- m
- y
- o
- ▁it
- ▁we
- c
- u
- ▁you
- ed
- ▁
- r
- ▁is
- re
- ▁this
- ar
- g
- ▁so
- al
- b
- ▁s
- or
- ▁f
- ▁c
- in
- k
- f
- ▁for
- ic
- er
- le
- ▁be
- ▁do
- ▁re
- ve
- ▁e
- ▁w
- ▁was
- es
- ▁they
- ly
- h
- ▁on
- v
- ▁are
- ri
- ▁have
- an
- ▁what
- ▁with
- ▁t
- w
- ur
- it
- ent
- ▁can
- ▁he
- ▁but
- ra
- ce
- ▁me
- ▁b
- ▁ma
- ▁p
- ll
- ▁st
- ▁one
- 'on'
- ▁about
- th
- ▁de
- en
- ▁all
- ▁not
- il
- ▁g
- ch
- at
- ▁there
- ▁mo
- ter
- ation
- tion
- ▁at
- ▁my
- ro
- ▁as
- te
- ▁le
- ▁con
- ▁like
- ▁people
- ▁or
- ▁an
- el
- ▁if
- ▁from
- ver
- ▁su
- ▁co
- ate
- ▁these
- ol
- ci
- ▁now
- ▁see
- ▁out
- ▁our
- ion
- ▁know
- ect
- ▁just
- as
- ▁ex
- ▁ch
- ▁d
- ▁when
- ▁very
- ▁think
- ▁who
- ▁because
- ▁go
- ▁up
- ▁us
- ▁pa
- ▁no
- ies
- ▁di
- ▁ho
- om
- ive
- ▁get
- id
- ▁o
- ▁hi
- un
- ▁how
- ▁by
- ir
- et
- ck
- ity
- ▁po
- ul
- ▁which
- ▁mi
- ▁some
- z
- ▁sp
- ▁un
- ▁going
- ▁pro
- ist
- ▁se
- ▁look
- ▁time
- ment
- de
- ▁more
- ▁had
- ng
- ▁would
- ge
- la
- ▁here
- ▁really
- x
- ▁your
- ▁them
- us
- me
- ▁en
- ▁two
- ▁k
- ▁li
- ▁world
- ne
- ow
- ▁way
- ▁want
- ▁work
- ▁don
- ▁lo
- ▁fa
- ▁were
- ▁their
- age
- vi
- ▁ha
- ac
- der
- est
- ▁bo
- am
- ▁other
- able
- ▁actually
- ▁sh
- ▁make
- ▁ba
- ▁la
- ine
- ▁into
- ▁where
- ▁could
- ▁comp
- ting
- ▁has
- ▁will
- ▁ne
- j
- ical
- ally
- ▁vi
- ▁things
- ▁te
- igh
- ▁say
- ▁years
- ers
- ▁ra
- ther
- ▁than
- ru
- ▁ro
- op
- ▁did
- ▁any
- ▁new
- ound
- ig
- ▁well
- mo
- ▁she
- ▁na
- ▁been
- he
- ▁thousand
- ▁car
- ▁take
- ▁right
- ▁then
- ▁need
- ▁start
- ▁hundred
- ▁something
- ▁over
- ▁com
- ia
- ▁kind
- um
- if
- ▁those
- ▁first
- ▁pre
- ta
- ▁said
- ize
- end
- ▁even
- ▁thing
- one
- ▁back
- ite
- ▁every
- ▁little
- ry
- ▁life
- ▁much
- ke
- ▁also
- ▁most
- ant
- per
- ▁three
- ▁come
- ▁lot
- ance
- ▁got
- ▁talk
- ▁per
- ▁inter
- ▁sa
- ▁use
- ▁mu
- ▁part
- ish
- ence
- ▁happen
- ▁bi
- ▁mean
- ough
- ▁qu
- ▁bu
- ▁day
- ▁ga
- ▁only
- ▁many
- ▁different
- ▁dr
- ▁th
- ▁show
- ful
- ▁down
- ated
- ▁good
- ▁tra
- ▁around
- ▁idea
- ▁human
- ous
- ▁put
- ▁through
- ▁five
- ▁why
- ▁change
- ▁real
- ff
- ible
- ▁fact
- ▁same
- ▁jo
- ▁live
- ▁year
- ▁problem
- ▁ph
- ▁four
- ▁give
- ▁big
- ▁tell
- ▁great
- ▁try
- ▁va
- ▁ru
- ▁system
- ▁six
- ▁plan
- ▁place
- ▁build
- ▁called
- ▁again
- ▁point
- ▁twenty
- ▁percent
- ▁nine
- ▁find
- ▁app
- ▁after
- ▁long
- ▁eight
- ▁imp
- ▁gene
- ▁design
- ▁today
- ▁should
- ▁made
- ious
- ▁came
- ▁learn
- ▁last
- ▁own
- way
- ▁turn
- ▁seven
- ▁high
- ▁question
- ▁person
- ▁brain
- ▁important
- ▁another
- ▁thought
- ▁trans
- ▁create
- ness
- ▁hu
- ▁power
- ▁act
- land
- ▁play
- ▁sort
- ▁old
- ▁before
- ▁course
- ▁understand
- ▁feel
- ▁might
- ▁each
- ▁million
- ▁better
- ▁together
- ▁ago
- ▁example
- ▁help
- ▁story
- ▁next
- ▁hand
- ▁school
- ▁water
- ▁develop
- ▁technology
- que
- ▁second
- ▁grow
- ▁still
- ▁cell
- ▁believe
- ▁number
- ▁small
- ▁between
- qui
- ▁data
- ▁become
- ▁america
- ▁maybe
- ▁space
- ▁project
- ▁organ
- ▁vo
- ▁children
- ▁book
- graph
- ▁open
- ▁fifty
- ▁picture
- ▁health
- ▁thirty
- ▁africa
- ▁reason
- ▁large
- ▁hard
- ▁computer
- ▁always
- ▁sense
- ▁money
- ▁women
- ▁everything
- ▁information
- ▁country
- ▁teach
- ▁energy
- ▁experience
- ▁food
- ▁process
- qua
- ▁interesting
- ▁future
- ▁science
- q
- '0'
- '5'
- '6'
- '9'
- '3'
- '8'
- '4'
- N
- A
- '7'
- S
- G
- F
- R
- L
- U
- E
- T
- H
- _
- B
- D
- J
- M
- ă
- ō
- ť
- '2'
- '-'
- '1'
- C
- <sos/eos>
init: null
input_size: null
ctc_conf:
    dropout_rate: 0.0
    ctc_type: builtin
    reduce: true
    ignore_nan_grad: null
    zero_infinity: true
joint_net_conf: null
use_preprocessor: true
token_type: bpe
bpemodel: data/en_token_list/bpe_unigram500/bpe.model
non_linguistic_symbols: null
cleaner: null
g2p: null
speech_volume_normalize: null
rir_scp: null
rir_apply_prob: 1.0
noise_scp: null
noise_apply_prob: 1.0
noise_db_range: '13_15'
short_noise_thres: 0.5
frontend: default
frontend_conf:
    n_fft: 512
    win_length: 400
    hop_length: 160
    fs: 16k
specaug: specaug
specaug_conf:
    apply_time_warp: true
    time_warp_window: 5
    time_warp_mode: bicubic
    apply_freq_mask: true
    freq_mask_width_range:
    - 0
    - 27
    num_freq_mask: 2
    apply_time_mask: true
    time_mask_width_ratio_range:
    - 0.0
    - 0.05
    num_time_mask: 5
normalize: global_mvn
normalize_conf:
    stats_file: exp/asr_stats_raw_en_bpe500_sp/train/feats_stats.npz
model: espnet
model_conf:
    ctc_weight: 1.0
    lsm_weight: 0.1
    length_normalized_loss: false
preencoder: null
preencoder_conf: {}
encoder: conformer
encoder_conf:
    output_size: 256
    attention_heads: 4
    linear_units: 1024
    num_blocks: 15
    dropout_rate: 0.1
    positional_dropout_rate: 0.1
    attention_dropout_rate: 0.1
    input_layer: conv2d
    normalize_before: true
    macaron_style: true
    rel_pos_type: latest
    pos_enc_layer_type: rel_pos
    selfattention_layer_type: rel_selfattn
    activation_type: swish
    use_cnn_module: true
    cnn_module_kernel: 31
postencoder: null
postencoder_conf: {}
decoder: rnn
decoder_conf: {}
preprocessor: default
preprocessor_conf: {}
required:
- output_dir
- token_list
version: '202211'
distributed: true
```

</details>
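
The optimizer section combines Adam (`lr: 0.002`) with `scheduler: warmuplr` and `warmup_steps: 15000`. To my understanding, ESPnet's `WarmupLR` follows a Noam-style rule:

`lr(step) = lr * warmup_steps**0.5 * min(step**-0.5, step * warmup_steps**-1.5)`

Under this rule the learning rate rises linearly to the configured `lr` at `step == warmup_steps`, then decays as `1/sqrt(step)`. The sketch below evaluates that formula. Treat it as an approximation to check against the ESPnet source rather than a copy of it; `warmup_lr` is a hypothetical helper.

```python
def warmup_lr(step: int, base_lr: float = 0.002, warmup_steps: int = 15000) -> float:
    """Noam-style warmup: linear ramp to base_lr, then inverse-square-root decay."""
    if step < 1:
        raise ValueError("step is 1-based")
    scale = warmup_steps ** 0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)
    return base_lr * scale


if __name__ == "__main__":
    for step in (1, 7500, 15000, 60000):
        print(step, f"{warmup_lr(step):.3e}")
```

With these settings, the peak of 0.002 is reached at step 15,000. The rate is half the peak (0.001) both at step 7,500 on the way up and at step 60,000 on the way down.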

### Citing ESPnet

```bibtex
@inproceedings{watanabe2018espnet,
  author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},
  title={{ESPnet}: End-to-End Speech Processing Toolkit},
  year={2018},
  booktitle={Proceedings of Interspeech},
  pages={2207--2211},
  doi={10.21437/Interspeech.2018-1456},
  url={http://dx.doi.org/10.21437/Interspeech.2018-1456}
}
```

or arXiv:

```bibtex
@misc{watanabe2018espnet,
  title={ESPnet: End-to-End Speech Processing Toolkit},
  author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},
  year={2018},
  eprint={1804.00015},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```

data/en_token_list/bpe_unigram500/bpe.model

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:ca848c3a0b756847776bc5c8e8ae797ad73381cb4fe9db9109b3131e9416b5f6
size 244853

exp/asr_stats_raw_en_bpe500_sp/train/feats_stats.npz

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:a9aa2bdc65662202e277008f62275fef28e17e564fbcf6b759a4a169cdcfdbbd
size 1402
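The two files above are committed as Git LFS pointer files rather than as binary content. Each pointer records three things about the real file: the spec version, the object id (a SHA-256 digest), and the size in bytes. The stdlib-only sketch below shows how a downloaded copy could be checked against its pointer. The helper names are hypothetical and are not part of Git LFS or ESPnet.

```python
import hashlib

LFS_SPEC_V1 = "https://git-lfs.github.com/spec/v1"


def parse_lfs_pointer(text: str) -> dict:
    """Parse the 'key value' lines of a Git LFS pointer file."""
    fields = {}
    for line in text.splitlines():
        if line.strip():
            key, _, value = line.strip().partition(" ")
            fields[key] = value
    if fields.get("version") != LFS_SPEC_V1:
        raise ValueError("not a Git LFS v1 pointer")
    algo, _, digest = fields["oid"].partition(":")
    return {"algo": algo, "digest": digest, "size": int(fields["size"])}


def blob_matches(pointer: dict, data: bytes) -> bool:
    """True if data has the size and SHA-256 digest recorded in the pointer."""
    if pointer["algo"] != "sha256":
        raise ValueError(f"unsupported oid algorithm: {pointer['algo']}")
    return (
        len(data) == pointer["size"]
        and hashlib.sha256(data).hexdigest() == pointer["digest"]
    )


if __name__ == "__main__":
    bpe_pointer = parse_lfs_pointer(
        "version https://git-lfs.github.com/spec/v1\n"
        "oid sha256:ca848c3a0b756847776bc5c8e8ae797ad73381cb4fe9db9109b3131e9416b5f6\n"
        "size 244853\n"
    )
    print(bpe_pointer["size"])
    # With a locally downloaded copy of the real file:
    # with open("data/en_token_list/bpe_unigram500/bpe.model", "rb") as f:
    #     print(blob_matches(bpe_pointer, f.read()))
```

The size is compared first because it is cheap to check. The hash is only computed when the lengths already agree.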

exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/RESULTS.md

ADDED

@@ -0,0 +1,32 @@

<!-- Generated by scripts/utils/show_asr_result.sh -->
# RESULTS
## Environments
- date: `Fri Dec 30 08:37:09 CST 2022`
- python version: `3.9.15 (main, Nov 24 2022, 14:31:59) [GCC 11.2.0]`
- espnet version: `espnet 202211`
- pytorch version: `pytorch 1.12.1`
- Git hash: `e62de171f1d11015cb856f83780c61bd5ca7fa8f`
- Commit date: `Thu Dec 29 14:18:44 2022 -0500`

## asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp
### WER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|
|---|---|---|---|---|---|---|---|---|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/dev|466|14671|92.2|5.6|2.2|1.2|9.1|75.3|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/test|1155|27500|92.1|5.4|2.5|1.1|9.0|72.8|

### CER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|
|---|---|---|---|---|---|---|---|---|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/dev|466|78259|97.0|0.9|2.1|1.2|4.2|75.3|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/test|1155|145066|96.9|0.9|2.2|1.2|4.3|72.8|

### TER

|dataset|Snt|Wrd|Corr|Sub|Del|Ins|Err|S.Err|
|---|---|---|---|---|---|---|---|---|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/dev|466|28296|94.5|3.1|2.4|1.2|6.7|75.3|
|decode_asr_ctc_asr_model_valid.cer_ctc.ave/test|1155|52113|94.6|2.9|2.5|1.2|6.5|72.8|
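**How the RESULTS.md columns relate.** In the tables above, Wrd is the number of reference tokens: words for WER, characters for CER, and model tokens for TER.
- Corr, Sub, Del, Ins and Err are percentages of Wrd.
- S.Err is the percentage of sentences with at least one error.
- In every row, Corr + Sub + Del = 100.
- Err = Sub + Del + Ins, up to rounding of the one-decimal values. For example, on dev WER, 5.6 + 2.2 + 1.2 = 9.0 against the listed 9.1.

The sketch below is an independent, minimal illustration of how such counts come from a minimum-edit-distance alignment with unit costs. It is not the scorer behind `scripts/utils/show_asr_result.sh`, and the function names are hypothetical. Dedicated scoring tools may weight alignments or break ties differently, so their per-category counts can differ slightly.

```python
def align_counts(ref: list[str], hyp: list[str]) -> tuple[int, int, int]:
    """Return (substitutions, deletions, insertions) of a minimum-edit-distance
    alignment of hyp against ref (unit costs; ties broken by tuple order)."""
    n, m = len(ref), len(hyp)
    # dp[i][j] = (cost, sub, del, ins) for aligning ref[:i] with hyp[:j]
    dp = [[(0, 0, 0, 0)] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dp[i][0] = (i, 0, i, 0)  # only deletions
    for j in range(1, m + 1):
        dp[0][j] = (j, 0, 0, j)  # only insertions
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            c, s, d, ins = dp[i - 1][j - 1]
            if ref[i - 1] == hyp[j - 1]:
                diag = (c, s, d, ins)  # correct token
            else:
                diag = (c + 1, s + 1, d, ins)  # substitution
            c, s, d, ins = dp[i - 1][j]
            delete = (c + 1, s, d + 1, ins)
            c, s, d, ins = dp[i][j - 1]
            insert = (c + 1, s, d, ins + 1)
            dp[i][j] = min(diag, delete, insert)
    _, s, d, ins = dp[n][m]
    return s, d, ins


def score(pairs: list[tuple[list[str], list[str]]]) -> dict[str, float]:
    """Corpus-level percentages in the style of the RESULTS.md columns."""
    n_ref = n_sub = n_del = n_ins = n_sent_err = 0
    for ref, hyp in pairs:
        s, d, i = align_counts(ref, hyp)
        n_ref += len(ref)
        n_sub += s
        n_del += d
        n_ins += i
        n_sent_err += int(s + d + i > 0)

    def pct(count: int) -> float:
        return 100.0 * count / n_ref

    return {
        "Corr": pct(n_ref - n_sub - n_del),
        "Sub": pct(n_sub),
        "Del": pct(n_del),
        "Ins": pct(n_ins),
        "Err": pct(n_sub + n_del + n_ins),
        "S.Err": 100.0 * n_sent_err / len(pairs),
    }


if __name__ == "__main__":
    refs = ["the cat sat", "hello world"]
    hyps = ["the cat sit down", "hello world"]
    print(score([(r.split(), h.split()) for r, h in zip(refs, hyps)]))  # word level
    print(score([(list(r), list(h)) for r, h in zip(refs, hyps)]))  # character level
```

At character level this sketch counts the space as a token too. That is an assumption about how characters are tokenized, not something RESULTS.md states.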

exp/asr_train_asr_ctc_conformer_e15_linear1024_raw_en_bpe500_sp/config.yaml

ADDED


@@ -0,0 +1,694 @@
