Upload folder using huggingface_hub

- checkpoints/checkpoint-pt-55000/model.safetensors +3 -0

- checkpoints/checkpoint-pt-55000/random_states_0.pkl +3 -0

- checkpoints/grad_l2_over_steps.png +0 -0

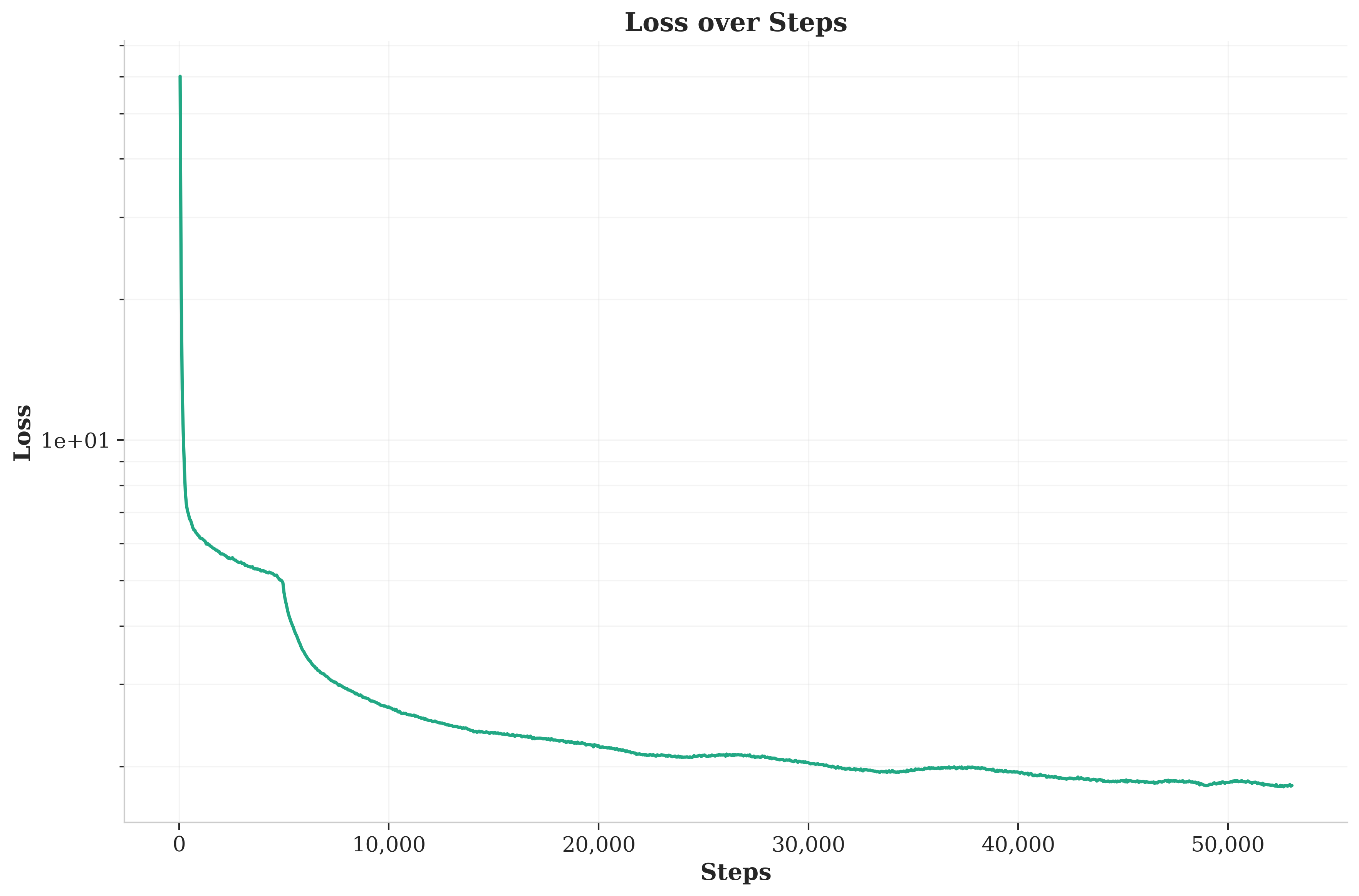

- checkpoints/loss_over_steps.png +0 -0

- checkpoints/lr_over_steps.png +0 -0

- checkpoints/main.log +76 -0

- checkpoints/seconds_per_step_over_steps.png +0 -0

- checkpoints/training_metrics.csv +69 -0

- checkpoints/weights_l2_over_steps.png +0 -0
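A commit like this one could plausibly be produced with `HfApi.upload_folder` from `huggingface_hub`. The sketch below is an illustration under assumptions, not the author's script: the repository id and local folder are hypothetical placeholders, and the upload call is kept in a separate function so that nothing touches the network unless it is called explicitly.

```python
from pathlib import Path


def build_upload_kwargs(local_dir: str, repo_id: str) -> dict:
    """Collect keyword arguments for HfApi.upload_folder.

    local_dir and repo_id are hypothetical; they do not come from this commit.
    """
    return {
        "folder_path": str(Path(local_dir)),
        "path_in_repo": "checkpoints",  # matches the path prefix in the file list
        "repo_id": repo_id,
        "repo_type": "model",
        "commit_message": "Upload folder using huggingface_hub",
    }


def upload(local_dir: str, repo_id: str):
    # Imported lazily so the helper above works without the package installed.
    from huggingface_hub import HfApi

    # Requires a valid token (for example via `huggingface-cli login`).
    return HfApi().upload_folder(**build_upload_kwargs(local_dir, repo_id))


kwargs = build_upload_kwargs("checkpoints", "your-username/your-model")
print(kwargs["commit_message"])
```

Calling `upload("checkpoints", "your-username/your-model")` would then push the folder in a single commit; large files such as `model.safetensors` are stored through Git LFS, which is why the diff below shows pointer files instead of binary content.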

checkpoints/checkpoint-pt-55000/model.safetensors

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:ddf4208a5e75ff89821322a7579b77b4c225aafd335871f64c16b203ff171d85

+size 1202681712

checkpoints/checkpoint-pt-55000/random_states_0.pkl

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:634ae87ad9ec14553a807f970f4e595e3fef7b62fd4afaddf671a76426ff94ed

+size 14344
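The two added files are Git LFS pointers: three `key value` lines giving the spec version, the SHA-256 of the real content, and its size in bytes. A minimal sketch of parsing such a pointer and checking a downloaded file against it follows; the function names are hypothetical helpers, and only the standard library is used.

```python
import hashlib

# Pointer text copied from the random_states_0.pkl diff.
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:634ae87ad9ec14553a807f970f4e595e3fef7b62fd4afaddf671a76426ff94ed
size 14344
"""


def parse_lfs_pointer(text: str) -> dict:
    """Split each 'key value' line and break the oid into algorithm and digest."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


def matches_pointer(path: str, pointer: dict) -> bool:
    """Return True if the file at path has the size and hash the pointer records."""
    hasher = hashlib.new(pointer["algo"])
    size = 0
    with open(path, "rb") as handle:
        # Read in 1 MiB chunks so large checkpoints do not need to fit in memory.
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            hasher.update(chunk)
            size += len(chunk)
    return size == pointer["size"] and hasher.hexdigest() == pointer["digest"]


pointer = parse_lfs_pointer(POINTER)
print(pointer["algo"], pointer["size"])
```

After downloading the checkpoint, `matches_pointer("random_states_0.pkl", pointer)` would confirm that the local copy is the object this commit refers to.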

checkpoints/grad_l2_over_steps.png

CHANGED

checkpoints/loss_over_steps.png

CHANGED

checkpoints/lr_over_steps.png

CHANGED

checkpoints/main.log

CHANGED

@@ -1149,3 +1149,79 @@ Mixed precision type: bf16

 [2024-08-11 14:41:03,151][Main][INFO] - [train] Step 53000 out of 80000 | Loss --> 1.816 | Grad_l2 --> 0.305 | Weights_l2 --> 9097.407 | Lr --> 0.003 | Seconds_per_step --> 3.356 |

 [2024-08-11 14:43:51,226][Main][INFO] - [train] Step 53050 out of 80000 | Loss --> 1.822 | Grad_l2 --> 0.303 | Weights_l2 --> 9097.331 | Lr --> 0.003 | Seconds_per_step --> 3.361 |

 [2024-08-11 14:46:39,664][Main][INFO] - [train] Step 53100 out of 80000 | Loss --> 1.814 | Grad_l2 --> 0.304 | Weights_l2 --> 9097.250 | Lr --> 0.003 | Seconds_per_step --> 3.369 |

+[2024-08-11 14:49:28,881][Main][INFO] - [train] Step 53150 out of 80000 | Loss --> 1.827 | Grad_l2 --> 0.304 | Weights_l2 --> 9097.175 | Lr --> 0.003 | Seconds_per_step --> 3.384 |

+[2024-08-11 14:52:17,120][Main][INFO] - [train] Step 53200 out of 80000 | Loss --> 1.821 | Grad_l2 --> 0.305 | Weights_l2 --> 9097.092 | Lr --> 0.003 | Seconds_per_step --> 3.365 |

+[2024-08-11 14:55:05,338][Main][INFO] - [train] Step 53250 out of 80000 | Loss --> 1.820 | Grad_l2 --> 0.305 | Weights_l2 --> 9097.015 | Lr --> 0.003 | Seconds_per_step --> 3.364 |

+[2024-08-11 14:57:54,070][Main][INFO] - [train] Step 53300 out of 80000 | Loss --> 1.830 | Grad_l2 --> 0.304 | Weights_l2 --> 9096.937 | Lr --> 0.003 | Seconds_per_step --> 3.375 |

+[2024-08-11 15:00:43,188][Main][INFO] - [train] Step 53350 out of 80000 | Loss --> 1.826 | Grad_l2 --> 0.306 | Weights_l2 --> 9096.856 | Lr --> 0.003 | Seconds_per_step --> 3.382 |

+[2024-08-11 15:03:31,527][Main][INFO] - [train] Step 53400 out of 80000 | Loss --> 1.824 | Grad_l2 --> 0.304 | Weights_l2 --> 9096.776 | Lr --> 0.003 | Seconds_per_step --> 3.367 |

+[2024-08-11 15:06:19,876][Main][INFO] - [train] Step 53450 out of 80000 | Loss --> 1.834 | Grad_l2 --> 0.306 | Weights_l2 --> 9096.693 | Lr --> 0.003 | Seconds_per_step --> 3.367 |

+[2024-08-11 15:09:08,569][Main][INFO] - [train] Step 53500 out of 80000 | Loss --> 1.819 | Grad_l2 --> 0.305 | Weights_l2 --> 9096.607 | Lr --> 0.003 | Seconds_per_step --> 3.374 |

+[2024-08-11 15:11:58,235][Main][INFO] - [train] Step 53550 out of 80000 | Loss --> 1.827 | Grad_l2 --> 0.308 | Weights_l2 --> 9096.526 | Lr --> 0.003 | Seconds_per_step --> 3.393 |

+[2024-08-11 15:15:16,128][Main][INFO] - [train] Step 53600 out of 80000 | Loss --> 1.830 | Grad_l2 --> 0.303 | Weights_l2 --> 9096.440 | Lr --> 0.003 | Seconds_per_step --> 3.958 |

+[2024-08-11 15:19:21,909][Main][INFO] - [train] Step 53650 out of 80000 | Loss --> 1.823 | Grad_l2 --> 0.307 | Weights_l2 --> 9096.354 | Lr --> 0.002 | Seconds_per_step --> 4.916 |

+[2024-08-11 15:23:19,247][Main][INFO] - [train] Step 53700 out of 80000 | Loss --> 1.822 | Grad_l2 --> 0.305 | Weights_l2 --> 9096.265 | Lr --> 0.002 | Seconds_per_step --> 4.747 |

+[2024-08-11 15:27:16,170][Main][INFO] - [train] Step 53750 out of 80000 | Loss --> 1.822 | Grad_l2 --> 0.307 | Weights_l2 --> 9096.176 | Lr --> 0.002 | Seconds_per_step --> 4.738 |

+[2024-08-11 15:31:18,960][Main][INFO] - [train] Step 53800 out of 80000 | Loss --> 1.819 | Grad_l2 --> 0.304 | Weights_l2 --> 9096.085 | Lr --> 0.002 | Seconds_per_step --> 4.856 |

+[2024-08-11 15:35:29,615][Main][INFO] - [train] Step 53850 out of 80000 | Loss --> 1.821 | Grad_l2 --> 0.306 | Weights_l2 --> 9095.991 | Lr --> 0.002 | Seconds_per_step --> 5.013 |

+[2024-08-11 15:39:24,381][Main][INFO] - [train] Step 53900 out of 80000 | Loss --> 1.824 | Grad_l2 --> 0.307 | Weights_l2 --> 9095.907 | Lr --> 0.002 | Seconds_per_step --> 4.695 |

+[2024-08-11 15:43:26,740][Main][INFO] - [train] Step 53950 out of 80000 | Loss --> 1.828 | Grad_l2 --> 0.309 | Weights_l2 --> 9095.828 | Lr --> 0.002 | Seconds_per_step --> 4.847 |

+[2024-08-11 15:47:32,997][Main][INFO] - [train] Step 54000 out of 80000 | Loss --> 1.820 | Grad_l2 --> 0.306 | Weights_l2 --> 9095.743 | Lr --> 0.002 | Seconds_per_step --> 4.925 |

+[2024-08-11 15:51:31,612][Main][INFO] - [train] Step 54050 out of 80000 | Loss --> 1.827 | Grad_l2 --> 0.305 | Weights_l2 --> 9095.659 | Lr --> 0.002 | Seconds_per_step --> 4.772 |

+[2024-08-11 15:55:28,280][Main][INFO] - [train] Step 54100 out of 80000 | Loss --> 1.820 | Grad_l2 --> 0.306 | Weights_l2 --> 9095.573 | Lr --> 0.002 | Seconds_per_step --> 4.733 |

+[2024-08-11 15:59:31,710][Main][INFO] - [train] Step 54150 out of 80000 | Loss --> 1.820 | Grad_l2 --> 0.307 | Weights_l2 --> 9095.484 | Lr --> 0.002 | Seconds_per_step --> 4.869 |

+[2024-08-11 16:03:47,362][Main][INFO] - [train] Step 54200 out of 80000 | Loss --> 1.821 | Grad_l2 --> 0.309 | Weights_l2 --> 9095.393 | Lr --> 0.002 | Seconds_per_step --> 5.113 |

+[2024-08-11 16:07:43,746][Main][INFO] - [train] Step 54250 out of 80000 | Loss --> 1.823 | Grad_l2 --> 0.304 | Weights_l2 --> 9095.311 | Lr --> 0.002 | Seconds_per_step --> 4.728 |

+[2024-08-11 16:11:42,782][Main][INFO] - [train] Step 54300 out of 80000 | Loss --> 1.825 | Grad_l2 --> 0.306 | Weights_l2 --> 9095.229 | Lr --> 0.002 | Seconds_per_step --> 4.781 |

+[2024-08-11 16:15:51,539][Main][INFO] - [train] Step 54350 out of 80000 | Loss --> 1.815 | Grad_l2 --> 0.305 | Weights_l2 --> 9095.141 | Lr --> 0.002 | Seconds_per_step --> 4.975 |

+[2024-08-11 16:19:57,704][Main][INFO] - [train] Step 54400 out of 80000 | Loss --> 1.825 | Grad_l2 --> 0.306 | Weights_l2 --> 9095.050 | Lr --> 0.002 | Seconds_per_step --> 4.923 |

+[2024-08-11 16:23:55,708][Main][INFO] - [train] Step 54450 out of 80000 | Loss --> 1.818 | Grad_l2 --> 0.307 | Weights_l2 --> 9094.969 | Lr --> 0.002 | Seconds_per_step --> 4.760 |

+[2024-08-11 16:27:58,061][Main][INFO] - [train] Step 54500 out of 80000 | Loss --> 1.821 | Grad_l2 --> 0.305 | Weights_l2 --> 9094.882 | Lr --> 0.002 | Seconds_per_step --> 4.847 |

+[2024-08-11 16:32:13,877][Main][INFO] - [train] Step 54550 out of 80000 | Loss --> 1.822 | Grad_l2 --> 0.304 | Weights_l2 --> 9094.795 | Lr --> 0.002 | Seconds_per_step --> 5.116 |

+[2024-08-11 16:36:14,196][Main][INFO] - [train] Step 54600 out of 80000 | Loss --> 1.814 | Grad_l2 --> 0.307 | Weights_l2 --> 9094.709 | Lr --> 0.002 | Seconds_per_step --> 4.806 |

+[2024-08-11 16:40:14,393][Main][INFO] - [train] Step 54650 out of 80000 | Loss --> 1.820 | Grad_l2 --> 0.304 | Weights_l2 --> 9094.615 | Lr --> 0.002 | Seconds_per_step --> 4.804 |

+[2024-08-11 16:44:22,066][Main][INFO] - [train] Step 54700 out of 80000 | Loss --> 1.814 | Grad_l2 --> 0.307 | Weights_l2 --> 9094.525 | Lr --> 0.002 | Seconds_per_step --> 4.953 |

+[2024-08-11 16:48:21,020][Main][INFO] - [train] Step 54750 out of 80000 | Loss --> 1.820 | Grad_l2 --> 0.308 | Weights_l2 --> 9094.438 | Lr --> 0.002 | Seconds_per_step --> 4.779 |

+[2024-08-11 16:52:25,503][Main][INFO] - [train] Step 54800 out of 80000 | Loss --> 1.817 | Grad_l2 --> 0.306 | Weights_l2 --> 9094.352 | Lr --> 0.002 | Seconds_per_step --> 4.890 |

+[2024-08-11 16:56:33,065][Main][INFO] - [train] Step 54850 out of 80000 | Loss --> 1.812 | Grad_l2 --> 0.306 | Weights_l2 --> 9094.259 | Lr --> 0.002 | Seconds_per_step --> 4.951 |

+[2024-08-11 17:00:52,228][Main][INFO] - [train] Step 54900 out of 80000 | Loss --> 1.811 | Grad_l2 --> 0.305 | Weights_l2 --> 9094.169 | Lr --> 0.002 | Seconds_per_step --> 5.183 |

+[2024-08-11 17:04:52,733][Main][INFO] - [train] Step 54950 out of 80000 | Loss --> 1.801 | Grad_l2 --> 0.307 | Weights_l2 --> 9094.079 | Lr --> 0.002 | Seconds_per_step --> 4.810 |

+[2024-08-11 17:08:57,633][Main][INFO] - [train] Step 55000 out of 80000 | Loss --> 1.818 | Grad_l2 --> 0.308 | Weights_l2 --> 9093.989 | Lr --> 0.002 | Seconds_per_step --> 4.898 |

+[2024-08-11 17:08:57,633][accelerate.accelerator][INFO] - Saving current state to checkpoint-pt-55000

+[2024-08-11 17:08:57,637][accelerate.utils.other][WARNING] - Removed shared tensor {'encoder.embed_tokens.weight', 'decoder.embed_tokens.weight'} while saving. This should be OK, but check by verifying that you don't receive any warning while reloading

+[2024-08-11 17:09:01,182][accelerate.checkpointing][INFO] - Model weights saved in checkpoint-pt-55000/model.safetensors

+[2024-08-11 17:09:08,530][accelerate.checkpointing][INFO] - Optimizer state saved in checkpoint-pt-55000/optimizer.bin

+[2024-08-11 17:09:08,531][accelerate.checkpointing][INFO] - Scheduler state saved in checkpoint-pt-55000/scheduler.bin

+[2024-08-11 17:09:08,531][accelerate.checkpointing][INFO] - Sampler state for dataloader 0 saved in checkpoint-pt-55000/sampler.bin

+[2024-08-11 17:09:08,531][accelerate.checkpointing][INFO] - Sampler state for dataloader 1 saved in checkpoint-pt-55000/sampler_1.bin

+[2024-08-11 17:09:08,532][accelerate.checkpointing][INFO] - Random states saved in checkpoint-pt-55000/random_states_0.pkl

+[2024-08-11 17:13:21,437][Main][INFO] - [train] Step 55050 out of 80000 | Loss --> 1.807 | Grad_l2 --> 0.304 | Weights_l2 --> 9093.890 | Lr --> 0.002 | Seconds_per_step --> 5.276 |

+[2024-08-11 17:17:31,393][Main][INFO] - [train] Step 55100 out of 80000 | Loss --> 1.806 | Grad_l2 --> 0.307 | Weights_l2 --> 9093.799 | Lr --> 0.002 | Seconds_per_step --> 4.999 |

+[2024-08-11 17:21:35,081][Main][INFO] - [train] Step 55150 out of 80000 | Loss --> 1.809 | Grad_l2 --> 0.306 | Weights_l2 --> 9093.712 | Lr --> 0.002 | Seconds_per_step --> 4.874 |

+[2024-08-11 17:25:45,792][Main][INFO] - [train] Step 55200 out of 80000 | Loss --> 1.801 | Grad_l2 --> 0.305 | Weights_l2 --> 9093.624 | Lr --> 0.002 | Seconds_per_step --> 5.014 |

+[2024-08-11 17:30:02,450][Main][INFO] - [train] Step 55250 out of 80000 | Loss --> 1.796 | Grad_l2 --> 0.306 | Weights_l2 --> 9093.529 | Lr --> 0.002 | Seconds_per_step --> 5.133 |

+[2024-08-11 17:34:08,579][Main][INFO] - [train] Step 55300 out of 80000 | Loss --> 1.802 | Grad_l2 --> 0.307 | Weights_l2 --> 9093.433 | Lr --> 0.002 | Seconds_per_step --> 4.922 |

+[2024-08-11 17:38:13,235][Main][INFO] - [train] Step 55350 out of 80000 | Loss --> 1.796 | Grad_l2 --> 0.306 | Weights_l2 --> 9093.344 | Lr --> 0.002 | Seconds_per_step --> 4.893 |

+[2024-08-11 17:42:27,852][Main][INFO] - [train] Step 55400 out of 80000 | Loss --> 1.800 | Grad_l2 --> 0.305 | Weights_l2 --> 9093.242 | Lr --> 0.002 | Seconds_per_step --> 5.092 |

+[2024-08-11 17:46:22,982][Main][INFO] - [train] Step 55450 out of 80000 | Loss --> 1.802 | Grad_l2 --> 0.304 | Weights_l2 --> 9093.152 | Lr --> 0.002 | Seconds_per_step --> 4.703 |

+[2024-08-11 17:50:20,417][Main][INFO] - [train] Step 55500 out of 80000 | Loss --> 1.802 | Grad_l2 --> 0.306 | Weights_l2 --> 9093.070 | Lr --> 0.002 | Seconds_per_step --> 4.749 |

+[2024-08-11 17:54:30,178][Main][INFO] - [train] Step 55550 out of 80000 | Loss --> 1.811 | Grad_l2 --> 0.307 | Weights_l2 --> 9092.986 | Lr --> 0.002 | Seconds_per_step --> 4.995 |

+[2024-08-11 17:58:42,001][Main][INFO] - [train] Step 55600 out of 80000 | Loss --> 1.804 | Grad_l2 --> 0.306 | Weights_l2 --> 9092.885 | Lr --> 0.002 | Seconds_per_step --> 5.036 |

+[2024-08-11 18:02:39,257][Main][INFO] - [train] Step 55650 out of 80000 | Loss --> 1.804 | Grad_l2 --> 0.311 | Weights_l2 --> 9092.792 | Lr --> 0.002 | Seconds_per_step --> 4.745 |

+[2024-08-11 18:06:36,810][Main][INFO] - [train] Step 55700 out of 80000 | Loss --> 1.797 | Grad_l2 --> 0.306 | Weights_l2 --> 9092.687 | Lr --> 0.002 | Seconds_per_step --> 4.751 |

+[2024-08-11 18:10:48,385][Main][INFO] - [train] Step 55750 out of 80000 | Loss --> 1.805 | Grad_l2 --> 0.308 | Weights_l2 --> 9092.598 | Lr --> 0.002 | Seconds_per_step --> 5.031 |

+[2024-08-11 18:14:53,396][Main][INFO] - [train] Step 55800 out of 80000 | Loss --> 1.813 | Grad_l2 --> 0.308 | Weights_l2 --> 9092.501 | Lr --> 0.002 | Seconds_per_step --> 4.900 |

+[2024-08-11 18:18:51,650][Main][INFO] - [train] Step 55850 out of 80000 | Loss --> 1.812 | Grad_l2 --> 0.308 | Weights_l2 --> 9092.395 | Lr --> 0.002 | Seconds_per_step --> 4.765 |

+[2024-08-11 18:23:03,278][Main][INFO] - [train] Step 55900 out of 80000 | Loss --> 1.810 | Grad_l2 --> 0.306 | Weights_l2 --> 9092.299 | Lr --> 0.002 | Seconds_per_step --> 5.033 |

+[2024-08-11 18:27:14,679][Main][INFO] - [train] Step 55950 out of 80000 | Loss --> 1.811 | Grad_l2 --> 0.309 | Weights_l2 --> 9092.202 | Lr --> 0.002 | Seconds_per_step --> 5.028 |

+[2024-08-11 18:31:16,692][Main][INFO] - [train] Step 56000 out of 80000 | Loss --> 1.816 | Grad_l2 --> 0.306 | Weights_l2 --> 9092.110 | Lr --> 0.002 | Seconds_per_step --> 4.840 |

+[2024-08-11 18:35:23,672][Main][INFO] - [train] Step 56050 out of 80000 | Loss --> 1.811 | Grad_l2 --> 0.309 | Weights_l2 --> 9092.013 | Lr --> 0.002 | Seconds_per_step --> 4.940 |

+[2024-08-11 18:39:34,197][Main][INFO] - [train] Step 56100 out of 80000 | Loss --> 1.803 | Grad_l2 --> 0.308 | Weights_l2 --> 9091.909 | Lr --> 0.002 | Seconds_per_step --> 5.010 |

+[2024-08-11 18:43:29,112][Main][INFO] - [train] Step 56150 out of 80000 | Loss --> 1.811 | Grad_l2 --> 0.306 | Weights_l2 --> 9091.817 | Lr --> 0.002 | Seconds_per_step --> 4.698 |

+[2024-08-11 18:47:29,146][Main][INFO] - [train] Step 56200 out of 80000 | Loss --> 1.809 | Grad_l2 --> 0.307 | Weights_l2 --> 9091.721 | Lr --> 0.002 | Seconds_per_step --> 4.801 |

+[2024-08-11 18:51:37,058][Main][INFO] - [train] Step 56250 out of 80000 | Loss --> 1.810 | Grad_l2 --> 0.306 | Weights_l2 --> 9091.613 | Lr --> 0.002 | Seconds_per_step --> 4.958 |

+[2024-08-11 18:55:48,367][Main][INFO] - [train] Step 56300 out of 80000 | Loss --> 1.813 | Grad_l2 --> 0.309 | Weights_l2 --> 9091.518 | Lr --> 0.002 | Seconds_per_step --> 5.026 |

+[2024-08-11 18:59:48,900][Main][INFO] - [train] Step 56350 out of 80000 | Loss --> 1.813 | Grad_l2 --> 0.309 | Weights_l2 --> 9091.421 | Lr --> 0.002 | Seconds_per_step --> 4.811 |

+[2024-08-11 19:03:49,099][Main][INFO] - [train] Step 56400 out of 80000 | Loss --> 1.803 | Grad_l2 --> 0.310 | Weights_l2 --> 9091.329 | Lr --> 0.002 | Seconds_per_step --> 4.804 |

+[2024-08-11 19:08:07,847][Main][INFO] - [train] Step 56450 out of 80000 | Loss --> 1.806 | Grad_l2 --> 0.309 | Weights_l2 --> 9091.234 | Lr --> 0.002 | Seconds_per_step --> 5.175 |

+[2024-08-11 19:12:12,785][Main][INFO] - [train] Step 56500 out of 80000 | Loss --> 1.804 | Grad_l2 --> 0.310 | Weights_l2 --> 9091.130 | Lr --> 0.002 | Seconds_per_step --> 4.899 |
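The `[train]` lines follow a regular `Name --> value |` layout, so they can be turned into records with two regular expressions. The sketch below is one possible parser, not part of the training code; the function name and the remaining-time estimate are illustrative, and the estimate assumes the logged step time stays constant for the rest of the run.

```python
import re

# One line copied from the main.log diff.
LINE = (
    "[2024-08-11 17:08:57,633][Main][INFO] - [train] Step 55000 out of 80000 | "
    "Loss --> 1.818 | Grad_l2 --> 0.308 | Weights_l2 --> 9093.989 | "
    "Lr --> 0.002 | Seconds_per_step --> 4.898 |"
)

HEADER = re.compile(
    r"^\[(?P<timestamp>[^\]]+)\]\[Main\]\[INFO\] - \[train\] "
    r"Step (?P<step>\d+) out of (?P<total>\d+) \|"
)
METRIC = re.compile(r"(\w+) --> ([-+0-9.eE]+)")


def parse_train_line(line: str):
    """Return a dict for a [train] line, or None for any other log line."""
    match = HEADER.match(line)
    if match is None:
        return None
    record = {
        "timestamp": match["timestamp"],
        "step": int(match["step"]),
        "total_steps": int(match["total"]),
    }
    # Every 'Name --> value' pair becomes a lower-cased float field.
    for name, value in METRIC.findall(line):
        record[name.lower()] = float(value)
    return record


record = parse_train_line(LINE)
# Rough estimate only: assumes the current step time holds until the end.
remaining_hours = (
    (record["total_steps"] - record["step"]) * record["seconds_per_step"] / 3600
)
print(record["step"], record["loss"], round(remaining_hours, 1))
```

Lines emitted by `accelerate` during checkpointing do not match the header pattern, so the parser returns `None` for them and they can be filtered out.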

checkpoints/seconds_per_step_over_steps.png

CHANGED

checkpoints/training_metrics.csv

CHANGED

@@ -1060,3 +1060,72 @@ timestamp,step,loss,grad_l2,weights_l2,lr,seconds_per_step

 "2024-08-11 14:38:15,366",52950,1.83,0.306,9097.48,0.003,3.373

 "2024-08-11 14:41:03,151",53000,1.816,0.305,9097.407,0.003,3.356

 "2024-08-11 14:43:51,226",53050,1.822,0.303,9097.331,0.003,3.361

+"2024-08-11 14:46:39,664",53100,1.814,0.304,9097.25,0.003,3.369

+"2024-08-11 14:49:28,881",53150,1.827,0.304,9097.175,0.003,3.384

+"2024-08-11 14:52:17,120",53200,1.821,0.305,9097.092,0.003,3.365

+"2024-08-11 14:55:05,338",53250,1.82,0.305,9097.015,0.003,3.364

+"2024-08-11 14:57:54,070",53300,1.83,0.304,9096.937,0.003,3.375

+"2024-08-11 15:00:43,188",53350,1.826,0.306,9096.856,0.003,3.382

+"2024-08-11 15:03:31,527",53400,1.824,0.304,9096.776,0.003,3.367

+"2024-08-11 15:06:19,876",53450,1.834,0.306,9096.693,0.003,3.367

+"2024-08-11 15:09:08,569",53500,1.819,0.305,9096.607,0.003,3.374

+"2024-08-11 15:11:58,235",53550,1.827,0.308,9096.526,0.003,3.393

+"2024-08-11 15:15:16,128",53600,1.83,0.303,9096.44,0.003,3.958

+"2024-08-11 15:19:21,909",53650,1.823,0.307,9096.354,0.002,4.916

+"2024-08-11 15:23:19,247",53700,1.822,0.305,9096.265,0.002,4.747

+"2024-08-11 15:27:16,170",53750,1.822,0.307,9096.176,0.002,4.738

+"2024-08-11 15:31:18,960",53800,1.819,0.304,9096.085,0.002,4.856

+"2024-08-11 15:35:29,615",53850,1.821,0.306,9095.991,0.002,5.013

+"2024-08-11 15:39:24,381",53900,1.824,0.307,9095.907,0.002,4.695

+"2024-08-11 15:43:26,740",53950,1.828,0.309,9095.828,0.002,4.847

+"2024-08-11 15:47:32,997",54000,1.82,0.306,9095.743,0.002,4.925

+"2024-08-11 15:51:31,612",54050,1.827,0.305,9095.659,0.002,4.772

+"2024-08-11 15:55:28,280",54100,1.82,0.306,9095.573,0.002,4.733

+"2024-08-11 15:59:31,710",54150,1.82,0.307,9095.484,0.002,4.869

+"2024-08-11 16:03:47,362",54200,1.821,0.309,9095.393,0.002,5.113

+"2024-08-11 16:07:43,746",54250,1.823,0.304,9095.311,0.002,4.728

+"2024-08-11 16:11:42,782",54300,1.825,0.306,9095.229,0.002,4.781

+"2024-08-11 16:15:51,539",54350,1.815,0.305,9095.141,0.002,4.975

+"2024-08-11 16:19:57,704",54400,1.825,0.306,9095.05,0.002,4.923

+"2024-08-11 16:23:55,708",54450,1.818,0.307,9094.969,0.002,4.76

+"2024-08-11 16:27:58,061",54500,1.821,0.305,9094.882,0.002,4.847

+"2024-08-11 16:32:13,877",54550,1.822,0.304,9094.795,0.002,5.116

+"2024-08-11 16:36:14,196",54600,1.814,0.307,9094.709,0.002,4.806

+"2024-08-11 16:40:14,393",54650,1.82,0.304,9094.615,0.002,4.804

+"2024-08-11 16:44:22,066",54700,1.814,0.307,9094.525,0.002,4.953

+"2024-08-11 16:48:21,020",54750,1.82,0.308,9094.438,0.002,4.779

+"2024-08-11 16:52:25,503",54800,1.817,0.306,9094.352,0.002,4.89

+"2024-08-11 16:56:33,065",54850,1.812,0.306,9094.259,0.002,4.951

+"2024-08-11 17:00:52,228",54900,1.811,0.305,9094.169,0.002,5.183

+"2024-08-11 17:04:52,733",54950,1.801,0.307,9094.079,0.002,4.81

+"2024-08-11 17:08:57,633",55000,1.818,0.308,9093.989,0.002,4.898

+"2024-08-11 17:13:21,437",55050,1.807,0.304,9093.89,0.002,5.276

+"2024-08-11 17:17:31,393",55100,1.806,0.307,9093.799,0.002,4.999

+"2024-08-11 17:21:35,081",55150,1.809,0.306,9093.712,0.002,4.874

+"2024-08-11 17:25:45,792",55200,1.801,0.305,9093.624,0.002,5.014

+"2024-08-11 17:30:02,450",55250,1.796,0.306,9093.529,0.002,5.133

+"2024-08-11 17:34:08,579",55300,1.802,0.307,9093.433,0.002,4.922

+"2024-08-11 17:38:13,235",55350,1.796,0.306,9093.344,0.002,4.893

+"2024-08-11 17:42:27,852",55400,1.8,0.305,9093.242,0.002,5.092

+"2024-08-11 17:46:22,982",55450,1.802,0.304,9093.152,0.002,4.703

+"2024-08-11 17:50:20,417",55500,1.802,0.306,9093.07,0.002,4.749

+"2024-08-11 17:54:30,178",55550,1.811,0.307,9092.986,0.002,4.995

+"2024-08-11 17:58:42,001",55600,1.804,0.306,9092.885,0.002,5.036

+"2024-08-11 18:02:39,257",55650,1.804,0.311,9092.792,0.002,4.745

+"2024-08-11 18:06:36,810",55700,1.797,0.306,9092.687,0.002,4.751

+"2024-08-11 18:10:48,385",55750,1.805,0.308,9092.598,0.002,5.031

+"2024-08-11 18:14:53,396",55800,1.813,0.308,9092.501,0.002,4.9

+"2024-08-11 18:18:51,650",55850,1.812,0.308,9092.395,0.002,4.765

+"2024-08-11 18:23:03,278",55900,1.81,0.306,9092.299,0.002,5.033

+"2024-08-11 18:27:14,679",55950,1.811,0.309,9092.202,0.002,5.028

+"2024-08-11 18:31:16,692",56000,1.816,0.306,9092.11,0.002,4.84

+"2024-08-11 18:35:23,672",56050,1.811,0.309,9092.013,0.002,4.94

+"2024-08-11 18:39:34,197",56100,1.803,0.308,9091.909,0.002,5.01

+"2024-08-11 18:43:29,112",56150,1.811,0.306,9091.817,0.002,4.698

+"2024-08-11 18:47:29,146",56200,1.809,0.307,9091.721,0.002,4.801

+"2024-08-11 18:51:37,058",56250,1.81,0.306,9091.613,0.002,4.958

+"2024-08-11 18:55:48,367",56300,1.813,0.309,9091.518,0.002,5.026

+"2024-08-11 18:59:48,900",56350,1.813,0.309,9091.421,0.002,4.811

+"2024-08-11 19:03:49,099",56400,1.803,0.31,9091.329,0.002,4.804

+"2024-08-11 19:08:07,847",56450,1.806,0.309,9091.234,0.002,5.175

+"2024-08-11 19:12:12,785",56500,1.804,0.31,9091.13,0.002,4.899
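The CSV carries the same metrics as the log, with the quoted timestamp as the first column. As a sanity check, the sketch below recomputes seconds per step from consecutive timestamps and compares it with the logged column. It uses only the three context rows from the hunk above; the helper names are hypothetical, and reading `seconds_per_step` as wall-clock time between log rows divided by the step delta is an assumption that these rows appear consistent with.

```python
import csv
import io
from datetime import datetime

# Header and context rows copied from the training_metrics.csv hunk.
CSV_TEXT = '''timestamp,step,loss,grad_l2,weights_l2,lr,seconds_per_step
"2024-08-11 14:38:15,366",52950,1.83,0.306,9097.48,0.003,3.373
"2024-08-11 14:41:03,151",53000,1.816,0.305,9097.407,0.003,3.356
"2024-08-11 14:43:51,226",53050,1.822,0.303,9097.331,0.003,3.361
'''


def load_rows(text: str) -> list:
    """Parse the CSV text into typed rows (time, step, seconds_per_step)."""
    rows = []
    for row in csv.DictReader(io.StringIO(text)):
        rows.append({
            # The logging-style timestamp uses a comma before the milliseconds.
            "time": datetime.strptime(row["timestamp"], "%Y-%m-%d %H:%M:%S,%f"),
            "step": int(row["step"]),
            "seconds_per_step": float(row["seconds_per_step"]),
        })
    return rows


def wall_clock_seconds_per_step(rows: list) -> list:
    """Return (step, derived, logged) tuples for each consecutive pair of rows."""
    result = []
    for prev, cur in zip(rows, rows[1:]):
        elapsed = (cur["time"] - prev["time"]).total_seconds()
        derived = elapsed / (cur["step"] - prev["step"])
        result.append((cur["step"], derived, cur["seconds_per_step"]))
    return result


for step, derived, logged in wall_clock_seconds_per_step(load_rows(CSV_TEXT)):
    print(step, round(derived, 3), logged)
```

Run over the full file, the same comparison would make the slowdown that starts around step 53600 easy to isolate, since both the derived and the logged values rise from roughly 3.4 to roughly 4.9 seconds per step.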

checkpoints/weights_l2_over_steps.png

CHANGED
