Commit

·

675d607

1

Parent(s):

a6047ae

Update README.md

Browse files

README.md

CHANGED

|

@@ -1,22 +1,21 @@

|

|

| 1 |

---

|

| 2 |

-

language:

|

| 3 |

-

- pt

|

| 4 |

license: apache-2.0

|

| 5 |

tags:

|

| 6 |

-

- whisper-event

|

| 7 |

- generated_from_trainer

|

|

|

|

| 8 |

datasets:

|

| 9 |

- mozilla-foundation/common_voice_11_0

|

| 10 |

metrics:

|

| 11 |

- wer

|

| 12 |

model-index:

|

| 13 |

-

- name:

|

| 14 |

results:

|

| 15 |

- task:

|

| 16 |

name: Automatic Speech Recognition

|

| 17 |

type: automatic-speech-recognition

|

| 18 |

dataset:

|

| 19 |

-

name: mozilla-foundation/common_voice_11_0

|

| 20 |

type: mozilla-foundation/common_voice_11_0

|

| 21 |

config: pt

|

| 22 |

split: test

|

|

@@ -30,24 +29,24 @@ model-index:

|

|

| 30 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

| 31 |

should probably proofread and complete it, then remove this comment. -->

|

| 32 |

|

| 33 |

-

#

|

| 34 |

|

| 35 |

-

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on the

|

| 36 |

It achieves the following results on the evaluation set:

|

| 37 |

- Loss: 0.2628

|

| 38 |

- Wer: 6.5987

|

| 39 |

|

| 40 |

-

##

|

| 41 |

|

| 42 |

-

|

| 43 |

|

| 44 |

-

##

|

| 45 |

|

| 46 |

-

|

| 47 |

|

| 48 |

-

|

| 49 |

|

| 50 |

-

|

| 51 |

|

| 52 |

## Training procedure

|

| 53 |

|

|

@@ -79,4 +78,4 @@ The following hyperparameters were used during training:

|

|

| 79 |

- Transformers 4.26.0.dev0

|

| 80 |

- Pytorch 1.13.0+cu117

|

| 81 |

- Datasets 2.7.1.dev0

|

| 82 |

-

- Tokenizers 0.13.2

|

|

|

|

| 1 |

---

|

| 2 |

+

language: pt

|

|

|

|

| 3 |

license: apache-2.0

|

| 4 |

tags:

|

|

|

|

| 5 |

- generated_from_trainer

|

| 6 |

+

- whisper-event

|

| 7 |

datasets:

|

| 8 |

- mozilla-foundation/common_voice_11_0

|

| 9 |

metrics:

|

| 10 |

- wer

|

| 11 |

model-index:

|

| 12 |

+

- name: openai/whisper-medium

|

| 13 |

results:

|

| 14 |

- task:

|

| 15 |

name: Automatic Speech Recognition

|

| 16 |

type: automatic-speech-recognition

|

| 17 |

dataset:

|

| 18 |

+

name: mozilla-foundation/common_voice_11_0

|

| 19 |

type: mozilla-foundation/common_voice_11_0

|

| 20 |

config: pt

|

| 21 |

split: test

|

|

|

|

| 29 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

| 30 |

should probably proofread and complete it, then remove this comment. -->

|

| 31 |

|

| 32 |

+

# Portuguese Medium Whisper

|

| 33 |

|

| 34 |

+

This model is a fine-tuned version of [openai/whisper-medium](https://huggingface.co/openai/whisper-medium) on the common_voice_11_0 dataset.

|

| 35 |

It achieves the following results on the evaluation set:

|

| 36 |

- Loss: 0.2628

|

| 37 |

- Wer: 6.5987

|

| 38 |

|

| 39 |

+

## Blog post

|

| 40 |

|

| 41 |

+

All information about this model in this blog post: [Speech-to-Text & IA | Transcreva qualquer áudio para o português com o Whisper (OpenAI)... sem nenhum custo!](https://medium.com/@pierre_guillou/speech-to-text-ia-transcreva-qualquer-%C3%A1udio-para-o-portugu%C3%AAs-com-o-whisper-openai-sem-ad0c17384681).

|

| 42 |

|

| 43 |

+

## New SOTA

|

| 44 |

|

| 45 |

+

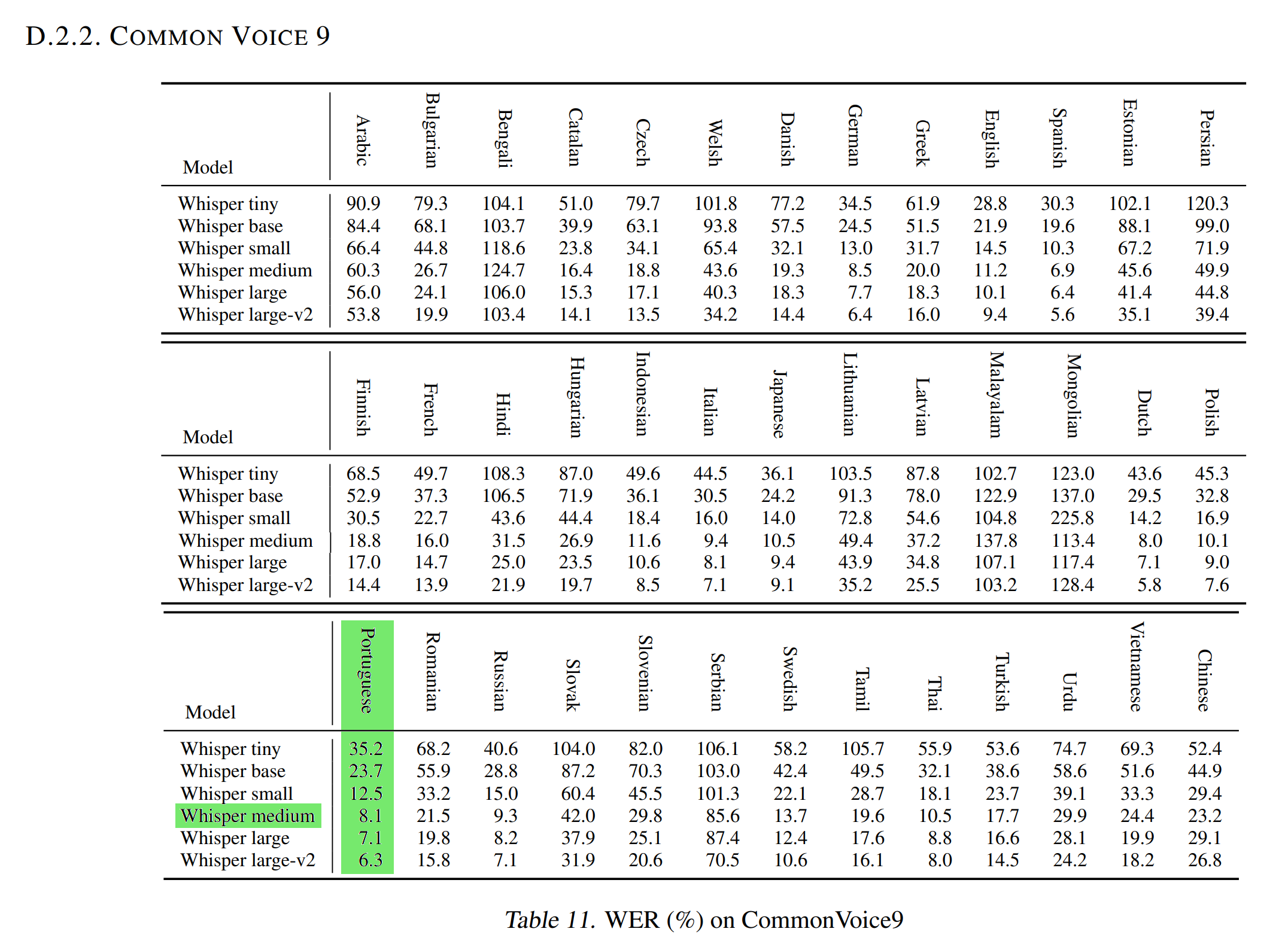

The Normalized WER in the [OpenAI Whisper article](https://cdn.openai.com/papers/whisper.pdf) with the [Common Voice 9.0](https://huggingface.co/datasets/mozilla-foundation/common_voice_9_0) test dataset is 8.1.

|

| 46 |

|

| 47 |

+

As this test dataset is similar to the [Common Voice 11.0](https://huggingface.co/datasets/mozilla-foundation/common_voice_11_0) test dataset used to evaluate our model (WER and WER Norm), it means that **our Portuguese Medium Whisper is better than the [Medium Whisper](https://huggingface.co/openai/whisper-medium) model at transcribing audios Portuguese in text** (and even better than the [Whisper Large](https://huggingface.co/openai/whisper-large) that has a WER Norm of 7.1!).

|

| 48 |

|

| 49 |

+

|

| 50 |

|

| 51 |

## Training procedure

|

| 52 |

|

|

|

|

| 78 |

- Transformers 4.26.0.dev0

|

| 79 |

- Pytorch 1.13.0+cu117

|

| 80 |

- Datasets 2.7.1.dev0

|

| 81 |

+

- Tokenizers 0.13.2

|