Upload 3 files

- README.md +86 -3
- inf.png +0 -0
- predict_online.py +5 -15

README.md

CHANGED

@@ -20,14 +20,97 @@ metrics:


- accuracy
---

# German multi-task ASR with age and gender classification

This multi-task, wav2vec2-based ASR model has two additional classification heads that detect the following attributes of the current speaker:
- age
- gender

Both attributes are predicted together with the transcription in one forward pass.
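The idea of several heads sharing one forward pass can be illustrated with a toy sketch. It assumes, hypothetically, that the CTC head is applied to every frame of the shared encoder output and that each classification head sees a mean-pooled, utterance-level vector. The actual pooling and layer layout of `Wav2Vec2ForCTCnCLS` are not shown in this commit, and random weights stand in for trained parameters.

```python
import numpy as np

# Toy dimensions (hypothetical): T frames, H hidden units, V CTC tokens.
T, H, V = 50, 16, 32
N_AGE, N_GENDER = 8, 2  # sizes of cls_age_label_map and cls_gender_label_map

rng = np.random.default_rng(0)

# Stand-in for the shared wav2vec2 encoder output of one utterance.
hidden_states = rng.standard_normal((T, H))

# Hypothetical head weights; a trained model would load these from its checkpoint.
w_ctc = rng.standard_normal((H, V))
w_age = rng.standard_normal((H, N_AGE))
w_gender = rng.standard_normal((H, N_GENDER))


def multitask_forward(h):
    """Return (ctc_logits, age_logits, gender_logits) from one encoder pass."""
    ctc_logits = h @ w_ctc             # (T, V): one token distribution per frame
    pooled = h.mean(axis=0)            # (H,): utterance-level summary (assumed mean pooling)
    age_logits = pooled @ w_age        # (N_AGE,)
    gender_logits = pooled @ w_gender  # (N_GENDER,)
    return ctc_logits, age_logits, gender_logits


logits_ctc, logits_age, logits_gender = multitask_forward(hidden_states)
print(logits_ctc.shape, logits_age.shape, logits_gender.shape)  # (50, 32) (8,) (2,)
```

This mirrors the three logit arrays that the usage example unpacks from `trainer.predict`, where each array additionally carries a leading batch dimension.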

It was trained on [Mozilla Common Voice](https://commonvoice.mozilla.org/).

Code for training can be found [here](https://github.com/padmalcom/wav2vec2-asr-ultimate-german).

*inference_online.py* shows how the model can be used.

```python
from transformers import (
    Wav2Vec2FeatureExtractor,
    Wav2Vec2CTCTokenizer,
    Wav2Vec2Processor
)
import librosa
from datasets import Dataset
import numpy as np
from model import Wav2Vec2ForCTCnCLS
from ctctrainer import CTCTrainer
from datacollator import DataCollatorCTCWithPadding

model_path = "padmalcom/wav2vec2-asr-ultimate-german"
pred_data = {'file': ['audio2.wav']}

cls_age_label_map = {'teens':0, 'twenties': 1, 'thirties': 2, 'fourties': 3, 'fifties': 4, 'sixties': 5, 'seventies': 6, 'eighties': 7}
cls_age_label_class_weights = [0] * len(cls_age_label_map)

cls_gender_label_map = {'female': 0, 'male': 1}
cls_gender_label_class_weights = [0] * len(cls_gender_label_map)

tokenizer = Wav2Vec2CTCTokenizer("./vocab.json", unk_token="<unk>", pad_token="<pad>", word_delimiter_token="|")

feature_extractor = Wav2Vec2FeatureExtractor(feature_size=1, sampling_rate=16000, padding_value=0.0, do_normalize=True, return_attention_mask=False)

processor = Wav2Vec2Processor(feature_extractor, tokenizer)

model = Wav2Vec2ForCTCnCLS.from_pretrained(
    model_path,
    vocab_size=len(processor.tokenizer),
    age_cls_len=len(cls_age_label_map),
    gender_cls_len=len(cls_gender_label_map),
    age_cls_weights=cls_age_label_class_weights,
    gender_cls_weights=cls_gender_label_class_weights,
    alpha=0.1,
)

data_collator = DataCollatorCTCWithPadding(processor=processor, padding=True, audio_only=True)

def prepare_dataset_step1(example):
    example["speech"], example["sampling_rate"] = librosa.load(example["file"], sr=feature_extractor.sampling_rate)
    return example

def prepare_dataset_step2(batch):
    batch["input_values"] = processor(batch["speech"], sampling_rate=batch["sampling_rate"][0]).input_values
    return batch

val_dataset = Dataset.from_dict(pred_data)
val_dataset = val_dataset.map(prepare_dataset_step1, load_from_cache_file=False)
val_dataset = val_dataset.map(prepare_dataset_step2, batch_size=2, batched=True, num_proc=1, load_from_cache_file=False)

trainer = CTCTrainer(
    model=model,
    data_collator=data_collator,
    eval_dataset=val_dataset,
    tokenizer=processor.feature_extractor,
)

predictions, _, _ = trainer.predict(val_dataset, metric_key_prefix="predict")
logits_ctc, logits_age_cls, logits_gender_cls = predictions

# process age classification
pred_ids_age_cls = np.argmax(logits_age_cls, axis=-1)
pred_age = pred_ids_age_cls[0]
age_class = [k for k, v in cls_age_label_map.items() if v == pred_age]
print("Predicted age: ", age_class[0])

# process gender classification
pred_ids_gender_cls = np.argmax(logits_gender_cls, axis=-1)
pred_gender = pred_ids_gender_cls[0]
gender_class = [k for k, v in cls_gender_label_map.items() if v == pred_gender]
print("Predicted gender: ", gender_class[0])

# process token classification
pred_ids_ctc = np.argmax(logits_ctc, axis=-1)
pred_str = processor.batch_decode(pred_ids_ctc, output_word_offsets=True)
print("pred text: ", pred_str.text[0])
```
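The list comprehensions in the usage example search each label map by value. A sketch of an alternative, assuming the same `cls_age_label_map` and `cls_gender_label_map`, inverts each map once and also reports a softmax score for the winning class. The logit values are made up for illustration; in practice they would be rows of `logits_age_cls` and `logits_gender_cls`.

```python
import math

cls_age_label_map = {'teens': 0, 'twenties': 1, 'thirties': 2, 'fourties': 3,
                     'fifties': 4, 'sixties': 5, 'seventies': 6, 'eighties': 7}
cls_gender_label_map = {'female': 0, 'male': 1}

# Invert the maps once: class id -> label string.
id_to_age = {v: k for k, v in cls_age_label_map.items()}
id_to_gender = {v: k for k, v in cls_gender_label_map.items()}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_class(logits, id_to_label):
    """Return (label, score) for the highest-scoring class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return id_to_label[best], probs[best]


# Hypothetical logits for a single utterance (not real model output).
age_logits = [0.1, 2.5, 0.3, -1.0, -2.0, -2.0, -3.0, -3.0]
gender_logits = [-0.4, 1.6]

age, age_p = decode_class(age_logits, id_to_age)
gender, gender_p = decode_class(gender_logits, id_to_gender)
print(f"Predicted age: {age} ({age_p:.2f})")
print(f"Predicted gender: {gender} ({gender_p:.2f})")
```

A softmax score reflects the model's relative preference among the classes; it is not necessarily a calibrated probability.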

|

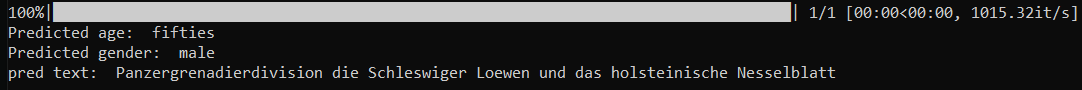

inf.png

ADDED

|

predict_online.py

CHANGED

|

@@ -3,26 +3,21 @@ from transformers import (
     Wav2Vec2CTCTokenizer,
     Wav2Vec2Processor
 )
-import os
 import librosa
 from datasets import Dataset
-from datasets import disable_caching
 import numpy as np
-import torch.nn.functional as F
-import torch
 from model import Wav2Vec2ForCTCnCLS
 from ctctrainer import CTCTrainer
 from datacollator import DataCollatorCTCWithPadding
 
-
+model_path = "padmalcom/wav2vec2-asr-ultimate-german"
+pred_data = {'file': ['audio2.wav']}
 
 cls_age_label_map = {'teens':0, 'twenties': 1, 'thirties': 2, 'fourties': 3, 'fifties': 4, 'sixties': 5, 'seventies': 6, 'eighties': 7}
 cls_age_label_class_weights = [0] * len(cls_age_label_map)
 
 cls_gender_label_map = {'female': 0, 'male': 1}
 cls_gender_label_class_weights = [0] * len(cls_gender_label_map)
-
-model_path = "padmalcom/wav2vec2-asr-ultimate-german"
 
 tokenizer = Wav2Vec2CTCTokenizer("./vocab.json", unk_token="<unk>", pad_token="<pad>", word_delimiter_token="|")
 
@@ -42,12 +37,8 @@ model = Wav2Vec2ForCTCnCLS.from_pretrained(
 
 data_collator = DataCollatorCTCWithPadding(processor=processor, padding=True, audio_only=True)
 
-pred_data = {'file': ['audio2.wav']}
-
-target_sr = 16000
-
 def prepare_dataset_step1(example):
-    example["speech"], example["sampling_rate"] = librosa.load(example["file"], sr=
+    example["speech"], example["sampling_rate"] = librosa.load(example["file"], sr=feature_extractor.sampling_rate)
     return example
 
 def prepare_dataset_step2(batch):
@@ -65,8 +56,7 @@ trainer = CTCTrainer(
     tokenizer=processor.feature_extractor,
 )
 
-
-predictions, labels, metrics = trainer.predict(val_dataset, metric_key_prefix="predict")
+predictions, _, _ = trainer.predict(val_dataset, metric_key_prefix="predict")
 logits_ctc, logits_age_cls, logits_gender_cls = predictions
 
 # process age classification
@@ -84,4 +74,4 @@ print("Predicted gender: ", gender_class[0])
 # process token classification
 pred_ids_ctc = np.argmax(logits_ctc, axis=-1)
 pred_str = processor.batch_decode(pred_ids_ctc, output_word_offsets=True)
-print("pred text: ", pred_str.text)
+print("pred text: ", pred_str.text[0])
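`processor.batch_decode` turns the per-frame argmax ids into text and returns a list with one string per utterance; the updated script therefore prints `pred_str.text[0]`, the transcription of the single file in `pred_data`, instead of the whole list. The greedy CTC rule behind that step can be sketched in plain Python: collapse consecutive repeated ids, drop the blank (assumed here to be the `<pad>` id, which is how `Wav2Vec2CTCTokenizer` treats its pad token), and map the word delimiter `|` to a space. The tiny vocabulary is hypothetical; the real mapping comes from `vocab.json`.

```python
from itertools import groupby

# Hypothetical toy vocabulary; the real mapping is loaded from vocab.json.
VOCAB = {0: "<pad>", 1: "<unk>", 2: "|", 3: "h", 4: "a", 5: "l", 6: "o"}
BLANK_ID = 0          # the pad token doubles as the CTC blank
WORD_DELIMITER = "|"  # matches word_delimiter_token="|" in the script


def greedy_ctc_decode(frame_ids):
    """Collapse repeated ids, drop blanks, and join the characters into text."""
    collapsed = [token_id for token_id, _ in groupby(frame_ids)]
    chars = [VOCAB[i] for i in collapsed if i != BLANK_ID]
    return "".join(chars).replace(WORD_DELIMITER, " ").strip()


def batch_greedy_ctc_decode(batch_frame_ids):
    """Decode a batch: one transcription string per utterance."""
    return [greedy_ctc_decode(ids) for ids in batch_frame_ids]


# Per-frame argmax ids for a batch containing one utterance.
# The blank (0) between the two runs of 5 keeps the double "l" from collapsing.
pred_ids = [[3, 3, 4, 0, 5, 5, 0, 5, 6, 6, 0, 2, 2]]
texts = batch_greedy_ctc_decode(pred_ids)
print(texts)     # ['hallo']
print(texts[0])  # hallo
```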