End of training

Browse files- all_results.json +19 -0

- egy_training_log.txt +2 -0

- eval_results.json +13 -0

- train_results.json +9 -0

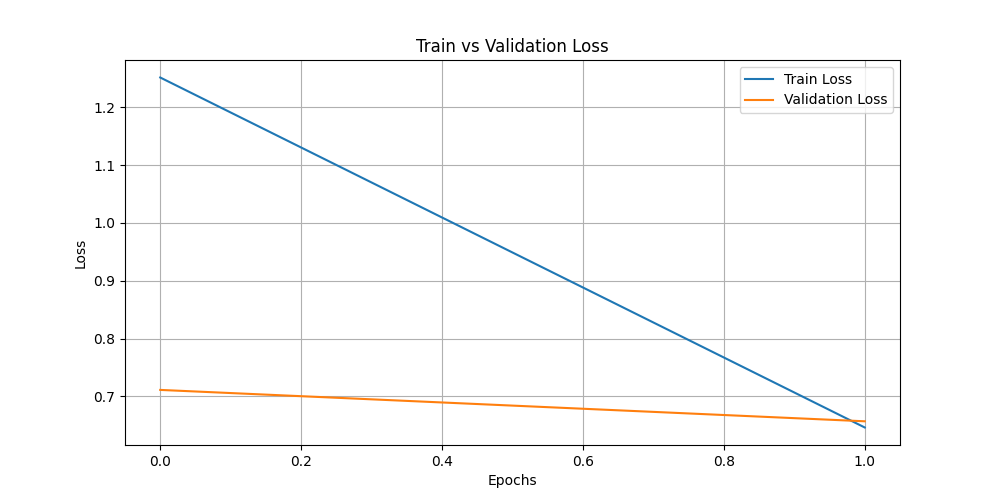

- train_vs_val_loss.png +0 -0

- trainer_state.json +108 -0

all_results.json

ADDED

@@ -0,0 +1,19 @@

```json
{
    "epoch": 3.0,
    "eval_bleu": 0.2518224574721449,
    "eval_loss": 0.6498554348945618,
    "eval_rouge1": 0.57015304568835,
    "eval_rouge2": 0.3118394078609683,
    "eval_rougeL": 0.5676740384593599,
    "eval_runtime": 143.5324,
    "eval_samples": 5405,
    "eval_samples_per_second": 37.657,
    "eval_steps_per_second": 4.71,
    "perplexity": 1.9152639286675246,
    "total_flos": 4237242236928000.0,
    "train_loss": 0.8216270929436044,
    "train_runtime": 2338.2192,
    "train_samples": 21622,
    "train_samples_per_second": 27.742,
    "train_steps_per_second": 3.468
}
```
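The reported `perplexity` is consistent with the exponential of the evaluation cross-entropy loss. A minimal check, assuming that is how the training script (not included in this commit) derived it:

```python
import math

# Values copied from all_results.json in this commit.
eval_loss = 0.6498554348945618
reported_perplexity = 1.9152639286675246

# Assumption: perplexity = exp(mean evaluation cross-entropy loss).
perplexity = math.exp(eval_loss)
print(f"exp(eval_loss) = {perplexity:.6f}, reported = {reported_perplexity:.6f}")
```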

egy_training_log.txt

CHANGED

```diff
@@ -150,3 +150,5 @@ INFO:root:Epoch 2.0: Train Loss = 1.2513, Eval Loss = 0.7111806869506836
 INFO:absl:Using default tokenizer.
 INFO:root:Epoch 3.0: Train Loss = 0.6462, Eval Loss = 0.6569304466247559
 INFO:absl:Using default tokenizer.
+INFO:__main__:*** Evaluate ***
+INFO:absl:Using default tokenizer.
```
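The per-epoch lines of the form `Epoch X: Train Loss = Y, Eval Loss = Z` can be pulled out of the log programmatically. A minimal sketch, assuming every per-epoch line uses exactly the wording shown in this hunk; the pattern and the `parse_epoch_losses` helper are illustrative names, not part of the training code:

```python
import re

# Lines copied from the egy_training_log.txt hunk above.
LOG_LINES = [
    "INFO:root:Epoch 2.0: Train Loss = 1.2513, Eval Loss = 0.7111806869506836",
    "INFO:absl:Using default tokenizer.",
    "INFO:root:Epoch 3.0: Train Loss = 0.6462, Eval Loss = 0.6569304466247559",
    "INFO:__main__:*** Evaluate ***",
]

# Assumes the exact "Epoch X: Train Loss = Y, Eval Loss = Z" wording seen above.
EPOCH_RE = re.compile(
    r"Epoch (?P<epoch>[\d.]+): Train Loss = (?P<train>[\d.]+), Eval Loss = (?P<eval>[\d.]+)"
)

def parse_epoch_losses(lines):
    """Return (epoch, train_loss, eval_loss) tuples for every matching line."""
    rows = []
    for line in lines:
        m = EPOCH_RE.search(line)
        if m:  # non-matching lines (tokenizer notices etc.) are skipped
            rows.append((float(m["epoch"]), float(m["train"]), float(m["eval"])))
    return rows

for epoch, train, ev in parse_epoch_losses(LOG_LINES):
    print(f"epoch {epoch}: train {train:.4f}, eval {ev:.4f}")
```

The values printed under "Epoch 2.0" match the epoch 1.0 entries in the `log_history` of trainer_state.json, and those under "Epoch 3.0" match epoch 2.0, so the epoch labels in this log appear to be offset by one.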

eval_results.json

ADDED

@@ -0,0 +1,13 @@

```json
{
    "epoch": 3.0,
    "eval_bleu": 0.2518224574721449,
    "eval_loss": 0.6498554348945618,
    "eval_rouge1": 0.57015304568835,
    "eval_rouge2": 0.3118394078609683,
    "eval_rougeL": 0.5676740384593599,
    "eval_runtime": 143.5324,
    "eval_samples": 5405,
    "eval_samples_per_second": 37.657,
    "eval_steps_per_second": 4.71,
    "perplexity": 1.9152639286675246
}
```
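The throughput fields in eval_results.json follow from `eval_samples` and `eval_runtime`. A sketch of the arithmetic, assuming an evaluation batch size of 8 (it is not recorded in these files; 8 is borrowed from `train_batch_size` in trainer_state.json) and rounding to the three decimals shown:

```python
import math

# Values copied from eval_results.json in this commit.
eval_samples = 5405
eval_runtime = 143.5324  # seconds

# Hypothetical: the evaluation batch size is not recorded in these files;
# 8 is assumed because it matches train_batch_size in trainer_state.json.
EVAL_BATCH_SIZE = 8

# Samples per second, rounded to the three decimals shown in the JSON.
samples_per_second = round(eval_samples / eval_runtime, 3)

# A final partial batch still counts as one evaluation step.
eval_steps = math.ceil(eval_samples / EVAL_BATCH_SIZE)
steps_per_second = round(eval_steps / eval_runtime, 3)

print(samples_per_second, eval_steps, steps_per_second)
```

Under that assumption the computed values agree with `eval_samples_per_second` and `eval_steps_per_second` above.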

train_results.json

ADDED

@@ -0,0 +1,9 @@

```json
{
    "epoch": 3.0,
    "total_flos": 4237242236928000.0,
    "train_loss": 0.8216270929436044,
    "train_runtime": 2338.2192,
    "train_samples": 21622,
    "train_samples_per_second": 27.742,
    "train_steps_per_second": 3.468
}
```
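The step counts and training throughput are consistent with 21,622 samples, 3 epochs and a batch size of 8. A sketch, assuming the effective batch size equals `train_batch_size` (one device, no gradient accumulation; the commit records neither setting):

```python
import math

# Values copied from train_results.json and trainer_state.json in this commit.
train_samples = 21622
train_runtime = 2338.2192  # seconds
num_train_epochs = 3
train_batch_size = 8

# Assumption: effective batch size == train_batch_size (one device, no
# gradient accumulation); the commit does not record either setting.
steps_per_epoch = math.ceil(train_samples / train_batch_size)
max_steps = steps_per_epoch * num_train_epochs

# Throughput over all epochs, rounded to three decimals as in the JSON.
samples_per_second = round(train_samples * num_train_epochs / train_runtime, 3)
steps_per_second = round(max_steps / train_runtime, 3)

print(steps_per_epoch, max_steps, samples_per_second, steps_per_second)
```

The resulting 2703 steps per epoch and 8109 total steps match the `step` values in `log_history` and `max_steps` in trainer_state.json.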

train_vs_val_loss.png

ADDED
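train_vs_val_loss.png is a binary file, so the diff shows no content for it. Its name suggests it plots training loss against validation loss. A minimal sketch, assuming that, of extracting both per-epoch series from the `log_history` in trainer_state.json (the plotting step itself is omitted):

```python
# Per-epoch entries copied from "log_history" in trainer_state.json
# (only the keys needed here are kept).
LOG_HISTORY = [
    {"epoch": 1.0, "loss": 1.2513, "step": 2703},
    {"epoch": 1.0, "eval_loss": 0.7111806869506836, "step": 2703},
    {"epoch": 2.0, "loss": 0.6462, "step": 5406},
    {"epoch": 2.0, "eval_loss": 0.6569304466247559, "step": 5406},
    {"epoch": 3.0, "loss": 0.5673, "step": 8109},
    {"epoch": 3.0, "eval_loss": 0.6498554348945618, "step": 8109},
]
REPORTED_TRAIN_LOSS = 0.8216270929436044  # "train_loss" in train_results.json

def loss_curves(log_history):
    """Split log_history into (epoch, train loss) and (epoch, eval loss) series."""
    train = [(e["epoch"], e["loss"]) for e in log_history if "loss" in e]
    val = [(e["epoch"], e["eval_loss"]) for e in log_history if "eval_loss" in e]
    return train, val

train_curve, val_curve = loss_curves(LOG_HISTORY)

# Training losses appear to be logged once per epoch (every 2703 steps), so their
# mean should match the overall train_loss up to the 4-decimal rounding of the logs.
mean_train_loss = sum(loss for _, loss in train_curve) / len(train_curve)

print("train:", train_curve)
print("val:  ", val_curve)
print(f"mean per-epoch loss {mean_train_loss:.4f} vs reported {REPORTED_TRAIN_LOSS:.4f}")
```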


trainer_state.json

ADDED

@@ -0,0 +1,108 @@

```json
{
  "best_metric": 0.6498554348945618,
  "best_model_checkpoint": "/home/iais_marenpielka/Bouthaina/res_nw_dj/checkpoint-8109",
  "epoch": 3.0,
  "eval_steps": 500,
  "global_step": 8109,
  "is_hyper_param_search": false,
  "is_local_process_zero": true,
  "is_world_process_zero": true,
  "log_history": [
    {
      "epoch": 1.0,
      "grad_norm": 1.3912957906723022,
      "learning_rate": 3.552372190826653e-05,
      "loss": 1.2513,
      "step": 2703
    },
    {
      "epoch": 1.0,
      "eval_bleu": 0.22005527068442493,
      "eval_loss": 0.7111806869506836,
      "eval_rouge1": 0.5171822713118965,
      "eval_rouge2": 0.253317924025756,
      "eval_rougeL": 0.5148202154962768,
      "eval_runtime": 21.8652,
      "eval_samples_per_second": 247.197,
      "eval_steps_per_second": 30.917,
      "step": 2703
    },
    {
      "epoch": 2.0,
      "grad_norm": 1.4323362112045288,
      "learning_rate": 1.7761860954133264e-05,
      "loss": 0.6462,
      "step": 5406
    },
    {
      "epoch": 2.0,
      "eval_bleu": 0.24508343939723337,
      "eval_loss": 0.6569304466247559,
      "eval_rouge1": 0.557883823201639,
      "eval_rouge2": 0.2973836473978787,
      "eval_rougeL": 0.5553146565104614,
      "eval_runtime": 150.8243,
      "eval_samples_per_second": 35.836,
      "eval_steps_per_second": 4.482,
      "step": 5406
    },
    {
      "epoch": 3.0,
      "grad_norm": 1.2555302381515503,
      "learning_rate": 0.0,
      "loss": 0.5673,
      "step": 8109
    },
    {
      "epoch": 3.0,
      "eval_bleu": 0.2518224574721449,
      "eval_loss": 0.6498554348945618,
      "eval_rouge1": 0.57015304568835,
      "eval_rouge2": 0.3118394078609683,
      "eval_rougeL": 0.5676740384593599,
      "eval_runtime": 172.9357,
      "eval_samples_per_second": 31.254,
      "eval_steps_per_second": 3.909,
      "step": 8109
    },
    {
      "epoch": 3.0,
      "step": 8109,
      "total_flos": 4237242236928000.0,
      "train_loss": 0.8216270929436044,
      "train_runtime": 2338.2192,
      "train_samples_per_second": 27.742,
      "train_steps_per_second": 3.468
    }
  ],
  "logging_steps": 500,
  "max_steps": 8109,
  "num_input_tokens_seen": 0,
  "num_train_epochs": 3,
  "save_steps": 500,
  "stateful_callbacks": {
    "EarlyStoppingCallback": {
      "args": {
        "early_stopping_patience": 5,
        "early_stopping_threshold": 0.0
      },
      "attributes": {
        "early_stopping_patience_counter": 0
      }
    },
    "TrainerControl": {
      "args": {
        "should_epoch_stop": false,
        "should_evaluate": false,
        "should_log": false,
        "should_save": true,
        "should_training_stop": true
      },
      "attributes": {}
    }
  },
  "total_flos": 4237242236928000.0,
  "train_batch_size": 8,
  "trial_name": null,
  "trial_params": null
}
```
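The logged `learning_rate` values halve from step 2703 to step 5406 and reach 0.0 at `max_steps`, which is the shape of a linear-decay schedule such as the one produced by `transformers.get_linear_schedule_with_warmup`. A sketch of that schedule; the peak rate and warmup length below are hypothetical values, not recorded in the commit, chosen because they reproduce the three logged rates:

```python
import math

# Hypothetical settings: trainer_state.json records neither the peak learning rate
# nor the warmup length. This pair reproduces the logged values; any pair with the
# same PEAK_LR / (MAX_STEPS - WARMUP_STEPS) ratio (and warmup <= 2703 steps)
# would fit equally well.
PEAK_LR = 5e-5
WARMUP_STEPS = 500
MAX_STEPS = 8109  # "max_steps" in trainer_state.json

def linear_lr(step):
    """Linear warmup from 0 to PEAK_LR, then linear decay to 0 at MAX_STEPS."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / max(1, WARMUP_STEPS)
    return PEAK_LR * max(0.0, (MAX_STEPS - step) / max(1, MAX_STEPS - WARMUP_STEPS))

# "learning_rate" values logged at the end of each epoch in log_history.
LOGGED = {2703: 3.552372190826653e-05, 5406: 1.7761860954133264e-05, 8109: 0.0}

for step, logged_lr in LOGGED.items():
    predicted = linear_lr(step)
    matches = math.isclose(predicted, logged_lr, rel_tol=1e-9, abs_tol=1e-15)
    print(step, predicted, logged_lr, matches)
```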