Commit 02648b5 (parent: 58952c1): Update README.md


|

|

| 1 |

---

|

| 2 |

license: apache-2.0

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

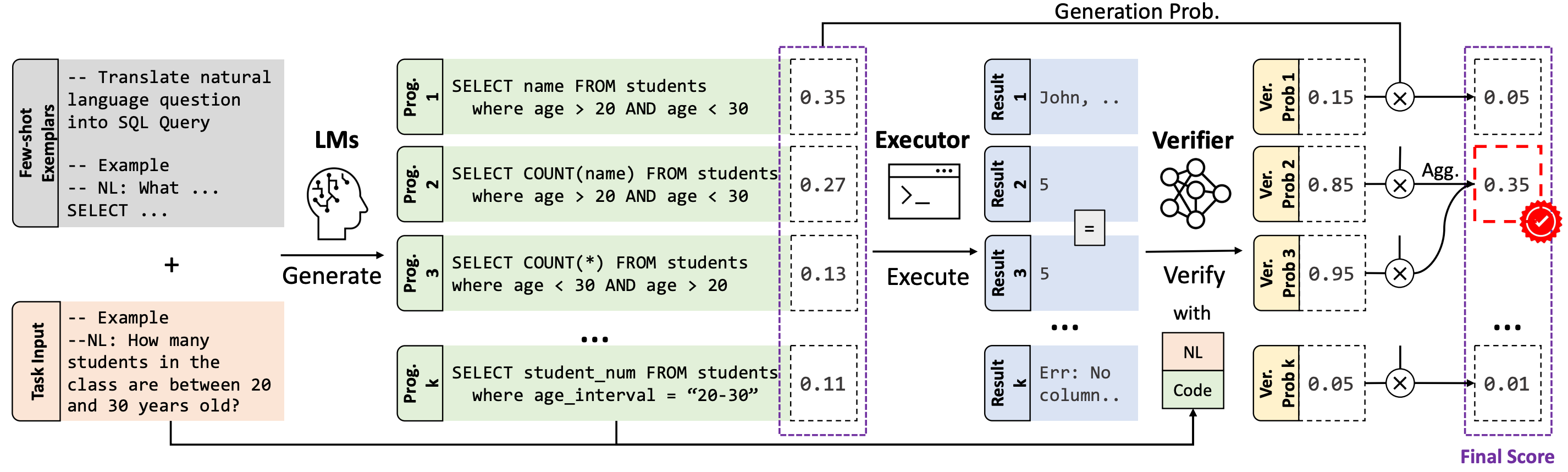

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

---
license: apache-2.0
datasets:
- wikitablequestions
metrics:
- accuracy
model-index:
- name: lever-wikitq-codex
  results:
  - task:
      type: code generation # Required. Example: automatic-speech-recognition
      # name: {task_name} # Optional. Example: Speech Recognition
    dataset:
      type: wikitablequestions # Required. Example: common_voice. Use dataset id from https://hf.co/datasets
      name: WikiTQ (text-to-sql) # Required. A pretty name for the dataset. Example: Common Voice (French)
      # config: {dataset_config} # Optional. The name of the dataset configuration used in `load_dataset()`. Example: fr in `load_dataset("common_voice", "fr")`. See the `datasets` docs for more info: https://huggingface.co/docs/datasets/package_reference/loading_methods#datasets.load_dataset.name
      # split: {dataset_split} # Optional. Example: test
      # revision: {dataset_revision} # Optional. Example: 5503434ddd753f426f4b38109466949a1217c2bb
      # args:
        # {arg_0}: {value_0} # Optional. Additional arguments to `load_dataset()`. Example for wikipedia: language: en
        # {arg_1}: {value_1} # Optional. Example for wikipedia: date: 20220301
    metrics:
      - type: accuracy # Required. Example: wer. Use metric id from https://hf.co/metrics
        value: 65.8 # Required. Example: 20.90
        # name: {metric_name} # Optional. Example: Test WER
        # config: {metric_config} # Optional. The name of the metric configuration used in `load_metric()`. Example: bleurt-large-512 in `load_metric("bleurt", "bleurt-large-512")`. See the `datasets` docs for more info: https://huggingface.co/docs/datasets/v2.1.0/en/loading#load-configurations
        # args:
          # {arg_0}: {value_0} # Optional. The arguments passed during `Metric.compute()`. Example for `bleu`: max_order: 4
        verified: false # Optional. If true, indicates that evaluation was generated by Hugging Face (vs. self-reported).
---

# LEVER (for Codex on WikiTQ)

This is one of the models produced by the paper ["LEVER: Learning to Verify Language-to-Code Generation with Execution"](https://arxiv.org/abs/2302.08468).

**Authors:** [Ansong Ni](https://niansong1996.github.io), Srini Iyer, Dragomir Radev, Ves Stoyanov, Wen-tau Yih, Sida I. Wang\*, Xi Victoria Lin\*

# Model Details

## Model Description

The advent of pre-trained code language models (Code LLMs) has led to significant progress in language-to-code generation. State-of-the-art approaches in this area combine Code LLM decoding with sample pruning and reranking using test cases or heuristics based on the execution results. However, it is challenging to obtain test cases for many real-world language-to-code applications, and heuristics cannot capture well the semantic features of the execution results, such as data type and value range, which often indicate the correctness of the program. In this work, we propose LEVER, a simple approach to improve language-to-code generation by learning to verify the generated programs with their execution results. Specifically, we train verifiers to determine whether a program sampled from the Code LLM is correct based on the natural language input, the program itself, and its execution results. The sampled programs are reranked by combining the verification score with the Code LLM generation probability and marginalizing over programs with the same execution results. On four datasets across the domains of table QA, math QA, and basic Python programming, LEVER consistently improves over the base Code LLMs (by 4.6% to 10.9% with code-davinci-002) and achieves new state-of-the-art results on all of them.
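
The reranking step described above can be sketched in Python as follows. This is a minimal illustration, not code from the LEVER repository. It assumes that the verification score and the generation probability are combined by multiplication, that execution results are hashable (e.g., a tuple of result rows), and that the verifier's "yes"/"no" label scores can be turned into a probability with a two-way softmax. `Candidate`, `yes_probability`, and `rerank` are hypothetical names.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Hashable, List


@dataclass
class Candidate:
    program: str           # a program (e.g., SQL) sampled from the code LLM
    gen_prob: float        # P_LM(program | question), assumed to be given
    verifier_prob: float   # verifier's P("yes" | question, program, execution result)
    exec_result: Hashable  # hashable execution result, e.g. a tuple of rows


def yes_probability(yes_logit: float, no_logit: float) -> float:
    """Hypothetical helper: two-way softmax over the "yes"/"no" label scores."""
    return 1.0 / (1.0 + math.exp(no_logit - yes_logit))


def rerank(candidates: List[Candidate]) -> Candidate:
    """Pick a program by summing (assumed) joint scores over identical execution results."""
    if not candidates:
        raise ValueError("need at least one candidate")
    # Joint score per program: generation probability times verification probability.
    result_scores = defaultdict(float)
    for c in candidates:
        result_scores[c.exec_result] += c.gen_prob * c.verifier_prob
    # The execution result with the largest total (marginalized) score wins ...
    best_result = max(result_scores, key=result_scores.get)
    # ... and we return its highest-scoring individual program.
    return max(
        (c for c in candidates if c.exec_result == best_result),
        key=lambda c: c.gen_prob * c.verifier_prob,
    )


samples = [
    Candidate("SELECT name FROM t WHERE year = 2004", 0.30, 0.20, ("Alice",)),
    Candidate("SELECT name FROM t ORDER BY year DESC LIMIT 1", 0.25, 0.90, ("Bob",)),
    Candidate("SELECT name FROM t WHERE year = (SELECT MAX(year) FROM t)", 0.20, 0.85, ("Bob",)),
]
print(rerank(samples).program)
```

In this toy input, the sample with the highest generation probability returns `("Alice",)`, but the two programs that agree on `("Bob",)` accumulate more combined score (0.225 + 0.17 = 0.395 versus 0.06), so a program returning `("Bob",)` is selected.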

- **Developed by:** Yale LILY Lab and Meta AI
- **Shared by:** Yale LILY Lab
- **Model type:** Text classification (binary "yes"/"no" program verification)
- **Language(s) (NLP):** More information needed
- **License:** Apache-2.0
- **Parent model:** T5-base
- **Resources for more information:**
  - [GitHub repo](https://github.com/niansong1996/lever)
  - [Associated paper](https://arxiv.org/abs/2302.08468)

# Uses

## Direct Use

This model is *not* intended for direct use. LEVER is used to verify and rerank the programs generated by code LLMs (e.g., Codex). We recommend checking out our [GitHub repo](https://github.com/niansong1996/lever) for more details.

## Downstream Use

LEVER learns to verify and rerank the programs sampled from code LLMs for different tasks.
Specifically, `lever-wikitq-codex` was trained on the outputs of `code-davinci-002` on the [WikiTQ](https://github.com/ppasupat/WikiTableQuestions) dataset, and it can be used out of the box to rerank the SQL programs generated by Codex.
It may also be applied to other models' outputs on the WikiTQ dataset, as studied in the [original paper](https://arxiv.org/abs/2302.08468).

## Out-of-Scope Use

The model should not be used to intentionally create hostile or alienating environments for people.

# Bias, Risks, and Limitations

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)). Predictions generated by the model may include disturbing and harmful stereotypes across protected classes; identity characteristics; and sensitive, social, and occupational groups.

## Recommendations

Users (both direct and downstream) should be made aware of the risks, biases, and limitations of the model. More information is needed for further recommendations.

# Training Details

## Training Data

The model is trained on the outputs of the `code-davinci-002` model on the [WikiTQ](https://github.com/ppasupat/WikiTableQuestions) dataset.

## Training Procedure

For each training example of the WikiTQ dataset, 20 program samples are drawn from the Codex model; these programs are then executed to obtain their execution information.
For each example and each of its program samples, the natural language description, the program, and its execution information together form the input used to train the T5-based model to predict "yes" or "no" as the verification label.
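
The procedure above can be sketched for a single question as follows. The sketch assumes that a sample is labeled "yes" exactly when its execution result equals the gold answer, and it uses a made-up text serialization of the input. `VerifierExample`, `build_verifier_examples`, and `fake_execute` are hypothetical names; the real sampling (20 Codex samples per example), execution, and input format are defined in the [GitHub repo](https://github.com/niansong1996/lever).

```python
from dataclasses import dataclass
from typing import Callable, Hashable, List, Sequence


@dataclass
class VerifierExample:
    input_text: str  # serialized (question, program, execution result)
    target: str      # "yes" or "no"


def build_verifier_examples(
    question: str,
    programs: Sequence[str],
    execute: Callable[[str], Hashable],
    gold_answer: Hashable,
) -> List[VerifierExample]:
    """Turn the sampled programs for one question into yes/no training examples."""
    examples = []
    for program in programs:
        try:
            result = execute(program)
        except Exception as err:  # keep failed executions, recording the error type
            result = f"ERROR: {type(err).__name__}"
        # Assumption: label "yes" iff the execution result matches the gold answer.
        label = "yes" if result == gold_answer else "no"
        # Assumption: a simple serialization; the actual input format is in the LEVER repo.
        input_text = f"question: {question} | program: {program} | execution: {result}"
        examples.append(VerifierExample(input_text=input_text, target=label))
    return examples


# Toy usage: a dict lookup stands in for real SQL execution against a WikiTQ table.
fake_db = {"SELECT a FROM t": "1", "SELECT b FROM t": "2"}


def fake_execute(program: str) -> str:
    return fake_db[program]  # raises KeyError for unknown programs


examples = build_verifier_examples(
    "Which value is 2?",
    ["SELECT a FROM t", "SELECT b FROM t", "SELECT c FROM t"],
    fake_execute,
    gold_answer="2",
)
for ex in examples:
    print(ex.target, "|", ex.input_text)
```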

### Preprocessing

Please follow the instructions in the [GitHub repo](https://github.com/niansong1996/lever) to reproduce the results.

### Speeds, Sizes, Times

More information needed

# Evaluation

## Testing Data, Factors & Metrics

### Testing Data

The dev and test sets of the [WikiTQ](https://github.com/ppasupat/WikiTableQuestions) dataset.

### Factors

More information needed

### Metrics

Execution accuracy (i.e., pass@1)
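
As a rough illustration of the metric, execution accuracy is the percentage of examples whose top-ranked program executes to the gold answer. This sketch compares results with exact equality; the official WikiTQ evaluator applies its own answer normalization, which is not reproduced here. `execution_accuracy` is a hypothetical name.

```python
from typing import Sequence


def execution_accuracy(predicted: Sequence[object], gold: Sequence[object]) -> float:
    """Percentage of examples whose selected program's execution result equals the gold answer."""
    if len(predicted) != len(gold):
        raise ValueError("expected one prediction per example")
    if not gold:
        return 0.0
    correct = sum(1 for p, g in zip(predicted, gold) if p == g)
    return 100.0 * correct / len(gold)


# Execution results of the top-1 (reranked) program for three toy questions.
predicted = [("Bob",), ("3",), ("1999",)]
gold = [("Bob",), ("4",), ("1999",)]
print(f"{execution_accuracy(predicted, gold):.1f}")
```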

## Results

### WikiTQ Text-to-SQL Generation

| Model       | Exec. Acc. (Dev, %) | Exec. Acc. (Test, %) |
|-------------|--------------------:|---------------------:|
| Codex       | 49.6                | 53.0                 |
| Codex+LEVER | 64.6                | 65.8                 |

# Model Examination

More information needed

# Environmental Impact

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** More information needed
- **Hours used:** More information needed
- **Cloud Provider:** More information needed
- **Compute Region:** More information needed
- **Carbon Emitted:** More information needed
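
A back-of-the-envelope version of such an estimate multiplies the energy used (hardware power times hours used) by the carbon intensity of the compute region's grid. The inputs below are placeholders, not measurements for this model, and the calculator itself may apply additional factors. `estimate_co2_kg` is a hypothetical name.

```python
def estimate_co2_kg(power_kw: float, hours: float, grid_kg_per_kwh: float) -> float:
    """Rough CO2-equivalent estimate: energy used (kWh) times grid carbon intensity."""
    if min(power_kw, hours, grid_kg_per_kwh) < 0:
        raise ValueError("inputs must be non-negative")
    energy_kwh = power_kw * hours
    return energy_kwh * grid_kg_per_kwh


# Placeholder inputs only; the actual hardware, hours, and region for this model are unknown.
print(f"{estimate_co2_kg(power_kw=0.3, hours=10.0, grid_kg_per_kwh=0.4):.2f} kg CO2eq")
```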

# Technical Specifications

## Model Architecture and Objective

`lever-wikitq-codex` is based on T5-base and is trained to predict "yes" or "no" as the verification label for a given natural language input, program, and execution result.

## Compute Infrastructure

More information needed

### Hardware

More information needed

### Software

More information needed

# Citation

**BibTeX:**

```bibtex
@inproceedings{ni2023lever,
  title={{LEVER}: Learning to Verify Language-to-Code Generation with Execution},
  author={Ni, Ansong and Iyer, Srini and Radev, Dragomir and Stoyanov, Ves and Yih, Wen-tau and Wang, Sida I and Lin, Xi Victoria},
  booktitle={Proceedings of the 40th International Conference on Machine Learning (ICML'23)},
  year={2023}
}
```

# Glossary

More information needed

# More Information

More information needed

# Model Card Authors

Yale LILY Lab in collaboration with Ezi Ozoani and the Hugging Face team

# Model Card Contact

Ansong Ni (contact information: [personal website](https://niansong1996.github.io))

# How to Get Started with the Model

This model is *not* intended for direct use. Please follow the instructions in the [GitHub repo](https://github.com/niansong1996/lever).