complete the model package

Browse files- README.md +90 -0

- configs/inference.json +138 -0

- configs/logging.conf +21 -0

- configs/metadata.json +82 -0

- docs/README.md +83 -0

- docs/demos.png +0 -0

- docs/license.txt +4 -0

- docs/renal.png +0 -0

- docs/unest.png +0 -0

- docs/val_dice.png +0 -0

- models/model.pt +3 -0

- scripts/__init__.py +10 -0

- scripts/__pycache__/__init__.cpython-38.pyc +0 -0

- scripts/networks/__init__.py +10 -0

- scripts/networks/__pycache__/__init__.cpython-38.pyc +0 -0

- scripts/networks/__pycache__/nest_transformer_3D.cpython-38.pyc +0 -0

- scripts/networks/__pycache__/patchEmbed3D.cpython-38.pyc +0 -0

- scripts/networks/__pycache__/unest.cpython-38.pyc +0 -0

- scripts/networks/__pycache__/unest_block.cpython-38.pyc +0 -0

- scripts/networks/nest/__init__.py +16 -0

- scripts/networks/nest/__pycache__/__init__.cpython-38.pyc +0 -0

- scripts/networks/nest/__pycache__/utils.cpython-38.pyc +0 -0

- scripts/networks/nest/utils.py +485 -0

- scripts/networks/nest_transformer_3D.py +489 -0

- scripts/networks/patchEmbed3D.py +190 -0

- scripts/networks/unest.py +274 -0

- scripts/networks/unest_block.py +245 -0

README.md

ADDED

|

@@ -0,0 +1,90 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

tags:

|

| 3 |

+

- monai

|

| 4 |

+

- medical

|

| 5 |

+

library_name: monai

|

| 6 |

+

license: unknown

|

| 7 |

+

---

|

| 8 |

+

# Description

|

| 9 |

+

A pre-trained model for inferencing volumetric (3D) kidney substructures segmentation from contrast-enhanced CT images (Arterial/Portal Venous Phase).

|

| 10 |

+

A tutorial and release of model for kidney cortex, medulla and collecting system segmentation.

|

| 11 |

+

|

| 12 |

+

Authors: Yinchi Zhou (yinchi.zhou@vanderbilt.edu) | Xin Yu (xin.yu@vanderbilt.edu) | Yucheng Tang (yuchengt@nvidia.com) |

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

# Model Overview

|

| 16 |

+

A pre-trained UNEST base model [1] for volumetric (3D) renal structures segmentation using dynamic contrast enhanced arterial or venous phase CT images.

|

| 17 |

+

|

| 18 |

+

## Data

|

| 19 |

+

The training data is from the [ImageVU RenalSeg dataset] from Vanderbilt University and Vanderbilt University Medical Center.

|

| 20 |

+

(The training data is not public available yet).

|

| 21 |

+

|

| 22 |

+

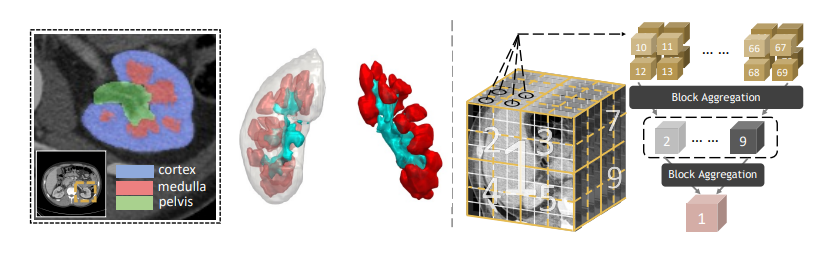

- Target: Renal Cortex | Medulla | Pelvis Collecting System

|

| 23 |

+

- Task: Segmentation

|

| 24 |

+

- Modality: CT (Artrial | Venous phase)

|

| 25 |

+

- Size: 96 3D volumes

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

The data and segmentation demonstration is as follow:

|

| 29 |

+

|

| 30 |

+

<br>

|

| 31 |

+

|

| 32 |

+

## Method and Network

|

| 33 |

+

|

| 34 |

+

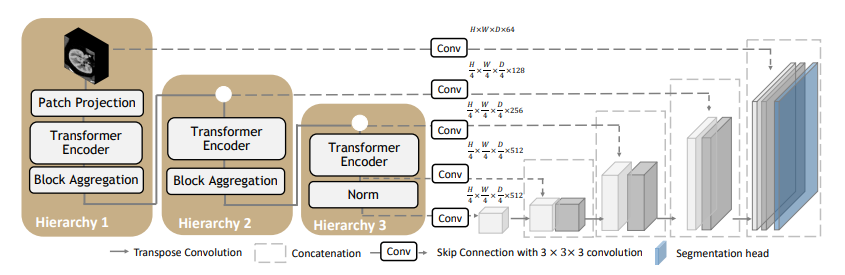

The UNEST model is a 3D hierarchical transformer-based semgnetation network.

|

| 35 |

+

|

| 36 |

+

Details of the architecture:

|

| 37 |

+

<br>

|

| 38 |

+

|

| 39 |

+

## Training configuration

|

| 40 |

+

The training was performed with at least one 16GB-memory GPU.

|

| 41 |

+

|

| 42 |

+

Actual Model Input: 96 x 96 x 96

|

| 43 |

+

|

| 44 |

+

## Input and output formats

|

| 45 |

+

Input: 1 channel CT image

|

| 46 |

+

|

| 47 |

+

Output: 4: 0:Background, 1:Renal Cortex, 2:Medulla, 3:Pelvicalyceal System

|

| 48 |

+

|

| 49 |

+

## Performance

|

| 50 |

+

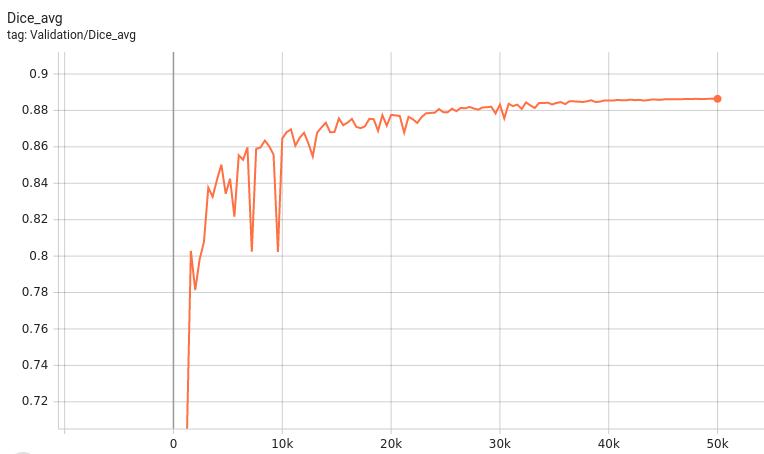

A graph showing the validation mean Dice for 5000 epochs.

|

| 51 |

+

|

| 52 |

+

<br>

|

| 53 |

+

|

| 54 |

+

This model achieves the following Dice score on the validation data (our own split from the training dataset):

|

| 55 |

+

|

| 56 |

+

Mean Valdiation Dice = 0.8523

|

| 57 |

+

|

| 58 |

+

Note that mean dice is computed in the original spacing of the input data.

|

| 59 |

+

|

| 60 |

+

## commands example

|

| 61 |

+

Download trained checkpoint model to ./model/model.pt:

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

Add scripts component: To run the workflow with customized components, PYTHONPATH should be revised to include the path to the customized component:

|

| 65 |

+

|

| 66 |

+

```

|

| 67 |

+

export PYTHONPATH=$PYTHONPATH:"'<path to the bundle root dir>/scripts'"

|

| 68 |

+

|

| 69 |

+

```

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

Execute inference:

|

| 73 |

+

|

| 74 |

+

```

|

| 75 |

+

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf

|

| 76 |

+

```

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

## More examples output

|

| 80 |

+

|

| 81 |

+

<br>

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

# Disclaimer

|

| 85 |

+

This is an example, not to be used for diagnostic purposes.

|

| 86 |

+

|

| 87 |

+

# References

|

| 88 |

+

[1] Yu, Xin, Yinchi Zhou, Yucheng Tang et al. "Characterizing Renal Structures with 3D Block Aggregate Transformers." arXiv preprint arXiv:2203.02430 (2022). https://arxiv.org/pdf/2203.02430.pdf

|

| 89 |

+

|

| 90 |

+

[2] Zizhao Zhang et al. "Nested Hierarchical Transformer: Towards Accurate, Data-Efficient and Interpretable Visual Understanding." AAAI Conference on Artificial Intelligence (AAAI) 2022

|

configs/inference.json

ADDED

|

@@ -0,0 +1,138 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"imports": [

|

| 3 |

+

"$import glob",

|

| 4 |

+

"$import os"

|

| 5 |

+

],

|

| 6 |

+

"bundle_root": "/models/renalStructures_UNEST_segmentation",

|

| 7 |

+

"output_dir": "$@bundle_root + '/eval'",

|

| 8 |

+

"dataset_dir": "$@bundle_root + './dataset/spleen'",

|

| 9 |

+

"datalist": "$list(sorted(glob.glob(@dataset_dir + '/*.nii.gz')))",

|

| 10 |

+

"device": "$torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')",

|

| 11 |

+

"network_def": {

|

| 12 |

+

"_target_": "scripts.networks.unest.UNesT",

|

| 13 |

+

"in_channels": 1,

|

| 14 |

+

"out_channels": 4

|

| 15 |

+

},

|

| 16 |

+

"network": "$@network_def.to(@device)",

|

| 17 |

+

"preprocessing": {

|

| 18 |

+

"_target_": "Compose",

|

| 19 |

+

"transforms": [

|

| 20 |

+

{

|

| 21 |

+

"_target_": "LoadImaged",

|

| 22 |

+

"keys": "image"

|

| 23 |

+

},

|

| 24 |

+

{

|

| 25 |

+

"_target_": "AddChanneld",

|

| 26 |

+

"keys": "image"

|

| 27 |

+

},

|

| 28 |

+

{

|

| 29 |

+

"_target_": "Orientationd",

|

| 30 |

+

"keys": "image",

|

| 31 |

+

"axcodes": "RAS"

|

| 32 |

+

},

|

| 33 |

+

{

|

| 34 |

+

"_target_": "Spacingd",

|

| 35 |

+

"keys": "image",

|

| 36 |

+

"pixdim": [

|

| 37 |

+

1.0,

|

| 38 |

+

1.0,

|

| 39 |

+

1.0

|

| 40 |

+

],

|

| 41 |

+

"mode": "bilinear"

|

| 42 |

+

},

|

| 43 |

+

{

|

| 44 |

+

"_target_": "ScaleIntensityRanged",

|

| 45 |

+

"keys": "image",

|

| 46 |

+

"a_min": -175,

|

| 47 |

+

"a_max": 250,

|

| 48 |

+

"b_min": 0.0,

|

| 49 |

+

"b_max": 1.0,

|

| 50 |

+

"clip": true

|

| 51 |

+

},

|

| 52 |

+

{

|

| 53 |

+

"_target_": "EnsureTyped",

|

| 54 |

+

"keys": "image"

|

| 55 |

+

}

|

| 56 |

+

]

|

| 57 |

+

},

|

| 58 |

+

"dataset": {

|

| 59 |

+

"_target_": "Dataset",

|

| 60 |

+

"data": "$[{'image': i} for i in @datalist]",

|

| 61 |

+

"transform": "@preprocessing"

|

| 62 |

+

},

|

| 63 |

+

"dataloader": {

|

| 64 |

+

"_target_": "DataLoader",

|

| 65 |

+

"dataset": "@dataset",

|

| 66 |

+

"batch_size": 1,

|

| 67 |

+

"shuffle": false,

|

| 68 |

+

"num_workers": 4

|

| 69 |

+

},

|

| 70 |

+

"inferer": {

|

| 71 |

+

"_target_": "SlidingWindowInferer",

|

| 72 |

+

"roi_size": [

|

| 73 |

+

96,

|

| 74 |

+

96,

|

| 75 |

+

96

|

| 76 |

+

],

|

| 77 |

+

"sw_batch_size": 4,

|

| 78 |

+

"overlap": 0.5

|

| 79 |

+

},

|

| 80 |

+

"postprocessing": {

|

| 81 |

+

"_target_": "Compose",

|

| 82 |

+

"transforms": [

|

| 83 |

+

{

|

| 84 |

+

"_target_": "Activationsd",

|

| 85 |

+

"keys": "pred",

|

| 86 |

+

"softmax": true

|

| 87 |

+

},

|

| 88 |

+

{

|

| 89 |

+

"_target_": "Invertd",

|

| 90 |

+

"keys": "pred",

|

| 91 |

+

"transform": "@preprocessing",

|

| 92 |

+

"orig_keys": "image",

|

| 93 |

+

"meta_key_postfix": "meta_dict",

|

| 94 |

+

"nearest_interp": false,

|

| 95 |

+

"to_tensor": true

|

| 96 |

+

},

|

| 97 |

+

{

|

| 98 |

+

"_target_": "AsDiscreted",

|

| 99 |

+

"keys": "pred",

|

| 100 |

+

"argmax": true

|

| 101 |

+

},

|

| 102 |

+

{

|

| 103 |

+

"_target_": "SaveImaged",

|

| 104 |

+

"keys": "pred",

|

| 105 |

+

"meta_keys": "pred_meta_dict",

|

| 106 |

+

"output_dir": "@output_dir"

|

| 107 |

+

}

|

| 108 |

+

]

|

| 109 |

+

},

|

| 110 |

+

"handlers": [

|

| 111 |

+

{

|

| 112 |

+

"_target_": "CheckpointLoader",

|

| 113 |

+

"load_path": "$@bundle_root + '/models/model.pt'",

|

| 114 |

+

"load_dict": {

|

| 115 |

+

"state_dict": "@network"

|

| 116 |

+

},

|

| 117 |

+

"strict": "True"

|

| 118 |

+

},

|

| 119 |

+

{

|

| 120 |

+

"_target_": "StatsHandler",

|

| 121 |

+

"iteration_log": false

|

| 122 |

+

}

|

| 123 |

+

],

|

| 124 |

+

"evaluator": {

|

| 125 |

+

"_target_": "SupervisedEvaluator",

|

| 126 |

+

"device": "@device",

|

| 127 |

+

"val_data_loader": "@dataloader",

|

| 128 |

+

"network": "@network",

|

| 129 |

+

"inferer": "@inferer",

|

| 130 |

+

"postprocessing": "@postprocessing",

|

| 131 |

+

"val_handlers": "@handlers",

|

| 132 |

+

"amp": false

|

| 133 |

+

},

|

| 134 |

+

"evaluating": [

|

| 135 |

+

"$setattr(torch.backends.cudnn, 'benchmark', True)",

|

| 136 |

+

"$@evaluator.run()"

|

| 137 |

+

]

|

| 138 |

+

}

|

configs/logging.conf

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[loggers]

|

| 2 |

+

keys=root

|

| 3 |

+

|

| 4 |

+

[handlers]

|

| 5 |

+

keys=consoleHandler

|

| 6 |

+

|

| 7 |

+

[formatters]

|

| 8 |

+

keys=fullFormatter

|

| 9 |

+

|

| 10 |

+

[logger_root]

|

| 11 |

+

level=INFO

|

| 12 |

+

handlers=consoleHandler

|

| 13 |

+

|

| 14 |

+

[handler_consoleHandler]

|

| 15 |

+

class=StreamHandler

|

| 16 |

+

level=INFO

|

| 17 |

+

formatter=fullFormatter

|

| 18 |

+

args=(sys.stdout,)

|

| 19 |

+

|

| 20 |

+

[formatter_fullFormatter]

|

| 21 |

+

format=%(asctime)s - %(name)s - %(levelname)s - %(message)s

|

configs/metadata.json

ADDED

|

@@ -0,0 +1,82 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

+

"version": "0.1.0",

|

| 4 |

+

"changelog": {

|

| 5 |

+

"0.1.0": "complete the model package",

|

| 6 |

+

"0.0.1": "initialize the model package structure"

|

| 7 |

+

},

|

| 8 |

+

"monai_version": "0.9.0",

|

| 9 |

+

"pytorch_version": "1.10.0",

|

| 10 |

+

"numpy_version": "1.21.2",

|

| 11 |

+

"optional_packages_version": {

|

| 12 |

+

"nibabel": "3.2.1",

|

| 13 |

+

"pytorch-ignite": "0.4.8",

|

| 14 |

+

"einops": "0.4.1",

|

| 15 |

+

"fire": "0.4.0",

|

| 16 |

+

"timm": "0.6.7"

|

| 17 |

+

},

|

| 18 |

+

"task": "Renal segmentation",

|

| 19 |

+

"description": "A transformer-based model for renal segmentation from CT image",

|

| 20 |

+

"authors": "Vanderbilt University + MONAI team",

|

| 21 |

+

"copyright": "Copyright (c) MONAI Consortium",

|

| 22 |

+

"data_source": "RawData.zip",

|

| 23 |

+

"data_type": "nibabel",

|

| 24 |

+

"image_classes": "single channel data, intensity scaled to [0, 1]",

|

| 25 |

+

"label_classes": "1: Kideny Cortex, 2:Medulla, 3:Pelvicalyceal system",

|

| 26 |

+

"pred_classes": "1: Kideny Cortex, 2:Medulla, 3:Pelvicalyceal system",

|

| 27 |

+

"eval_metrics": {

|

| 28 |

+

"mean_dice": 0.85

|

| 29 |

+

},

|

| 30 |

+

"intended_use": "This is an example, not to be used for diagnostic purposes",

|

| 31 |

+

"references": [

|

| 32 |

+

"Tang, Yucheng, et al. 'Self-supervised pre-training of swin transformers for 3d medical image analysis. arXiv preprint arXiv:2111.14791 (2021). https://arxiv.org/abs/2111.14791."

|

| 33 |

+

],

|

| 34 |

+

"network_data_format": {

|

| 35 |

+

"inputs": {

|

| 36 |

+

"image": {

|

| 37 |

+

"type": "image",

|

| 38 |

+

"format": "hounsfield",

|

| 39 |

+

"modality": "CT",

|

| 40 |

+

"num_channels": 1,

|

| 41 |

+

"spatial_shape": [

|

| 42 |

+

96,

|

| 43 |

+

96,

|

| 44 |

+

96

|

| 45 |

+

],

|

| 46 |

+

"dtype": "float32",

|

| 47 |

+

"value_range": [

|

| 48 |

+

0,

|

| 49 |

+

1

|

| 50 |

+

],

|

| 51 |

+

"is_patch_data": true,

|

| 52 |

+

"channel_def": {

|

| 53 |

+

"0": "image"

|

| 54 |

+

}

|

| 55 |

+

}

|

| 56 |

+

},

|

| 57 |

+

"outputs": {

|

| 58 |

+

"pred": {

|

| 59 |

+

"type": "image",

|

| 60 |

+

"format": "segmentation",

|

| 61 |

+

"num_channels": 4,

|

| 62 |

+

"spatial_shape": [

|

| 63 |

+

96,

|

| 64 |

+

96,

|

| 65 |

+

96

|

| 66 |

+

],

|

| 67 |

+

"dtype": "float32",

|

| 68 |

+

"value_range": [

|

| 69 |

+

0,

|

| 70 |

+

1

|

| 71 |

+

],

|

| 72 |

+

"is_patch_data": true,

|

| 73 |

+

"channel_def": {

|

| 74 |

+

"0": "background",

|

| 75 |

+

"1": "kidney cortex",

|

| 76 |

+

"2": "medulla",

|

| 77 |

+

"3": "pelvicalyceal system"

|

| 78 |

+

}

|

| 79 |

+

}

|

| 80 |

+

}

|

| 81 |

+

}

|

| 82 |

+

}

|

docs/README.md

ADDED

|

@@ -0,0 +1,83 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Description

|

| 2 |

+

A pre-trained model for inferencing volumetric (3D) kidney substructures segmentation from contrast-enhanced CT images (Arterial/Portal Venous Phase).

|

| 3 |

+

A tutorial and release of model for kidney cortex, medulla and collecting system segmentation.

|

| 4 |

+

|

| 5 |

+

Authors: Yinchi Zhou (yinchi.zhou@vanderbilt.edu) | Xin Yu (xin.yu@vanderbilt.edu) | Yucheng Tang (yuchengt@nvidia.com) |

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

# Model Overview

|

| 9 |

+

A pre-trained UNEST base model [1] for volumetric (3D) renal structures segmentation using dynamic contrast enhanced arterial or venous phase CT images.

|

| 10 |

+

|

| 11 |

+

## Data

|

| 12 |

+

The training data is from the [ImageVU RenalSeg dataset] from Vanderbilt University and Vanderbilt University Medical Center.

|

| 13 |

+

(The training data is not public available yet).

|

| 14 |

+

|

| 15 |

+

- Target: Renal Cortex | Medulla | Pelvis Collecting System

|

| 16 |

+

- Task: Segmentation

|

| 17 |

+

- Modality: CT (Artrial | Venous phase)

|

| 18 |

+

- Size: 96 3D volumes

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

The data and segmentation demonstration is as follow:

|

| 22 |

+

|

| 23 |

+

<br>

|

| 24 |

+

|

| 25 |

+

## Method and Network

|

| 26 |

+

|

| 27 |

+

The UNEST model is a 3D hierarchical transformer-based semgnetation network.

|

| 28 |

+

|

| 29 |

+

Details of the architecture:

|

| 30 |

+

<br>

|

| 31 |

+

|

| 32 |

+

## Training configuration

|

| 33 |

+

The training was performed with at least one 16GB-memory GPU.

|

| 34 |

+

|

| 35 |

+

Actual Model Input: 96 x 96 x 96

|

| 36 |

+

|

| 37 |

+

## Input and output formats

|

| 38 |

+

Input: 1 channel CT image

|

| 39 |

+

|

| 40 |

+

Output: 4: 0:Background, 1:Renal Cortex, 2:Medulla, 3:Pelvicalyceal System

|

| 41 |

+

|

| 42 |

+

## Performance

|

| 43 |

+

A graph showing the validation mean Dice for 5000 epochs.

|

| 44 |

+

|

| 45 |

+

<br>

|

| 46 |

+

|

| 47 |

+

This model achieves the following Dice score on the validation data (our own split from the training dataset):

|

| 48 |

+

|

| 49 |

+

Mean Valdiation Dice = 0.8523

|

| 50 |

+

|

| 51 |

+

Note that mean dice is computed in the original spacing of the input data.

|

| 52 |

+

|

| 53 |

+

## commands example

|

| 54 |

+

Download trained checkpoint model to ./model/model.pt:

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

Add scripts component: To run the workflow with customized components, PYTHONPATH should be revised to include the path to the customized component:

|

| 58 |

+

|

| 59 |

+

```

|

| 60 |

+

export PYTHONPATH=$PYTHONPATH:"'<path to the bundle root dir>/scripts'"

|

| 61 |

+

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

Execute inference:

|

| 66 |

+

|

| 67 |

+

```

|

| 68 |

+

python -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file configs/inference.json --logging_file configs/logging.conf

|

| 69 |

+

```

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

## More examples output

|

| 73 |

+

|

| 74 |

+

<br>

|

| 75 |

+

|

| 76 |

+

|

| 77 |

+

# Disclaimer

|

| 78 |

+

This is an example, not to be used for diagnostic purposes.

|

| 79 |

+

|

| 80 |

+

# References

|

| 81 |

+

[1] Yu, Xin, Yinchi Zhou, Yucheng Tang et al. "Characterizing Renal Structures with 3D Block Aggregate Transformers." arXiv preprint arXiv:2203.02430 (2022). https://arxiv.org/pdf/2203.02430.pdf

|

| 82 |

+

|

| 83 |

+

[2] Zizhao Zhang et al. "Nested Hierarchical Transformer: Towards Accurate, Data-Efficient and Interpretable Visual Understanding." AAAI Conference on Artificial Intelligence (AAAI) 2022

|

docs/demos.png

ADDED

|

docs/license.txt

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Third Party Licenses

|

| 2 |

+

-----------------------------------------------------------------------

|

| 3 |

+

|

| 4 |

+

/*********************************************************************/

|

docs/renal.png

ADDED

|

docs/unest.png

ADDED

|

docs/val_dice.png

ADDED

|

models/model.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:8928e88771d31945c51d1b302a8448825e6f9861a543a6e1023acb9576840962

|

| 3 |

+

size 348887167

|

scripts/__init__.py

ADDED

|

@@ -0,0 +1,10 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright (c) MONAI Consortium

|

| 2 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 3 |

+

# you may not use this file except in compliance with the License.

|

| 4 |

+

# You may obtain a copy of the License at

|

| 5 |

+

# http://www.apache.org/licenses/LICENSE-2.0

|

| 6 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 7 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 8 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 9 |

+

# See the License for the specific language governing permissions and

|

| 10 |

+

# limitations under the License.

|

scripts/__pycache__/__init__.cpython-38.pyc

ADDED

|

Binary file (192 Bytes). View file

|

|

|

scripts/networks/__init__.py

ADDED

|

@@ -0,0 +1,10 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright (c) MONAI Consortium

|

| 2 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 3 |

+

# you may not use this file except in compliance with the License.

|

| 4 |

+

# You may obtain a copy of the License at

|

| 5 |

+

# http://www.apache.org/licenses/LICENSE-2.0

|

| 6 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 7 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 8 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 9 |

+

# See the License for the specific language governing permissions and

|

| 10 |

+

# limitations under the License.

|

scripts/networks/__pycache__/__init__.cpython-38.pyc

ADDED

|

Binary file (201 Bytes). View file

|

|

|

scripts/networks/__pycache__/nest_transformer_3D.cpython-38.pyc

ADDED

|

Binary file (15.5 kB). View file

|

|

|

scripts/networks/__pycache__/patchEmbed3D.cpython-38.pyc

ADDED

|

Binary file (5.8 kB). View file

|

|

|

scripts/networks/__pycache__/unest.cpython-38.pyc

ADDED

|

Binary file (5.79 kB). View file

|

|

|

scripts/networks/__pycache__/unest_block.cpython-38.pyc

ADDED

|

Binary file (5.45 kB). View file

|

|

|

scripts/networks/nest/__init__.py

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

from .utils import (

|

| 3 |

+

Conv3dSame,

|

| 4 |

+

DropPath,

|

| 5 |

+

Linear,

|

| 6 |

+

Mlp,

|

| 7 |

+

_assert,

|

| 8 |

+

conv3d_same,

|

| 9 |

+

create_conv3d,

|

| 10 |

+

create_pool3d,

|

| 11 |

+

get_padding,

|

| 12 |

+

get_same_padding,

|

| 13 |

+

pad_same,

|

| 14 |

+

to_ntuple,

|

| 15 |

+

trunc_normal_,

|

| 16 |

+

)

|

scripts/networks/nest/__pycache__/__init__.cpython-38.pyc

ADDED

|

Binary file (496 Bytes). View file

|

|

|

scripts/networks/nest/__pycache__/utils.cpython-38.pyc

ADDED

|

Binary file (15.2 kB). View file

|

|

|

scripts/networks/nest/utils.py

ADDED

|

@@ -0,0 +1,485 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

import collections.abc

|

| 5 |

+

import math

|

| 6 |

+

import warnings

|

| 7 |

+

from itertools import repeat

|

| 8 |

+

from typing import List, Optional, Tuple

|

| 9 |

+

|

| 10 |

+

import torch

|

| 11 |

+

import torch.nn as nn

|

| 12 |

+

import torch.nn.functional as F

|

| 13 |

+

|

| 14 |

+

try:

|

| 15 |

+

from torch import _assert

|

| 16 |

+

except ImportError:

|

| 17 |

+

|

| 18 |

+

def _assert(condition: bool, message: str):

|

| 19 |

+

assert condition, message

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

def drop_block_2d(

|

| 23 |

+

x,

|

| 24 |

+

drop_prob: float = 0.1,

|

| 25 |

+

block_size: int = 7,

|

| 26 |

+

gamma_scale: float = 1.0,

|

| 27 |

+

with_noise: bool = False,

|

| 28 |

+

inplace: bool = False,

|

| 29 |

+

batchwise: bool = False,

|

| 30 |

+

):

|

| 31 |

+

"""DropBlock. See https://arxiv.org/pdf/1810.12890.pdf

|

| 32 |

+

|

| 33 |

+

DropBlock with an experimental gaussian noise option. This layer has been tested on a few training

|

| 34 |

+

runs with success, but needs further validation and possibly optimization for lower runtime impact.

|

| 35 |

+

"""

|

| 36 |

+

b, c, h, w = x.shape

|

| 37 |

+

total_size = w * h

|

| 38 |

+

clipped_block_size = min(block_size, min(w, h))

|

| 39 |

+

# seed_drop_rate, the gamma parameter

|

| 40 |

+

gamma = (

|

| 41 |

+

gamma_scale * drop_prob * total_size / clipped_block_size**2 / ((w - block_size + 1) * (h - block_size + 1))

|

| 42 |

+

)

|

| 43 |

+

|

| 44 |

+

# Forces the block to be inside the feature map.

|

| 45 |

+

w_i, h_i = torch.meshgrid(torch.arange(w).to(x.device), torch.arange(h).to(x.device))

|

| 46 |

+

valid_block = ((w_i >= clipped_block_size // 2) & (w_i < w - (clipped_block_size - 1) // 2)) & (

|

| 47 |

+

(h_i >= clipped_block_size // 2) & (h_i < h - (clipped_block_size - 1) // 2)

|

| 48 |

+

)

|

| 49 |

+

valid_block = torch.reshape(valid_block, (1, 1, h, w)).to(dtype=x.dtype)

|

| 50 |

+

|

| 51 |

+

if batchwise:

|

| 52 |

+

# one mask for whole batch, quite a bit faster

|

| 53 |

+

uniform_noise = torch.rand((1, c, h, w), dtype=x.dtype, device=x.device)

|

| 54 |

+

else:

|

| 55 |

+

uniform_noise = torch.rand_like(x)

|

| 56 |

+

block_mask = ((2 - gamma - valid_block + uniform_noise) >= 1).to(dtype=x.dtype)

|

| 57 |

+

block_mask = -F.max_pool2d(

|

| 58 |

+

-block_mask, kernel_size=clipped_block_size, stride=1, padding=clipped_block_size // 2 # block_size,

|

| 59 |

+

)

|

| 60 |

+

|

| 61 |

+

if with_noise:

|

| 62 |

+

normal_noise = torch.randn((1, c, h, w), dtype=x.dtype, device=x.device) if batchwise else torch.randn_like(x)

|

| 63 |

+

if inplace:

|

| 64 |

+

x.mul_(block_mask).add_(normal_noise * (1 - block_mask))

|

| 65 |

+

else:

|

| 66 |

+

x = x * block_mask + normal_noise * (1 - block_mask)

|

| 67 |

+

else:

|

| 68 |

+

normalize_scale = (block_mask.numel() / block_mask.to(dtype=torch.float32).sum().add(1e-7)).to(x.dtype)

|

| 69 |

+

if inplace:

|

| 70 |

+

x.mul_(block_mask * normalize_scale)

|

| 71 |

+

else:

|

| 72 |

+

x = x * block_mask * normalize_scale

|

| 73 |

+

return x

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

def drop_block_fast_2d(

|

| 77 |

+

x: torch.Tensor,

|

| 78 |

+

drop_prob: float = 0.1,

|

| 79 |

+

block_size: int = 7,

|

| 80 |

+

gamma_scale: float = 1.0,

|

| 81 |

+

with_noise: bool = False,

|

| 82 |

+

inplace: bool = False,

|

| 83 |

+

):

|

| 84 |

+

"""DropBlock. See https://arxiv.org/pdf/1810.12890.pdf

|

| 85 |

+

|

| 86 |

+

DropBlock with an experimental gaussian noise option. Simplied from above without concern for valid

|

| 87 |

+

block mask at edges.

|

| 88 |

+

"""

|

| 89 |

+

b, c, h, w = x.shape

|

| 90 |

+

total_size = w * h

|

| 91 |

+

clipped_block_size = min(block_size, min(w, h))

|

| 92 |

+

gamma = (

|

| 93 |

+

gamma_scale * drop_prob * total_size / clipped_block_size**2 / ((w - block_size + 1) * (h - block_size + 1))

|

| 94 |

+

)

|

| 95 |

+

|

| 96 |

+

block_mask = torch.empty_like(x).bernoulli_(gamma)

|

| 97 |

+

block_mask = F.max_pool2d(

|

| 98 |

+

block_mask.to(x.dtype), kernel_size=clipped_block_size, stride=1, padding=clipped_block_size // 2

|

| 99 |

+

)

|

| 100 |

+

|

| 101 |

+

if with_noise:

|

| 102 |

+

normal_noise = torch.empty_like(x).normal_()

|

| 103 |

+

if inplace:

|

| 104 |

+

x.mul_(1.0 - block_mask).add_(normal_noise * block_mask)

|

| 105 |

+

else:

|

| 106 |

+

x = x * (1.0 - block_mask) + normal_noise * block_mask

|

| 107 |

+

else:

|

| 108 |

+

block_mask = 1 - block_mask

|

| 109 |

+

normalize_scale = (block_mask.numel() / block_mask.to(dtype=torch.float32).sum().add(1e-6)).to(dtype=x.dtype)

|

| 110 |

+

if inplace:

|

| 111 |

+

x.mul_(block_mask * normalize_scale)

|

| 112 |

+

else:

|

| 113 |

+

x = x * block_mask * normalize_scale

|

| 114 |

+

return x

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

class DropBlock2d(nn.Module):

|

| 118 |

+

"""DropBlock. See https://arxiv.org/pdf/1810.12890.pdf"""

|

| 119 |

+

|

| 120 |

+

def __init__(

|

| 121 |

+

self, drop_prob=0.1, block_size=7, gamma_scale=1.0, with_noise=False, inplace=False, batchwise=False, fast=True

|

| 122 |

+

):

|

| 123 |

+

super(DropBlock2d, self).__init__()

|

| 124 |

+

self.drop_prob = drop_prob

|

| 125 |

+

self.gamma_scale = gamma_scale

|

| 126 |

+

self.block_size = block_size

|

| 127 |

+

self.with_noise = with_noise

|

| 128 |

+

self.inplace = inplace

|

| 129 |

+

self.batchwise = batchwise

|

| 130 |

+

self.fast = fast # FIXME finish comparisons of fast vs not

|

| 131 |

+

|

| 132 |

+

def forward(self, x):

|

| 133 |

+

if not self.training or not self.drop_prob:

|

| 134 |

+

return x

|

| 135 |

+

if self.fast:

|

| 136 |

+

return drop_block_fast_2d(

|

| 137 |

+

x, self.drop_prob, self.block_size, self.gamma_scale, self.with_noise, self.inplace

|

| 138 |

+

)

|

| 139 |

+

else:

|

| 140 |

+

return drop_block_2d(

|

| 141 |

+

x, self.drop_prob, self.block_size, self.gamma_scale, self.with_noise, self.inplace, self.batchwise

|

| 142 |

+

)

|

| 143 |

+

|

| 144 |

+

|

| 145 |

+

def drop_path(x, drop_prob: float = 0.0, training: bool = False, scale_by_keep: bool = True):

|

| 146 |

+

"""Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).

|

| 147 |

+

|

| 148 |

+

This is the same as the DropConnect impl I created for EfficientNet, etc networks, however,

|

| 149 |

+

the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...

|

| 150 |

+

See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ... I've opted for

|

| 151 |

+

changing the layer and argument names to 'drop path' rather than mix DropConnect as a layer name and use

|

| 152 |

+

'survival rate' as the argument.

|

| 153 |

+

|

| 154 |

+

"""

|

| 155 |

+

if drop_prob == 0.0 or not training:

|

| 156 |

+

return x

|

| 157 |

+

keep_prob = 1 - drop_prob

|

| 158 |

+

shape = (x.shape[0],) + (1,) * (x.ndim - 1) # work with diff dim tensors, not just 2D ConvNets

|

| 159 |

+

random_tensor = x.new_empty(shape).bernoulli_(keep_prob)

|

| 160 |

+

if keep_prob > 0.0 and scale_by_keep:

|

| 161 |

+

random_tensor.div_(keep_prob)

|

| 162 |

+

return x * random_tensor

|

| 163 |

+

|

| 164 |

+

|

| 165 |

+

class DropPath(nn.Module):

|

| 166 |

+

"""Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks)."""

|

| 167 |

+

|

| 168 |

+

def __init__(self, drop_prob=None, scale_by_keep=True):

|

| 169 |

+

super(DropPath, self).__init__()

|

| 170 |

+

self.drop_prob = drop_prob

|

| 171 |

+

self.scale_by_keep = scale_by_keep

|

| 172 |

+

|

| 173 |

+

def forward(self, x):

|

| 174 |

+

return drop_path(x, self.drop_prob, self.training, self.scale_by_keep)

|

| 175 |

+

|

| 176 |

+

|

| 177 |

+

def create_conv3d(in_channels, out_channels, kernel_size, **kwargs):

|

| 178 |

+

"""Select a 2d convolution implementation based on arguments

|

| 179 |

+

Creates and returns one of torch.nn.Conv2d, Conv2dSame, MixedConv3d, or CondConv2d.

|

| 180 |

+

|

| 181 |

+

Used extensively by EfficientNet, MobileNetv3 and related networks.

|

| 182 |

+

"""

|

| 183 |

+

|

| 184 |

+

depthwise = kwargs.pop("depthwise", False)

|

| 185 |

+

# for DW out_channels must be multiple of in_channels as must have out_channels % groups == 0

|

| 186 |

+

groups = in_channels if depthwise else kwargs.pop("groups", 1)

|

| 187 |

+

|

| 188 |

+

m = create_conv3d_pad(in_channels, out_channels, kernel_size, groups=groups, **kwargs)

|

| 189 |

+

return m

|

| 190 |

+

|

| 191 |

+

|

| 192 |

+

def conv3d_same(

|

| 193 |

+

x,

|

| 194 |

+

weight: torch.Tensor,

|

| 195 |

+

bias: Optional[torch.Tensor] = None,

|

| 196 |

+

stride: Tuple[int, int] = (1, 1, 1),

|

| 197 |

+

padding: Tuple[int, int] = (0, 0, 0),

|

| 198 |

+

dilation: Tuple[int, int] = (1, 1, 1),

|

| 199 |

+

groups: int = 1,

|

| 200 |

+

):

|

| 201 |

+

x = pad_same(x, weight.shape[-3:], stride, dilation)

|

| 202 |

+

return F.conv3d(x, weight, bias, stride, (0, 0, 0), dilation, groups)

|

| 203 |

+

|

| 204 |

+

|

| 205 |

+

class Conv3dSame(nn.Conv2d):

|

| 206 |

+

"""Tensorflow like 'SAME' convolution wrapper for 2D convolutions"""

|

| 207 |

+

|

| 208 |

+

def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True):

|

| 209 |

+

super(Conv3dSame, self).__init__(in_channels, out_channels, kernel_size, stride, 0, dilation, groups, bias)

|

| 210 |

+

|

| 211 |

+

def forward(self, x):

|

| 212 |

+

return conv3d_same(x, self.weight, self.bias, self.stride, self.padding, self.dilation, self.groups)

|

| 213 |

+

|

| 214 |

+

|

| 215 |

+

def create_conv3d_pad(in_chs, out_chs, kernel_size, **kwargs):

|

| 216 |

+

padding = kwargs.pop("padding", "")

|

| 217 |

+

kwargs.setdefault("bias", False)

|

| 218 |

+

padding, is_dynamic = get_padding_value(padding, kernel_size, **kwargs)

|

| 219 |

+

if is_dynamic:

|

| 220 |

+

return Conv3dSame(in_chs, out_chs, kernel_size, **kwargs)

|

| 221 |

+

else:

|

| 222 |

+

return nn.Conv3d(in_chs, out_chs, kernel_size, padding=padding, **kwargs)

|

| 223 |

+

|

| 224 |

+

|

| 225 |

+

# Calculate symmetric padding for a convolution

|

| 226 |

+

def get_padding(kernel_size: int, stride: int = 1, dilation: int = 1, **_) -> int:

|

| 227 |

+

padding = ((stride - 1) + dilation * (kernel_size - 1)) // 2

|

| 228 |

+

return padding

|

| 229 |

+

|

| 230 |

+

|

| 231 |

+

# Calculate asymmetric TensorFlow-like 'SAME' padding for a convolution

|

| 232 |

+

def get_same_padding(x: int, k: int, s: int, d: int):

|

| 233 |

+

return max((math.ceil(x / s) - 1) * s + (k - 1) * d + 1 - x, 0)

|

| 234 |

+

|

| 235 |

+

|

| 236 |

+

# Can SAME padding for given args be done statically?

|

| 237 |

+

def is_static_pad(kernel_size: int, stride: int = 1, dilation: int = 1, **_):

|

| 238 |

+

return stride == 1 and (dilation * (kernel_size - 1)) % 2 == 0

|

| 239 |

+

|

| 240 |

+

|

| 241 |

+

# Dynamically pad input x with 'SAME' padding for conv with specified args

|

| 242 |

+

def pad_same(x, k: List[int], s: List[int], d: List[int] = (1, 1, 1), value: float = 0):

|

| 243 |

+

id, ih, iw = x.size()[-3:]

|

| 244 |

+

pad_d, pad_h, pad_w = (

|

| 245 |

+

get_same_padding(id, k[0], s[0], d[0]),

|

| 246 |

+

get_same_padding(ih, k[1], s[1], d[1]),

|

| 247 |

+

get_same_padding(iw, k[2], s[2], d[2]),

|

| 248 |

+

)

|

| 249 |

+

if pad_d > 0 or pad_h > 0 or pad_w > 0:

|

| 250 |

+

x = F.pad(

|

| 251 |

+

x,

|

| 252 |

+

[pad_d // 2, pad_d - pad_d // 2, pad_w // 2, pad_w - pad_w // 2, pad_h // 2, pad_h - pad_h // 2],

|

| 253 |

+

value=value,

|

| 254 |

+

)

|

| 255 |

+

return x

|

| 256 |

+

|

| 257 |

+

|

| 258 |

+

def get_padding_value(padding, kernel_size, **kwargs) -> Tuple[Tuple, bool]:

|

| 259 |

+

dynamic = False

|

| 260 |

+

if isinstance(padding, str):

|

| 261 |

+

# for any string padding, the padding will be calculated for you, one of three ways

|

| 262 |

+

padding = padding.lower()

|

| 263 |

+

if padding == "same":

|

| 264 |

+

# TF compatible 'SAME' padding, has a performance and GPU memory allocation impact

|

| 265 |

+

if is_static_pad(kernel_size, **kwargs):

|

| 266 |

+

# static case, no extra overhead

|

| 267 |

+

padding = get_padding(kernel_size, **kwargs)

|

| 268 |

+

else:

|

| 269 |

+

# dynamic 'SAME' padding, has runtime/GPU memory overhead

|

| 270 |

+

padding = 0

|

| 271 |

+

dynamic = True

|

| 272 |

+

elif padding == "valid":

|

| 273 |

+

# 'VALID' padding, same as padding=0

|

| 274 |

+

padding = 0

|

| 275 |

+

else:

|

| 276 |

+

# Default to PyTorch style 'same'-ish symmetric padding

|

| 277 |

+

padding = get_padding(kernel_size, **kwargs)

|

| 278 |

+

return padding, dynamic

|

| 279 |

+

|

| 280 |

+

|

| 281 |

+

# From PyTorch internals

|

| 282 |

+

def _ntuple(n):

|

| 283 |

+

def parse(x):

|

| 284 |

+

if isinstance(x, collections.abc.Iterable):

|

| 285 |

+

return x

|

| 286 |

+

return tuple(repeat(x, n))

|

| 287 |

+

|

| 288 |

+

return parse

|

| 289 |

+

|

| 290 |

+

|

| 291 |

+

to_1tuple = _ntuple(1)

|

| 292 |

+

to_2tuple = _ntuple(2)

|

| 293 |

+

to_3tuple = _ntuple(3)

|

| 294 |

+

to_4tuple = _ntuple(4)

|

| 295 |

+

to_ntuple = _ntuple

|

| 296 |

+

|

| 297 |

+

|

| 298 |

+

def make_divisible(v, divisor=8, min_value=None, round_limit=0.9):

|

| 299 |

+

min_value = min_value or divisor

|

| 300 |

+

new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)

|

| 301 |

+

# Make sure that round down does not go down by more than 10%.

|

| 302 |

+

if new_v < round_limit * v:

|

| 303 |

+

new_v += divisor

|

| 304 |

+

return new_v

|

| 305 |

+

|

| 306 |

+

|

| 307 |

+

class Linear(nn.Linear):

|

| 308 |

+

r"""Applies a linear transformation to the incoming data: :math:`y = xA^T + b`

|

| 309 |

+

|

| 310 |

+

Wraps torch.nn.Linear to support AMP + torchscript usage by manually casting

|

| 311 |

+

weight & bias to input.dtype to work around an issue w/ torch.addmm in this use case.

|

| 312 |

+

"""

|

| 313 |

+

|

| 314 |

+

def forward(self, input: torch.Tensor) -> torch.Tensor:

|

| 315 |

+

if torch.jit.is_scripting():

|

| 316 |

+

bias = self.bias.to(dtype=input.dtype) if self.bias is not None else None

|

| 317 |

+

return F.linear(input, self.weight.to(dtype=input.dtype), bias=bias)

|

| 318 |

+

else:

|

| 319 |

+

return F.linear(input, self.weight, self.bias)

|

| 320 |

+

|

| 321 |

+

|

| 322 |

+

class Mlp(nn.Module):

|

| 323 |

+

"""MLP as used in Vision Transformer, MLP-Mixer and related networks"""

|

| 324 |

+

|

| 325 |

+

def __init__(self, in_features, hidden_features=None, out_features=None, act_layer=nn.GELU, drop=0.0):

|

| 326 |

+

super().__init__()

|

| 327 |

+

out_features = out_features or in_features

|

| 328 |

+

hidden_features = hidden_features or in_features

|

| 329 |

+