Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.


- .gitignore +167 -0

- Dockerfile +45 -0

- LICENSE +201 -0

- README.md +376 -0

- README_zh-CN.md +375 -0

- __init__.py +3 -0

- app.py +49 -0

- cogvideox/__init__.py +0 -0

- cogvideox/api/api.py +173 -0

- cogvideox/api/post_infer.py +89 -0

- cogvideox/data/bucket_sampler.py +379 -0

- cogvideox/data/dataset_image.py +76 -0

- cogvideox/data/dataset_image_video.py +550 -0

- cogvideox/data/dataset_video.py +262 -0

- cogvideox/pipeline/pipeline_cogvideox.py +751 -0

- cogvideox/pipeline/pipeline_cogvideox_control.py +843 -0

- cogvideox/pipeline/pipeline_cogvideox_inpaint.py +1020 -0

- cogvideox/ui/ui.py +1614 -0

- cogvideox/utils/__init__.py +0 -0

- cogvideox/utils/lora_utils.py +477 -0

- cogvideox/utils/utils.py +208 -0

- cogvideox/video_caption/README.md +174 -0

- cogvideox/video_caption/README_zh-CN.md +159 -0

- cogvideox/video_caption/beautiful_prompt.py +103 -0

- cogvideox/video_caption/caption_rewrite.py +224 -0

- cogvideox/video_caption/compute_motion_score.py +186 -0

- cogvideox/video_caption/compute_text_score.py +214 -0

- cogvideox/video_caption/compute_video_quality.py +201 -0

- cogvideox/video_caption/cutscene_detect.py +97 -0

- cogvideox/video_caption/filter_meta_train.py +88 -0

- cogvideox/video_caption/package_patches/easyocr_detection_patched.py +114 -0

- cogvideox/video_caption/package_patches/vila_siglip_encoder_patched.py +42 -0

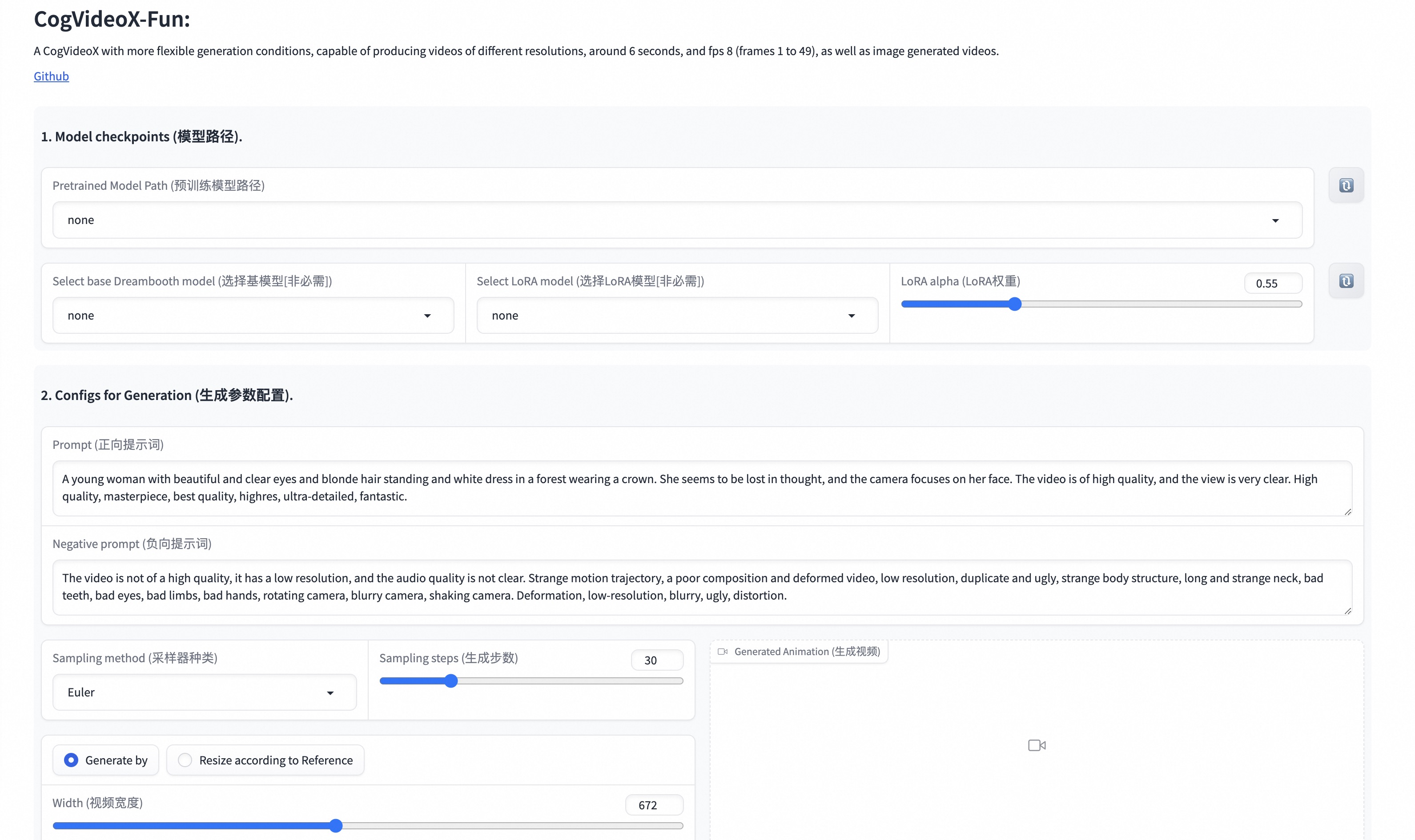

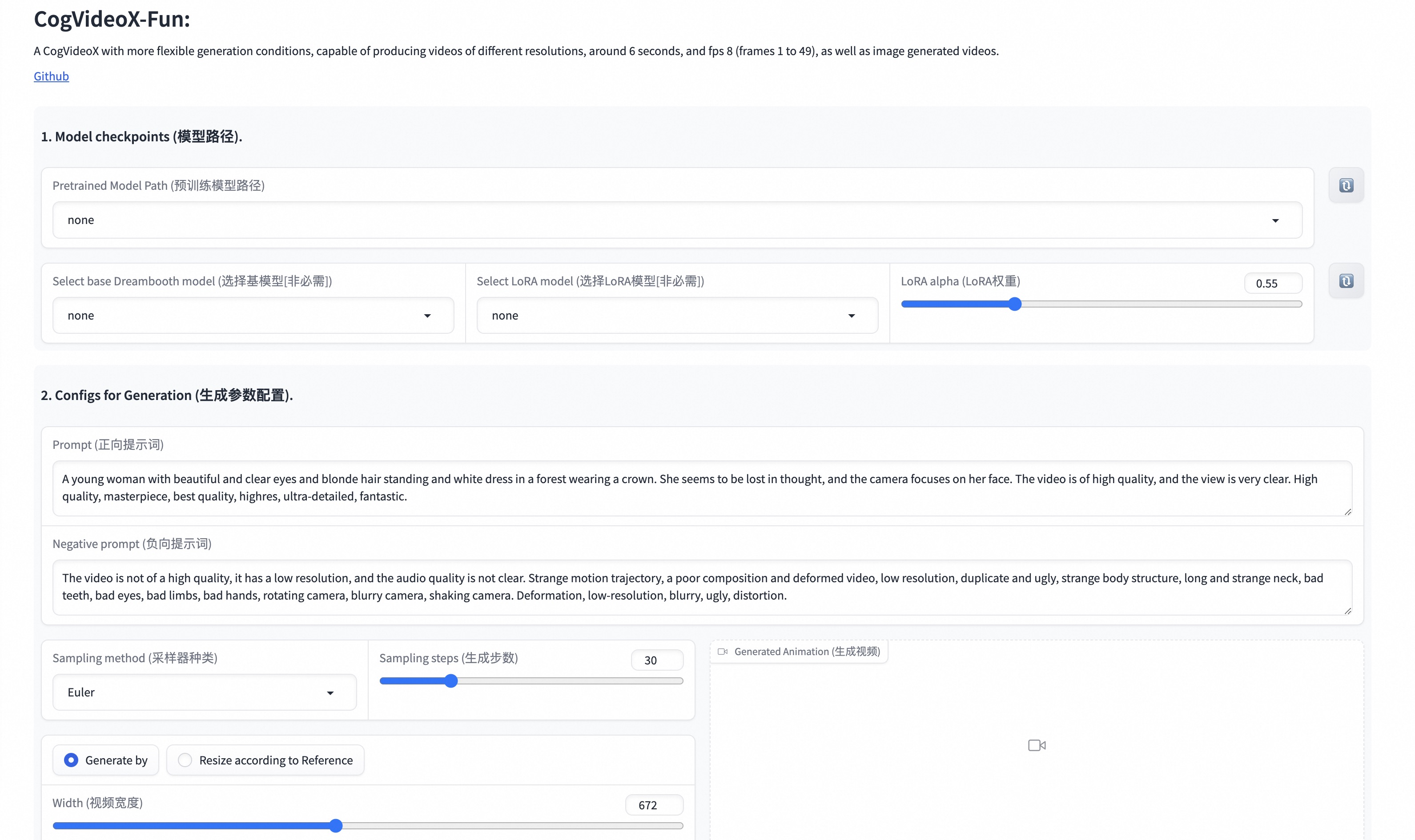

- cogvideox/video_caption/prompt/beautiful_prompt.txt +9 -0

- cogvideox/video_caption/prompt/rewrite.txt +9 -0

- cogvideox/video_caption/requirements.txt +9 -0

- cogvideox/video_caption/scripts/stage_1_video_splitting.sh +39 -0

- cogvideox/video_caption/scripts/stage_2_video_filtering.sh +41 -0

- cogvideox/video_caption/scripts/stage_3_video_recaptioning.sh +52 -0

- cogvideox/video_caption/utils/filter.py +162 -0

- cogvideox/video_caption/utils/gather_jsonl.py +55 -0

- cogvideox/video_caption/utils/get_meta_file.py +74 -0

- cogvideox/video_caption/utils/image_evaluator.py +248 -0

- cogvideox/video_caption/utils/logger.py +36 -0

- cogvideox/video_caption/utils/longclip/README.md +19 -0

- cogvideox/video_caption/utils/longclip/__init__.py +1 -0

- cogvideox/video_caption/utils/longclip/bpe_simple_vocab_16e6.txt.gz +3 -0

- cogvideox/video_caption/utils/longclip/longclip.py +353 -0

- cogvideox/video_caption/utils/longclip/model_longclip.py +471 -0

- cogvideox/video_caption/utils/longclip/simple_tokenizer.py +132 -0

- cogvideox/video_caption/utils/siglip_v2_5.py +127 -0

.gitignore

ADDED


@@ -0,0 +1,167 @@


+# Byte-compiled / optimized / DLL files
+models*
+output*
+logs*
+taming*
+samples*
+datasets*
+asset*
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+cover/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+.pybuilder/
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+# For a library or package, you might want to ignore these files since the code is
+# intended to run in multiple environments; otherwise, check them in:
+# .python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# poetry
+# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
+# This is especially recommended for binary packages to ensure reproducibility, and is more
+# commonly ignored for libraries.
+# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
+#poetry.lock
+
+# pdm
+# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
+#pdm.lock
+# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
+# in version control.
+# https://pdm.fming.dev/#use-with-ide
+.pdm.toml
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+
+# pytype static type analyzer
+.pytype/
+
+# Cython debug symbols
+cython_debug/
+
+# PyCharm
+# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
+# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
+# and can be added to the global gitignore or merged into this file. For a more nuclear
+# option (not recommended) you can uncomment the following to ignore the entire idea folder.
+#.idea/

Dockerfile

ADDED


@@ -0,0 +1,45 @@


+FROM runpod/pytorch:2.2.1-py3.10-cuda12.1.1-devel-ubuntu22.04
+WORKDIR /content
+ENV PATH="/home/zebraslive/.local/bin:${PATH}"
+
+RUN adduser --disabled-password --gecos '' zebraslive && \
+adduser zebraslive sudo && \
+echo '%sudo ALL=(ALL) NOPASSWD:ALL' >> /etc/sudoers && \
+chown -R zebraslive:zebraslive /content && \
+chmod -R 777 /content && \
+chown -R zebraslive:zebraslive /home && \
+chmod -R 777 /home && \
+apt update -y && add-apt-repository -y ppa:git-core/ppa && apt update -y && apt install -y aria2 git git-lfs unzip ffmpeg
+
+USER zebraslive
+
+RUN pip install -q torch==2.4.0+cu121 torchvision==0.19.0+cu121 torchaudio==2.4.0+cu121 torchtext==0.18.0 torchdata==0.8.0 --extra-index-url https://download.pytorch.org/whl/cu121 \
+tqdm==4.66.5 numpy==1.26.3 imageio==2.35.1 imageio-ffmpeg==0.5.1 xformers==0.0.27.post2 diffusers==0.30.3 moviepy==1.0.3 transformers==4.44.2 accelerate==0.33.0 sentencepiece==0.2.0 pillow==9.5.0 runpod && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/scheduler/scheduler_config.json -d /content/model/scheduler -o scheduler_config.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/text_encoder/config.json -d /content/model/text_encoder -o config.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/resolve/main/text_encoder/model-00001-of-00002.safetensors -d /content/model/text_encoder -o model-00001-of-00002.safetensors && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/resolve/main/text_encoder/model-00002-of-00002.safetensors -d /content/model/text_encoder -o model-00002-of-00002.safetensors && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/text_encoder/model.safetensors.index.json -d /content/model/text_encoder -o model.safetensors.index.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/tokenizer/added_tokens.json -d /content/model/tokenizer -o added_tokens.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/tokenizer/special_tokens_map.json -d /content/model/tokenizer -o special_tokens_map.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/resolve/main/tokenizer/spiece.model -d /content/model/tokenizer -o spiece.model && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/tokenizer/tokenizer_config.json -d /content/model/tokenizer -o tokenizer_config.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/transformer/config.json -d /content/model/transformer -o config.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/resolve/main/transformer/diffusion_pytorch_model-00001-of-00003.safetensors -d /content/model/transformer -o diffusion_pytorch_model-00001-of-00003.safetensors && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/resolve/main/transformer/diffusion_pytorch_model-00002-of-00003.safetensors -d /content/model/transformer -o diffusion_pytorch_model-00002-of-00003.safetensors && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/resolve/main/transformer/diffusion_pytorch_model-00003-of-00003.safetensors -d /content/model/transformer -o diffusion_pytorch_model-00003-of-00003.safetensors && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/transformer/diffusion_pytorch_model.safetensors.index.json -d /content/model/transformer -o diffusion_pytorch_model.safetensors.index.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/vae/config.json -d /content/model/vae -o config.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/resolve/main/vae/diffusion_pytorch_model.safetensors -d /content/model/vae -o diffusion_pytorch_model.safetensors && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/configuration.json -d /content/model -o configuration.json && \
+aria2c --console-log-level=error -c -x 16 -s 16 -k 1M https://huggingface.co/alibaba-pai/CogVideoX-Fun-V1.1-5b-InP/raw/main/model_index.json -d /content/model -o model_index.json
+
+COPY ./worker_runpod.py /content/worker_runpod.py
+COPY ./cogvideox /content/cogvideox
+COPY ./asset /content/asset
+COPY ./config /content/config
+COPY ./datasets /content/datasets
+COPY ./reports /content/reports
+COPY ./requirements.txt /content/requirements.txt
+RUN pip install -r /content/requirements.txt
+WORKDIR /content
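All 18 `aria2c` calls in the Dockerfile follow one pattern: fetch `https://huggingface.co/<repo>/<raw|resolve>/main/<path>` and save it under `/content/model/<subdir>`. On the Hub, `/resolve/` serves a file's actual contents (including Git LFS objects such as the `.safetensors` shards), while `/raw/` serves the file as stored in git, which for LFS-tracked files is only a pointer; the Dockerfile accordingly uses `/resolve/` for the weights and `spiece.model`, and `/raw/` for the small JSON configs. The Python sketch below regenerates those commands from a file list. The names `hf_url`, `aria2c_command`, `FILES`, and the suffix-based raw/resolve rule are hypothetical, inferred from the Dockerfile lines rather than taken from the repository.

```python
import shlex

# Hypothetical constants mirroring the aria2c lines in the Dockerfile (not part of the repo).
REPO = "alibaba-pai/CogVideoX-Fun-V1.1-5b-InP"
HUB = "https://huggingface.co"
DEST_ROOT = "/content/model"

# The 18 repo paths the Dockerfile downloads, in the same order.
FILES = [
    "scheduler/scheduler_config.json",
    "text_encoder/config.json",
    "text_encoder/model-00001-of-00002.safetensors",
    "text_encoder/model-00002-of-00002.safetensors",
    "text_encoder/model.safetensors.index.json",
    "tokenizer/added_tokens.json",
    "tokenizer/special_tokens_map.json",
    "tokenizer/spiece.model",
    "tokenizer/tokenizer_config.json",
    "transformer/config.json",
    "transformer/diffusion_pytorch_model-00001-of-00003.safetensors",
    "transformer/diffusion_pytorch_model-00002-of-00003.safetensors",
    "transformer/diffusion_pytorch_model-00003-of-00003.safetensors",
    "transformer/diffusion_pytorch_model.safetensors.index.json",
    "vae/config.json",
    "vae/diffusion_pytorch_model.safetensors",
    "configuration.json",
    "model_index.json",
]

# Assumed rule: the Dockerfile uses /resolve/ for these (large, LFS-tracked) files
# and /raw/ for everything else.
RESOLVE_SUFFIXES = (".safetensors", ".model")


def hf_url(repo: str, path: str, revision: str = "main") -> str:
    """Build the download URL for one file, choosing /resolve/ or /raw/ by suffix."""
    kind = "resolve" if path.endswith(RESOLVE_SUFFIXES) else "raw"
    return f"{HUB}/{repo}/{kind}/{revision}/{path}"


def aria2c_command(repo: str, path: str, dest_root: str = DEST_ROOT) -> list[str]:
    """Return the argv for one aria2c call that saves the file under dest_root/<subdir>/."""
    subdir, _, filename = path.rpartition("/")
    dest = f"{dest_root}/{subdir}" if subdir else dest_root
    return [
        "aria2c", "--console-log-level=error", "-c", "-x", "16", "-s", "16", "-k", "1M",
        hf_url(repo, path), "-d", dest, "-o", filename,
    ]


if __name__ == "__main__":
    # Print one shell-quoted command per file, e.g. to compare against the Dockerfile.
    for path in FILES:
        print(shlex.join(aria2c_command(REPO, path)))
```

Keeping the file list in one place makes it easier to add or drop a shard without hand-editing 18 near-identical lines. If downloading from Python is acceptable instead of `aria2c`, `huggingface_hub.snapshot_download(repo_id=..., local_dir=...)` fetches a repository snapshot (optionally filtered with `allow_patterns`) and handles LFS files itself.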

LICENSE

ADDED


@@ -0,0 +1,201 @@


+Apache License
+Version 2.0, January 2004
+http://www.apache.org/licenses/
+
+TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+
+1. Definitions.
+
+"License" shall mean the terms and conditions for use, reproduction,
+and distribution as defined by Sections 1 through 9 of this document.
+
+"Licensor" shall mean the copyright owner or entity authorized by
+the copyright owner that is granting the License.
+
+"Legal Entity" shall mean the union of the acting entity and all
+other entities that control, are controlled by, or are under common
+control with that entity. For the purposes of this definition,
+"control" means (i) the power, direct or indirect, to cause the
+direction or management of such entity, whether by contract or
+otherwise, or (ii) ownership of fifty percent (50%) or more of the
+outstanding shares, or (iii) beneficial ownership of such entity.
+
+"You" (or "Your") shall mean an individual or Legal Entity
+exercising permissions granted by this License.
+
+"Source" form shall mean the preferred form for making modifications,
+including but not limited to software source code, documentation
+source, and configuration files.
+
+"Object" form shall mean any form resulting from mechanical
+transformation or translation of a Source form, including but
+not limited to compiled object code, generated documentation,
+and conversions to other media types.
+
+"Work" shall mean the work of authorship, whether in Source or
+Object form, made available under the License, as indicated by a
+copyright notice that is included in or attached to the work
+(an example is provided in the Appendix below).
+
+"Derivative Works" shall mean any work, whether in Source or Object
+form, that is based on (or derived from) the Work and for which the
+editorial revisions, annotations, elaborations, or other modifications
+represent, as a whole, an original work of authorship. For the purposes
+of this License, Derivative Works shall not include works that remain
+separable from, or merely link (or bind by name) to the interfaces of,
+the Work and Derivative Works thereof.
+
+"Contribution" shall mean any work of authorship, including
+the original version of the Work and any modifications or additions
+to that Work or Derivative Works thereof, that is intentionally
+submitted to Licensor for inclusion in the Work by the copyright owner
+or by an individual or Legal Entity authorized to submit on behalf of
+the copyright owner. For the purposes of this definition, "submitted"
+means any form of electronic, verbal, or written communication sent
+to the Licensor or its representatives, including but not limited to
+communication on electronic mailing lists, source code control systems,
+and issue tracking systems that are managed by, or on behalf of, the
+Licensor for the purpose of discussing and improving the Work, but
+excluding communication that is conspicuously marked or otherwise
+designated in writing by the copyright owner as "Not a Contribution."
+
+"Contributor" shall mean Licensor and any individual or Legal Entity
+on behalf of whom a Contribution has been received by Licensor and
+subsequently incorporated within the Work.
+
+2. Grant of Copyright License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+copyright license to reproduce, prepare Derivative Works of,
+publicly display, publicly perform, sublicense, and distribute the
+Work and such Derivative Works in Source or Object form.
+
+3. Grant of Patent License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+(except as stated in this section) patent license to make, have made,
+use, offer to sell, sell, import, and otherwise transfer the Work,
+where such license applies only to those patent claims licensable
+by such Contributor that are necessarily infringed by their
+Contribution(s) alone or by combination of their Contribution(s)
+with the Work to which such Contribution(s) was submitted. If You
+institute patent litigation against any entity (including a
+cross-claim or counterclaim in a lawsuit) alleging that the Work
+or a Contribution incorporated within the Work constitutes direct
+or contributory patent infringement, then any patent licenses
+granted to You under this License for that Work shall terminate
+as of the date such litigation is filed.
+
+4. Redistribution. You may reproduce and distribute copies of the
+Work or Derivative Works thereof in any medium, with or without
+modifications, and in Source or Object form, provided that You
+meet the following conditions:
+
+(a) You must give any other recipients of the Work or
+Derivative Works a copy of this License; and
+
+(b) You must cause any modified files to carry prominent notices
+stating that You changed the files; and
+
+(c) You must retain, in the Source form of any Derivative Works
+that You distribute, all copyright, patent, trademark, and
+attribution notices from the Source form of the Work,
+excluding those notices that do not pertain to any part of
+the Derivative Works; and
+
+(d) If the Work includes a "NOTICE" text file as part of its
+distribution, then any Derivative Works that You distribute must
+include a readable copy of the attribution notices contained
+within such NOTICE file, excluding those notices that do not
+pertain to any part of the Derivative Works, in at least one
+of the following places: within a NOTICE text file distributed
+as part of the Derivative Works; within the Source form or
+documentation, if provided along with the Derivative Works; or,
+within a display generated by the Derivative Works, if and
+wherever such third-party notices normally appear. The contents
+of the NOTICE file are for informational purposes only and
+do not modify the License. You may add Your own attribution
+notices within Derivative Works that You distribute, alongside
+or as an addendum to the NOTICE text from the Work, provided
+that such additional attribution notices cannot be construed
+as modifying the License.
+
+You may add Your own copyright statement to Your modifications and
+may provide additional or different license terms and conditions
+for use, reproduction, or distribution of Your modifications, or
+for any such Derivative Works as a whole, provided Your use,
+reproduction, and distribution of the Work otherwise complies with
+the conditions stated in this License.
+
+5. Submission of Contributions. Unless You explicitly state otherwise,
+any Contribution intentionally submitted for inclusion in the Work
+by You to the Licensor shall be under the terms and conditions of
+this License, without any additional terms or conditions.
+Notwithstanding the above, nothing herein shall supersede or modify
+the terms of any separate license agreement you may have executed
+with Licensor regarding such Contributions.
+
+6. Trademarks. This License does not grant permission to use the trade
+names, trademarks, service marks, or product names of the Licensor,
+except as required for reasonable and customary use in describing the
+origin of the Work and reproducing the content of the NOTICE file.
+
+7. Disclaimer of Warranty. Unless required by applicable law or
+agreed to in writing, Licensor provides the Work (and each
+Contributor provides its Contributions) on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
+implied, including, without limitation, any warranties or conditions
+of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
+PARTICULAR PURPOSE. You are solely responsible for determining the
+appropriateness of using or redistributing the Work and assume any
+risks associated with Your exercise of permissions under this License.
+
+8. Limitation of Liability. In no event and under no legal theory,
+whether in tort (including negligence), contract, or otherwise,
+unless required by applicable law (such as deliberate and grossly
+negligent acts) or agreed to in writing, shall any Contributor be
+liable to You for damages, including any direct, indirect, special,
+incidental, or consequential damages of any character arising as a
+result of this License or out of the use or inability to use the
+Work (including but not limited to damages for loss of goodwill,
+work stoppage, computer failure or malfunction, or any and all
+other commercial damages or losses), even if such Contributor
+has been advised of the possibility of such damages.
+
+9. Accepting Warranty or Additional Liability. While redistributing
+the Work or Derivative Works thereof, You may choose to offer,
+and charge a fee for, acceptance of support, warranty, indemnity,
+or other liability obligations and/or rights consistent with this
+License. However, in accepting such obligations, You may act only
+on Your own behalf and on Your sole responsibility, not on behalf
+of any other Contributor, and only if You agree to indemnify,
+defend, and hold each Contributor harmless for any liability
+incurred by, or claims asserted against, such Contributor by reason
+of your accepting any such warranty or additional liability.
+
+END OF TERMS AND CONDITIONS
+
+APPENDIX: How to apply the Apache License to your work.
+
+To apply the Apache License to your work, attach the following
+boilerplate notice, with the fields enclosed by brackets "[]"
+replaced with your own identifying information. (Don't include
+the brackets!) The text should be enclosed in the appropriate
+comment syntax for the file format. We also recommend that a
+file or class name and description of purpose be included on the
+same "printed page" as the copyright notice for easier
+identification within third-party archives.
+
+Copyright [yyyy] [name of copyright owner]
+
+Licensed under the Apache License, Version 2.0 (the "License");
+you may not use this file except in compliance with the License.
+You may obtain a copy of the License at
+
+http://www.apache.org/licenses/LICENSE-2.0
+
+Unless required by applicable law or agreed to in writing, software
+distributed under the License is distributed on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+See the License for the specific language governing permissions and
+limitations under the License.

README.md

ADDED


@@ -0,0 +1,376 @@


|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

# CogVideoX-Fun

😊 Welcome!

[Hugging Face Space](https://huggingface.co/spaces/alibaba-pai/CogVideoX-Fun-5b)

English | [简体中文](./README_zh-CN.md)

# Table of Contents

- [Table of Contents](#table-of-contents)
- [Introduction](#introduction)
- [Quick Start](#quick-start)
- [Video Result](#video-result)
- [How to use](#how-to-use)
- [Model zoo](#model-zoo)
- [TODO List](#todo-list)
- [Reference](#reference)
- [License](#license)

# Introduction

CogVideoX-Fun is a modified pipeline built on the CogVideoX architecture and designed to give more flexibility in generation. It can be used to create AI-generated images and videos, and to train baseline models and Lora models for the Diffusion Transformer. You can run inference directly with the pretrained CogVideoX-Fun models to generate videos at different resolutions, roughly 6 seconds long at 8 fps (1 to 49 frames). You can also train your own baseline and Lora models to achieve specific style transformations.
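
The "roughly 6 seconds" figure follows from dividing the frame count by the frame rate. A minimal sketch, assuming the 1 to 49 frame range and the 8 fps rate stated above; `clip_duration_seconds` is a hypothetical helper for illustration, not part of the CogVideoX-Fun code:

```python
MAX_FRAMES = 49  # upper bound on frames per clip, as stated in this README
DEFAULT_FPS = 8  # frame rate quoted in this README


def clip_duration_seconds(num_frames: int, fps: float = DEFAULT_FPS) -> float:
    """Return the playback length, in seconds, of a clip with `num_frames` frames."""
    if not 1 <= num_frames <= MAX_FRAMES:
        raise ValueError(f"num_frames must be in [1, {MAX_FRAMES}], got {num_frames}")
    if fps <= 0:
        raise ValueError(f"fps must be positive, got {fps}")
    return num_frames / fps


if __name__ == "__main__":
    # 49 frames at 8 fps gives 6.125 s, i.e. "roughly 6 seconds"
    print(clip_duration_seconds(49))
```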

We will support quick launch from different platforms; see [Quick Start](#quick-start).

What's New:

- CogVideoX-Fun Control is now supported in diffusers. Thanks to [a-r-r-o-w](https://github.com/a-r-r-o-w) for contributing the support in this [PR](https://github.com/huggingface/diffusers/pull/9671). See the [docs](https://huggingface.co/docs/diffusers/main/en/api/pipelines/cogvideox) to learn more. [ 2024.10.16 ]
- Retrained the i2v model with added noise to increase the motion amplitude of generated videos. Uploaded the control model training code and the control model. [ 2024.09.29 ]
- Code released! Supports Windows and Linux, the 2b and 5b models, and video generation at any resolution from 256x256x49 to 1024x1024x49. [ 2024.09.18 ]

Features:

- [Data Preprocessing](#data-preprocess)
- [Train DiT](#dit-train)
- [Video Generation](#video-gen)

Our UI is as follows:

# Quick Start

### 1. Cloud usage: AliyunDSW/Docker

#### a. From AliyunDSW

DSW offers free GPU time, which each user can apply for once; it is valid for 3 months after applying.

Aliyun provides free GPU time through [Freetier](https://free.aliyun.com/?product=9602825&crowd=enterprise&spm=5176.28055625.J_5831864660.1.e939154aRgha4e&scm=20140722.M_9974135.P_110.MO_1806-ID_9974135-MID_9974135-CID_30683-ST_8512-V_1); claim it and use it in Aliyun PAI-DSW to start CogVideoX-Fun within 5 minutes!

[Open CogVideoX-Fun in the PAI-DSW gallery](https://gallery.pai-ml.com/#/preview/deepLearning/cv/cogvideox_fun)

#### b. From ComfyUI

Our ComfyUI is as follows; please refer to [ComfyUI README](comfyui/README.md) for details.

#### c. From docker

If you are using docker, make sure that the graphics card driver and CUDA environment are installed correctly on your machine.

Then execute the following commands:

```
# pull image
docker pull mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:cogvideox_fun

# start a container from the image
docker run -it -p 7860:7860 --network host --gpus all --security-opt seccomp:unconfined --shm-size 200g mybigpai-public-registry.cn-beijing.cr.aliyuncs.com/easycv/torch_cuda:cogvideox_fun

# clone code
git clone https://github.com/aigc-apps/CogVideoX-Fun.git

# enter CogVideoX-Fun's dir
cd CogVideoX-Fun

# download weights (-p also creates models/ if it does not exist yet)
mkdir -p models/Diffusion_Transformer
mkdir -p models/Personalized_Model

wget https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/cogvideox_fun/Diffusion_Transformer/CogVideoX-Fun-V1.1-2b-InP.tar.gz -O models/Diffusion_Transformer/CogVideoX-Fun-V1.1-2b-InP.tar.gz

cd models/Diffusion_Transformer/
tar -xvf CogVideoX-Fun-V1.1-2b-InP.tar.gz
cd ../../
```
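
If `wget` is not available (for example on Windows outside the container), the download-and-extract step above can be reproduced with the Python standard library. This is a minimal sketch, not a script shipped with the repository: the helper names `download` and `extract_archive` are hypothetical, and only the URL and target folders are taken from the commands above.

```python
import tarfile
import urllib.request
from pathlib import Path

# URL copied from the wget command above.
WEIGHTS_URL = (
    "https://pai-aigc-photog.oss-cn-hangzhou.aliyuncs.com/cogvideox_fun/"
    "Diffusion_Transformer/CogVideoX-Fun-V1.1-2b-InP.tar.gz"
)


def download(url: str, dest: Path) -> Path:
    """Download `url` to `dest`, creating parent folders (like `mkdir -p` + `wget -O`)."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    urllib.request.urlretrieve(url, dest)
    return dest


def extract_archive(archive: Path, dest_dir: Path) -> None:
    """Unpack a .tar.gz archive into `dest_dir` (like `tar -xvf`)."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive) as tar:  # compression type is auto-detected
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions can reject unsafe members (absolute paths, "..").
            tar.extractall(dest_dir, filter="data")
        else:
            tar.extractall(dest_dir)


# Example (downloads several GB, so it is left commented out):
# archive = download(WEIGHTS_URL, Path("models/Diffusion_Transformer/CogVideoX-Fun-V1.1-2b-InP.tar.gz"))
# extract_archive(archive, Path("models/Diffusion_Transformer"))
```

The extraction filter is only applied when the running Python supports it, so the sketch still works on older interpreters.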

### 2. Local install: Environment Check/Downloading/Installation

#### a. Environment Check

We have verified that CogVideoX-Fun runs in the following environments:

Windows details:

- OS: Windows 10
- python: python3.10 & python3.11
- pytorch: torch2.2.0
- CUDA: 11.8 & 12.1
- CUDNN: 8+
- GPU: Nvidia-3060 12G & Nvidia-3090 24G

Linux details:

- OS: Ubuntu 20.04, CentOS
- python: python3.10 & python3.11
- pytorch: torch2.2.0
- CUDA: 11.8 & 12.1
- CUDNN: 8+
- GPU: Nvidia-V100 16G & Nvidia-A10 24G & Nvidia-A100 40G & Nvidia-A100 80G

About 60GB of free disk space is needed to save the weights; please check before downloading.
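
The checks above can be automated before downloading anything. A minimal sketch, assuming PyTorch may or may not be installed yet; `environment_report` is a hypothetical helper, not part of the repository, and the 60GB threshold is the rough figure from this README. It only reports versions; it does not enforce the exact combinations verified above.

```python
import platform
import shutil

REQUIRED_FREE_GB = 60  # rough space needed for the weights, from this README


def free_disk_gb(path: str = ".") -> float:
    """Free space, in decimal GB, on the filesystem that holds `path`."""
    return shutil.disk_usage(path).free / 1e9


def environment_report(path: str = ".") -> dict:
    """Collect Python/PyTorch/CUDA/cuDNN versions plus a free-disk check."""
    free_gb = free_disk_gb(path)
    report = {
        "python": platform.python_version(),
        "free_gb": round(free_gb, 1),
        "enough_disk": free_gb >= REQUIRED_FREE_GB,
    }
    try:
        import torch  # optional: inspected only if PyTorch is installed
    except ImportError:
        report["torch"] = None
        return report
    report["torch"] = torch.__version__
    report["cuda"] = torch.version.cuda  # None for CPU-only builds
    report["cudnn"] = torch.backends.cudnn.version()  # None if cuDNN is unavailable
    report["gpu_available"] = torch.cuda.is_available()
    if report["gpu_available"]:
        report["gpu"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```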

#### b. Weights

We recommend placing the [weights](#model-zoo) along the following paths:

```
📦 models/
├── 📂 Diffusion_Transformer/
│   ├── 📂 CogVideoX-Fun-V1.1-2b-InP/
│   └── 📂 CogVideoX-Fun-V1.1-5b-InP/
├── 📂 Personalized_Model/
│   └── your trained transformer model / your trained lora model (for UI load)
```
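
A quick way to confirm the layout above before launching inference or the UI is to check which folders are missing. This is a minimal sketch; `missing_model_dirs` is a hypothetical helper, and in practice only the model(s) you actually use need to be present.

```python
from pathlib import Path

# Sub-folders taken from the layout above.
EXPECTED_DIRS = (
    "Diffusion_Transformer/CogVideoX-Fun-V1.1-2b-InP",
    "Diffusion_Transformer/CogVideoX-Fun-V1.1-5b-InP",
    "Personalized_Model",
)


def missing_model_dirs(models_root: str = "models", expected=EXPECTED_DIRS) -> list:
    """Return the expected sub-folders that do not exist under `models_root`."""
    root = Path(models_root)
    return [rel for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_model_dirs()
    if missing:
        print("Missing folders:", ", ".join(missing))
    else:
        print("Model folders look complete.")
```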

# Video Result

All results displayed below are image-to-video generations.

### CogVideoX-Fun-V1.1-5B

Resolution-1024

<table border="0" style="width: 100%; text-align: left; margin-top: 20px;">
  <tr>
      <td>
          <video src="https://github.com/user-attachments/assets/34e7ec8f-293e-4655-bb14-5e1ee476f788" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/7809c64f-eb8c-48a9-8bdc-ca9261fd5434" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/8e76aaa4-c602-44ac-bcb4-8b24b72c386c" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/19dba894-7c35-4f25-b15c-384167ab3b03" width="100%" controls autoplay loop></video>
      </td>
  </tr>
</table>

Resolution-768

<table border="0" style="width: 100%; text-align: left; margin-top: 20px;">
  <tr>
      <td>
          <video src="https://github.com/user-attachments/assets/0bc339b9-455b-44fd-8917-80272d702737" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/70a043b9-6721-4bd9-be47-78b7ec5c27e9" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/d5dd6c09-14f3-40f8-8b6d-91e26519b8ac" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/9327e8bc-4f17-46b0-b50d-38c250a9483a" width="100%" controls autoplay loop></video>
      </td>
  </tr>
</table>

Resolution-512

<table border="0" style="width: 100%; text-align: left; margin-top: 20px;">
  <tr>
      <td>
          <video src="https://github.com/user-attachments/assets/ef407030-8062-454d-aba3-131c21e6b58c" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/7610f49e-38b6-4214-aa48-723ae4d1b07e" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/1fff0567-1e15-415c-941e-53ee8ae2c841" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/bcec48da-b91b-43a0-9d50-cf026e00fa4f" width="100%" controls autoplay loop></video>
      </td>
  </tr>
</table>

### CogVideoX-Fun-V1.1-5B-Pose

<table border="0" style="width: 100%; text-align: left; margin-top: 20px;">
  <tr>
      <td>
          Resolution-512
      </td>
      <td>
          Resolution-768
      </td>
      <td>
          Resolution-1024
      </td>
  </tr>
  <tr>
      <td>
          <video src="https://github.com/user-attachments/assets/a746df51-9eb7-4446-bee5-2ee30285c143" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/db295245-e6aa-43be-8c81-32cb411f1473" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/ec9875b2-fde0-48e1-ab7e-490cee51ef40" width="100%" controls autoplay loop></video>
      </td>
  </tr>
</table>

### CogVideoX-Fun-V1.1-2B

Resolution-768

<table border="0" style="width: 100%; text-align: left; margin-top: 20px;">
  <tr>
      <td>
          <video src="https://github.com/user-attachments/assets/03235dea-980e-4fc5-9c41-e40a5bc1b6d0" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/f7302648-5017-47db-bdeb-4d893e620b37" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/cbadf411-28fa-4b87-813d-da63ff481904" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/87cc9d0b-b6fe-4d2d-b447-174513d169ab" width="100%" controls autoplay loop></video>
      </td>
  </tr>
</table>

### CogVideoX-Fun-V1.1-2B-Pose

<table border="0" style="width: 100%; text-align: left; margin-top: 20px;">
  <tr>
      <td>
          Resolution-512
      </td>
      <td>
          Resolution-768
      </td>
      <td>
          Resolution-1024
      </td>
  </tr>
  <tr>
      <td>
          <video src="https://github.com/user-attachments/assets/487bcd7b-1b7f-4bb4-95b5-96a6b6548b3e" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/2710fd18-8489-46e4-8086-c237309ae7f6" width="100%" controls autoplay loop></video>
      </td>
      <td>
          <video src="https://github.com/user-attachments/assets/b79513db-7747-4512-b86c-94f9ca447fe2" width="100%" controls autoplay loop></video>
      </td>
  </tr>
</table>

# How to use

<h3 id="video-gen">1. Inference </h3>

#### a. Using Python Code

- Step 1: Download the corresponding [weights](#model-zoo) and place them in the models folder.
- Step 2: Modify prompt, neg_prompt, guidance_scale, and seed in the predict_t2v.py file.
- Step 3: Run the predict_t2v.py file and wait for generation to finish; the results are saved in the samples/cogvideox-fun-videos-t2v folder.
- Step 4: If you want to combine other backbones you have trained with a Lora model, modify predict_t2v.py accordingly, including Lora_path.
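
The values that Steps 2 to 4 ask you to edit can be grouped and sanity-checked before a long generation run. This sketch is purely illustrative: `T2VSettings`, its methods, and the positivity and non-negativity checks are assumptions, the actual variable names inside predict_t2v.py may differ, and it assumes a Lora checkpoint is a single file.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional


@dataclass
class T2VSettings:
    """Hypothetical container for the values edited in Steps 2-4."""

    prompt: str
    neg_prompt: str
    guidance_scale: float
    seed: int
    lora_path: Optional[str] = None  # set only when combining a trained Lora (Step 4)
    save_dir: str = "samples/cogvideox-fun-videos-t2v"  # output folder named in Step 3

    def validate(self) -> None:
        """Fail early on obviously wrong values instead of after the model loads."""
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.guidance_scale <= 0:  # assumed constraint
            raise ValueError("guidance_scale must be positive")
        if self.seed < 0:  # assumed constraint
            raise ValueError("seed must be non-negative")
        if self.lora_path is not None and not Path(self.lora_path).is_file():
            raise FileNotFoundError(f"Lora weights not found: {self.lora_path}")

    def prepare_output_dir(self) -> Path:
        """Create the output folder if needed and return it."""
        out = Path(self.save_dir)
        out.mkdir(parents=True, exist_ok=True)
        return out
```

For example, `T2VSettings(prompt="A cat playing the piano", neg_prompt="blurry", guidance_scale=6.0, seed=42).validate()` passes, while a misspelled `lora_path` fails immediately rather than after the weights have been loaded. The numbers here are illustrative, not recommended defaults.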

#### b. Using webui

- Step 1: Download the corresponding [weights](#model-zoo) and place them in the models folder.
- Step 2: Run the app.py file to open the web UI page.
- Step 3: Select the generation model on the page, fill in prompt, neg_prompt, guidance_scale, and seed, click Generate, and wait for the result; it is saved in the samples folder.

#### c. From ComfyUI

Please refer to [ComfyUI README](comfyui/README.md) for details.

### 2. Model Training

A complete CogVideoX-Fun training pipeline consists of data preprocessing and Video DiT training.

<h4 id="data-preprocess">a. Data preprocessing</h4>

We provide a simple demo of training a Lora model on image data; see the [wiki](https://github.com/aigc-apps/CogVideoX-Fun/wiki/Training-Lora) for details.

For a complete data preprocessing pipeline covering long-video segmentation, cleaning, and captioning, refer to the [README](cogvideox/video_caption/README.md) in the video caption section.

To train a text-to-image and text-to-video generation model, arrange the dataset in the following format.

```
📦 project/
├── 📂 datasets/
│   ├── 📂 internal_datasets/
│       ├── 📂 train/
│       │   ├── 📄 00000001.mp4
│       │   ├── 📄 00000002.jpg
│       │   └── 📄 .....
│       └── 📄 json_of_internal_datasets.json
```
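
Because the JSON file refers to media by paths relative to `internal_datasets/` (for example `train/00000001.mp4`), those paths can be collected automatically from the folder layout. A minimal sketch: `collect_relative_paths` and `write_path_skeleton` are hypothetical helpers, the `.jpeg`/`.png` extensions are an added assumption beyond the `.mp4`/`.jpg` files shown, and the skeleton fills in only `file_path`, so any other per-entry fields your training setup expects must be added separately.

```python
import json
from pathlib import Path

# .mp4 and .jpg appear in the tree above; .jpeg and .png are an assumption.
MEDIA_SUFFIXES = {".mp4", ".jpg", ".jpeg", ".png"}


def collect_relative_paths(dataset_root: str, subdir: str = "train") -> list:
    """List media files under dataset_root/subdir as POSIX paths relative to dataset_root."""
    root = Path(dataset_root)
    return [
        p.relative_to(root).as_posix()
        for p in sorted((root / subdir).rglob("*"))
        if p.is_file() and p.suffix.lower() in MEDIA_SUFFIXES
    ]


def write_path_skeleton(dataset_root: str, out_name: str = "json_of_internal_datasets.json") -> Path:
    """Write a JSON list with one {"file_path": ...} entry per media file."""
    entries = [{"file_path": rel} for rel in collect_relative_paths(dataset_root)]
    out = Path(dataset_root) / out_name
    out.write_text(json.dumps(entries, indent=2, ensure_ascii=False), encoding="utf-8")
    return out


# Example: write_path_skeleton("datasets/internal_datasets")
```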

The json_of_internal_datasets.json is a standard JSON file. The file_path in the JSON can be set as a relative path, as shown below:

```json
[
    {
      "file_path": "train/00000001.mp4",