Upload folder using huggingface_hub

- .gitattributes +1 -0

- LICENSE.md +58 -0

- README.md +99 -0

- comparison.png +0 -0

- feature_extractor/preprocessor_config.json +28 -0

- image_encoder/config.json +23 -0

- image_encoder/model.fp16.safetensors +3 -0

- image_encoder/model.safetensors +3 -0

- model_index.json +25 -0

- output_tile.gif +3 -0

- scheduler/scheduler_config.json +20 -0

- svd_xt.safetensors +3 -0

- svd_xt_image_decoder.safetensors +3 -0

- unet/config.json +38 -0

- unet/diffusion_pytorch_model.fp16.safetensors +3 -0

- unet/diffusion_pytorch_model.safetensors +3 -0

- vae/config.json +24 -0

- vae/diffusion_pytorch_model.fp16.safetensors +3 -0

- vae/diffusion_pytorch_model.safetensors +3 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

*.zip filter=lfs diff=lfs merge=lfs -text
*.zst filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
+output_tile.gif filter=lfs diff=lfs merge=lfs -text
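The new rule sends `output_tile.gif` through Git LFS, like the existing patterns. The sketch below roughly illustrates which paths the rules in this hunk catch. It assumes the gitattributes behaviour where a pattern without a slash is matched against the file name at any directory depth. Python's `fnmatch` only approximates gitattributes globbing, so this does not replace `git check-attr`.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns from the hunk above (only a subset of the full .gitattributes file).
LFS_PATTERNS = ("*.zip", "*.zst", "*tfevents*", "output_tile.gif")


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate check: slash-free patterns are matched against the file name only."""
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in patterns)


for path in ("output_tile.gif", "logs/events.out.tfevents.1700000000", "README.md"):
    print(f"{path}: {'LFS' if is_lfs_tracked(path) else 'regular git'}")
```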

LICENSE.md

ADDED

@@ -0,0 +1,58 @@

STABILITY AI COMMUNITY LICENSE AGREEMENT

Last Updated: July 5, 2024

1. INTRODUCTION

This Agreement applies to any individual person or entity (“You”, “Your” or “Licensee”) that uses or distributes any portion or element of the Stability AI Materials or Derivative Works thereof for any Research & Non-Commercial or Commercial purpose. Capitalized terms not otherwise defined herein are defined in Section V below.

This Agreement is intended to allow research, non-commercial, and limited commercial uses of the Models free of charge. In order to ensure that certain limited commercial uses of the Models continue to be allowed, this Agreement preserves free access to the Models for people or organizations generating annual revenue of less than US $1,000,000 (or local currency equivalent).

By clicking “I Accept” or by using or distributing or using any portion or element of the Stability Materials or Derivative Works, You agree that You have read, understood and are bound by the terms of this Agreement. If You are acting on behalf of a company, organization or other entity, then “You” includes you and that entity, and You agree that You: (i) are an authorized representative of such entity with the authority to bind such entity to this Agreement, and (ii) You agree to the terms of this Agreement on that entity’s behalf.

2. RESEARCH & NON-COMMERCIAL USE LICENSE

Subject to the terms of this Agreement, Stability AI grants You a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable and royalty-free limited license under Stability AI’s intellectual property or other rights owned by Stability AI embodied in the Stability AI Materials to use, reproduce, distribute, and create Derivative Works of, and make modifications to, the Stability AI Materials for any Research or Non-Commercial Purpose. “Research Purpose” means academic or scientific advancement, and in each case, is not primarily intended for commercial advantage or monetary compensation to You or others. “Non-Commercial Purpose” means any purpose other than a Research Purpose that is not primarily intended for commercial advantage or monetary compensation to You or others, such as personal use (i.e., hobbyist) or evaluation and testing.

3. COMMERCIAL USE LICENSE

Subject to the terms of this Agreement (including the remainder of this Section III), Stability AI grants You a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable and royalty-free limited license under Stability AI’s intellectual property or other rights owned by Stability AI embodied in the Stability AI Materials to use, reproduce, distribute, and create Derivative Works of, and make modifications to, the Stability AI Materials for any Commercial Purpose. “Commercial Purpose” means any purpose other than a Research Purpose or Non-Commercial Purpose that is primarily intended for commercial advantage or monetary compensation to You or others, including but not limited to, (i) creating, modifying, or distributing Your product or service, including via a hosted service or application programming interface, and (ii) for Your business’s or organization’s internal operations.

If You are using or distributing the Stability AI Materials for a Commercial Purpose, You must register with Stability AI at (https://stability.ai/community-license). If at any time You or Your Affiliate(s), either individually or in aggregate, generate more than USD $1,000,000 in annual revenue (or the equivalent thereof in Your local currency), regardless of whether that revenue is generated directly or indirectly from the Stability AI Materials or Derivative Works, any licenses granted to You under this Agreement shall terminate as of such date. You must request a license from Stability AI at (https://stability.ai/enterprise) , which Stability AI may grant to You in its sole discretion. If you receive Stability AI Materials, or any Derivative Works thereof, from a Licensee as part of an integrated end user product, then Section III of this Agreement will not apply to you.

4. GENERAL TERMS

Your Research, Non-Commercial, and Commercial License(s) under this Agreement are subject to the following terms.

a. Distribution & Attribution. If You distribute or make available the Stability AI Materials or a Derivative Work to a third party, or a product or service that uses any portion of them, You shall: (i) provide a copy of this Agreement to that third party, (ii) retain the following attribution notice within a "Notice" text file distributed as a part of such copies: "This Stability AI Model is licensed under the Stability AI Community License, Copyright © Stability AI Ltd. All Rights Reserved”, and (iii) prominently display “Powered by Stability AI” on a related website, user interface, blogpost, about page, or product documentation. If You create a Derivative Work, You may add your own attribution notice(s) to the “Notice” text file included with that Derivative Work, provided that You clearly indicate which attributions apply to the Stability AI Materials and state in the “Notice” text file that You changed the Stability AI Materials and how it was modified.

b. Use Restrictions. Your use of the Stability AI Materials and Derivative Works, including any output or results of the Stability AI Materials or Derivative Works, must comply with applicable laws and regulations (including Trade Control Laws and equivalent regulations) and adhere to the Documentation and Stability AI’s AUP, which is hereby incorporated by reference. Furthermore, You will not use the Stability AI Materials or Derivative Works, or any output or results of the Stability AI Materials or Derivative Works, to create or improve any foundational generative AI model (excluding the Models or Derivative Works).

c. Intellectual Property.

(i) Trademark License. No trademark licenses are granted under this Agreement, and in connection with the Stability AI Materials or Derivative Works, You may not use any name or mark owned by or associated with Stability AI or any of its Affiliates, except as required under Section IV(a) herein.

(ii) Ownership of Derivative Works. As between You and Stability AI, You are the owner of Derivative Works You create, subject to Stability AI’s ownership of the Stability AI Materials and any Derivative Works made by or for Stability AI.

(iii) Ownership of Outputs. As between You and Stability AI, You own any outputs generated from the Models or Derivative Works to the extent permitted by applicable law.

(iv) Disputes. If You or Your Affiliate(s) institute litigation or other proceedings against Stability AI (including a cross-claim or counterclaim in a lawsuit) alleging that the Stability AI Materials, Derivative Works or associated outputs or results, or any portion of any of the foregoing, constitutes infringement of intellectual property or other rights owned or licensable by You, then any licenses granted to You under this Agreement shall terminate as of the date such litigation or claim is filed or instituted. You will indemnify and hold harmless Stability AI from and against any claim by any third party arising out of or related to Your use or distribution of the Stability AI Materials or Derivative Works in violation of this Agreement.

(v) Feedback. From time to time, You may provide Stability AI with verbal and/or written suggestions, comments or other feedback related to Stability AI’s existing or prospective technology, products or services (collectively, “Feedback”). You are not obligated to provide Stability AI with Feedback, but to the extent that You do, You hereby grant Stability AI a perpetual, irrevocable, royalty-free, fully-paid, sub-licensable, transferable, non-exclusive, worldwide right and license to exploit the Feedback in any manner without restriction. Your Feedback is provided “AS IS” and You make no warranties whatsoever about any Feedback.

d. Disclaimer Of Warranty. UNLESS REQUIRED BY APPLICABLE LAW, THE STABILITY AI MATERIALS AND ANY OUTPUT AND RESULTS THEREFROM ARE PROVIDED ON AN "AS IS" BASIS, WITHOUT WARRANTIES OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, WITHOUT LIMITATION, ANY WARRANTIES OF TITLE, NON-INFRINGEMENT, MERCHANTABILITY, OR FITNESS FOR A PARTICULAR PURPOSE. YOU ARE SOLELY RESPONSIBLE FOR DETERMINING THE APPROPRIATENESS OR LAWFULNESS OF USING OR REDISTRIBUTING THE STABILITY AI MATERIALS, DERIVATIVE WORKS OR ANY OUTPUT OR RESULTS AND ASSUME ANY RISKS ASSOCIATED WITH YOUR USE OF THE STABILITY AI MATERIALS, DERIVATIVE WORKS AND ANY OUTPUT AND RESULTS.

e. Limitation Of Liability. IN NO EVENT WILL STABILITY AI OR ITS AFFILIATES BE LIABLE UNDER ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, TORT, NEGLIGENCE, PRODUCTS LIABILITY, OR OTHERWISE, ARISING OUT OF THIS AGREEMENT, FOR ANY LOST PROFITS OR ANY DIRECT, INDIRECT, SPECIAL, CONSEQUENTIAL, INCIDENTAL, EXEMPLARY OR PUNITIVE DAMAGES, EVEN IF STABILITY AI OR ITS AFFILIATES HAVE BEEN ADVISED OF THE POSSIBILITY OF ANY OF THE FOREGOING.

f. Term And Termination. The term of this Agreement will commence upon Your acceptance of this Agreement or access to the Stability AI Materials and will continue in full force and effect until terminated in accordance with the terms and conditions herein. Stability AI may terminate this Agreement if You are in breach of any term or condition of this Agreement. Upon termination of this Agreement, You shall delete and cease use of any Stability AI Materials or Derivative Works. Section IV(d), (e), and (g) shall survive the termination of this Agreement.

g. Governing Law. This Agreement will be governed by and constructed in accordance with the laws of the United States and the State of California without regard to choice of law principles, and the UN Convention on Contracts for International Sale of Goods does not apply to this Agreement.

5. DEFINITIONS

“Affiliate(s)” means any entity that directly or indirectly controls, is controlled by, or is under common control with the subject entity; for purposes of this definition, “control” means direct or indirect ownership or control of more than 50% of the voting interests of the subject entity.

"Agreement" means this Stability AI Community License Agreement.

“AUP” means the Stability AI Acceptable Use Policy available at (https://stability.ai/use-policy), as may be updated from time to time.

"Derivative Work(s)” means (a) any derivative work of the Stability AI Materials as recognized by U.S. copyright laws and (b) any modifications to a Model, and any other model created which is based on or derived from the Model or the Model’s output, including “fine tune” and “low-rank adaptation” models derived from a Model or a Model’s output, but do not include the output of any Model.

“Documentation” means any specifications, manuals, documentation, and other written information provided by Stability AI related to the Software or Models.

“Model(s)" means, collectively, Stability AI’s proprietary models and algorithms, including machine-learning models, trained model weights and other elements of the foregoing listed on Stability’s Core Models Webpage available at (https://stability.ai/core-models), as may be updated from time to time.

"Stability AI" or "we" means Stability AI Ltd. and its Affiliates.

"Software" means Stability AI’s proprietary software made available under this Agreement now or in the future.

“Stability AI Materials” means, collectively, Stability’s proprietary Models, Software and Documentation (and any portion or combination thereof) made available under this Agreement.

“Trade Control Laws” means any applicable U.S. and non-U.S. export control and trade sanctions laws and regulations.

README.md

ADDED

@@ -0,0 +1,99 @@

---
pipeline_tag: image-to-video
license: other
license_name: stable-video-diffusion-community
license_link: LICENSE.md
---

# Stable Video Diffusion Image-to-Video Model Card

<!-- Provide a quick summary of what the model is/does. -->

Stable Video Diffusion (SVD) Image-to-Video is a diffusion model that takes a still image as a conditioning frame and generates a video from it.

Please note: for commercial use, refer to https://stability.ai/license.

## Model Details

### Model Description

Stable Video Diffusion (SVD) Image-to-Video is a latent diffusion model trained to generate short video clips from a conditioning image.

This model was trained to generate 25 frames at a resolution of 576x1024, given a context frame of the same size, and was finetuned from [SVD Image-to-Video [14 frames]](https://huggingface.co/stabilityai/stable-video-diffusion-img2vid).

We also finetune the widely used [f8-decoder](https://huggingface.co/docs/diffusers/api/models/autoencoderkl#loading-from-the-original-format) for temporal consistency.

For convenience, we additionally provide the model with the standard frame-wise decoder [here](https://huggingface.co/stabilityai/stable-video-diffusion-img2vid-xt/blob/main/svd_xt_image_decoder.safetensors).
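Here is a back-of-the-envelope view of the tensor sizes for one 25-frame, 576x1024 clip. It assumes the "f8" autoencoder shrinks each spatial side by a factor of 8 and produces 4 latent channels (the `latent_channels` value in `vae/config.json`). The `(frames, channels, height, width)` layout is chosen only for illustration.

```python
def latent_shape(num_frames: int, height: int, width: int,
                 downsample: int = 8, latent_channels: int = 4) -> tuple:
    """(frames, channels, height, width) of the latent video for a given pixel size."""
    if height % downsample or width % downsample:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (num_frames, latent_channels, height // downsample, width // downsample)


frames, height, width = 25, 576, 1024
latents = latent_shape(frames, height, width)

pixel_values = frames * 3 * height * width              # RGB frames
latent_values = frames * 4 * latents[2] * latents[3]    # 4-channel latents
print(latents)                       # (25, 4, 72, 128)
print(pixel_values / latent_values)  # 48.0
```

Under these assumptions, the latent video holds 48 times fewer values than the RGB frames. This illustrates why the diffusion model operates in latent space.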

- **Developed by:** Stability AI
- **Funded by:** Stability AI
- **Model type:** Generative image-to-video model
- **Finetuned from model:** SVD Image-to-Video [14 frames]

### Model Sources

For research purposes, we recommend our `generative-models` GitHub repository (https://github.com/Stability-AI/generative-models), which implements the most popular diffusion frameworks (both training and inference).

- **Repository:** https://github.com/Stability-AI/generative-models
- **Paper:** https://stability.ai/research/stable-video-diffusion-scaling-latent-video-diffusion-models-to-large-datasets

## Evaluation

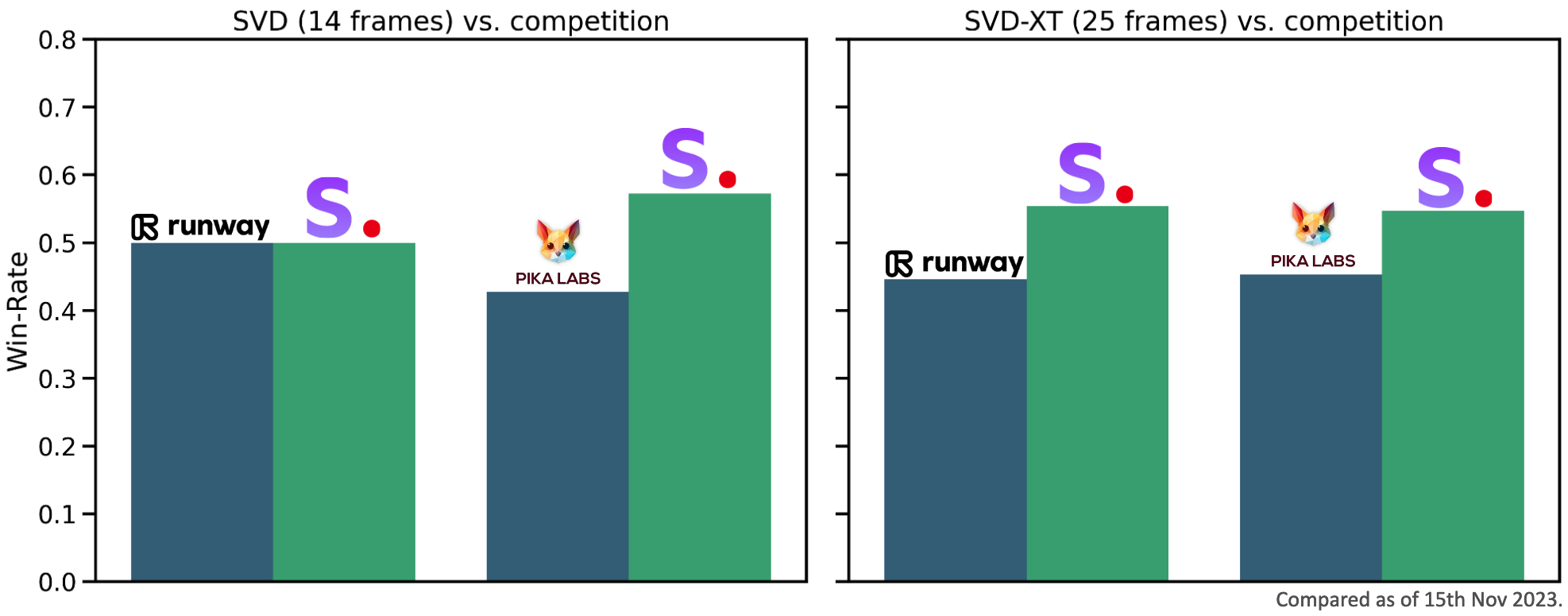

The chart above evaluates user preference for SVD-Image-to-Video over [GEN-2](https://research.runwayml.com/gen2) and [PikaLabs](https://www.pika.art/).

SVD-Image-to-Video is preferred by human voters in terms of video quality. For details on the user study, see the [research paper](https://stability.ai/research/stable-video-diffusion-scaling-latent-video-diffusion-models-to-large-datasets).

## Uses

### Direct Use

The model is intended for both non-commercial and commercial usage. You can use this model for non-commercial or research purposes under this [license](https://huggingface.co/stabilityai/stable-video-diffusion-img2vid-xt/blob/main/LICENSE.md). Possible research areas and tasks include:

- Research on generative models.
- Safe deployment of models which have the potential to generate harmful content.
- Probing and understanding the limitations and biases of generative models.
- Generation of artworks and use in design and other artistic processes.
- Applications in educational or creative tools.

For commercial use, please refer to https://stability.ai/license.

Excluded uses are described below.

### Out-of-Scope Use

The model was not trained to produce factual or true representations of people or events, so using the model to generate such content is out of scope for its abilities.
The model should not be used in any way that violates Stability AI's [Acceptable Use Policy](https://stability.ai/use-policy).

## Limitations and Bias

### Limitations

- The generated videos are short (4 seconds or less), and the model does not achieve perfect photorealism.
- The model may generate videos without motion, or with only very slow camera pans.
- The model cannot be controlled through text.
- The model cannot render legible text.
- Faces and people in general may not be generated properly.
- The autoencoding part of the model is lossy.

### Recommendations

The model is intended for both non-commercial and commercial usage.

## How to Get Started with the Model

Check out https://github.com/Stability-AI/generative-models
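The card points to `generative-models` for getting started. As an alternative, `model_index.json` in this commit declares a diffusers `StableVideoDiffusionPipeline`, so the weights should also load through diffusers.

This minimal sketch assumes:
- a diffusers release with SVD support (the configs were written by 0.24.0.dev0),
- a CUDA GPU,
- the repository id `stabilityai/stable-video-diffusion-img2vid-xt`, taken from the links in this card.

`input.png` and `generated.mp4` are placeholders. `variant="fp16"` picks up the `*.fp16.safetensors` files uploaded here. The heavy imports sit inside the function so the module can be imported without diffusers or a GPU.

```python
def generate_video(image_path: str, output_path: str = "generated.mp4", seed: int = 42) -> str:
    """Generate a 25-frame clip from a still image with SVD-XT via diffusers."""
    # Imported lazily: torch, diffusers and a GPU are only needed when generating.
    import torch
    from diffusers import StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video, load_image

    pipe = StableVideoDiffusionPipeline.from_pretrained(
        "stabilityai/stable-video-diffusion-img2vid-xt",
        torch_dtype=torch.float16,
        variant="fp16",  # loads the *.fp16.safetensors weights
    )
    pipe.to("cuda")

    # The model was trained at 576x1024 (height x width); PIL's resize takes (width, height).
    image = load_image(image_path).resize((1024, 576))

    generator = torch.manual_seed(seed)
    frames = pipe(image, decode_chunk_size=8, generator=generator).frames[0]
    export_to_video(frames, output_path, fps=7)
    return output_path
```

For example, `generate_video("input.png")` writes `generated.mp4`. The `fps` argument of `export_to_video` only sets the playback rate of the saved file.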

# Appendix:

All potential data sources that were considered were included in the final training, with none held out, because the data filtering methods proposed in the SVD paper handle quality control and filtering of the dataset. With regard to safety/NSFW filtering, the sources considered were either deemed safe or filtered with in-house NSFW filters.

No explicit human labor was involved in training data preparation. However, human evaluation of model outputs was used extensively to assess model quality and performance. The evaluations were performed on third-party contractor platforms (Amazon SageMaker, Amazon Mechanical Turk, Prolific) with fluent English-speaking contractors from various countries, primarily the USA, UK, and Canada. Each worker was paid $12/hr for the time invested in the evaluation.

No other third party was involved in the development of this model; the model was fully developed in-house at Stability AI.

Training the SVD checkpoints required a total of approximately 200,000 A100 80GB hours. Most of the training ran on 48 × 8 A100s, while some stages used more or fewer GPUs. The resulting emissions are ~19,000 kg CO2 eq., and the energy consumed is ~64,000 kWh.
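The reported compute figures can be cross-checked with a little arithmetic. The sketch below derives ratios from the rounded approximations above only. Some stages ran on a different number of GPUs, so treat the results as rough.

```python
# Reported approximations from the paragraph above.
GPU_HOURS = 200_000          # A100 80GB hours
MAIN_CLUSTER_GPUS = 48 * 8   # GPUs used for most of training
ENERGY_KWH = 64_000          # energy consumed
CO2_KG = 19_000              # emissions, kg CO2 eq.

# Wall-clock time if every GPU-hour had run on the main cluster.
wall_clock_days = GPU_HOURS / MAIN_CLUSTER_GPUS / 24
# Average power per GPU implied by the energy figure.
avg_watts_per_gpu = ENERGY_KWH / GPU_HOURS * 1000
# Carbon intensity implied by the emissions and energy figures.
kg_co2_per_kwh = CO2_KG / ENERGY_KWH

print(f"{MAIN_CLUSTER_GPUS} GPUs for ~{wall_clock_days:.1f} days")
print(f"~{avg_watts_per_gpu:.0f} W per GPU on average")
print(f"~{kg_co2_per_kwh:.2f} kg CO2 eq. per kWh")
```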

The released checkpoints (SVD/SVD-XT) are image-to-video models that generate short videos/animations closely following the given input image. Since the model relies on an existing supplied image, the potential risks of disclosing specific material or novel unsafe content are minimal. This was also evaluated by independent third-party red-teaming services, which agree with our conclusion to a high degree of confidence (>90% in various areas of safety red-teaming). External evaluations were also performed for trustworthiness, yielding >95% confidence in real, trustworthy videos.

With the default settings at the time of release, SVD takes ~100 s per generation and SVD-XT takes ~180 s on an A100 80GB card. Several optimizations that trade off quality, memory, and speed can be applied to run inference faster or on cards with less VRAM.
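In diffusers, the memory/speed trade-offs documented for this pipeline include:
- model CPU offloading,
- chunked feed-forward computation in the UNet,
- decoding fewer frames per VAE pass.

The sketch below assumes a recent diffusers release with `accelerate` installed (needed for `enable_model_cpu_offload`). It trades speed for lower VRAM use, and the imports are deferred so the function can be defined without those packages.

```python
def load_low_vram_pipeline():
    """Load SVD-XT with memory-saving options enabled (slower, but lower peak VRAM)."""
    import torch
    from diffusers import StableVideoDiffusionPipeline

    pipe = StableVideoDiffusionPipeline.from_pretrained(
        "stabilityai/stable-video-diffusion-img2vid-xt",
        torch_dtype=torch.float16,
        variant="fp16",
    )
    # Keep submodules on the CPU and move each one to the GPU only while it runs.
    pipe.enable_model_cpu_offload()
    # Compute the UNet feed-forward layers in chunks to reduce peak memory.
    pipe.unet.enable_forward_chunking()
    return pipe
```

Then call, for example, `pipe(image, decode_chunk_size=2, num_frames=25).frames[0]`. A smaller `decode_chunk_size` lowers peak memory during decoding. The diffusers docstring notes that larger chunks improve temporal consistency between frames at a higher memory cost.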

Information about the model, its development process, and usage protocols can be found in the GitHub repo, the associated research paper, and the Hugging Face model page/cards.

The released model inference & demo code has image-level watermarking enabled by default, which can be used to detect the outputs. This is done via the imWatermark Python library.

The model can be used to generate videos from static initial images. However, we prohibit unlawful, obscene, or misleading uses of the model consistent with the terms of our license and Acceptable Use Policy. For the open-weights release, our training data filtering mitigations alleviate this risk to some extent. These restrictions are explicitly enforced on user-facing interfaces at stablevideo.com, where a warning is issued. We do not take any responsibility for third-party interfaces. Submitting initial images that bypass input filters to tease out offensive or inappropriate content listed above is also prohibited. Safety filtering checks at stablevideo.com run on model inputs and outputs independently. More details on our user-facing interfaces can be found here: https://www.stablevideo.com/faq. Beyond the Acceptable Use Policy and other mitigations and conditions described here, the model is not subject to additional model behavior interventions of the type described in the Foundation Model Transparency Index.

For stablevideo.com, we store preference data in the form of upvotes/downvotes on user-generated videos, and we have a pairwise ranker that runs while a user generates videos. This usage data is solely used for improving Stability AI’s future image/video models and services. No other third-party entities are given access to the usage data beyond Stability AI and maintainers of stablevideo.com.

For usage statistics of SVD, we refer interested users to HuggingFace model download/usage statistics as a primary indicator. Third-party applications also have reported model usage statistics. We might also consider releasing aggregate usage statistics of stablevideo.com on reaching some milestones.

comparison.png

ADDED

feature_extractor/preprocessor_config.json

ADDED

@@ -0,0 +1,28 @@

{
  "crop_size": {
    "height": 224,
    "width": 224
  },
  "do_center_crop": true,
  "do_convert_rgb": true,
  "do_normalize": true,
  "do_rescale": true,
  "do_resize": true,
  "feature_extractor_type": "CLIPFeatureExtractor",
  "image_mean": [
    0.48145466,
    0.4578275,
    0.40821073
  ],
  "image_processor_type": "CLIPImageProcessor",
  "image_std": [
    0.26862954,
    0.26130258,
    0.27577711
  ],
  "resample": 3,
  "rescale_factor": 0.00392156862745098,
  "size": {
    "shortest_edge": 224
  }
}
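This config declares a CLIP-style preprocessing for the image encoder:
1. Resize so the shortest edge is 224 px (`resample: 3` is PIL's bicubic filter).
2. Center-crop to 224x224.
3. Rescale by 1/255.
4. Normalize with the listed mean and std.

The stdlib sketch below covers the size arithmetic and per-pixel normalization. The truncation of the long side follows how I understand `transformers` to compute resize sizes, so treat it as an approximation. The diffusers SVD pipeline may also do its own resizing before calling the processor.

```python
MEAN = (0.48145466, 0.4578275, 0.40821073)
STD = (0.26862954, 0.26130258, 0.27577711)
RESCALE = 0.00392156862745098  # 1 / 255
SHORTEST_EDGE = 224
CROP = 224


def resized_size(width: int, height: int, shortest_edge: int = SHORTEST_EDGE) -> tuple:
    """Scale so the shorter side equals shortest_edge, keeping the aspect ratio."""
    if width <= height:
        return shortest_edge, int(shortest_edge * height / width)
    return int(shortest_edge * width / height), shortest_edge


def center_crop_box(width: int, height: int, crop: int = CROP) -> tuple:
    """(left, top, right, bottom) of a centered crop x crop window."""
    left = (width - crop) // 2
    top = (height - crop) // 2
    return left, top, left + crop, top + crop


def normalize_pixel(rgb) -> tuple:
    """Map an 8-bit RGB triple to the normalized floats fed to the encoder."""
    return tuple((value * RESCALE - m) / s for value, m, s in zip(rgb, MEAN, STD))


w, h = resized_size(1024, 576)       # a 576x1024 conditioning frame
print((w, h), center_crop_box(w, h))  # (398, 224) (87, 0, 311, 224)
print(normalize_pixel((255, 255, 255)))
```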

image_encoder/config.json

ADDED

@@ -0,0 +1,23 @@

{
  "_name_or_path": "/home/suraj_huggingface_co/.cache/huggingface/hub/models--diffusers--svd-xt/snapshots/9703ded20c957c340781ee710b75660826deb487/image_encoder",
  "architectures": [
    "CLIPVisionModelWithProjection"
  ],
  "attention_dropout": 0.0,
  "dropout": 0.0,
  "hidden_act": "gelu",
  "hidden_size": 1280,
  "image_size": 224,
  "initializer_factor": 1.0,
  "initializer_range": 0.02,
  "intermediate_size": 5120,
  "layer_norm_eps": 1e-05,
  "model_type": "clip_vision_model",
  "num_attention_heads": 16,
  "num_channels": 3,
  "num_hidden_layers": 32,
  "patch_size": 14,
  "projection_dim": 1024,
  "torch_dtype": "float16",
  "transformers_version": "4.34.0.dev0"
}
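A few quantities follow directly from this config:
- A 224x224 input cut into 14x14 patches gives a 16x16 grid, i.e. 256 patch tokens plus one class token.
- The 1280-d hidden state is projected to 1024 dimensions, matching `cross_attention_dim` in `unet/config.json`.

This sketch assumes the standard CLIP ViT layout (a single class token). It also assumes the projected image embedding is what the UNet cross-attends to, which is how the diffusers SVD pipeline appears to use it.

```python
ENCODER = {"image_size": 224, "patch_size": 14, "hidden_size": 1280, "projection_dim": 1024}
UNET_CROSS_ATTENTION_DIM = 1024  # "cross_attention_dim" in unet/config.json

patches_per_side = ENCODER["image_size"] // ENCODER["patch_size"]
num_tokens = patches_per_side ** 2 + 1  # patch tokens + class token

print(patches_per_side, num_tokens)  # 16 257
# The projected image embedding has to match the UNet's cross-attention width.
assert ENCODER["projection_dim"] == UNET_CROSS_ATTENTION_DIM
```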

image_encoder/model.fp16.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:ae616c24393dd1854372b0639e5541666f7521cbe219669255e865cb7f89466a
size 1264217240
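Each `*.safetensors` entry in this commit is a Git LFS pointer, not the weights themselves. It consists of three `key value` lines:
- `version`: the LFS spec version,
- `oid`: the SHA-256 of the real file,
- `size`: the real file's size in bytes.

A stdlib sketch that parses such a pointer and checks a downloaded file against it follows. Any local path you pass in is a placeholder.

```python
import hashlib
from pathlib import Path

POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:ae616c24393dd1854372b0639e5541666f7521cbe219669255e865cb7f89466a
size 1264217240
"""


def parse_lfs_pointer(text: str) -> dict:
    """Split each 'key value' line of an LFS pointer into a dict."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, digest = fields["oid"].split(":", 1)
    return {"version": fields["version"], "algo": algo, "oid": digest, "size": int(fields["size"])}


def matches_pointer(path: Path, pointer: dict, chunk_size: int = 1 << 20) -> bool:
    """Compare a local file's size and SHA-256 with the pointer, reading in chunks."""
    if path.stat().st_size != pointer["size"]:
        return False
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == pointer["oid"]


info = parse_lfs_pointer(POINTER)
print(info["algo"], info["size"] / 1e9, "GB")
```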

image_encoder/model.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:ed1e5af7b4042ca30ec29999a4a5cfcac90b7fb610fd05ace834f2dcbb763eab
size 2528371296

model_index.json

ADDED

@@ -0,0 +1,25 @@

{
  "_class_name": "StableVideoDiffusionPipeline",
  "_diffusers_version": "0.24.0.dev0",
  "_name_or_path": "diffusers/svd-xt",
  "feature_extractor": [
    "transformers",
    "CLIPImageProcessor"
  ],
  "image_encoder": [
    "transformers",
    "CLIPVisionModelWithProjection"
  ],
  "scheduler": [
    "diffusers",
    "EulerDiscreteScheduler"
  ],
  "unet": [
    "diffusers",
    "UNetSpatioTemporalConditionModel"
  ],
  "vae": [
    "diffusers",
    "AutoencoderKLTemporalDecoder"
  ]
}
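`model_index.json` maps each subfolder of the repository to the library and class used to instantiate it. Keys starting with `_` are pipeline metadata. The stdlib sketch below lists the components. The JSON is inlined and abridged to the class name and component entries so the example is self-contained.

```python
import json

MODEL_INDEX = """{
  "_class_name": "StableVideoDiffusionPipeline",
  "feature_extractor": ["transformers", "CLIPImageProcessor"],
  "image_encoder": ["transformers", "CLIPVisionModelWithProjection"],
  "scheduler": ["diffusers", "EulerDiscreteScheduler"],
  "unet": ["diffusers", "UNetSpatioTemporalConditionModel"],
  "vae": ["diffusers", "AutoencoderKLTemporalDecoder"]
}"""


def components(index: dict) -> dict:
    """Map each subfolder to (library, class), skipping '_'-prefixed metadata keys."""
    return {name: tuple(spec) for name, spec in index.items() if not name.startswith("_")}


index = json.loads(MODEL_INDEX)
for folder, (library, cls) in sorted(components(index).items()):
    print(f"{folder}/ -> {library}.{cls}")
```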

output_tile.gif

ADDED

scheduler/scheduler_config.json

ADDED

@@ -0,0 +1,20 @@

{
  "_class_name": "EulerDiscreteScheduler",
  "_diffusers_version": "0.24.0.dev0",
  "beta_end": 0.012,
  "beta_schedule": "scaled_linear",
  "beta_start": 0.00085,
  "clip_sample": false,
  "interpolation_type": "linear",
  "num_train_timesteps": 1000,
  "prediction_type": "v_prediction",
  "set_alpha_to_one": false,
  "sigma_max": 700.0,
  "sigma_min": 0.002,
  "skip_prk_steps": true,
  "steps_offset": 1,
  "timestep_spacing": "leading",
  "timestep_type": "continuous",
  "trained_betas": null,
  "use_karras_sigmas": true
}
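With `use_karras_sigmas: true`, the Euler scheduler spaces its noise levels between `sigma_max` (700) and `sigma_min` (0.002) following the schedule of Karras et al. (2022). The stdlib sketch below implements that formula. It assumes rho = 7 (to my knowledge, the value diffusers uses) and leaves out scheduler-specific details such as a trailing zero sigma. The 25 steps are just an example.

```python
def karras_sigmas(n: int, sigma_min: float = 0.002, sigma_max: float = 700.0,
                  rho: float = 7.0) -> list:
    """n noise levels from sigma_max down to sigma_min, denser near sigma_min."""
    if n < 2:
        raise ValueError("need at least two steps")
    max_inv_rho = sigma_max ** (1 / rho)
    min_inv_rho = sigma_min ** (1 / rho)
    # Interpolate linearly in sigma**(1/rho) space, then map back with **rho.
    return [(max_inv_rho + i / (n - 1) * (min_inv_rho - max_inv_rho)) ** rho for i in range(n)]


sigmas = karras_sigmas(25)
print(f"first: {sigmas[0]:.1f}, last: {sigmas[-1]:.4f}, steps: {len(sigmas)}")
```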

svd_xt.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:b2652c23d64a1da5f14d55011b9b6dce55f2e72e395719f1cd1f8a079b00a451
size 9559625980

svd_xt_image_decoder.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:99aa889bf6d1ca28e026755b83ba37e3072ad79b45dd4c94fae14bee7482263b
size 9503252964

unet/config.json

ADDED

@@ -0,0 +1,38 @@

{
  "_class_name": "UNetSpatioTemporalConditionModel",
  "_diffusers_version": "0.24.0.dev0",
  "_name_or_path": "/home/suraj_huggingface_co/.cache/huggingface/hub/models--diffusers--svd-xt/snapshots/9703ded20c957c340781ee710b75660826deb487/unet",
  "addition_time_embed_dim": 256,
  "block_out_channels": [
    320,
    640,
    1280,
    1280
  ],
  "cross_attention_dim": 1024,
  "down_block_types": [
    "CrossAttnDownBlockSpatioTemporal",
    "CrossAttnDownBlockSpatioTemporal",
    "CrossAttnDownBlockSpatioTemporal",
    "DownBlockSpatioTemporal"
  ],
  "in_channels": 8,
  "layers_per_block": 2,
  "num_attention_heads": [
    5,
    10,
    20,
    20
  ],
  "num_frames": 25,
  "out_channels": 4,
  "projection_class_embeddings_input_dim": 768,
  "sample_size": 96,
  "transformer_layers_per_block": 1,
  "up_block_types": [
    "UpBlockSpatioTemporal",
    "CrossAttnUpBlockSpatioTemporal",
    "CrossAttnUpBlockSpatioTemporal",
    "CrossAttnUpBlockSpatioTemporal"
  ]
}
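Two values in this config line up with the rest of the pipeline, assuming the UNet follows the design of diffusers' `StableVideoDiffusionPipeline`:
- `in_channels: 8` is twice the VAE's 4 latent channels. This is consistent with noisy video latents being concatenated channel-wise with the (per-frame repeated) latents of the conditioning image.
- `projection_class_embeddings_input_dim: 768` equals 3 × `addition_time_embed_dim` (256). This is consistent with three scalar conditioning values embedded at 256 dimensions each.

The sketch only checks that arithmetic. 72x128 is the latent size of a 576x1024 frame, and the conditioning-value names are taken from that pipeline as an assumption.

```python
UNET = {
    "in_channels": 8,
    "out_channels": 4,
    "addition_time_embed_dim": 256,
    "projection_class_embeddings_input_dim": 768,
    "num_frames": 25,
}
VAE_LATENT_CHANNELS = 4  # "latent_channels" in vae/config.json

# Scalar conditioning values as named in diffusers' SVD pipeline (assumption).
ADDED_TIME_IDS = ("fps", "motion_bucket_id", "noise_aug_strength")


def unet_input_shape(num_frames: int, latent_h: int, latent_w: int) -> tuple:
    """Noisy latents and conditioning-image latents stacked along the channel axis."""
    noisy_channels = VAE_LATENT_CHANNELS
    image_channels = VAE_LATENT_CHANNELS  # conditioning latents, repeated for every frame
    return (num_frames, noisy_channels + image_channels, latent_h, latent_w)


shape = unet_input_shape(UNET["num_frames"], 72, 128)
assert shape[1] == UNET["in_channels"]
assert UNET["out_channels"] == VAE_LATENT_CHANNELS
assert len(ADDED_TIME_IDS) * UNET["addition_time_embed_dim"] == UNET["projection_class_embeddings_input_dim"]
print(shape)  # (25, 8, 72, 128)
```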

unet/diffusion_pytorch_model.fp16.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:9fbc02e90f37d422f5e3a4aeaee95f6629dc8c45ca211b951626e930daf2bddf
size 3049435868

unet/diffusion_pytorch_model.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:7783d82729af04f26ded4641a5952617fe331fc46add332fb9e47674fecc6ad7
size 6098682464

vae/config.json

ADDED

@@ -0,0 +1,24 @@

{
  "_class_name": "AutoencoderKLTemporalDecoder",
  "_diffusers_version": "0.24.0.dev0",
  "_name_or_path": "/home/suraj_huggingface_co/.cache/huggingface/hub/models--diffusers--svd-xt/snapshots/9703ded20c957c340781ee710b75660826deb487/vae",
  "block_out_channels": [
    128,
    256,
    512,
    512
  ],
  "down_block_types": [
    "DownEncoderBlock2D",
    "DownEncoderBlock2D",
    "DownEncoderBlock2D",
    "DownEncoderBlock2D"
  ],
  "force_upcast": true,
  "in_channels": 3,
  "latent_channels": 4,
  "layers_per_block": 2,
  "out_channels": 3,
  "sample_size": 768,
  "scaling_factor": 0.18215
}
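Two details of this config are sketched below.
- **Downsampling:** The four `block_out_channels` entries imply three 2x downsampling steps, since in diffusers' encoder every block except the last downsamples. That gives a factor of 8, so `sample_size` 768 corresponds to the UNet's `sample_size` 96.
- **Scaling factor:** By the usual latent-diffusion convention, `scaling_factor` is multiplied into latents after encoding and divided out before decoding.

Plain floats stand in for latent tensors here.

```python
VAE = {"block_out_channels": [128, 256, 512, 512], "sample_size": 768,
       "scaling_factor": 0.18215, "latent_channels": 4}
UNET_SAMPLE_SIZE = 96  # "sample_size" in unet/config.json

# Every encoder block except the last halves the spatial resolution.
downsample = 2 ** (len(VAE["block_out_channels"]) - 1)
assert VAE["sample_size"] // downsample == UNET_SAMPLE_SIZE


def to_diffusion_space(raw_latent: float) -> float:
    """Scale an encoder output before it enters the diffusion model."""
    return raw_latent * VAE["scaling_factor"]


def to_decoder_space(latent: float) -> float:
    """Undo the scaling before handing latents to the decoder."""
    return latent / VAE["scaling_factor"]


print(downsample, VAE["sample_size"] // downsample)  # 8 96
```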

vae/diffusion_pytorch_model.fp16.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:af602cd0eb4ad6086ec94fbf1438dfb1be5ec9ac03fd0215640854e90d6463a3
size 195531910

vae/diffusion_pytorch_model.safetensors

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:5d92aa595a53d9da9faf594f09910ee869d5d567c8bb0362d5095673c69997d6
size 391017740
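The pointer sizes also support a rough parameter count. An fp32 tensor stores 4 bytes per value, so dividing an fp32 `safetensors` size by 4 approximates the number of parameters. Each fp16 variant should be about half the size of its fp32 counterpart. The sketch ignores the small JSON header that `safetensors` files carry, so the counts are estimates.

```python
# (fp32 bytes, fp16 bytes) from the LFS pointers in this commit.
SIZES = {
    "image_encoder": (2_528_371_296, 1_264_217_240),
    "unet": (6_098_682_464, 3_049_435_868),
    "vae": (391_017_740, 195_531_910),
}


def approx_params(fp32_bytes: int) -> float:
    """Approximate parameter count, assuming 4 bytes per fp32 weight."""
    return fp32_bytes / 4


for name, (fp32, fp16) in SIZES.items():
    print(f"{name}: ~{approx_params(fp32) / 1e6:,.0f}M params, fp16/fp32 = {fp16 / fp32:.3f}")
```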
