Update model

Browse files- README.md +287 -1

- exp/enh_train_enh_skim_causal_small_raw/149epoch.pth +3 -0

- exp/enh_train_enh_skim_causal_small_raw/RESULTS.md +20 -0

- exp/enh_train_enh_skim_causal_small_raw/config.yaml +184 -0

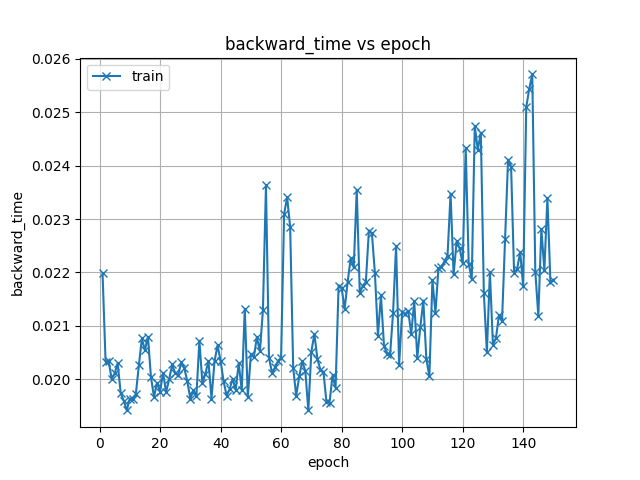

- exp/enh_train_enh_skim_causal_small_raw/images/backward_time.png +0 -0

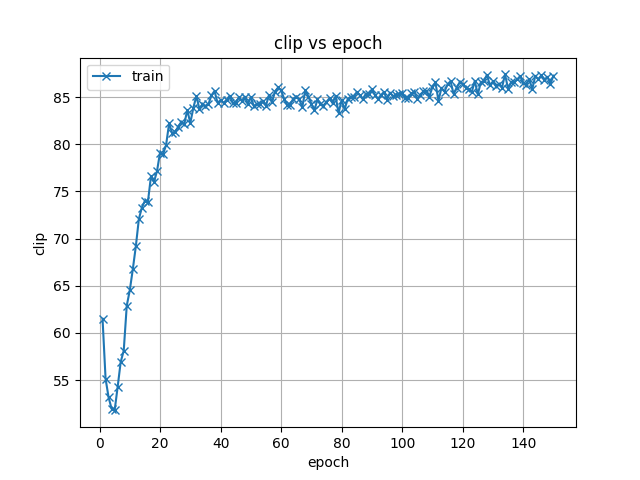

- exp/enh_train_enh_skim_causal_small_raw/images/clip.png +0 -0

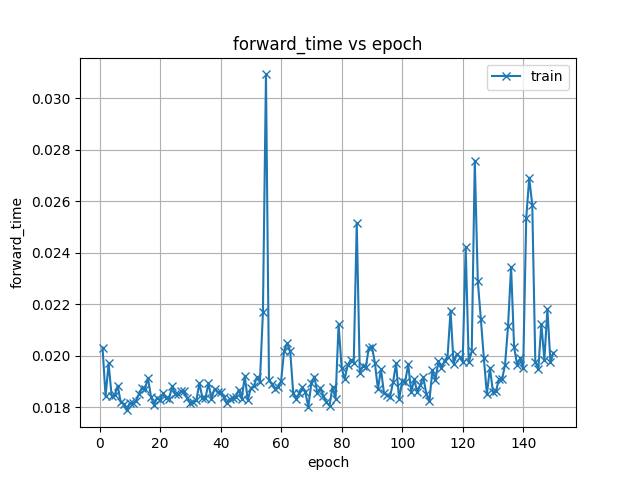

- exp/enh_train_enh_skim_causal_small_raw/images/forward_time.png +0 -0

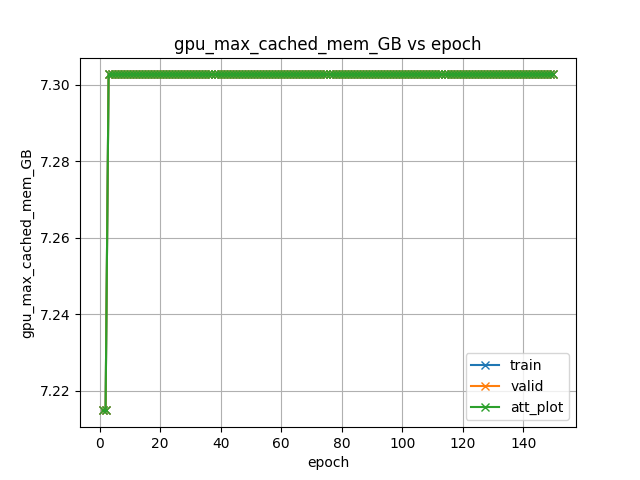

- exp/enh_train_enh_skim_causal_small_raw/images/gpu_max_cached_mem_GB.png +0 -0

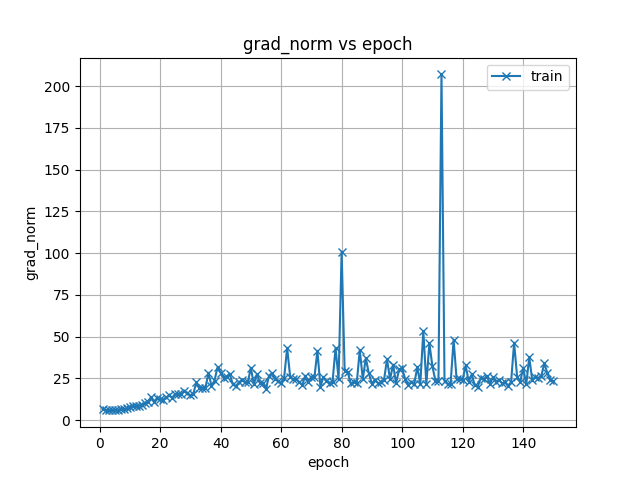

- exp/enh_train_enh_skim_causal_small_raw/images/grad_norm.png +0 -0

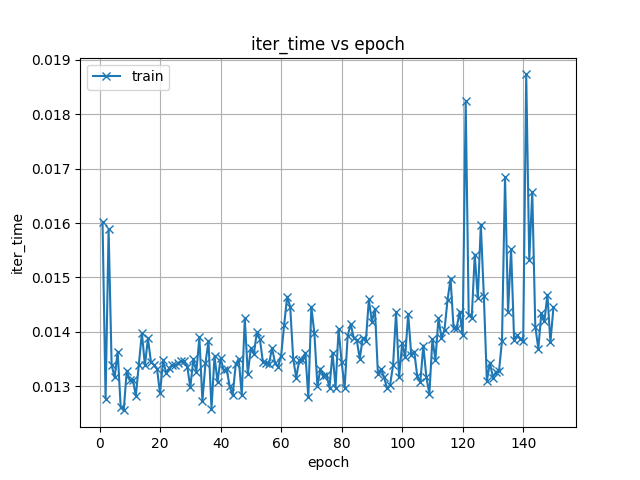

- exp/enh_train_enh_skim_causal_small_raw/images/iter_time.png +0 -0

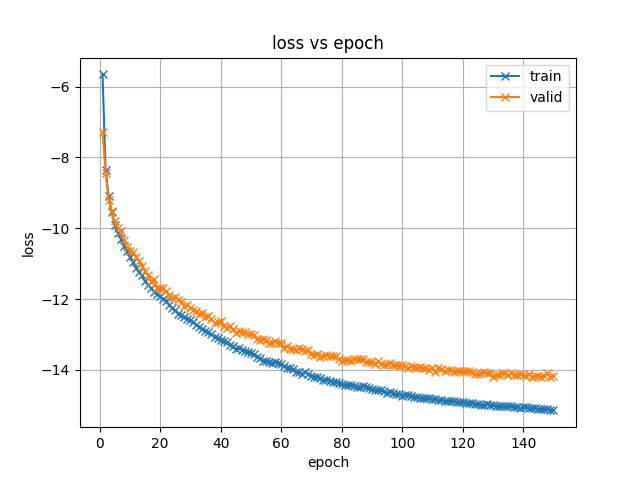

- exp/enh_train_enh_skim_causal_small_raw/images/loss.png +0 -0

- exp/enh_train_enh_skim_causal_small_raw/images/loss_scale.png +0 -0

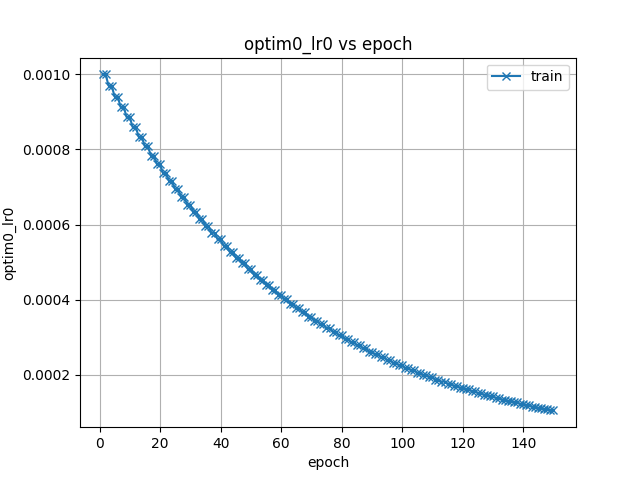

- exp/enh_train_enh_skim_causal_small_raw/images/optim0_lr0.png +0 -0

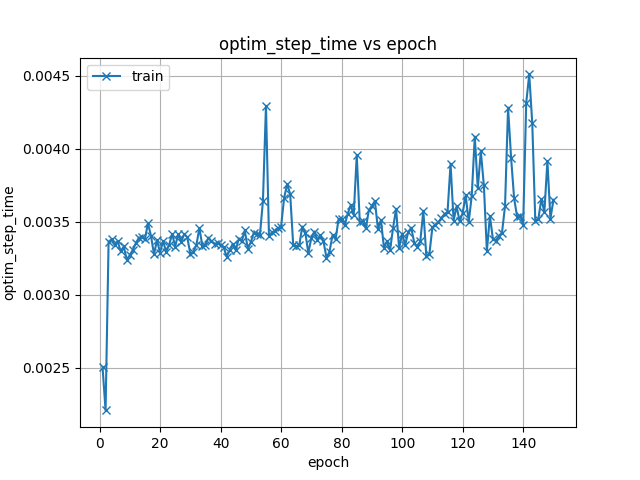

- exp/enh_train_enh_skim_causal_small_raw/images/optim_step_time.png +0 -0

- exp/enh_train_enh_skim_causal_small_raw/images/si_snr_loss.png +0 -0

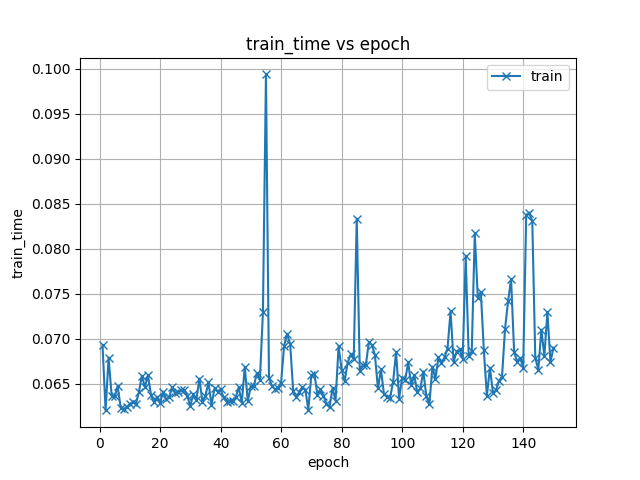

- exp/enh_train_enh_skim_causal_small_raw/images/train_time.png +0 -0

- meta.yaml +8 -0

README.md (CHANGED, @@ -1,3 +1,289 @@)

---
tags:
- espnet
- audio
- audio-to-audio
language: en
datasets:
- wsj0_2mix
license: cc-by-4.0
---

## ESPnet2 ENH model

### `lichenda/wsj0_2mix_skim_small_causal`

This model, a small causal SkiM (skipping-memory) separator for two-speaker mixtures sampled at 8 kHz, was trained by Chenda Li using the wsj0_2mix recipe in [espnet](https://github.com/espnet/espnet/).

### Demo: How to use in ESPnet2

Follow the [ESPnet installation instructions](https://espnet.github.io/espnet/installation.html) if you haven't done that already.

```bash
# Run from the directory that contains your ESPnet clone
cd espnet
# Check out the ESPnet commit listed under Environments below
git checkout 3897ed8380bfb526d5c5dd2197eccbffbba7d8f8
pip install -e .
cd egs2/wsj0_2mix/enh1
# Skip training and run the remaining recipe stages with the pretrained model.
# Data preparation still runs, so the WSJ0 corpus must be available locally (path set in db.sh).
./run.sh --skip_data_prep false --skip_train true --download_model lichenda/wsj0_2mix_skim_small_causal
```

<!-- Generated by ./scripts/utils/show_enh_score.sh -->
# RESULTS
## Environments
- date: `Wed May 10 20:30:26 CST 2023`
- python version: `3.9.16 (main, Mar 8 2023, 14:00:05) [GCC 11.2.0]`
- espnet version: `espnet 202304`
- pytorch version: `pytorch 2.0.1`
- Git hash: `3897ed8380bfb526d5c5dd2197eccbffbba7d8f8`
- Commit date: `Tue May 9 13:27:37 2023 +0800`

## enh_train_enh_skim_causal_small_raw

config: conf/tuning/train_enh_skim_causal_small.yaml

|dataset|STOI (%)|SAR (dB)|SDR (dB)|SIR (dB)|SI_SNR (dB)|
|---|---|---|---|---|---|
|enhanced_cv_min_8k|93.41|15.54|14.92|24.87|14.51|
|enhanced_tt_min_8k|94.20|15.00|14.33|24.18|13.92|

Rows are the wsj0_2mix validation (`cv`) and test (`tt`) sets, `min` version at 8 kHz.
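The SI_SNR column reports scale-invariant SNR, the same quantity the config optimizes through its `si_snr` criterion with a PIT wrapper. A minimal pure-Python sketch of the standard definition follows: remove the mean from both signals, project the estimate onto the reference, and compare the energy of that projection with the energy of the residual. `si_snr` is a hypothetical helper for illustration, not ESPnet's implementation, and it scores a single estimate/reference pair without the permutation search that PIT adds.

```python
import math
from typing import Sequence


def si_snr(estimate: Sequence[float], reference: Sequence[float], eps: float = 1e-8) -> float:
    """Scale-invariant SNR in dB (standard definition; hypothetical helper, not ESPnet's API)."""
    if len(estimate) != len(reference) or not reference:
        raise ValueError("estimate and reference must be non-empty and equally long")
    # Remove the DC offset so constant shifts do not affect the score.
    est_mean = sum(estimate) / len(estimate)
    ref_mean = sum(reference) / len(reference)
    est = [x - est_mean for x in estimate]
    ref = [x - ref_mean for x in reference]
    # Project the estimate onto the reference: s_target = (<est, ref> / ||ref||^2) * ref
    dot = sum(e * r for e, r in zip(est, ref))
    scale = dot / (sum(r * r for r in ref) + eps)
    target = [scale * r for r in ref]
    # Whatever the scaled reference does not explain counts as distortion.
    residual = [e - t for e, t in zip(est, target)]
    target_energy = sum(t * t for t in target)
    residual_energy = sum(n * n for n in residual)
    return 10.0 * math.log10((target_energy + eps) / (residual_energy + eps))
```

Because the estimate is projected onto the reference first, multiplying the estimate by any nonzero gain leaves the score unchanged, which is why this measure suits separated outputs whose overall level is arbitrary.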

## ENH config

<details><summary>expand</summary>

```
config: conf/tuning/train_enh_skim_causal_small.yaml
print_config: false
log_level: INFO
dry_run: false
iterator_type: chunk
output_dir: exp/enh_train_enh_skim_causal_small_raw
ngpu: 1
seed: 0
num_workers: 4
num_att_plot: 3
dist_backend: nccl
dist_init_method: env://
dist_world_size: null
dist_rank: null
local_rank: 0
dist_master_addr: null
dist_master_port: null
dist_launcher: null
multiprocessing_distributed: false
unused_parameters: false
sharded_ddp: false
cudnn_enabled: true
cudnn_benchmark: false
cudnn_deterministic: true
collect_stats: false
write_collected_feats: false
max_epoch: 150
patience: 50
val_scheduler_criterion:
- valid
- loss
early_stopping_criterion:
- valid
- loss
- min
best_model_criterion:
-   - valid
    - si_snr_loss
    - min
-   - valid
    - loss
    - min
keep_nbest_models: 1
nbest_averaging_interval: 0
grad_clip: 5.0
grad_clip_type: 2.0
grad_noise: false
accum_grad: 1
no_forward_run: false
resume: true
train_dtype: float32
use_amp: false
log_interval: null
use_matplotlib: true
use_tensorboard: true
create_graph_in_tensorboard: false
use_wandb: false
wandb_project: null
wandb_id: null
wandb_entity: null
wandb_name: null
wandb_model_log_interval: -1
detect_anomaly: false
pretrain_path: null
init_param: []
ignore_init_mismatch: false
freeze_param: []
num_iters_per_epoch: null
batch_size: 16
valid_batch_size: null
batch_bins: 1000000
valid_batch_bins: null
train_shape_file:
- exp/enh_stats_8k/train/speech_mix_shape
- exp/enh_stats_8k/train/speech_ref1_shape
- exp/enh_stats_8k/train/speech_ref2_shape
valid_shape_file:
- exp/enh_stats_8k/valid/speech_mix_shape
- exp/enh_stats_8k/valid/speech_ref1_shape
- exp/enh_stats_8k/valid/speech_ref2_shape
batch_type: folded
valid_batch_type: null
fold_length:
- 80000
- 80000
- 80000
sort_in_batch: descending
sort_batch: descending
multiple_iterator: false
chunk_length: 32000,16000,8000
chunk_shift_ratio: 0.5
num_cache_chunks: 1024
chunk_excluded_key_prefixes: []
train_data_path_and_name_and_type:
-   - dump/raw/tr_min_8k/wav.scp
    - speech_mix
    - sound
-   - dump/raw/tr_min_8k/spk1.scp
    - speech_ref1
    - sound
-   - dump/raw/tr_min_8k/spk2.scp
    - speech_ref2
    - sound
valid_data_path_and_name_and_type:
-   - dump/raw/cv_min_8k/wav.scp
    - speech_mix
    - sound
-   - dump/raw/cv_min_8k/spk1.scp
    - speech_ref1
    - sound
-   - dump/raw/cv_min_8k/spk2.scp
    - speech_ref2
    - sound
allow_variable_data_keys: false
max_cache_size: 0.0
max_cache_fd: 32
valid_max_cache_size: null
exclude_weight_decay: false
exclude_weight_decay_conf: {}
optim: adam
optim_conf:
    lr: 0.001
    eps: 1.0e-08
    weight_decay: 0
scheduler: steplr
scheduler_conf:
    step_size: 2
    gamma: 0.97
init: xavier_uniform
model_conf:
    stft_consistency: false
    loss_type: mask_mse
    mask_type: null
criterions:
-   name: si_snr
    conf: {}
    wrapper: pit
    wrapper_conf:
        weight: 1.0
        independent_perm: true
speech_volume_normalize: null
rir_scp: null
rir_apply_prob: 1.0
noise_scp: null
noise_apply_prob: 1.0
noise_db_range: '13_15'
short_noise_thres: 0.5
use_reverberant_ref: false
num_spk: 1
num_noise_type: 1
sample_rate: 8000
force_single_channel: false
dynamic_mixing: false
utt2spk: null
dynamic_mixing_gain_db: 0.0
encoder: conv
encoder_conf:
    channel: 128
    kernel_size: 8
    stride: 4
separator: skim
separator_conf:
    causal: true
    num_spk: 2
    layer: 3
    nonlinear: relu
    unit: 384
    segment_size: 50
    dropout: 0.0
    mem_type: hc
    seg_overlap: false
decoder: conv
decoder_conf:
    channel: 128
    kernel_size: 8
    stride: 4
mask_module: multi_mask
mask_module_conf: {}
preprocessor: null
preprocessor_conf: {}
required:
- output_dir
version: '202304'
distributed: false
```

</details>
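Several settings in the config translate into concrete time scales and a learning-rate schedule. The sketch below works them out at the config's 8 kHz sampling rate under stated assumptions: the learning rate follows PyTorch's `StepLR` closed form (`base_lr * gamma ** (last_epoch // step_size)`) with one scheduler step per epoch, the conv encoder is treated as an unpadded strided 1-D convolution, and SkiM's `segment_size` is taken to count encoder frames. The helper names `lr_at_epoch`, `num_frames` and `summary` are hypothetical.

```python
from typing import Dict

SAMPLE_RATE = 8000                    # sample_rate
LR0, GAMMA, STEP = 1e-3, 0.97, 2      # optim_conf.lr, scheduler_conf.gamma, scheduler_conf.step_size
KERNEL, STRIDE = 8, 4                 # encoder_conf.kernel_size, encoder_conf.stride
SEGMENT_SIZE = 50                     # separator_conf.segment_size (assumed: encoder frames)
CHUNK_LENGTHS = [32000, 16000, 8000]  # chunk_length, in samples


def lr_at_epoch(epoch: int) -> float:
    """Learning rate during a 1-indexed epoch, assuming one StepLR step after each epoch."""
    if epoch < 1:
        raise ValueError("epochs are 1-indexed")
    return LR0 * GAMMA ** ((epoch - 1) // STEP)


def num_frames(num_samples: int) -> int:
    """Frame count of an unpadded strided convolution (assumption about the encoder)."""
    if num_samples < KERNEL:
        return 0
    return (num_samples - KERNEL) // STRIDE + 1


def summary() -> Dict[str, object]:
    hop_ms = 1000.0 * STRIDE / SAMPLE_RATE
    return {
        "hop_ms": hop_ms,                                # time between encoder frames
        "window_ms": 1000.0 * KERNEL / SAMPLE_RATE,      # span of one encoder window
        "segment_ms": SEGMENT_SIZE * hop_ms,             # span of one SkiM segment
        "chunk_seconds": [n / SAMPLE_RATE for n in CHUNK_LENGTHS],
        "frames_per_chunk": [num_frames(n) for n in CHUNK_LENGTHS],
        "lr_epoch_149": lr_at_epoch(149),                # epoch of the saved 149epoch.pth
    }


if __name__ == "__main__":
    for key, value in summary().items():
        print(f"{key}: {value}")
```

Under these assumptions the encoder hops 0.5 ms per frame over a 1 ms window, a 50-frame SkiM segment spans 25 ms, the 32000/16000/8000-sample training chunks last 4, 2 and 1 s, and by epoch 149 the learning rate has decayed by 0.97^74, to roughly a tenth of its initial value.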

### Citing ESPnet

```bibtex
@inproceedings{watanabe2018espnet,
  author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},
  title={{ESPnet}: End-to-End Speech Processing Toolkit},
  year={2018},
  booktitle={Proceedings of Interspeech},
  pages={2207--2211},
  doi={10.21437/Interspeech.2018-1456},
  url={http://dx.doi.org/10.21437/Interspeech.2018-1456}
}

@inproceedings{ESPnet-SE,
  author = {Chenda Li and Jing Shi and Wangyou Zhang and Aswin Shanmugam Subramanian and Xuankai Chang and
    Naoyuki Kamo and Moto Hira and Tomoki Hayashi and Christoph B{\"{o}}ddeker and Zhuo Chen and Shinji Watanabe},
  title = {ESPnet-SE: End-To-End Speech Enhancement and Separation Toolkit Designed for {ASR} Integration},
  booktitle = {{IEEE} Spoken Language Technology Workshop, {SLT} 2021, Shenzhen, China, January 19-22, 2021},
  pages = {785--792},
  publisher = {{IEEE}},
  year = {2021},
  url = {https://doi.org/10.1109/SLT48900.2021.9383615},
  doi = {10.1109/SLT48900.2021.9383615},
  timestamp = {Mon, 12 Apr 2021 17:08:59 +0200},
  biburl = {https://dblp.org/rec/conf/slt/Li0ZSCKHHBC021.bib},
  bibsource = {dblp computer science bibliography, https://dblp.org}
}
```

or arXiv:

```bibtex
@misc{watanabe2018espnet,
  title={ESPnet: End-to-End Speech Processing Toolkit},
  author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Yalta and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},
  year={2018},
  eprint={1804.00015},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```

exp/enh_train_enh_skim_causal_small_raw/149epoch.pth (ADDED, @@ -0,0 +1,3 @@)

version https://git-lfs.github.com/spec/v1
oid sha256:5aa8ece7fda5c0a5fdf2d37540bd12fe80f7ad38834462e500d8c3722de532ff
size 31528663
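The .pth entry is a Git LFS pointer rather than the checkpoint itself: the 31,528,663-byte (about 31.5 MB) model file lives in LFS storage and is identified by its SHA-256 digest. A minimal sketch of checking a downloaded checkpoint against such a pointer, by parsing the pointer's `key value` lines and then comparing the file's size and SHA-256; `parse_lfs_pointer` and `matches_pointer` are hypothetical helpers, not part of git-lfs or ESPnet.

```python
import hashlib
import os
from typing import Dict

# Pointer text as committed for 149epoch.pth
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:5aa8ece7fda5c0a5fdf2d37540bd12fe80f7ad38834462e500d8c3722de532ff
size 31528663
"""


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Split each 'key value' line of a Git LFS pointer into a dict entry."""
    fields: Dict[str, str] = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if not fields.get("version", "").startswith("https://git-lfs.github.com/spec/"):
        raise ValueError("not a Git LFS pointer")
    return fields


def matches_pointer(path: str, pointer_text: str, block_size: int = 1 << 20) -> bool:
    """Return True if the file's byte size and SHA-256 match the pointer."""
    fields = parse_lfs_pointer(pointer_text)
    algo, _, expected_hex = fields["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    # Cheap size check first, then hash the file in blocks to bound memory use.
    if os.path.getsize(path) != int(fields["size"]):
        return False
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest() == expected_hex
```

For example, `matches_pointer("149epoch.pth", POINTER)` would return True only for the exact checkpoint file; a file that is still just the pointer text fails the size check.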

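exp/enh_train_enh_skim_causal_small_raw/RESULTS.md (ADDED, @@ -0,0 +1,20 @@): the 20 added lines duplicate the RESULTS section of README.md (Environments and the score table).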


exp/enh_train_enh_skim_causal_small_raw/config.yaml (ADDED, @@ -0,0 +1,184 @@): the 184 added lines duplicate the config shown under "ENH config" in README.md.


- exp/enh_train_enh_skim_causal_small_raw/images/backward_time.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/clip.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/forward_time.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/gpu_max_cached_mem_GB.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/grad_norm.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/iter_time.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/loss.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/loss_scale.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/optim0_lr0.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/optim_step_time.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/si_snr_loss.png (ADDED)
- exp/enh_train_enh_skim_causal_small_raw/images/train_time.png (ADDED)

meta.yaml (ADDED, @@ -0,0 +1,8 @@)

espnet: '202304'
files:
    model_file: exp/enh_train_enh_skim_causal_small_raw/149epoch.pth
python: "3.9.16 (main, Mar 8 2023, 14:00:05) \n[GCC 11.2.0]"
timestamp: 1684303881.014626
torch: 2.0.1
yaml_files:
    train_config: exp/enh_train_enh_skim_causal_small_raw/config.yaml
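The `timestamp` field in meta.yaml is a Unix time in seconds; reading it as the moment the model was packed for upload is an assumption. A short sketch converting it to calendar time in UTC and in UTC+08:00, the offset used by the commit date under Environments (`describe` is a hypothetical helper):

```python
from datetime import datetime, timedelta, timezone

PACK_TIMESTAMP = 1684303881.014626  # `timestamp` field from meta.yaml


def describe(ts: float) -> str:
    """Format a Unix timestamp in UTC and in UTC+08:00 (fractional seconds dropped)."""
    utc = datetime.fromtimestamp(ts, tz=timezone.utc)
    plus8 = utc.astimezone(timezone(timedelta(hours=8)))  # matches the "+0800" commit offset
    return f"{utc:%Y-%m-%d %H:%M:%S} UTC / {plus8:%Y-%m-%d %H:%M:%S} UTC+08:00"


if __name__ == "__main__":
    print(describe(PACK_TIMESTAMP))
```

This places the packing on 2023-05-17, about a week after the date recorded in the RESULTS section (May 10, 2023).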