Commit ff5cc82 · Parent(s): a430b6b

Update README.md

README.md CHANGED

@@ -11,46 +11,27 @@ tags:

|

|

| 11 |

|

| 12 |

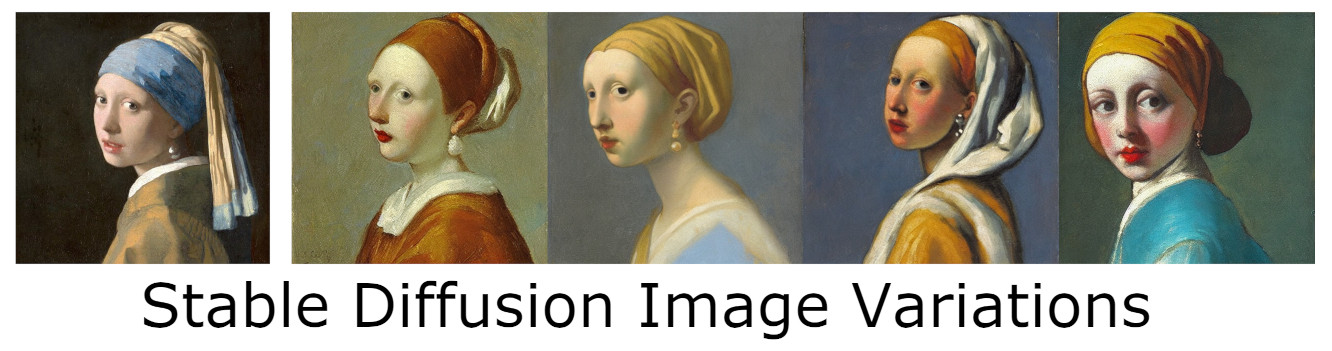

# Stable Diffusion Image Variations Model Card

|

| 13 |


| 14 |

This version of Stable Diffusion has been fine-tuned from [CompVis/stable-diffusion-v1-3-original](https://huggingface.co/CompVis/stable-diffusion-v-1-3-original) to accept CLIP image embeddings rather than text embeddings. This allows the creation of "image variations" similar to DALLE-2 using Stable Diffusion. This version of the weights has been ported to Hugging Face Diffusers; using it with the Diffusers library requires the [Lambda Diffusers repo](https://github.com/LambdaLabsML/lambda-diffusers).

|

| 15 |

|

| 16 |

|

| 17 |

|

| 18 |

## Example

|

| 19 |

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

```bash

|

| 23 |

-

git clone https://github.com/LambdaLabsML/lambda-diffusers.git

|

| 24 |

-

cd lambda-diffusers

|

| 25 |

-

python -m venv .venv

|

| 26 |

-

source .venv/bin/activate

|

| 27 |

-

pip install -r requirements.txt

|

| 28 |

-

```

|

| 29 |

-

|

| 30 |

-

Then run the following Python code:

|

| 31 |

|

| 32 |

```python

|

| 33 |

-

from pathlib import Path

|

| 34 |

-

from lambda_diffusers import StableDiffusionImageEmbedPipeline

|

| 35 |

from PIL import Image

|

| 36 |

-

import torch

|

| 37 |

|

| 38 |

-

device = "cuda"

|

| 39 |

-

|

| 40 |

-

|

| 41 |

-

|

| 42 |

-

|

| 43 |

-

num_samples = 4

|

| 44 |

-

image = pipe(num_samples*[im], guidance_scale=3.0)

|

| 45 |

-

image = image["sample"]

|

| 46 |

-

|

| 47 |

-

base_path = Path("outputs/im2im")

|

| 48 |

-

base_path.mkdir(exist_ok=True, parents=True)

|

| 49 |

-

for idx, im in enumerate(image):

|

| 50 |

-

    im.save(base_path/f"{idx:06}.jpg")

|

| 51 |

```

|

| 52 |

|

| 53 |

-

|

| 54 |

# Training

|

| 55 |

|

| 56 |

**Training Data**

|

|

@@ -140,4 +121,48 @@ Specifically, the checker compares the class probability of harmful concepts in

|

|

| 140 |

The concepts are passed into the model with the generated image and compared to a hand-engineered weight for each NSFW concept.

|

| 141 |

|

| 142 |


| 143 |

*This model card was written by: Justin Pinkney and is based on the [Stable Diffusion model card](https://huggingface.co/CompVis/stable-diffusion-v1-4).*

|

|

|

|

| 11 |

|

| 12 |

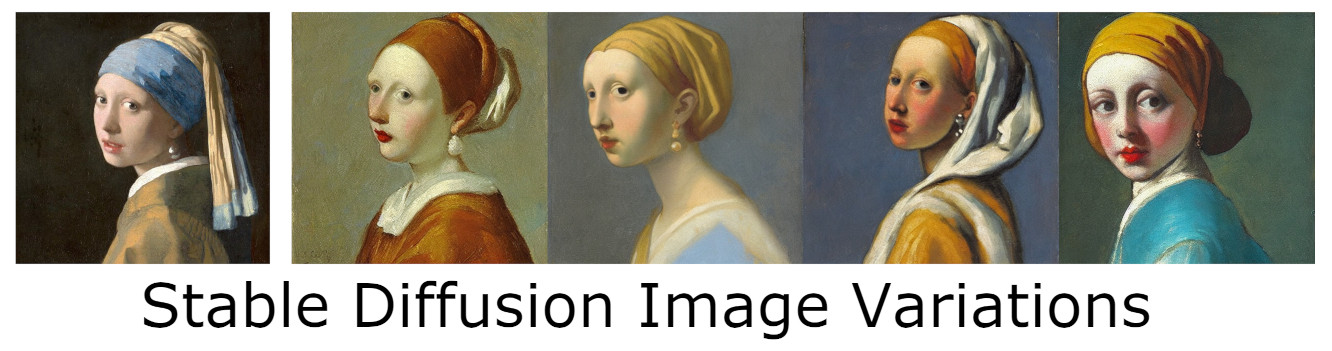

# Stable Diffusion Image Variations Model Card

|

| 13 |

|

| 14 |

+

🧨🎉 Image Variations is now natively supported in 🤗 Diffusers! 🎉🧨

|

| 15 |

+

|

| 16 |

This version of Stable Diffusion has been fine-tuned from [CompVis/stable-diffusion-v1-3-original](https://huggingface.co/CompVis/stable-diffusion-v-1-3-original) to accept CLIP image embeddings rather than text embeddings. This allows the creation of "image variations" similar to DALLE-2 using Stable Diffusion. This version of the weights has been ported to Hugging Face Diffusers; using it with the Diffusers library requires the [Lambda Diffusers repo](https://github.com/LambdaLabsML/lambda-diffusers).

|

| 17 |

|

| 18 |

|

| 19 |

|

| 20 |

## Example

|

| 21 |

|

| 22 |

+

Make sure you are using Diffusers version >=0.8.0 (for older versions, see the old instructions at the bottom of this model card).


| 23 |

|

| 24 |

```python

|

| 25 |

+

from diffusers import StableDiffusionImageVariationPipeline

|

|

|

|

| 26 |

from PIL import Image

|

|

|

|

| 27 |

|

| 28 |

+

device = "cuda:0"

|

| 29 |

+

sd_pipe = StableDiffusionImageVariationPipeline.from_pretrained("lambdalabs/sd-image-variations-diffusers")

|

| 30 |

+

sd_pipe = sd_pipe.to(device)

|

| 31 |

+

out = sd_pipe(image=Image.open("path/to/image.jpg"))

|

| 32 |

+

out["images"][0].save("result.jpg")


| 33 |

```
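The version requirement stated before this example (Diffusers >=0.8.0) can be checked before choosing which set of instructions to follow. The following is a minimal sketch, not part of the model card: the helper names `parse_version`, `needs_old_instructions`, and `installed_diffusers_version` are hypothetical, and the comparison ignores pre-release suffixes such as `.dev0` or `rc1`.

```python
from importlib import metadata


def parse_version(version: str) -> tuple:
    """Return the leading numeric components of a version string.

    '0.8.0.dev0' -> (0, 8, 0); pre-release suffixes are ignored (an assumption
    made to keep this sketch dependency-free).
    """
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def needs_old_instructions(version: str, minimum: str = "0.8.0") -> bool:
    """Return True when `version` is older than `minimum`."""
    v, m = parse_version(version), parse_version(minimum)
    # Pad with zeros so that '0.8' compares equal to '0.8.0'.
    width = max(len(v), len(m))
    v += (0,) * (width - len(v))
    m += (0,) * (width - len(m))
    return v < m


def installed_diffusers_version():
    """Return the installed diffusers version string, or None if it is not installed."""
    try:
        return metadata.version("diffusers")
    except metadata.PackageNotFoundError:
        return None
```

Calling `needs_old_instructions(installed_diffusers_version())` when the second function returns a version string indicates whether the native pipeline or the older lambda-diffusers route applies.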

|

| 34 |

|

|

|

|

| 35 |

# Training

|

| 36 |

|

| 37 |

**Training Data**

|

|

|

|

| 121 |

The concepts are passed into the model with the generated image and compared to a hand-engineered weight for each NSFW concept.

|

| 122 |

|

| 123 |

|

| 124 |

+

## Old instructions

|

| 125 |

+

|

| 126 |

+

If you are using a diffusers version <0.8.0, there is no `StableDiffusionImageVariationPipeline`;

|

| 127 |

+

in this case you need to use an older revision (`2ddbd90b14bc5892c19925b15185e561bc8e5d0a`) in conjunction with the lambda-diffusers repo:

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

First clone [Lambda Diffusers](https://github.com/LambdaLabsML/lambda-diffusers) and install any requirements (in a virtual environment in the example below):

|

| 131 |

+

|

| 132 |

+

```bash

|

| 133 |

+

git clone https://github.com/LambdaLabsML/lambda-diffusers.git

|

| 134 |

+

cd lambda-diffusers

|

| 135 |

+

python -m venv .venv

|

| 136 |

+

source .venv/bin/activate

|

| 137 |

+

pip install -r requirements.txt

|

| 138 |

+

```

|

| 139 |

+

|

| 140 |

+

Then run the following Python code:

|

| 141 |

+

|

| 142 |

+

```python

|

| 143 |

+

from pathlib import Path

|

| 144 |

+

from lambda_diffusers import StableDiffusionImageEmbedPipeline

|

| 145 |

+

from PIL import Image

|

| 146 |

+

import torch

|

| 147 |

+

|

| 148 |

+

device = "cuda" if torch.cuda.is_available() else "cpu"

|

| 149 |

+

pipe = StableDiffusionImageEmbedPipeline.from_pretrained(

|

| 150 |

+

    "lambdalabs/sd-image-variations-diffusers",

|

| 151 |

+

    revision="2ddbd90b14bc5892c19925b15185e561bc8e5d0a",

|

| 152 |

+

)

|

| 153 |

+

pipe = pipe.to(device)

|

| 154 |

+

|

| 155 |

+

im = Image.open("your/input/image/here.jpg")

|

| 156 |

+

num_samples = 4

|

| 157 |

+

image = pipe(num_samples*[im], guidance_scale=3.0)

|

| 158 |

+

image = image["sample"]

|

| 159 |

+

|

| 160 |

+

base_path = Path("outputs/im2im")

|

| 161 |

+

base_path.mkdir(exist_ok=True, parents=True)

|

| 162 |

+

for idx, im in enumerate(image):

|

| 163 |

+

    im.save(base_path/f"{idx:06}.jpg")

|

| 164 |

+

```
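The saving loop above names each output with a zero-padded, six-digit index (`{idx:06}`). As a small illustration of that naming scheme only, the sketch below builds the same file paths without running the model; the helper name `variation_paths` is hypothetical and not part of either library.

```python
from pathlib import Path


def variation_paths(base_dir, count, suffix=".jpg"):
    """Build output paths such as outputs/im2im/000000.jpg for `count` samples."""
    base = Path(base_dir)
    # {idx:06} pads the index with zeros to six digits, so files sort in order.
    return [base / f"{idx:06}{suffix}" for idx in range(count)]


paths = variation_paths("outputs/im2im", 4)
for path in paths:
    print(path.name)
```

With `num_samples = 4`, this yields four paths under `outputs/im2im`, matching the files the loop would write.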

|

| 165 |

+

|

| 166 |

+

|

| 167 |

+

|

| 168 |

*This model card was written by: Justin Pinkney and is based on the [Stable Diffusion model card](https://huggingface.co/CompVis/stable-diffusion-v1-4).*

|