Update README.md

README.md

CHANGED

|

@@ -22,21 +22,16 @@ pip install diffusers transformers

|

|

| 22 |

### Text to image

|

| 23 |

|

| 24 |

```python

|

| 25 |

-

from diffusers import

|

| 26 |

import torch

|

| 27 |

|

| 28 |

-

|

| 29 |

-

|

| 30 |

-

|

| 31 |

-

t2i_pipe = DiffusionPipeline.from_pretrained("kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16)

|

| 32 |

-

t2i_pipe.to("cuda")

|

| 33 |

|

| 34 |

prompt = "A alien cheeseburger creature eating itself, claymation, cinematic, moody lighting"

|

| 35 |

negative_prompt = "low quality, bad quality"

|

| 36 |

|

| 37 |

-

|

| 38 |

-

|

| 39 |

-

image = t2i_pipe(prompt, image_embeds=image_embeds, negative_image_embeds=negative_image_embeds).images[0]

|

| 40 |

image.save("cheeseburger_monster.png")

|

| 41 |

```

|

| 42 |

|

|

@@ -46,43 +41,27 @@ image.save("cheeseburger_monster.png")

|

|

| 46 |

### Text Guided Image-to-Image Generation

|

| 47 |

|

| 48 |

```python

|

| 49 |

-

from diffusers import

|

| 50 |

import torch

|

| 51 |

-

|

| 52 |

-

from PIL import Image

|

| 53 |

import requests

|

| 54 |

from io import BytesIO

|

|

|

|

|

|

|

| 55 |

|

| 56 |

-

|

| 57 |

-

response = requests.get(url)

|

| 58 |

-

original_image = Image.open(BytesIO(response.content)).convert("RGB")

|

| 59 |

-

original_image = original_image.resize((768, 512))

|

| 60 |

-

|

| 61 |

-

# create prior

|

| 62 |

-

pipe_prior = KandinskyPriorPipeline.from_pretrained(

|

| 63 |

-

"kandinsky-community/kandinsky-2-1-prior", torch_dtype=torch.float16

|

| 64 |

-

)

|

| 65 |

-

pipe_prior.to("cuda")

|

| 66 |

|

| 67 |

-

|

| 68 |

-

pipe = KandinskyImg2ImgPipeline.from_pretrained("kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16)

|

| 69 |

-

pipe.to("cuda")

|

| 70 |

|

| 71 |

prompt = "A fantasy landscape, Cinematic lighting"

|

| 72 |

negative_prompt = "low quality, bad quality"

|

| 73 |

|

| 74 |

-

|

| 75 |

-

|

| 76 |

-

|

| 77 |

-

|

| 78 |

-

|

| 79 |

-

image_embeds=image_embeds,

|

| 80 |

-

negative_image_embeds=negative_image_embeds,

|

| 81 |

-

height=768,

|

| 82 |

-

width=768,

|

| 83 |

-

strength=0.3,

|

| 84 |

-

)

|

| 85 |

|

|

|

|

| 86 |

out.images[0].save("fantasy_land.png")

|

| 87 |

```

|

| 88 |

|

|

@@ -92,41 +71,27 @@ out.images[0].save("fantasy_land.png")

|

|

| 92 |

### Text Guided Inpainting Generation

|

| 93 |

|

| 94 |

```python

|

| 95 |

-

from diffusers import

|

| 96 |

from diffusers.utils import load_image

|

| 97 |

import torch

|

| 98 |

import numpy as np

|

| 99 |

|

| 100 |

-

|

| 101 |

-

|

| 102 |

-

)

|

| 103 |

-

pipe_prior.to("cuda")

|

| 104 |

|

| 105 |

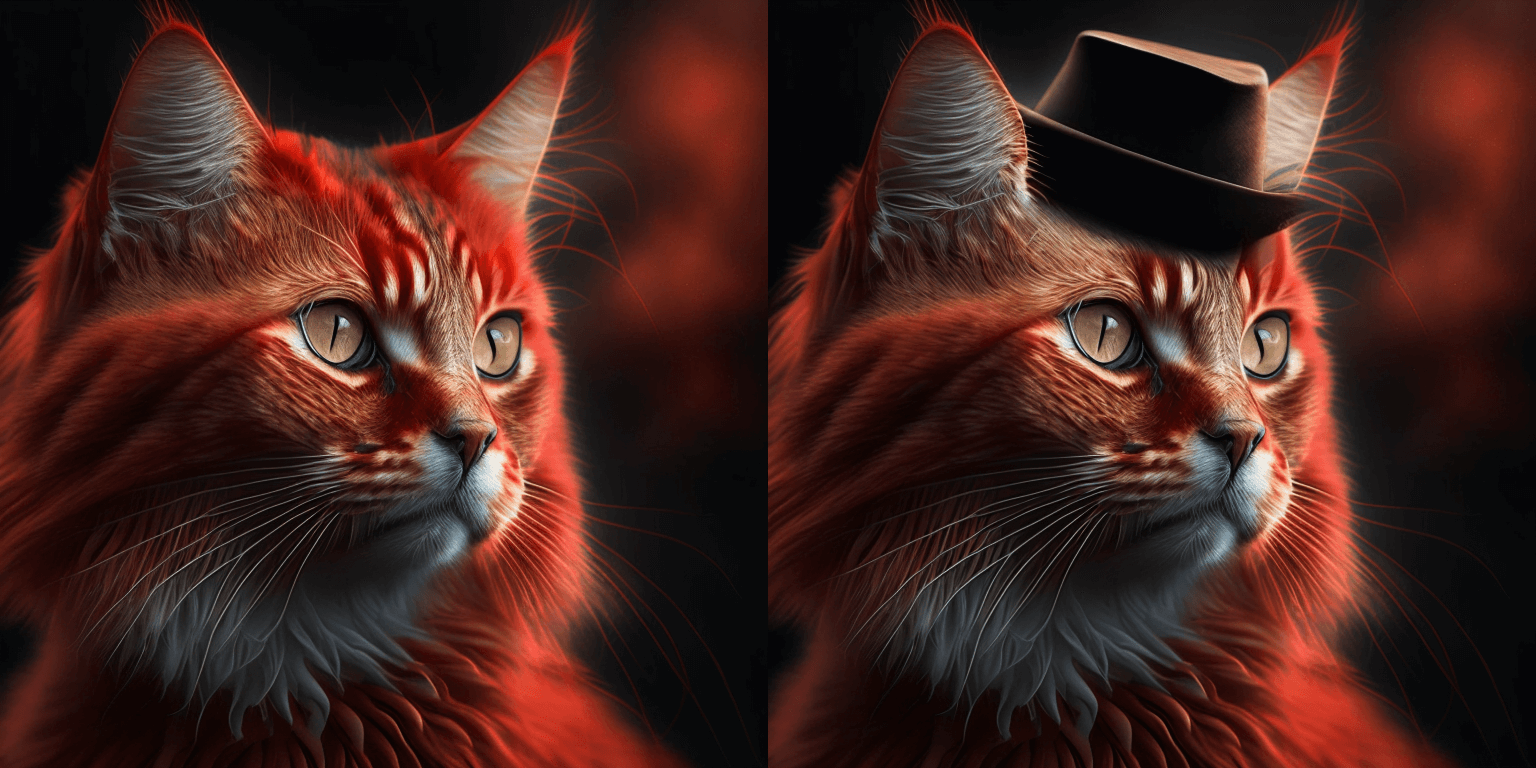

prompt = "a hat"

|

| 106 |

-

|

| 107 |

-

|

| 108 |

-

pipe = KandinskyInpaintPipeline.from_pretrained("kandinsky-community/kandinsky-2-1-inpaint", torch_dtype=torch.float16)

|

| 109 |

-

pipe.to("cuda")

|

| 110 |

|

| 111 |

-

|

| 112 |

"https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main" "/kandinsky/cat.png"

|

| 113 |

)

|

| 114 |

|

| 115 |

-

mask = np.

|

| 116 |

# Let's mask out an area above the cat's head

|

| 117 |

-

mask[:250, 250:-250] =

|

| 118 |

-

|

| 119 |

-

out = pipe(

|

| 120 |

-

prompt,

|

| 121 |

-

image=init_image,

|

| 122 |

-

mask_image=mask,

|

| 123 |

-

**prior_output,

|

| 124 |

-

height=768,

|

| 125 |

-

width=768,

|

| 126 |

-

num_inference_steps=150,

|

| 127 |

-

)

|

| 128 |

|

| 129 |

-

image =

|

| 130 |

image.save("cat_with_hat.png")

|

| 131 |

```

|

| 132 |

|

|

@@ -173,6 +138,20 @@ image.save("starry_cat.png")

|

|

| 173 |

```

|

| 174 |

|

| 175 |

|

| 176 |

|

| 177 |

## Model Architecture

|

| 178 |

|

|

|

|

| 22 |

### Text to image

|

| 23 |

|

| 24 |

```python

|

| 25 |

+

from diffusers import AutoPipelineForText2Image

|

| 26 |

import torch

|

| 27 |

|

| 28 |

+

pipe = AutoPipelineForText2Image.from_pretrained("kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16)

|

| 29 |

+

pipe.enable_model_cpu_offload()

|

|

|

|

|

|

|

|

|

|

| 30 |

|

| 31 |

prompt = "A alien cheeseburger creature eating itself, claymation, cinematic, moody lighting"

|

| 32 |

negative_prompt = "low quality, bad quality"

|

| 33 |

|

| 34 |

+

image = pipe(prompt=prompt, negative_prompt=negative_prompt, prior_guidance_scale=1.0, height=768, width=768).images[0]

|

|

|

|

|

|

|

| 35 |

image.save("cheeseburger_monster.png")

|

| 36 |

```

|

| 37 |

|

|

|

|

| 41 |

### Text Guided Image-to-Image Generation

|

| 42 |

|

| 43 |

```python

|

| 44 |

+

from diffusers import AutoPipelineForImage2Image

|

| 45 |

import torch

|

|

|

|

|

|

|

| 46 |

import requests

|

| 47 |

from io import BytesIO

|

| 48 |

+

from PIL import Image

|

| 49 |

+

import os

|

| 50 |

|

| 51 |

+

pipe = AutoPipelineForImage2Image.from_pretrained("kandinsky-community/kandinsky-2-1", torch_dtype=torch.float16)

|

| 52 |

|

| 53 |

+

pipe.enable_model_cpu_offload()

|

|

|

|

|

|

|

| 54 |

|

| 55 |

prompt = "A fantasy landscape, Cinematic lighting"

|

| 56 |

negative_prompt = "low quality, bad quality"

|

| 57 |

|

| 58 |

+

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

|

| 59 |

+

|

| 60 |

+

response = requests.get(url)

|

| 61 |

+

original_image = Image.open(BytesIO(response.content)).convert("RGB")

|

| 62 |

+

original_image.thumbnail((768, 768))

|

| 63 |

|

| 64 |

+

image = pipe(prompt=prompt, image=original_image, strength=0.3).images[0]

|

| 65 |

image.save("fantasy_land.png")

|

| 66 |

```

|

| 67 |

|

|

|

|

| 71 |

### Text Guided Inpainting Generation

|

| 72 |

|

| 73 |

```python

|

| 74 |

+

from diffusers import AutoPipelineForInpainting

|

| 75 |

from diffusers.utils import load_image

|

| 76 |

import torch

|

| 77 |

import numpy as np

|

| 78 |

|

| 79 |

+

pipe = AutoPipelineForInpainting.from_pretrained("kandinsky-community/kandinsky-2-1-inpaint", torch_dtype=torch.float16)

|

| 80 |

+

pipe.enable_model_cpu_offload()

|

|

|

|

|

|

|

| 81 |

|

| 82 |

prompt = "a hat"

|

| 83 |

+

negative_prompt = "low quality, bad quality"

|

|

|

|

|

|

|

|

|

|

| 84 |

|

| 85 |

+

original_image = load_image(

|

| 86 |

"https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main" "/kandinsky/cat.png"

|

| 87 |

)

|

| 88 |

|

| 89 |

+

mask = np.zeros((768, 768), dtype=np.float32)

|

| 90 |

# Let's mask out an area above the cat's head

|

| 91 |

+

mask[:250, 250:-250] = 1

|

| 92 |

+

|

| 93 |

|

| 94 |

+

image = pipe(prompt=prompt, image=original_image, mask_image=mask).images[0]

|

| 95 |

image.save("cat_with_hat.png")

|

| 96 |

```
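
The mask in the inpainting example is hard-coded to `(768, 768)`, which only works when the input image has that size. The following is a minimal sketch of building the same rectangular mask for an arbitrary size, using the white-is-masked convention (1.0 marks the area to repaint). `make_rect_mask` is a hypothetical helper name used for illustration; it is not part of diffusers.

```python
import numpy as np


def make_rect_mask(height, width, top, bottom, left, right):
    """Hypothetical helper: float32 mask with 1.0 inside the rectangle to repaint."""
    mask = np.zeros((height, width), dtype=np.float32)
    # Rows top..bottom-1 and columns left..right-1 are marked for repainting.
    mask[top:bottom, left:right] = 1.0
    return mask


# Same region as `mask[:250, 250:-250] = 1` on a 768x768 image.
mask = make_rect_mask(768, 768, top=0, bottom=250, left=250, right=768 - 250)
print(mask.shape, mask.dtype)
```

Passing the image's own height and width keeps the mask aligned with the image if a different input is used.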

|

| 97 |

|

|

|

|

| 138 |

```

|

| 139 |

|

| 140 |

|

| 141 |

+

__<font color=red>Breaking change on the mask input:</font>__

|

| 142 |

+

|

| 143 |

+

We introduced a breaking change for the Kandinsky inpainting pipeline in the following pull request: https://github.com/huggingface/diffusers/pull/4207. Previously we accepted a mask format where black pixels represent the masked-out area. We now use white pixels to represent the masked-out area instead, so that all our pipelines share a unified mask format.

|

| 144 |

+

Please upgrade your inpainting code to follow the above. If you are using Kandinsky Inpaint in production, you now need to change the mask to:

|

| 145 |

+

|

| 146 |

+

```python

|

| 147 |

+

# For PIL input

|

| 148 |

+

import PIL.ImageOps

|

| 149 |

+

mask = PIL.ImageOps.invert(mask)

|

| 150 |

+

|

| 151 |

+

# For PyTorch and Numpy input

|

| 152 |

+

mask = 1 - mask

|

| 153 |

+

```
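
As a concrete illustration of the NumPy case, here is a small sketch that converts a mask written for the old convention (0 marks the area to repaint) into the new one (1 marks it). `invert_legacy_mask` is a hypothetical helper, not a diffusers function, and it assumes mask values lie in `[0, 1]`.

```python
import numpy as np


def invert_legacy_mask(mask):
    """Hypothetical helper: flip a [0, 1] mask from black-is-masked to white-is-masked."""
    mask = np.asarray(mask, dtype=np.float32)
    # Assumption: values are normalized to [0, 1]; reject anything else.
    if mask.min() < 0.0 or mask.max() > 1.0:
        raise ValueError("expected mask values in [0, 1]")
    return 1.0 - mask


legacy = np.ones((4, 4), dtype=np.float32)
legacy[:2, 1:3] = 0.0  # old convention: 0 (black) marks the area to repaint
converted = invert_legacy_mask(legacy)
print(converted)
```

The range check is a design choice for this sketch: a mask stored as 0 to 255 would produce negative values under `1 - mask`, so it is rejected rather than silently inverted incorrectly.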

|

| 154 |

+

|

| 155 |

|

| 156 |

## Model Architecture

|

| 157 |

|