Commit 13ed643

1 Parent(s): dfae973

Fixed bug

removed binary suspected files

- README.txt +1 -0

- convert.py +113 -0

- train.py +14 -0

- train/F1_curve.png +0 -0

- train/PR_curve.png +0 -0

- train/P_curve.png +0 -0

- train/R_curve.png +0 -0

- train/args.yaml +97 -0

- train/confusion_matrix.png +0 -0

- train/confusion_matrix_normalized.png +0 -0

- weights/best.pt → train/events.out.tfevents.1687303014.jongkook90-desktop.37493.0 +2 -2

- train/labels.jpg +0 -0

- train/labels_correlogram.jpg +0 -0

- train/results.csv +4 -0

- train/results.png +0 -0

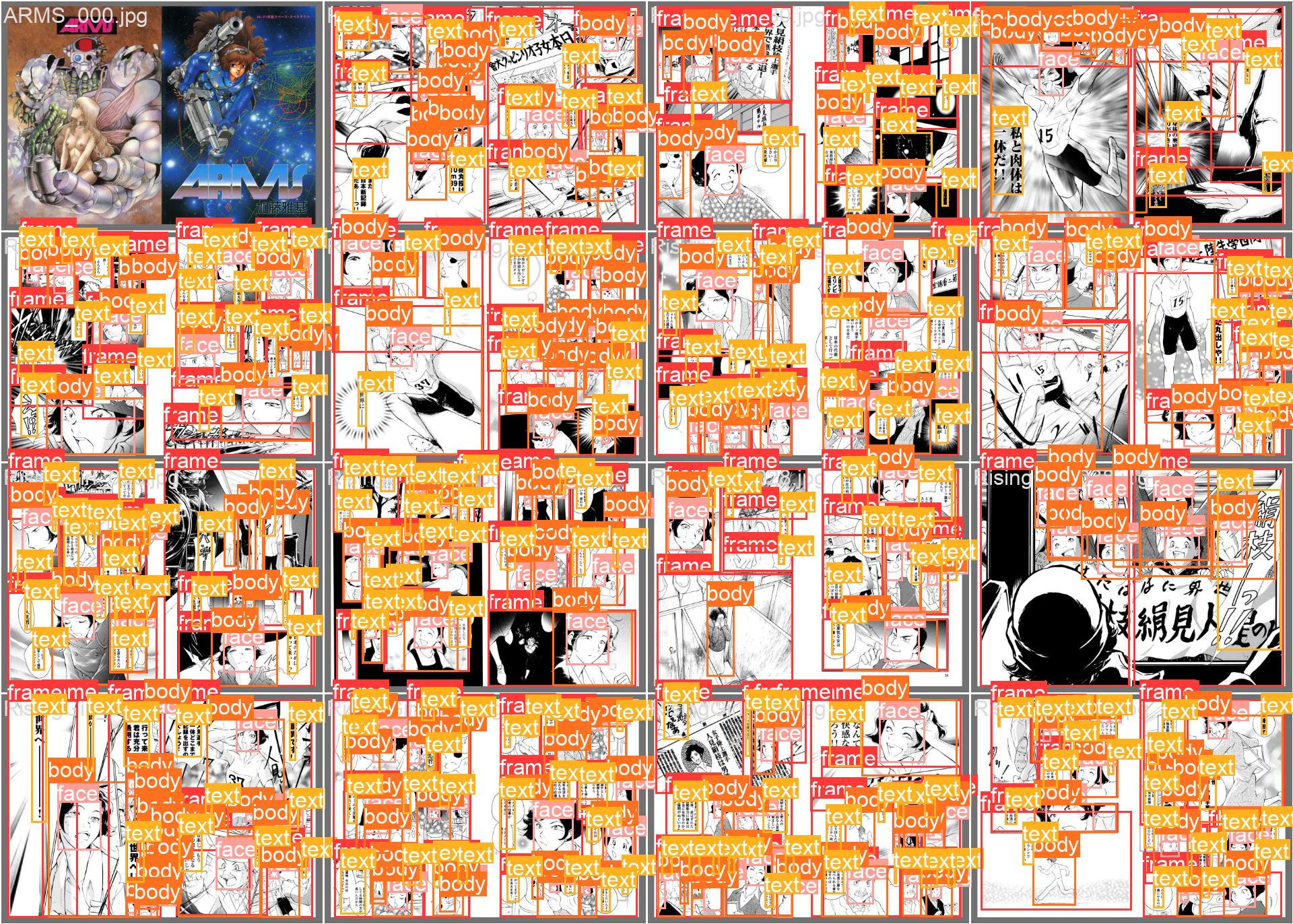

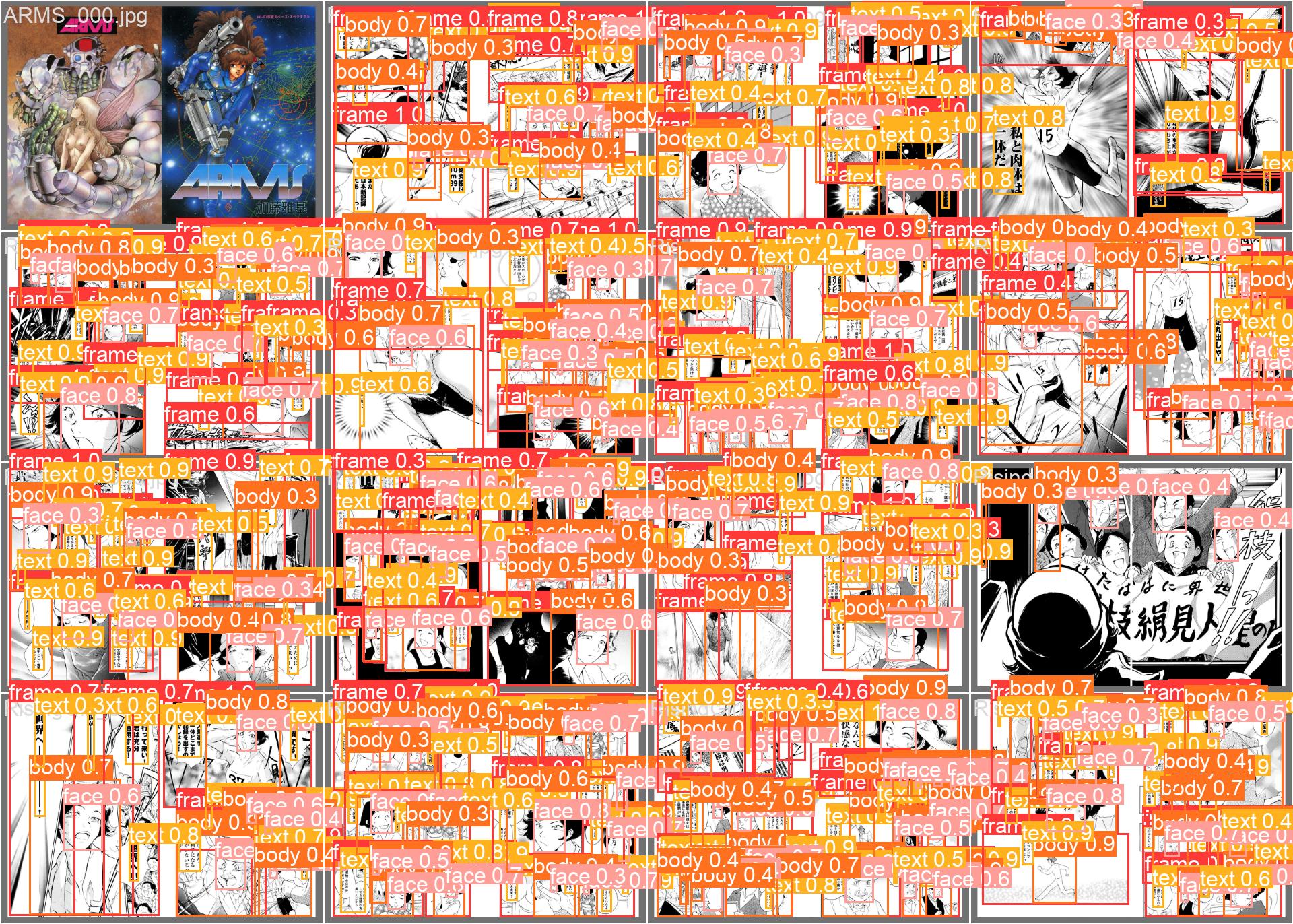

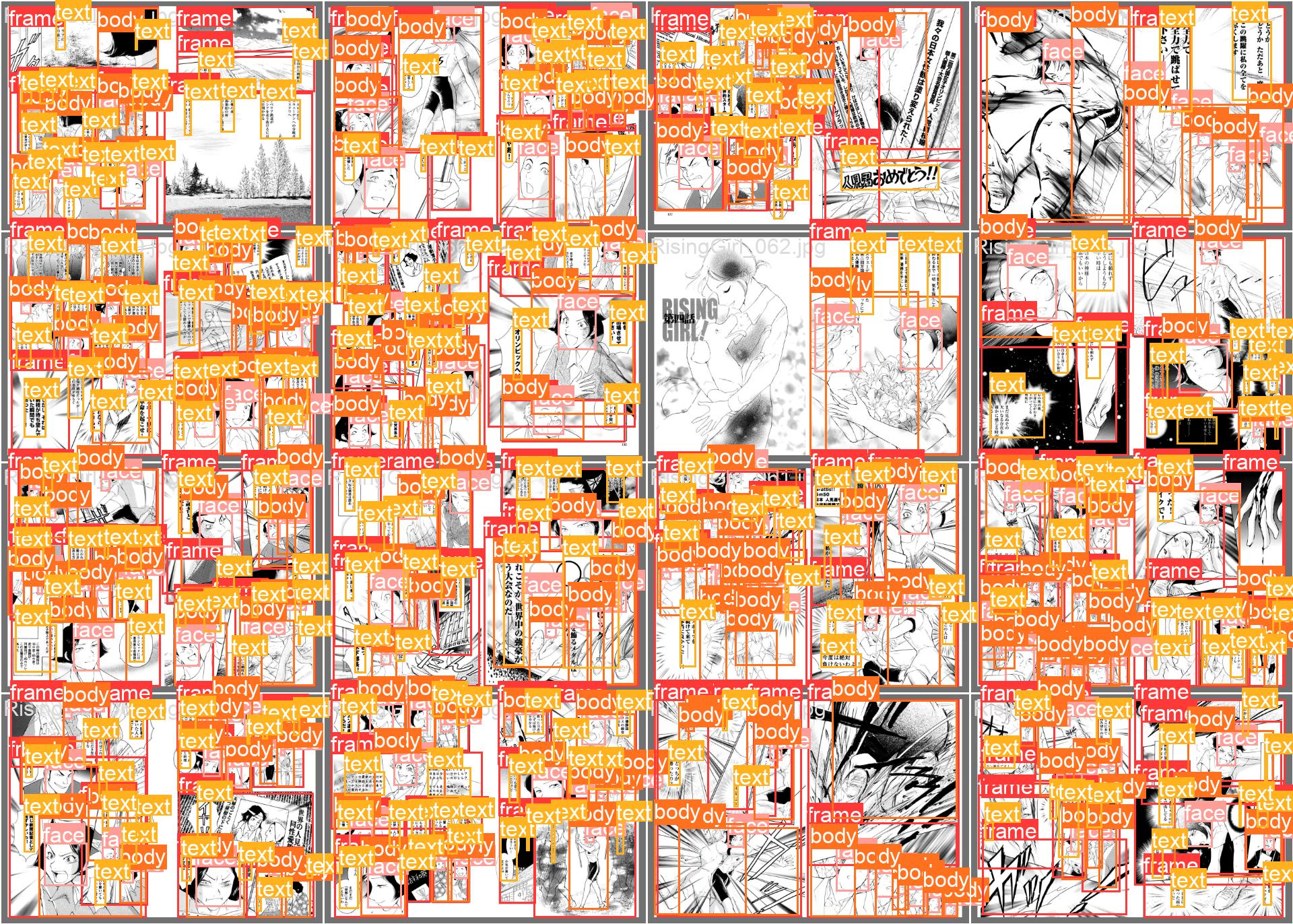

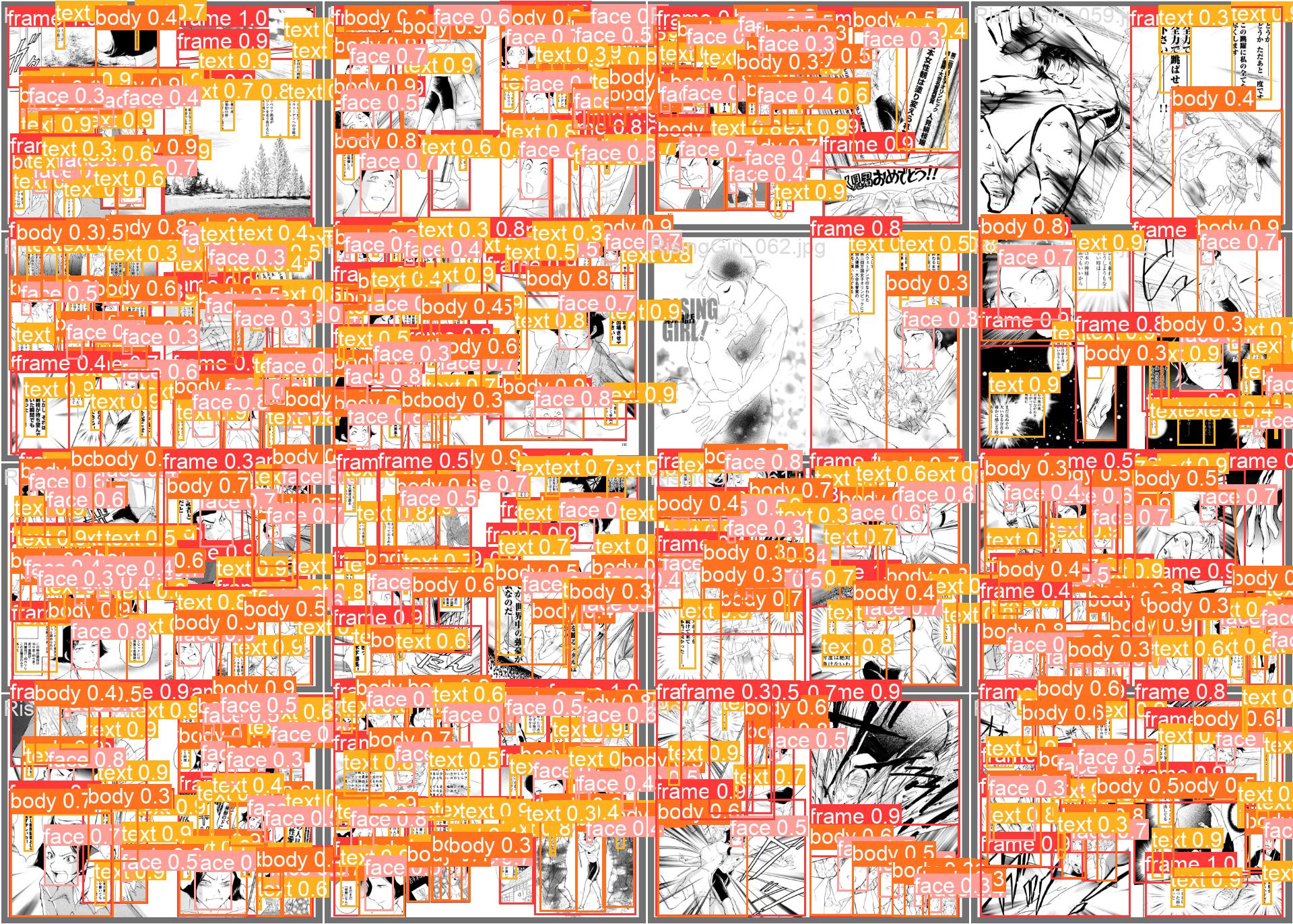

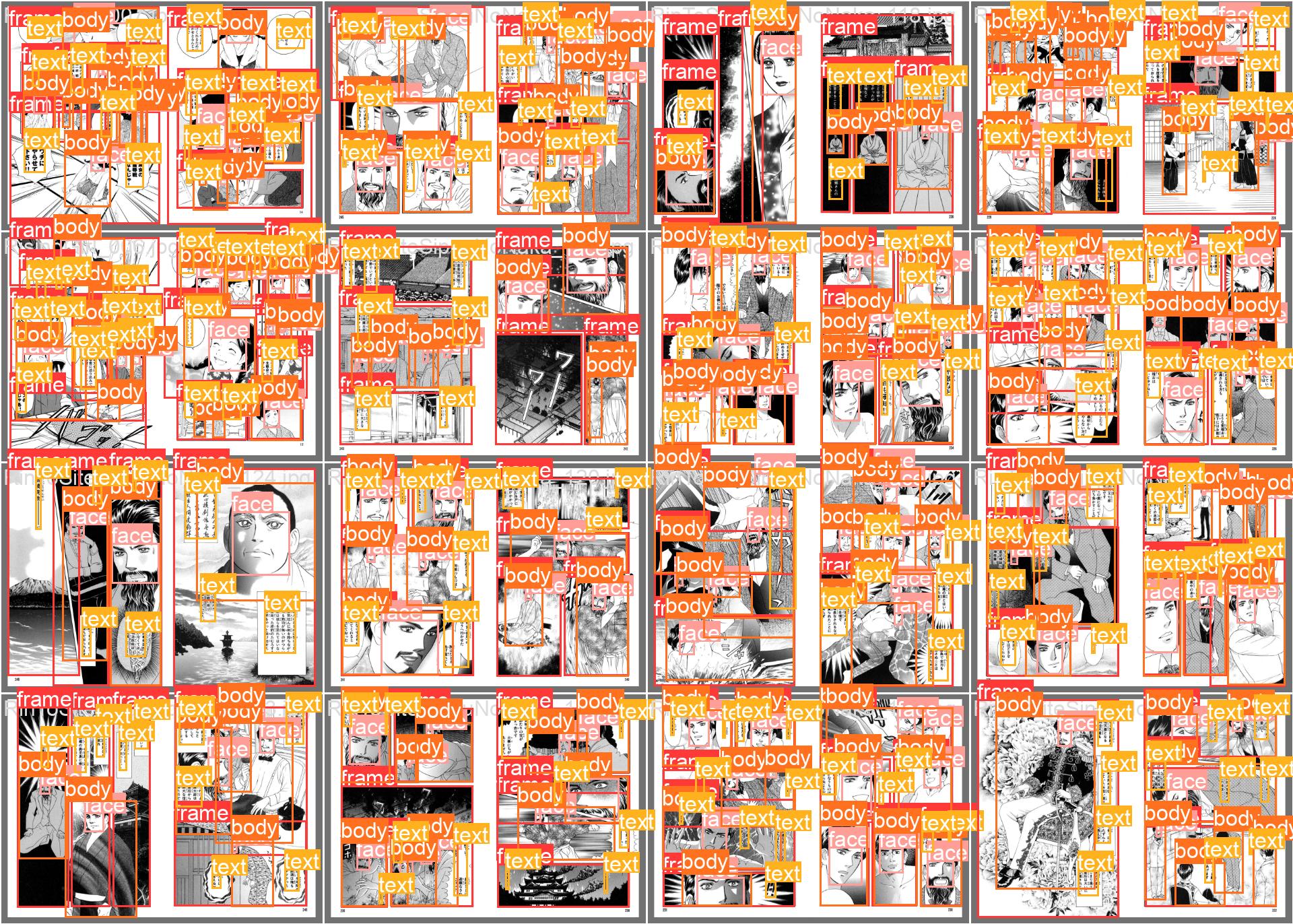

- train/val_batch0_labels.jpg +0 -0

- train/val_batch0_pred.jpg +0 -0

- train/val_batch1_labels.jpg +0 -0

- train/val_batch1_pred.jpg +0 -0

- train/val_batch2_labels.jpg +0 -0

- train/val_batch2_pred.jpg +0 -0

- train/weights/best.onnx +3 -0

- train/weights/best.pt +3 -0

- {weights → train/weights}/last.pt +2 -2

- train_log.txt +45 -24

- weights/README.md +0 -3

README.txt

ADDED

@@ -0,0 +1 @@
+https://github.com/ultralytics/ultralytics/commit/9d1e5567de48453f168013ff1032810bd95d39fe

convert.py

ADDED

@@ -0,0 +1,113 @@
+import xml.etree.ElementTree as ET
+import os
+from PIL import Image
+from IPython.display import display
+import json
+import pandas as pd
+import yaml
+
+
+## Generate label file
+
+class Manga():
+    def __init__(self, path, image_path, output_path):
+
+        manga_name = path.split("/")[-1][3:-4]
+        print(manga_name)
+        self.image_path = lambda page: f"{image_path}/{manga_name}/{('000'+page)[-3:]}.jpg"
+        self.output_path = lambda obj_id, ext: f"{output_path}/{obj_id}.{ext}"
+        self.manga_name = manga_name
+
+        tree = ET.parse(path)
+        self.root = tree.getroot()
+        self.characters = [x.get('id') for x in self.root.find("characters")]
+        self.pages = {
+            page.get('index') :
+            {
+                "frame": {frame.attrib["id"]: frame.attrib for frame in page.findall("frame")},
+                "face": {face.attrib["id"]: face.attrib for face in page.findall("face")},
+                "body": {body.attrib["id"]: body.attrib for body in page.findall("body")},
+                "text": {text.attrib["id"]: {**text.attrib, "text":text.text} for text in page.findall("text")},
+            }
+            for page in self.root.find("pages")
+        }
+        self.pages_size = {
+            page.get('index') :
+            {
+                "page_size": (int(page.get("width")), int(page.get("height")))
+            }
+            for page in self.root.find("pages")
+        }
+        for page_id, page, elems, v in self._loop_over_elements():
+            self._parse_int(v)
+            v["position"] = self._calc_location(v)
+            v["size"] = self._calc_wh(v)
+            v["page_id"] = page_id
+
+
+    def _loop_over_elements(self):
+        for page_id, page in self.pages.items():
+            for elems in page.values():
+                for v in elems.values():
+                    yield (page_id, page, elems, v)
+    @staticmethod
+    def _parse_int(obj):
+        for k in ["xmin", "xmax", "ymin", "ymax"]:
+            obj[k] = int(obj[k])
+    @staticmethod
+    def _calc_location(obj):
+        return (0.5 * (obj["xmin"]+obj["xmax"]), 0.5 * (obj["ymin"]+obj["ymax"]))
+    @staticmethod
+    def _calc_wh(obj):
+        return (obj["xmax"]-obj["xmin"]) , (obj["ymax"]-obj["ymin"])
+
+    def _get_image(self, obj):
+        image_path = self.image_path(obj["page_id"])
+        image = Image.open(image_path)
+        trimmed_image = image.crop((obj["xmin"],obj["ymin"], obj["xmax"], obj["ymax"]))
+        return trimmed_image
+
+
+import json
+path = './annotations_ko'
+for xml_file in os.listdir(path):
+    if xml_file.endswith('.xml'):
+        xml_path = f"{path}/{xml_file}"
+        print(xml_path)
+        m = Manga(xml_path, './images/', "./json_features")
+
+        parts = {0:"frame", 1:"face", 2:"body", 3:"text"}
+
+        for page_id in m.pages.keys():
+            lines = []
+            orig_path = m.image_path(page_id)
+            new_path = "./yaml_yolo/labels/" + orig_path.replace("./images//","").replace("/","_").replace(".jpg",".txt")
+            for part_id, part in parts.items():
+                page = m.pages[page_id]
+                w, h = m.pages_size[page_id]["page_size"]
+                line = [(part_id, v["position"][0]/w, v["position"][1]/h, v["size"][0]/w, v["size"][1]/h) for k, v in page[part].items()]
+                if len(line)>0:
+                    labels = "\n".join([" ".join(str(y) for y in x) for x in line])
+                    lines.append(labels)
+            if len(lines)>0:
+                lines = "\n".join(lines)
+                print(orig_path, new_path)
+                print(lines)
+                with open(new_path, "wt") as f:
+                    f.write(lines)
+
+
+
+## Generate yaml file
+
+cfg = {
+    "path": "../datasets/manga109",
+    "train": "images/train",
+    "val": "images/train",
+    "test": None,
+    "names":{0:"frame", 1:"face", 2:"body", 3:"text"},
+}
+
+with open('./yaml_yolo/manga109.yaml', 'w') as f:
+    yaml.dump(cfg, f)
+
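
The label-generation loop in convert.py turns each annotated box (`xmin`, `ymin`, `xmax`, `ymax`, in pixels) into a class id followed by a center and a size, each divided by the page width or height. The sketch below isolates that arithmetic for a single box. The function name `to_yolo_line`, the fixed six-decimal formatting, and the sample numbers are illustrative assumptions; the script itself writes the raw `str()` of each float.

```python
def to_yolo_line(class_id, xmin, ymin, xmax, ymax, page_w, page_h):
    """Convert one pixel-space box into a normalized 'class cx cy w h' line."""
    x_center = 0.5 * (xmin + xmax) / page_w   # box center, as a fraction of page width
    y_center = 0.5 * (ymin + ymax) / page_h   # box center, as a fraction of page height
    width = (xmax - xmin) / page_w            # box width, normalized
    height = (ymax - ymin) / page_h           # box height, normalized
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical "text" box (class 3) on a hypothetical 1000x800 page.
line = to_yolo_line(3, 100, 200, 300, 600, page_w=1000, page_h=800)
print(line)  # → 3 0.200000 0.500000 0.200000 0.500000
```

All four normalized values fall in [0, 1] as long as the box lies inside the page, which is a cheap sanity check to run over generated label files.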

train.py

ADDED

@@ -0,0 +1,14 @@
+from ultralytics import YOLO
+
+# Load a model
+# model = YOLO("yolov8n.yaml") # build a new model from scratch
+# model = YOLO("yolov8n.pt") # load a pretrained model (recommended for training)
+model = YOLO("yolov8m.yaml") # build a new model from scratch
+model = YOLO("yolov8m.pt") # load a pretrained model (recommended for training)
+
+# Use the model
+# model.train(data="coco128.yaml", epochs=3) # train the model
+model.train(data="./datasets/manga109/manga109.yaml", epochs=3) # train the model
+metrics = model.val() # evaluate model performance on the validation set
+# results = model("https://ultralytics.com/images/bus.jpg") # predict on an image
+path = model.export(format="onnx") # export the model to ONNX format
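
The dataset YAML written by convert.py lists `images/train` for both `train` and `val`, so `model.val()` in train.py evaluates on the same pages the model was trained on. One possible way to hold out a validation subset is sketched below. The helper `split_stems`, the 20% fraction, and the example file stems are hypothetical and not part of this commit; moving the image and label files into `images/val` and `labels/val` would still have to be done separately.

```python
import random


def split_stems(stems, val_fraction=0.1, seed=0):
    """Deterministically split file stems into (train, val) lists."""
    ordered = sorted(stems)                # make the result independent of input order
    random.Random(seed).shuffle(ordered)   # seeded shuffle, so the split is reproducible
    n_val = max(1, int(len(ordered) * val_fraction))
    return ordered[n_val:], ordered[:n_val]


# Hypothetical stems in the "<title>_<page>" style that convert.py produces.
stems = [f"ExampleTitle_{i:03d}" for i in range(20)]
train_stems, val_stems = split_stems(stems, val_fraction=0.2)
print(len(train_stems), len(val_stems))  # → 16 4
```

Splitting by page can still place pages of the same title on both sides; splitting by title instead would be a stricter variant of the same idea.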

train/F1_curve.png

ADDED

|

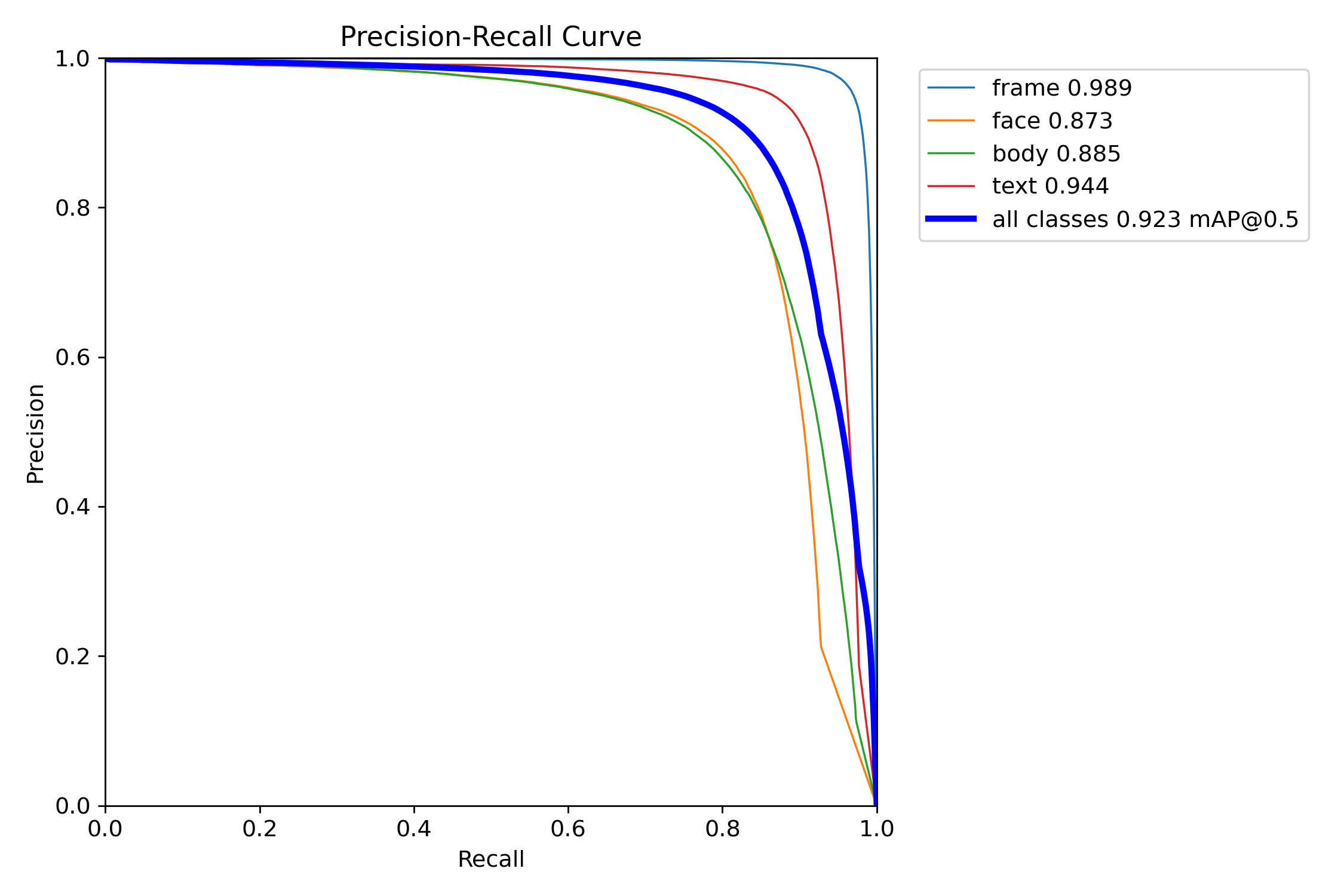

train/PR_curve.png

ADDED

|

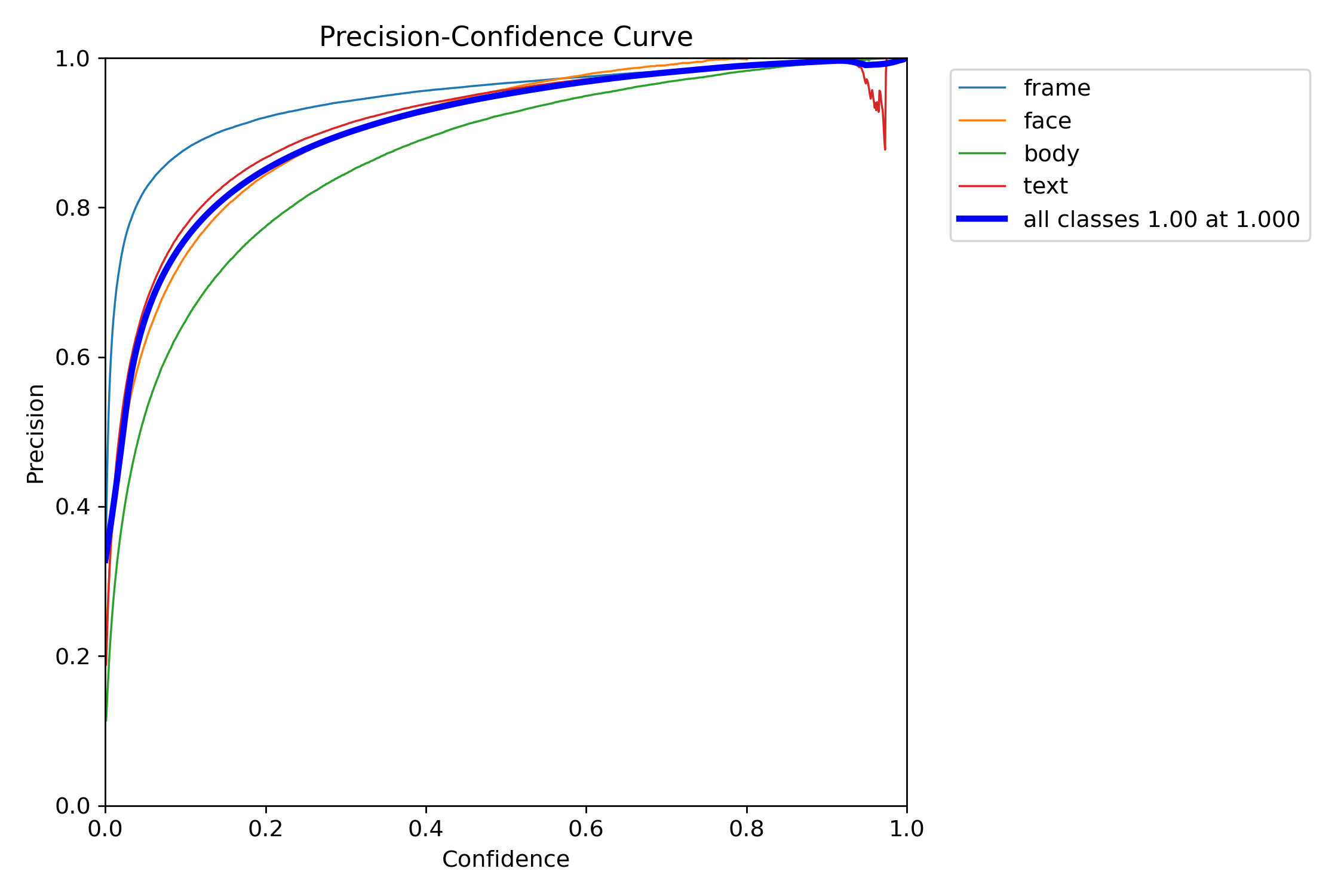

train/P_curve.png

ADDED

|

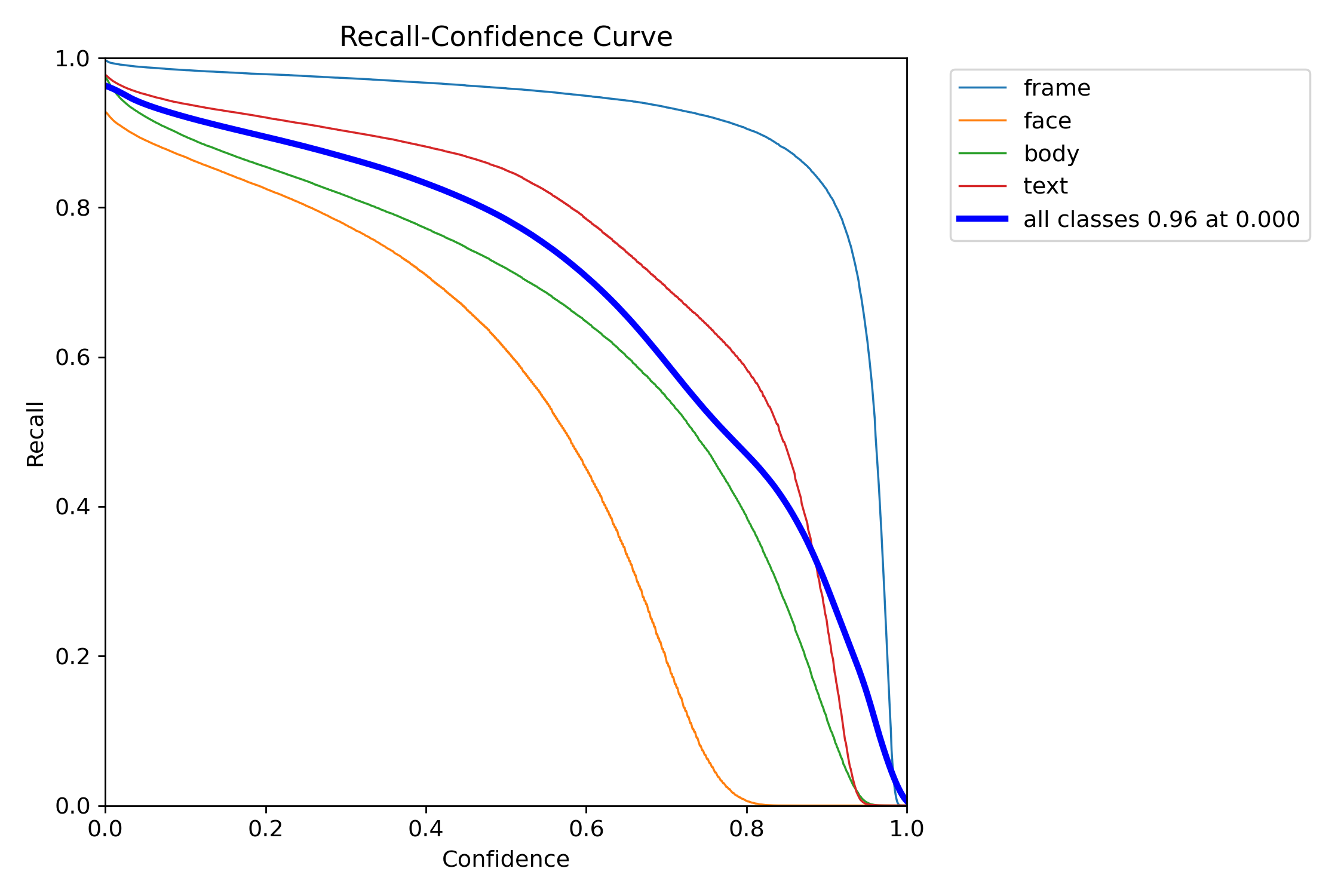

train/R_curve.png

ADDED

|

train/args.yaml

ADDED

|

@@ -0,0 +1,97 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

task: detect

|

| 2 |

+

mode: train

|

| 3 |

+

model: yolov8m.pt

|

| 4 |

+

data: ./datasets/manga109/manga109.yaml

|

| 5 |

+

epochs: 3

|

| 6 |

+

patience: 50

|

| 7 |

+

batch: 16

|

| 8 |

+

imgsz: 640

|

| 9 |

+

save: true

|

| 10 |

+

save_period: -1

|

| 11 |

+

cache: false

|

| 12 |

+

device: null

|

| 13 |

+

workers: 8

|

| 14 |

+

project: null

|

| 15 |

+

name: null

|

| 16 |

+

exist_ok: false

|

| 17 |

+

pretrained: true

|

| 18 |

+

optimizer: auto

|

| 19 |

+

verbose: true

|

| 20 |

+

seed: 0

|

| 21 |

+

deterministic: true

|

| 22 |

+

single_cls: false

|

| 23 |

+

rect: false

|

| 24 |

+

cos_lr: false

|

| 25 |

+

close_mosaic: 0

|

| 26 |

+

resume: false

|

| 27 |

+

amp: true

|

| 28 |

+

fraction: 1.0

|

| 29 |

+

profile: false

|

| 30 |

+

overlap_mask: true

|

| 31 |

+

mask_ratio: 4

|

| 32 |

+

dropout: 0.0

|

| 33 |

+

val: true

|

| 34 |

+

split: val

|

| 35 |

+

save_json: false

|

| 36 |

+

save_hybrid: false

|

| 37 |

+

conf: null

|

| 38 |

+

iou: 0.7

|

| 39 |

+

max_det: 300

|

| 40 |

+

half: false

|

| 41 |

+

dnn: false

|

| 42 |

+

plots: true

|

| 43 |

+

source: null

|

| 44 |

+

show: false

|

| 45 |

+

save_txt: false

|

| 46 |

+

save_conf: false

|

| 47 |

+

save_crop: false

|

| 48 |

+

show_labels: true

|

| 49 |

+

show_conf: true

|

| 50 |

+

vid_stride: 1

|

| 51 |

+

line_width: null

|

| 52 |

+

visualize: false

|

| 53 |

+

augment: false

|

| 54 |

+

agnostic_nms: false

|

| 55 |

+

classes: null

|

| 56 |

+

retina_masks: false

|

| 57 |

+

boxes: true

|

| 58 |

+

format: torchscript

|

| 59 |

+

keras: false

|

| 60 |

+

optimize: false

|

| 61 |

+

int8: false

|

| 62 |

+

dynamic: false

|

| 63 |

+

simplify: false

|

| 64 |

+

opset: null

|

| 65 |

+

workspace: 4

|

| 66 |

+

nms: false

|

| 67 |

+

lr0: 0.01

|

| 68 |

+

lrf: 0.01

|

| 69 |

+

momentum: 0.937

|

| 70 |

+

weight_decay: 0.0005

|

| 71 |

+

warmup_epochs: 3.0

|

| 72 |

+

warmup_momentum: 0.8

|

| 73 |

+

warmup_bias_lr: 0.1

|

| 74 |

+

box: 7.5

|

| 75 |

+

cls: 0.5

|

| 76 |

+

dfl: 1.5

|

| 77 |

+

pose: 12.0

|

| 78 |

+

kobj: 1.0

|

| 79 |

+

label_smoothing: 0.0

|

| 80 |

+

nbs: 64

|

| 81 |

+

hsv_h: 0.015

|

| 82 |

+

hsv_s: 0.7

|

| 83 |

+

hsv_v: 0.4

|

| 84 |

+

degrees: 0.0

|

| 85 |

+

translate: 0.1

|

| 86 |

+

scale: 0.5

|

| 87 |

+

shear: 0.0

|

| 88 |

+

perspective: 0.0

|

| 89 |

+

flipud: 0.0

|

| 90 |

+

fliplr: 0.5

|

| 91 |

+

mosaic: 1.0

|

| 92 |

+

mixup: 0.0

|

| 93 |

+

copy_paste: 0.0

|

| 94 |

+

cfg: null

|

| 95 |

+

v5loader: false

|

| 96 |

+

tracker: botsort.yaml

|

| 97 |

+

save_dir: runs/detect/train5

|

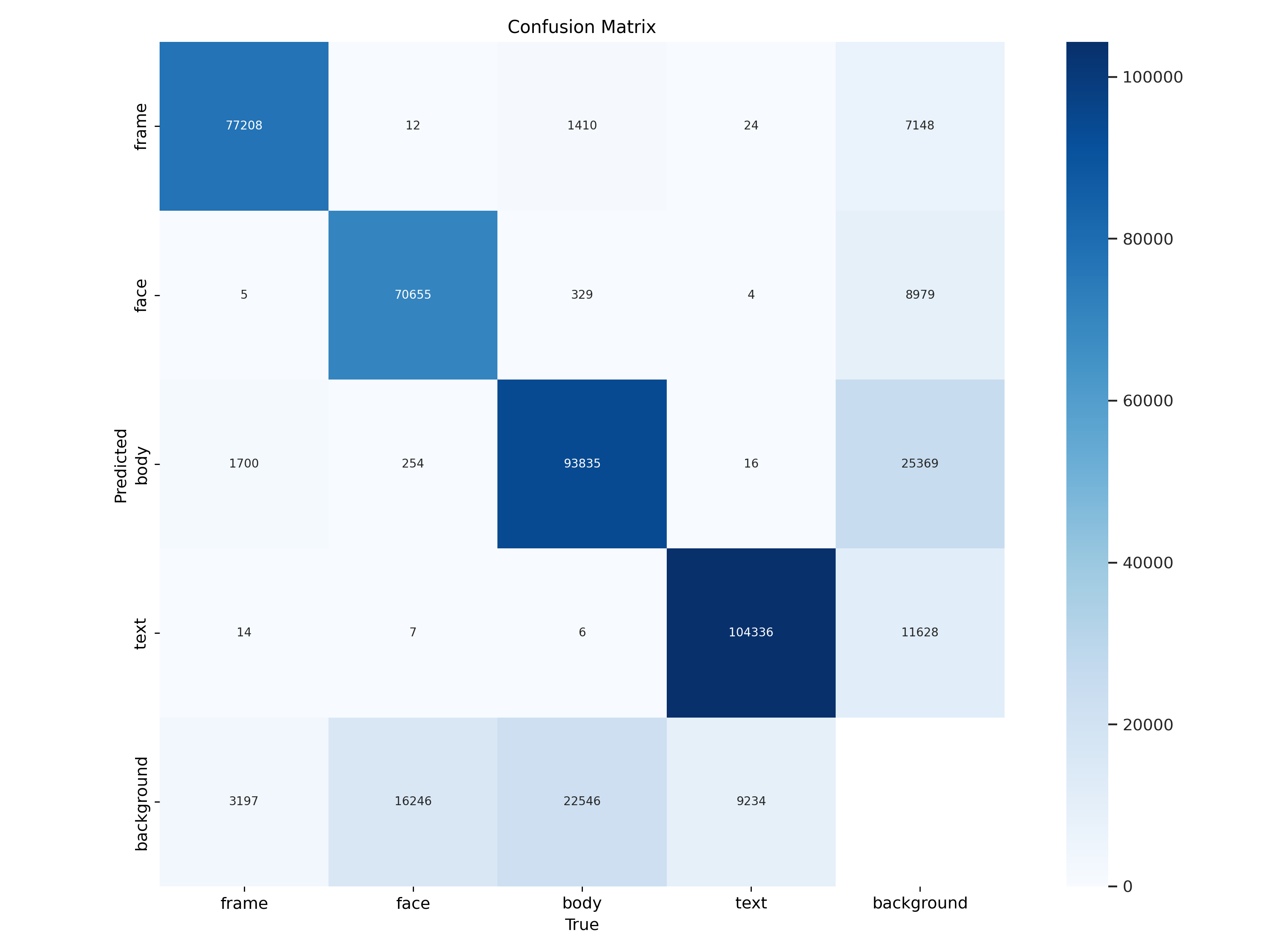

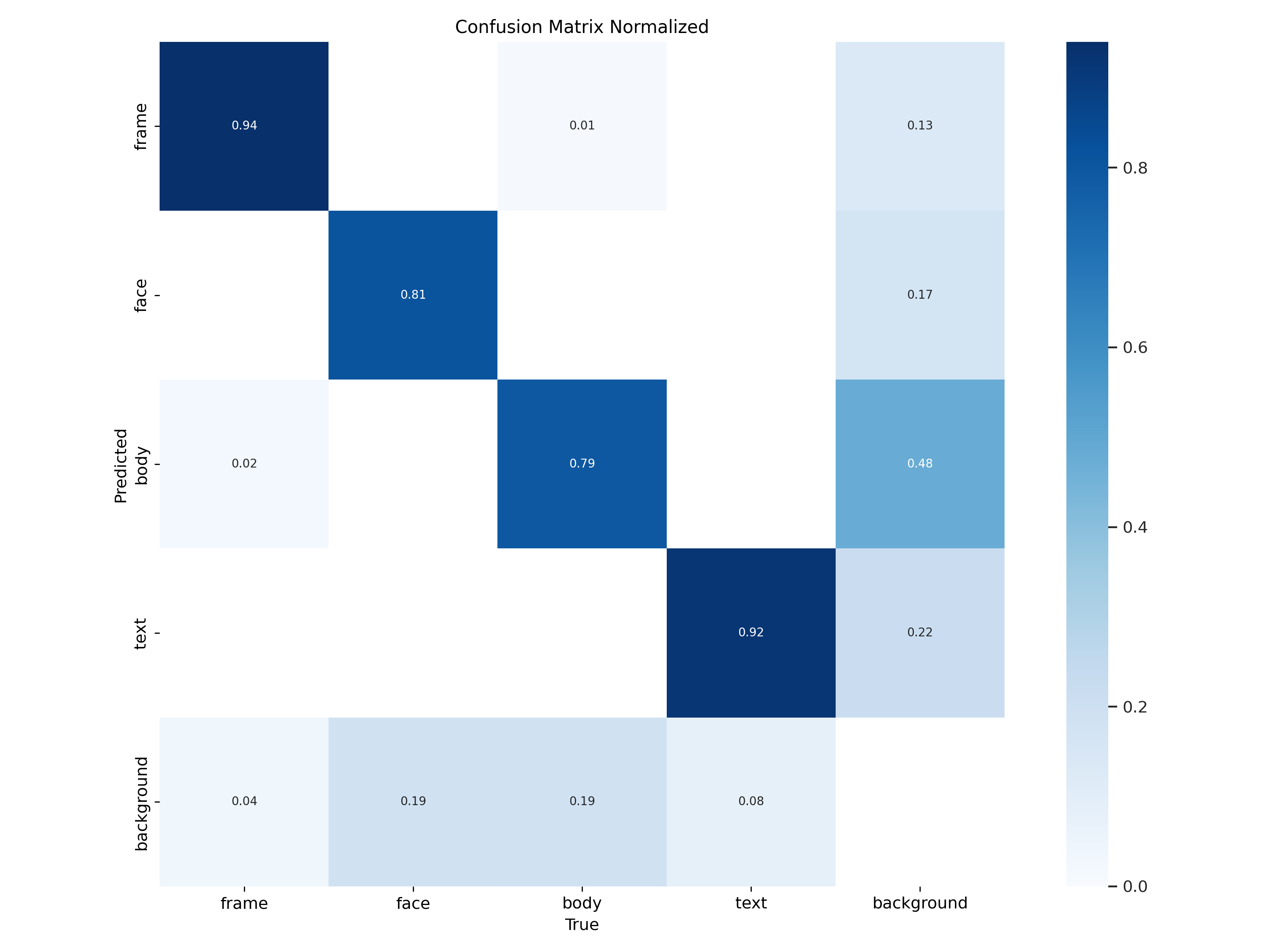

train/confusion_matrix.png

ADDED

train/confusion_matrix_normalized.png

ADDED

weights/best.pt → train/events.out.tfevents.1687303014.jongkook90-desktop.37493.0

RENAMED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

-

size

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:8d43759de4ce7e130ce2d6ba60e04ee594cac8d9af20f1ff36080e303308ad64

|

| 3 |

+

size 250830

|

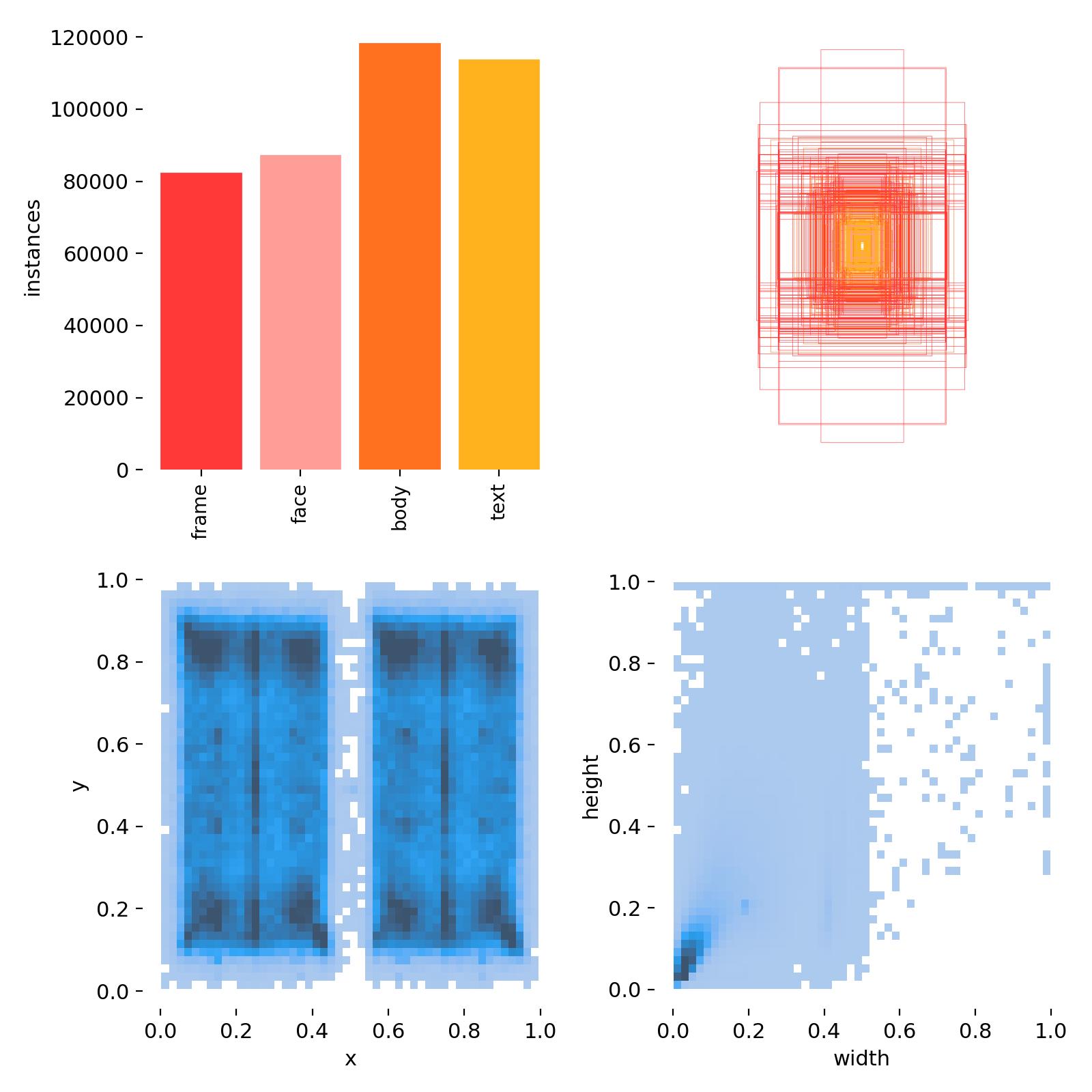

train/labels.jpg

ADDED

|

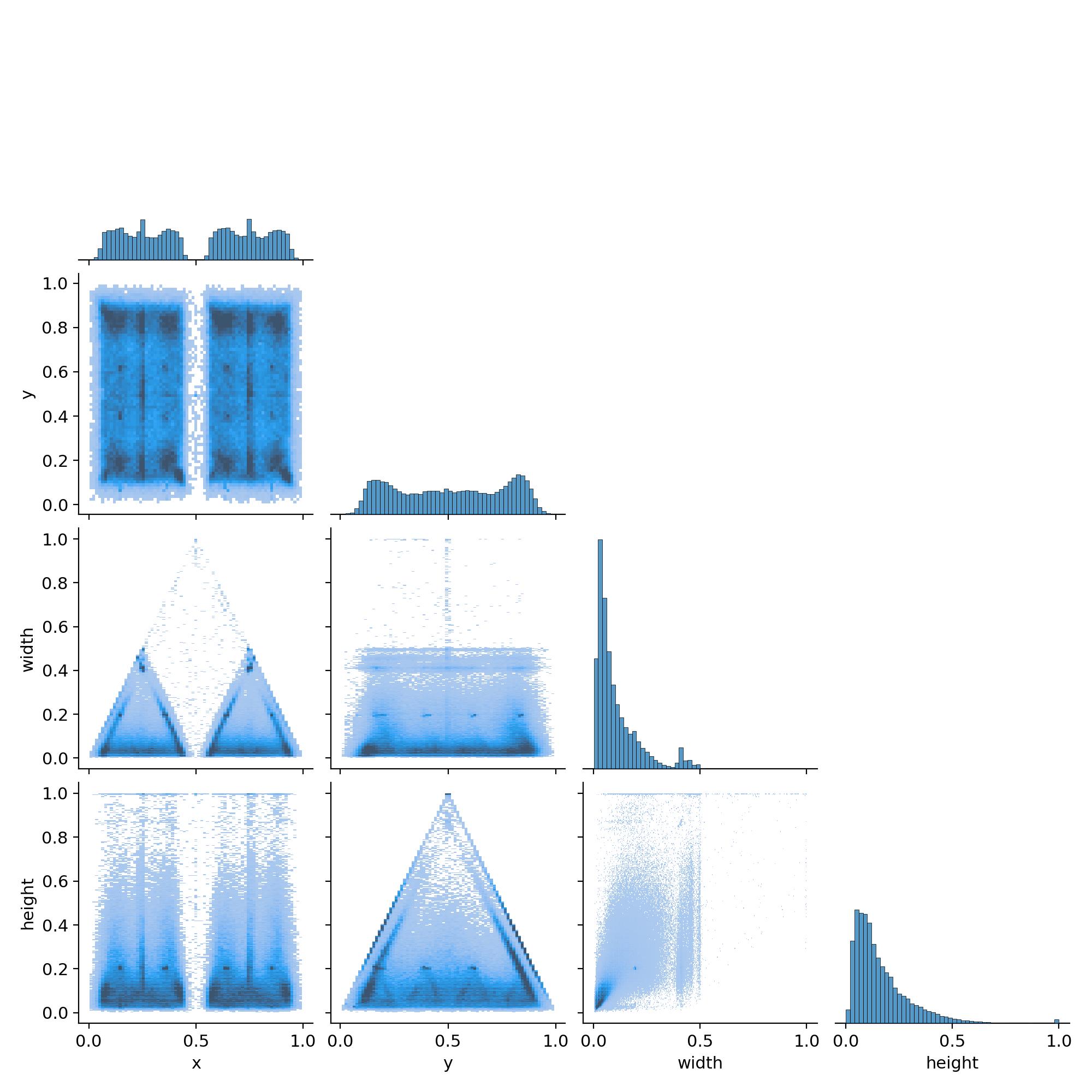

train/labels_correlogram.jpg

ADDED

|

train/results.csv

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

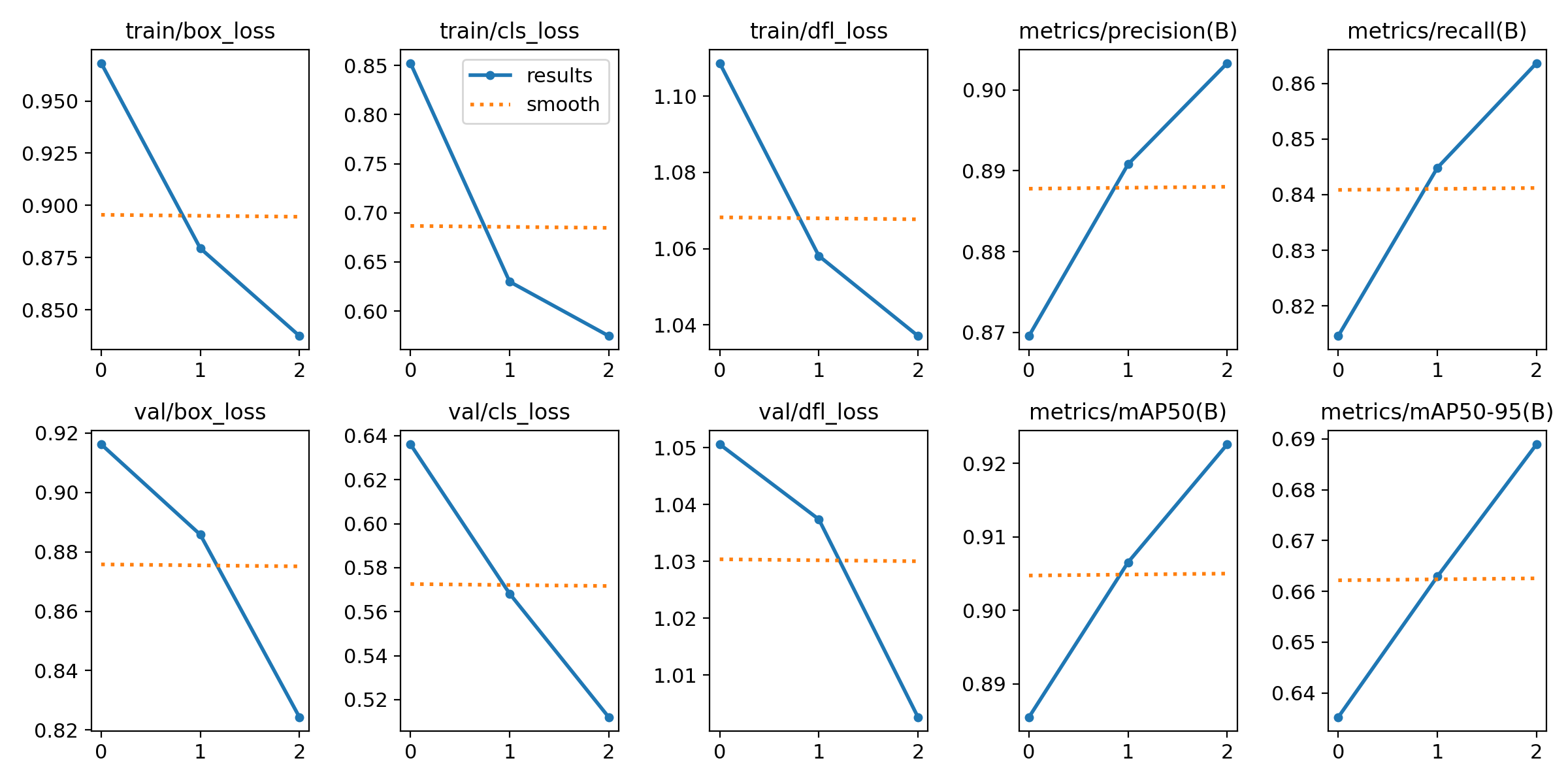

| 1 |

+

epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2

|

| 2 |

+

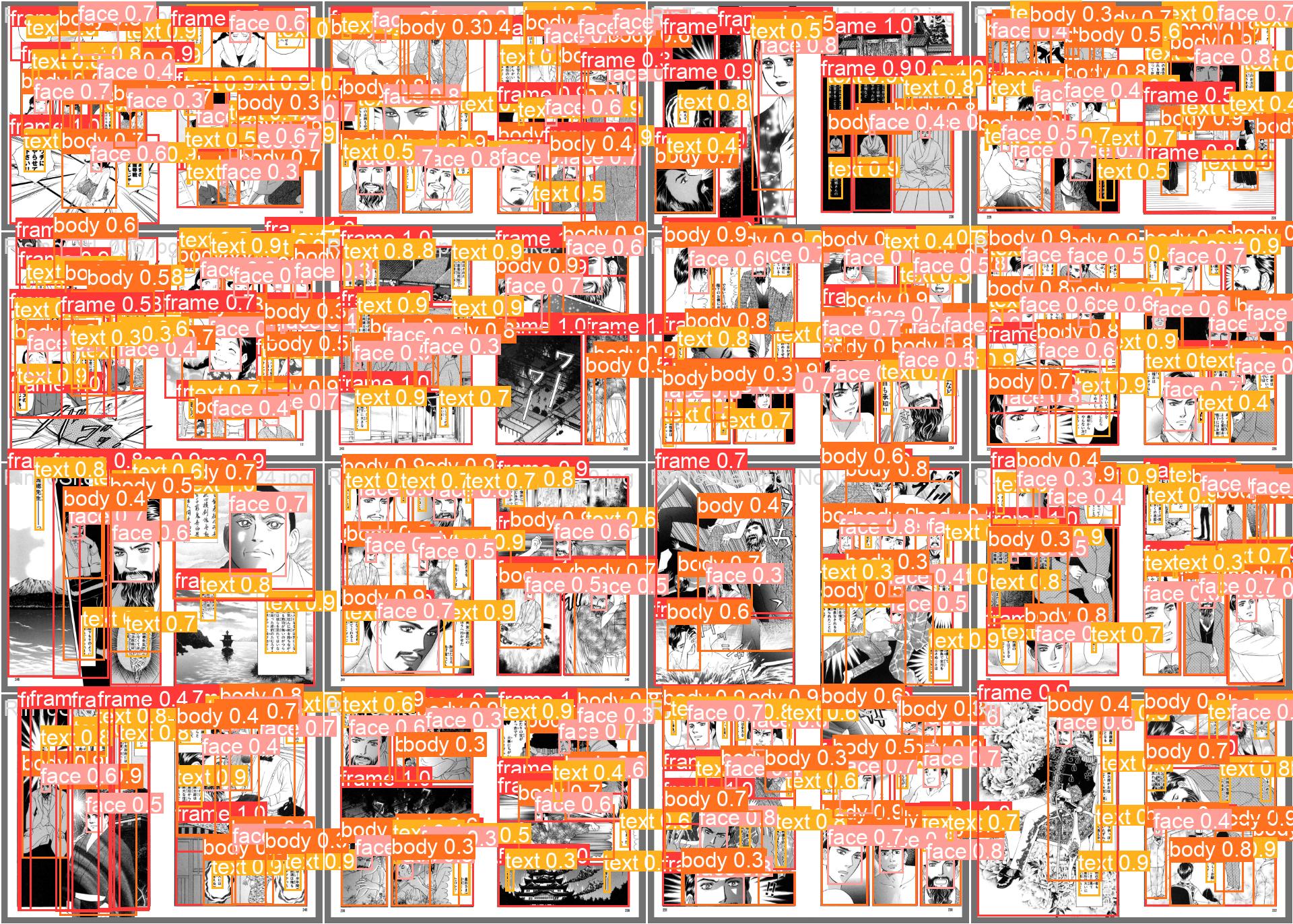

0, 0.96814, 0.85244, 1.1087, 0.86958, 0.8146, 0.88546, 0.63524, 0.91621, 0.63611, 1.0506, 0.00041588, 0.00041588, 0.00041588

|

| 3 |

+

1, 0.87945, 0.62993, 1.0581, 0.89082, 0.84483, 0.90655, 0.66293, 0.88578, 0.56822, 1.0374, 0.00055781, 0.00055781, 0.00055781

|

| 4 |

+

2, 0.83749, 0.57467, 1.0371, 0.90332, 0.86365, 0.9226, 0.68896, 0.82432, 0.51203, 1.0026, 0.00042473, 0.00042473, 0.00042473

|
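
The three rows of train/results.csv can be read with Python's standard `csv` module to pick out the best epoch. This is a minimal sketch, assuming the file is comma-separated with spaces after each delimiter, as it appears in this diff; the rows are pasted in as a string so the example is self-contained.

```python
import csv
import io

# The contents of train/results.csv as shown in the diff above.
RESULTS_CSV = """\
epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
0, 0.96814, 0.85244, 1.1087, 0.86958, 0.8146, 0.88546, 0.63524, 0.91621, 0.63611, 1.0506, 0.00041588, 0.00041588, 0.00041588
1, 0.87945, 0.62993, 1.0581, 0.89082, 0.84483, 0.90655, 0.66293, 0.88578, 0.56822, 1.0374, 0.00055781, 0.00055781, 0.00055781
2, 0.83749, 0.57467, 1.0371, 0.90332, 0.86365, 0.9226, 0.68896, 0.82432, 0.51203, 1.0026, 0.00042473, 0.00042473, 0.00042473
"""

# skipinitialspace drops the blank after each comma; strip() guards against
# any padding left around the column names.
reader = csv.DictReader(io.StringIO(RESULTS_CSV), skipinitialspace=True)
rows = [{key.strip(): float(value) for key, value in row.items()} for row in reader]

# Select the epoch with the highest mAP50-95.
best = max(rows, key=lambda r: r["metrics/mAP50-95(B)"])
print(int(best["epoch"]), best["metrics/mAP50-95(B)"])  # → 2 0.68896
```

The `epoch` column here is zero-based, while train_log.txt counts the same epochs as 1/3 to 3/3.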

train/results.png

ADDED

train/val_batch0_labels.jpg

ADDED

train/val_batch0_pred.jpg

ADDED

train/val_batch1_labels.jpg

ADDED

train/val_batch1_pred.jpg

ADDED

train/val_batch2_labels.jpg

ADDED

train/val_batch2_pred.jpg

ADDED

train/weights/best.onnx

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a91eec11d8797c89f9db542f263c8d39263d249165964b5ef37beea8fe1ad3ba
+size 103596340
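
The weight files in this commit are stored as Git LFS pointers: three `key value` lines giving the spec version, the object id, and the size in bytes. The sketch below parses the best.onnx pointer shown above. `parse_lfs_pointer` is a hypothetical helper written for this illustration, not part of Git LFS or of this repository.

```python
def parse_lfs_pointer(text):
    """Parse the 'key value' lines of a Git LFS pointer file into a dict."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            continue                            # ignore blank lines
        key, _, value = line.partition(" ")     # split at the first space only
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),            # size of the real file, in bytes
    }


# Pointer contents of train/weights/best.onnx, copied from the diff.
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:a91eec11d8797c89f9db542f263c8d39263d249165964b5ef37beea8fe1ad3ba
size 103596340
"""

info = parse_lfs_pointer(POINTER)
print(info["hash_algo"], info["size"])  # → sha256 103596340
```

The recorded size, 103596340 bytes, is roughly 103.6 MB for the ONNX export, against 52011990 bytes for each `.pt` checkpoint.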

train/weights/best.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:e74ad5b1c48c437da0b78d2318d0a25314fcee635359a239d5c70bd2f8da0ce5
+size 52011990

{weights → train/weights}/last.pt

RENAMED

@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
-size
+oid sha256:9dc6c9c385a5db8e3b0a1756ca18da650d3677294740d909626ce7cf93d18486
+size 52011990

train_log.txt

CHANGED

@@ -1,25 +1,46 @@
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
+Logging results to runs/detect/train5
+Starting training for 3 epochs...
+
+Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
+1/3 8.35G 0.9681 0.8524 1.109 449 640: 100%|██████████| 533/533 [03:36<00:00, 2.47it/s]
+Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 267/267 [01:08<00:00, 3.89it/s]
+all 8519 401038 0.87 0.815 0.885 0.635
+
+Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
+2/3 9.16G 0.8795 0.6299 1.058 600 640: 100%|██████████| 533/533 [01:36<00:00, 5.54it/s]
+Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 267/267 [01:08<00:00, 3.90it/s]
+all 8519 401038 0.891 0.845 0.907 0.663
+
+Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size
+3/3 10.2G 0.8375 0.5747 1.037 590 640: 100%|██████████| 533/533 [01:34<00:00, 5.63it/s]
+Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 267/267 [02:00<00:00, 2.21it/s]Bn
+all 8519 401038 0.903 0.864 0.923 0.689
+
+3 epochs completed in 0.191 hours.
+Optimizer stripped from runs/detect/train5/weights/last.pt, 52.0MB
+Optimizer stripped from runs/detect/train5/weights/best.pt, 52.0MB
+
+Validating runs/detect/train5/weights/best.pt...
+Ultralytics YOLOv8.0.120 🚀 Python-3.9.7 torch-2.0.0+cu118 CUDA:0 (NVIDIA GeForce RTX 3090, 24265MiB)
+Model summary (fused): 218 layers, 25842076 parameters, 0 gradients
+Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 267/267 [01:56<00:00, 2.29it/s]
+all 8519 401038 0.903 0.864 0.923 0.689
+frame 8519 82124 0.944 0.972 0.989 0.942
+face 8519 87174 0.903 0.77 0.873 0.467
+body 8519 118126 0.852 0.811 0.885 0.642
+text 8519 113614 0.915 0.9 0.944 0.704
+Speed: 0.1ms preprocess, 2.1ms inference, 0.0ms loss, 0.8ms postprocess per image
+Results saved to runs/detect/train5
 Ultralytics YOLOv8.0.120 🚀 Python-3.9.7 torch-2.0.0+cu118 CUDA:0 (NVIDIA GeForce RTX 3090, 24265MiB)
-Model summary (fused):
-
-
-
-
-
-
+Model summary (fused): 218 layers, 25842076 parameters, 0 gradients
+val: Scanning /media/jongkook90/Morpho DB/dataset-manga109-ko/yolov8-frame/datasets/manga109/labels/train.cache... 8155 images, 364 backgrounds, 0 corrupt: 100%|██████████| 8519/8519 [00:00<?, ?it/s]
+Class Images Instances Box(P R mAP50 mAP50-95): 0%| | 2/533 [00:08<43:38, 4.93s/it]WARNING ⚠️ NMS time limit 1.300s exceeded
+Class Images Instances Box(P R mAP50 mAP50-95): 1%| | 3/533 [00:14<47:58, 5.43s/it]WARNING ⚠️ NMS time limit 1.300s exceeded
+Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 533/533 [02:06<00:00, 4.22it/s]
+all 8519 401038 0.904 0.861 0.92 0.688
+frame 8519 82124 0.944 0.969 0.986 0.941
+face 8519 87174 0.903 0.769 0.87 0.467
+body 8519 118126 0.852 0.809 0.882 0.641
+text 8519 113614 0.916 0.897 0.941 0.704
+Speed: 0.1ms preprocess, 4.1ms inference, 0.0ms loss, 1.5ms postprocess per image
+Results saved to runs/detect/val3
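
The per-class rows in train_log.txt report precision (P) and recall (R) but no single combined score. As a worked example, the sketch below computes the F1 score, F1 = 2PR / (P + R), from the first validation block of the log. The dictionary `PER_CLASS` and the helper `f1_score` are illustrative names, and the inputs are the log's rounded three-digit values, so the results are approximate.

```python
# (precision, recall) per class, copied from the first validation block
# of train_log.txt (validation of runs/detect/train5/weights/best.pt).
PER_CLASS = {
    "frame": (0.944, 0.972),
    "face": (0.903, 0.77),
    "body": (0.852, 0.811),
    "text": (0.915, 0.9),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


scores = {name: f1_score(p, r) for name, (p, r) in PER_CLASS.items()}
for name, score in scores.items():
    print(f"{name:>5}: F1 = {score:.3f}")
```

These values are computed at whatever confidence threshold the logged P and R correspond to, so they need not match the peak of train/F1_curve.png.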

weights/README.md

DELETED

@@ -1,3 +0,0 @@
-yolov8 3epoch training
-
-TODO: data augmentation, train result check