Create README.md

README.md

ADDED

@@ -0,0 +1,409 @@

| 1 |

+

---

|

| 2 |

+

pipeline_tag: object-detection

|

| 3 |

+

tags:

|

| 4 |

+

- bioinformatics

|

| 5 |

+

- metadata

|

| 6 |

+

- image analysis

|

| 7 |

+

- applied machine learning

|

| 8 |

+

- contrast enhancement

|

| 9 |

+

- detectron2

|

| 10 |

+

- fish

|

| 11 |

+

- images

|

| 12 |

+

- museum specimens

|

| 13 |

+

- metadata generation

|

| 14 |

+

- object detection

|

| 15 |

+

license: mit

|

| 16 |

+

---

|

| 17 |

+

|

| 18 |

+

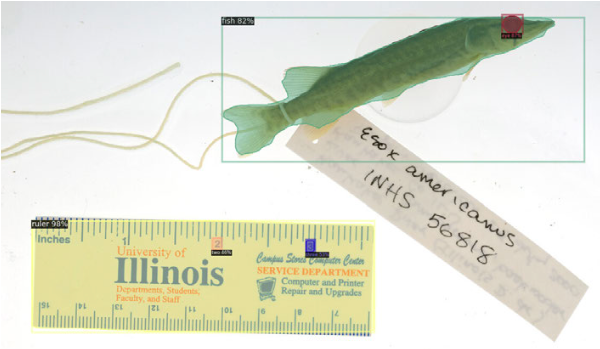

# Model Card for Drexel Metadata Generator

|

| 19 |

+

|

| 20 |

+

This model was designed to generate metadata for images of [museum] fish specimens. In addition to the metadata and quality metrics achieved with our initial model (detailed in [Automatic Metadata Generation for Fish Specimen Image Collections](https://ieeexplore.ieee.org/document/9651834), [bioRxiv](https://doi.org/10.1101/2021.10.04.463070)), this updated model also generates various geometric and statistical properties of the mask generated over the biological specimen. Examples of the new analytical features include convex area, eccentricity, perimeter, and skew. These updates further improve accuracy and reduce the time and labor cost relative to human-generated metadata.

|

| 22 |

+

|

| 23 |

+

<!--

|

| 24 |

+

This modelcard aims to be a base template for new models. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/modelcard_template.md?plain=1). -->

|

| 25 |

+

|

| 26 |

+

## Model Details

|

| 27 |

+

|

| 28 |

+

### Model Description

|

| 29 |

+

|

| 30 |

+

<!-- Provide a longer summary of what this model is. -->

|

| 31 |

+

This model is based on [Facebook AI Research’s (FAIR) detectron tool](https://github.com/facebookresearch/detectron2), which provides an implementation of the Mask R-CNN architecture. The model identifies five object classes: fish, fish eyes, rulers, and the numbers two and three on rulers, as shown in Fig. 2 below. Notably, it returns pixel-by-pixel masks over detected objects.

|

| 32 |

+

|

| 33 |

+

||

|

| 34 |

+

|:--|

|

| 35 |

+

|**Figure 2.**|

|

| 36 |

+

|

| 37 |

+

Furthermore, detectron places no restriction on the number of object classes, can classify an arbitrary number of objects within a given image, and is relatively straightforward to train on COCO-format datasets.
Objects with a confidence score of at least 30% are retained for analysis.
See the tables below for the number of class instances in the full (aggregate), INHS, and UWZM training datasets.

|

| 40 |

+

|

| 41 |

+

| Class | **Table 1:** Aggregate training dataset (number of instances) | **Table 2:** INHS training dataset (number of instances) | **Table 3:** UWZM training dataset (number of instances) |
| --- | --- | --- | --- |
| Fish | 391 | 312 | 79 |
| Ruler | 1095 | 1016 | 79 |
| Eye | 550 | 471 | 79 |
| Two | 194 | 115 | 79 |
| Three | 194 | 115 | 79 |
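
The aggregate counts in Table 1 are the class-wise sums of the INHS and UWZM counts. The short Python check below works through that arithmetic using only the numbers from the tables; the variable names are illustrative and are not part of the repository.

```python
# Per-class instance counts copied from Table 2 (INHS) and Table 3 (UWZM).
inhs = {"Fish": 312, "Ruler": 1016, "Eye": 471, "Two": 115, "Three": 115}
uwzm = {"Fish": 79, "Ruler": 79, "Eye": 79, "Two": 79, "Three": 79}

# Counts reported in Table 1 (aggregate training dataset).
aggregate = {"Fish": 391, "Ruler": 1095, "Eye": 550, "Two": 194, "Three": 194}

# The aggregate should equal the class-wise sum of the two collections.
combined = {name: inhs[name] + uwzm[name] for name in inhs}
assert combined == aggregate
```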

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

See the [Glossary](#glossary) below for a detailed list of the properties generated by the model.

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

- **Developed by:** Joel Pepper and Kevin Karnani

|

| 57 |

+

- **Shared by [optional]:** [More Information Needed]

|

| 58 |

+

- **Model type:** [More Information Needed]

|

| 59 |

+

- **Language(s) (NLP):** [More Information Needed]

|

| 60 |

+

- **License:** MIT <!-- As listed on the repo -->

|

| 61 |

+

- **Finetuned from model:** [detectron2 v0.6](https://github.com/facebookresearch/detectron2)

|

| 62 |

+

|

| 63 |

+

### Model Sources [optional]

|

| 64 |

+

|

| 65 |

+

<!-- Provide the basic links for the model. -->

|

| 66 |

+

|

| 67 |

+

- **Repository:** [hdr-bgnn/drexel_metadata](https://github.com/hdr-bgnn/drexel_metadata/)

|

| 68 |

+

- **Paper:** [Computational metadata generation methods for biological specimen image collections](https://doi.org/10.1007/s00799-022-00342-1) ([preprint](https://doi.org/10.21203/rs.3.rs-1506561/v1))

|

| 69 |

+

- **Demo [optional]:** [More Information Needed]

|

| 70 |

+

|

| 71 |

+

## Uses

|

| 72 |

+

|

| 73 |

+

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

|

| 74 |

+

|

| 75 |

+

### Direct Use

|

| 76 |

+

|

| 77 |

+

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

|

| 78 |

+

|

| 79 |

+

Object detection is currently performed on five classes (fish, fish eyes, rulers, and the twos and threes found on rulers). The current setup has been run on the INHS and UWZM biological specimen image repositories.

|

| 80 |

+

|

| 81 |

+

#### Current Criteria

|

| 82 |

+

1. Image must contain a fish species (no eels, seashells, butterflies, seahorses, snakes, etc.).
2. Image must contain only one of each class (except eyes).
3. Specimen body must lie along the image plane from a side view.
4. Ruler must be consistent (only two ruler types, INHS and UWZM, were used in the training set).
5. Fish must not be obscured by another object (a petri dish, for example).
6. Whole body of the fish must be present (no heads, tails, or standalone features).
7. Fish body must not be folded and should have no curvature.

These criteria do not need to be adhered to if the model is properly set up or modified for a specific use case.

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

### Downstream Use [optional]

|

| 93 |

+

|

| 94 |

+

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

|

| 95 |

+

|

| 96 |

+

[More Information Needed]

|

| 97 |

+

|

| 98 |

+

### Out-of-Scope Use

|

| 99 |

+

|

| 100 |

+

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

|

| 101 |

+

|

| 102 |

+

[More Information Needed]

|

| 103 |

+

|

| 104 |

+

## Bias, Risks, and Limitations

|

| 105 |

+

|

| 106 |

+

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

|

| 107 |

+

|

| 108 |

+

This model was trained solely for use on fish specimens.

|

| 109 |

+

|

| 110 |

+

<!-- [More Information Needed] -->

|

| 111 |

+

|

| 112 |

+

The authors have declared that no conflicts of interest exist.

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

### Recommendations

|

| 116 |

+

|

| 117 |

+

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

|

| 118 |

+

|

| 119 |

+

<!-- Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations. -->

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

## How to Get Started with the Model

|

| 123 |

+

|

| 124 |

+

<!-- Use the code below to get started with the model. -->

|

| 125 |

+

|

| 126 |

+

|

| 127 |

+

### Dependencies

|

| 128 |

+

|

| 129 |

+

All dependencies are listed in a Pipfile. To install them, run the following commands:

|

| 130 |

+

|

| 131 |

+

```bash
pip install pipenv
pipenv install
```

|

| 135 |

+

|

| 136 |

+

Additional OS-dependent installation steps may be required.

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

### Running

|

| 140 |

+

|

| 141 |

+

To generate the metadata, run the following command:

|

| 142 |

+

```bash
pipenv run python3 gen_metadata.py [file_or_dir_name]
```

|

| 145 |

+

|

| 146 |

+

Usage:

|

| 147 |

+

```
gen_metadata.py [-h] [--device {cpu,cuda}] [--outfname OUTFNAME] [--maskfname MASKFNAME] [--visfname VISFNAME]
                file_or_directory [limit]
```

|

| 151 |

+

|

| 152 |

+

The `limit` positional argument caps the number of files processed and applies only when a directory is passed.
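
To make the usage line concrete, here is a minimal `argparse` sketch that accepts the same arguments. It is an illustration only, not the actual parser in `gen_metadata.py`: the `build_parser` name, the `cuda` default (inferred from the Device Configuration note), and the integer type of `limit` are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser that mirrors the usage line (illustrative sketch)."""
    parser = argparse.ArgumentParser(prog="gen_metadata.py")
    # Assumed default: the README states that a GPU (cuda) is required by default.
    parser.add_argument("--device", choices=["cpu", "cuda"], default="cuda")
    parser.add_argument("--outfname")
    parser.add_argument("--maskfname")
    parser.add_argument("--visfname")
    parser.add_argument("file_or_directory")
    # Optional positional; assumed to be an integer file count.
    parser.add_argument("limit", nargs="?", type=int, default=None)
    return parser


args = build_parser().parse_args(["--device", "cpu", "images/", "25"])
print(args.device, args.file_or_directory, args.limit)
```

With this sketch, omitting `limit` leaves it as `None`, which a caller could treat as "process every file in the directory".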

|

| 154 |

+

|

| 155 |

+

#### Device Configuration

|

| 156 |

+

By default `gen_metadata.py` requires a GPU (cuda).

|

| 157 |

+

To use a CPU instead pass the `--device cpu` argument to `gen_metadata.py`.

|

| 158 |

+

|

| 159 |

+

#### Single File Usage

|

| 160 |

+

The following three arguments are only supported when processing a single image file:

|

| 161 |

+

- `--outfname <filename>` - When passed the script will save the output metadata JSON to `<filename>` instead of printing to the console (the default behavior when processing one file).

|

| 162 |

+

- `--maskfname <filename>` - Enables logic to save an output mask to `<filename>` for the single input file.

|

| 163 |

+

- `--visfname <filename>` - Changes the script to save the output visualization to `<filename>` instead of the hard coded location.

|

| 164 |

+

|

| 165 |

+

These arguments are meant to simplify adding `gen_metadata.py` to a workflow that processes files individually.
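
As a sketch of such a per-file workflow, the helper below assembles the command line for one image using the three single-file arguments. The function name and the output file naming scheme (`<stem>.json`, `<stem>_mask.png`, `<stem>_vis.png`) are hypothetical choices made for this example; only the flags themselves come from the usage text above.

```python
from pathlib import Path
from typing import List


def single_file_command(image: Path, out_dir: Path, device: str = "cuda") -> List[str]:
    """Return the argv list for processing one image with explicit output paths."""
    stem = image.stem
    return [
        "pipenv", "run", "python3", "gen_metadata.py",
        "--device", device,
        "--outfname", str(out_dir / f"{stem}.json"),       # metadata JSON
        "--maskfname", str(out_dir / f"{stem}_mask.png"),  # output mask
        "--visfname", str(out_dir / f"{stem}_vis.png"),    # visualization
        str(image),
    ]


cmd = single_file_command(Path("images/fish_001.jpg"), Path("results"), device="cpu")
print(" ".join(cmd))
# To execute the command: subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems when file names contain spaces.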

|

| 166 |

+

|

| 167 |

+

|

| 168 |

+

### Running with Singularity

|

| 169 |

+

A Docker image is automatically built for each **drexel_metadata** release. The image has the requirements installed and includes the model file.
To run a specific release with Singularity, follow this pattern:

|

| 171 |

+

```
singularity run docker://ghcr.io/hdr-bgnn/drexel_metadata:<release> gen_metadata.py ...
```

|

| 174 |

+

|

| 175 |

+

|

| 176 |

+

## Training Details

|

| 177 |

+

|

| 178 |

+

### Training Data

|

| 179 |

+

|

| 180 |

+

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

|

| 181 |

+

- University of Wisconsin Zoological Museum: [Fishes Collection](https://uwzm.integrativebiology.wisc.edu/fishes-collection/) (2022)

|

| 182 |

+

- Illinois Natural History Survey: [INHS Fish Collection](https://fish.inhs.illinois.edu/) (2022)

|

| 183 |

+

|

| 184 |

+

The images were labeled by hand using [makesense.ai](https://github.com/SkalskiP/make-sense/) (Skalski, P.: Make Sense. https://github.com/SkalskiP/make-sense/ (2019)).

|

| 185 |

+

|

| 186 |

+

Initially, we had 64 examples of each class from the UWZM collection in the training set. One issue that we encountered was the lack of catfish (genus _Noturus_) in the training set, which led to a high count of undetected eyes in the testing set. Even for humans, it is visually difficult to locate catfish eyes, since they are either very close in color to the skin or do not look like typical fish eyes. Thus, 15 catfish images from each image dataset were added to the training set.

|

| 187 |

+

|

| 188 |

+

### Training Procedure

|

| 189 |

+

|

| 190 |

+

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

|

| 191 |

+

|

| 192 |

+

Setup:

|

| 193 |

+

|

| 194 |

+

1. Create a COCO JSON training set using the images and labels.
    1. This is currently done using [makesense](https://makesense.ai) in its polygon object detection mode.
    2. The labels currently used are `fish, ruler, eye, two, three`, in that exact order.
    3. Save as a COCO JSON after performing manual segmentation and labeling. Then, place it in [datasets](datasets/).
2. In the [config](config/) directory, create a JSON file whose keys are the names of image directories on your local system and whose values are arrays of dataset names in the [datasets](datasets/) folder.
    1. For multiple image collections, use multiple keys.
    2. For multiple datasets for the same collection, append to the respective value array.
    3. For example: `{"image_folder_name": ["dataset_name.json"]}`.
3. In the [train](train_model.py) script, set the `conf` variable at the top of the `main()` function to load the JSON file created in the previous step.
4. Create a text file named `overall_prefix.txt` in the [config](config/) folder. This file should contain the absolute path to the directory in which all the image repositories are stored.
    1. Currently it is `/usr/local/bgnn/`. There are various image folders, such as `tulane`, `inhs_filtered`, `uwzm_filtered`, etc.
5. To edit the learning rate, batch size, or any other base training configuration, edit the [base training configurations](config/Base-RCNN-FPN.yaml) file.
6. To edit the number of iterations, dropoffs, or any model-specific configurations, edit the [model training configurations](config/mask_rcnn_R_50_FPN_3x.yaml) file.
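
The two small configuration files described in the setup steps can also be written programmatically. The following Python sketch assumes a hypothetical helper `write_training_config`, a hypothetical config file name `training_data.json`, and made-up dataset file names; only the JSON shape, the `overall_prefix.txt` file name, and the example prefix `/usr/local/bgnn/` come from the steps above. Whether the training script expects a trailing newline in `overall_prefix.txt` is not stated, so none is written here.

```python
import json
import tempfile
from pathlib import Path


def write_training_config(config_dir: Path, dataset_map: dict, prefix: str,
                          name: str = "training_data.json") -> Path:
    """Write the dataset mapping and overall_prefix.txt into config_dir."""
    config_dir.mkdir(parents=True, exist_ok=True)
    config_path = config_dir / name
    # Keys are image directory names; values are lists of COCO JSON files
    # expected to live in the datasets/ folder.
    config_path.write_text(json.dumps(dataset_map, indent=2))
    # Absolute path under which all image directories are stored.
    (config_dir / "overall_prefix.txt").write_text(prefix)
    return config_path


# Hypothetical dataset names: two collections, one of them with two datasets.
dataset_map = {
    "inhs_filtered": ["inhs.json"],
    "uwzm_filtered": ["uwzm.json", "uwzm_catfish.json"],
}

# A temporary directory stands in for the repository's config/ folder.
with tempfile.TemporaryDirectory() as tmp:
    path = write_training_config(Path(tmp) / "config", dataset_map, "/usr/local/bgnn/")
    loaded = json.loads(path.read_text())
    prefix_text = (path.parent / "overall_prefix.txt").read_text()

print(loaded)
print(prefix_text)
```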

|

| 207 |

+

|

| 208 |

+

Finally, to train the model, run the following command:

|

| 209 |

+

|

| 210 |

+

```bash
pipenv run python3 train_model.py
```

|

| 213 |

+

|

| 214 |

+

#### Preprocessing [optional]

|

| 215 |

+

|

| 216 |

+

[More Information Needed]

|

| 217 |

+

|

| 218 |

+

|

| 219 |

+

#### Training Hyperparameters

|

| 220 |

+

|

| 221 |

+

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

|

| 222 |

+

|

| 223 |

+

#### Speeds, Sizes, Times [optional]

|

| 224 |

+

|

| 225 |

+

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

|

| 226 |

+

|

| 227 |

+

[More Information Needed]

|

| 228 |

+

|

| 229 |

+

## Evaluation

|

| 230 |

+

|

| 231 |

+

<!-- This section describes the evaluation protocols and provides the results. -->

|

| 232 |

+

|

| 233 |

+

### Testing Data, Factors & Metrics

|

| 234 |

+

|

| 235 |

+

#### Testing Data

|

| 236 |

+

|

| 237 |

+

<!-- This should link to a Data Card if possible. -->

|

| 238 |

+

|

| 239 |

+

[More Information Needed]

|

| 240 |

+

|

| 241 |

+

#### Factors

|

| 242 |

+

|

| 243 |

+

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

|

| 244 |

+

|

| 245 |

+

[More Information Needed]

|

| 246 |

+

|

| 247 |

+

#### Metrics

|

| 248 |

+

|

| 249 |

+

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

|

| 250 |

+

|

| 251 |

+

[More Information Needed]

|

| 252 |

+

|

| 253 |

+

### Results

|

| 254 |

+

|

| 255 |

+

[More Information Needed]

|

| 256 |

+

|

| 257 |

+

#### Summary

|

| 258 |

+

|

| 259 |

+

|

| 260 |

+

|

| 261 |

+

## Model Examination

|

| 262 |

+

|

| 263 |

+

<!-- Relevant interpretability work for the model goes here -->

|

| 264 |

+

|

| 265 |

+

### Goal

|

| 266 |

+

|

| 267 |

+

To develop a tool that checks the validity of metadata associated with an image and generates any metadata that is missing. The tool also computes various geometric and statistical properties of the mask generated over the biological specimen.

|

| 268 |

+

|

| 269 |

+

### Metadata Generation

|

| 270 |

+

|

| 271 |

+

The generated metadata is highly specific to our use case. We also apply additional image processing techniques to improve accuracy; these may not work for other use cases. They include:

1. Image scaling when a fish is detected but an eye is not, to reduce the number of missed eyes.
2. Selection of the highest-confidence fish bounding box, given our criterion of a single fish per image.
3. Contrast enhancement (CLAHE).
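
The selection of the highest-confidence fish can be sketched in a few lines of plain Python. This is a simplified illustration, not the repository's code: the dictionary layout of a detection, the function names, and the sample values are assumptions, while the 30% threshold is the one stated in the Model Description.

```python
from typing import Dict, List, Optional

SCORE_THRESHOLD = 0.3  # detections scoring at least 30% are retained


def keep_confident(detections: List[Dict]) -> List[Dict]:
    """Drop detections whose confidence score is below the threshold."""
    return [d for d in detections if d["score"] >= SCORE_THRESHOLD]


def best_fish(detections: List[Dict]) -> Optional[Dict]:
    """Return the retained fish detection with the highest score, if any."""
    fish = [d for d in keep_confident(detections) if d["label"] == "fish"]
    return max(fish, key=lambda d: d["score"]) if fish else None


# Made-up detections for one image: (x_min, y_min, x_max, y_max) boxes.
detections = [
    {"label": "fish", "score": 0.97, "bbox": (40, 60, 900, 400)},
    {"label": "fish", "score": 0.41, "bbox": (10, 10, 120, 80)},
    {"label": "eye", "score": 0.22, "bbox": (100, 150, 130, 180)},
    {"label": "ruler", "score": 0.88, "bbox": (0, 500, 1000, 560)},
]

print(best_fish(detections))
```

In this example the low-scoring eye is discarded, which is the situation in which the image scaling step from item 1 would be attempted.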

|

| 276 |

+

|

| 277 |

+

The generated metadata comprises various statistical and geometric properties of a biological specimen image or collection in JSON format. When a single file is passed, the data is printed to the console (stdout). When a directory is passed, the data is stored in a JSON file.

|

| 278 |

+

|

| 279 |

+

|

| 280 |

+

## Environmental Impact

|

| 281 |

+

|

| 282 |

+

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

|

| 283 |

+

|

| 284 |

+

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://doi.org/10.48550/arXiv.1910.09700).

|

| 285 |

+

|

| 286 |

+

- **Hardware Type:** [More Information Needed]

|

| 287 |

+

- **Hours used:** [More Information Needed]

|

| 288 |

+

- **Cloud Provider:** [More Information Needed]

|

| 289 |

+

- **Compute Region:** [More Information Needed]

|

| 290 |

+

- **Carbon Emitted:** [More Information Needed]

|

| 291 |

+

|

| 292 |

+

## Technical Specifications [optional]

|

| 293 |

+

|

| 294 |

+

### Model Architecture and Objective

|

| 295 |

+

|

| 296 |

+

[More Information Needed]

|

| 297 |

+

|

| 298 |

+

### Compute Infrastructure

|

| 299 |

+

|

| 300 |

+

[More Information Needed]

|

| 301 |

+

|

| 302 |

+

#### Hardware

|

| 303 |

+

|

| 304 |

+

[More Information Needed]

|

| 305 |

+

|

| 306 |

+

#### Software

|

| 307 |

+

|

| 308 |

+

[More Information Needed]

|

| 309 |

+

|

| 310 |

+

## Citation

|

| 311 |

+

|

| 312 |

+

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

|

| 313 |

+

|

| 314 |

+

Karnani, K., Pepper, J., Bakiş, Y. et al. Computational metadata generation methods for biological specimen image collections. Int J Digit Libr (2022). https://doi.org/10.1007/s00799-022-00342-1

|

| 315 |

+

|

| 316 |

+

### Associated Publication

|

| 317 |

+

|

| 318 |

+

J. Pepper, J. Greenberg, Y. Bakiş, X. Wang, H. Bart and D. Breen, "Automatic Metadata Generation for Fish Specimen Image Collections," 2021 ACM/IEEE Joint Conference on Digital Libraries (JCDL), 2021, pp. 31-40, doi: [10.1109/JCDL52503.2021.00015](https://doi.org/10.1109/JCDL52503.2021.00015).

|

| 319 |

+

|

| 320 |

+

|

| 321 |

+

**BibTeX:**

|

| 322 |

+

|

| 323 |

+

@article{KPB2022,
  title = "Computational metadata generation methods for biological specimen image collections",
  author = "Karnani, K. and Pepper, J. and Bakiş, Y. and others",
  journal = "Int J Digit Libr",
  year = "2022"
}

|

| 334 |

+

<!--

|

| 335 |

+

**APA:**

|

| 336 |

+

|

| 337 |

+

[More Information Needed] -->

|

| 338 |

+

|

| 339 |

+

## Glossary

|

| 340 |

+

|

| 341 |

+

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

|

| 342 |

+

|

| 343 |

+

### Properties Generated

| **Property** | **Association** | **Type** | **Explanation** |
|---|---|---|---|
| has\_fish | Overall Image | Boolean | Whether a fish was found in the image. |
| fish\_count | Overall Image | Integer | The quantity of fish present. |
| has\_ruler | Overall Image | Boolean | Whether a ruler was found in the image. |
| ruler\_bbox | Overall Image | 4 Tuple | The bounding box of the ruler (if found). |
| scale* | Overall Image | Float | The scale of the image in $\frac{\mathrm{pixels}}{\mathrm{cm}}$. |
| bbox | Per Fish | 4 Tuple | The top left and bottom right coordinates of the bounding box for a fish. |
| background.mean | Per Fish | Float | The mean intensity of the background within a given fish's bounding box. |
| background.std | Per Fish | Float | The standard deviation of the background within a given fish's bounding box. |
| foreground.mean | Per Fish | Float | The mean intensity of the foreground within a given fish's bounding box. |
| foreground.std | Per Fish | Float | The standard deviation of the foreground within a given fish's bounding box. |
| contrast* | Per Fish | Float | The contrast between foreground and background intensities within a given fish's bounding box. |
| centroid | Per Fish | 4 Tuple | The centroid of a given fish's bitmask. |
| primary\_axis* | Per Fish | 2D Vector | The unit length primary axis (eigenvector) for the bitmask of a given fish. |
| clock\_value* | Per Fish | Integer | Fish's primary axis converted into an integer "clock value" between 1 and 12. |
| oriented\_length* | Per Fish | Float | The length of the fish bounding box in centimeters. |
| mask | Per Fish | 2D Matrix | The bitmask of a fish in 0's and 1's. |
| pixel\_analysis\_failed | Per Fish | Boolean | Whether the pixel analysis process failed for a given fish. If true, detectron's mask and bounding box were used for metadata generation. |
| score | Per Fish | Float | The percent confidence score output by detectron for a given fish. |
| has\_eye | Per Fish | Boolean | Whether an eye was found for a given fish. |
| eye\_center | Per Fish | 2 Tuple | The centroid of a fish's eye. |
| side* | Per Fish | String | The side (i.e. 'left' or 'right') of the fish that is facing the camera (dependent on finding its eye). |
| area | Per Fish | Float | Area of fish in $\mathrm{cm^2}$. |
| cont\_length | Per Fish | Float | The longest contiguous length of the fish in centimeters. |
| cont\_width | Per Fish | Float | The longest contiguous width of the fish in centimeters. |
| convex\_area | Per Fish | Float | Area of convex hull image (smallest convex polygon that encloses the fish) in $\mathrm{cm^2}$. |
| eccentricity | Per Fish | Float | Ratio of the focal distance over the major axis length of the ellipse that has the same second-moments as the fish. |
| extent | Per Fish | Float | Ratio of pixels of fish to pixels in the total bounding box. Computed as $\frac{\mathrm{area}}{\mathrm{rows} \times \mathrm{cols}}$. |
| feret\_diameter\_max | Per Fish | Float | The longest distance between points around the fish’s convex hull contour. |
| kurtosis | Per Fish | 2D Vector | The sharpness of the peaks of the frequency-distribution curve of mask pixel coordinates. |
| major\_axis\_length | Per Fish | Float | The length of the major axis of the ellipse that has the same normalized second central moments as the fish. |
| mask.encoding | Per Fish | String | The 8-way Freeman Encoding of the outline of the fish. |
| mask.start\_coord | Per Fish | 2D Vector | The starting coordinate of the Freeman encoded mask. |
| minor\_axis\_length | Per Fish | Float | The length of the minor axis of the ellipse that has the same normalized second central moments as the fish. |
| oriented\_width | Per Fish | Float | The width of the fish bounding box in centimeters. |
| perimeter | Per Fish | Float | The approximation of the contour in centimeters as a line through the centers of border pixels using 8-connectivity. |
| skew | Per Fish | 2D Vector | The measure of asymmetry of the frequency-distribution curve of mask pixel coordinates. |
| solidity | Per Fish | Float | The ratio of pixels in the fish to pixels of the convex hull image. |
| std | Per Fish | Float | The standard deviation of the mask pixel coordinate distribution. |
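
A few of these properties follow directly from their definitions. The Python sketch below computes `extent` from a small bitmask, derives `eccentricity` from the major and minor axis lengths of the equivalent ellipse, and converts pixel measurements to centimeters using `scale`. The function names and sample values are illustrative assumptions, and the code is not taken from the repository, which may compute these quantities differently.

```python
import math
from typing import List


def extent(mask: List[List[int]]) -> float:
    """Fish pixels divided by the pixels of the mask's bounding box (rows * cols)."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    area = sum(sum(row) for row in mask)
    height = rows[-1] - rows[0] + 1
    width = cols[-1] - cols[0] + 1
    return area / (height * width)


def eccentricity(major_axis_length: float, minor_axis_length: float) -> float:
    """Focal distance over major axis length for an ellipse with these axes."""
    return math.sqrt(1.0 - (minor_axis_length / major_axis_length) ** 2)


def pixels_to_cm(length_px: float, scale_px_per_cm: float) -> float:
    """Convert a length in pixels to centimeters using the image scale."""
    return length_px / scale_px_per_cm


def pixels_to_cm2(area_px: float, scale_px_per_cm: float) -> float:
    """Convert an area in pixels to square centimeters using the image scale."""
    return area_px / scale_px_per_cm ** 2


# Toy bitmask: 5 fish pixels inside a 2 x 3 bounding box.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

print(extent(mask))              # 5 / 6
print(eccentricity(10.0, 6.0))   # close to 0.8
print(pixels_to_cm(450.0, 90.0))
```

Note that `extent` as written assumes the mask contains at least one fish pixel; an empty mask would need to be handled separately.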

|

| 384 |

+

|

| 385 |

+

|

| 386 |

+

## More Information

|

| 387 |

+

|

| 388 |

+

Research supported by NSF Office of Advanced Cyberinfrastructure (OAC) #1940233 and #1940322.

|

| 389 |

+

|

| 390 |

+

### Authors and Affiliations:

|

| 391 |

+

**Computer Science Department, Drexel University, Philadelphia, PA, USA**

|

| 392 |

+

|

| 393 |

+

Kevin Karnani, Joel Pepper (corresponding author) & David E. Breen

|

| 394 |

+

|

| 395 |

+

**Biodiversity Research Institute, Tulane University, New Orleans, LA, USA**

|

| 396 |

+

|

| 397 |

+

Yasin Bakiş, Xiaojun Wang & Henry Bart Jr.

|

| 398 |

+

|

| 399 |

+

**Information Science Department, Drexel University, Philadelphia, PA, USA**

|

| 400 |

+

|

| 401 |

+

Jane Greenberg

|

| 402 |

+

|

| 403 |

+

## Model Card Authors [optional]

|

| 404 |

+

|

| 405 |

+

[More Information Needed]

|

| 406 |

+

|

| 407 |

+

## Model Card Contact

|

| 408 |

+

|

| 409 |

+

[More Information Needed]

|