Commit

•

e3e7026

1

Parent(s):

df45c2c

Update README.md

Browse files

README.md

CHANGED

|

@@ -62,13 +62,16 @@ Given an edit instruction and an original text, our model can generate the edite

|

|

| 62 |

|

| 63 |

|

| 64 |

|

|

|

|

|

|

|

|

|

|

| 65 |

## Usage

|

| 66 |

```python

|

| 67 |

from transformers import AutoTokenizer, T5ForConditionalGeneration

|

| 68 |

|

| 69 |

-

tokenizer = AutoTokenizer.from_pretrained("grammarly/coedit-

|

| 70 |

-

model = T5ForConditionalGeneration.from_pretrained("grammarly/coedit-

|

| 71 |

-

input_text = 'Fix grammatical errors in this sentence: New kinds of vehicles will be invented with new technology than today.'

|

| 72 |

input_ids = tokenizer(input_text, return_tensors="pt").input_ids

|

| 73 |

outputs = model.generate(input_ids, max_length=256)

|

| 74 |

edited_text = tokenizer.decode(outputs[0], skip_special_tokens=True)[0]

|

|

|

|

| 62 |

|

| 63 |

|

| 64 |

|

| 65 |

+

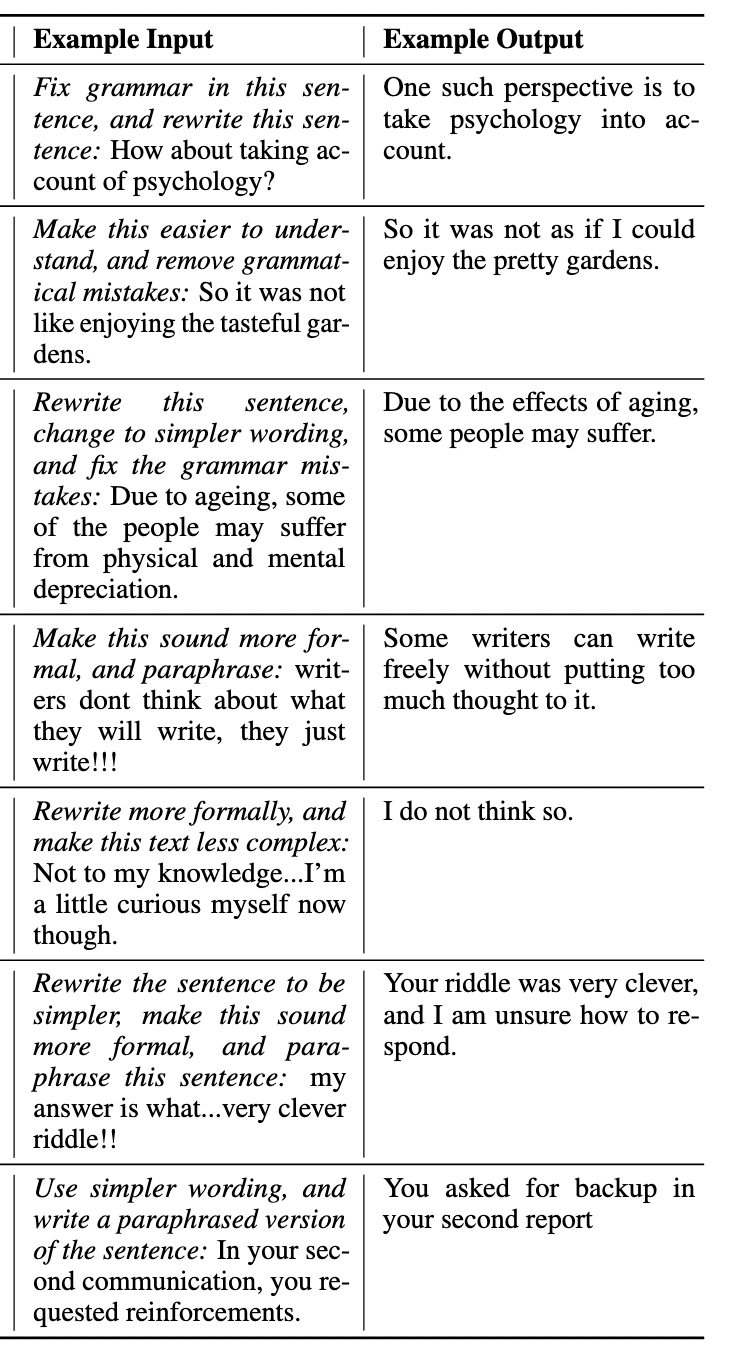

This model can also perform edits on composite instructions, as shown below:

|

| 66 |

+

|

| 67 |

+

|

| 68 |

## Usage

|

| 69 |

```python

|

| 70 |

from transformers import AutoTokenizer, T5ForConditionalGeneration

|

| 71 |

|

| 72 |

+

tokenizer = AutoTokenizer.from_pretrained("grammarly/coedit-xl-composite")

|

| 73 |

+

model = T5ForConditionalGeneration.from_pretrained("grammarly/coedit-xl-composite")

|

| 74 |

+

input_text = 'Fix grammatical errors in this sentence and make it simpler: New kinds of vehicles will be invented with new technology than today.'

|

| 75 |

input_ids = tokenizer(input_text, return_tensors="pt").input_ids

|

| 76 |

outputs = model.generate(input_ids, max_length=256)

|

| 77 |

edited_text = tokenizer.decode(outputs[0], skip_special_tokens=True)[0]

|