Update README.md

README.md

CHANGED

|

@@ -1,3 +1,36 @@

| 1 |

+

---

|

| 2 |

+

language: multilingual

|

| 3 |

+

datasets:

|

| 4 |

+

- common_voice

|

| 5 |

+

tags:

|

| 6 |

+

- speech

|

| 7 |

+

- automatic-speech-recognition

|

| 8 |

+

license: apache-2.0

|

| 9 |

+

---

|

| 10 |

|

| 11 |

+

# Wav2Vec2-1B-XLS-R

|

| 12 |

+

|

| 13 |

+

[Facebook's Wav2Vec2 XLS-R](https://ai.facebook.com/blog/)

|

| 14 |

+

|

| 15 |

+

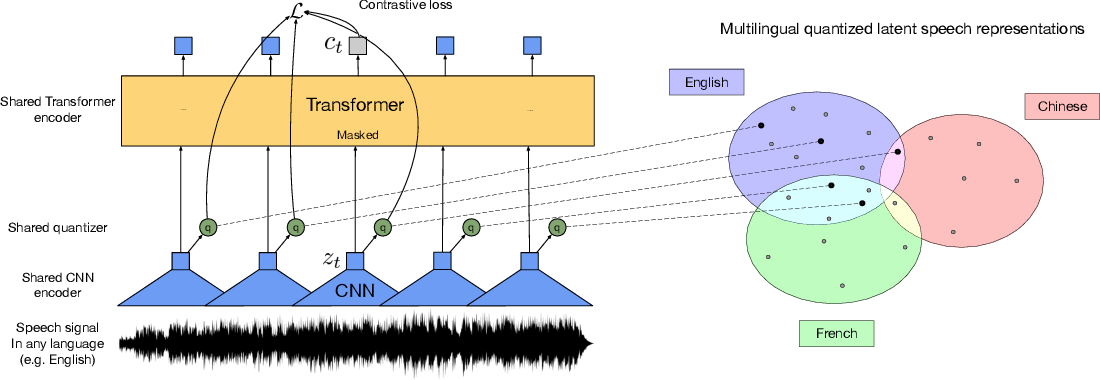

XLS-R is Facebook AI's large-scale multilingual pretrained model for speech (the "XLM-R for Speech"), pretrained with the wav2vec 2.0 approach on 436k hours of unlabeled speech in 128 languages. When using the model, make sure that your speech input is sampled at 16 kHz. Note that this model should be fine-tuned on a downstream task, such as automatic speech recognition, translation, or classification. Check out [this blog](https://huggingface.co/blog/fine-tune-wav2vec2-english) for more information about ASR.
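The 16 kHz requirement can be checked before any audio reaches the model. The following is a minimal sketch using only Python's standard `wave` module, assuming the input is an uncompressed WAV file. The helper names (`wav_sample_rate`, `is_model_ready`, `write_silence`) are hypothetical and are not part of this model card or of any library.

```python
import os
import tempfile
import wave

EXPECTED_RATE_HZ = 16_000  # sampling rate the model card asks for


def wav_sample_rate(path):
    """Return the sampling rate (in Hz) stored in a WAV file's header."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def is_model_ready(path, expected_rate=EXPECTED_RATE_HZ):
    """Return True if the file is already sampled at the expected rate."""
    return wav_sample_rate(path) == expected_rate


def write_silence(path, rate_hz, seconds=1):
    """Write a mono, 16-bit WAV file of silence (hypothetical demo helper)."""
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav_file.setframerate(rate_hz)
        wav_file.writeframes(b"\x00\x00" * rate_hz * seconds)


if __name__ == "__main__":
    # Create two throwaway files and check each against the 16 kHz requirement.
    tmp_dir = tempfile.mkdtemp()
    for rate in (16_000, 8_000):
        demo_path = os.path.join(tmp_dir, f"demo_{rate}.wav")
        write_silence(demo_path, rate)
        print(demo_path, wav_sample_rate(demo_path), is_model_ready(demo_path))
```

A file that fails this check would need to be resampled to 16 kHz with an audio library of your choice before being passed to the model. The check only reads the header and does not modify the audio.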

|

| 16 |

+

|

| 17 |

+

[XLS-R Paper](https://arxiv.org/abs/)

|

| 18 |

+

|

| 19 |

+

Authors: ...

|

| 20 |

+

|

| 21 |

+

**Abstract**

|

| 22 |

+

This paper presents XLS-R, a large-scale study on learning cross-lingual speech representations with wav2vec 2.0.

|

| 23 |

+

Models are pretrained on 436K hours of publicly available speech audio in 128 languages, an order of magnitude more public data than the largest known prior work.

|

| 24 |

+

The best XLS-R model sets a new state of the art on a wide range of tasks, domains, data regimes and languages, both high and low-resource.

|

| 25 |

+

On the CoVoST-2 speech translation benchmark, we improve the previous state of the art by an average of 5.5 BLEU over 21 translation directions into English.

|

| 26 |

+

In speech recognition, we improve over the state of the art on five languages of BABEL by XX.X average WER. On MLS and VoxPopuli, we beat previous work by XX.X and YY.Y WER respectively on average. We also outperform the best prior work on VoxLingua107 language identification by 1% error rate.

|

| 27 |

+

We hope XLS-R can help improve speech processing tasks for many more languages of the world.

|

| 28 |

+

Models and code are available at [www.github.com/pytorch/fairseq/tree/master/examples/wav2vec](https://www.github.com/pytorch/fairseq/tree/master/examples/wav2vec).

|

| 29 |

+

|

| 30 |

+

The original model can be found under https://github.com/pytorch/fairseq/tree/master/examples/wav2vec#wav2vec-20.

|

| 31 |

+

|

| 32 |

+

# Usage

|

| 33 |

+

|

| 34 |

+

See [this notebook](https://colab.research.google.com/github/patrickvonplaten/notebooks/blob/master/Fine_Tune_XLSR_Wav2Vec2_on_Turkish_ASR_with_%F0%9F%A4%97_Transformers.ipynb) for more information on how to fine-tune the model.

|

| 35 |

+

|

| 36 |

+

|