ArantxaCasanova committed a00ee36 (parent: 03f1c62)

First model version

This view is limited to 50 files because it contains too many changes.

- BigGAN_PyTorch/BigGAN.py +711 -0

- BigGAN_PyTorch/BigGANdeep.py +734 -0

- BigGAN_PyTorch/LICENSE +21 -0

- BigGAN_PyTorch/README.md +144 -0

- BigGAN_PyTorch/TFHub/README.md +14 -0

- BigGAN_PyTorch/TFHub/biggan_v1.py +441 -0

- BigGAN_PyTorch/TFHub/converter.py +558 -0

- BigGAN_PyTorch/animal_hash.py +2652 -0

- BigGAN_PyTorch/config_files/COCO_Stuff/BigGAN/unconditional_biggan_res128.json +44 -0

- BigGAN_PyTorch/config_files/COCO_Stuff/BigGAN/unconditional_biggan_res256.json +44 -0

- BigGAN_PyTorch/config_files/COCO_Stuff/IC-GAN/icgan_res128_ddp.json +51 -0

- BigGAN_PyTorch/config_files/COCO_Stuff/IC-GAN/icgan_res256_ddp.json +51 -0

- BigGAN_PyTorch/config_files/ImageNet-LT/BigGAN/biggan_res128.json +48 -0

- BigGAN_PyTorch/config_files/ImageNet-LT/BigGAN/biggan_res256.json +48 -0

- BigGAN_PyTorch/config_files/ImageNet-LT/BigGAN/biggan_res64.json +48 -0

- BigGAN_PyTorch/config_files/ImageNet-LT/cc_IC-GAN/cc_icgan_res128.json +56 -0

- BigGAN_PyTorch/config_files/ImageNet-LT/cc_IC-GAN/cc_icgan_res256.json +56 -0

- BigGAN_PyTorch/config_files/ImageNet-LT/cc_IC-GAN/cc_icgan_res64.json +56 -0

- BigGAN_PyTorch/config_files/ImageNet/BigGAN/biggan_res128.json +40 -0

- BigGAN_PyTorch/config_files/ImageNet/BigGAN/biggan_res256_half_cap.json +40 -0

- BigGAN_PyTorch/config_files/ImageNet/BigGAN/biggan_res64.json +40 -0

- BigGAN_PyTorch/config_files/ImageNet/IC-GAN/icgan_res128.json +48 -0

- BigGAN_PyTorch/config_files/ImageNet/IC-GAN/icgan_res256.json +47 -0

- BigGAN_PyTorch/config_files/ImageNet/IC-GAN/icgan_res256_halfcap.json +47 -0

- BigGAN_PyTorch/config_files/ImageNet/IC-GAN/icgan_res64.json +48 -0

- BigGAN_PyTorch/config_files/ImageNet/cc_IC-GAN/cc_icgan_res128.json +48 -0

- BigGAN_PyTorch/config_files/ImageNet/cc_IC-GAN/cc_icgan_res256.json +47 -0

- BigGAN_PyTorch/config_files/ImageNet/cc_IC-GAN/cc_icgan_res256_halfcap.json +48 -0

- BigGAN_PyTorch/config_files/ImageNet/cc_IC-GAN/cc_icgan_res64.json +48 -0

- BigGAN_PyTorch/diffaugment_utils.py +119 -0

- BigGAN_PyTorch/imagenet_lt/ImageNet_LT_train.txt +0 -0

- BigGAN_PyTorch/imagenet_lt/ImageNet_LT_val.txt +0 -0

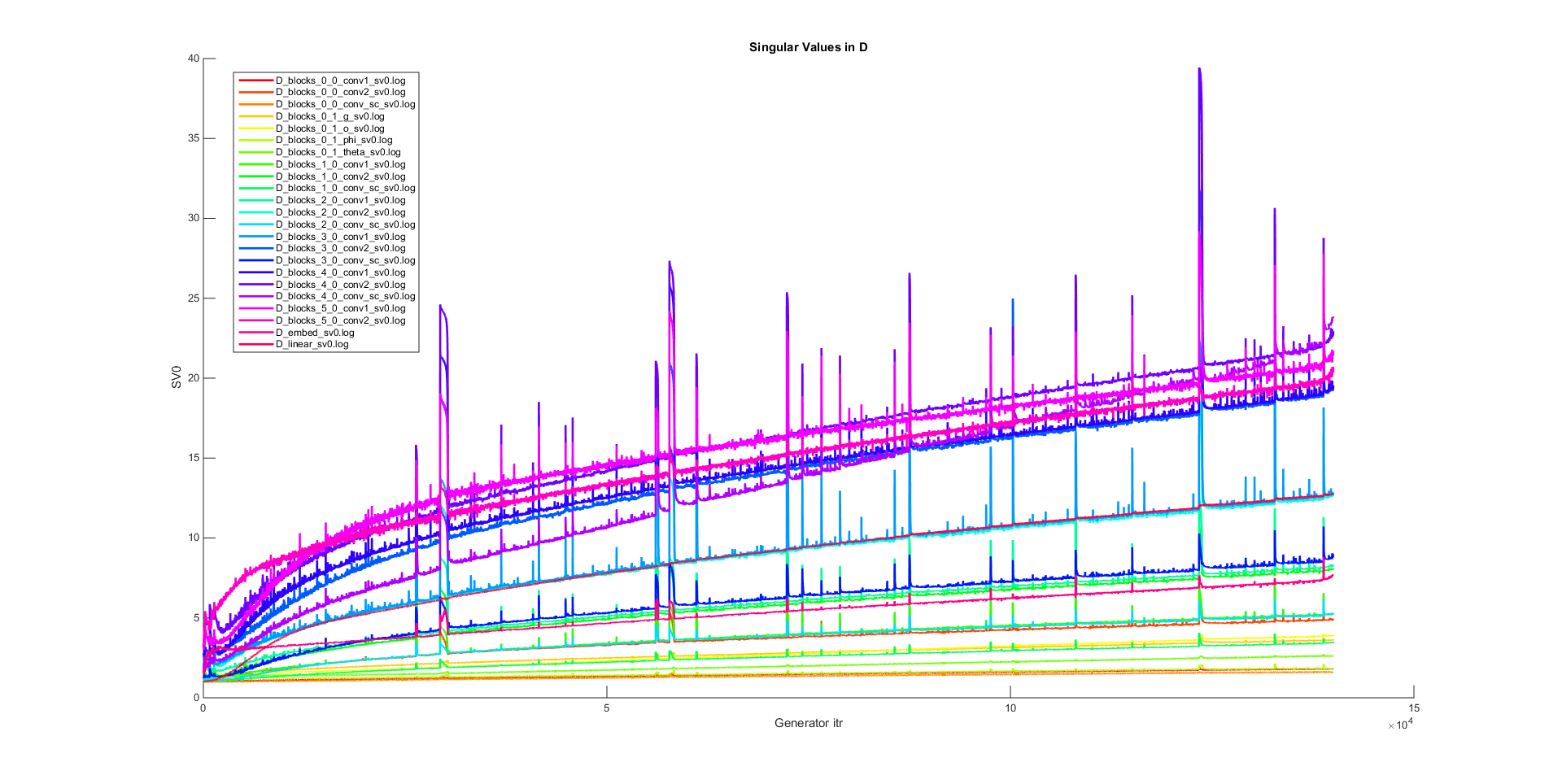

- BigGAN_PyTorch/imgs/D Singular Values.png +0 -0

- BigGAN_PyTorch/imgs/DeepSamples.png +0 -0

- BigGAN_PyTorch/imgs/DogBall.png +0 -0

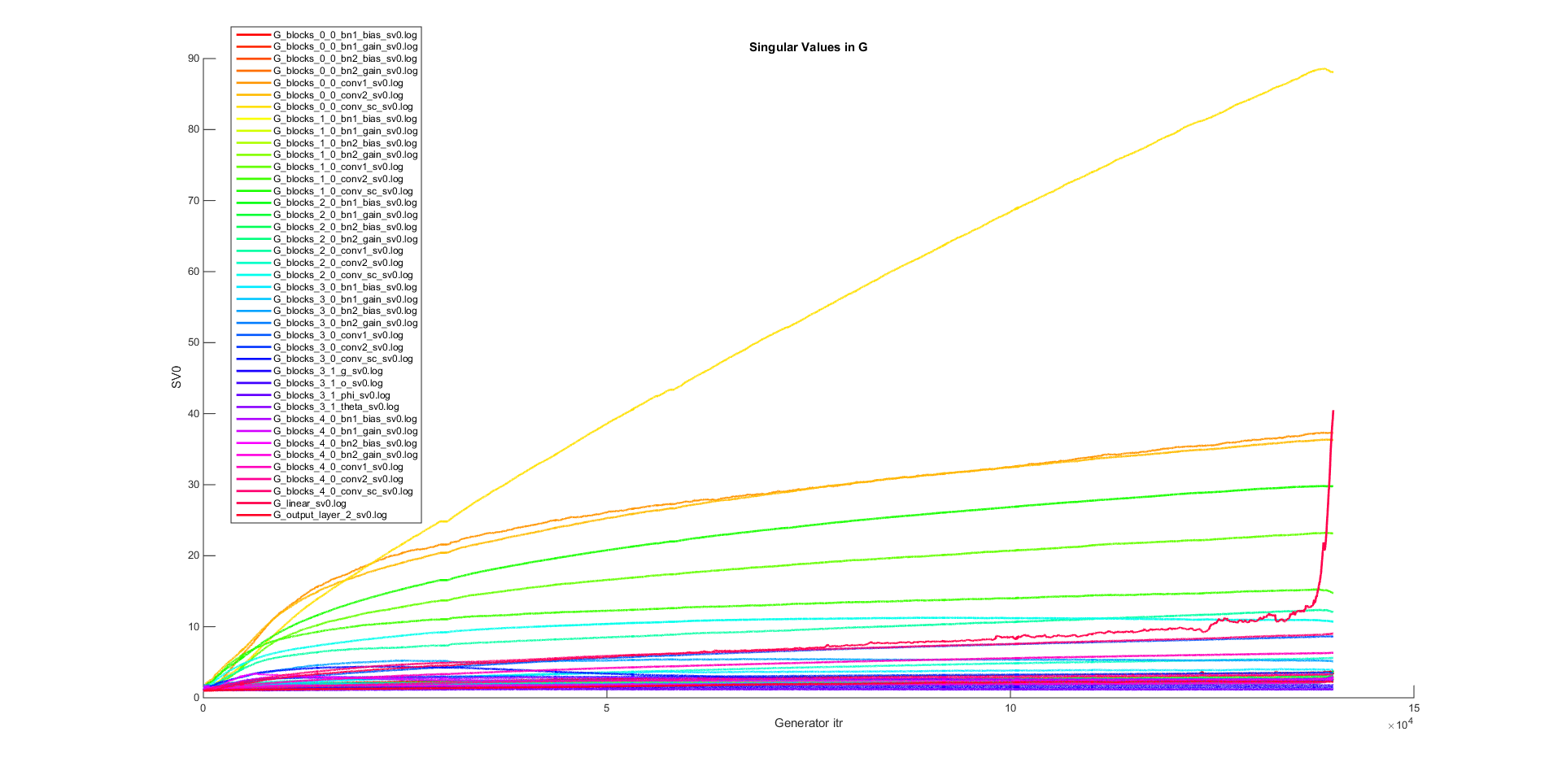

- BigGAN_PyTorch/imgs/G Singular Values.png +0 -0

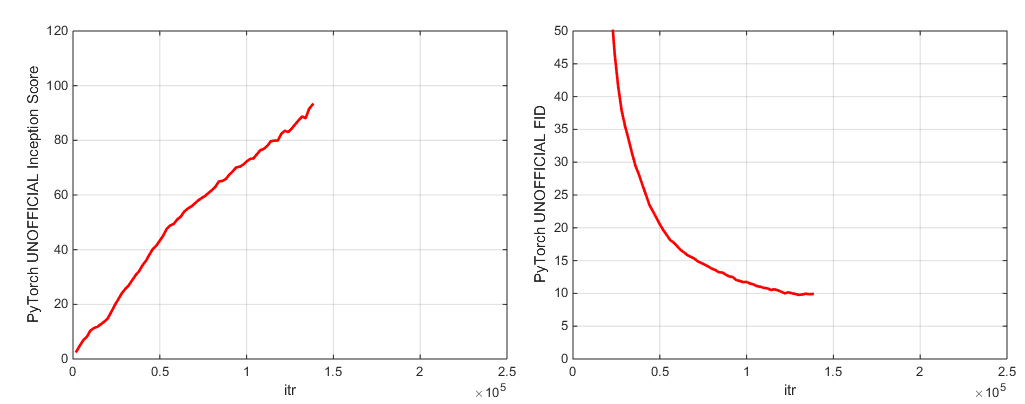

- BigGAN_PyTorch/imgs/IS_FID.png +0 -0

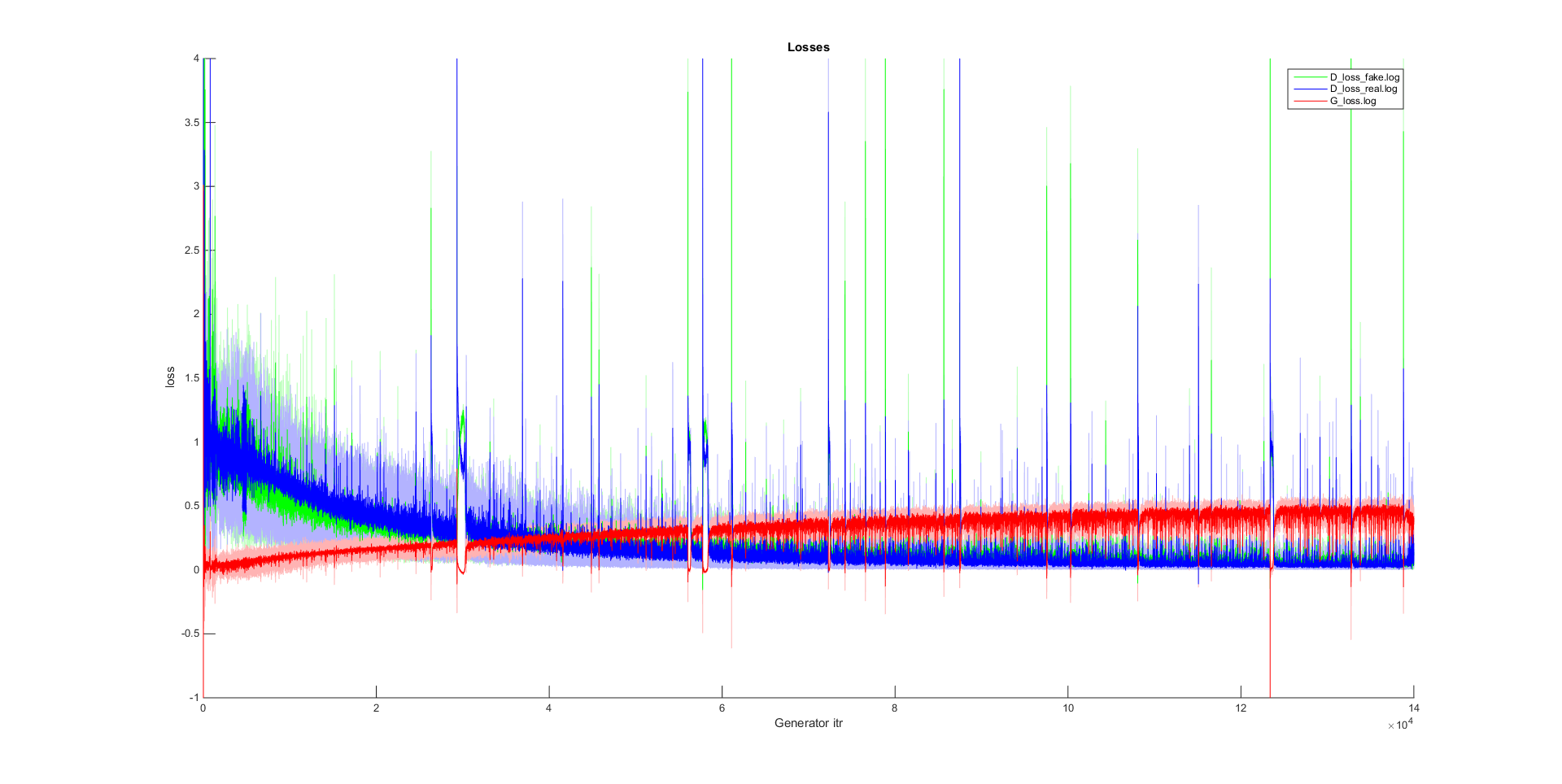

- BigGAN_PyTorch/imgs/Losses.png +0 -0

- BigGAN_PyTorch/imgs/header_image.jpg +0 -0

- BigGAN_PyTorch/imgs/interp_sample.jpg +0 -0

- BigGAN_PyTorch/layers.py +616 -0

- BigGAN_PyTorch/logs/BigGAN_ch96_bs256x8.jsonl +68 -0

- BigGAN_PyTorch/logs/compare_IS.m +97 -0

- BigGAN_PyTorch/logs/metalog.txt +3 -0

- BigGAN_PyTorch/logs/process_inception_log.m +27 -0

- BigGAN_PyTorch/logs/process_training.m +117 -0

- BigGAN_PyTorch/losses.py +43 -0

- BigGAN_PyTorch/make_hdf5.py +193 -0

- BigGAN_PyTorch/run.py +75 -0

- BigGAN_PyTorch/scripts/launch_BigGAN_bs256x8.sh +26 -0

`BigGAN_PyTorch/BigGAN.py` ADDED, hunk `@@ -0,0 +1,711 @@`

```python
# Copyright (c) Facebook, Inc. and its affiliates.
# All rights reserved.
#
# All contributions by Andy Brock:
# Copyright (c) 2019 Andy Brock
#
# MIT License

import numpy as np
import math
import functools
import os

import torch
import torch.nn as nn
from torch.nn import init
import torch.optim as optim
import torch.nn.functional as F

# from torch.nn import Parameter as P
import sys

sys.path.insert(1, os.path.join(sys.path[0], ".."))
import BigGAN_PyTorch.layers as layers

# from sync_batchnorm import SynchronizedBatchNorm2d as SyncBatchNorm2d
from BigGAN_PyTorch.diffaugment_utils import DiffAugment
```

```python
# Architectures for G
# Attention is passed in in the format '32_64' to mean applying an attention
# block at both resolution 32x32 and 64x64. Just '64' will apply at 64x64.
def G_arch(ch=64, attention="64", ksize="333333", dilation="111111"):
    arch = {}
    arch[512] = {
        "in_channels": [ch * item for item in [16, 16, 8, 8, 4, 2, 1]],
        "out_channels": [ch * item for item in [16, 8, 8, 4, 2, 1, 1]],
        "upsample": [True] * 7,
        "resolution": [8, 16, 32, 64, 128, 256, 512],
        "attention": {
            2 ** i: (2 ** i in [int(item) for item in attention.split("_")])
            for i in range(3, 10)
        },
    }
    arch[256] = {
        "in_channels": [ch * item for item in [16, 16, 8, 8, 4, 2]],
        "out_channels": [ch * item for item in [16, 8, 8, 4, 2, 1]],
        "upsample": [True] * 6,
        "resolution": [8, 16, 32, 64, 128, 256],
        "attention": {
            2 ** i: (2 ** i in [int(item) for item in attention.split("_")])
            for i in range(3, 9)
        },
    }
    arch[128] = {
        "in_channels": [ch * item for item in [16, 16, 8, 4, 2]],
        "out_channels": [ch * item for item in [16, 8, 4, 2, 1]],
        "upsample": [True] * 5,
        "resolution": [8, 16, 32, 64, 128],
        "attention": {
            2 ** i: (2 ** i in [int(item) for item in attention.split("_")])
            for i in range(3, 8)
        },
    }
    arch[64] = {
        "in_channels": [ch * item for item in [16, 16, 8, 4]],
        "out_channels": [ch * item for item in [16, 8, 4, 2]],
        "upsample": [True] * 4,
        "resolution": [8, 16, 32, 64],
        "attention": {
            2 ** i: (2 ** i in [int(item) for item in attention.split("_")])
            for i in range(3, 7)
        },
    }
    arch[32] = {
        "in_channels": [ch * item for item in [4, 4, 4]],
        "out_channels": [ch * item for item in [4, 4, 4]],
        "upsample": [True] * 3,
        "resolution": [8, 16, 32],
        "attention": {
            2 ** i: (2 ** i in [int(item) for item in attention.split("_")])
            for i in range(3, 6)
        },
    }

    return arch
```
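To see how the fields of one `arch` entry line up, the sketch below restates the `arch[128]` entry of `G_arch` as a standalone function and lists, per GBlock, its input and output widths, the resolution it produces, and whether an attention layer is appended. `g_arch_128` and `describe_blocks` are hypothetical helper names introduced only for this illustration; they are not part of the repository. Because `bottom_width` defaults to 4 and every block upsamples by 2, the first block already outputs 8x8, which is why each `resolution` list starts at 8.

```python
def g_arch_128(ch=64, attention="64"):
    """Standalone restatement of the arch[128] entry of G_arch above."""
    requested = [int(item) for item in attention.split("_")]
    return {
        "in_channels": [ch * item for item in [16, 16, 8, 4, 2]],
        "out_channels": [ch * item for item in [16, 8, 4, 2, 1]],
        "upsample": [True] * 5,
        "resolution": [8, 16, 32, 64, 128],
        # Same rule as G_arch: resolution 2**i gets attention iff it was requested
        "attention": {2 ** i: (2 ** i in requested) for i in range(3, 8)},
    }


def describe_blocks(arch):
    """Return one (in_ch, out_ch, output_resolution, has_attention) tuple per GBlock."""
    rows = []
    for index in range(len(arch["out_channels"])):
        res = arch["resolution"][index]
        rows.append(
            (arch["in_channels"][index], arch["out_channels"][index], res, arch["attention"][res])
        )
    return rows


# ch=96 with attention at 32x32 and 64x64
for row in describe_blocks(g_arch_128(ch=96, attention="32_64")):
    print(row)
# (1536, 1536, 8, False)
# (1536, 768, 16, False)
# (768, 384, 32, True)
# (384, 192, 64, True)
# (192, 96, 128, False)
```

A requested resolution that is not a power of two in the range (for example `"48"`) is silently ignored, since the dict only has power-of-two keys and none of them match.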

```python
class Generator(nn.Module):
    def __init__(
        self,
        G_ch=64,
        dim_z=128,
        bottom_width=4,
        resolution=128,
        G_kernel_size=3,
        G_attn="64",
        n_classes=1000,
        num_G_SVs=1,
        num_G_SV_itrs=1,
        G_shared=True,
        shared_dim=0,
        hier=False,
        cross_replica=False,
        mybn=False,
        G_activation=nn.ReLU(inplace=False),
        G_lr=5e-5,
        G_B1=0.0,
        G_B2=0.999,
        adam_eps=1e-8,
        BN_eps=1e-5,
        SN_eps=1e-12,
        G_mixed_precision=False,
        G_fp16=False,
        G_init="ortho",
        skip_init=False,
        no_optim=False,
        G_param="SN",
        norm_style="bn",
        class_cond=True,
        embedded_optimizer=True,
        instance_cond=False,
        G_shared_feat=True,
        shared_dim_feat=2048,
        **kwargs
    ):
        super(Generator, self).__init__()
        # Channel width multiplier
        self.ch = G_ch
        # Dimensionality of the latent space
        self.dim_z = dim_z
        # The initial spatial dimensions
        self.bottom_width = bottom_width
        # Resolution of the output
        self.resolution = resolution
        # Kernel size?
        self.kernel_size = G_kernel_size
        # Attention?
        self.attention = G_attn
        # number of classes, for use in categorical conditional generation
        self.n_classes = n_classes
        # Use shared embeddings?
        self.G_shared = G_shared
        # Dimensionality of the shared embedding? Unused if not using G_shared
        self.shared_dim = shared_dim if shared_dim > 0 else dim_z
        # Hierarchical latent space?
        self.hier = hier
        # Cross replica batchnorm?
        self.cross_replica = cross_replica
        # Use my batchnorm?
        self.mybn = mybn
        # nonlinearity for residual blocks
        self.activation = G_activation
        # Initialization style
        self.init = G_init
        # Parameterization style
        self.G_param = G_param
        # Normalization style
        self.norm_style = norm_style
        # Epsilon for BatchNorm?
        self.BN_eps = BN_eps
        # Epsilon for Spectral Norm?
        self.SN_eps = SN_eps
        # fp16?
        self.fp16 = G_fp16
        # Use embeddings for instance features?
        self.G_shared_feat = G_shared_feat
        self.shared_dim_feat = shared_dim_feat
        # Architecture dict
        self.arch = G_arch(self.ch, self.attention)[resolution]

        # If using hierarchical latents, adjust z
        if self.hier:
            # Number of places z slots into
            self.num_slots = len(self.arch["in_channels"]) + 1
            self.z_chunk_size = self.dim_z // self.num_slots
            # Recalculate latent dimensionality for even splitting into chunks
            self.dim_z = self.z_chunk_size * self.num_slots
        else:
            self.num_slots = 1
            self.z_chunk_size = 0

        # Which convs, batchnorms, and linear layers to use
        if self.G_param == "SN":
            self.which_conv = functools.partial(
                layers.SNConv2d,
                kernel_size=3,
                padding=1,
                num_svs=num_G_SVs,
                num_itrs=num_G_SV_itrs,
                eps=self.SN_eps,
            )
            self.which_linear = functools.partial(
                layers.SNLinear,
                num_svs=num_G_SVs,
                num_itrs=num_G_SV_itrs,
                eps=self.SN_eps,
            )
        else:
            self.which_conv = functools.partial(nn.Conv2d, kernel_size=3, padding=1)
            self.which_linear = nn.Linear

        # We use a non-spectral-normed embedding here regardless;
        # For some reason applying SN to G's embedding seems to randomly cripple G
        self.which_embedding = nn.Embedding
        bn_linear = (
            functools.partial(self.which_linear, bias=False)
            if self.G_shared
            else self.which_embedding
        )
        if not class_cond and not instance_cond:
            input_sz_bn = self.n_classes
        else:
            input_sz_bn = self.z_chunk_size
            if class_cond:
                input_sz_bn += self.shared_dim
            if instance_cond:
                input_sz_bn += self.shared_dim_feat
        self.which_bn = functools.partial(
            layers.ccbn,
            which_linear=bn_linear,
            cross_replica=self.cross_replica,
            mybn=self.mybn,
            input_size=input_sz_bn,
            norm_style=self.norm_style,
            eps=self.BN_eps,
        )

        # Prepare model
        # If not using shared embeddings, self.shared is just a passthrough
        self.shared = (
            self.which_embedding(n_classes, self.shared_dim)
            if G_shared
            else layers.identity()
        )
        self.shared_feat = (
            self.which_linear(2048, self.shared_dim_feat)
            if G_shared_feat
            else layers.identity()
        )
        # First linear layer
        self.linear = self.which_linear(
            self.dim_z // self.num_slots,
            self.arch["in_channels"][0] * (self.bottom_width ** 2),
        )

        # self.blocks is a doubly-nested list of modules, the outer loop intended
        # to be over blocks at a given resolution (resblocks and/or self-attention)
        # while the inner loop is over a given block
        self.blocks = []
        for index in range(len(self.arch["out_channels"])):
            self.blocks += [
                [
                    layers.GBlock(
                        in_channels=self.arch["in_channels"][index],
                        out_channels=self.arch["out_channels"][index],
                        which_conv=self.which_conv,
                        which_bn=self.which_bn,
                        activation=self.activation,
                        upsample=(
                            functools.partial(F.interpolate, scale_factor=2)
                            if self.arch["upsample"][index]
                            else None
                        ),
                    )
                ]
            ]

            # If attention on this block, attach it to the end
            if self.arch["attention"][self.arch["resolution"][index]]:
                print(
                    "Adding attention layer in G at resolution %d"
                    % self.arch["resolution"][index]
                )
                self.blocks[-1] += [
                    layers.Attention(self.arch["out_channels"][index], self.which_conv)
                ]

        # Turn self.blocks into a ModuleList so that it's all properly registered.
        self.blocks = nn.ModuleList([nn.ModuleList(block) for block in self.blocks])

        # output layer: batchnorm-relu-conv.
        # Consider using a non-spectral conv here
        self.output_layer = nn.Sequential(
            layers.bn(
                self.arch["out_channels"][-1],
                cross_replica=self.cross_replica,
                mybn=self.mybn,
            ),
            self.activation,
            self.which_conv(self.arch["out_channels"][-1], 3),
        )

        # Initialize weights. Optionally skip init for testing.
        if not skip_init:
            self.init_weights()

        # Set up optimizer
        # If this is an EMA copy, no need for an optim, so just return now
        if no_optim or not embedded_optimizer:
            return
        self.lr, self.B1, self.B2, self.adam_eps = G_lr, G_B1, G_B2, adam_eps
        if G_mixed_precision:
            print("Using fp16 adam in G...")
            import utils

            self.optim = utils.Adam16(
                params=self.parameters(),
                lr=self.lr,
                betas=(self.B1, self.B2),
                weight_decay=0,
                eps=self.adam_eps,
            )
        else:
            self.optim = optim.Adam(
                params=self.parameters(),
                lr=self.lr,
                betas=(self.B1, self.B2),
                weight_decay=0,
                eps=self.adam_eps,
            )

        # LR scheduling, left here for forward compatibility
        # self.lr_sched = {'itr' : 0}# if self.progressive else {}
        # self.j = 0

    # Initialize
    def init_weights(self):
        self.param_count = 0
        for module in self.modules():
            if (
                isinstance(module, nn.Conv2d)
                or isinstance(module, nn.Linear)
                or isinstance(module, nn.Embedding)
            ):
                if self.init == "ortho":
                    init.orthogonal_(module.weight)
                elif self.init == "N02":
                    init.normal_(module.weight, 0, 0.02)
                elif self.init in ["glorot", "xavier"]:
                    init.xavier_uniform_(module.weight)
                else:
                    print("Init style not recognized...")
                self.param_count += sum(
                    [p.data.nelement() for p in module.parameters()]
                )
        print("Param count for G's initialized parameters: %d" % self.param_count)

    # Get conditionings

    def get_condition_embeddings(self, cl=None, feat=None):
        c_embed = []
        if cl is not None:
            c_embed.append(self.shared(cl))
        if feat is not None:
            c_embed.append(self.shared_feat(feat))
        if len(c_embed) > 0:
            c_embed = torch.cat(c_embed, dim=-1)
        return c_embed

    # Note on this forward function: the original BigGAN forward took a y vector
    # that had already been passed through G.shared, to make class-wise
    # interpolation easier. Here, the raw class labels and/or instance features
    # are embedded inside forward via get_condition_embeddings instead.
    def forward(self, z, label=None, feats=None):
        y = self.get_condition_embeddings(label, feats)
        # If hierarchical, concatenate zs and ys
        if self.hier:
            zs = torch.split(z, self.z_chunk_size, 1)
            z = zs[0]
            ys = [torch.cat([y, item], 1) for item in zs[1:]]
        else:
            ys = [y] * len(self.blocks)

        # First linear layer
        h = self.linear(z)
        # Reshape
        h = h.view(h.size(0), -1, self.bottom_width, self.bottom_width)

        # Loop over blocks
        for index, blocklist in enumerate(self.blocks):
            # Second inner loop in case block has multiple layers
            for block in blocklist:
                h = block(h, ys[index])

        # Apply batchnorm-relu-conv-tanh at output
        return torch.tanh(self.output_layer(h))
```
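The latent and conditioning bookkeeping in `Generator.__init__` and `forward` can be checked without PyTorch. The following pure-Python sketch re-derives `num_slots`, `z_chunk_size`, the adjusted `dim_z`, the input width of `self.linear`, and the `input_size` handed to `layers.ccbn`, and mimics `torch.split(z, z_chunk_size, 1)` for a single sample. `conditioning_sizes` and `split_latent` are hypothetical names for this illustration, and `n_blocks` stands for `len(self.arch["in_channels"])` (5 for the 128x128 architecture).

```python
def conditioning_sizes(dim_z, n_blocks, hier, class_cond, instance_cond,
                       shared_dim, shared_dim_feat, n_classes=1000):
    """Re-derive the latent/conditioning sizes computed in Generator.__init__."""
    if hier:
        # z is split into one chunk for the first linear layer plus one per block
        num_slots = n_blocks + 1
        z_chunk_size = dim_z // num_slots
        # Drop the remainder so that z splits evenly into num_slots chunks
        dim_z = z_chunk_size * num_slots
    else:
        num_slots, z_chunk_size = 1, 0
    if not class_cond and not instance_cond:
        input_sz_bn = n_classes
    else:
        # Each block's ccbn sees [condition embedding(s), z chunk] concatenated
        input_sz_bn = z_chunk_size
        if class_cond:
            input_sz_bn += shared_dim
        if instance_cond:
            input_sz_bn += shared_dim_feat
    return {
        "dim_z": dim_z,
        "num_slots": num_slots,
        "z_chunk_size": z_chunk_size,
        "linear_in": dim_z // num_slots,  # input width of self.linear
        "input_sz_bn": input_sz_bn,
    }


def split_latent(z, z_chunk_size):
    """Pure-Python stand-in for torch.split(z, z_chunk_size, 1) on a single sample."""
    return [z[i:i + z_chunk_size] for i in range(0, len(z), z_chunk_size)]


sizes = conditioning_sizes(
    dim_z=128, n_blocks=5, hier=True, class_cond=True, instance_cond=False,
    shared_dim=128, shared_dim_feat=2048,
)
print(sizes)
# {'dim_z': 126, 'num_slots': 6, 'z_chunk_size': 21, 'linear_in': 21, 'input_sz_bn': 149}

chunks = split_latent(list(range(sizes["dim_z"])), sizes["z_chunk_size"])
# chunks[0] feeds self.linear; chunks[1:] are paired with the blocks
print(len(chunks), [len(c) for c in chunks])
# 6 [21, 21, 21, 21, 21, 21]
```

With the default `dim_z=128` and hierarchical latents at 128x128, `128 // 6 = 21`, so `dim_z` is reduced to 126. Each of the five remaining chunks is concatenated with the class embedding in `forward`, which gives exactly the `shared_dim + z_chunk_size` width that each conditional batchnorm was built to expect.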

```python
# Discriminator architecture, same paradigm as G's above
def D_arch(ch=64, attention="64", ksize="333333", dilation="111111"):
    arch = {}
    arch[256] = {
        "in_channels": [3] + [ch * item for item in [1, 2, 4, 8, 8, 16]],
        "out_channels": [item * ch for item in [1, 2, 4, 8, 8, 16, 16]],
        "downsample": [True] * 6 + [False],
        "resolution": [128, 64, 32, 16, 8, 4, 4],
        "attention": {
            2 ** i: 2 ** i in [int(item) for item in attention.split("_")]
            for i in range(2, 8)
        },
    }
    arch[128] = {
        "in_channels": [3] + [ch * item for item in [1, 2, 4, 8, 16]],
        "out_channels": [item * ch for item in [1, 2, 4, 8, 16, 16]],
        "downsample": [True] * 5 + [False],
        "resolution": [64, 32, 16, 8, 4, 4],
        "attention": {
            2 ** i: 2 ** i in [int(item) for item in attention.split("_")]
            for i in range(2, 8)
        },
    }
    arch[64] = {
        "in_channels": [3] + [ch * item for item in [1, 2, 4, 8]],
        "out_channels": [item * ch for item in [1, 2, 4, 8, 16]],
        "downsample": [True] * 4 + [False],
        "resolution": [32, 16, 8, 4, 4],
        "attention": {
            2 ** i: 2 ** i in [int(item) for item in attention.split("_")]
            for i in range(2, 7)
        },
    }
    arch[32] = {
        "in_channels": [3] + [item * ch for item in [4, 4, 4]],
        "out_channels": [item * ch for item in [4, 4, 4, 4]],
        "downsample": [True, True, False, False],
        "resolution": [16, 16, 16, 16],
        "attention": {
            2 ** i: 2 ** i in [int(item) for item in attention.split("_")]
            for i in range(2, 6)
        },
    }
    return arch
```
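A quick way to read `D_arch` is to trace feature-map sizes through the blocks. The sketch below assumes, following `Discriminator.__init__`, that a block built with `downsample=nn.AvgPool2d(2)` halves height and width once and that a block without it keeps the size. It also checks that each block's `in_channels` equals the previous block's `out_channels`. `d_arch_128`, `trace_discriminator`, and `channels_chain` are hypothetical helpers written only for this illustration.

```python
def d_arch_128(ch=64):
    """Standalone restatement of the arch[128] entry of D_arch above (attention omitted)."""
    return {
        "in_channels": [3] + [ch * item for item in [1, 2, 4, 8, 16]],
        "out_channels": [item * ch for item in [1, 2, 4, 8, 16, 16]],
        "downsample": [True] * 5 + [False],
        "resolution": [64, 32, 16, 8, 4, 4],
    }


def trace_discriminator(input_res, downsample):
    """Spatial size after each DBlock, assuming a downsampling block halves H and W."""
    sizes = []
    size = input_res
    for flag in downsample:
        if flag:
            size //= 2  # effect of nn.AvgPool2d(2)
        sizes.append(size)
    return sizes


def channels_chain(arch):
    """True if every block's in_channels equals the previous block's out_channels."""
    return all(
        arch["in_channels"][i] == arch["out_channels"][i - 1]
        for i in range(1, len(arch["in_channels"]))
    )


arch = d_arch_128(ch=64)
print(trace_discriminator(128, arch["downsample"]))  # [64, 32, 16, 8, 4, 4]
print(channels_chain(arch))  # True
```

Under that assumption the traced sizes match the `resolution` lists of the 64, 128 and 256 entries. The 32x32 entry differs: tracing `[True, True, False, False]` from 32 gives `[16, 8, 8, 8]`, while its `resolution` list reads `[16, 16, 16, 16]`, so in the code shown here that list acts only as the lookup key for the attention dict rather than as the true feature-map size.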

```python
class Discriminator(nn.Module):
    def __init__(
        self,
        D_ch=64,
        D_wide=True,
        resolution=128,
        D_kernel_size=3,
        D_attn="64",
        n_classes=1000,
        num_D_SVs=1,
        num_D_SV_itrs=1,
        D_activation=nn.ReLU(inplace=False),
        D_lr=2e-4,
        D_B1=0.0,
        D_B2=0.999,
        adam_eps=1e-8,
        SN_eps=1e-12,
        output_dim=1,
        D_mixed_precision=False,
        D_fp16=False,
        D_init="ortho",
        skip_init=False,
        D_param="SN",
        class_cond=True,
        embedded_optimizer=True,
        instance_cond=False,
        instance_sz=2048,
        **kwargs
    ):
        super(Discriminator, self).__init__()
        # Width multiplier
        self.ch = D_ch
        # Use Wide D as in BigGAN and SA-GAN or skinny D as in SN-GAN?
        self.D_wide = D_wide
        # Resolution
        self.resolution = resolution
        # Kernel size
        self.kernel_size = D_kernel_size
        # Attention?
        self.attention = D_attn
        # Number of classes
        self.n_classes = n_classes
        # Activation
        self.activation = D_activation
        # Initialization style
        self.init = D_init
        # Parameterization style
        self.D_param = D_param
        # Epsilon for Spectral Norm?
        self.SN_eps = SN_eps
        # Fp16?
        self.fp16 = D_fp16
        # Architecture
        self.arch = D_arch(self.ch, self.attention)[resolution]

        # Which convs, batchnorms, and linear layers to use
        # No option to turn off SN in D right now
        if self.D_param == "SN":
            self.which_conv = functools.partial(
                layers.SNConv2d,
                kernel_size=3,
                padding=1,
                num_svs=num_D_SVs,
                num_itrs=num_D_SV_itrs,
                eps=self.SN_eps,
            )
            self.which_linear = functools.partial(
                layers.SNLinear,
                num_svs=num_D_SVs,
                num_itrs=num_D_SV_itrs,
                eps=self.SN_eps,
            )
            self.which_embedding = functools.partial(
                layers.SNEmbedding,
                num_svs=num_D_SVs,
                num_itrs=num_D_SV_itrs,
                eps=self.SN_eps,
            )
        # Prepare model
        # self.blocks is a doubly-nested list of modules, the outer loop intended
        # to be over blocks at a given resolution (resblocks and/or self-attention)
        self.blocks = []
        for index in range(len(self.arch["out_channels"])):
            self.blocks += [
                [
                    layers.DBlock(
                        in_channels=self.arch["in_channels"][index],
                        out_channels=self.arch["out_channels"][index],
                        which_conv=self.which_conv,
                        wide=self.D_wide,
                        activation=self.activation,
                        preactivation=(index > 0),
                        downsample=(
                            nn.AvgPool2d(2) if self.arch["downsample"][index] else None
                        ),
                    )
                ]
            ]
            # If attention on this block, attach it to the end
            if self.arch["attention"][self.arch["resolution"][index]]:
                print(
                    "Adding attention layer in D at resolution %d"
                    % self.arch["resolution"][index]
                )
                self.blocks[-1] += [
                    layers.Attention(self.arch["out_channels"][index], self.which_conv)
                ]
        # Turn self.blocks into a ModuleList so that it's all properly registered.
        self.blocks = nn.ModuleList([nn.ModuleList(block) for block in self.blocks])
        # Linear output layer. The output dimension is typically 1, but may be
        # larger if we're e.g. turning this into a VAE with an inference output
        self.linear = self.which_linear(self.arch["out_channels"][-1], output_dim)
        # Embedding for projection discrimination
        if class_cond and instance_cond:
            self.linear_feat = self.which_linear(
                instance_sz, self.arch["out_channels"][-1] // 2
            )
            self.embed = self.which_embedding(
                self.n_classes, self.arch["out_channels"][-1] // 2
            )
        elif class_cond:
            # Embedding for projection discrimination
            self.embed = self.which_embedding(
                self.n_classes, self.arch["out_channels"][-1]
            )
        elif instance_cond:
            self.linear_feat = self.which_linear(
                instance_sz, self.arch["out_channels"][-1]
            )

        # Initialize weights
        if not skip_init:
            self.init_weights()

        # Set up optimizer
        if embedded_optimizer:
            self.lr, self.B1, self.B2, self.adam_eps = D_lr, D_B1, D_B2, adam_eps
            if D_mixed_precision:
                print("Using fp16 adam in D...")
                import utils

                self.optim = utils.Adam16(
                    params=self.parameters(),
                    lr=self.lr,
                    betas=(self.B1, self.B2),
                    weight_decay=0,
                    eps=self.adam_eps,
                )
            else:
                self.optim = optim.Adam(
                    params=self.parameters(),
                    lr=self.lr,
                    betas=(self.B1, self.B2),
                    weight_decay=0,
```

|

| 589 |

+

eps=self.adam_eps,

|

| 590 |

+

)

|

| 591 |

+

# LR scheduling, left here for forward compatibility

|

| 592 |

+

# self.lr_sched = {'itr' : 0}# if self.progressive else {}

|

| 593 |

+

# self.j = 0

|

| 594 |

+

|

| 595 |

+

# Initialize

|

| 596 |

+

def init_weights(self):

|

| 597 |

+

self.param_count = 0

|

| 598 |

+

for module in self.modules():

|

| 599 |

+

if (

|

| 600 |

+

isinstance(module, nn.Conv2d)

|

| 601 |

+

or isinstance(module, nn.Linear)

|

| 602 |

+

or isinstance(module, nn.Embedding)

|

| 603 |

+

):

|

| 604 |

+

if self.init == "ortho":

|

| 605 |

+

init.orthogonal_(module.weight)

|

| 606 |

+

elif self.init == "N02":

|

| 607 |

+

init.normal_(module.weight, 0, 0.02)

|

| 608 |

+

elif self.init in ["glorot", "xavier"]:

|

| 609 |

+

init.xavier_uniform_(module.weight)

|

| 610 |

+

else:

|

| 611 |

+

print("Init style not recognized...")

|

| 612 |

+

self.param_count += sum(

|

| 613 |

+

[p.data.nelement() for p in module.parameters()]

|

| 614 |

+

)

        print("Param count for D's initialized parameters: %d" % self.param_count)


    def forward(self, x, y=None, feat=None):
        # Stick x into h for cleaner for loops without flow control
        h = x
        # Loop over blocks
        for index, blocklist in enumerate(self.blocks):
            for block in blocklist:
                h = block(h)
        # Apply global sum pooling as in SN-GAN
        h = torch.sum(self.activation(h), [2, 3])
        # Get initial class-unconditional output
        out = self.linear(h)
        # Condition on both class and instance features
        if y is not None and feat is not None:
            out = out + torch.sum(
                torch.cat([self.embed(y), self.linear_feat(feat)], dim=-1) * h,
                1,
                keepdim=True,
            )
        # Condition on class only
        elif y is not None:
            # Get projection of final featureset onto class vectors and add to evidence
            out = out + torch.sum(self.embed(y) * h, 1, keepdim=True)
        # Condition on instance features only
        elif feat is not None:
            out = out + torch.sum(self.linear_feat(feat) * h, 1, keepdim=True)
        return out


# Parallelized G_D to minimize cross-gpu communication
# Without this, Generator outputs would get all-gathered and then rebroadcast.
class G_D(nn.Module):
    def __init__(self, G, D, optimizer_G=None, optimizer_D=None):
        super(G_D, self).__init__()
        self.G = G
        self.D = D
        self.optimizer_G = optimizer_G
        self.optimizer_D = optimizer_D

    def forward(
        self,
        z,
        gy,
        feats_g=None,
        x=None,
        dy=None,
        feats=None,
        train_G=False,
        return_G_z=False,
        split_D=False,
        policy=False,
        DA=False,
    ):
        # If training G, enable grad tape
        with torch.set_grad_enabled(train_G):
            # Get Generator output given noise
            G_z = self.G(z, gy, feats_g)
            # Cast as necessary
            # if self.G.fp16 and not self.D.fp16:
            # G_z = G_z.float()
            # if self.D.fp16 and not self.G.fp16:
            # G_z = G_z.half()
        # Split_D means to run D once with real data and once with fake,
        # rather than concatenating along the batch dimension.
        if split_D:
            D_fake = self.D(G_z, gy, feats_g)
            if x is not None:
                D_real = self.D(x, dy, feats)
                return D_fake, D_real
            else:
                if return_G_z:
                    return D_fake, G_z
                else:
                    return D_fake
        # If real data is provided, concatenate it with the Generator's output
        # along the batch dimension for improved efficiency.
        else:
            D_input = torch.cat([G_z, x], 0) if x is not None else G_z
            D_class = torch.cat([gy, dy], 0) if dy is not None else gy
            if feats_g is not None:
                D_feats = (
                    torch.cat([feats_g, feats], 0) if feats is not None else feats_g
                )
            else:
                D_feats = None
            if DA:
                D_input = DiffAugment(D_input, policy=policy)
            # Get Discriminator output
            D_out = self.D(D_input, D_class, D_feats)
            if x is not None:
                return torch.split(D_out, [G_z.shape[0], x.shape[0]])  # D_fake, D_real
            else:
                if return_G_z:
                    return D_out, G_z
                else:
                    return D_out
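To make the projection head of `Discriminator.forward` concrete, here is a minimal pure-Python sketch for a single sample, assuming ReLU as the activation and toy, untrained weights. The helper names (`relu_sum_pool`, `projection_logit`, `matvec`) are hypothetical stand-ins for `torch.sum(self.activation(h), [2, 3])`, `self.linear`, `self.embed` and `self.linear_feat`. When both class and instance conditioning are enabled, each branch is built with `out_channels[-1] // 2` outputs, so their concatenation has exactly one entry per channel of `h`.

```python
# Pure-Python sketch of the projection-discriminator head in D.forward.
# Assumptions: one sample, ReLU as the activation, toy (untrained) weights.


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def matvec(weight, vec):
    """Row-major matrix-vector product: returns weight @ vec."""
    return [dot(row, vec) for row in weight]


def relu_sum_pool(fmap):
    """ReLU, then sum over height and width for each channel.

    Mirrors torch.sum(self.activation(h), [2, 3]) for a single sample
    whose feature map is nested as channels x height x width.
    """
    return [sum(max(0.0, v) for row in channel for v in row) for channel in fmap]


def projection_logit(h, w_out, b_out, class_embed=None, feat_proj=None):
    """Unconditional logit plus the optional projection term.

    class_embed stands in for self.embed(y), feat_proj for self.linear_feat(feat).
    With both present they are concatenated, like torch.cat(..., dim=-1).
    """
    out = dot(w_out, h) + b_out  # self.linear(h) with output_dim = 1
    if class_embed is not None and feat_proj is not None:
        cond = list(class_embed) + list(feat_proj)  # two halves of length C // 2
    elif class_embed is not None:
        cond = list(class_embed)
    elif feat_proj is not None:
        cond = list(feat_proj)
    else:
        return out  # unconditional discriminator
    if len(cond) != len(h):
        raise ValueError("conditioning vector must have one entry per channel")
    return out + dot(cond, h)


# Usage: 4 channels, 2x2 spatial map.
fmap = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.5, 0.5], [0.5, 0.5]],
    [[-1.0, 0.0], [0.0, 1.0]],  # the negative entry is zeroed by the ReLU
    [[2.0, 2.0], [2.0, 2.0]],
]
h = relu_sum_pool(fmap)  # [10.0, 2.0, 1.0, 8.0]
w_out, b_out = [0.5, 0.0, 0.0, 0.0], 0.0

# Class and instance conditioning: each half has C // 2 = 2 entries.
class_half = [1.0, 0.0]  # toy embedding row for the sample's class
feat = [1.0, 2.0, 3.0]  # toy instance feature, instance_sz = 3
feat_half = matvec([[0.0, 0.0, 0.0], [0.0, 0.0, 1.0]], feat)  # [0.0, 3.0]
both = projection_logit(h, w_out, b_out, class_half, feat_half)  # 5 + 10 + 24 = 39.0

# Class conditioning only: the embedding spans all C channels.
class_only = projection_logit(h, w_out, b_out, class_embed=[0.0, 1.0, 0.0, 0.0])  # 7.0
```

The conditioning term is an inner product with the pooled features, so it adds evidence without changing the shape of the unconditional output.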

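`G_D.forward` evaluates D in one of two ways. With `split_D=True` it calls D once on fake and once on real data. Otherwise it concatenates both along the batch dimension, makes a single D call, and splits the result with `torch.split(D_out, [G_z.shape[0], x.shape[0]])`. The sketch below mimics both paths with plain Python lists, using a hypothetical toy discriminator `toy_D` that scores each sample independently. Under that assumption the two paths return the same scores. Note that in the source, `DiffAugment` is applied only on the concatenated path, so with `DA=True` the two paths are not interchangeable.

```python
# Sketch of the two D-evaluation paths in G_D.forward, using lists as batches.
# toy_D is a hypothetical stand-in for self.D(x, y): score = sum(x) + y.


def toy_D(batch, labels):
    """Score every sample independently (no cross-sample interaction)."""
    return [sum(sample) + label for sample, label in zip(batch, labels)]


def d_split(D, fake, gy, real, dy):
    """split_D=True: one D call for fake data, one for real data."""
    return D(fake, gy), D(real, dy)


def d_concat(D, fake, gy, real, dy):
    """split_D=False: concatenate along the batch axis, run D once, split back."""
    d_input = fake + real  # like torch.cat([G_z, x], 0)
    d_class = gy + dy  # like torch.cat([gy, dy], 0)
    d_out = D(d_input, d_class)
    n_fake = len(fake)
    # like torch.split(D_out, [G_z.shape[0], x.shape[0]])
    return d_out[:n_fake], d_out[n_fake:]


fake, gy = [[1.0, 2.0], [0.0, 0.0]], [0, 1]
real, dy = [[3.0, 3.0]], [2]
D_fake, D_real = d_concat(toy_D, fake, gy, real, dy)  # [3.0, 1.0], [8.0]
```

The single concatenated call is the path the source comment describes as the more efficient option.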

BigGAN_PyTorch/BigGANdeep.py

ADDED


@@ -0,0 +1,734 @@
