import from zenodo

- README.md +50 -0

- exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/feats_stats.npz +0 -0

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/config.yaml +255 -0

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/backward_time.png +0 -0

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/bce_loss.png +0 -0

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/decoder_alpha.png +0 -0

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/enc_dec_attn_loss.png +0 -0

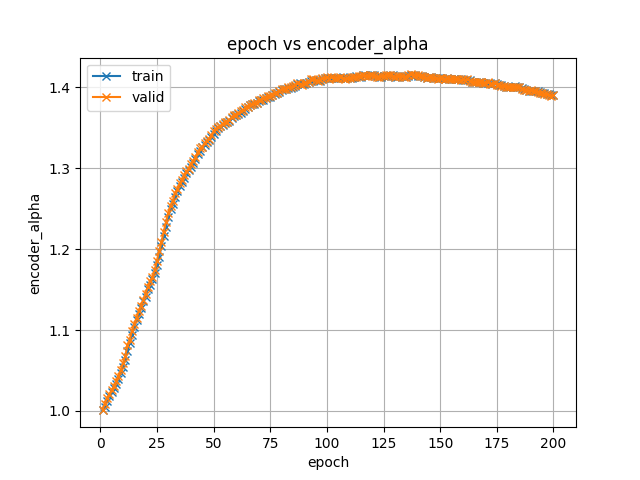

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/encoder_alpha.png +0 -0

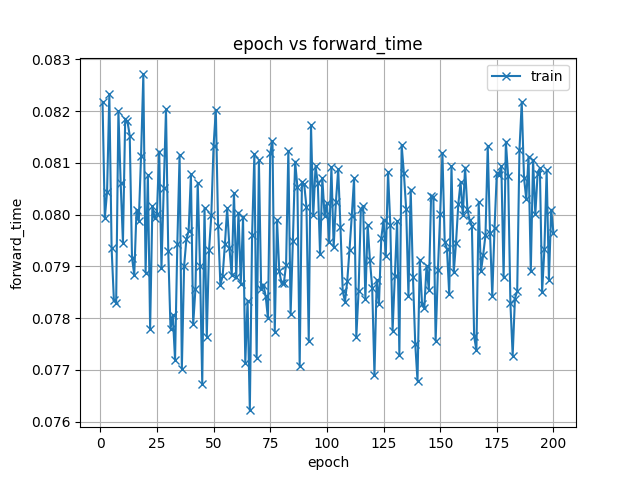

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/forward_time.png +0 -0

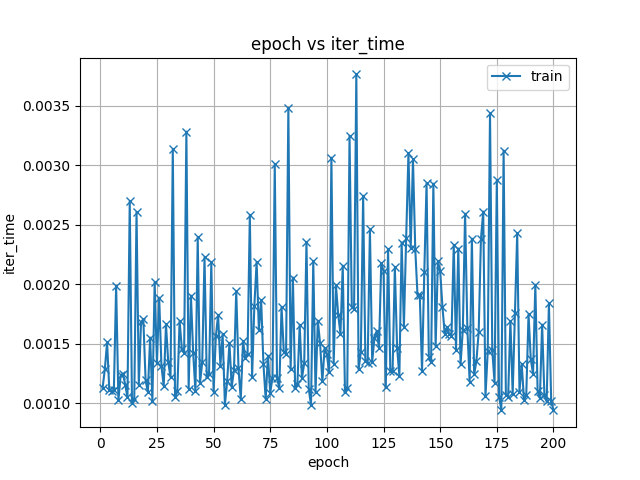

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/iter_time.png +0 -0

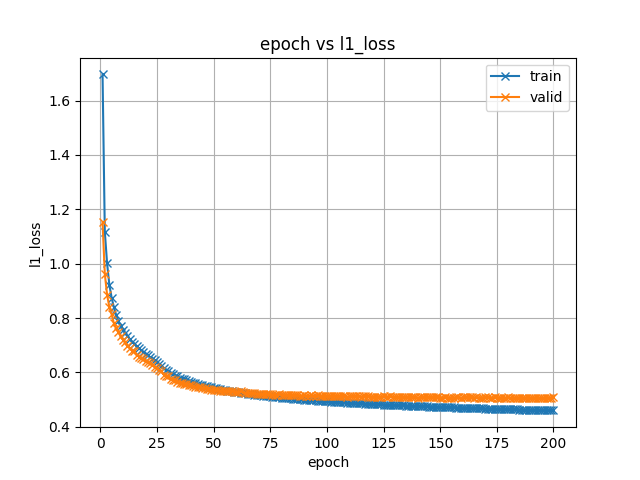

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/l1_loss.png +0 -0

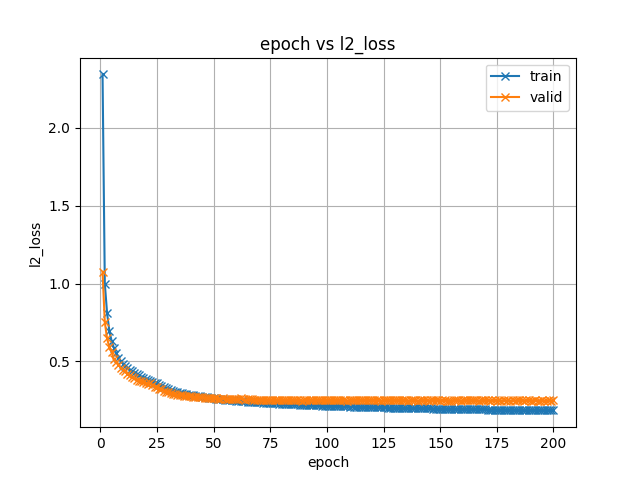

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/l2_loss.png +0 -0

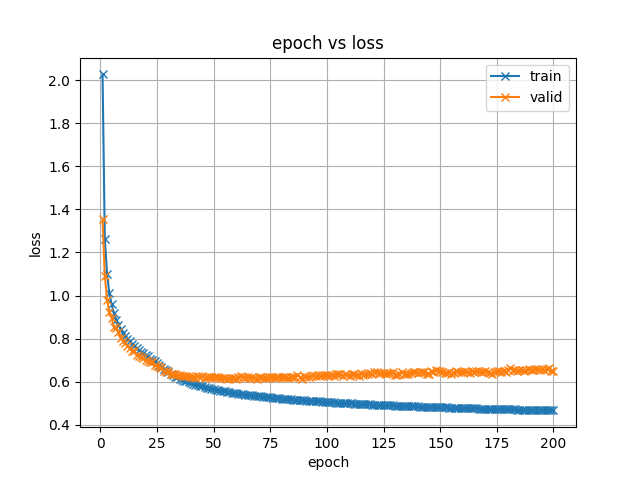

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/loss.png +0 -0

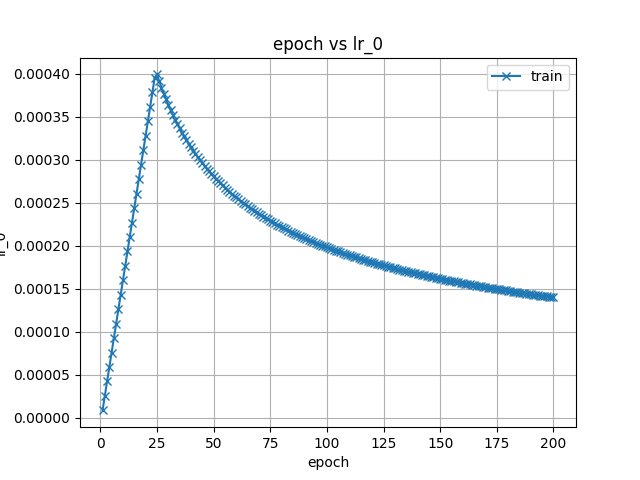

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/lr_0.png +0 -0

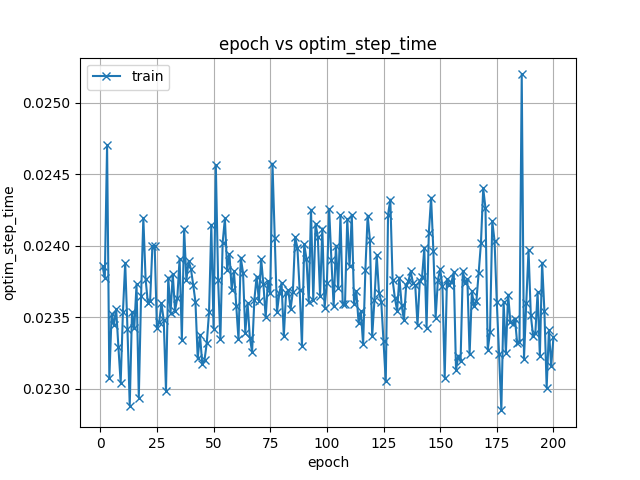

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/optim_step_time.png +0 -0

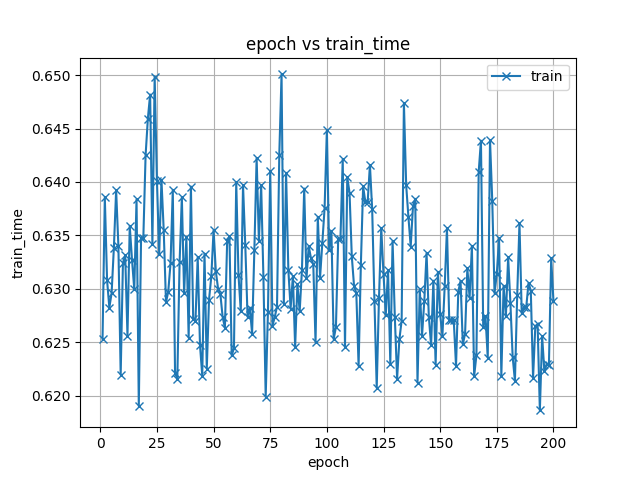

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/train_time.png +0 -0

- exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/train.loss.ave_5best.pth +3 -0

- meta.yaml +8 -0

README.md

ADDED

@@ -0,0 +1,50 @@

+---
+tags:
+- espnet
+- audio
+- text-to-speech
+language: en
+datasets:
+- vctk
+license: cc-by-4.0
+---
+## Example ESPnet2 TTS model
+### `kan-bayashi/vctk_gst_transformer`
+♻️ Imported from https://zenodo.org/record/4037456/
+
+This model was trained by kan-bayashi using vctk/tts1 recipe in [espnet](https://github.com/espnet/espnet/).
+### Demo: How to use in ESPnet2
+```python
+# coming soon
+```
+### Citing ESPnet
+```BibTex
+@inproceedings{watanabe2018espnet,
+  author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson {Enrique Yalta Soplin} and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},
+  title={{ESPnet}: End-to-End Speech Processing Toolkit},
+  year={2018},
+  booktitle={Proceedings of Interspeech},
+  pages={2207--2211},
+  doi={10.21437/Interspeech.2018-1456},
+  url={http://dx.doi.org/10.21437/Interspeech.2018-1456}
+}
+@inproceedings{hayashi2020espnet,
+  title={{Espnet-TTS}: Unified, reproducible, and integratable open source end-to-end text-to-speech toolkit},
+  author={Hayashi, Tomoki and Yamamoto, Ryuichi and Inoue, Katsuki and Yoshimura, Takenori and Watanabe, Shinji and Toda, Tomoki and Takeda, Kazuya and Zhang, Yu and Tan, Xu},
+  booktitle={Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
+  pages={7654--7658},
+  year={2020},
+  organization={IEEE}
+}
+```
+or arXiv:
+```bibtex
+@misc{watanabe2018espnet,
+  title={ESPnet: End-to-End Speech Processing Toolkit},
+  author={Shinji Watanabe and Takaaki Hori and Shigeki Karita and Tomoki Hayashi and Jiro Nishitoba and Yuya Unno and Nelson Enrique Yalta Soplin and Jahn Heymann and Matthew Wiesner and Nanxin Chen and Adithya Renduchintala and Tsubasa Ochiai},
+  year={2018},
+  eprint={1804.00015},
+  archivePrefix={arXiv},
+  primaryClass={cs.CL}
+}
+```

exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/feats_stats.npz

ADDED

Binary file (1.4 kB)

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/config.yaml

ADDED

@@ -0,0 +1,255 @@

+config: conf/tuning/train_gst_transformer.yaml
+print_config: false
+log_level: INFO
+dry_run: false
+iterator_type: sequence
+output_dir: exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space
+ngpu: 1
+seed: 0
+num_workers: 1
+num_att_plot: 3
+dist_backend: nccl
+dist_init_method: env://
+dist_world_size: 4
+dist_rank: 0
+local_rank: 0
+dist_master_addr: localhost
+dist_master_port: 37313
+dist_launcher: null
+multiprocessing_distributed: true
+cudnn_enabled: true
+cudnn_benchmark: false
+cudnn_deterministic: true
+collect_stats: false
+write_collected_feats: false
+max_epoch: 200
+patience: null
+val_scheduler_criterion:
+- valid
+- loss
+early_stopping_criterion:
+- valid
+- loss
+- min
+best_model_criterion:
+- - valid
+  - loss
+  - min
+- - train
+  - loss
+  - min
+keep_nbest_models: 5
+grad_clip: 1.0
+grad_clip_type: 2.0
+grad_noise: false
+accum_grad: 2
+no_forward_run: false
+resume: true
+train_dtype: float32
+use_amp: false
+log_interval: null
+pretrain_path: []
+pretrain_key: []
+num_iters_per_epoch: 1000
+batch_size: 20
+valid_batch_size: null
+batch_bins: 9000000
+valid_batch_bins: null
+train_shape_file:
+- exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/text_shape.phn
+- exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/speech_shape
+valid_shape_file:
+- exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/valid/text_shape.phn
+- exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/valid/speech_shape
+batch_type: numel
+valid_batch_type: null
+fold_length:
+- 150
+- 240000
+sort_in_batch: descending
+sort_batch: descending
+multiple_iterator: false
+chunk_length: 500
+chunk_shift_ratio: 0.5
+num_cache_chunks: 1024
+train_data_path_and_name_and_type:
+- - dump/raw/tr_no_dev/text
+  - text
+  - text
+- - dump/raw/tr_no_dev/wav.scp
+  - speech
+  - sound
+valid_data_path_and_name_and_type:
+- - dump/raw/dev/text
+  - text
+  - text
+- - dump/raw/dev/wav.scp
+  - speech
+  - sound
+allow_variable_data_keys: false
+max_cache_size: 0.0
+valid_max_cache_size: null
+optim: adam
+optim_conf:
+  lr: 1.0
+scheduler: noamlr
+scheduler_conf:
+  model_size: 512
+  warmup_steps: 12000
+token_list:
+- <blank>
+- <unk>
+- AH0
+- T
+- N
+- S
+- R
+- IH1
+- D
+- L
+- .
+- Z
+- DH
+- K
+- W
+- M
+- AE1
+- EH1
+- AA1
+- IH0
+- IY1
+- AH1
+- B
+- P
+- V
+- ER0
+- F
+- HH
+- AY1
+- EY1
+- UW1
+- IY0
+- AO1
+- OW1
+- G
+- ','
+- NG
+- SH
+- Y
+- JH
+- AW1
+- UH1
+- TH
+- ER1
+- CH
+- '?'
+- OW0
+- OW2
+- EH2
+- EY2
+- UW0
+- IH2
+- OY1
+- AY2
+- ZH
+- AW2
+- EH0
+- IY2
+- AA2
+- AE0
+- AH2
+- AE2
+- AO0
+- AO2
+- AY0
+- UW2
+- UH2
+- AA0
+- AW0
+- EY0
+- '!'
+- UH0
+- ER2
+- OY2
+- ''''
+- OY0
+- <sos/eos>
+odim: null
+model_conf: {}
+use_preprocessor: true
+token_type: phn
+bpemodel: null
+non_linguistic_symbols: null
+cleaner: tacotron
+g2p: g2p_en_no_space
+feats_extract: fbank
+feats_extract_conf:
+  fs: 24000
+  fmin: 80
+  fmax: 7600
+  n_mels: 80
+  hop_length: 300
+  n_fft: 2048
+  win_length: 1200
+normalize: global_mvn
+normalize_conf:
+  stats_file: exp/tts_stats_raw_phn_tacotron_g2p_en_no_space/train/feats_stats.npz
+tts: transformer
+tts_conf:
+  embed_dim: 0
+  eprenet_conv_layers: 0
+  eprenet_conv_filts: 0
+  eprenet_conv_chans: 0
+  dprenet_layers: 2
+  dprenet_units: 256
+  adim: 512
+  aheads: 8
+  elayers: 6
+  eunits: 1024
+  dlayers: 6
+  dunits: 1024
+  positionwise_layer_type: conv1d
+  positionwise_conv_kernel_size: 1
+  postnet_layers: 5
+  postnet_filts: 5
+  postnet_chans: 256
+  use_gst: true
+  gst_heads: 8
+  gst_tokens: 256
+  use_masking: true
+  bce_pos_weight: 5.0
+  use_scaled_pos_enc: true
+  encoder_normalize_before: false
+  decoder_normalize_before: false
+  reduction_factor: 1
+  init_type: xavier_uniform
+  init_enc_alpha: 1.0
+  init_dec_alpha: 1.0
+  eprenet_dropout_rate: 0.0
+  dprenet_dropout_rate: 0.5
+  postnet_dropout_rate: 0.5
+  transformer_enc_dropout_rate: 0.1
+  transformer_enc_positional_dropout_rate: 0.1
+  transformer_enc_attn_dropout_rate: 0.1
+  transformer_dec_dropout_rate: 0.1
+  transformer_dec_positional_dropout_rate: 0.1
+  transformer_dec_attn_dropout_rate: 0.1
+  transformer_enc_dec_attn_dropout_rate: 0.1
+  use_guided_attn_loss: true
+  num_heads_applied_guided_attn: 2
+  num_layers_applied_guided_attn: 2
+  modules_applied_guided_attn:
+  - encoder-decoder
+  guided_attn_loss_lambda: 10.0
+pitch_extract: null
+pitch_extract_conf: {}
+pitch_normalize: null
+pitch_normalize_conf: {}
+energy_extract: null
+energy_extract_conf: {}
+energy_normalize: null
+energy_normalize_conf: {}
+required:
+- output_dir
+- token_list
+distributed: true
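The `feats_extract_conf` values in the training config above fix the time and frequency resolution of the mel-spectrogram features. As a rough illustration only (plain arithmetic, not ESPnet code; the helper name `frame_timing` is made up for this sketch), the sample-based sizes convert to physical units like this:

```python
def frame_timing(fs: int, hop_length: int, win_length: int, n_fft: int) -> dict:
    """Convert STFT sizes given in samples into time and frequency units."""
    return {
        "hop_ms": 1000.0 * hop_length / fs,    # shift between consecutive frames
        "win_ms": 1000.0 * win_length / fs,    # duration of each analysis window
        "frames_per_second": fs / hop_length,  # feature frames per second of audio
        "freq_bins": n_fft // 2 + 1,           # linear-frequency bins before the mel filterbank
    }


# Values copied from feats_extract_conf in config.yaml.
timing = frame_timing(fs=24000, hop_length=300, win_length=1200, n_fft=2048)
print(timing)
```

Under these settings each of the 80 mel bins is sampled once per hop, so the decoder with `reduction_factor: 1` emits one feature frame per hop.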

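The config pairs `optim_conf.lr: 1.0` with `scheduler: noamlr`, so the value 1.0 acts as a scale factor rather than the literal learning rate. The sketch below assumes `noamlr` follows the standard Noam formula from the Transformer paper (linear warmup, then inverse square-root decay); the function name `noam_lr` is illustrative, and ESPnet's own scheduler class remains the authority on the exact behaviour.

```python
def noam_lr(step: int, base_lr: float = 1.0, model_size: int = 512,
            warmup_steps: int = 12000) -> float:
    """Learning rate at optimizer step `step` (1-based) under the Noam schedule.

    Defaults mirror optim_conf.lr and scheduler_conf in config.yaml.
    """
    if step < 1:
        raise ValueError("step must be >= 1")
    warmup_branch = step * warmup_steps ** -1.5  # linear ramp during warmup
    decay_branch = step ** -0.5                  # inverse square-root decay afterwards
    return base_lr * model_size ** -0.5 * min(decay_branch, warmup_branch)


peak = noam_lr(12000)  # the two branches meet at step == warmup_steps
for step in (1, 1000, 12000, 100000):
    print(step, f"{noam_lr(step):.6f}")
```

With these settings the rate peaks at roughly 4e-4 when the step count reaches `warmup_steps` and decays from there.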

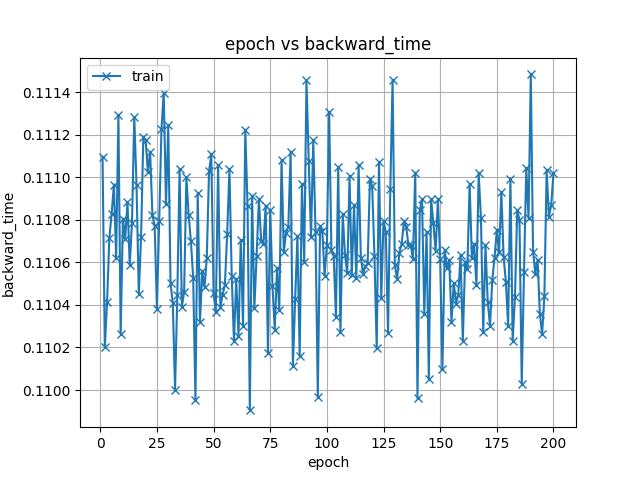

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/backward_time.png

ADDED

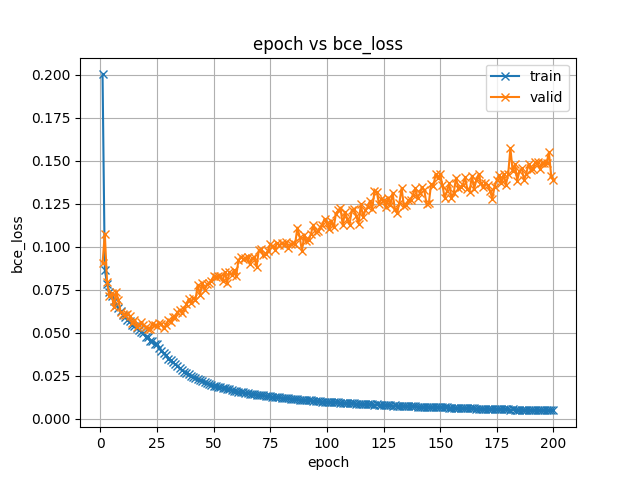

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/bce_loss.png

ADDED

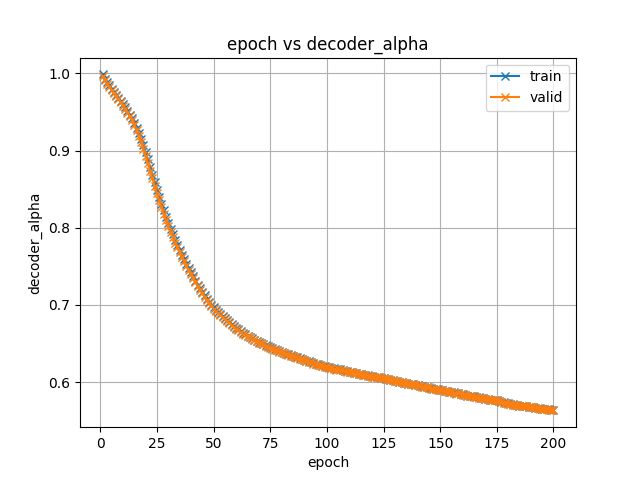

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/decoder_alpha.png

ADDED

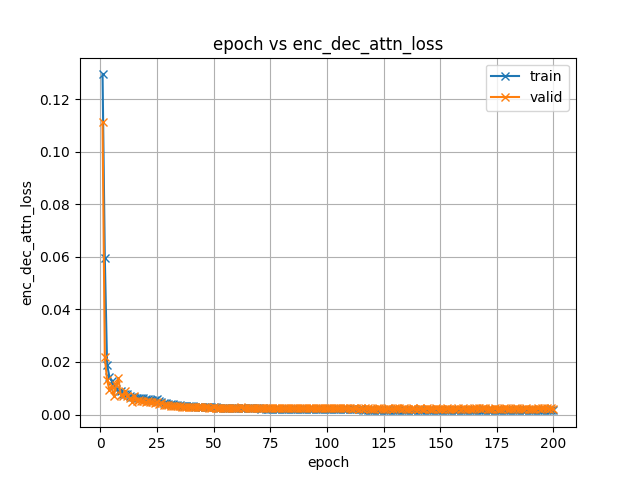

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/enc_dec_attn_loss.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/encoder_alpha.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/forward_time.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/iter_time.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/l1_loss.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/l2_loss.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/loss.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/lr_0.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/optim_step_time.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/images/train_time.png

ADDED

exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/train.loss.ave_5best.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4a81017ab6d9ed149046a03ed392a29d04f31ab48362300fadf40d444bd649d0
+size 135933083
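The three lines added for `train.loss.ave_5best.pth` are a Git LFS pointer, not the checkpoint itself: the repository stores only the spec version, the content hash, and the byte size. A minimal sketch of reading such a pointer follows; the helper name `parse_lfs_pointer` is hypothetical and only the three keys shown in this diff are handled.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse the 'key value' lines of a Git LFS pointer file into a dict."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid field has the form "<hash algorithm>:<hex digest>".
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size_bytes": int(fields["size"]),
    }


# Pointer contents copied from the diff above.
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:4a81017ab6d9ed149046a03ed392a29d04f31ab48362300fadf40d444bd649d0
size 135933083
"""

info = parse_lfs_pointer(POINTER)
print(info["hash_algo"], f"{info['size_bytes'] / 2**20:.1f} MiB")
```

After downloading the real file, its SHA-256 digest and size can be compared against `digest` and `size_bytes` to confirm the checkpoint arrived intact.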

meta.yaml

ADDED

@@ -0,0 +1,8 @@
+espnet: 0.8.0
+files:
+  model_file: exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/train.loss.ave_5best.pth
+python: "3.7.3 (default, Mar 27 2019, 22:11:17) \n[GCC 7.3.0]"
+timestamp: 1600477168.465888
+torch: 1.5.1
+yaml_files:
+  train_config: exp/tts_train_gst_transformer_raw_phn_tacotron_g2p_en_no_space/config.yaml
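The `timestamp` field in `meta.yaml` looks like Unix epoch seconds; that reading is an assumption, since the file itself does not document the unit. Under that assumption, a short sketch using only the standard library turns it into a readable UTC date:

```python
from datetime import datetime, timezone

# Value copied from meta.yaml; interpreted as seconds since 1970-01-01 UTC (assumption).
packed_at = datetime.fromtimestamp(1600477168.465888, tz=timezone.utc)
print(packed_at.isoformat())
```

The resulting date falls in September 2020, which fits the `espnet: 0.8.0` and `torch: 1.5.1` versions recorded alongside it.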