Commit

•

3ca532e

1

Parent(s):

df8d078

Upload 4 files

Browse files- Model_Evaluation_UdacityGenAIAWS.ipynb +307 -0

- Model_FineTuning.ipynb +875 -0

- Screenshot 2024-05-22 164003.png +0 -0

- Screenshot 2024-05-22 171622.png +0 -0

Model_Evaluation_UdacityGenAIAWS.ipynb

ADDED

|

@@ -0,0 +1,307 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

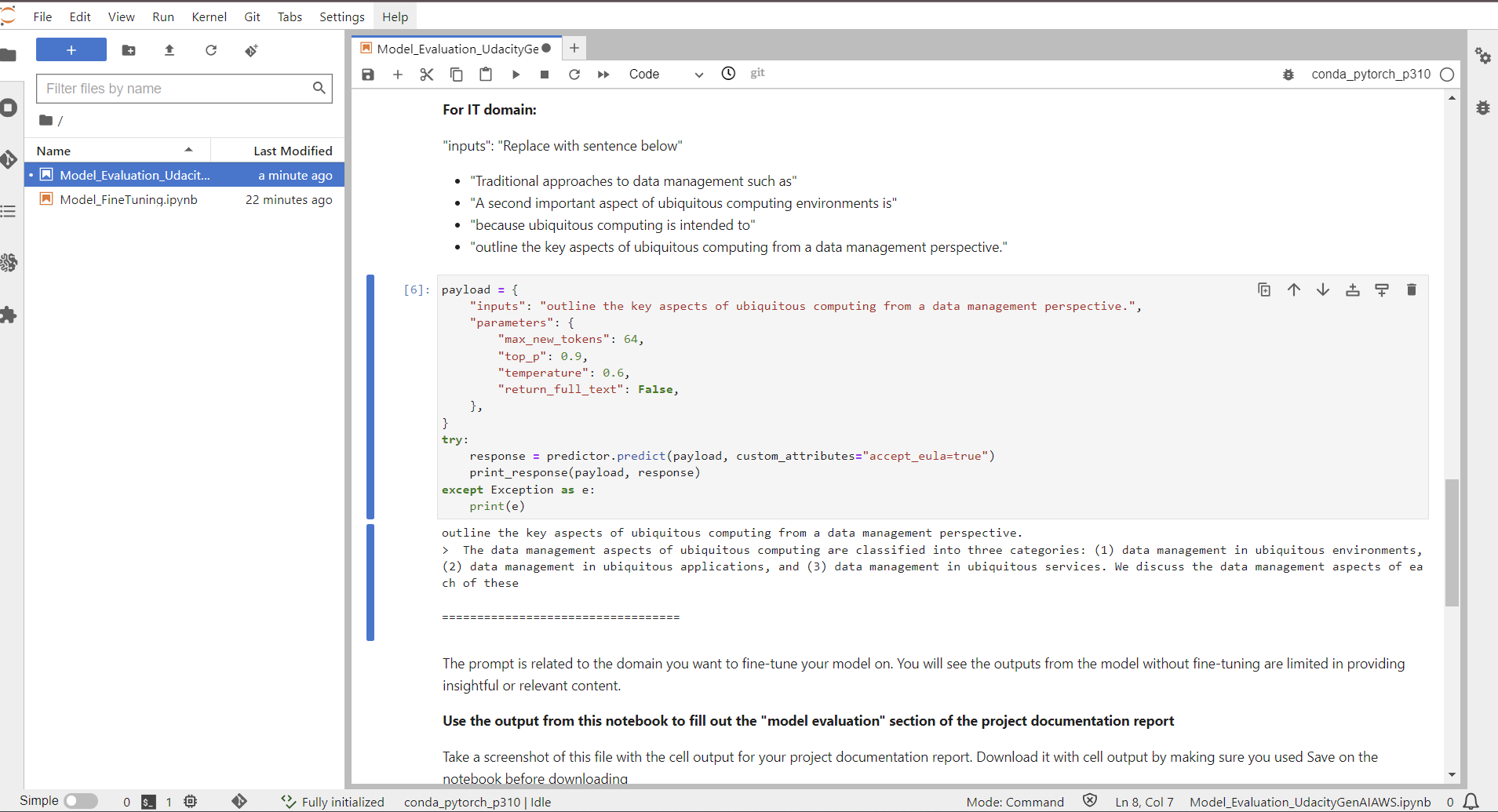

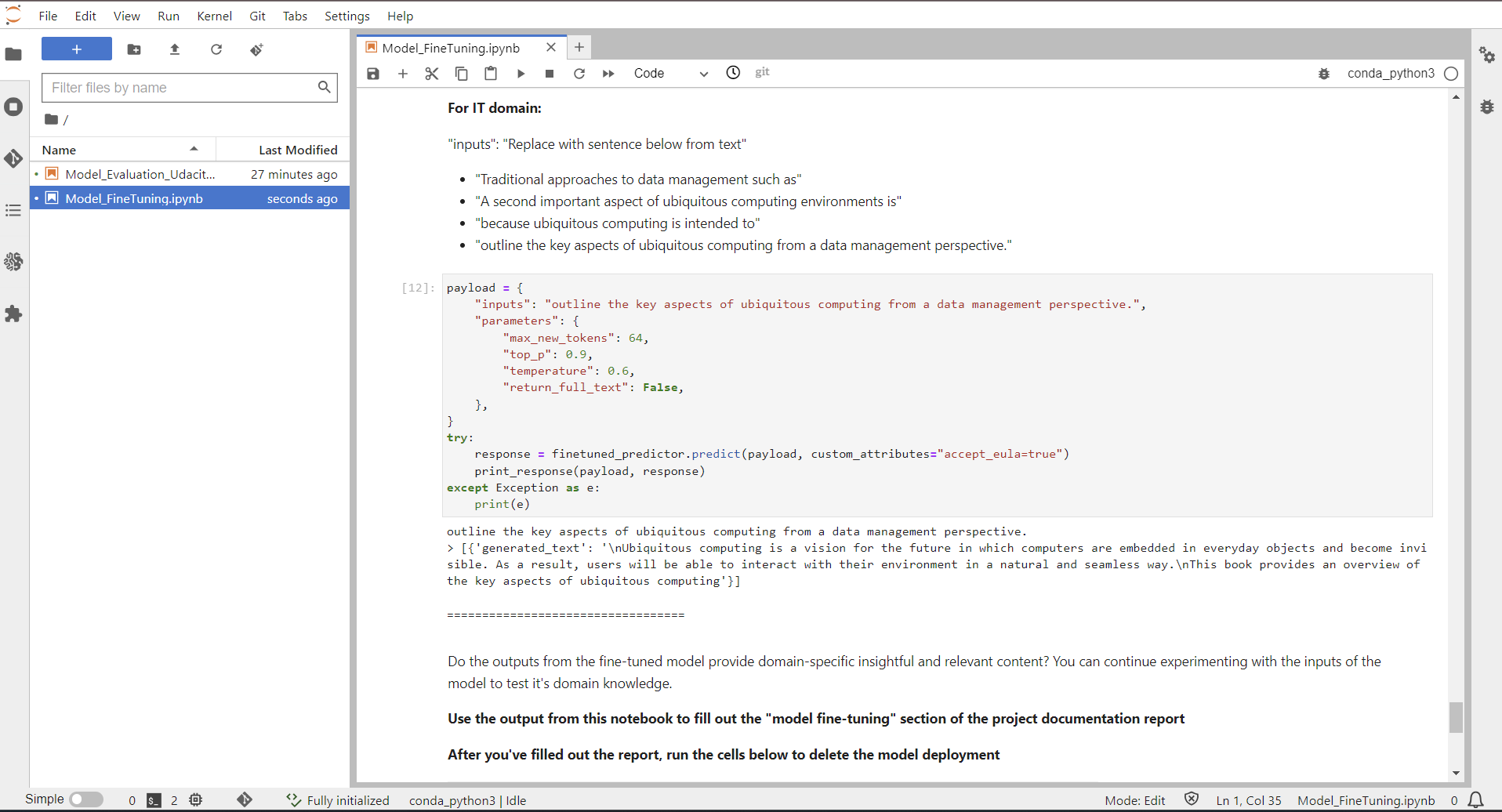

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": [

|

| 7 |

+

"#### Step 3: LLM Model Evaluation"

|

| 8 |

+

]

|

| 9 |

+

},

|

| 10 |

+

{

|

| 11 |

+

"cell_type": "markdown",

|

| 12 |

+

"metadata": {},

|

| 13 |

+

"source": [

|

| 14 |

+

"In this notebook, you'll deploy the Meta Llama 2 7B model and evaluate it's text generation capabilities and domain knowledge. You'll use the SageMaker Python SDK for Foundation Models and deploy the model for inference. \n",

|

| 15 |

+

"\n",

|

| 16 |

+

"The Llama 2 7B Foundation model performs the task of text generation. It takes a text string as input and predicts next words in the sequence. "

|

| 17 |

+

]

|

| 18 |

+

},

|

| 19 |

+

{

|

| 20 |

+

"cell_type": "markdown",

|

| 21 |

+

"metadata": {},

|

| 22 |

+

"source": [

|

| 23 |

+

"#### Set Up\n",

|

| 24 |

+

"There are some initial steps required for setup. If you recieve warnings after running these cells, you can ignore them as they won't impact the code running in the notebook. Run the cell below to ensure you're using the latest version of the Sagemaker Python client library. Restart the Kernel after you run this cell. "

|

| 25 |

+

]

|

| 26 |

+

},

|

| 27 |

+

{

|

| 28 |

+

"cell_type": "code",

|

| 29 |

+

"execution_count": 1,

|

| 30 |

+

"metadata": {

|

| 31 |

+

"tags": []

|

| 32 |

+

},

|

| 33 |

+

"outputs": [],

|

| 34 |

+

"source": [

|

| 35 |

+

"!pip install ipywidgets==7.0.0 --quiet\n",

|

| 36 |

+

"!pip install --upgrade sagemaker datasets --quiet"

|

| 37 |

+

]

|

| 38 |

+

},

|

| 39 |

+

{

|

| 40 |

+

"cell_type": "markdown",

|

| 41 |

+

"metadata": {},

|

| 42 |

+

"source": [

|

| 43 |

+

"***! Restart the notebook kernel now after running the above cell and before you run any cells below !*** "

|

| 44 |

+

]

|

| 45 |

+

},

|

| 46 |

+

{

|

| 47 |

+

"cell_type": "markdown",

|

| 48 |

+

"metadata": {},

|

| 49 |

+

"source": [

|

| 50 |

+

"To deploy the model on Amazon Sagemaker, we need to setup and authenticate the use of AWS services. Yo'll uuse the execution role associated with the current notebook instance as the AWS account role with SageMaker access. Validate your role is the Sagemaker IAM role you created for the project by running the next cell. "

|

| 51 |

+

]

|

| 52 |

+

},

|

| 53 |

+

{

|

| 54 |

+

"cell_type": "code",

|

| 55 |

+

"execution_count": 1,

|

| 56 |

+

"metadata": {

|

| 57 |

+

"tags": []

|

| 58 |

+

},

|

| 59 |

+

"outputs": [

|

| 60 |

+

{

|

| 61 |

+

"name": "stdout",

|

| 62 |

+

"output_type": "stream",

|

| 63 |

+

"text": [

|

| 64 |

+

"sagemaker.config INFO - Not applying SDK defaults from location: /etc/xdg/sagemaker/config.yaml\n",

|

| 65 |

+

"sagemaker.config INFO - Not applying SDK defaults from location: /home/ec2-user/.config/sagemaker/config.yaml\n",

|

| 66 |

+

"arn:aws:iam::558778471579:role/service-role/SageMaker-ProjectSagemakerRole\n",

|

| 67 |

+

"us-west-2\n",

|

| 68 |

+

"<sagemaker.session.Session object at 0x7ff7ca50a530>\n"

|

| 69 |

+

]

|

| 70 |

+

}

|

| 71 |

+

],

|

| 72 |

+

"source": [

|

| 73 |

+

"import sagemaker, boto3, json\n",

|

| 74 |

+

"from sagemaker.session import Session\n",

|

| 75 |

+

"\n",

|

| 76 |

+

"sagemaker_session = Session()\n",

|

| 77 |

+

"aws_role = sagemaker_session.get_caller_identity_arn()\n",

|

| 78 |

+

"aws_region = boto3.Session().region_name\n",

|

| 79 |

+

"sess = sagemaker.Session()\n",

|

| 80 |

+

"print(aws_role)\n",

|

| 81 |

+

"print(aws_region)\n",

|

| 82 |

+

"print(sess)"

|

| 83 |

+

]

|

| 84 |

+

},

|

| 85 |

+

{

|

| 86 |

+

"cell_type": "markdown",

|

| 87 |

+

"metadata": {},

|

| 88 |

+

"source": [

|

| 89 |

+

"## 2. Select Text Generation Model Meta Llama 2 7B\n",

|

| 90 |

+

"Run the next cell to set variables that contain the values of the name of the model we want to load and the version of the model ."

|

| 91 |

+

]

|

| 92 |

+

},

|

| 93 |

+

{

|

| 94 |

+

"cell_type": "code",

|

| 95 |

+

"execution_count": 3,

|

| 96 |

+

"metadata": {

|

| 97 |

+

"tags": []

|

| 98 |

+

},

|

| 99 |

+

"outputs": [],

|

| 100 |

+

"source": [

|

| 101 |

+

"(model_id, model_version,) = (\"meta-textgeneration-llama-2-7b\",\"2.*\",)"

|

| 102 |

+

]

|

| 103 |

+

},

|

| 104 |

+

{

|

| 105 |

+

"cell_type": "markdown",

|

| 106 |

+

"metadata": {},

|

| 107 |

+

"source": [

|

| 108 |

+

"Running the next cell deploys the model\n",

|

| 109 |

+

"This Python code is used to deploy a machine learning model using Amazon SageMaker's JumpStart library. \n",

|

| 110 |

+

"\n",

|

| 111 |

+

"1. Import the `JumpStartModel` class from the `sagemaker.jumpstart.model` module.\n",

|

| 112 |

+

"\n",

|

| 113 |

+

"2. Create an instance of the `JumpStartModel` class using the `model_id` and `model_version` variables created in the previous cell. This object represents the machine learning model you want to deploy.\n",

|

| 114 |

+

"\n",

|

| 115 |

+

"3. Call the `deploy` method on the `JumpStartModel` instance. This method deploys the model on Amazon SageMaker and returns a `Predictor` object.\n",

|

| 116 |

+

"\n",

|

| 117 |

+

"The `Predictor` object (`predictor`) can be used to make predictions with the deployed model. The `deploy` method will automatically choose an endpoint name, instance type, and other deployment parameters. If you want to specify these parameters, you can pass them as arguments to the `deploy` method.\n",

|

| 118 |

+

"\n",

|

| 119 |

+

"**The next cell will take some time to run.** It is deploying a large language model, and that takes time. You'll see dashes (--) while it is being deployed. Please be patient! You'll see an exclamation point at the end of the dashes (---!) when the model is deployed and then you can continue running the next cells. \n",

|

| 120 |

+

"\n",

|

| 121 |

+

"You might see a warning \"For forward compatibility, pin to model_version...\" You can ignore this warning, just wait for the model to deploy. \n"

|

| 122 |

+

]

|

| 123 |

+

},

|

| 124 |

+

{

|

| 125 |

+

"cell_type": "code",

|

| 126 |

+

"execution_count": 4,

|

| 127 |

+

"metadata": {

|

| 128 |

+

"tags": []

|

| 129 |

+

},

|

| 130 |

+

"outputs": [

|

| 131 |

+

{

|

| 132 |

+

"name": "stderr",

|

| 133 |

+

"output_type": "stream",

|

| 134 |

+

"text": [

|

| 135 |

+

"For forward compatibility, pin to model_version='2.*' in your JumpStartModel or JumpStartEstimator definitions. Note that major version upgrades may have different EULA acceptance terms and input/output signatures.\n",

|

| 136 |

+

"Using vulnerable JumpStart model 'meta-textgeneration-llama-2-7b' and version '2.1.8'.\n",

|

| 137 |

+

"Using model 'meta-textgeneration-llama-2-7b' with wildcard version identifier '2.*'. You can pin to version '2.1.8' for more stable results. Note that models may have different input/output signatures after a major version upgrade.\n"

|

| 138 |

+

]

|

| 139 |

+

},

|

| 140 |

+

{

|

| 141 |

+

"name": "stdout",

|

| 142 |

+

"output_type": "stream",

|

| 143 |

+

"text": [

|

| 144 |

+

"----------------!"

|

| 145 |

+

]

|

| 146 |

+

}

|

| 147 |

+

],

|

| 148 |

+

"source": [

|

| 149 |

+

"from sagemaker.jumpstart.model import JumpStartModel\n",

|

| 150 |

+

"\n",

|

| 151 |

+

"model = JumpStartModel(model_id=model_id, model_version=model_version, instance_type=\"ml.g5.2xlarge\")\n",

|

| 152 |

+

"predictor = model.deploy()\n"

|

| 153 |

+

]

|

| 154 |

+

},

|

| 155 |

+

{

|

| 156 |

+

"cell_type": "markdown",

|

| 157 |

+

"metadata": {},

|

| 158 |

+

"source": [

|

| 159 |

+

"#### Invoke the endpoint, query and parse response\n",

|

| 160 |

+

"The next step is to invoke the model endpoint, send a query to the endpoint, and recieve a response from the model. \n",

|

| 161 |

+

"\n",

|

| 162 |

+

"Running the next cell defines a function that will be used to parse and print the response from the model. "

|

| 163 |

+

]

|

| 164 |

+

},

|

| 165 |

+

{

|

| 166 |

+

"cell_type": "code",

|

| 167 |

+

"execution_count": 5,

|

| 168 |

+

"metadata": {

|

| 169 |

+

"tags": []

|

| 170 |

+

},

|

| 171 |

+

"outputs": [],

|

| 172 |

+

"source": [

|

| 173 |

+

"def print_response(payload, response):\n",

|

| 174 |

+

" print(payload[\"inputs\"])\n",

|

| 175 |

+

" print(f\"> {response[0]['generation']}\")\n",

|

| 176 |

+

" print(\"\\n==================================\\n\")"

|

| 177 |

+

]

|

| 178 |

+

},

|

| 179 |

+

{

|

| 180 |

+

"cell_type": "markdown",

|

| 181 |

+

"metadata": {},

|

| 182 |

+

"source": [

|

| 183 |

+

"The model takes a text string as input and predicts next words in the sequence, the input we send it is the prompt. \n",

|

| 184 |

+

"\n",

|

| 185 |

+

"The prompt we send the model should relate to the domain we'd like to fine-tune the model on. This way we'll identify the model's domain knowledge before it's fine-tuned, and then we can run the same prompts on the fine-tuned model. \n",

|

| 186 |

+

"\n",

|

| 187 |

+

"**Replace \"inputs\"** in the next cell with the input to send the model based on the domain you've chosen. \n",

|

| 188 |

+

"\n",

|

| 189 |

+

"**For financial domain:**\n",

|

| 190 |

+

"\n",

|

| 191 |

+

" \"inputs\": \"Replace with sentence below\" \n",

|

| 192 |

+

"- \"The investment tests performed indicate\"\n",

|

| 193 |

+

"- \"the relative volume for the long out of the money options, indicates\"\n",

|

| 194 |

+

"- \"The results for the short in the money options\"\n",

|

| 195 |

+

"- \"The results are encouraging for aggressive investors\"\n",

|

| 196 |

+

"\n",

|

| 197 |

+

"**For medical domain:** \n",

|

| 198 |

+

"\n",

|

| 199 |

+

" \"inputs\": \"Replace with sentence below\" \n",

|

| 200 |

+

"- \"Myeloid neoplasms and acute leukemias derive from\"\n",

|

| 201 |

+

"- \"Genomic characterization is essential for\"\n",

|

| 202 |

+

"- \"Certain germline disorders may be associated with\"\n",

|

| 203 |

+

"- \"In contrast to targeted approaches, genome-wide sequencing\"\n",

|

| 204 |

+

"\n",

|

| 205 |

+

"**For IT domain:** \n",

|

| 206 |

+

"\n",

|

| 207 |

+

" \"inputs\": \"Replace with sentence below\" \n",

|

| 208 |

+

"- \"Traditional approaches to data management such as\"\n",

|

| 209 |

+

"- \"A second important aspect of ubiquitous computing environments is\"\n",

|

| 210 |

+

"- \"because ubiquitous computing is intended to\" \n",

|

| 211 |

+

"- \"outline the key aspects of ubiquitous computing from a data management perspective.\""

|

| 212 |

+

]

|

| 213 |

+

},

|

| 214 |

+

{

|

| 215 |

+

"cell_type": "code",

|

| 216 |

+

"execution_count": 6,

|

| 217 |

+

"metadata": {

|

| 218 |

+

"tags": []

|

| 219 |

+

},

|

| 220 |

+

"outputs": [

|

| 221 |

+

{

|

| 222 |

+

"name": "stdout",

|

| 223 |

+

"output_type": "stream",

|

| 224 |

+

"text": [

|

| 225 |

+

"outline the key aspects of ubiquitous computing from a data management perspective.\n",

|

| 226 |

+

"> The data management aspects of ubiquitous computing are classified into three categories: (1) data management in ubiquitous environments, (2) data management in ubiquitous applications, and (3) data management in ubiquitous services. We discuss the data management aspects of each of these\n",

|

| 227 |

+

"\n",

|

| 228 |

+

"==================================\n",

|

| 229 |

+

"\n"

|

| 230 |

+

]

|

| 231 |

+

}

|

| 232 |

+

],

|

| 233 |

+

"source": [

|

| 234 |

+

"payload = {\n",

|

| 235 |

+

" \"inputs\": \"outline the key aspects of ubiquitous computing from a data management perspective.\",\n",

|

| 236 |

+

" \"parameters\": {\n",

|

| 237 |

+

" \"max_new_tokens\": 64,\n",

|

| 238 |

+

" \"top_p\": 0.9,\n",

|

| 239 |

+

" \"temperature\": 0.6,\n",

|

| 240 |

+

" \"return_full_text\": False,\n",

|

| 241 |

+

" },\n",

|

| 242 |

+

"}\n",

|

| 243 |

+

"try:\n",

|

| 244 |

+

" response = predictor.predict(payload, custom_attributes=\"accept_eula=true\")\n",

|

| 245 |

+

" print_response(payload, response)\n",

|

| 246 |

+

"except Exception as e:\n",

|

| 247 |

+

" print(e)"

|

| 248 |

+

]

|

| 249 |

+

},

|

| 250 |

+

{

|

| 251 |

+

"cell_type": "markdown",

|

| 252 |

+

"metadata": {},

|

| 253 |

+

"source": [

|

| 254 |

+

"The prompt is related to the domain you want to fine-tune your model on. You will see the outputs from the model without fine-tuning are limited in providing insightful or relevant content.\n",

|

| 255 |

+

"\n",

|

| 256 |

+

"**Use the output from this notebook to fill out the \"model evaluation\" section of the project documentation report**\n",

|

| 257 |

+

"\n",

|

| 258 |

+

"Take a screenshot of this file with the cell output for your project documentation report. Download it with cell output by making sure you used Save on the notebook before downloading \n",

|

| 259 |

+

"\n",

|

| 260 |

+

"**After you've filled out the report, run the cells below to delete the model deployment** \n",

|

| 261 |

+

"\n",

|

| 262 |

+

"`IF YOU FAIL TO RUN THE CELLS BELOW YOU WILL RUN OUT OF BUDGET TO COMPLETE THE PROJECT`"

|

| 263 |

+

]

|

| 264 |

+

},

|

| 265 |

+

{

|

| 266 |

+

"cell_type": "code",

|

| 267 |

+

"execution_count": null,

|

| 268 |

+

"metadata": {

|

| 269 |

+

"tags": []

|

| 270 |

+

},

|

| 271 |

+

"outputs": [],

|

| 272 |

+

"source": [

|

| 273 |

+

"# Delete the SageMaker endpoint and the attached resources\n",

|

| 274 |

+

"predictor.delete_model()\n",

|

| 275 |

+

"predictor.delete_endpoint()"

|

| 276 |

+

]

|

| 277 |

+

},

|

| 278 |

+

{

|

| 279 |

+

"cell_type": "markdown",

|

| 280 |

+

"metadata": {},

|

| 281 |

+

"source": [

|

| 282 |

+

"Verify your model endpoint was deleted by visiting the Sagemaker dashboard and choosing `endpoints` under 'Inference' in the left navigation menu. If you see your endpoint still there, choose the endpoint, and then under \"Actions\" select **Delete**"

|

| 283 |

+

]

|

| 284 |

+

}

|

| 285 |

+

],

|

| 286 |

+

"metadata": {

|

| 287 |

+

"kernelspec": {

|

| 288 |

+

"display_name": "conda_pytorch_p310",

|

| 289 |

+

"language": "python",

|

| 290 |

+

"name": "conda_pytorch_p310"

|

| 291 |

+

},

|

| 292 |

+

"language_info": {

|

| 293 |

+

"codemirror_mode": {

|

| 294 |

+

"name": "ipython",

|

| 295 |

+

"version": 3

|

| 296 |

+

},

|

| 297 |

+

"file_extension": ".py",

|

| 298 |

+

"mimetype": "text/x-python",

|

| 299 |

+

"name": "python",

|

| 300 |

+

"nbconvert_exporter": "python",

|

| 301 |

+

"pygments_lexer": "ipython3",

|

| 302 |

+

"version": "3.10.14"

|

| 303 |

+

}

|

| 304 |

+

},

|

| 305 |

+

"nbformat": 4,

|

| 306 |

+

"nbformat_minor": 4

|

| 307 |

+

}

|

Model_FineTuning.ipynb

ADDED

|

@@ -0,0 +1,875 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": [

|

| 7 |

+

"#### Step 3: Model Fine-tuning\n",

|

| 8 |

+

"In this notebook, you'll fine-tune the Meta Llama 2 7B large language model, deploy the fine-tuned model, and test it's text generation and domain knowledge capabilities. \n",

|

| 9 |

+

"\n",

|

| 10 |

+

"Fine-tuning refers to the process of taking a pre-trained language model and retraining it for a different but related task using specific data. This approach is also known as transfer learning, which involves transferring the knowledge learned from one task to another. Large language models (LLMs) like Llama 2 7B are trained on massive amounts of unlabeled data and can be fine-tuned on domain domain datasets, making the model perform better on that specific domain.\n",

|

| 11 |

+

"\n",

|

| 12 |

+

"Input: A train and an optional validation directory. Each directory contains a CSV/JSON/TXT file.\n",

|

| 13 |

+

"For CSV/JSON files, the train or validation data is used from the column called 'text' or the first column if no column called 'text' is found.\n",

|

| 14 |

+

"The number of files under train and validation should equal to one.\n",

|

| 15 |

+

"\n",

|

| 16 |

+

"- **You'll choose your dataset below based on the domain you've chosen**\n",

|

| 17 |

+

"\n",

|

| 18 |

+

"Output: A trained model that can be deployed for inference.\\\n",

|

| 19 |

+

"After you've fine-tuned the model, you'll evaluate it with the same input you used in project step 2: model evaluation. \n",

|

| 20 |

+

"\n",

|

| 21 |

+

"---"

|

| 22 |

+

]

|

| 23 |

+

},

|

| 24 |

+

{

|

| 25 |

+

"cell_type": "markdown",

|

| 26 |

+

"metadata": {},

|

| 27 |

+

"source": [

|

| 28 |

+

"#### Set up\n",

|

| 29 |

+

"\n",

|

| 30 |

+

"---\n",

|

| 31 |

+

"Install and import the necessary packages. Restart the kernel after executing the cell below. \n",

|

| 32 |

+

"\n",

|

| 33 |

+

"---"

|

| 34 |

+

]

|

| 35 |

+

},

|

| 36 |

+

{

|

| 37 |

+

"cell_type": "code",

|

| 38 |

+

"execution_count": 1,

|

| 39 |

+

"metadata": {

|

| 40 |

+

"tags": []

|

| 41 |

+

},

|

| 42 |

+

"outputs": [

|

| 43 |

+

{

|

| 44 |

+

"name": "stdout",

|

| 45 |

+

"output_type": "stream",

|

| 46 |

+

"text": [

|

| 47 |

+

"Requirement already satisfied: sagemaker in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (2.219.0)\n",

|

| 48 |

+

"Collecting sagemaker\n",

|

| 49 |

+

" Using cached sagemaker-2.221.0-py3-none-any.whl.metadata (14 kB)\n",

|

| 50 |

+

"Collecting datasets\n",

|

| 51 |

+

" Using cached datasets-2.19.1-py3-none-any.whl.metadata (19 kB)\n",

|

| 52 |

+

"Requirement already satisfied: attrs<24,>=23.1.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (23.2.0)\n",

|

| 53 |

+

"Requirement already satisfied: boto3<2.0,>=1.33.3 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (1.34.101)\n",

|

| 54 |

+

"Requirement already satisfied: cloudpickle==2.2.1 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (2.2.1)\n",

|

| 55 |

+

"Requirement already satisfied: google-pasta in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (0.2.0)\n",

|

| 56 |

+

"Requirement already satisfied: numpy<2.0,>=1.9.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (1.22.4)\n",

|

| 57 |

+

"Requirement already satisfied: protobuf<5.0,>=3.12 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (4.25.3)\n",

|

| 58 |

+

"Requirement already satisfied: smdebug-rulesconfig==1.0.1 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (1.0.1)\n",

|

| 59 |

+

"Requirement already satisfied: importlib-metadata<7.0,>=1.4.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (6.11.0)\n",

|

| 60 |

+

"Requirement already satisfied: packaging>=20.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (21.3)\n",

|

| 61 |

+

"Requirement already satisfied: pandas in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (2.2.1)\n",

|

| 62 |

+

"Requirement already satisfied: pathos in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (0.3.2)\n",

|

| 63 |

+

"Requirement already satisfied: schema in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (0.7.7)\n",

|

| 64 |

+

"Requirement already satisfied: PyYAML~=6.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (6.0.1)\n",

|

| 65 |

+

"Requirement already satisfied: jsonschema in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (4.21.1)\n",

|

| 66 |

+

"Requirement already satisfied: platformdirs in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (4.2.0)\n",

|

| 67 |

+

"Requirement already satisfied: tblib<4,>=1.7.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (3.0.0)\n",

|

| 68 |

+

"Requirement already satisfied: urllib3<3.0.0,>=1.26.8 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (2.2.1)\n",

|

| 69 |

+

"Requirement already satisfied: requests in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (2.31.0)\n",

|

| 70 |

+

"Requirement already satisfied: docker in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (6.1.3)\n",

|

| 71 |

+

"Requirement already satisfied: tqdm in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (4.66.2)\n",

|

| 72 |

+

"Requirement already satisfied: psutil in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from sagemaker) (5.9.8)\n",

|

| 73 |

+

"Requirement already satisfied: filelock in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from datasets) (3.13.3)\n",

|

| 74 |

+

"Requirement already satisfied: pyarrow>=12.0.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from datasets) (15.0.2)\n",

|

| 75 |

+

"Requirement already satisfied: pyarrow-hotfix in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from datasets) (0.6)\n",

|

| 76 |

+

"Requirement already satisfied: dill<0.3.9,>=0.3.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from datasets) (0.3.8)\n",

|

| 77 |

+

"Collecting xxhash (from datasets)\n",

|

| 78 |

+

" Using cached xxhash-3.4.1-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (12 kB)\n",

|

| 79 |

+

"Requirement already satisfied: multiprocess in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from datasets) (0.70.16)\n",

|

| 80 |

+

"Requirement already satisfied: fsspec<=2024.3.1,>=2023.1.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from fsspec[http]<=2024.3.1,>=2023.1.0->datasets) (2024.3.1)\n",

|

| 81 |

+

"Requirement already satisfied: aiohttp in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from datasets) (3.9.3)\n",

|

| 82 |

+

"Collecting huggingface-hub>=0.21.2 (from datasets)\n",

|

| 83 |

+

" Using cached huggingface_hub-0.23.1-py3-none-any.whl.metadata (12 kB)\n",

|

| 84 |

+

"Requirement already satisfied: botocore<1.35.0,>=1.34.101 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from boto3<2.0,>=1.33.3->sagemaker) (1.34.101)\n",

|

| 85 |

+

"Requirement already satisfied: jmespath<2.0.0,>=0.7.1 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from boto3<2.0,>=1.33.3->sagemaker) (1.0.1)\n",

|

| 86 |

+

"Requirement already satisfied: s3transfer<0.11.0,>=0.10.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from boto3<2.0,>=1.33.3->sagemaker) (0.10.1)\n",

|

| 87 |

+

"Requirement already satisfied: aiosignal>=1.1.2 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from aiohttp->datasets) (1.3.1)\n",

|

| 88 |

+

"Requirement already satisfied: frozenlist>=1.1.1 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from aiohttp->datasets) (1.4.1)\n",

|

| 89 |

+

"Requirement already satisfied: multidict<7.0,>=4.5 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from aiohttp->datasets) (6.0.5)\n",

|

| 90 |

+

"Requirement already satisfied: yarl<2.0,>=1.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from aiohttp->datasets) (1.9.4)\n",

|

| 91 |

+

"Requirement already satisfied: async-timeout<5.0,>=4.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from aiohttp->datasets) (4.0.3)\n",

|

| 92 |

+

"Requirement already satisfied: typing-extensions>=3.7.4.3 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from huggingface-hub>=0.21.2->datasets) (4.10.0)\n",

|

| 93 |

+

"Requirement already satisfied: zipp>=0.5 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from importlib-metadata<7.0,>=1.4.0->sagemaker) (3.17.0)\n",

|

| 94 |

+

"Requirement already satisfied: pyparsing!=3.0.5,>=2.0.2 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from packaging>=20.0->sagemaker) (3.1.2)\n",

|

| 95 |

+

"Requirement already satisfied: charset-normalizer<4,>=2 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from requests->sagemaker) (3.3.2)\n",

|

| 96 |

+

"Requirement already satisfied: idna<4,>=2.5 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from requests->sagemaker) (3.6)\n",

|

| 97 |

+

"Requirement already satisfied: certifi>=2017.4.17 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from requests->sagemaker) (2024.2.2)\n",

|

| 98 |

+

"Requirement already satisfied: websocket-client>=0.32.0 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from docker->sagemaker) (1.7.0)\n",

|

| 99 |

+

"Requirement already satisfied: six in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from google-pasta->sagemaker) (1.16.0)\n",

|

| 100 |

+

"Requirement already satisfied: jsonschema-specifications>=2023.03.6 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from jsonschema->sagemaker) (2023.12.1)\n",

|

| 101 |

+

"Requirement already satisfied: referencing>=0.28.4 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from jsonschema->sagemaker) (0.34.0)\n",

|

| 102 |

+

"Requirement already satisfied: rpds-py>=0.7.1 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from jsonschema->sagemaker) (0.18.0)\n",

|

| 103 |

+

"Requirement already satisfied: python-dateutil>=2.8.2 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from pandas->sagemaker) (2.9.0)\n",

|

| 104 |

+

"Requirement already satisfied: pytz>=2020.1 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from pandas->sagemaker) (2024.1)\n",

|

| 105 |

+

"Requirement already satisfied: tzdata>=2022.7 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from pandas->sagemaker) (2024.1)\n",

|

| 106 |

+

"Requirement already satisfied: ppft>=1.7.6.8 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from pathos->sagemaker) (1.7.6.8)\n",

|

| 107 |

+

"Requirement already satisfied: pox>=0.3.4 in /home/ec2-user/anaconda3/envs/python3/lib/python3.10/site-packages (from pathos->sagemaker) (0.3.4)\n",

|

| 108 |

+

"Using cached sagemaker-2.221.0-py3-none-any.whl (1.5 MB)\n",

|

| 109 |

+

"Using cached datasets-2.19.1-py3-none-any.whl (542 kB)\n",

|

| 110 |

+

"Using cached huggingface_hub-0.23.1-py3-none-any.whl (401 kB)\n",

|

| 111 |

+

"Using cached xxhash-3.4.1-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (194 kB)\n",

|

| 112 |

+

"Installing collected packages: xxhash, huggingface-hub, datasets, sagemaker\n",

|

| 113 |

+

" Attempting uninstall: sagemaker\n",

|

| 114 |

+

" Found existing installation: sagemaker 2.219.0\n",

|

| 115 |

+

" Uninstalling sagemaker-2.219.0:\n",

|

| 116 |

+

" Successfully uninstalled sagemaker-2.219.0\n",

|

| 117 |

+

"Successfully installed datasets-2.19.1 huggingface-hub-0.23.1 sagemaker-2.221.0 xxhash-3.4.1\n"

|

| 118 |

+

]

|

| 119 |

+

}

|

| 120 |

+

],

|

| 121 |

+

"source": [

|

| 122 |

+

"!pip install --upgrade sagemaker datasets"

|

| 123 |

+

]

|

| 124 |

+

},

|

| 125 |

+

{

|

| 126 |

+

"cell_type": "markdown",

|

| 127 |

+

"metadata": {},

|

| 128 |

+

"source": [

|

| 129 |

+

"Select the model to fine-tune"

|

| 130 |

+

]

|

| 131 |

+

},

|

| 132 |

+

{

|

| 133 |

+

"cell_type": "code",

|

| 134 |

+

"execution_count": 2,

|

| 135 |

+

"metadata": {

|

| 136 |

+

"tags": []

|

| 137 |

+

},

|

| 138 |

+

"outputs": [],

|

| 139 |

+

"source": [

|

| 140 |

+

"model_id, model_version = \"meta-textgeneration-llama-2-7b\", \"2.*\""

|

| 141 |

+

]

|

| 142 |

+

},

|

| 143 |

+

{

|

| 144 |

+

"cell_type": "markdown",

|

| 145 |

+

"metadata": {},

|

| 146 |

+

"source": [

|

| 147 |

+

"In the cell below, choose the training dataset text for the domain you've chosen and update the code in the cell below: \n",

|

| 148 |

+

"\n",

|

| 149 |

+

"To create a finance domain expert model: \n",

|

| 150 |

+

"\n",

|

| 151 |

+

"- `\"training\": f\"s3://genaiwithawsproject2024/training-datasets/finance\"`\n",

|

| 152 |

+

"\n",

|

| 153 |

+

"To create a medical domain expert model: \n",

|

| 154 |

+

"\n",

|

| 155 |

+

"- `\"training\": f\"s3://genaiwithawsproject2024/training-datasets/medical\"`\n",

|

| 156 |

+

"\n",

|

| 157 |

+

"To create an IT domain expert model: \n",

|

| 158 |

+

"\n",

|

| 159 |

+

"- `\"training\": f\"s3://genaiwithawsproject2024/training-datasets/it\"`"

|

| 160 |

+

]

|

| 161 |

+

},

|

| 162 |

+

{

|

| 163 |

+

"cell_type": "code",

|

| 164 |

+

"execution_count": null,

|

| 165 |

+

"metadata": {

|

| 166 |

+

"tags": []

|

| 167 |

+

},

|

| 168 |

+

"outputs": [

|

| 169 |

+

{

|

| 170 |

+

"name": "stdout",

|

| 171 |

+

"output_type": "stream",

|

| 172 |

+

"text": [

|

| 173 |

+

"sagemaker.config INFO - Not applying SDK defaults from location: /etc/xdg/sagemaker/config.yaml\n",

|

| 174 |

+

"sagemaker.config INFO - Not applying SDK defaults from location: /home/ec2-user/.config/sagemaker/config.yaml\n"

|

| 175 |

+

]

|

| 176 |

+

},

|

| 177 |

+

{

|

| 178 |

+

"name": "stderr",

|

| 179 |

+

"output_type": "stream",

|

| 180 |

+

"text": [

|

| 181 |

+

"Using model 'meta-textgeneration-llama-2-7b' with wildcard version identifier '*'. You can pin to version '4.1.0' for more stable results. Note that models may have different input/output signatures after a major version upgrade.\n",

|

| 182 |

+

"INFO:sagemaker:Creating training-job with name: meta-textgeneration-llama-2-7b-2024-05-22-11-21-47-115\n"

|

| 183 |

+

]

|

| 184 |

+

},

|

| 185 |

+

{

|

| 186 |

+

"name": "stdout",

|

| 187 |

+

"output_type": "stream",

|

| 188 |

+

"text": [

|

| 189 |

+

"2024-05-22 11:21:47 Starting - Starting the training job...\n",

|

| 190 |

+

"2024-05-22 11:22:06 Pending - Training job waiting for capacity...\n",

|

| 191 |

+

"2024-05-22 11:22:20 Pending - Preparing the instances for training...\n",

|

| 192 |

+

"2024-05-22 11:22:51 Downloading - Downloading input data.....................\n",

|

| 193 |

+

"2024-05-22 11:27:42 Training - Training image download completed. Training in progress..\u001b[34mbash: cannot set terminal process group (-1): Inappropriate ioctl for device\u001b[0m\n",

|

| 194 |

+

"\u001b[34mbash: no job control in this shell\u001b[0m\n",

|

| 195 |

+

"\u001b[34m2024-05-22 11:27:44,151 sagemaker-training-toolkit INFO Imported framework sagemaker_pytorch_container.training\u001b[0m\n",

|

| 196 |

+

"\u001b[34m2024-05-22 11:27:44,169 sagemaker-training-toolkit INFO No Neurons detected (normal if no neurons installed)\u001b[0m\n",

|

| 197 |

+

"\u001b[34m2024-05-22 11:27:44,178 sagemaker_pytorch_container.training INFO Block until all host DNS lookups succeed.\u001b[0m\n",

|

| 198 |

+

"\u001b[34m2024-05-22 11:27:44,181 sagemaker_pytorch_container.training INFO Invoking user training script.\u001b[0m\n",

|

| 199 |

+

"\u001b[34m2024-05-22 11:27:53,654 sagemaker-training-toolkit INFO Installing dependencies from requirements.txt:\u001b[0m\n",

|

| 200 |

+

"\u001b[34m/opt/conda/bin/python3.10 -m pip install -r requirements.txt\u001b[0m\n",

|

| 201 |

+

"\u001b[34mProcessing ./lib/accelerate/accelerate-0.21.0-py3-none-any.whl (from -r requirements.txt (line 1))\u001b[0m\n",

|

| 202 |

+

"\u001b[34mProcessing ./lib/bitsandbytes/bitsandbytes-0.39.1-py3-none-any.whl (from -r requirements.txt (line 2))\u001b[0m\n",

|

| 203 |

+

"\u001b[34mProcessing ./lib/black/black-23.7.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (from -r requirements.txt (line 3))\u001b[0m\n",

|

| 204 |

+

"\u001b[34mProcessing ./lib/brotli/Brotli-1.0.9-cp310-cp310-manylinux_2_5_x86_64.manylinux1_x86_64.manylinux_2_12_x86_64.manylinux2010_x86_64.whl (from -r requirements.txt (line 4))\u001b[0m\n",

|

| 205 |

+

"\u001b[34mProcessing ./lib/datasets/datasets-2.14.1-py3-none-any.whl (from -r requirements.txt (line 5))\u001b[0m\n",

|

| 206 |

+

"\u001b[34mProcessing ./lib/docstring-parser/docstring_parser-0.16-py3-none-any.whl (from -r requirements.txt (line 6))\u001b[0m\n",

|

| 207 |

+

"\u001b[34mProcessing ./lib/fire/fire-0.5.0.tar.gz\u001b[0m\n",

|

| 208 |

+

"\u001b[34mPreparing metadata (setup.py): started\u001b[0m\n",

|

| 209 |

+

"\u001b[34mPreparing metadata (setup.py): finished with status 'done'\u001b[0m\n",

|

| 210 |

+

"\u001b[34mProcessing ./lib/huggingface-hub/huggingface_hub-0.20.3-py3-none-any.whl (from -r requirements.txt (line 8))\u001b[0m\n",

|

| 211 |

+

"\u001b[34mProcessing ./lib/inflate64/inflate64-0.3.1-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (from -r requirements.txt (line 9))\u001b[0m\n",

|

| 212 |

+

"\u001b[34mProcessing ./lib/loralib/loralib-0.1.1-py3-none-any.whl (from -r requirements.txt (line 10))\u001b[0m\n",

|

| 213 |

+

"\u001b[34mProcessing ./lib/multivolumefile/multivolumefile-0.2.3-py3-none-any.whl (from -r requirements.txt (line 11))\u001b[0m\n",

|

| 214 |

+

"\u001b[34mProcessing ./lib/mypy-extensions/mypy_extensions-1.0.0-py3-none-any.whl (from -r requirements.txt (line 12))\u001b[0m\n",

|

| 215 |

+

"\u001b[34mProcessing ./lib/nvidia-cublas-cu12/nvidia_cublas_cu12-12.1.3.1-py3-none-manylinux1_x86_64.whl (from -r requirements.txt (line 13))\u001b[0m\n",

|

| 216 |

+

"\u001b[34mProcessing ./lib/nvidia-cuda-cupti-cu12/nvidia_cuda_cupti_cu12-12.1.105-py3-none-manylinux1_x86_64.whl (from -r requirements.txt (line 14))\u001b[0m\n",

|

| 217 |

+

"\u001b[34mProcessing ./lib/nvidia-cuda-nvrtc-cu12/nvidia_cuda_nvrtc_cu12-12.1.105-py3-none-manylinux1_x86_64.whl (from -r requirements.txt (line 15))\u001b[0m\n",

|

| 218 |

+

"\u001b[34mProcessing ./lib/nvidia-cuda-runtime-cu12/nvidia_cuda_runtime_cu12-12.1.105-py3-none-manylinux1_x86_64.whl (from -r requirements.txt (line 16))\u001b[0m\n",

|

| 219 |

+

"\u001b[34mProcessing ./lib/nvidia-cudnn-cu12/nvidia_cudnn_cu12-8.9.2.26-py3-none-manylinux1_x86_64.whl (from -r requirements.txt (line 17))\u001b[0m\n",

|

| 220 |

+

"\u001b[34mProcessing ./lib/nvidia-cufft-cu12/nvidia_cufft_cu12-11.0.2.54-py3-none-manylinux1_x86_64.whl (from -r requirements.txt (line 18))\u001b[0m\n",

|

| 221 |

+

"\u001b[34mProcessing ./lib/nvidia-curand-cu12/nvidia_curand_cu12-10.3.2.106-py3-none-manylinux1_x86_64.whl (from -r requirements.txt (line 19))\u001b[0m\n",

|

| 222 |

+

"\u001b[34mProcessing ./lib/nvidia-cusolver-cu12/nvidia_cusolver_cu12-11.4.5.107-py3-none-manylinux1_x86_64.whl (from -r requirements.txt (line 20))\u001b[0m\n",

|

| 223 |

+