Llama-2-7b performance on AWS Inferentia2 (Latency & Throughput)

How fast is Llama-2-7b on Inferentia2? Let’s find out!

For this benchmark we will use the following configurations:

| Model type | batch_size | sequence_length |
|---|---|---|
| Llama2 7B BS1 | 1 | 4096 |
| Llama2 7B BS4 | 4 | 4096 |
| Llama2 7B BS8 | 8 | 4096 |
| Llama2 7B BS16 | 16 | 4096 |
| Llama2 7B BS32 | 24 | 4096 |

Note: all models are compiled to use 6 devices, corresponding to 12 cores, on the inf2.48xlarge instance.

Note: please refer to the Inferentia2 product page for details on the available instances.

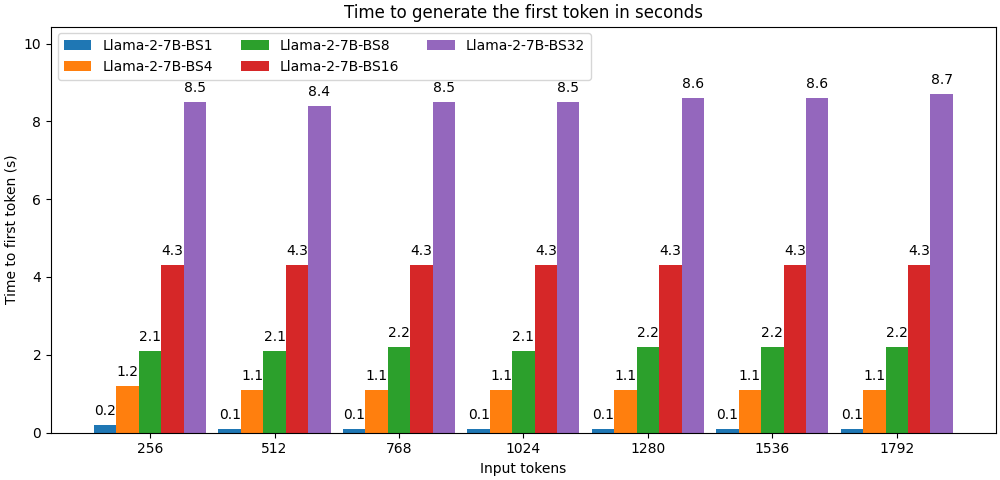

Time to first token

The time to first token is the time required to process the input tokens and generate the first output token. It is a key metric because it is the latency the user directly perceives when generated tokens are streamed.

We test the time to first token for increasing context sizes, from typical Q/A usage to heavy Retrieval Augmented Generation (RAG) use cases.

Time to first token is expressed in seconds.

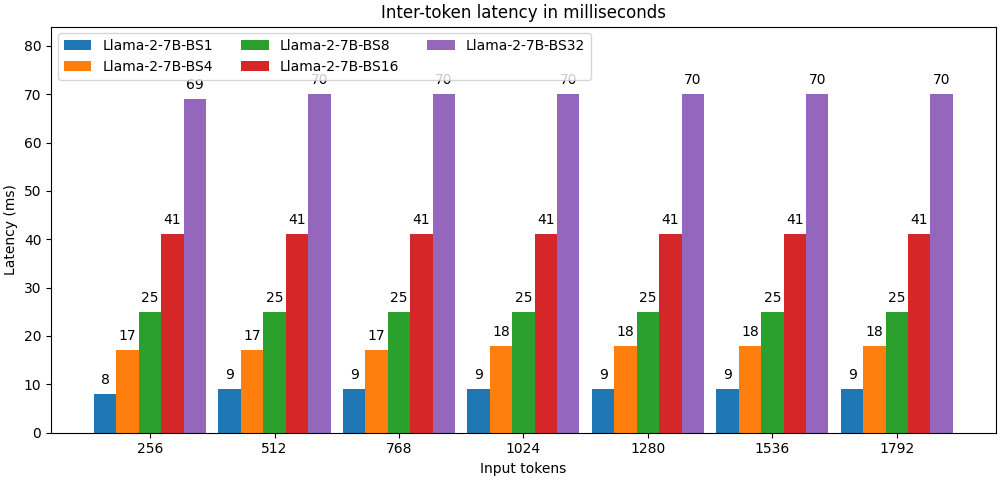
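
As a rough illustration of how time to first token can be measured, the sketch below starts a timer before consuming a token stream and stops it as soon as the first token arrives. The `fake_stream` generator is a hypothetical stand-in for a real streaming generation call, and its delay is made up; it is not the harness used for this benchmark.

```python
import time
from typing import Iterable, Iterator


def time_to_first_token(stream: Iterable[str]) -> float:
    """Return the seconds elapsed until the stream yields its first token."""
    start = time.perf_counter()
    for _ in stream:
        # Stop at the very first token: this covers input processing
        # plus the generation of one output token.
        return time.perf_counter() - start
    raise ValueError("the stream produced no tokens")


def fake_stream(prefill_seconds: float, n_tokens: int) -> Iterator[str]:
    """Hypothetical stand-in for a streaming generation call."""
    time.sleep(prefill_seconds)  # simulates processing the input tokens
    for i in range(n_tokens):
        yield f"tok{i}"


ttft = time_to_first_token(fake_stream(prefill_seconds=0.05, n_tokens=4))
print(f"time to first token: {ttft:.3f} s")
```

Because the generator body only runs once iteration begins, the timer includes the simulated input processing, which is the part that grows with the context size.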

Inter-token latency

The inter-token latency corresponds to the average time elapsed between two generated tokens.

It is expressed in milliseconds.

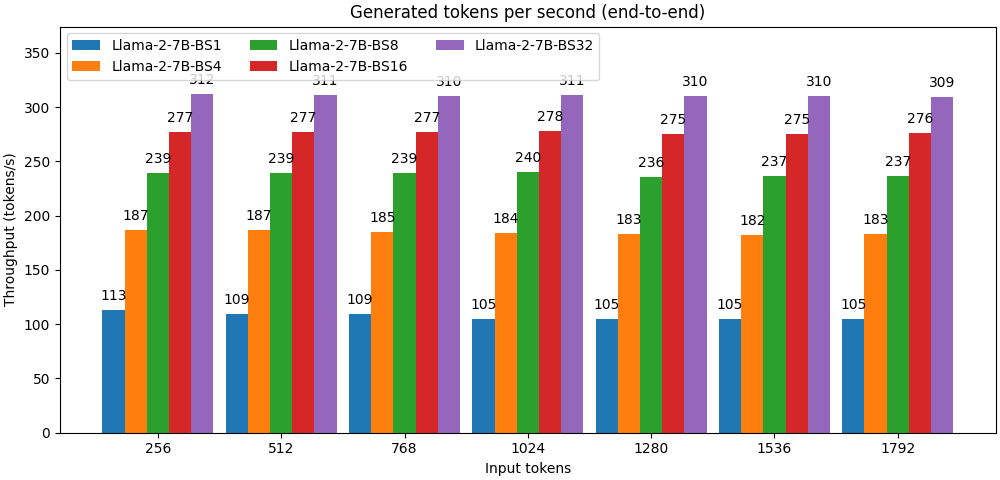
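
The definition above can be sketched as the mean gap between consecutive token arrival times. The function name and the timestamps below are illustrative assumptions, not values from this benchmark.

```python
from typing import Sequence


def inter_token_latency_ms(token_times: Sequence[float]) -> float:
    """Average gap between consecutive token timestamps, in milliseconds.

    token_times holds the arrival time of each generated token, in seconds.
    """
    if len(token_times) < 2:
        raise ValueError("need at least two tokens to measure a gap")
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    return 1000.0 * sum(gaps) / len(gaps)


# Made-up arrival times (seconds) for four tokens, for illustration only.
example_times = [0.50, 0.52, 0.54, 0.57]
print(f"inter-token latency: {inter_token_latency_ms(example_times):.1f} ms")
```

Note that the first timestamp only serves as a reference point, so the time to first token does not enter this average.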

Throughput

Unlike some other benchmarks, we evaluate the throughput using generated tokens only, by dividing their number by the end-to-end latency.

Throughput is expressed in tokens/second.
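
A minimal sketch of this calculation follows. It assumes that, for a batch, the number of generated tokens is summed over all sequences; the function name and the numbers are made up for illustration and are not benchmark results.

```python
def generated_throughput(generated_tokens: int, end_to_end_seconds: float) -> float:
    """Generated tokens per second; input (prompt) tokens are not counted."""
    if end_to_end_seconds <= 0:
        raise ValueError("end-to-end latency must be positive")
    return generated_tokens / end_to_end_seconds


# Made-up example: a batch of 4 sequences, each generating 256 new tokens,
# with an end-to-end latency of 8 seconds for the whole batch.
batch_size = 4
new_tokens_per_sequence = 256
end_to_end_seconds = 8.0

total_generated = batch_size * new_tokens_per_sequence
throughput = generated_throughput(total_generated, end_to_end_seconds)
print(f"throughput: {throughput:.1f} tokens/second")
```

Counting the input tokens as well would raise the figure without any additional token being generated, which is why only generated tokens appear in the numerator here.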