
# +

"""

17. How to compute the mean squared error on a truth and predicted series?

"""

"""

Difficulty Level: L2

"""

"""

Compute the mean squared error of truth and pred series.

"""

"""

Input

"""

"""

truth = pd.Series(range(10))

pred = pd.Series(range(10)) + np.random.random(10)

"""

import numpy as np
import pandas as pd

# Input

truth = pd.Series(range(10))

pred = pd.Series(range(10)) + np.random.random(10)

# Solution

np.mean((truth-pred)**2)
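# As a cross-check on the one-liner above, this small sketch wraps the same formula, $\mathrm{MSE} = \frac{1}{n}\sum_i (y_i - \hat{y}_i)^2$, in a function. It runs on hypothetical fixed values instead of the random `pred`, so you can verify the result by hand. The helper name `mse` is our own choice.

```python
import numpy as np
import pandas as pd

def mse(truth: pd.Series, pred: pd.Series) -> float:
    """Mean squared error: the average of the squared residuals."""
    residuals = truth - pred  # element-wise, aligned on the index
    return float(np.mean(residuals ** 2))

# Hypothetical fixed values so the result can be checked by hand
truth_demo = pd.Series([1.0, 2.0, 3.0])
pred_demo = pd.Series([1.5, 2.0, 2.0])
print(mse(truth_demo, pred_demo))  # (0.25 + 0 + 1) / 3
```

# Note that `truth - pred` aligns on the pandas index, so two Series with different indexes would produce NaN residuals. Taking `np.sqrt` of the result gives the RMSE.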

| pset_pandas_ext/101problems/solutions/nb/p17.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# ---

# layout: post

# title: "Entropy and the Gini Index"

# author: "<NAME>"

# categories: Data Analysis

# tags: [DecisionTree, decision tree, impurity, Entropy, Gini, entropy, Gini index, InformationGain, information]

# image: 03_entropy_gini.png

# ---

# ## **Purpose**

# - In the previous post on ensemble models, we saw that decision trees are commonly used as the weak learners. This time, let's look at Entropy and the Gini index, the measures used to build decision trees.

# <br/>

# <br/>

#

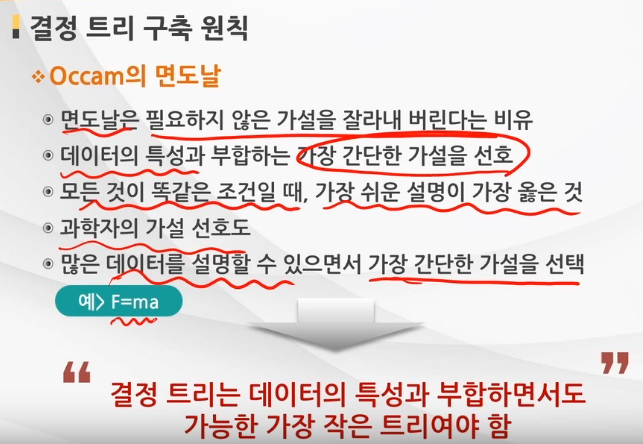

# ### **Principles of tree construction**

#

# > Source : https://m.blog.naver.com/PostView.naver?isHttpsRedirect=true&blogId=ehdrndd&logNo=221158124011

# - When building a decision tree, we follow Occam's razor: we first adopt the simplest hypothesis that best reflects the characteristics of the data. Let's see how to build a simple, sensible tree.

# <br>

# <br>

# ---

#

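# ### **1. Decision Tree**

# To build a decision tree efficiently, at each step we choose the variable and split criterion that reduce impurity/uncertainty the most.<br>

# The reduction in impurity / uncertainty achieved by a split is called Information gain. To maximize it (equivalently, to minimize the impurity of the resulting child nodes), impurity is measured with the Gini Index or Entropy. Which one is used depends on the type of decision tree.<br>

# sklearn uses the Gini index by default. It is the criterion of CART (Classification And Regression Tree).<br>

# ID3 and its successors C4.5 and C5.0 compute Entropy instead. <br>

# A CART tree always makes binary splits. ID3 and C4.5, in contrast, evaluate splits with Entropy or the Entropy-based Information gain and can split a node into more than two branches. <br>

#

# - For both the Gini index and Entropy, a higher value means higher impurity, i.e. the node is harder to classify. <br>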

#

#

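# |Aspect|ID3|C4.5, C5|CART|
# |:---:|:---:|:---:|:---:|
# |Criterion|Entropy (Information gain)|Information gain ratio|Gini Index (categorical), variance reduction (numeric)|
# |Split type|Multiway|Multiway (categorical) and binary (numeric)|Always binary|
# |Notes|Cannot handle numeric data|||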
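# This sketch makes the "multiway vs. binary" row of the table concrete. It computes entropy-based information gain for two splits: an ID3-style split on Outlook (one branch per value) and a CART-style binary split (Overcast vs. the rest). The (Yes, No) counts are an assumption. They come from the classic 14-day PlayTennis data, which we assume matches the `tennis.csv` used later in this post. The helper names are our own.

```python
from math import log2

def entropy_of_counts(counts):
    """Entropy in bits of a node, given its class counts (empty classes are skipped)."""
    n = sum(counts)
    return -sum(c / n * log2(c / n) for c in counts if c > 0)

def weighted_entropy(children):
    """Average child entropy, weighted by each child's share of the samples."""
    total = sum(sum(c) for c in children)
    return sum(sum(c) / total * entropy_of_counts(c) for c in children)

# Assumed (Yes, No) counts per Outlook value (classic PlayTennis data)
outlook = {"Sunny": (2, 3), "Overcast": (4, 0), "Rain": (3, 2)}
root = (9, 5)

# ID3-style multiway split: one child per Outlook value
multiway_gain = entropy_of_counts(root) - weighted_entropy(list(outlook.values()))

# CART-style binary split: Overcast vs. everything else
rest = tuple(a + b for a, b in zip(outlook["Sunny"], outlook["Rain"]))
binary_gain = entropy_of_counts(root) - weighted_entropy([outlook["Overcast"], rest])

print(f"multiway gain: {multiway_gain:.4f}")
print(f"binary gain:   {binary_gain:.4f}")
```

# The multiway split scores higher partly because splitting into more branches tends to raise information gain by itself. C4.5's gain ratio was introduced to correct for that bias.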

#

# <br>

# <br>

# > Source/reference : https://ko.wikipedia.org/wiki/%EA%B2%B0%EC%A0%95_%ED%8A%B8%EB%A6%AC_%ED%95%99%EC%8A%B5%EB%B2%95 <br>

# > Source/reference : https://m.blog.naver.com/PostView.naver?isHttpsRedirect=true&blogId=trashx&logNo=60099037740 <br>

# > Source/reference : https://ratsgo.github.io/machine%20learning/2017/03/26/tree/

# ---

#

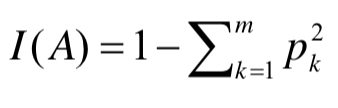

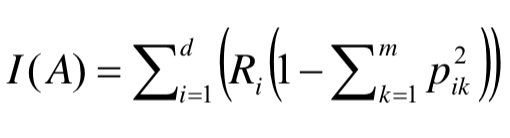

# ### **1. Gini Index**

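# First, let's explain the Gini index, the default criterion of sklearn's DecisionTreeClassifier. <br>

# Here is the formula for the Gini index. <br>

#

# - Square the proportion of each class in the region, sum the squares, and subtract the sum from 1: $G = 1 - \sum_{k} p_k^2$.<br>

#  <br>

# <br>

# - With two or more regions, compute the Gini index of each region and weight it by the region's share of the data: $G_{split} = \sum_{i} \frac{n_i}{n} \left(1 - \sum_{k} p_{ik}^2\right)$.<br>

#

# > Source : https://soobarkbar.tistory.com/17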

#

# <br>

#

# - Maximum (two classes, evenly mixed): 1 - ( (1/2)^2 + (1/2)^2 ) = 0.5

# - Minimum (a pure node): 1 - ( 1^2 + 0^2 ) = 0
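# The two formulas above can be written as a short sketch that works on class counts instead of a DataFrame. The helper names `gini` and `weighted_gini` are our own. The sketch reproduces the 0.5 maximum and the 0 minimum.

```python
def gini(counts):
    """Gini index 1 - sum(p_k^2) of a node, given its class counts."""
    n = sum(counts)
    return 1 - sum((c / n) ** 2 for c in counts)

def weighted_gini(children):
    """Gini index of a split: each child's Gini index weighted by its share of samples."""
    total = sum(sum(c) for c in children)
    return sum(sum(c) / total * gini(c) for c in children)

print(gini([5, 5]))    # two classes, evenly mixed: the maximum 0.5
print(gini([10, 0]))   # pure node: the minimum 0.0
print(weighted_gini([[4, 0], [5, 5]]))  # one pure child plus one 50/50 child
```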

# +

import os

import sys

import warnings

import math

import random

import numpy as np

import pandas as pd

import scipy

from sklearn import tree

from sklearn.tree import DecisionTreeClassifier

import matplotlib as mpl

from matplotlib import pyplot as plt

from plotnine import *

import graphviz

from sklearn.preprocessing import OneHotEncoder

# %matplotlib inline

warnings.filterwarnings("ignore")

# -

tennis = pd.read_csv("data/tennis.csv", index_col = "Day")

tennis

# - Given data like the above, we need to work out which variable is the most certain (least uncertain) and build the tree accordingly.

# <br>

# <br>

#

# Let's write a function that computes the Gini index before any split, and print its value.

def get_unique_dict(df) :
    # Candidate split values for every feature column
    return {x : list(df[x].unique()) for x in ["Outlook", "Temperature", "Humidity", "Wind"]}

def get_gini(df, y_col) :
    # Gini index of a node: 1 - sum over classes of (class proportion)^2
    Ys = df[y_col].value_counts()
    total_row = len(df)
    return 1 - np.sum([np.square(len(df[df[y_col] == y]) / total_row) for y in Ys.index])

def gini_split(df, y_col, col, feature) :
    # Weighted Gini index after a binary split: col == feature vs. col != feature
    r1 = len(df[df[col] == feature])
    Y1 = dict(df[df[col] == feature][y_col].value_counts())
    r2 = len(df[df[col] != feature])
    Y2 = dict(df[df[col] != feature][y_col].value_counts())
    ratio = r1 / (r1 + r2)
    gi1 = 1 - np.sum([np.square(len(df[(df[col] == feature) & (df[y_col] == x)]) / r1) for x, y in Y1.items()])
    gi2 = 1 - np.sum([np.square(len(df[(df[col] != feature) & (df[y_col] == x)]) / r2) for x, y in Y2.items()])
    # Weight each child's Gini index by its share of the rows
    return (ratio * gi1) + ((1-ratio) * gi2)

# Next, we write a function that computes the Gini index after splitting on a given criterion. As an example, we compute it for the split on Outlook == Sunny.

get_gini(tennis, "PlayTennis")

# The Gini index after splitting on Sunny is lower than the Gini index without any split.<br>

# This difference is called Information gain. The tree is built by choosing, at each step, the split with the largest information gain.
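# The information gain described above is the parent's Gini index minus the sample-weighted Gini index of the children. Here is a minimal sketch on class counts. It assumes the classic PlayTennis counts (9 Yes / 5 No overall; Sunny = 2 Yes / 3 No, the rest = 7 Yes / 2 No), which we assume match `tennis.csv`. The helper names are our own.

```python
def gini(counts):
    """Gini index 1 - sum(p_k^2) of a node, given its class counts."""
    n = sum(counts)
    return 1 - sum((c / n) ** 2 for c in counts)

def gini_gain(parent, children):
    """Parent Gini index minus the sample-weighted Gini index of the children."""
    n = sum(parent)
    return gini(parent) - sum(sum(c) / n * gini(c) for c in children)

# Assumed (Yes, No) counts: classic PlayTennis data split on Outlook == Sunny
parent = (9, 5)
sunny, not_sunny = (2, 3), (7, 2)
print(f"{gini_gain(parent, [sunny, not_sunny]):.4f}")
```

# With these counts the gain is about 0.066. If the data is the same, this should agree with the information gain printed by the next cell.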

split_point = ["Outlook", "Sunny"]

print("Gini index after splitting on {} == {} : {}".format(*split_point, gini_split(tennis, "PlayTennis", *split_point)))

print("information gain : {}".format(get_gini(tennis, "PlayTennis") - gini_split(tennis, "PlayTennis", *split_point)))

# - Now we compute the Gini index for every candidate split and choose the one with the largest information gain (i.e. the smallest Gini index) to grow the tree.

y_col = "PlayTennis"

unique_dict = get_unique_dict(tennis)

unique_dict

[f"col : {idx}, split_feature : {v} : gini_index = {gini_split(tennis, y_col, idx, v)}" for idx, val in unique_dict.items() for v in val]

gini_df = pd.DataFrame([[idx, v, gini_split(tennis, y_col, idx, v)] for idx, val in unique_dict.items() for v in val], columns = ["cat1", "cat2", "gini"])

print(gini_df.iloc[gini_df["gini"].argmax()])

print(gini_df.iloc[gini_df["gini"].argmin()])

# ---

# Let's assign random x, y coordinates to see how mixed the classes are.

def generate_xy(df, split_col = None, split_value = None) :
    # Random coordinates for plotting; with a split, matching rows go to the left half (x < 0.5)
    if split_col is None :
        return df.assign(x = [random.random() for _ in range(len(df))], y = [random.random() for _ in range(len(df))])
    else :
        tmp_ = df[df[split_col] == split_value]
        tmp__ = df[df[split_col] != split_value]
        return pd.concat([tmp_.assign(x = [random.random() / 2 for _ in range(len(tmp_))], y = [random.random() for _ in range(len(tmp_))]),
                          tmp__.assign(x = [(random.random() / 2) + 0.5 for _ in range(len(tmp__))], y = [random.random() for _ in range(len(tmp__))])] )

# - Without any split, nothing separates the classes.

p = (

ggplot(data = generate_xy(tennis), mapping = aes(x = "x", y = "y", color = y_col)) +

geom_point() +

theme_bw()

)

p.save(filename = "../assets/img/2021-06-01-Entropy/1.jpg")

#

# - Splitting on Outlook == Overcast cleanly separates the 4 Yes samples.

split_list = ["Outlook", "Overcast"]

p = (

ggplot(data = generate_xy(tennis, *split_list), mapping = aes(x = "x", y = "y", color = y_col)) +

geom_point() +

geom_vline(xintercept = 0.5, color = "red", alpha = 0.7) +

theme_bw()

)

p.save(filename = "../assets/img/2021-06-01-Entropy/2.jpg")

#

# - This is the split on Temperature == Mild, which has the smallest information gain (the largest Gini index).

split_list = ["Temperature", "Mild"]

p = (

ggplot(data = generate_xy(tennis, *split_list), mapping = aes(x = "x", y = "y", color = y_col)) +

geom_point() +

geom_vline(xintercept = 0.5, color = "red", alpha = 0.7) +

theme_bw()

)

p.save(filename = "../assets/img/2021-06-01-Entropy/3.jpg")

#

# - Splitting on Outlook == Sunny and on Outlook == Rain, respectively.

split_list = ["Outlook", "Sunny"]

p = (

ggplot(data = generate_xy(tennis, *split_list), mapping = aes(x = "x", y = "y", color = y_col)) +

geom_point() +

geom_vline(xintercept = 0.5, color = "red", alpha = 0.7) +

theme_bw()

)

p.save(filename = "../assets/img/2021-06-01-Entropy/4.jpg")

#

split_list = ["Outlook", "Rain"]

p = (

ggplot(data = generate_xy(tennis, *split_list), mapping = aes(x = "x", y = "y", color = y_col)) +

geom_point() +

geom_vline(xintercept = 0.5, color = "red", alpha = 0.7) +

theme_bw()

)

p.save("../assets/img/2021-06-01-Entropy/5.jpg")

#

# #### **To compare with an actual tree model, let's one-hot encode the features and fit a tree.**

cols = ["Outlook", "Temperature", "Humidity", "Wind"]

oe = OneHotEncoder()

Xs = pd.get_dummies(tennis[cols])

Ys = tennis[y_col]

dt_gini = DecisionTreeClassifier(criterion="gini")

dt_gini.fit(Xs, Ys)
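# `pd.get_dummies` turns each categorical value into its own 0/1 column. sklearn's tree places thresholds midway between observed feature values, so on a 0/1 column the threshold is 0.5. A rule such as `Outlook_Overcast <= 0.5` is therefore the same binary split as `Outlook != "Overcast"` in `gini_split`. The sketch below shows this on a hypothetical four-row frame; `demo` and `left` are illustrative names.

```python
import pandas as pd

# Hypothetical four-row frame, only to show the encoding
demo = pd.DataFrame({"Outlook": ["Sunny", "Overcast", "Rain", "Overcast"]})
dummies = pd.get_dummies(demo["Outlook"], prefix="Outlook", dtype=int)
print(dummies.columns.tolist())

# A tree rule "Outlook_Overcast <= 0.5" keeps exactly the rows where Outlook != "Overcast"
left = dummies["Outlook_Overcast"] <= 0.5
print((left == (demo["Outlook"] != "Overcast")).all())
```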

def save_graphviz(grp, grp_num) :
    # Save the DOT source and render it to a .jpg image
    p = graphviz.Source(grp)
    p.save(filename = f"../assets/img/2021-06-01-Entropy/{grp_num}")
    p.render(filename = f"../assets/img/2021-06-01-Entropy/{grp_num}", format = "jpg")

grp = tree.export_graphviz(dt_gini, out_file = None, feature_names=Xs.columns,

class_names=dt_gini.classes_,

filled=True)

save_graphviz(grp, 6)

#

# #### **Let's check that this split order is actually correct**

get_gini(tennis, "PlayTennis")

gini_df.iloc[gini_df["gini"].argmin()]

tennis_node1 = tennis[tennis["Outlook"] != "Overcast"]

[print(f"col : {idx}, split_feature : {v} : gini_index = {gini_split(tennis_node1, y_col, idx, v)}") for idx, val in get_unique_dict(tennis_node1).items() for v in val]

gini_df = pd.DataFrame([[idx, v, gini_split(tennis_node1, y_col, idx, v)] for idx, val in get_unique_dict(tennis_node1).items() for v in val], columns = ["cat1", "cat2", "gini"])

print("")

print("gini index : {}".format(get_gini(tennis_node1, y_col)))

print(gini_df.iloc[gini_df["gini"].argmin()])

tennis_node2 = tennis[(tennis["Outlook"] != "Overcast") & (tennis["Humidity"] == "High")]

[print(f"col : {idx}, split_feature : {v} : gini_index = {gini_split(tennis_node2, y_col, idx, v)}") for idx, val in get_unique_dict(tennis_node2).items() for v in val]

gini_df = pd.DataFrame([[idx, v, gini_split(tennis_node2, y_col, idx, v)] for idx, val in get_unique_dict(tennis_node2).items() for v in val], columns = ["cat1", "cat2", "gini"])

print("")

print("gini index : {}".format(get_gini(tennis_node2, y_col)))

gini_df.iloc[gini_df["gini"].argmin()]

# #### - A node whose Gini index is 0 becomes a terminal (leaf) node. (With larger datasets, pruning is used to prevent overfitting.)

tennis_ter1 = tennis[tennis["Outlook"] == "Overcast"]

[print(f"col : {idx}, split_feature : {v} : gini_index = {gini_split(tennis_ter1, y_col, idx, v)}") for idx, val in get_unique_dict(tennis_ter1).items() for v in val]

gini_df = pd.DataFrame([[idx, v, gini_split(tennis_ter1, y_col, idx, v)] for idx, val in get_unique_dict(tennis_ter1).items() for v in val], columns = ["cat1", "cat2", "gini"])

gini_df.iloc[gini_df["gini"].argmin()]

# ---

#

# ### **2. Entropy**

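# Next is Entropy, which trees such as ID3 and C4.5 use to measure information gain.<br>

# Here is the formula for Entropy. <br>

#

# - Multiply each class proportion by its base-2 logarithm, sum over the classes, and negate the sum: $E = -\sum_{k} p_k \log_2 p_k$.<br>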

#

# <br>

max_entropy = (-1 * ((0.5*np.log2(0.5)) + (0.5*np.log2(0.5))))

min_entropy = (-1 * ((1*np.log2(1))))

print(f"Maximum of Entropy : {max_entropy}")

print(f"Minimum of Entropy : {min_entropy}")
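# You can check the same two-class bounds with a general entropy function on a probability vector. The name `entropy_from_probs` is our own. The sketch also shows that with $k$ equally likely classes the maximum is $\log_2 k$, and it treats $0 \cdot \log_2 0$ as 0. Note that the `min_entropy` expression above negates `0.0` and therefore prints as `-0.0`. Adding `0.0` normalizes the sign.

```python
import numpy as np

def entropy_from_probs(p):
    """Entropy in bits of a probability vector; terms with p = 0 contribute 0."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]  # drop zeros so log2(0) is never evaluated
    return float(-(p * np.log2(p)).sum()) + 0.0  # "+ 0.0" turns -0.0 into 0.0

print(entropy_from_probs([0.5, 0.5]))   # two-class maximum
print(entropy_from_probs([1.0, 0.0]))   # pure node, the minimum
print(entropy_from_probs([0.25] * 4))   # four equally likely classes: log2(4)
```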

tennis

def get_entropy(df, y_col) :
    # Entropy of a node: -sum over classes of p * log2(p)
    Ys = df[y_col].value_counts()
    total_row = len(df)
    return -1 * np.sum([(len(df[df[y_col] == y]) / total_row) * np.log2(len(df[df[y_col] == y]) / total_row) for y in Ys.index])

get_entropy(tennis, y_col)

def entropy_split(df, y_col, col, feature) :
    # Weighted Entropy after a binary split: col == feature vs. col != feature
    r1 = len(df[df[col] == feature])
    Y1 = dict(df[df[col] == feature][y_col].value_counts())
    r2 = len(df[df[col] != feature])
    Y2 = dict(df[df[col] != feature][y_col].value_counts())
    ratio = r1 / (r1 + r2)
    # Sum of p * log2(p) in each child (negated at the end)
    ent1 = np.sum([(len(df[(df[col] == feature) & (df[y_col] == x)]) / r1) * np.log2(len(df[(df[col] == feature) & (df[y_col] == x)]) / r1) for x, y in Y1.items()])
    ent2 = np.sum([(len(df[(df[col] != feature) & (df[y_col] == x)]) / r2) * np.log2(len(df[(df[col] != feature) & (df[y_col] == x)]) / r2) for x, y in Y2.items()])
    return -1 * ((ratio * ent1) + ((1-ratio) * ent2))

entropy_split(tennis, "PlayTennis", "Outlook", "Sunny")

# As with the Gini index, Entropy after splitting on Sunny is lower than Entropy without any split.<br>

# Building the tree from this difference (Information gain) produces tree structures such as ID3 and C4.5.
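# You can also sketch the entropy version of information gain on class counts. Assuming the classic PlayTennis counts (9 Yes / 5 No overall), the sketch compares the Sunny split with the Overcast split. Overcast gives the larger gain, which is consistent with Overcast being the best first binary split. The helper names are our own.

```python
from math import log2

def entropy_of_counts(counts):
    """Entropy in bits of a node, given its class counts (empty classes are skipped)."""
    n = sum(counts)
    return -sum(c / n * log2(c / n) for c in counts if c > 0)

def entropy_gain(parent, children):
    """Parent entropy minus the sample-weighted entropy of the children."""
    n = sum(parent)
    return entropy_of_counts(parent) - sum(sum(c) / n * entropy_of_counts(c) for c in children)

# Assumed (Yes, No) counts from the classic PlayTennis data
parent = (9, 5)
sunny_gain = entropy_gain(parent, [(2, 3), (7, 2)])     # Sunny vs. not Sunny
overcast_gain = entropy_gain(parent, [(4, 0), (5, 5)])  # Overcast vs. not Overcast
print(f"Sunny: {sunny_gain:.4f}, Overcast: {overcast_gain:.4f}")
```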

[f"col : {idx}, split_feature : {v} : Entropy = {entropy_split(tennis, y_col, idx, v)}" for idx, val in get_unique_dict(tennis).items() for v in val]

entropy_df = pd.DataFrame([[idx, v, entropy_split(tennis, y_col, idx, v)] for idx, val in unique_dict.items() for v in val], columns = ["cat1", "cat2", "entropy"])

print(entropy_df.iloc[entropy_df["entropy"].argmin()])

print(entropy_df.iloc[entropy_df["entropy"].argmax()])

# #### **To compare with an actual tree model, let's fit a tree on the one-hot encoded features.**

dt_entropy = DecisionTreeClassifier(criterion="entropy")

dt_entropy.fit(Xs, Ys)

grp = tree.export_graphviz(dt_entropy, out_file = None, feature_names=Xs.columns,

class_names=dt_entropy.classes_,

filled=True)

save_graphviz(grp, 7)

#

# #### **Let's check that this split order is actually correct**

get_entropy(tennis, "PlayTennis")

entropy_df.iloc[entropy_df["entropy"].argmin()]

tennis_ter1 = tennis[tennis["Outlook"] == "Overcast"]

[print(f"col : {idx}, split_feature : {v} : entropy = {entropy_split(tennis_ter1, y_col, idx, v)}") for idx, val in get_unique_dict(tennis_ter1).items() for v in val]

entropy_df = pd.DataFrame([[idx, v, entropy_split(tennis_ter1, y_col, idx, v)] for idx, val in get_unique_dict(tennis_ter1).items() for v in val], columns = ["cat1", "cat2", "entropy"])

entropy_df.iloc[entropy_df["entropy"].argmin()]

tennis_ter1 = tennis[tennis["Outlook"] != "Overcast"]

[print(f"col : {idx}, split_feature : {v} : entropy = {entropy_split(tennis_ter1, y_col, idx, v)}") for idx, val in get_unique_dict(tennis_ter1).items() for v in val]

entropy_df = pd.DataFrame([[idx, v, entropy_split(tennis_ter1, y_col, idx, v)] for idx, val in get_unique_dict(tennis_ter1).items() for v in val], columns = ["cat1", "cat2", "entropy"])

entropy_df.iloc[entropy_df["entropy"].argmin()]

# ---

# ### **Finally, let's look at how the trees built with the Gini index and with Entropy split their nodes, and wrap up the post.**

#

# <br>

# <br>

#

# ---

#

# <br>

#

# - code : [https://github.com/Chanjun-kim/Chanjun-kim.github.io/blob/main/_ipynb/2021-06-01-Entropy.ipynb](https://github.com/Chanjun-kim/Chanjun-kim.github.io/blob/main/_ipynb/2021-06-01-Entropy.ipynb) <br>

# - References : [https://m.blog.naver.com/PostView.naver?isHttpsRedirect=true&blogId=ehdrndd&logNo=221158124011](https://m.blog.naver.com/PostView.naver?isHttpsRedirect=true&blogId=ehdrndd&logNo=221158124011)

| _ipynb/2021-06-01-Entropy.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# name: python3

# ---

# + id="KWRAbsYfeuQn" colab_type="code" outputId="67dc3c10-c34c-4fc5-fe46-d89eb7499296" colab={"base_uri": "https://localhost:8080/", "height": 73}

import torch

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

# + id="6tkM3eZGfObT" colab_type="code" outputId="ae73e9d8-8b1f-4844-b16d-35f31b4db3f7" colab={"base_uri": "https://localhost:8080/", "height": 237}

data = pd.read_csv('Admission_Predict.csv', index_col=0)

data.head()

# + id="9Yb8b2rFfhNj" colab_type="code" outputId="5023441a-5dee-442c-fc91-03f535e49a1a" colab={"base_uri": "https://localhost:8080/", "height": 35}

data.shape

# + id="hv3oA4QkfwAk" colab_type="code" outputId="03e59d28-63c8-4c00-9338-d87f7bea74b9" colab={"base_uri": "https://localhost:8080/", "height": 300}

data.describe()

# + id="dWnd5ID-ixMb" colab_type="code" outputId="b34221c5-c503-4bfc-b705-1cb9b5c94a3a" colab={"base_uri": "https://localhost:8080/", "height": 128}

# !pip3 install --upgrade pandas

# + id="UiA1Bb3afx3w" colab_type="code" outputId="349e166f-187c-43df-de1e-43763e0686e3" colab={"base_uri": "https://localhost:8080/", "height": 206}

data = data.rename(index=str, columns={"Chance of Admit " : "admit_probability"})

data = data.reset_index()

data = data[['GRE Score', 'TOEFL Score', 'University Rating', 'LOR', 'SOP', 'CGPA', 'Research', 'admit_probability']]

data.head()

# + id="GSWnEB3egrRy" colab_type="code" outputId="7e758766-bc73-4486-8276-7d79ed8d31c1" colab={"base_uri": "https://localhost:8080/", "height": 513}

plt.figure(figsize = (8,8))

fig = sns.regplot(x='GRE Score', y='TOEFL Score', data=data)

plt.title("GRE v/s TOEFL Score")

plt.show()

# + id="YKnNpLs9kkuP" colab_type="code" outputId="c77d5569-a383-4ca1-cfe1-1d056930dc4f" colab={"base_uri": "https://localhost:8080/", "height": 513}

plt.figure(figsize = (8,8))

fig = sns.regplot(x='GRE Score', y='CGPA', data=data)

plt.title("GRE Score v/s CGPA")

plt.show()

# + id="hTtP96Tzlg1s" colab_type="code" outputId="95ed8581-b575-4a87-a435-61aedaf8ca7e" colab={"base_uri": "https://localhost:8080/", "height": 514}

plt.figure(figsize = (8,8))

fig = sns.scatterplot(x='admit_probability', y='CGPA', data=data, hue='Research')

plt.title("Scatterplot")

plt.show()

# + id="NwPubRvXlqsK" colab_type="code" outputId="97c6450a-cf14-42c4-db90-88e1db861a15" colab={"base_uri": "https://localhost:8080/", "height": 684}

plt.figure(figsize = (10,10))

fig = sns.heatmap(data.corr(), annot=True, linewidths=0.05, fmt='.2f')

plt.title("Heatmap")

plt.show()

# + [markdown] id="XiONHwAVp7c2" colab_type="text"

# # Data Preprocessing

# + id="1PyGC_ZsopzU" colab_type="code" colab={}

from sklearn import preprocessing

# + id="AEqJXE3xqClI" colab_type="code" colab={}

data[['GRE Score', 'TOEFL Score', 'LOR', 'SOP', 'CGPA']] = \

preprocessing.scale(data[['GRE Score', 'TOEFL Score', 'LOR', 'SOP', 'CGPA']])

# + id="hWL4ba0gqdYn" colab_type="code" outputId="bc091656-a260-464d-a7a6-3bb29539853f" colab={"base_uri": "https://localhost:8080/", "height": 363}

data.sample(10)

# + id="_3HZX3PXqgQf" colab_type="code" colab={}

col = ['GRE Score', 'TOEFL Score', 'LOR', 'SOP', 'CGPA', 'Research']

features = data[col]

# + id="MvScGNqTq0Dj" colab_type="code" outputId="74e1a5d4-00d8-4459-b07f-a88df61ef6de" colab={"base_uri": "https://localhost:8080/", "height": 206}

features.head()

# + id="O1YiruP9q5uQ" colab_type="code" colab={}

target = data[['admit_probability']]

# + id="MGv2czmHrB_N" colab_type="code" colab={}

y = target.copy()

# + id="1U62J-6OrG76" colab_type="code" outputId="e0e0f410-cb4e-4120-8ee9-9ea23386fe2c" colab={"base_uri": "https://localhost:8080/", "height": 424}

# Bin the admission probability into 3 classes: 2 (>= 0.85), 1 (>= 0.65), 0 (< 0.65)
y['admit_probability'] = np.select(
    [target['admit_probability'] >= 0.85, target['admit_probability'] >= 0.65],
    [2, 1],
    default=0,
)
y

# + id="GFLAEjF_rkhs" colab_type="code" colab={}

target = y

# + id="CGlU8xvYrn3x" colab_type="code" outputId="c8295a98-7c46-4679-d9dd-341f649238fc" colab={"base_uri": "https://localhost:8080/", "height": 235}

target['admit_probability'].unique()

# + id="T8PCIEUzrutl" colab_type="code" colab={}

from sklearn.model_selection import train_test_split

# + id="O5JRZmqEr74q" colab_type="code" colab={}

X_train, x_test, Y_train, y_test = train_test_split(features, target, test_size=0.2)

# + id="wDoseJgYsIK0" colab_type="code" colab={}

xtrain = torch.from_numpy(X_train.values).float()

xtest = torch.from_numpy(x_test.values).float()

# + id="P8xHDxl5sphm" colab_type="code" outputId="d50a6855-8f67-4cc7-8552-d92777f7309b" colab={"base_uri": "https://localhost:8080/", "height": 35}

xtrain.shape

# + id="-5BnkQJBstPT" colab_type="code" outputId="b61fefa0-3510-488f-fb61-0a81fd4b7a1b" colab={"base_uri": "https://localhost:8080/", "height": 35}

xtrain.dtype

# + id="4dWDyiuHsuxe" colab_type="code" colab={}

# Flatten the (n, 1) label column to shape (n,), as NLLLoss expects
ytrain = torch.from_numpy(Y_train.values.ravel()).long()

ytest = torch.from_numpy(y_test.values.ravel()).long()

# + [markdown] id="b84js1JBt9RO" colab_type="text"

# # Making the model

# + id="JGOSf7qYuYpZ" colab_type="code" colab={}

import torch.nn as nn

import torch.nn.functional as F

# + id="c28rnvpvtcil" colab_type="code" colab={"base_uri": "https://localhost:8080/", "height": 35} outputId="885be287-62e5-431a-b461-2ffc16986a59"

input_size = xtrain.shape[1]  # number of input features (columns), not number of rows

output_size = len(target['admit_probability'].unique())

# xtrain = xtrain.unsqueeze_(2)

input_size

# + id="Uet491eLuSRI" colab_type="code" colab={}

class Net(nn.Module):
    def __init__(self, hidden_size, activation_fn = 'relu', apply_dropout=False):
        super(Net, self).__init__()
        # input_size / output_size are the globals defined in the previous cell
        self.fc1 = nn.Linear(input_size, hidden_size)
        self.fc2 = nn.Linear(hidden_size, hidden_size)
        self.fc3 = nn.Linear(hidden_size, output_size)
        self.hidden_size = hidden_size
        self.activation_fn = activation_fn
        self.dropout = None
        if apply_dropout:
            self.dropout = nn.Dropout(0.2)

    def forward(self, x):
        activation_fn = None
        if self.activation_fn == 'sigmoid':
            activation_fn = torch.sigmoid
        elif self.activation_fn == 'tanh':
            activation_fn = torch.tanh
        elif self.activation_fn == 'relu':
            activation_fn = F.relu
        x = activation_fn(self.fc1(x))
        x = activation_fn(self.fc2(x))
        if self.dropout is not None:
            x = self.dropout(x)
        x = self.fc3(x)
        # Log-probabilities, to be paired with nn.NLLLoss
        return F.log_softmax(x, dim=-1)

# + id="iZA6zASk0cT5" colab_type="code" colab={}

import torch.optim as optim

# + id="s8FTknbH05yl" colab_type="code" colab={}

def train_and_evaluate_model(model, learn_rate=0.001):
    epoch_data = []
    epochs = 1001
    optimizer = optim.Adam(model.parameters(), lr=learn_rate)
    loss_fn = nn.NLLLoss()
    test_accuracy = 0.0
    for epoch in range(1, epochs):
        # One full-batch training step
        optimizer.zero_grad()
        model.train()
        ypred = model(xtrain)
        loss = loss_fn(ypred, ytrain)
        loss.backward()
        optimizer.step()

        # Evaluate on the test set without tracking gradients
        model.eval()
        with torch.no_grad():
            ypred_test = model(xtest)
            loss_test = loss_fn(ypred_test, ytest)
        _, pred = ypred_test.max(1)
        test_accuracy = pred.eq(ytest).sum().item() / y_test.values.size
        epoch_data.append([epoch, loss.item(), loss_test.item(), test_accuracy])

        if epoch % 100 == 0:
            print('epoch - %d (%d%%) train loss - %.2f test loss - %.2f test accuracy - %.4f'
                  % (epoch, epoch / (epochs - 1) * 100, loss.item(), loss_test.item(),
                     test_accuracy))

    return {
        'model' : model,
        'epoch_data' : epoch_data,
        'num_epochs' : epochs,
        'optimizer' : optimizer,
        'loss_fn' : loss_fn,
        'test_accuracy' : test_accuracy,
        '_, pred' : ypred_test.max(1),
        'actual_test_label' : ytest,
    }
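# `Net.forward` returns `log_softmax`, and `train_and_evaluate_model` uses `nn.NLLLoss`. Together they compute the cross-entropy loss; PyTorch's `nn.CrossEntropyLoss` combines the same two steps. The NumPy-only sketch below (no PyTorch) checks that identity on hypothetical logits. All function names in it are our own.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax along the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def nll_loss(log_probs, targets):
    """Mean negative log-probability of each row's target class."""
    return float(-log_probs[np.arange(len(targets)), targets].mean())

def cross_entropy(logits, targets):
    """Cross-entropy computed directly from softmax probabilities."""
    probs = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)
    return float(-np.log(probs[np.arange(len(targets)), targets]).mean())

logits = np.array([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])  # hypothetical scores, 3 classes
targets = np.array([0, 2])
print(nll_loss(log_softmax(logits), targets), cross_entropy(logits, targets))
```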

# + [markdown] id="zuocRRPP5hGB" colab_type="text"

# # Training the model

# + id="5u4n9Dtl5PJe" colab_type="code" outputId="3ee678ae-3279-4361-a5ba-68c46d111523" colab={"base_uri": "https://localhost:8080/", "height": 108}

signet = Net(hidden_size=50, activation_fn = 'sigmoid')

signet

# + id="4fg8bGhx8AGO" colab_type="code" colab={}

result_signet = train_and_evaluate_model(signet)

# + id="CQj6BnxK8IgA" colab_type="code" colab={}

| AdmissionPredictorNN.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# name: python3

# ---

# + id="Agebac83jwLd"

import pandas as pd

import numpy as np

import io

import matplotlib.pyplot as plt

from google.colab import files

# + colab={"resources": {"http://localhost:8080/nbextensions/google.colab/files.js": {"data": "<KEY>", "ok": true, "headers": [["content-type", "application/javascript"]], "status": 200, "status_text": ""}}, "base_uri": "https://localhost:8080/", "height": 72} id="Ze5DNKHyl2-U" outputId="2b3e03d8-4389-44da-aa5f-3cf994b101f7"

uploaded = files.upload()

# + colab={"base_uri": "https://localhost:8080/", "height": 224} id="IGVoGF6jmdGD" outputId="c5d0a36a-a812-42df-8e40-d06b6dad2235"

df_original = pd.read_csv(io.BytesIO(uploaded['property-tax-report.csv']))

df = df_original

df.head()

# + id="VYWKuHKNpc-O" colab={"base_uri": "https://localhost:8080/", "height": 224} outputId="5972ddcb-6e10-4d55-f995-70a0ed2ee612"

df['2021'] = 0

df['2020'] = 0

df['2019'] = 0

df.head()

# + id="7CaYdSq5qOh0"

df.dropna(inplace=True)

indexes_to_drop = []

for index, row in df.iterrows():
    # Spread each row's current/previous land value into the matching year columns
    if row['TAX_ASSESSMENT_YEAR'] == 2021:
        df.at[index, '2021'] = row['CURRENT_LAND_VALUE']
        df.at[index, '2020'] = row['PREVIOUS_LAND_VALUE']
    elif row['TAX_ASSESSMENT_YEAR'] == 2020:
        df.at[index, '2020'] = row['CURRENT_LAND_VALUE']
        df.at[index, '2019'] = row['PREVIOUS_LAND_VALUE']
    else:
        continue

df.head()

# + id="PpLhG80aGCey"

count_2019_one = 0

count_2020_one = 0

count_2021_one = 0

sum_2019_one = 0

sum_2020_one = 0

sum_2021_one = 0

count_2019_two = 0

count_2020_two = 0

count_2021_two = 0

sum_2019_two = 0

sum_2020_two = 0

sum_2021_two = 0

count_2019_multi = 0

count_2020_multi = 0

count_2021_multi = 0

sum_2019_multi = 0

sum_2020_multi = 0

sum_2021_multi = 0

for index, row in df.iterrows():
    # Accumulate per-zone sums and counts, skipping years with no value (0)
    if row['ZONING_CLASSIFICATION'] == 'One-Family Dwelling':
        if row['2019']:
            count_2019_one += 1
            sum_2019_one += row['2019']
        if row['2020']:
            count_2020_one += 1
            sum_2020_one += row['2020']
        if row['2021']:
            count_2021_one += 1
            sum_2021_one += row['2021']
    elif row['ZONING_CLASSIFICATION'] == 'Two-Family Dwelling':
        if row['2019']:
            count_2019_two += 1
            sum_2019_two += row['2019']
        if row['2020']:
            count_2020_two += 1
            sum_2020_two += row['2020']
        if row['2021']:
            count_2021_two += 1
            sum_2021_two += row['2021']
    elif row['ZONING_CLASSIFICATION'] == 'Multiple Dwelling':
        if row['2019']:
            count_2019_multi += 1
            sum_2019_multi += row['2019']
        if row['2020']:
            count_2020_multi += 1
            sum_2020_multi += row['2020']
        if row['2021']:
            count_2021_multi += 1
            sum_2021_multi += row['2021']

avg_2019_one = sum_2019_one/count_2019_one

avg_2020_one = sum_2020_one/count_2020_one

avg_2021_one = sum_2021_one/count_2021_one

avg_2019_two = sum_2019_two/count_2019_two

avg_2020_two = sum_2020_two/count_2020_two

avg_2021_two = sum_2021_two/count_2021_two

avg_2019_multi = sum_2019_multi/count_2019_multi

avg_2020_multi = sum_2020_multi/count_2020_multi

avg_2021_multi = sum_2021_multi/count_2021_multi

data = {'Zone_Classification':['One-Family Dwelling', 'Two-Family Dwelling', 'Multiple Dwelling'],

'2019':[avg_2019_one, avg_2019_two, avg_2019_multi], '2020':[avg_2020_one, avg_2020_two, avg_2020_multi], '2021':[avg_2021_one, avg_2021_two, avg_2021_multi]}
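# The loop above computes, per zoning class and year, the mean of the non-zero values. Here is a sketch of the same aggregation using pandas `where` plus `groupby`. The toy frame `toy_df` is hypothetical and only mimics the columns built earlier. There are two differences from the loop. A zone/year with no non-zero values yields NaN here, where the loop would raise `ZeroDivisionError`. Also, `groupby` returns every zoning class present, so you may want to select the three classes of interest with `.loc`.

```python
import pandas as pd

# Hypothetical rows shaped like `df` after the '2019'/'2020'/'2021' columns are filled
toy_df = pd.DataFrame({
    "ZONING_CLASSIFICATION": ["One-Family Dwelling", "One-Family Dwelling", "Multiple Dwelling"],
    "2019": [100, 0, 300],
    "2020": [110, 200, 0],
    "2021": [0, 220, 330],
})

years = ["2019", "2020", "2021"]
# Treat 0 as "no value for this year" (as the loop does) by masking it to NaN,
# then average per zoning class; mean() skips NaN
averages = (
    toy_df[years]
    .where(toy_df[years] != 0)
    .groupby(toy_df["ZONING_CLASSIFICATION"])
    .mean()
)
print(averages)
```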

# + id="c6S2Knn4qObz" colab={"base_uri": "https://localhost:8080/", "height": 142} outputId="48bea29f-6251-4308-8e4d-66fe43b6b6d0"

results = pd.DataFrame(data)

results

# + id="87f20zVdqOT0" colab={"base_uri": "https://localhost:8080/", "height": 293} outputId="9d841cbc-063d-4f5a-f0c8-cf32420afcf4"

x = np.arange(3)

y1 = [avg_2019_one, avg_2020_one, avg_2021_one]

y2 = [avg_2019_two, avg_2020_two, avg_2021_two]

y3 = [avg_2019_multi, avg_2020_multi, avg_2021_multi]

width = 0.20

plt.bar(x-0.2, y1, width)

plt.bar(x, y2, width)

plt.bar(x+0.2, y3, width)

plt.xticks(x, ['2019', '2020', '2021'])

plt.legend(['One-Family', 'Two-Family', 'Multiple'])

plt.ylabel("Land Values (xe6)")

# + id="UjuqT2EYqODg"

| notebooks/LandCostAveragePerYear.ipynb |

# +

# Copyright 2010-2018 Google LLC

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# [START program]

"""Simple Vehicles Routing Problem."""

# [START import]

from __future__ import print_function

from ortools.constraint_solver import routing_enums_pb2

from ortools.constraint_solver import pywrapcp

# [END import]

# [START data_model]

def create_data_model():
    """Stores the data for the problem."""
    data = {}
    data['distance_matrix'] = [
        [
            0, 548, 776, 696, 582, 274, 502, 194, 308, 194, 536, 502, 388, 354,
            468, 776, 662
        ],
        [
            548, 0, 684, 308, 194, 502, 730, 354, 696, 742, 1084, 594, 480, 674,
            1016, 868, 1210
        ],
        [
            776, 684, 0, 992, 878, 502, 274, 810, 468, 742, 400, 1278, 1164,
            1130, 788, 1552, 754
        ],
        [
            696, 308, 992, 0, 114, 650, 878, 502, 844, 890, 1232, 514, 628, 822,
            1164, 560, 1358
        ],
        [
            582, 194, 878, 114, 0, 536, 764, 388, 730, 776, 1118, 400, 514, 708,
            1050, 674, 1244
        ],
        [
            274, 502, 502, 650, 536, 0, 228, 308, 194, 240, 582, 776, 662, 628,
            514, 1050, 708
        ],
        [
            502, 730, 274, 878, 764, 228, 0, 536, 194, 468, 354, 1004, 890, 856,
            514, 1278, 480
        ],
        [
            194, 354, 810, 502, 388, 308, 536, 0, 342, 388, 730, 468, 354, 320,
            662, 742, 856
        ],
        [
            308, 696, 468, 844, 730, 194, 194, 342, 0, 274, 388, 810, 696, 662,
            320, 1084, 514
        ],
        [
            194, 742, 742, 890, 776, 240, 468, 388, 274, 0, 342, 536, 422, 388,
            274, 810, 468
        ],
        [
            536, 1084, 400, 1232, 1118, 582, 354, 730, 388, 342, 0, 878, 764,
            730, 388, 1152, 354
        ],
        [
            502, 594, 1278, 514, 400, 776, 1004, 468, 810, 536, 878, 0, 114,
            308, 650, 274, 844
        ],
        [
            388, 480, 1164, 628, 514, 662, 890, 354, 696, 422, 764, 114, 0, 194,
            536, 388, 730
        ],
        [
            354, 674, 1130, 822, 708, 628, 856, 320, 662, 388, 730, 308, 194, 0,
            342, 422, 536
        ],
        [
            468, 1016, 788, 1164, 1050, 514, 514, 662, 320, 274, 388, 650, 536,
            342, 0, 764, 194
        ],
        [
            776, 868, 1552, 560, 674, 1050, 1278, 742, 1084, 810, 1152, 274,
            388, 422, 764, 0, 798
        ],
        [
            662, 1210, 754, 1358, 1244, 708, 480, 856, 514, 468, 354, 844, 730,
            536, 194, 798, 0
        ],
    ]
    data['num_vehicles'] = 4
    # [START starts_ends]
    data['starts'] = [1, 2, 15, 16]
    data['ends'] = [0, 0, 0, 0]
    # [END starts_ends]
    return data

# [END data_model]

# [START solution_printer]

def print_solution(data, manager, routing, solution):
    """Prints solution on console."""
    max_route_distance = 0
    for vehicle_id in range(data['num_vehicles']):
        index = routing.Start(vehicle_id)
        plan_output = 'Route for vehicle {}:\n'.format(vehicle_id)
        route_distance = 0
        while not routing.IsEnd(index):
            plan_output += ' {} -> '.format(manager.IndexToNode(index))
            previous_index = index
            index = solution.Value(routing.NextVar(index))
            route_distance += routing.GetArcCostForVehicle(
                previous_index, index, vehicle_id)
        plan_output += '{}\n'.format(manager.IndexToNode(index))
        plan_output += 'Distance of the route: {}m\n'.format(route_distance)
        print(plan_output)
        max_route_distance = max(route_distance, max_route_distance)
    print('Maximum of the route distances: {}m'.format(max_route_distance))

# [END solution_printer]

"""Entry point of the program."""

# Instantiate the data problem.

# [START data]

data = create_data_model()

# [END data]

# Create the routing index manager.

# [START index_manager]

manager = pywrapcp.RoutingIndexManager(len(data['distance_matrix']),

data['num_vehicles'], data['starts'],

data['ends'])

# [END index_manager]

# Create Routing Model.

# [START routing_model]

routing = pywrapcp.RoutingModel(manager)

# [END routing_model]

# Create and register a transit callback.

# [START transit_callback]

def distance_callback(from_index, to_index):
    """Returns the distance between the two nodes."""
    # Convert from routing variable Index to distance matrix NodeIndex.
    from_node = manager.IndexToNode(from_index)
    to_node = manager.IndexToNode(to_index)
    return data['distance_matrix'][from_node][to_node]

transit_callback_index = routing.RegisterTransitCallback(distance_callback)

# [END transit_callback]

# Define cost of each arc.

# [START arc_cost]

routing.SetArcCostEvaluatorOfAllVehicles(transit_callback_index)

# [END arc_cost]

# Add Distance constraint.

# [START distance_constraint]

dimension_name = 'Distance'

routing.AddDimension(

transit_callback_index,

0, # no slack

2000, # vehicle maximum travel distance

True, # start cumul to zero

dimension_name)

distance_dimension = routing.GetDimensionOrDie(dimension_name)

distance_dimension.SetGlobalSpanCostCoefficient(100)

# [END distance_constraint]
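# As we read the OR-tools routing documentation, `SetGlobalSpanCostCoefficient(100)` adds 100 × (largest route-end cumul - smallest route-start cumul) of the Distance dimension to the total arc cost. Because the start cumul is fixed at zero, that term becomes 100 × (longest route distance), which pushes the solver to balance the routes. The pure-Python sketch below evaluates that objective for given routes. It does not call OR-tools, and `route_distance`, `objective`, and the 3-node matrix are hypothetical.

```python
def route_distance(route, distance_matrix):
    """Sum of arc distances along a route given as a list of node indices."""
    return sum(distance_matrix[a][b] for a, b in zip(route, route[1:]))

def objective(routes, distance_matrix, span_coefficient=100):
    """Total arc cost plus span_coefficient * global span of the distance dimension.

    With every route's start cumul fixed at 0 (and no slack), the global span is
    the longest route distance.
    """
    distances = [route_distance(r, distance_matrix) for r in routes]
    return sum(distances) + span_coefficient * max(distances)

# Hypothetical 3-node matrix; two vehicles start at nodes 1 and 2 and end at node 0
matrix = [[0, 4, 6], [4, 0, 3], [6, 3, 0]]
routes = [[1, 0], [2, 0]]
print(objective(routes, matrix))  # 10 + 100 * 6 = 610
```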

# Setting first solution heuristic.

# [START parameters]

search_parameters = pywrapcp.DefaultRoutingSearchParameters()

search_parameters.first_solution_strategy = (

routing_enums_pb2.FirstSolutionStrategy.PATH_CHEAPEST_ARC)

# [END parameters]

# Solve the problem.

# [START solve]

solution = routing.SolveWithParameters(search_parameters)

# [END solve]

# Print solution on console.

# [START print_solution]

if solution:

print_solution(data, manager, routing, solution)

# [END print_solution]

| examples/notebook/constraint_solver/vrp_starts_ends.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# + [markdown] deletable=true editable=true

# # Introduction to NumPy

# + deletable=true editable=true

# Standard import

import numpy as np

# + [markdown] deletable=true editable=true

# ### Creating an ndarray

# + deletable=true editable=true

np.array([1,2,3,4])

# + deletable=true editable=true

x = _

# + deletable=true editable=true

y = np.array([[1.,2,3],[4,5,6]])

y

# + [markdown] deletable=true editable=true

# The first things to check on an ndarray: shape and dtype

# + deletable=true editable=true

x.shape

# + deletable=true editable=true

y.shape

# + deletable=true editable=true

x.dtype

# + deletable=true editable=true

y.dtype

# + [markdown] deletable=true editable=true

# ### Sometimes it helps to look at a plot

# + deletable=true editable=true

# import matplotlib

# %matplotlib inline

import matplotlib.pyplot as plt

# Plot

plt.plot(x, 'x');

# + [markdown] deletable=true editable=true

# ### There are many other ways to create arrays

# + deletable=true editable=true

# Create an array of zeros

np.zeros_like(y)

# + deletable=true editable=true

np.zeros((10,10))

# + deletable=true editable=true

# Similar to range, but the step can be a float

x = np.arange(0, 10, 0.1)

# Random numbers

y = np.random.uniform(-1,1, size=x.shape)

plt.plot(x, y)

# + [markdown] deletable=true editable=true

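# This is a pile of data

# * What information does the data carry?

# * What constraints does it have?

# * What do those constraints mean? What do they buy us?

# * What similar things have you encountered before?

# * What can it be applied to?

# * How can it be used (what operations)?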

# + [markdown] deletable=true editable=true

# The simplest computation is **element-wise computation**

# see also `np.vectorize`
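The sketch below contrasts two ways of going element-wise. `np.vectorize` wraps a plain Python scalar function so that it runs on each element in turn. This is convenient, but underneath it is still a Python-level loop. Writing the same logic with NumPy's own element-wise operations, such as `np.where`, keeps the work in compiled code. `clipped_square` is a made-up example function.

```python
import numpy as np

def clipped_square(v):
    """Plain Python scalar function with a branch; not array-aware on its own."""
    return v * v if v > 0 else 0.0

# np.vectorize applies clipped_square to each element; otypes fixes the output dtype
vec_clipped_square = np.vectorize(clipped_square, otypes=[float])

x = np.linspace(-2, 2, 5)                 # [-2., -1., 0., 1., 2.]
y_vectorized = vec_clipped_square(x)
y_native = np.where(x > 0, x * x, 0.0)    # same result using built-in element-wise ops
print(y_vectorized)
```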

# + deletable=true editable=true

x = np.linspace(0, 2* np.pi, 1000)

plt.plot(x, np.sin(x))

# + [markdown] deletable=true editable=true

# ## Q0:

# Plot $y=x^2+1$ or another function of your choice

# Use

# ```python

# # plt.plot?

# ```

# to see what other parameters `plot` accepts

# + deletable=true editable=true

# You can use %run -i to run the reference solution

# %run -i q0.py

# + deletable=true editable=true

# Or load the reference solution to read it

# #%load q0.py

# + [markdown] deletable=true editable=true

# ## Q1:

# Try it with an image.

# Use

# ```python

# from PIL import Image

# # Load a PIL Image (this picture comes from openclipart, CC0)

# img = Image.open('img/Green-Rolling-Hills-Landscape-800px.png')

# # Convert the image to an ndarray

# img_array = np.array(img)

# # Convert the ndarray back to a PIL Image

# Image.fromarray(img_array)

# ```

# and look at the image's contents, dtype, and shape

# + deletable=true editable=true

# Reference solution

# #%load q1.py

# + [markdown] deletable=true editable=true

# ### Indexing

# Indexing works much like list indexing

# + deletable=true editable=true

a = np.arange(30)

a

# + deletable=true editable=true

a[5]

# + deletable=true editable=true

a[3:7]

# + deletable=true editable=true

# Select every element at an odd index

a[1::2]

# + deletable=true editable=true

# Slices can also be assigned to

a[1::2] = -1

a

# + deletable=true editable=true

# Or

a[1::2] = -a[::2]-1

a

# + [markdown] deletable=true editable=true

# ## Q2

# Given

# ```python

# x = np.arange(30)

# a = np.arange(30)

# a[1::2] = -a[1::2]

# ```

# reproduce the plot below

# + deletable=true editable=true

# %run -i q2.py

# #%load q2.py

# + [markdown] deletable=true editable=true

# ### Multi-dimensional ndarrays work too

# + deletable=true editable=true

b = np.array([[1,2,3], [4,5,6], [7,8,9]])

b

# + deletable=true editable=true

b[1][2]

# + deletable=true editable=true

b[1,2]

# + deletable=true editable=true

b[1]

# + [markdown] deletable=true editable=true

# ## Q3

# Experiment with different cases yourself

# For example

# ```python

# b = np.random.randint(0,99, size=(10,10))

# b[::2, 2]

# ```

# + [markdown] deletable=true editable=true

# ### Fancy indexing

# + deletable=true editable=true

b = np.random.randint(0,99, size=(5,10))

b

# + [markdown] deletable=true editable=true

# Try the following and look at the results

#

# Think about what is going on (how does numpy interpret each index?)

# + deletable=true editable=true

b[[1,3]]

# + deletable=true editable=true

b[(1,3)]

# + deletable=true editable=true

b[[1,2], [3,4]]

# + deletable=true editable=true

b[[(1,2),(3,4)]]

# + deletable=true editable=true

b[[True, False, False, True, False]]
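The cells above can be read as follows. To make the results checkable, this sketch uses a deterministic stand-in for the random `b`: same shape, filled with `np.arange` values.

- A list on one axis selects whole rows.
- A tuple is treated as one index per axis.
- Two lists are paired element by element.
- A boolean list keeps the rows marked `True`.

Fancy indexing also returns a copy, whereas basic slicing returns a view.

```python
import numpy as np

b = np.arange(50).reshape(5, 10)   # stand-in for the random (5, 10) array

rows = b[[1, 3]]                  # integer list on axis 0 -> rows 1 and 3, shape (2, 10)
scalar = b[(1, 3)]                # tuple = one index per axis -> same as b[1, 3]
pairs = b[[1, 2], [3, 4]]         # lists are paired -> elements b[1, 3] and b[2, 4]
masked = b[[True, False, False, True, False]]   # boolean mask -> rows 0 and 3

# Slicing shares memory with b; fancy indexing makes a copy
print(np.shares_memory(b, b[1::2]), np.shares_memory(b, rows))   # True False
```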

# + [markdown] deletable=true editable=true

# ## Q4

# Set every even number in `b` to `-1`

# + deletable=true editable=true

# Reference solution

# %run -i q4.py
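Here is one possible answer to Q4, sketched independently of the reference `q4.py` (which may do it differently): build a boolean mask of the even entries and assign through it. A seeded generator stands in for the notebook's random `b`.

```python
import numpy as np

rng = np.random.default_rng(0)
b = rng.integers(0, 99, size=(5, 10))   # stand-in for np.random.randint(0, 99, size=(5, 10))

even = b % 2 == 0      # boolean mask, same shape as b
b[even] = -1           # assign -1 wherever the mask is True
print(b)
```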

# + [markdown] deletable=true editable=true

# # Practicing with images

# + deletable=true editable=true

# Remember this from earlier

from PIL import Image

img = Image.open('img/Green-Rolling-Hills-Landscape-800px.png')

img_array = np.array(img)

Image.fromarray(img_array)

# + deletable=true editable=true

# Helper function for displaying an image

from IPython.display import display

def show(img_array):
    display(Image.fromarray(img_array))

# + [markdown] deletable=true editable=true

# ## Q

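# * Shrink the image to half its size

# * Crop a small region from the middle

# * Flip the image upside down

# * Mirror it left to right

# * Remove the green channel

# * Enlarge the image by a factor of two

# * Paste another image into the large image

# ```python

# from urllib.request import urlopen

# url = "https://raw.githubusercontent.com/playcanvas/engine/master/examples/images/animation.png"

# simg = Image.open(urlopen(url))

# ```

# * Swap the red and green channels

# * Convert the image to grayscale, using `Y=0.299R+0.587G+0.114B`

# * What difficulties do you run into? How would you solve them?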

# + deletable=true editable=true

# Shrink the image to half its size

# %run -i q_half.py

# + deletable=true editable=true

# Enlarge the image

# %run -i q_scale2.py

# + deletable=true editable=true

# Flip the image upside down

show(img_array[::-1])

# + deletable=true editable=true

# %run -i q_paste.py

# + deletable=true editable=true

# %run -i q_grayscale.py
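One difficulty in the grayscale exercise is that arithmetic done directly on `uint8` pixels can overflow or truncate. The sketch below avoids that. It converts to float, applies the luma weights from `Y=0.299R+0.587G+0.114B` as a matrix product over the channel axis, and rounds back to `uint8`. Dropping any alpha channel is an assumption of this sketch.

```python
import numpy as np

def to_grayscale(img):
    """(H, W, 3 or 4) uint8 image -> (H, W) uint8 luma image."""
    rgb = img[..., :3].astype(float)               # drop alpha if present; float avoids uint8 overflow
    luma = rgb @ np.array([0.299, 0.587, 0.114])   # weighted sum over the channel axis
    return np.clip(np.round(luma), 0, 255).astype(np.uint8)

# Usage on a tiny synthetic RGBA image: one white pixel and one pure red pixel
img = np.array([[[255, 255, 255, 255], [255, 0, 0, 255]]], dtype=np.uint8)
gray = to_grayscale(img)
print(gray)
```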

# + [markdown] deletable=true editable=true

# ## Q

# * Cut out a circle centered at (300, 300) with radius 100?

# * Rotate by ninety degrees? Swap x and y?

# + deletable=true editable=true

# Draw the circle with a loop

# %run -i q_slow_circle.py

# + deletable=true editable=true

# Draw the circle with fancy indexing

# %run -i q_fast_circle.py
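Below is a vectorized way to cut out the circle, sketched independently of the reference `q_fast_circle.py`. It works in three steps:

- `np.ogrid` produces broadcastable row and column coordinates.
- The circle test turns them into a 2-D boolean mask.
- Assigning through that mask zeroes every channel of the selected pixels.

Treating (300, 300) as (row, column) is an assumption. On an RGBA image the alpha channel is zeroed too, so the hole becomes transparent.

```python
import numpy as np

def cut_circle(img, center=(300, 300), radius=100):
    """Return a copy of img with all pixels inside the circle set to 0."""
    out = img.copy()
    cy, cx = center                                   # (row, column)
    yy, xx = np.ogrid[:img.shape[0], :img.shape[1]]   # shapes (H, 1) and (1, W)
    inside = (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2   # broadcasts to (H, W)
    out[inside] = 0                                   # 2-D mask selects whole pixels (all channels)
    return out

# Usage on a synthetic white RGBA image
img = np.full((600, 800, 4), 255, dtype=np.uint8)
holed = cut_circle(img)
```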

# + [markdown] deletable=true editable=true

# ### Other uses of indexing

# + deletable=true editable=true

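# Indexing can also blur: each pass replaces a pixel with the mean of itself
# and its upper, left, and upper-left neighbors

a = img_array.astype(float)

for i in range(10):
    a[1:,1:] = (a[1:,1:]+a[:-1,1:]+a[1:,:-1]+a[:-1,:-1])/4

show(a.astype('uint8'))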

# + deletable=true editable=true

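# Edge detection: convert to luma (assumes an RGBA image), then take the
# absolute difference between vertically adjacent rows

a = img_array.astype(float)

a = a @ [0.299, 0.587, 0.114, 0]

a = np.abs((a[1:]-a[:-1]))*2

# Clip before casting: the doubled differences can exceed 255
show(np.clip(a, 0, 255).astype('uint8'))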

# + [markdown] deletable=true editable=true

# ## Reshaping

# Use `.flatten` to see how the data is laid out in memory

#

#

#
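Here is a tiny sketch of what `.flatten` reveals. NumPy stores arrays in row-major (C) order by default, so flattening walks the last axis fastest. `.reshape` reinterprets the same sequence with a new shape, and `.T` swaps axes without copying. The values are illustrative only.

```python
import numpy as np

a = np.arange(6).reshape(2, 3)       # [[0, 1, 2], [3, 4, 5]]
flat_c = a.flatten()                 # row-major order: [0 1 2 3 4 5]
flat_f = a.flatten(order='F')        # column-major order: [0 3 1 4 2 5]
again = flat_c.reshape(3, 2)         # same sequence, new shape: [[0, 1], [2, 3], [4, 5]]
t = a.T                              # shape (3, 2), a view of a's data
rotated = np.rot90(a)                # counter-clockwise: [[2, 5], [1, 4], [0, 3]]

# For an (H, W, C) image, swapping the first two axes transposes rows and columns
img = np.zeros((4, 6, 3))
print(img.swapaxes(0, 1).shape)      # (6, 4, 3)
```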

# Look up

# `.reshape, .T, np.rot90, .swapaxes, .rollaxis`

# and then redo the exercises above

# + deletable=true editable=true

# An application of reshaping: per-channel histograms

R,G,B,A = img_array.reshape(-1,4).T

plt.hist((R,G,B,A), color=list("rgby"));

# + [markdown] deletable=true editable=true

# ## Stacking arrays

# Look up `np.vstack`, `np.hstack`, and `np.concatenate`, then try them out

# + deletable=true editable=true

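# Example (img_array2 is not defined elsewhere in this notebook; a vertically
# flipped copy of img_array is used here as a stand-in with the same shape)

img_array2 = img_array[::-1]

show(np.hstack([img_array, img_array2]))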

# + deletable=true editable=true

# Example

np.concatenate([img_array, img_array2], axis=2).shape

# + [markdown] deletable=true editable=true

# ## Functions that operate on a whole array or along an axis

# + deletable=true editable=true

np.max([1,2,3,4])

# + deletable=true editable=true

np.sum([1,2,3,4])

# + deletable=true editable=true

np.mean([1,2,3,4])

# + deletable=true editable=true

np.min([1,2,3,4])

# + [markdown] deletable=true editable=true

# Combining reductions: average along each axis, then combine the two means

# + deletable=true editable=true

x_mean = img_array.astype(float).mean(axis=0, keepdims=True)

print(x_mean.dtype, x_mean.shape)

y_mean = img_array.astype(float).mean(axis=1, keepdims=True)

print(y_mean.dtype, y_mean.shape)

# Automatic broadcasting

xy_combined = ((x_mean+y_mean)/2).astype('uint8')

show(xy_combined)
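The following is a small shape check for the cell above, run on a synthetic stand-in image. `keepdims=True` keeps each reduced axis with length 1. As a result, a `(1, W, C)` column profile and an `(H, 1, C)` row profile broadcast against each other to `(H, W, C)`.

```python
import numpy as np

img = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4)   # (H, W, C) stand-in

col_profile = img.mean(axis=0, keepdims=True)   # (1, 3, 4): mean over rows
row_profile = img.mean(axis=1, keepdims=True)   # (2, 1, 4): mean over columns
combined = (col_profile + row_profile) / 2       # broadcast to (2, 3, 4)

print(col_profile.shape, row_profile.shape, combined.shape)
```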

# + [markdown] deletable=true editable=true

# ## Tensor multiplication

# + [markdown] deletable=true editable=true

# Start with the dot product

# + deletable=true editable=true

# = 1*4 + 2*5 + 3*6 = 32

np.dot([1,2,3], [4,5,6])

# + deletable=true editable=true

u=np.array([1,2,3])

v=np.array([4,5,6])

print( u@v )

print( (u*v).sum() )

# + [markdown] deletable=true editable=true

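# ### Matrix multiplication

# If you've forgotten how matrix multiplication works, see http://matrixmultiplication.xyz/

# or http://eli.thegreenplace.net/2015/visualizing-matrix-multiplication-as-a-linear-combination/

#

#

# Matrix multiplication can be viewed as:

# * inner products over the shared axis, for every combination of the other axes

# * linear combinations of column vectors

# * substitution into linear equations, see [A1 - Matrices and elementary row operations](A1-矩陣與基本列運算.ipynb)

# * or, in numpy terms: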

# ```python

# np.sum(a[:,:, np.newaxis] * b[np.newaxis, : , :], axis=1)

# dot(a, b)[i,k] = sum(a[i,:] * b[:, k])

# ```

#

# ### Higher dimensions

# How does this generalize?

# * tensordot (tensor contraction): with a.shape=(3,4,5), b.shape=(4,5,6) and axes=2, this is equivalent to

# ```python

# np.sum(a[..., np.newaxis] * b[np.newaxis, ...], axis=(1, 2))

# tensordot(a,b)[i,k]=sum(a[i, ...]* b[..., k])

# ```

# https://en.wikipedia.org/wiki/Tensor_contraction

#

# * dot

# ```python

# dot(a, b)[i,j,k,m] = sum(a[i,j,:] * b[k,:,m])

# np.tensordot(a,b, axes=(-1,-2))

# ```

# * matmul treats the last two indices as the matrix dimensions and broadcasts over the rest

# ```python

# a=np.random.random(size=(3,4,5))

# b=np.random.random(size=(3,5,7))

# (a @ b).shape

# np.sum(a[..., np.newaxis] * np.moveaxis(b[..., np.newaxis], -1,-3), axis=-2)

# ```

# * einsum https://en.wikipedia.org/wiki/Einstein_notation

# ```python

# np.einsum('ii', a) # trace(a)

# np.einsum('ii->i', a) #diag(a)

# np.einsum('ijk,jkl', a, b) # tensordot(a,b)

# np.einsum('ijk,ikl->ijl', a,b ) # matmul(a,b)

# ```
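The equivalences listed above can be checked numerically. The sketch below uses random arrays whose shapes follow the examples in the text. Each comparison uses `np.allclose` rather than exact equality, because the methods sum in different orders.

```python
import numpy as np

rng = np.random.default_rng(0)

# Matrix product as a broadcast-and-sum over the shared axis
A = rng.random((5, 3))
B = rng.random((3, 7))
via_broadcast = np.sum(A[:, :, np.newaxis] * B[np.newaxis, :, :], axis=1)
print(np.allclose(A @ B, via_broadcast))

# tensordot with axes=2 contracts the last two axes of a with the first two of b
a = rng.random((3, 4, 5))
b = rng.random((4, 5, 6))
td = np.tensordot(a, b, axes=2)                                        # shape (3, 6)
td_sum = np.sum(a[..., np.newaxis] * b[np.newaxis, ...], axis=(1, 2))
td_einsum = np.einsum('ijk,jkl', a, b)
print(np.allclose(td, td_sum), np.allclose(td, td_einsum))

# matmul on stacks: leading axis is the batch, last two axes are the matrices
m1 = rng.random((3, 4, 5))
m2 = rng.random((3, 5, 7))
mm = m1 @ m2                                                            # shape (3, 4, 7)
mm_einsum = np.einsum('ijk,ikl->ijl', m1, m2)
mm_sum = np.sum(m1[..., np.newaxis] * np.moveaxis(m2[..., np.newaxis], -1, -3), axis=-2)
print(np.allclose(mm, mm_einsum), np.allclose(mm, mm_sum))

# Trace and diagonal with einsum
sq = rng.random((4, 4))
print(np.isclose(np.einsum('ii', sq), np.trace(sq)),
      np.allclose(np.einsum('ii->i', sq), np.diag(sq)))
```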

# + deletable=true editable=true

A=np.random.randint(0,10, size=(5,3))

A

# + deletable=true editable=true

B=np.random.randint(0,10, size=(3,7))

B

# + deletable=true editable=true

A.dot(B)

# + [markdown] deletable=true editable=true

# ## Q

# * Compute the dot product of A and B by hand

# * Try the other kinds of multiplication

# + [markdown] deletable=true editable=true

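# ### Summary

# + [markdown] deletable=true editable=true

# NumPy is built around the ndarray

# * The most basic operation is element-wise (use `np.vectorize` to turn an ordinary function into an element-wise one)

# * Indexing is very powerful

# * Reshaping

# * Aggregate operations and computations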

| Week01/01-Numpy.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

import pandas as pd

import numpy as np

import math

import tensorflow as tf

import matplotlib.pyplot as plt

print(pd.__version__)

import progressbar

from tensorflow import keras

# ### Load the test data

from process import loaddata

class_data = loaddata("../data/classifier/100-high-ene.csv")

np.random.shuffle(class_data)

y = class_data[:,-7:-4]

x = class_data[:,1:7]

# ### Model Load

model = keras.models.load_model('../models/classificationandregression/large_mse250.h5')

# ### Test of the Classification & Regression NN

# Evaluate the loaded model on the test data (fit would retrain it on this data)
model.evaluate(x, y)

# ### Test spectrum

#

# A quick way to gauge how well the network is doing: we reproduce the electrons' final spectrum from the neural network's predictions and compare it to the reference spectrum obtained from OSIRIS.

def energy_spectrum(energy_array, bins):
    """Plot a step histogram of the energies on a log y-axis."""
    energy_array = np.array(energy_array)
    plt.hist(energy_array, bins, histtype=u'step')
    plt.yscale("log")
    plt.show()

final_e = []
for y_ in y:
    final_e.append(np.linalg.norm(y_))

energy_spectrum(final_e, 75)
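The loop above computes one Euclidean norm per row, so it can be replaced by a single vectorized call with `axis=1`. The same applies to `prediction` further down. The sketch uses a small made-up array, `y_demo`, as a stand-in for `y`.

```python
import numpy as np

y_demo = np.array([[3.0, 4.0, 0.0],
                   [1.0, 2.0, 2.0]])      # hypothetical stand-in for y, one row per sample

loop_norms = [np.linalg.norm(row) for row in y_demo]   # what the loop computes
vector_norms = np.linalg.norm(y_demo, axis=1)           # one call, norm of each row
print(vector_norms)
```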

prediction = model.predict(x)


final_e_nn = []

bar = progressbar.ProgressBar(maxval=len(prediction),
                              widgets=[progressbar.Bar('=', '[', ']'), ' ',
                                       progressbar.Percentage(),
                                       " of {0}".format(len(prediction))])
bar.start()
for i, pred in enumerate(prediction):
    final_e_nn.append(np.linalg.norm(pred))
    bar.update(i+1)
bar.finish()

plt.hist(final_e, bins=100, alpha = 0.5, color = 'mediumslateblue', label='Electrons spectrum', density = True)

plt.legend(loc='upper right')

plt.yscale('log')

plt.savefig('../plots/onenetwork/highene/electronspectrum.png')

plt.savefig('../plots/onenetwork/highene/electronspectrum.pdf')

plt.show()

plt.hist(final_e_nn, bins=100, alpha = 0.5, color = 'indianred', label='NN prediction', density = True)

plt.legend(loc='upper right')

plt.yscale('log')

plt.savefig('../plots/onenetwork/highene/NNprediction.png')

plt.savefig('../plots/onenetwork/highene/NNprediction.pdf')

plt.show()

plt.hist(final_e_nn, bins=100, alpha = 0.5, color = 'mediumslateblue', label='NN prediction', density = True)

plt.hist(final_e, bins=100, alpha = 0.5, color = 'indianred', label='Electron Momentum from simulations', density = True)

plt.legend(loc = 'upper right')

plt.yscale('log')

plt.savefig('../plots/onenetwork/highene/comparison.png')

plt.savefig('../plots/onenetwork/highene/comparison.pdf')

plt.show()

| notebooks/Cool ideas...but not working/Test_NeuralNetwork-Highenergy_RegressionSpectrum.ipynb |

-- -*- coding: utf-8 -*-

-- ---

-- jupyter:

-- jupytext:

-- text_representation:

-- extension: .hs

-- format_name: light

-- format_version: '1.5'

-- jupytext_version: 1.14.4

-- kernelspec:

-- display_name: Haskell

-- language: haskell

-- name: haskell

-- ---

-- # 5. List comprehensions

-- ### 5.1 Basic concepts

-- $\displaystyle \{x^2 \mid x \in \{1, \dots, 5\}\}$

--

-- $\{1,4,9,16,25\}$

[x^2 | x <- [1..5]]

-- The expression `x <- [1..5]` is called a generator; it draws each `x` from the list `[1..5]`.

[(x,y) | x <- [1,2,3], y <- [4,5]]

[(x,y) | y <- [4,5], x <- [1,2,3]]

[(x,y) | x <- [1,2,3], y <- [1,2,3]]

:type concat

concat :: [[a]] -> [a]

concat x2 = [x | x1 <- x2, x <- x1]

-- concat xss = [x | xs <- xss, x <- xs]

concat [[1,2],[3,4,5],[6,7,8,9]]

firsts :: [(a,b)] -> [a]

firsts ps = [x | (x, _) <- ps]

firsts [(1,'a'), (2,'b'), (3,'c')] -- [1,2,3]

length :: [a] -> Int

length xs = sum [1 | _ <- xs]

length [1,2,3,4,5] -- 5

xs = [1,2,3,4,5]

[1 | _ <- xs]

sum [1, 2, 3, 4, 5]

-- ### 5.2 Guards (conditions)

[x | x <- [1..10], even x]

[x | x <- [1..10], odd x]

-- The expressions `even x` and `odd x` are called guards.

factors :: Int -> [Int]

factors n = [x | x <- [1..n], n `mod` x == 0]

factors 15 -- [1,3,5,15]

factors 7 -- [1,7]

prime :: Int -> Bool

prime n = factors n == [1, n]

prime 15

prime 7

primes' :: Int -> [Int]

primes' t = [x | x <- [2..t], prime x]

primes' 40

find :: Eq a => a -> [(a, b)] -> [b]

find k t = [v | (k',v) <- t, k == k']

find 'a' [('a',1), ('b',2), ('c',3), ('b',4)] -- [1]

find 'b' [('a',1), ('b',2), ('c',3), ('b',4)] -- [2,4]

find 'c' [('a',1), ('b',2), ('c',3), ('b',4)] -- [3]

find 'd' [('a',1), ('b',2), ('c',3), ('b',4)] -- []

find' :: Eq b => b -> [(a, b)] -> [a]

find' t k = [v | (v, t') <- k, t == t']

find' 1 [('a',1), ('b',2), ('c',3), ('b',4)] -- ['a']

find' 2 [('a',1), ('b',2), ('c',3), ('b',4)] -- ['b']

find' 3 [('a',1), ('b',2), ('c',3), ('b',4)] -- ['c']

find' 4 [('a',1), ('b',2), ('c',3), ('b',4)] -- ['b']

--

find'' :: Eq a => a -> [(a,(b,c))] -> [(b,c)]

find'' k t = [v | (k',v) <- t, k == k']

find'' 'a' [('a',(1,2)), ('b',(3,4)), ('c',(5,6)), ('b',(7,8))] -- [(1,2)]

-- In summary, a list comprehension has the form

-- #### [expression using the drawn element | element <- source list, condition]

-- ### 5.3 The `zip` function

zip ['a','b','c'] [1,2,3,4]

:type zip

pairs :: [a] -> [(a, a)]

pairs ns = zip ns (tail ns)

xs = [1,2,3,4]

tail xs

zip [1,2,3] [2,3,4]

pairs [1,2,3,4] -- [(1,2),(2,3),(3,4)]

-- * To build the list of tuples pairing adjacent numbers of `[1,2,3,4,5,6,7,8]`:

-- `zip [1,2,3,4,5,6,7] [2,3,4,5,6,7,8]`

zip [1,2,3,4,5,6,7] [2,3,4,5,6,7,8]

sorted :: Ord a => [a] -> Bool

sorted xs = and [x <= y | (x,y) <- pairs xs]

sorted [1,2,3,4] -- True

sorted [1,3,2,4] -- False

:type and

and [True, False]

-- A variant that returns the larger element of each adjacent pair (a list, not a Bool)

sorted :: Ord a => [a] -> [a]

sorted xs = [if x > y then x else y | (x,y) <- pairs xs]

sorted [1,2,3,4] -- [2,3,4]

sorted [1,3,2,4] -- [3,3,4]

positions :: Eq a => a -> [a] -> [Int] -- uses zip

positions x xs = [i | (x', i) <- zip xs [0..], x == x']

zip [True, False, True, False] [0..]

positions False [True, False, True, False] -- [1,3]

-- ### 5.4 String comprehensions

("abc" :: String) == (['a','b','c'] :: [Char])

"abcde" !! 2

take 3 "abcde"

length "abcde"

-- +

import Data.Char (isLower)

lowers :: String -> Int

lowers cs = length [c | c <- cs, isLower c]

-- -

lowers "Haskell" -- 6

lowers "LaTeX" -- 2

count :: Char -> String -> Int

count c cs = sum [1 | c' <- cs, c == c']

count' c cs = length [c' | c' <- cs, c == c']

count 's' "Mississippi"

count' 's' "Mississippi"

-- ### 5.5 The Caesar cipher

| PiHchap05.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python [conda env:pg]

# language: python

# name: conda-env-pg-py

# ---

# + [markdown] toc=true

# <h1>Table of Contents<span class="tocSkip"></span></h1>

# <div class="toc"><ul class="toc-item"></ul></div>

# -

import pygimli as pg

import matplotlib.pyplot as plt

import pygimli.meshtools as mt

import os

from os import system

import numpy as np

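def callTriangle(filename,
                 quality=33,
                 triangle='triangle',
                 verbose=True):
    """Mesh a .poly file with the external `triangle` program.

    Flags: -p (triangulate a PSLG), -q<angle> (minimum-angle quality),
    -A (regional attributes), -a (area constraints from the .poly file).
    """
    filebody = filename.replace('.poly', '')
    syscal = triangle + ' -pq' + str(quality)
    syscal += 'Aa ' + filebody + '.poly'
    if verbose:
        print(syscal)
    system(syscal)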

world = mt.createWorld(start=[-2e5, -2e5],

end=[2e5, 2e5],

layers=[0, 75e3],

area=[0, 1e7, 0],

marker=[1, 2, 3],

worldMarker=False)

blockleft = mt.createRectangle(start=[-22e3, 7e3],

end=[-4e3, 9e3],

marker=4,

markerPosition=[-18e3, 8e3],

area=1e4, boundaryMarker=10)

blockright = mt.createRectangle(start=[10e3, 7e3],

end=[22e3, 9e3],

marker=5,

markerPosition=[18e3, 8e3],

area=1e4, boundaryMarker=12)

geom = world + blockleft + blockright

ax, _ = pg.show(geom,

showNodes=False,

boundaryMarker=False)

ax.set_ylim(ax.get_ylim()[::-1]);

mt.exportPLC(geom,

'../meshes/commemi2d2.poly',

float_format='.8e')

callTriangle('../meshes/commemi2d2.poly',

quality=34.2,

verbose=False)

mesh = mt.createMesh(geom, quality=34.2)

ax, _ = pg.show(mesh)

ax.set_ylim(ax.get_ylim()[::-1]);

| Notebooks/COMMEMI_2D_2.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

import numpy as np

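from load_data_df import *

# pyplot and seaborn are used below; imported explicitly in case load_data_df does not provide them
import matplotlib.pyplot as plt

import seaborn as sns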

# # Data Paths

aes_data_dir = '../aes'

uart_data_dir = '../uart'

or1200_data_dir = '../or1200'

picorv32_data_dir = '../picorv32'

# # Plot Settings

# +

# Plot Settings

FIG_WIDTH = 12

FIG_HEIGHT = 6

HIST_SAVE_AS_PDF = True

AES_FP_SAVE_AS_PDF = False

UART_FP_SAVE_AS_PDF = False

OR1200_FP_SAVE_AS_PDF = False

# Plot PDF Filenames

HIST_PDF_FILENAME = 'cntr_sizes_histogram.pdf'

AES_FP_PDF_FILENAME = 'aes_fps_vs_time.pdf'

UART_FP_PDF_FILENAME = 'uart_fps_vs_time.pdf'

OR1200_FP_PDF_FILENAME = 'or1200_fps_vs_time.pdf'

# -

# # Plot Counter Size Histogram

# +

def plt_histogram(data, ax, title, color_index):
    bins = [0, 8, 16, 32, 64, 128, 256]  # bin edges in bits
    # Create histogram
    hist, bin_edges = np.histogram(data, bins)
    # X-tick labels: left edge of each bin, with the first bin shown as 1 instead of 0
    xtick_labels = [str(b) for b in bins[:len(hist)]]
    xtick_labels[0] = '1'
    # Plot histogram
    ax.bar(range(len(hist)),
           hist,
           width=1,
           align='center',
           tick_label=xtick_labels,
           color=current_palette[color_index])
    # Format histogram
    ax.set_title(title, fontweight='bold', fontsize=14)
    ax.set_xlabel('Register Size (# bits)', fontsize=12)
    ax.set_ylabel('# Registers', fontsize=12)
    ax.grid(axis='y', alpha=0.5)
    ax.set_ylim(0, 400)
    # Count label on top of each bar
    for i in range(len(hist)):
        ax.text(x=i, y=hist[i] + 10, s=hist[i], size=12, horizontalalignment='center')

# Load Data

aes_counter_sizes = load_counter_sizes(aes_data_dir)

uart_counter_sizes = load_counter_sizes(uart_data_dir)

or1200_counter_sizes = load_counter_sizes(or1200_data_dir)

picorv32_counter_sizes = load_counter_sizes(picorv32_data_dir)

# Create Figure

sns.set()

current_palette = sns.color_palette()

fig, axes = plt.subplots(1, 4, figsize=(14, 3))

plt_histogram(aes_counter_sizes['Coalesced Sizes'], axes[0], 'AES', 0)

plt_histogram(uart_counter_sizes['Coalesced Sizes'], axes[1], 'UART', 1)

plt_histogram(or1200_counter_sizes['Coalesced Sizes'], axes[2], 'OR1200', 2)

plt_histogram(picorv32_counter_sizes['Coalesced Sizes'], axes[3], 'RISC-V', 3)

# Show

plt.tight_layout(h_pad=1)

plt.show()
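Because the tick labels show only left edges, it helps to know how `np.histogram` treats these bins. Every bin is half-open, `[left, right)`, except the last, which also includes its right edge. The bar labeled 8 therefore counts 8- to 15-bit registers, and a 256-bit register lands in the last bar. Values above 256 are silently dropped. Here is a check with hypothetical register widths:

```python
import numpy as np

bins = [0, 8, 16, 32, 64, 128, 256]
widths = [1, 7, 8, 16, 31, 32, 64, 256]   # hypothetical register sizes in bits

hist, edges = np.histogram(widths, bins)
dropped = np.histogram([300], bins)[0].sum()   # outside the last edge: not counted
print(hist, dropped)
```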

# +

def plt_histogram_grouped(data_1, data_2, data_3, data_4, ax):
    bins = [0, 8, 16, 32, 64, 128, 256]  # bin edges in bits
    # Create one histogram per design
    hist_1, bin_edges_1 = np.histogram(data_1, bins)
    hist_2, bin_edges_2 = np.histogram(data_2, bins)
    hist_3, bin_edges_3 = np.histogram(data_3, bins)
    hist_4, bin_edges_4 = np.histogram(data_4, bins)
    # X-tick labels: left edge of each bin, with the first bin shown as 1 instead of 0
    xtick_labels = [str(b) for b in bins[:len(hist_1)]]
    xtick_labels[0] = '1'
    # Bar positions: four bars per bin, each offset by bar_width
    bar_width = 0.2
    r_1 = range(len(hist_1))
    r_2 = [x + bar_width for x in r_1]
    r_2end = [x + bar_width + 0.1 for x in r_1]  # center of each group of four bars
    r_3 = [x + bar_width for x in r_2]
    r_4 = [x + bar_width for x in r_3]
    # Plot histogram
    ax.bar(r_1, hist_1, color=current_palette[0], width=bar_width, edgecolor='white', label='AES')
    ax.bar(r_2, hist_2, color=current_palette[1], width=bar_width, edgecolor='white', label='UART')
    ax.bar(r_3, hist_3, color=current_palette[2], width=bar_width, edgecolor='white', label='RISC-V')
    ax.bar(r_4, hist_4, color='#FAC000', width=bar_width, edgecolor='white', label='OR1200')
    # Format histogram
    plt.xticks(r_2end, xtick_labels, fontsize=14)
    plt.yticks(range(0, 401, 100), range(0, 401, 100), fontsize=14)
    plt.legend(fontsize=14)
    ax.set_xlabel('Register Size (# bits)', fontsize=14)
    ax.set_ylabel('# Registers', fontsize=14)
    ax.grid(axis='y', alpha=0.5)
    ax.set_ylim(0, 400)
    # Count label on top of each bar
    for i in range(len(hist_1)):
        ax.text(x=i, y=hist_1[i] + 10, s=hist_1[i], size=12, horizontalalignment='center')
        ax.text(x=i + bar_width, y=hist_2[i] + 10, s=hist_2[i], size=12, horizontalalignment='center')
        ax.text(x=i + (2*bar_width), y=hist_3[i] + 10, s=hist_3[i], size=12, horizontalalignment='center')
        ax.text(x=i + (3*bar_width), y=hist_4[i] + 10, s=hist_4[i], size=12, horizontalalignment='center')

# Load Data

aes_counter_sizes = load_counter_sizes(aes_data_dir)

uart_counter_sizes = load_counter_sizes(uart_data_dir)

picorv32_counter_sizes = load_counter_sizes(picorv32_data_dir)

or1200_counter_sizes = load_counter_sizes(or1200_data_dir)

# Create Figure

sns.set_style(style="whitegrid")

current_palette = sns.color_palette()

fig, axes = plt.subplots(1, 1, figsize=(8, 4))

plt_histogram_grouped(\

aes_counter_sizes['Coalesced Sizes'],\

uart_counter_sizes['Coalesced Sizes'],\

picorv32_counter_sizes['Coalesced Sizes'],\

or1200_counter_sizes['Coalesced Sizes'],\

axes)

# Save Histogram to PDF

plt.tight_layout(h_pad=1)

if HIST_SAVE_AS_PDF:
    plt.savefig(HIST_PDF_FILENAME, format='pdf')

plt.show()

| circuits/plots/exp_001_design_complexities.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# +

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.metrics import mean_squared_error

from scipy import stats

plt.rcParams['figure.figsize'] = [12,6]

import warnings

warnings.filterwarnings('ignore')

# + [markdown] heading_collapsed=true

# # Trend

# + hidden=true

df = pd.read_excel('India_Exchange_Rate_Dataset.xls', index_col=0, parse_dates=True)

df.head()

# + hidden=true

df.plot()

plt.show()

# + [markdown] heading_collapsed=true hidden=true

# ## Detecting Trend using Hodrick-Prescott Filter

# + hidden=true

from statsmodels.tsa.filters.hp_filter import hpfilter

# + hidden=true

cycle, trend = hpfilter(df.EXINUS, lamb=129600)

# + hidden=true

trend.plot()

plt.title('Trend Plot')

plt.show()

# + hidden=true

cycle.plot()

plt.title('Cyclic plot')

plt.show()

# + [markdown] heading_collapsed=true hidden=true

# ## Detrending Time Series

# + [markdown] hidden=true

# 1. Pandas Differencing

# 2. SciPy signal (`scipy.signal.detrend`)

# 3. Hp filter
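The first two detrending approaches can be sketched on a synthetic series made of a linear trend, a cycle, and noise (all values made up).

1. Differencing removes a linear trend by taking `y[t] - y[t-1]`, which is what `Series.diff()` does in pandas.
2. Linear detrending subtracts a least-squares line, which is what `scipy.signal.detrend` does with its default `type='linear'`.

This sketch fits the line with `np.polyfit` instead of calling SciPy.

```python
import numpy as np

rng = np.random.default_rng(0)
t = np.arange(100, dtype=float)
series = 2.0 + 0.5 * t + np.sin(t / 5) + rng.normal(0, 0.1, t.size)   # synthetic data

# 1. Differencing: the constant slope becomes a roughly constant offset (~0.5)
diffed = np.diff(series)                # length n - 1, like Series.diff().dropna()

# 2. Linear detrend: fit y = slope * t + intercept, then subtract the fitted line
slope, intercept = np.polyfit(t, series, 1)
detrended = series - (slope * t + intercept)

print(round(slope, 2), round(diffed.mean(), 2))
```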

# + [markdown] heading_collapsed=true

# # Seasonality

# + [markdown] hidden=true

# ## Seasonal Decomposition

# + hidden=true

from statsmodels.tsa.seasonal import seasonal_decompose

result = seasonal_decompose(df.EXINUS, model='multiplicative', extrapolate_trend='freq')

# + hidden=true

result.plot();

# + hidden=true

deseason = df.EXINUS / result.seasonal  # multiplicative model: divide out the seasonal factor

deseason.plot()

plt.title("Deseason Data")

plt.show()
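A decomposition fitted with `model='multiplicative'` models each observation as `trend * seasonal * residual`, with seasonal factors close to 1. Seasonality is therefore removed by dividing by `result.seasonal`. Subtracting it is appropriate only for an additive decomposition. The check below uses made-up data with an exact multiplicative structure:

```python
import numpy as np

t = np.arange(48)
trend = 50 + 0.5 * t                                   # synthetic level
seasonal = 1 + 0.1 * np.sin(2 * np.pi * t / 12)        # multiplicative factors around 1
series = trend * seasonal                              # no residual, for clarity

deseason_div = series / seasonal   # multiplicative model: recovers the trend exactly
deseason_sub = series - seasonal   # additive-style removal: leaves the seasonal swing in place
print(np.allclose(deseason_div, trend), np.allclose(deseason_sub, trend))   # True False
```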

# + [markdown] heading_collapsed=true

# # Smoothing Methods

# + [markdown] heading_collapsed=true hidden=true

# ## Simple Exponential Smoothing

# + hidden=true

facebook = pd.read_csv('https://raw.githubusercontent.com/Apress/hands-on-time-series-analylsis-python/master/Data/FB.csv',

parse_dates=True, index_col=0)

facebook.head()

# + hidden=true

X = facebook['Close']

train = X.iloc[:-30]

test = X.iloc[-30:]

# + hidden=true

from statsmodels.tsa.api import SimpleExpSmoothing

# + hidden=true

# Fit on the training split so the 30-step forecast is compared with unseen test data
ses = SimpleExpSmoothing(train).fit(smoothing_level=0.9)

# + hidden=true

ses.summary()

# + hidden=true

preds = ses.forecast(30)

rmse = np.sqrt(mean_squared_error(test, preds))

print('RMSE:', rmse)
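Simple exponential smoothing tracks a single level, updated as `level = alpha * y_t + (1 - alpha) * level`. Every future step is forecast with the final level, so `forecast(30)` is a flat line. Below is a from-scratch sketch of that recursion. It initializes the level with the first observation, which is an assumption: statsmodels can estimate the initial level differently, so its numbers will not match exactly.

```python
import numpy as np

def ses_fit(y, alpha):
    """Return one-step-ahead fitted values and the final level."""
    level = float(y[0])                 # assumed initialization: first observation
    fitted = []
    for obs in y:
        fitted.append(level)            # forecast for this step, made before seeing obs
        level = alpha * obs + (1 - alpha) * level
    return np.array(fitted), level

def ses_forecast(y, alpha, horizon):
    """Flat h-step forecast: every future value equals the final level."""
    _, level = ses_fit(y, alpha)
    return np.full(horizon, level)

# Usage with made-up prices and the notebook's smoothing_level=0.9
fitted, last_level = ses_fit([10.0, 12.0, 11.0], alpha=0.9)
print(fitted, last_level)
print(ses_forecast([10.0, 12.0, 11.0], 0.9, 3))
```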

# + [markdown] heading_collapsed=true hidden=true

# ## Double Exponential Smoothing

# + hidden=true

from statsmodels.tsa.api import ExponentialSmoothing, Holt

# + hidden=true

model1 = Holt(train, damped_trend=False).fit(smoothing_level=0.9, smoothing_trend=0.6,

damping_trend=0.1, optimized=False)

model1.summary()

# + hidden=true

preds = model1.forecast(30)

rmse = np.sqrt(mean_squared_error(test, preds))

print('RMSE:', rmse)

# + hidden=true

model_auto = Holt(train).fit(optimized=True, use_brute=True)

model_auto.summary()

# + hidden=true

preds = model_auto.forecast(30)

rmse = np.sqrt(mean_squared_error(test, preds))

print('RMSE:', rmse)

# + [markdown] hidden=true

# ## Triple Exponential Smoothing

# + hidden=true

model2 = ExponentialSmoothing(train, trend='mul',

damped_trend=False,

seasonal_periods=3).fit(smoothing_level=0.9,

smoothing_trend=0.6,

damping_trend=0.6,

use_boxcox=False,

use_basinhopping=True,

optimized=False)

# + hidden=true

model2.summary()

# -

# # Regression Extension Techniques for Time Series Data

from pmdarima import auto_arima

from statsmodels.tsa.stattools import adfuller

adfuller(facebook['Close'])

# - We fail to reject the ADF null hypothesis of a unit root (the p-value is above the significance level), so the series is treated as non-stationary.

| Time Series Analysis.ipynb |

# ---

# jupyter:

# jupytext:

# text_representation:

# extension: .py

# format_name: light

# format_version: '1.5'

# jupytext_version: 1.14.4

# kernelspec:

# display_name: Python 3

# language: python

# name: python3

# ---

# # Self-Driving Car Engineer Nanodegree

#

#

# ## Project: **Finding Lane Lines on the Road**

# ***

# In this project, you will use the tools you learned about in the lesson to identify lane lines on the road. You can develop your pipeline on a series of individual images, and later apply the result to a video stream (really just a series of images). Check out the video clip "raw-lines-example.mp4" (also contained in this repository) to see what the output should look like after using the helper functions below.

#

# Once you have a result that looks roughly like "raw-lines-example.mp4", you'll need to get creative and try to average and/or extrapolate the line segments you've detected to map out the full extent of the lane lines. You can see an example of the result you're going for in the video "P1_example.mp4". Ultimately, you would like to draw just one line for the left side of the lane, and one for the right.

#

# In addition to implementing code, there is a brief writeup to complete. The writeup should be completed in a separate file, which can be either a markdown file or a pdf document. There is a [write up template](https://github.com/udacity/CarND-LaneLines-P1/blob/master/writeup_template.md) that can be used to guide the writing process. Completing both the code in the Ipython notebook and the writeup template will cover all of the [rubric points](https://review.udacity.com/#!/rubrics/322/view) for this project.

#

# ---

# Let's have a look at our first image called 'test_images/solidWhiteRight.jpg'. Run the 2 cells below (hit Shift-Enter or the "play" button above) to display the image.

#

# **Note: If, at any point, you encounter frozen display windows or other confounding issues, you can always start again with a clean slate by going to the "Kernel" menu above and selecting "Restart & Clear Output".**

#

# ---

# **The tools you have are color selection, region of interest selection, grayscaling, Gaussian smoothing, Canny Edge Detection and Hough Transform line detection. You are also free to explore and try other techniques that were not presented in the lesson. Your goal is to piece together a pipeline to detect the line segments in the image, then average/extrapolate them and draw them onto the image for display (as below). Once you have a working pipeline, try it out on the video stream below.**

#

# ---

#

# <figure>

# <img src="examples/line-segments-example.jpg" width="380" alt="Combined Image" />

# <figcaption>

# <p></p>

# <p style="text-align: center;"> Your output should look something like this (above) after detecting line segments using the helper functions below </p>

# </figcaption>

# </figure>

# <p></p>

# <figure>

# <img src="examples/laneLines_thirdPass.jpg" width="380" alt="Combined Image" />

# <figcaption>

# <p></p>

# <p style="text-align: center;"> Your goal is to connect/average/extrapolate line segments to get output like this</p>

# </figcaption>

# </figure>
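The averaging/extrapolation step described above can be sketched as follows. This is one possible approach, not the project's reference solution. For each Hough segment, compute its slope and intercept. Skip vertical segments and near-horizontal ones (the 0.3 threshold is a hypothetical choice). Group the rest by slope sign and average each group. Finally, solve `x = (y - intercept) / slope` at the bottom row and at a chosen top row. In image coordinates y grows downward, so the left lane line usually falls in the negative-slope group.

```python
import numpy as np

def average_lane_lines(segments, y_bottom, y_top, min_abs_slope=0.3):
    """segments: iterable of (x1, y1, x2, y2) in pixel coordinates.

    Returns {"negative": (xb, yb, xt, yt), "positive": (...)} for each slope
    group that has at least one usable segment.
    """
    groups = {"negative": [], "positive": []}
    for x1, y1, x2, y2 in segments:
        if x2 == x1:
            continue                                  # vertical: slope undefined
        slope = (y2 - y1) / (x2 - x1)
        if abs(slope) < min_abs_slope:
            continue                                  # near-horizontal: likely noise
        intercept = y1 - slope * x1
        groups["negative" if slope < 0 else "positive"].append((slope, intercept))

    lanes = {}
    for name, params in groups.items():
        if not params:
            continue
        slope, intercept = np.mean(params, axis=0)    # average line of the group
        x_bottom = (y_bottom - intercept) / slope     # invert y = slope * x + intercept
        x_top = (y_top - intercept) / slope
        lanes[name] = (int(round(x_bottom)), y_bottom, int(round(x_top)), y_top)
    return lanes

# Usage with hypothetical segments on a 960x540 frame
segments = [(100, 540, 300, 400), (150, 505, 250, 435),   # slope -0.7
            (600, 400, 800, 540),                          # slope +0.7
            (400, 350, 450, 352)]                          # near-horizontal, ignored
lanes = average_lane_lines(segments, y_bottom=540, y_top=330)
print(lanes)
```

The returned endpoints can be passed to `cv2.line()` to draw one line per lane.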

# **Run the cell below to import some packages. If you get an `import error` for a package you've already installed, try changing your kernel (select the Kernel menu above --> Change Kernel). Still have problems? Try relaunching Jupyter Notebook from the terminal prompt. Also, consult the forums for more troubleshooting tips.**

# ## Import Packages

#importing some useful packages

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

from ipywidgets import widgets

import numpy as np

import os

import cv2

# %matplotlib inline

# ## Read in an Image

# +

#reading in an image

image = mpimg.imread('test_images/solidWhiteRight.jpg')

#printing out some stats and plotting

print('This image is:', type(image), 'with dimensions:', image.shape)

plt.imshow(image) # if you wanted to show a single color channel image called 'gray', for example, call as plt.imshow(gray, cmap='gray')

# -

# ## Ideas for Lane Detection Pipeline

# **Some OpenCV functions (beyond those introduced in the lesson) that might be useful for this project are:**

#

# `cv2.inRange()` for color selection

# `cv2.fillPoly()` for regions selection

# `cv2.line()` to draw lines on an image given endpoints

# `cv2.addWeighted()` to coadd / overlay two images

# `cv2.cvtColor()` to grayscale or change color

# `cv2.imwrite()` to output images to file

# `cv2.bitwise_and()` to apply a mask to an image

#

# **Check out the OpenCV documentation to learn about these and discover even more awesome functionality!**

# ## Helper Functions

# Below are some helper functions to help get you started. They should look familiar from the lesson!

# +

import math

class FindLanes(object):

    def __init__(self):
        # Keep lane state on the instance; plain local variables would be discarded
        self.left_lane = [0, 0, 0, 0]
        self.right_lane = [0, 0, 0, 0]

    def grayscale(self, img):
        """Applies the Grayscale transform
        This will return an image with only one color channel
        but NOTE: to see the returned image as grayscale
        (assuming your grayscaled image is called 'gray')
        you should call plt.imshow(gray, cmap='gray')"""
        # mpimg.imread returns RGB images, so convert from RGB
        return cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
        # Or use BGR2GRAY if you read an image with cv2.imread()
        # return cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    def canny(self, img, low_threshold, high_threshold):
        """Applies the Canny transform"""
        return cv2.Canny(img, low_threshold, high_threshold)

    def gaussian_blur(self, img, kernel_size):
        """Applies a Gaussian smoothing kernel"""
        return cv2.GaussianBlur(img, (kernel_size, kernel_size), 0)

    def region_of_interest(self, img, vertices):
        """
        Applies an image mask.
        Only keeps the region of the image defined by the polygon
        formed from `vertices`. The rest of the image is set to black.
        `vertices` should be a numpy array of integer points.
        """
        # Define a blank mask to start with
        mask = np.zeros_like(img)
        # Define a multi-channel or single-channel fill color depending on the input image
        if len(img.shape) > 2:
            channel_count = img.shape[2]  # i.e. 3 or 4 depending on your image
            ignore_mask_color = (255,) * channel_count
        else:
            ignore_mask_color = 255
        # Fill pixels inside the polygon defined by "vertices" with the fill color
        cv2.fillPoly(mask, vertices, ignore_mask_color)
        # Return the image only where mask pixels are nonzero
        masked_image = cv2.bitwise_and(img, mask)
        return masked_image

def draw_lines(self, img, lines, color=[255, 0, 0], thickness=2, interp_tol=10):

"""

NOTE: this is the function you might want to use as a starting point once you want to

average/extrapolate the line segments you detect to map out the full

extent of the lane (going from the result shown in raw-lines-example.mp4

to that shown in P1_example.mp4).

Think about things like separating line segments by their

slope ((y2-y1)/(x2-x1)) to decide which segments are part of the left

line vs. the right line. Then, you can average the position of each of

the lines and extrapolate to the top and bottom of the lane.

This function draws `lines` with `color` and `thickness`.

Lines are drawn on the image inplace (mutates the image).

If you want to make the lines semi-transparent, think about combining

this function with the weighted_img() function below

"""

"""

Left Lane: > 0.0

Right Lane: < 0.0

"""

right_slopes = []

left_slopes = []

right_intercepts = []

left_intercepts = []

x_min_interp = 0

y_max = img.shape[0]

y_min_left = img.shape[0] + 1

y_min_right = img.shape[0] + 1

y_min_interp = 320 + 15 #top of ROI plus some offset for aesthetics

for line in lines:

for x1,y1,x2,y2 in line:

current_slope = (y2-y1)/(x2-x1)

# For each detected line, seperate lines into left and right lanes.

# Calculate the current slope and intercept and keep a history for averaging.

if current_slope < 0.0 and current_slope > -math.inf:

right_slopes.append(current_slope)

right_intercepts.append(y1 - current_slope*x1)

y_min_right = min(y_min_right, y1, y2)

if current_slope > 0.0 and current_slope < math.inf:

left_slopes.append(current_slope)

left_intercepts.append(y1 - current_slope*x1)

y_min_left = min(y_min_left, y1, y2)

# Calculate the average of the slopes, intercepts, x_min and x_max

# Interpolate the average line to the end of the region of interest (using equation of slopes)

if len(left_slopes) > 0:

ave_left_slope = sum(left_slopes) / len(left_slopes)

ave_intercept = sum(left_intercepts) / len(left_intercepts)

x_min=int((y_min_left - ave_intercept)/ ave_left_slope)

x_max = int((y_max - ave_intercept)/ ave_left_slope)

x_min_interp = int(((x_min*y_min_interp) - (x_min*y_max) - (x_max*y_min_interp) + (x_max*y_min_left))/(y_min_left - y_max))

self.left_lane = [x_min_interp, y_min_interp, x_max, y_max]

# Draw the left lane line

cv2.line(img, (self.left_lane[0], self.left_lane[1]), (self.left_lane[2], self.left_lane[3]), [255, 0, 0], 12)

if len(right_slopes) > 0:

ave_right_slope = sum(right_slopes) / len(right_slopes)

ave_intercept = sum(right_intercepts) / len(right_intercepts)

x_min = int((y_min_right - ave_intercept)/ ave_right_slope)

x_max = int((y_max - ave_intercept)/ ave_right_slope)

x_min_interp = int(((x_min*y_min_interp) - (x_min*y_max) - (x_max*y_min_interp) + (x_max*y_min_right))/(y_min_right - y_max))

self.right_lane = [x_min_interp, y_min_interp, x_max, y_max]

# Draw the right lane line

cv2.line(img, (self.right_lane[0], self.right_lane[1]), (self.right_lane[2], self.right_lane[3]), [255, 0, 0], 12)

def hough_lines(self, img, rho, theta, threshold, min_line_len, max_line_gap):

"""

`img` should be the output of a Canny transform.

Returns an image with hough lines drawn.

"""

lines = cv2.HoughLinesP(img, rho, theta, threshold, np.array([]), minLineLength=min_line_len, maxLineGap=max_line_gap)

line_img = np.zeros((img.shape[0], img.shape[1], 3), dtype=np.uint8)

self.draw_lines(line_img, lines)

return line_img

# Python 3 has support for cool math symbols.

def weighted_img(self, img, initial_img, α=0.8, β=1., γ=0.):

"""

`img` is the output of the hough_lines(), An image with lines drawn on it.

Should be a blank image (all black) with lines drawn on it.

`initial_img` should be the image before any processing.

The result image is computed as follows:

initial_img * α + img * β + γ

NOTE: initial_img and img must be the same shape!

"""

return cv2.addWeighted(initial_img, α, img, β, γ)

# -
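# The averaging and extrapolation idea in `draw_lines()` can be checked without OpenCV. The pure-Python sketch below (the function name `fit_lanes` and the sample segments are made up for illustration) splits segments by slope sign, averages slope and intercept per side, and solves x = (y - b) / m at the top and bottom y values. Because y grows downward in image coordinates, the line on the left side of the image has a negative slope.

```python
def fit_lanes(segments, y_top, y_bottom):
    """Hypothetical helper: average and extrapolate lane lines from segments.

    segments: iterable of (x1, y1, x2, y2) tuples in image coordinates.
    Returns {"left": [x_top, y_top, x_bottom, y_bottom] or None, "right": ...}.
    """
    groups = {"left": [], "right": []}
    for x1, y1, x2, y2 in segments:
        if x2 == x1:
            continue  # vertical segment: slope is undefined
        m = (y2 - y1) / (x2 - x1)
        if m == 0:
            continue  # horizontal segment: belongs to neither lane
        b = y1 - m * x1  # intercept of y = m*x + b
        groups["left" if m < 0 else "right"].append((m, b))

    lanes = {}
    for side, fits in groups.items():
        if not fits:
            lanes[side] = None
            continue
        m = sum(f[0] for f in fits) / len(fits)
        b = sum(f[1] for f in fits) / len(fits)
        lanes[side] = [int((y_top - b) / m), y_top, int((y_bottom - b) / m), y_bottom]
    return lanes


# Two segments on y = -x + 600 (left lane) and one on y = x - 300 (right lane).
segments = [(100, 500, 200, 400), (150, 450, 250, 350), (700, 400, 800, 500)]
print(fit_lanes(segments, y_top=335, y_bottom=540))
# -> {'left': [265, 335, 60, 540], 'right': [635, 335, 840, 540]}
```

Averaging slope and intercept separately is the simplest choice; a least-squares fit over all segment endpoints would weight long segments more heavily.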

# ## Test Images
#
# Build your pipeline to work on the images in the directory "test_images".
#
# **You should make sure your pipeline works well on these images before you try the videos.**

import os

os.listdir("test_images/")
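# `os.listdir()` returns bare file names in arbitrary order, including any non-image files in the folder. The sketch below uses a hypothetical `image_paths` helper that keeps only common image extensions, sorts the names, and builds input/output paths with `os.path.join()` instead of string concatenation; the extension list is an assumption.

```python
import os


def image_paths(in_dir, out_dir, exts=(".jpg", ".jpeg", ".png")):
    """Hypothetical helper: sorted (input, output) path pairs for images in in_dir."""
    names = sorted(n for n in os.listdir(in_dir) if n.lower().endswith(exts))
    return [(os.path.join(in_dir, n), os.path.join(out_dir, n)) for n in names]


if os.path.isdir("test_images"):
    for src, dst in image_paths("test_images", "test_images_output"):
        print(src, "->", dst)
```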

# ## Build a Lane Finding Pipeline
#
# Build the pipeline and run your solution on all test_images. Save copies to the `test_images_output` directory; you can use these images in your writeup report.
#
# Try tuning the various parameters, especially the low and high Canny thresholds as well as the Hough lines parameters.
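# When tuning the Canny thresholds, one common starting point (a heuristic, not something the lesson prescribes) is to center them on the median pixel intensity: lower = max(0, (1 - σ)·median) and upper = min(255, (1 + σ)·median), with σ around 0.33. John Canny recommended an upper:lower ratio between 2:1 and 3:1, which the hand-picked pair [75, 150] in the pipeline follows. The numpy-only sketch below (hypothetical `auto_canny_thresholds` helper) computes such a pair for a grayscale image.

```python
import numpy as np


def auto_canny_thresholds(gray, sigma=0.33):
    """Hypothetical helper: Canny thresholds centered on the median intensity."""
    median = float(np.median(gray))
    lower = int(max(0, (1.0 - sigma) * median))
    upper = int(min(255, (1.0 + sigma) * median))
    return lower, upper


# A synthetic grayscale image whose median intensity is 120.
gray = np.full((10, 10), 120, dtype=np.uint8)
print(auto_canny_thresholds(gray))  # -> (80, 159)
```

The resulting pair could be passed to `fl.canny()` per image; whether it beats hand-tuned values on the test images is something to check visually.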

# +
# TODO: Build your pipeline that will draw lane lines on the test_images
# then save them to the test_images_output directory.

# Load test images
dir = "test_images/"
out_dir = "test_images_output/"
input = os.listdir(dir)

# Define globals
kernel_size = 3           # Gaussian blur kernel size (must be odd)
canny_thresh = [75, 150]  # Canny low/high hysteresis thresholds
rho = 2                   # Hough distance resolution, in pixels
theta = np.pi/180         # Hough angular resolution, in radians (1 degree)
threshold = 90            # minimum number of Hough votes for a line
min_line_length = 20      # minimum segment length, in pixels
max_line_gap = 20         # maximum gap, in pixels, between joinable segments

fl = FindLanes()

# Set up the pipeline
def process_image(image):
    # Preprocess image
    gray = fl.grayscale(image)
    plt.imsave(out_dir + "gray.jpg", gray, cmap="gray")
    blur = fl.gaussian_blur(gray, kernel_size)
    plt.imsave(out_dir + "blur.jpg", blur, cmap="gray")

    # Find edges
    edges = fl.canny(blur, canny_thresh[0], canny_thresh[1])