repo_name

stringlengths 9

75

| topic

stringclasses 30

values | issue_number

int64 1

203k

| title

stringlengths 1

976

| body

stringlengths 0

254k

| state

stringclasses 2

values | created_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| url

stringlengths 38

105

| labels

listlengths 0

9

| user_login

stringlengths 1

39

| comments_count

int64 0

452

|

|---|---|---|---|---|---|---|---|---|---|---|---|

mkhorasani/Streamlit-Authenticator

|

streamlit

| 182 |

Error Username/password is incorrect

|

I try using the demo app and also locally with my code. Even if the user is in the config.yaml file, it does not log in.

|

closed

|

2024-07-30T09:56:35Z

|

2024-08-01T13:42:52Z

|

https://github.com/mkhorasani/Streamlit-Authenticator/issues/182

|

[

"help wanted"

] |

vladyskai

| 6 |

K3D-tools/K3D-jupyter

|

jupyter

| 212 |

Usage in The Littlest JupyterHub

|

I'm new to hosting JupyterHub online in general. I followed the tutorial [here](http://tljh.jupyter.org/en/latest/install/google.html) to install JupyterHub on Google Cloud. I then installed k3d using pip. However, the widget doesn't show up in a notebook. This seems to only apply to k3d widgets, as other ipywidgets like the Buttons work fine. Any thought on what might cause this?

|

closed

|

2020-03-25T04:25:52Z

|

2020-03-26T17:28:51Z

|

https://github.com/K3D-tools/K3D-jupyter/issues/212

|

[] |

panangam

| 2 |

saulpw/visidata

|

pandas

| 2,162 |

Odd fuzzy matching in command palette

|

I love the command palette! 🙂

Why is it when I type "open" and "open file" `open-file` is **not** suggested -- but it is for "file"?

|

open

|

2023-12-07T21:57:46Z

|

2025-01-08T04:56:57Z

|

https://github.com/saulpw/visidata/issues/2162

|

[

"bug"

] |

reagle

| 4 |

ScrapeGraphAI/Scrapegraph-ai

|

machine-learning

| 285 |

Subprocess-exited-with-error

|

**Describe the bug**

When I attempt to pip install the package I get the following error.

register_finder(pkgutil.ImpImporter, find_on_path)

^^^^^^^^^^^^^^^^^^^

note: This error originates from a subprocess, and is likely not a problem with pip.

error: subprocess-exited-with-error

× Getting requirements to build wheel did not run successfully.

│ exit code: 1

╰─> See above for output.

**To Reproduce**

Steps to reproduce the behavior:

- Type pip install scrapegraph-ai

- See error

**Expected behavior**

I excpeted the python package to be installed

**Desktop (please complete the following information):**

- OS: Windows (WSL)

|

closed

|

2024-05-22T16:11:07Z

|

2024-05-26T07:26:05Z

|

https://github.com/ScrapeGraphAI/Scrapegraph-ai/issues/285

|

[] |

jondoescoding

| 6 |

mljar/mljar-supervised

|

scikit-learn

| 268 |

Add more eval metrics

|

Please add support for more eval metrics.

|

closed

|

2020-12-09T09:39:11Z

|

2021-04-07T15:29:40Z

|

https://github.com/mljar/mljar-supervised/issues/268

|

[

"enhancement",

"help wanted"

] |

pplonski

| 6 |

QuivrHQ/quivr

|

api

| 2,731 |

Remove URL crawling and pywright to separate service

|

closed

|

2024-06-25T13:07:59Z

|

2024-09-28T20:06:17Z

|

https://github.com/QuivrHQ/quivr/issues/2731

|

[

"enhancement",

"Stale"

] |

linear[bot]

| 2 |

|

inventree/InvenTree

|

django

| 8,530 |

[FR] Add labor time for build orders

|

### Please verify that this feature request has NOT been suggested before.

- [x] I checked and didn't find a similar feature request

### Problem statement

Time is money. When building thinks the cost of time will add to the cost of the bought components. To get a good inside to the real cost of the product, it is essential to involve its build time as well and accumulate it over the whole project.

### Suggested solution

We can add values of time spend when we complete a build output. When the build is done there will be a total build time. Finally it can be average or range for each part. Same as the cost component.

Now we have a good price in indication for the bought components, but the build time is as much of a value as the money we spent on the components we bought.

### Describe alternatives you've considered

Now we have a good price in indication for the bought components, but the build time is as much of a value as the money we spent on the components we bought.

### Examples of other systems

_No response_

### Do you want to develop this?

- [ ] I want to develop this.

|

open

|

2024-11-20T07:55:45Z

|

2024-12-01T19:55:23Z

|

https://github.com/inventree/InvenTree/issues/8530

|

[

"enhancement",

"pricing",

"roadmap",

"feature"

] |

MIOsystems

| 6 |

Lightning-AI/LitServe

|

rest-api

| 275 |

Add Support to Huggingface Diffusers!

|

Add real-time support for serving diffusion models via the Hugging Face `diffusers` library.

https://huggingface.co/docs/diffusers/en/index

|

closed

|

2024-09-08T14:22:52Z

|

2024-09-08T15:08:25Z

|

https://github.com/Lightning-AI/LitServe/issues/275

|

[

"enhancement",

"help wanted"

] |

KaifAhmad1

| 2 |

slackapi/python-slack-sdk

|

asyncio

| 899 |

Happy Holidays! The team is taking a break until January 4th

|

## Happy Holidays! ❄️ ⏳

The maintainers are taking a break for the holidays and will be back to help with all your questions, issues, and feature requests in the new year. We hope you also find some time to relax and recharge. If you open an issue, please be patient and rest assured that the team will respond as soon as we can after we're back.

|

closed

|

2020-12-21T21:52:14Z

|

2021-01-05T03:03:07Z

|

https://github.com/slackapi/python-slack-sdk/issues/899

|

[

"discussion"

] |

aoberoi

| 0 |

gee-community/geemap

|

streamlit

| 1,174 |

Down image bug

|

<!-- Please search existing issues to avoid creating duplicates. -->

### Environment Information

- geemap version:0.15.4

- Python version:3.7

- Operating System:windows

### Description

When I execute the code to download the image, the downloaded image will appear empty. I downloaded the same image on gee. The image itself is OK. So i think, maybe geeemap have some bug? Thank you

Code:

image_t0=ee.Image("LANDSAT/LC08/C02/T1_L2/LC08_122026_20220106").select(['SR_B4', 'SR_B3', 'SR_B2'])

geemap.download_ee_image(image_t0, "image_t0_122026.tif", scale=30)

### What I Did

```

Paste the command(s) you ran and the output.

If there was a crash, please include the traceback here.

```

|

closed

|

2022-08-02T12:49:40Z

|

2022-08-03T21:50:48Z

|

https://github.com/gee-community/geemap/issues/1174

|

[

"bug"

] |

kgju

| 1 |

jacobgil/pytorch-grad-cam

|

computer-vision

| 49 |

how to visual the specific channels of feature?

|

good work! i have question about how to visual the specific of feature?

Like this, visual the first channel to third channel

|

closed

|

2020-10-27T13:21:38Z

|

2021-04-26T05:09:12Z

|

https://github.com/jacobgil/pytorch-grad-cam/issues/49

|

[] |

lao-ling-jie

| 10 |

wkentaro/labelme

|

computer-vision

| 870 |

how to create the chinese exe

|

how to create the chinese file, which file need to modify

|

closed

|

2021-05-26T02:41:07Z

|

2021-05-27T02:37:35Z

|

https://github.com/wkentaro/labelme/issues/870

|

[] |

Enn29

| 7 |

Evil0ctal/Douyin_TikTok_Download_API

|

web-scraping

| 78 |

修改了config.ini使用自己的服务器,开放了2333端口,docker部署的使用api没法请求到接口

|

网页端是正常..

|

closed

|

2022-09-17T01:09:20Z

|

2022-11-09T21:07:15Z

|

https://github.com/Evil0ctal/Douyin_TikTok_Download_API/issues/78

|

[

"help wanted"

] |

zeku2022

| 9 |

iperov/DeepFaceLab

|

machine-learning

| 554 |

Traininig uses CPU not GPU. Is it how its supposed to be?

|

THIS IS NOT TECH SUPPORT FOR NEWBIE FAKERS

POST ONLY ISSUES RELATED TO BUGS OR CODE

## Expected behavior

I want to run the program with my GPU, i bought a new graphic card for this, but it is not using GPU while training.

## Actual behavior

firstly, before i write here, i searched every topics.

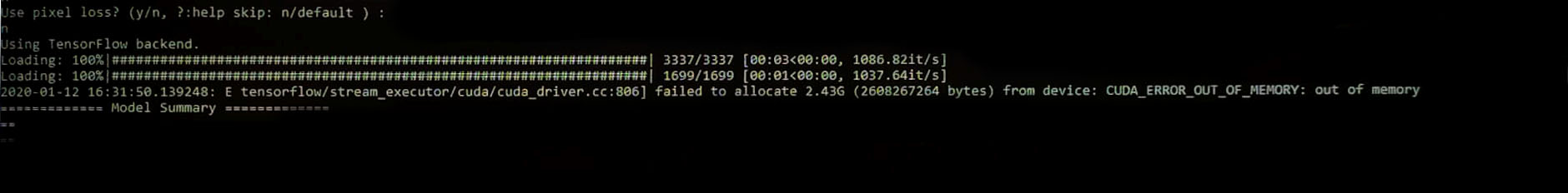

When i run the Train SAEHD, it first says this error (photo 1):

tensorflow/stream_executor/cuda/cuda_driver.cc806] failed to allocate 2.43 G (2608267264 bytes) from device: CUDA_ERROR_OUT_OF_MEMORY: out of memory

and i get rid of this error doing these-unfortunately it just helps the erase the error, nothing more.(before that photo, train was also not work, reducing batch size helped to work but..):

https://github.com/iperov/DeepFaceLab/issues/369#issuecomment-524539937

> try batch size of 2, dims 128

----

> Try lowering the batch size, i.e start from 4 and double until you get the error.

>

> If this doesn't work, changing line 144 in `nnlib.py` to `config.gpu_options.allow_growth = False` seemed to stop this error appearing for me."

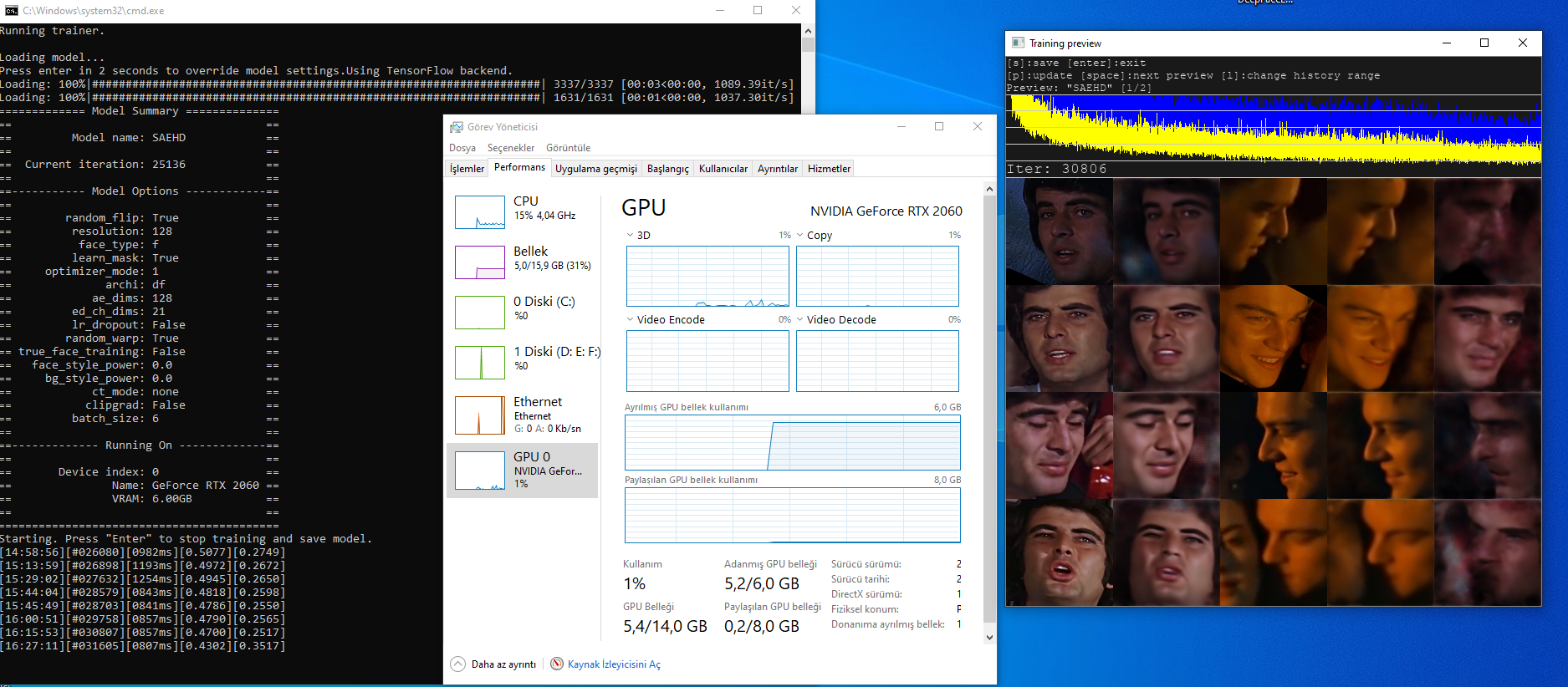

Train model is working but it's not using my GPU now. It uses RAM and CPU. Is it should be like this or how can i work my GPU? This is how i train now;

IT uses shared GPU Ram but not processor. Also not actual G-RAM it uses from my normal RAM.

## Other relevant information

Batch size=6

**Operating system and version:**

AMD Ryzen 3600 processor

Nvidia RTX 2060 6GB Graphic card

DFL build is the latest one (10.1)

Windows 10 64-bit, (i formatted several days ago, is it possible any other driver or program missing?)

**Python version:**

Python 3.8 64 bit.

|

closed

|

2020-01-13T14:42:35Z

|

2020-03-28T05:42:18Z

|

https://github.com/iperov/DeepFaceLab/issues/554

|

[] |

Glaewion

| 1 |

reloadware/reloadium

|

pandas

| 134 |

Does it support hot reloading of third-party libraries when using pycharm+pytest?

|

The reloadable paths is configured on the reloadium pycharm plugin.

When pytest is used to execute a testcase and a third-party library file is modified, but hot reloading cannot be performed. The following information is displayed:

```shell

xxx has been modified but is not loaded yet

Hot reloading not needed.

```

|

closed

|

2023-04-14T00:47:17Z

|

2023-04-14T11:00:52Z

|

https://github.com/reloadware/reloadium/issues/134

|

[] |

god-chaos

| 1 |

fastapi-users/fastapi-users

|

fastapi

| 1,466 |

Make user router flexible enough to pick few routes only

|

Hi 👋🏻

I was using fastapi users in one of my project and tried my best to customize according to requirement however I now come to deadend where `fastapi_users.get_users_router` includes multiple routes and we can't pick from one of the route or exlucde some of them.

In my case, I would like to change dependency of user patch route `PATCH /users/{id}` to change from superuser to authenticated user but I can't change that and also can't exclude that specific route while including router.

|

open

|

2024-11-15T08:29:48Z

|

2025-01-19T12:25:02Z

|

https://github.com/fastapi-users/fastapi-users/issues/1466

|

[

"bug"

] |

jd-solanki

| 5 |

Farama-Foundation/PettingZoo

|

api

| 480 |

[Proposal] Depend on OpenSpiel for Classic environment internals

|

OpenSpiels implementations of classic environments are all written in C and are dramatically faster than hours (and in some cases are better tested). They also have C implementations of most PettingZoo's classic environments. We would accordingly like to replace these with OpenSpiels over time.

|

closed

|

2021-09-11T21:36:07Z

|

2023-09-27T18:18:21Z

|

https://github.com/Farama-Foundation/PettingZoo/issues/480

|

[

"enhancement"

] |

jkterry1

| 2 |

pytest-dev/pytest-django

|

pytest

| 449 |

Fix building the docs on RTD

|

The building of the docs is currently broken: https://github.com/pytest-dev/pytest-django/pull/447#issuecomment-273681521

|

closed

|

2017-01-19T19:38:03Z

|

2017-01-28T23:16:56Z

|

https://github.com/pytest-dev/pytest-django/issues/449

|

[] |

blueyed

| 6 |

gradio-app/gradio

|

data-science

| 10,736 |

Nested functions with generic type annotations using python 3.12 syntax is not supported

|

### Describe the bug

The easiest way to explain this issue is with an example of a concrete failing function:

```python

def confirmation_harness[T](x: T) -> Callable[..., T]:

def _wrapped_fn() -> T:

return x

return _wrapped_fn

```

In this case gradio errors out with `NameError: name 'T' is not defined` because the occurrence of T in the inner function is not recognized.

A workaround is to use the old pre-3.12 way where `T` is defined as a stand alone variable like this:

````python

....

T = TypeVar('T')

def confirmation_harness(x: T) -> Callable[..., T]:

def _wrapped_fn() -> T:

return x

return _wrapped_fn

````

while this works it is not the recommended way of defining generics anymore, so I think the new way should be supported

### Have you searched existing issues? 🔎

- [x] I have searched and found no existing issues

### Reproduction

```python

import gradio as gr

```

### Screenshot

_No response_

### Logs

```shell

Traceback (most recent call last):

File "C:\Users\Jacki\repositories\ultimate-rvc\src\ultimate_rvc\web\main.py", line 452, in <module>

app = render_app()

^^^^^^^^^^^^

File "C:\Users\Jacki\repositories\ultimate-rvc\src\ultimate_rvc\web\main.py", line 403, in render_app

render_manage_audio_tab(

File "C:\Users\Jacki\repositories\ultimate-rvc\src\ultimate_rvc\web\tabs\manage\audio.py", line 294, in render

all_audio_click = all_audio_btn.click(

^^^^^^^^^^^^^^^^^^^^

File "C:\Users\Jacki\repositories\ultimate-rvc\uv\.venv\Lib\site-packages\gradio\events.py", line 670, in event_trigger

dep, dep_index = root_block.set_event_trigger(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\Jacki\repositories\ultimate-rvc\uv\.venv\Lib\site-packages\gradio\blocks.py", line 803, in set_event_trigger

check_function_inputs_match(fn, inputs, inputs_as_dict)

File "C:\Users\Jacki\repositories\ultimate-rvc\uv\.venv\Lib\site-packages\gradio\utils.py", line 1007, in check_function_inputs_match

parameter_types = get_type_hints(fn)

^^^^^^^^^^^^^^^^^^

File "C:\Users\Jacki\repositories\ultimate-rvc\uv\.venv\Lib\site-packages\gradio\utils.py", line 974, in get_type_hints

return typing.get_type_hints(fn)

^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\Jacki\AppData\Local\Programs\Python\Python312\Lib\typing.py", line 2310, in get_type_hints

hints[name] = _eval_type(value, globalns, localns, type_params)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\Jacki\AppData\Local\Programs\Python\Python312\Lib\typing.py", line 415, in _eval_type

return t._evaluate(globalns, localns, type_params, recursive_guard=recursive_guard)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\Jacki\AppData\Local\Programs\Python\Python312\Lib\typing.py", line 947, in _evaluate

eval(self.__forward_code__, globalns, localns),

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "<string>", line 1, in <module>

NameError: name 'T' is not defined

```

### System Info

```shell

windows 11

python 3.12

gradio 5.20.0

```

### Severity

I can work around it

|

open

|

2025-03-05T22:32:29Z

|

2025-03-05T22:32:29Z

|

https://github.com/gradio-app/gradio/issues/10736

|

[

"bug"

] |

JackismyShephard

| 0 |

huggingface/datasets

|

computer-vision

| 6,622 |

multi-GPU map does not work

|

### Describe the bug

Here is the code for single-GPU processing: https://pastebin.com/bfmEeK2y

Here is the code for multi-GPU processing: https://pastebin.com/gQ7i5AQy

Here is the video showing that the multi-GPU mapping does not work as expected (there are so many things wrong here, it's better to watch the 3-minute video than explain here):

https://youtu.be/RNbdPkSppc4

### Steps to reproduce the bug

-

### Expected behavior

-

### Environment info

x2 RTX A4000

|

closed

|

2024-01-27T20:06:08Z

|

2024-02-08T11:18:21Z

|

https://github.com/huggingface/datasets/issues/6622

|

[] |

kopyl

| 1 |

OpenInterpreter/open-interpreter

|

python

| 1,168 |

Improve mouse movement and OCR speed

|

### Is your feature request related to a problem? Please describe.

When selecting a button or text, the mouse moves very slowly. Like in pyautogui, you can increase the speed to about 0.1seconds.

OI doesn't allow it which I'm aware of.

Also for OCR, It takes a long time before I can select a button or field.

I'm currently automating the generation of coupons on Kajabi. I need it to be faster than my employee

Also, how does OI decide which text to click on in the case when there are 2 or more texts that have the same text. For example, a button that says 'coupon' versus a label that says 'coupon'.

### Describe the solution you'd like

Have a duration field for mouse movement and click to improve the mouse movement speed

Improve screen-shot+ OCR speed. - To speed it up, we could in python specify the approximate location Region of interest, rather than screen capture the entire screen then run OCR.

### Describe alternatives you've considered

_No response_

### Additional context

_No response_

|

open

|

2024-04-03T14:37:20Z

|

2024-04-03T14:37:20Z

|

https://github.com/OpenInterpreter/open-interpreter/issues/1168

|

[] |

augmentedstartups

| 0 |

CorentinJ/Real-Time-Voice-Cloning

|

deep-learning

| 613 |

is there a way to configure it so that, rather than differentiate voices by speakers, it creates a composite voice trained on a few different speakers?

|

ive spent the last week or so having a ton of fun with this project and ive noticed that if you continuously train the encoder on one speaker it will tend to make any future attempts at voice cloning sound closer to the original voice it was trained on.

im a brainless monkey though and have no idea what im doing so im wondering if anyone else here has attempted this.

|

closed

|

2020-12-04T00:04:48Z

|

2020-12-05T08:03:04Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/613

|

[] |

austin23cook

| 1 |

collerek/ormar

|

pydantic

| 415 |

Database transaction is not rollbacked

|

```python

async with transponder_.Meta.database.transaction():

sub_ = await Fusion.objects.create(

...

)

raise Exception("hello")

```

After the above code is executed, I still create a Fusion record in mysql

|

closed

|

2021-11-08T06:33:52Z

|

2021-11-15T11:47:18Z

|

https://github.com/collerek/ormar/issues/415

|

[

"bug"

] |

tufbel

| 1 |

matplotlib/matplotlib

|

data-visualization

| 28,794 |

[Doc]: Frame grabbing docs outdated?

|

### Documentation Link

https://matplotlib.org/stable/gallery/animation/frame_grabbing_sgskip.html

### Problem

An API part that used to accept scalars now expects a sequence.

Running:

``` python

import numpy as np

import matplotlib

matplotlib.use("Agg")

import matplotlib.pyplot as plt

from matplotlib.animation import FFMpegWriter

# Fixing random state for reproducibility

np.random.seed(19680801)

metadata = dict(title='Movie Test', artist='Matplotlib',

comment='Movie support!')

writer = FFMpegWriter(fps=15, metadata=metadata)

fig = plt.figure()

l, = plt.plot([], [], 'k-o')

plt.xlim(-5, 5)

plt.ylim(-5, 5)

x0, y0 = 0, 0

with writer.saving(fig, "writer_test.mp4", 100):

for i in range(100):

x0 += 0.1 * np.random.randn()

y0 += 0.1 * np.random.randn()

l.set_data(x0, y0)

writer.grab_frame()

```

from https://matplotlib.org/stable/gallery/animation/frame_grabbing_sgskip.html

Used to work, but now it produces:

``` bash

RuntimeError Traceback (most recent call last)

Cell In[1], line 30

28 x0 += 0.1 * np.random.randn()

29 y0 += 0.1 * np.random.randn()

---> 30 l.set_data(x0, y0)

31 writer.grab_frame()

File ~/miniconda3/envs/pytorch/lib/python3.12/site-packages/matplotlib/lines.py:665, in Line2D.set_data(self, *args)

662 else:

663 x, y = args

--> 665 self.set_xdata(x)

666 self.set_ydata(y)

File ~/miniconda3/envs/pytorch/lib/python3.12/site-packages/matplotlib/lines.py:1289, in Line2D.set_xdata(self, x)

1276 """

1277 Set the data array for x.

1278

(...)

1286 set_ydata

1287 """

1288 if not np.iterable(x):

-> 1289 raise RuntimeError('x must be a sequence')

1290 self._xorig = copy.copy(x)

1291 self._invalidx = True

RuntimeError: x must be a sequence

```

Which was not a problem in some previous versions.

### Suggested improvement

* We should update the docs with an example illustrating how people should use the new implementation of `FFMpegWriter` .

|

closed

|

2024-09-09T13:34:51Z

|

2024-09-12T09:29:50Z

|

https://github.com/matplotlib/matplotlib/issues/28794

|

[

"Documentation"

] |

v0lta

| 9 |

Lightning-AI/pytorch-lightning

|

pytorch

| 20,603 |

Progress bar is broken when loading trainer state from checkpoint

|

### Bug description

I am using lightning in conjunction with the mosaicML streaming library, which allows for stateful dataloaders for resumption of mid-epoch training. I am therefor passing in train/validation dataloaders manually to the trainer, as opposed to a datamodule. That said, as I am also looking to resume with optimizer state etc., I also pass in the checkpoint. Therefor my training is run as:

```

trainer.fit(

model=lightning_model,

train_dataloaders=train_dataloader,

val_dataloaders=validation_dataloader,

ckpt_path=args.ckpt

)

```

Note that at this stage, if resuming, I have already loaded my dataloader and updated with their state dict.

I have confirmed that the dataloader is still returning len(dataloader) correctly, indicating exactly how many steps are in the epoch.

But, when calling with resume logic, for example resuming from step n. 25 I will see the following in progress bar:

`25/?`

So, it seems that the trainer has (correctly) deduced that the checkpoint is resuming from a global step of 25, but is not calling len(dataloader) anymore to verify how many steps remain.

### What version are you seeing the problem on?

v2.5

### How to reproduce the bug

```python

```

### Error messages and logs

_No response_

### Environment

_No response_

### More info

_No response_

|

open

|

2025-02-25T20:41:58Z

|

2025-02-25T20:42:11Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/20603

|

[

"bug",

"needs triage",

"ver: 2.5.x"

] |

JLenzy

| 0 |

tensorpack/tensorpack

|

tensorflow

| 673 |

Infinite loop with MultiProcessMapDataZMQ

|

There is an infinite loop with the following code:

```

from tensorpack.dataflow import MultiProcessMapDataZMQ, FakeData

ds = FakeData(((50, 50, 3), (1,)))

import numpy as np

def proc(dp):

img = np.random.randint(0, 255, size=(1012, 1012, 3))

img[img < 120] = 0

return dp

mp_ds = MultiProcessMapDataZMQ(ds, map_func=proc, nr_proc=10, strict=True)

mp_ds.reset_state()

for i, (_, _) in enumerate(mp_ds.get_data()):

if i % 100 == 0:

print(i, end=" . ", flush=True)

```

Important part is `strict=True` and small size of `FakeData`. If change size to (512, 512, 3), then loop ends properly. Probably, there is something with buffer filling and dequeing...

Execution stucks [here](https://github.com/ppwwyyxx/tensorpack/blob/master/tensorpack/dataflow/parallel_map.py#L81).

|

closed

|

2018-02-25T22:58:10Z

|

2018-05-30T20:59:38Z

|

https://github.com/tensorpack/tensorpack/issues/673

|

[

"bug"

] |

vfdev-5

| 11 |

dask/dask

|

scikit-learn

| 11,016 |

Minimal dd.to_datetime to convert a string column no longer works

|

**Describe the issue**:

I feel like I must be do something wrong as this seems like a fairly simple example but I *think* it's an actual bug? (appologies if there's something really obvious in the below example that I'm missing)

**Minimal Complete Verifiable Example**:

```python

import dask.dataframe as dd

df = dd.from_dict(

{

"a": [1, 2, 3],

"dt": [

"2023-01-04T00:00:00",

"2023-04-02T00:00:00",

"2023-01-01T00:00:00",

],

},

npartitions=1,

)

dd.to_datetime(

df["dt"],

format="%Y-%m-%dT%H:%M:%S",

) # <- this throws a ValueError

```

Full error stack:

```

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/ben/Documents/dask-issue/.venv/lib/python3.10/site-packages/dask_expr/_collection.py", line 427, in __repr__

return _str_fmt.format(

File "/home/ben/Documents/dask-issue/.venv/lib/python3.10/site-packages/dask_expr/_core.py", line 71, in __str__

s = ", ".join(

File "/home/ben/Documents/dask-issue/.venv/lib/python3.10/site-packages/dask_expr/_core.py", line 74, in <genexpr>

if isinstance(operand, Expr) or operand != self._defaults.get(param)

File "/home/ben/Documents/dask-issue/.venv/lib/python3.10/site-packages/pandas/core/generic.py", line 1576, in __nonzero__

raise ValueError(

ValueError: The truth value of a Series is ambiguous. Use a.empty, a.bool(), a.item(), a.any() or a.all().

>>> dd.to_datetime(df["dt"], format="%Y-%m-%dT%H:%M:%S")

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/ben/Documents/dask-issue/.venv/lib/python3.10/site-packages/dask_expr/_collection.py", line 427, in __repr__

return _str_fmt.format(

File "/home/ben/Documents/dask-issue/.venv/lib/python3.10/site-packages/dask_expr/_core.py", line 71, in __str__

s = ", ".join(

File "/home/ben/Documents/dask-issue/.venv/lib/python3.10/site-packages/dask_expr/_core.py", line 74, in <genexpr>

if isinstance(operand, Expr) or operand != self._defaults.get(param)

File "/home/ben/Documents/dask-issue/.venv/lib/python3.10/site-packages/pandas/core/generic.py", line 1576, in __nonzero__

raise ValueError(

ValueError: the truth value of a Series is ambiguous. Use a.empty, a.bool(), a.item(), a.any() or a.all().

```

**Environment**:

Dask version: 2024.3.1

Python version: 3.10.13

Operating System: Linux (Ubuntu)

Install method (conda, pip, source): pip

|

closed

|

2024-03-22T13:41:28Z

|

2024-03-22T17:00:02Z

|

https://github.com/dask/dask/issues/11016

|

[

"needs triage"

] |

benrutter

| 0 |

MaartenGr/BERTopic

|

nlp

| 1,893 |

list index out of range error in topic reduction

|

I am using the following code for topic modelling with bertopic version 0.16.0 and getting list index out of range error in topic reduction

|

open

|

2024-03-28T00:32:19Z

|

2024-03-29T07:27:42Z

|

https://github.com/MaartenGr/BERTopic/issues/1893

|

[] |

akalra03

| 1 |

errbotio/errbot

|

automation

| 1,298 |

Plugin config through chat cannot contain a line break after the plugin name

|

### I am...

* [x] Reporting a bug

* [ ] Suggesting a new feature

* [ ] Requesting help with running my bot

* [ ] Requesting help writing plugins

* [ ] Here about something else

### I am running...

* Errbot version: 5.1.1

* OS version: Ubuntu 18.04.1

* Python version: 3.6.6

* Using a virtual environment: yes/no

### Issue description

When running `!plugin config <plugin-name>`, you get a help message:

>Default configuration for this plugin (you can copy and paste this directly as a command):

When configuring a plugin through chat (Slack backend), there can't be a line break after `!plugin config <plugin-name>`. This means that, because it contains a line break, you cannot copy and paste the default configuration template as the message suggests. If you put the configuration dict on the same line, all works fine; if you put the config on new line, you get:

>Unknown plugin or the plugin could not load \<plugin-name\>.

### Steps to reproduce

Configure a plugin from chat using the Slack backend (I'm not sure if applies to all backends), making sure to include a line break between the plugin name and config dict, like:

```

!plugin config foobar

{...}

```

You will get an error: `Unknown plugin or the plugin could not load <plugin-name>`.

Now remove the line break and try configuring again:

```

!plugin config foobar {...}

```

The configuration will succeed.

|

open

|

2019-02-26T14:54:38Z

|

2019-06-18T06:10:18Z

|

https://github.com/errbotio/errbot/issues/1298

|

[

"type: bug"

] |

sheluchin

| 0 |

graphistry/pygraphistry

|

pandas

| 202 |

[BUG] as_files consistency checking

|

When using `as_files=True`:

* should compare settings

* should check for use-after-delete

|

open

|

2021-01-27T01:07:27Z

|

2021-07-16T19:47:53Z

|

https://github.com/graphistry/pygraphistry/issues/202

|

[

"bug"

] |

lmeyerov

| 1 |

postmanlabs/httpbin

|

api

| 47 |

Create a "Last-Modified" endpoint

|

Create an endpoint that allows you to specify a date/time, and will respond with a `Last-Modified` header containing that date.

If the request is sent with an `If-Modified-Since` header, a `304 Not Modified` response should be returned.

Does this sound like something that could be useful?

|

closed

|

2012-05-18T15:18:37Z

|

2018-04-26T17:50:56Z

|

https://github.com/postmanlabs/httpbin/issues/47

|

[

"feature-request"

] |

johtso

| 0 |

babysor/MockingBird

|

pytorch

| 183 |

用colab和谷歌云服务器预处理时,出现了AssertionError

|

~/MockingBird$ python pre.py dateAISHELL3 -d aishell3

Traceback (most recent call last):

File "pre.py", line 57, in <module>

assert args.datasets_root.exists()

AssertionError

colab和谷歌云都出现了相同的错误,版本都是用git clone 抓取的最新版

|

closed

|

2021-10-31T10:03:31Z

|

2021-12-26T03:23:50Z

|

https://github.com/babysor/MockingBird/issues/183

|

[] |

kulu2001

| 2 |

bmoscon/cryptofeed

|

asyncio

| 814 |

Add Kucoin L3 feed support

|

Kucoin supports L3 feed according to docs https://github.com/Kucoin-academy/best-practice/blob/master/README_EN.md

Would be nice to get it as not many exchanges support L3 data

|

closed

|

2022-03-28T17:57:52Z

|

2022-03-28T19:09:46Z

|

https://github.com/bmoscon/cryptofeed/issues/814

|

[

"Feature Request"

] |

anovv

| 1 |

gevent/gevent

|

asyncio

| 1,792 |

arm64 crossompile error

|

checking build system type... x86_64-pc-linux-gnu

checking host system type... Invalid configuration `aarch64-openwrt-linux': machine `aarch64-openwrt' not recognized

is there any solution from cross compilation ?

|

closed

|

2021-05-20T12:55:12Z

|

2021-05-21T11:23:33Z

|

https://github.com/gevent/gevent/issues/1792

|

[] |

erdoukki

| 5 |

allenai/allennlp

|

nlp

| 4,739 |

Potential bug: The maxpool in cnn_encoder can be triggered by pad tokens.

|

## Description

When using a text_field_embedder -> cnn_encoder (without seq2seq_encoder), the output of the embedder (and mask) get fed directly into the cnn_encoder. The pad tokens will get masked (set to 0), but it's still possible that after applying the mask followed by the CNN, the PAD tokens are those with highest activations. This could lead to the same exact datapoint getting different predictions if's part of a batch vs single prediction.

## Related issues or possible duplicates

- None

## Environment

OS: NA

Python version: NA

## Steps to reproduce

This can be reproduced by replacing

https://github.com/allenai/allennlp/blob/00bb6c59b3ac8fdc78dfe8d5b9b645ce8ed085c0/allennlp/modules/seq2vec_encoders/cnn_encoder.py#L113

```

filter_outputs.append(self._activation(convolution_layer(tokens)).max(dim=2)[0])

```

with

```

activated_outputs, max_indices = self._activation(convolution_layer(tokens)).max(dim=2)

```

and checking the indices for the same example inside of a batch vs unpadded.

## Possible solution:

We could resolve this by adding a large negative value to all CNN outputs for masked tokens, similarly to what they do in the transformers library (https://github.com/huggingface/transformers/issues/542, https://github.com/huggingface/transformers/blob/c912ba5f69a47396244c64deada5c2b8a258e2b8/src/transformers/modeling_bert.py#L262), but I have not been able to figure out how to do this efficiently.

|

closed

|

2020-10-19T23:30:31Z

|

2020-11-05T23:50:04Z

|

https://github.com/allenai/allennlp/issues/4739

|

[

"bug"

] |

MichalMalyska

| 6 |

JaidedAI/EasyOCR

|

machine-learning

| 377 |

How to train on custom data set?

|

Please reply.

Thanks in advance.

|

closed

|

2021-02-16T08:44:08Z

|

2021-07-02T08:54:30Z

|

https://github.com/JaidedAI/EasyOCR/issues/377

|

[] |

fahimnawaz7

| 1 |

inducer/pudb

|

pytest

| 477 |

Console history across sessions

|

Is it possible to record the python console history when in the debugger, so as to make it accessible accross sessions?

So if it run pudb once and input `a + b` and then restart the debugger in a new session I can press Ctrl + P and find `a + b` there?

I currently use my own fork of ipdb with certain modifications to make this happen (since ipdb as of right now does not support history across sessions), but I find pudb to be a lot nicer. So I was wondering if the addition of this functionality is being considered?

Thanks in advance for your help!!

|

closed

|

2021-10-22T12:40:10Z

|

2021-11-02T21:42:30Z

|

https://github.com/inducer/pudb/issues/477

|

[] |

dvd42

| 4 |

kizniche/Mycodo

|

automation

| 982 |

Atlas Gravity Analog pH Sensor Calibration

|

Hi Kyle

Huge fan.

Is it possible to calibrate or add calibration for the Atlas Analog probes?

Regards

Todd

|

closed

|

2021-04-15T14:58:05Z

|

2021-04-15T15:03:09Z

|

https://github.com/kizniche/Mycodo/issues/982

|

[] |

cactuscrawford

| 2 |

plotly/dash

|

flask

| 3,004 |

dropdowns ignore values when options are not set

|

Thank you so much for helping improve the quality of Dash!

We do our best to catch bugs during the release process, but we rely on your help to find the ones that slip through.

**Describe your context**

dash==2.18.1

dash-bootstrap-components==1.6.0

dash-extensions==1.0.15

dash-cytoscape==1.0.1

plotly==5.24.1

- replace the result of `pip list | grep dash` below

```

dash 2.18.1

dash-bootstrap-components 1.6.0

dash-core-components 2.0.0

dash_cytoscape 1.0.1

dash_dangerously_set_inner_html 0.0.2

dash-extensions 1.0.15

dash-html-components 2.0.0

dash-leaflet 1.0.15

dash-mantine-components 0.12.1

dash-quill 0.0.4

dash-svg 0.0.12

dash-table 5.0.0

```

- if frontend related, tell us your Browser, Version and OS

- OS: Windows

- Browser: Edge

- Version: 128.0.2739.67

**Describe the bug**

On my dashboard I use dropdowns where the options are dynamically updated based on the underlying dataset. In order to facilitate quick links throughout the site, I use URL params to pre-set the dropdowns to certain values.

It has been a very simple solution for me for years now.

Up to dash 2.16.1, this all works fine. On any higher versions the pre-set value is ignored.

**Expected behavior**

I am able to set the value of a dropdown without an options set, and once the options are updated, the pre-set value shows up as selected if it is part of the options set.

**Minimal Example***

```

from dash import Dash, dcc, html, Input, Output, callback

preset_value = 'NYC' # from url params

app = Dash(__name__)

app.layout = html.Div([

dcc.Store(id='demo-store'),

dcc.Interval(id='demo-interval', interval=1000, n_intervals=0),

dcc.Dropdown(

value=preset_value,

options=[],

id='demo-dropdown'

),

])

@callback(

Output('demo-store', 'data'),

Input('demo-interval', 'n_intervals')

)

def update_output(n):

return [{'label': i, 'value': i} for i in ['NYC', 'MTL', 'LA']]

@callback(

Output('demo-dropdown', 'options'),

Input('demo-store', 'data')

)

def update_output(data):

return data

if __name__ == '__main__':

app.run(debug=True)

```

|

closed

|

2024-09-15T10:28:32Z

|

2024-09-17T12:18:57Z

|

https://github.com/plotly/dash/issues/3004

|

[

"bug",

"P3"

] |

areed145

| 2 |

FujiwaraChoki/MoneyPrinterV2

|

automation

| 33 |

Macbook cannot find .model.js

|

When I run the project using my MacBook, I found that the output address was [/MoneyPrinterV2/venv**\\**Lib**\\**site-packages/TTS/.models.json] ,this Path is not supported in Mac, so I can't locate the real address.

This piece of code might need some adjustments.

```

# Path to the .models.json file

models_json_path = os.path.join(

ROOT_DIR,

venv_site_packages,

"TTS",

".models.json",

)

```

|

closed

|

2024-02-22T06:46:30Z

|

2024-02-22T09:05:13Z

|

https://github.com/FujiwaraChoki/MoneyPrinterV2/issues/33

|

[] |

NicholasChong

| 1 |

TvoroG/pytest-lazy-fixture

|

pytest

| 63 |

Inquiry about the status of pytest-lazy-fixture and offering assistance

|

Hey @TvoroG,

I hope you're doing well. I really find pytest-lazy-fixture a great pytest plugin, but I noticed that it hasn't had a release since 2020, and there are some open issues and pull requests that seem to be unattended.

For this reason, I wonder if the project is abandoned, or if it's still supported.

Specifically, I think that https://github.com/TvoroG/pytest-lazy-fixture/pull/62 is really something worth considering and that would make the project even more useful. I use that approach in pytest-factoryboy, but this project is way more advanced in the way it handles the pytest internals for lazy fixtures.

As an active user and maintainer of pytest-bdd and pytest-factoryboy, I would be interested in contributing to the project's maintenance and further development, including reviewing PRs, keeping the CI up to date with the latest pytests and python versions, making releases, etc.

Would that be something you can consider?

Thanks for your time, and I'm looking forward to your response.

Best regards,

Alessio

|

open

|

2023-07-30T10:19:40Z

|

2025-03-10T16:04:07Z

|

https://github.com/TvoroG/pytest-lazy-fixture/issues/63

|

[] |

youtux

| 10 |

aiortc/aiortc

|

asyncio

| 232 |

Using STUN and TURN Server at the same time

|

Hi,

I am currently trying to define add a stun and a turn server to my configuration, but it seems like it is using only the turn server:

```

pc = RTCPeerConnection(

configuration=RTCConfiguration([

RTCIceServer("stun:stun.l.google:19302"),

RTCIceServer("turn:turnserver.cidaas.de:3478?transport=udp", "user", "pw"),

]))

```

When I am behind a firewall which is blocking the turn server ports I receive the following error:

```

Error handling request

Traceback (most recent call last):

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aiohttp/web_protocol.py", line 418, in start

resp = await task

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aiohttp/web_app.py", line 458, in _handle

resp = await handler(request)

File "/home/tanja/git/cidaas/id-card-utility-backend/server.py", line 104, in offer

await pc.setLocalDescription(answer)

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aiortc/rtcpeerconnection.py", line 666, in setLocalDescription

await self.__gather()

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aiortc/rtcpeerconnection.py", line 865, in __gather

await asyncio.gather(*coros)

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aiortc/rtcicetransport.py", line 174, in gather

await self._connection.gather_candidates()

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aioice/ice.py", line 362, in gather_candidates

addresses=addresses)

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aioice/ice.py", line 749, in get_component_candidates

transport=self.turn_transport)

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aioice/turn.py", line 301, in create_turn_endpoint

await transport._connect()

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aioice/turn.py", line 272, in _connect

self.__relayed_address = await self.__inner_protocol.connect()

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aioice/turn.py", line 80, in connect

response, _ = await self.request(request)

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aioice/turn.py", line 173, in request

return await transaction.run()

File "/home/tanja/venvs/cidaas/id-services-aggregator/lib/python3.7/site-packages/aioice/stun.py", line 250, in run

return await self.__future

aioice.exceptions.TransactionTimeout: STUN transaction timed out

```

when using the following configuration it works:

```

pc = RTCPeerConnection(

configuration=RTCConfiguration([

RTCIceServer("stun:stun.l.google:19302"),

]))

```

But when I use a mobile device using the configuration from above (turn + stun) it works (as the turn server connection is not blocked)

Any idea why it is not possible to use either stun or turn server, depending on which one is possible to use. Or is that expected behavior, if yes, what is the purpos of allowing muliple Server configuration? Shouldn't it give some kind of warning, that stun will not be used?

Or am I missing out some important config parameters?

Best Regards,

Tanja

|

closed

|

2019-12-03T16:06:16Z

|

2019-12-04T09:14:41Z

|

https://github.com/aiortc/aiortc/issues/232

|

[] |

TanjaBayer

| 4 |

deepinsight/insightface

|

pytorch

| 1,812 |

DeprecationWarning : np.float` is a deprecated alias for the builtin `float`.

|

`DeprecationWarning: `np.float` is a deprecated alias for the builtin `float`. To silence this warning, use `float` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.float64` here.

Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

eps=np.finfo(np.float).eps, random_state=None,`

|

open

|

2021-11-03T07:30:15Z

|

2021-11-03T07:30:15Z

|

https://github.com/deepinsight/insightface/issues/1812

|

[] |

HenryBao91

| 0 |

Avaiga/taipy

|

data-visualization

| 1,826 |

Customized labels for Boolean values in tables

|

### Description

Tables represent Boolean values with a switch component.

The labels are True and False, which makes a lot of sense.

It would be nice to allow for specifying these labels using a control's property.

I can think of 0/1, Off/On, Bad/Good, Male/Female, Disagree/Agree... and plenty of use cases where the UI would help providing semantic support.

### Acceptance Criteria

- [ ] Ensure new code is unit tested, and check code coverage is at least 90%.

- [ ] Create related issue in taipy-doc for documentation and Release Notes.

- [ ] Check if a new demo could be provided based on this, or if legacy demos could be benefit from it.

- [ ] Ensure any change is well documented.

### Code of Conduct

- [X] I have checked the [existing issues](https://github.com/Avaiga/taipy/issues?q=is%3Aissue+).

- [ ] I am willing to work on this issue (optional)

|

closed

|

2024-09-23T15:06:05Z

|

2024-09-24T12:03:10Z

|

https://github.com/Avaiga/taipy/issues/1826

|

[

"🖰 GUI",

"🟨 Priority: Medium",

"✨New feature"

] |

FabienLelaquais

| 0 |

TracecatHQ/tracecat

|

automation

| 653 |

No error handeling in env.sh if openssl is not installed

|

**Lacking error handeling**

A clean fedora 41 workstation install does not have openssl installed.

As the env.sh script assumes this is available and does not check you will get the error message:

_Generating new service key and signing secret...

./env.sh: line 60: openssl: command not found

./env.sh: line 61: openssl: command not found

Generating a Fernet encryption key for the database...

Creating new .env from .env.example..._

**To Reproduce**

Install clean _Fedora-Workstation-Live-x86_64-41-1.4.iso_.

Follow [Docker Compose](https://docs.tracecat.com/self-hosting/deployment-options/docker-compose)

Notice when running script you will get error message command not found.

**Expected behavior**

A clear error message and canceled creation of the .env file.

**Screenshots**

If applicable, add screenshots to help explain your problem.

**Environment (please complete the following information):**

- OS: Fedora Workstation 41 x86.

|

closed

|

2024-12-21T23:56:30Z

|

2024-12-27T00:28:29Z

|

https://github.com/TracecatHQ/tracecat/issues/653

|

[

"bug",

"documentation",

"build"

] |

FestHesten

| 0 |

Evil0ctal/Douyin_TikTok_Download_API

|

api

| 550 |

现在获取单个作品数据接口不行了吗

|

我本地测试报错,Cookie和User-Agent换过

报错信息如下

|

closed

|

2025-02-12T06:28:51Z

|

2025-02-14T02:41:17Z

|

https://github.com/Evil0ctal/Douyin_TikTok_Download_API/issues/550

|

[] |

lao-wen

| 4 |

junyanz/pytorch-CycleGAN-and-pix2pix

|

computer-vision

| 1,044 |

Will training the discriminator model alone help improve my model performance?

|

Currently, I'm doing a task of image to image translation with the following dataset using pix2pix model. The task is to enhance the contrast between the text. I have very less such images and its label pairs. I am getting decent results with 300 manually annotated images. But it is not feasible to generate more image pairs.

Also it is very difficult to create the image pairs. I have created two more different datasets from the above large original image.

**Pixel wise paired dataset**

I have cropped various characters present in the image dataset and created pairs.

**Word pair dataset**

I trained with all three datasets together. It was worsening the performance compared to model trained with full image dataset.

Is it possible to train only the discriminator with the pixelwise and word wise dataset and train the generator and discriminator with the full image dataset?

What do you think will improve the performance in my case? Thanks in advance.

|

closed

|

2020-05-25T10:22:34Z

|

2020-06-01T10:37:42Z

|

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/1044

|

[] |

kalai2033

| 6 |

pytorch/vision

|

computer-vision

| 8,820 |

Feature extraction blocks

|

deleted!

|

closed

|

2024-12-20T13:19:01Z

|

2025-01-09T18:21:15Z

|

https://github.com/pytorch/vision/issues/8820

|

[] |

jawi289o

| 1 |

RobertCraigie/prisma-client-py

|

asyncio

| 257 |

Support for using within a standalone package

|

## Problem

<!-- A clear and concise description of what the problem is. Ex. I'm always frustrated when [...] -->

It is difficult to use Prisma Client Python in a CLI that will be installed by users as the generation step needs to be ran.

## Suggested solution

<!-- A clear and concise description of what you want to happen. -->

Provide a helper method for running a prisma command and reloading the generated modules, e.g.

```py

import prisma

# from prisma import Client would raise an ImportError here

prisma.run('db', 'push')

from prisma import Client

```

However you would not want to run this every time, we should provide mechanisms for running the command based on a condition. A potential problem with this is managing versions, if you update the schema and release a new version, anyone already using the old version wouldn't have their database updated. A potential solution for this could be hashing the schema and comparing hashes.

|

open

|

2022-01-30T21:45:55Z

|

2022-02-01T18:20:14Z

|

https://github.com/RobertCraigie/prisma-client-py/issues/257

|

[

"kind/feature",

"priority/medium",

"level/unknown"

] |

RobertCraigie

| 0 |

jupyter/nbgrader

|

jupyter

| 942 |

Jupyter/nbgrader learning analytics dashboard?

|

<!--

Thanks for helping to improve nbgrader!

If you are submitting a bug report or looking for support, please use the below

template so we can efficiently solve the problem.

If you are requesting a new feature, feel free to remove irrelevant pieces of

the issue template.

-->

I am curious as to whether there exists, or there are thoughts to develop, a learning analytics dashboard for use with Jupyter and nbgrader in the classroom? For example, if you have students working on a specific notebook during a class/tutorial that has several questions - could one probe how many questions students had answered and, if those questions were autogradable, what the mean/median grades were. Perhaps there is an existing tool in the Jupyter ecosystem but I have not yet come across one (and if there is, please point me in that direction).

If this may be possible and but does not yet exist, I would be more than happy to contribute to the creation of such a tool.

|

open

|

2018-03-14T22:49:01Z

|

2018-04-22T11:28:00Z

|

https://github.com/jupyter/nbgrader/issues/942

|

[

"enhancement"

] |

ttimbers

| 1 |

amidaware/tacticalrmm

|

django

| 1,461 |

Entering a comma in the hours number in the download agent dialog generates an error

|

**Server Info (please complete the following information):**

- OS: Ubuntu 20.04.4 LTS

- Browser: Firefox 110.0 (64-bit)

- RMM Version (as shown in top left of web UI): v0.15.7

**Installation Method:**

- [x] Standard

- [ ] Docker

**Agent Info (please complete the following information):**

- Agent version (as shown in the 'Summary' tab of the agent from web UI): v2.4.4

- Agent OS: Windows 10 Pro, 64 bit v21H2 (build 19044.2728)

**Describe the bug**

The "time expiration (hours)" field expects a number but does not accept numbers with a comma.

**To Reproduce**

Steps to reproduce the behavior:

1. Go to Agents > Install Agent

2. Enter a number with a comma in the token expiration field.

3. Click "Generate and Download exe" and you'll get an error.

**Expected behavior**

I would expect the system to accept numbers with commas. Ideally, this should also be locale aware with the possibility of accepting periods instead of commas.

**Screenshots**

N/A

**Additional context**

The POST request does not have the token value. This implies the frontend is invalidating the input.

POST sent to `https://api.a8n.tools/agents/installer/`:

```json

{

"agenttype": "workstation",

"api": "https://api.a8n.tools",

"client": 6,

"expires": "",

"fileName": "trmm-help-onboarding-workstation-amd64.exe",

"goarch": "amd64",

"installMethod": "exe",

"ping": 0,

"plat": "windows",

"power": 0,

"rdp": 0,

"site": 9

}

```

Stack trace from `/rmm/api/tacticalrmm/tacticalrmm/private/log/django_debug.log`:

```text

[23/Mar/2023 18:57:25] ERROR [django.request:241] Internal Server Error: /agents/installer/

Traceback (most recent call last):

File "/rmm/api/env/lib/python3.10/site-packages/django/core/handlers/exception.py", line 55, in inner

response = get_response(request)

File "/rmm/api/env/lib/python3.10/site-packages/django/core/handlers/base.py", line 197, in _get_response

response = wrapped_callback(request, *callback_args, **callback_kwargs)

File "/rmm/api/env/lib/python3.10/site-packages/django/views/decorators/csrf.py", line 54, in wrapped_view

return view_func(*args, **kwargs)

File "/rmm/api/env/lib/python3.10/site-packages/django/views/generic/base.py", line 103, in view

return self.dispatch(request, *args, **kwargs)

File "/rmm/api/env/lib/python3.10/site-packages/rest_framework/views.py", line 509, in dispatch

response = self.handle_exception(exc)

File "/rmm/api/env/lib/python3.10/site-packages/rest_framework/views.py", line 469, in handle_exception

self.raise_uncaught_exception(exc)

File "/rmm/api/env/lib/python3.10/site-packages/rest_framework/views.py", line 480, in raise_uncaught_exception

raise exc

File "/rmm/api/env/lib/python3.10/site-packages/rest_framework/views.py", line 506, in dispatch

response = handler(request, *args, **kwargs)

File "/rmm/api/env/lib/python3.10/site-packages/rest_framework/decorators.py", line 50, in handler

return func(*args, **kwargs)

File "/rmm/api/tacticalrmm/agents/views.py", line 563, in install_agent

user=installer_user, expiry=dt.timedelta(hours=request.data["expires"])

TypeError: unsupported type for timedelta hours component: str

```

|

closed

|

2023-03-23T19:11:24Z

|

2023-03-23T19:44:14Z

|

https://github.com/amidaware/tacticalrmm/issues/1461

|

[] |

NiceGuyIT

| 1 |

microsoft/MMdnn

|

tensorflow

| 420 |

I tried to convert tf frozen model to ir, but it failed.

|

Platform (like ubuntu 16.04/win10):

docker image mmdnn/mmdnn:cpu.small

Python version:

python 3.5

Source framework with version (like Tensorflow 1.4.1 with GPU):

tf 1.7 with GPU optimized by tensorRT, not very sure the version of tf

Destination framework with version (like CNTK 2.3 with GPU):

I want to convert the frozen pb to ir first, then convert it to saved model for tensorflow serving

Pre-trained model path (webpath or webdisk path):

https://developer.download.nvidia.com/devblogs/tftrt_sample.tar.xz

Running scripts:

```

mmtoir -f tensorflow --frozen_pb resnetV150_TRTFP32.pb --inNodeName input --inputShape 224 224 3 --dstNodeName dstNode -o resnet150-ir

```

> Traceback (most recent call last):

File "/usr/local/bin/mmtoir", line 11, in <module>

sys.exit(_main())

File "/usr/local/lib/python3.5/dist-packages/mmdnn/conversion/_script/convertToIR.py", line 184, in _main

ret = _convert(args)

File "/usr/local/lib/python3.5/dist-packages/mmdnn/conversion/_script/convertToIR.py", line 48, in _convert

parser = TensorflowParser2(args.frozen_pb, args.inputShape, args.inNodeName, args.dstNodeName)

File "/usr/local/lib/python3.5/dist-packages/mmdnn/conversion/tensorflow/tensorflow_frozenparser.py", line 108, in __init__

placeholder_type_enum = dtypes.float32.as_datatype_enum)

File "/usr/local/lib/python3.5/dist-packages/tensorflow/python/tools/strip_unused_lib.py", line 86, in strip_unused

output_node_names)

File "/usr/local/lib/python3.5/dist-packages/tensorflow/python/framework/graph_util_impl.py", line 174, in extract_sub_graph

_assert_nodes_are_present(name_to_node, dest_nodes)

File "/usr/local/lib/python3.5/dist-packages/tensorflow/python/framework/graph_util_impl.py", line 133, in _assert_nodes_are_present

assert d in name_to_node, "%s is not in graph" % d

AssertionError: dstNode is not in graph

Any ideas? Or does mmdnn support converting tensorRT optimized tf frozen model?

Thanks.

|

closed

|

2018-09-20T02:22:23Z

|

2018-09-25T08:20:11Z

|

https://github.com/microsoft/MMdnn/issues/420

|

[] |

elvys-zhang

| 7 |

yt-dlp/yt-dlp

|

python

| 11,875 |

support for 360research / motilaloswal.com

|

### DO NOT REMOVE OR SKIP THE ISSUE TEMPLATE

- [X] I understand that I will be **blocked** if I *intentionally* remove or skip any mandatory\* field

### Checklist

- [X] I'm reporting a new site support request

- [X] I've verified that I have **updated yt-dlp to nightly or master** ([update instructions](https://github.com/yt-dlp/yt-dlp#update-channels))

- [X] I've checked that all provided URLs are playable in a browser with the same IP and same login details

- [X] I've checked that none of provided URLs [violate any copyrights](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#is-the-website-primarily-used-for-piracy) or contain any [DRM](https://en.wikipedia.org/wiki/Digital_rights_management) to the best of my knowledge

- [X] I've searched [known issues](https://github.com/yt-dlp/yt-dlp/issues/3766) and the [bugtracker](https://github.com/yt-dlp/yt-dlp/issues?q=) for similar issues **including closed ones**. DO NOT post duplicates

- [X] I've read the [guidelines for opening an issue](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#opening-an-issue)

- [X] I've read about [sharing account credentials](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#are-you-willing-to-share-account-details-if-needed) and am willing to share it if required

### Region

_No response_

### Example URLs

https://prd.motilaloswal.com/edumo/videos/webinar/154

### Provide a description that is worded well enough to be understood

i want to download videos from the website called 360research(link is pasted above with the desired video that i want to download) the website is like you have to make an account pay a certain amount to access the course and watch it online only but i want to download it to i can refer later on and they(360research website doesnt have an download option) i have tried installing yt dlp and tried downloading using "yt-dlp https://prd.motilaloswal.com/edumo/videos/webinar/154" in the terminal and it shows it is an unsupported URL...

### Provide verbose output that clearly demonstrates the problem

- [X] Run **your** yt-dlp command with **-vU** flag added (`yt-dlp -vU <your command line>`)

- [X] If using API, add `'verbose': True` to `YoutubeDL` params instead

- [X] Copy the WHOLE output (starting with `[debug] Command-line config`) and insert it below

### Complete Verbose Output

```shell

[debug] Command-line config: ['-vU', 'yt-dlp', 'https://prd.motilaloswal.com/edumo/videos/webinar/154']

[debug] Encodings: locale UTF-8, fs utf-8, pref UTF-8, out utf-8, error utf-8, screen utf-8

[debug] yt-dlp version stable@2024.12.13 from yt-dlp/yt-dlp [542166962] (pip)

[debug] Python 3.13.1 (CPython x86_64 64bit) - macOS-14.6.1-x86_64-i386-64bit-Mach-O (OpenSSL 3.4.0 22 Oct 2024)

[debug] exe versions: ffmpeg 7.1 (setts), ffprobe 7.1

[debug] Optional libraries: Cryptodome-3.21.0, brotli-1.1.0, certifi-2024.12.14, mutagen-1.47.0, requests-2.32.3, sqlite3-3.47.2, urllib3-2.2.3, websockets-14.1

[debug] Proxy map: {}

[debug] Request Handlers: urllib, requests, websockets

[debug] Loaded 1837 extractors

[debug] Fetching release info: https://api.github.com/repos/yt-dlp/yt-dlp/releases/latest

Latest version: stable@2024.12.13 from yt-dlp/yt-dlp

yt-dlp is up to date (stable@2024.12.13 from yt-dlp/yt-dlp)

[CommonMistakes] Extracting URL: yt-dlp

ERROR: [CommonMistakes] You've asked yt-dlp to download the URL "yt-dlp". That doesn't make any sense. Simply remove the parameter in your command or configuration.

File "/usr/local/Cellar/yt-dlp/2024.12.13/libexec/lib/python3.13/site-packages/yt_dlp/extractor/common.py", line 742, in extract

ie_result = self._real_extract(url)

File "/usr/local/Cellar/yt-dlp/2024.12.13/libexec/lib/python3.13/site-packages/yt_dlp/extractor/commonmistakes.py", line 25, in _real_extract

raise ExtractorError(msg, expected=True)

[generic] Extracting URL: https://prd.motilaloswal.com/edumo/videos/webinar/154

[generic] 154: Downloading webpage

WARNING: [generic] Falling back on generic information extractor

[generic] 154: Extracting information

[debug] Looking for embeds

ERROR: Unsupported URL: https://prd.motilaloswal.com/edumo/videos/webinar/154

Traceback (most recent call last):

File "/usr/local/Cellar/yt-dlp/2024.12.13/libexec/lib/python3.13/site-packages/yt_dlp/YoutubeDL.py", line 1624, in wrapper

return func(self, *args, **kwargs)

File "/usr/local/Cellar/yt-dlp/2024.12.13/libexec/lib/python3.13/site-packages/yt_dlp/YoutubeDL.py", line 1759, in __extract_info

ie_result = ie.extract(url)

File "/usr/local/Cellar/yt-dlp/2024.12.13/libexec/lib/python3.13/site-packages/yt_dlp/extractor/common.py", line 742, in extract

ie_result = self._real_extract(url)

File "/usr/local/Cellar/yt-dlp/2024.12.13/libexec/lib/python3.13/site-packages/yt_dlp/extractor/generic.py", line 2553, in _real_extract

raise UnsupportedError(url)

yt_dlp.utils.UnsupportedError: Unsupported URL: https://prd.motilaloswal.com/edumo/videos/webinar/154

```

|

open

|

2024-12-22T16:04:42Z

|

2024-12-26T14:07:45Z

|

https://github.com/yt-dlp/yt-dlp/issues/11875

|

[

"site-request",

"account-needed",

"triage",

"can-share-account"

] |

panwariojpg

| 3 |

streamlit/streamlit

|

python

| 10,446 |

Clicking on a cell in a DatetimeColumn produces "This error should never happen. Please report this bug."

|

### Checklist

- [x] I have searched the [existing issues](https://github.com/streamlit/streamlit/issues) for similar issues.

- [x] I added a very descriptive title to this issue.

- [x] I have provided sufficient information below to help reproduce this issue.

### Summary

Using a data editor with DatetimeColumns and creating a new row produces a "This error should never happen. Please report this bug." error (see screenshot below). Happens on every new row created.

### Reproducible Code Example

```Python

from typing import Final

import pandas as pd

import streamlit as st

TOTAL: Final = "total"

user_constraints = pd.DataFrame(

columns=[

"start_time",

"end_time",

"time_unit_in_min",

"departures",

"arrivals",

TOTAL,

],

data={

"start_time": pd.Series(

[],

dtype="datetime64[ns, UTC]",

),

"end_time": pd.Series(

[],

dtype="datetime64[ns, UTC]",

),

"time_unit_in_min": pd.Series([], dtype=int),

"departures": pd.Series([], dtype=int),

"arrivals": pd.Series([], dtype=int),

TOTAL: pd.Series([], dtype=int),

},

)

@st.fragment

def show_user_constraint() -> None:

"""

Displays user-defined capacity constraints for each time segment.

Returns:

None

"""

with st.expander("Capacity constraints"):

inputed_constraints = st.data_editor(

user_constraints,

hide_index=True,

num_rows="dynamic",

column_config={

"start_time": st.column_config.DatetimeColumn(

"Start time",

format="YYYY-MM-DD HH:mm",

width="medium",

timezone="Europe/Helsinki",

),

"end_time": st.column_config.DatetimeColumn(

"End time",

format="YYYY-MM-DD HH:mm",

width="medium",

timezone="Europe/Helsinki",

),

"time_unit_in_min": st.column_config.NumberColumn(

"Time unit in min",

default=60,

),

"departures": st.column_config.NumberColumn(

"Allowed departures",

default=0,

),

"arrivals": st.column_config.NumberColumn(

"Allowed arrivals",

default=0,

),

TOTAL: st.column_config.NumberColumn("Total allowed", default=0),

},

)

if not inputed_constraints.empty and inputed_constraints.notnull().all().all():

st.session_state.capacity_constraint_df = inputed_constraints

```

### Steps To Reproduce

1. Run the app

2. Click on a new row in the "Start time" column.

3. View the problem.

### Expected Behavior

Not to see the popup?

### Current Behavior

### Is this a regression?

~- [ ] Yes, this used to work in a previous version.~

Unsure, can't say for sure.

### Debug info

- Streamlit version: 1.42.0

- Python version: cpython@3.12.8

- Operating System: macOS Sequoia 15.3 (24D60)

- Browser: Happens of Safari and Chrome at least, I think also on Edge but unable to test currently

### Additional Information

Suddenly, I have been experiencing the following issue this issue. I am unsure when this has started happening, but _at least_ after updating to 1.42.0. There seems to be no obvious reason, nothing is logged to the console, only this small box that can be seen in the screenshot. I am also unsure how to reproduce it, but the code I have is provided. I have not tried to make a minimum reproducible example.

|

closed

|

2025-02-19T12:28:03Z

|

2025-02-19T12:46:39Z

|

https://github.com/streamlit/streamlit/issues/10446

|

[

"type:bug",

"status:needs-triage"

] |

christiansegercrantz

| 3 |

dynaconf/dynaconf

|

flask

| 261 |

[RFC] Method or property listing all defined environments

|

**Is your feature request related to a problem? Please describe.**

I'm trying to build a argparse argument that has a list of the available environments as choices to the argument. But I don't see any way to get this at the moment.

**Describe the solution you'd like**

I am proposing 2 features closely related to help with environment choices as a list and to validate that the environment was defined (not just that it is used with defaults or globals).

The first would be a way to get a list of defined environments minus `default` and global. This would make it easy to add to argparse as an argument to choices. I imagine a method or property such as `settings.available_environments` or `settings.defined_environments`.

The second feature would be a method to check if the environment is defined in settings. This could be used for checks in cases you don't use argparse or want to avoid selecting a non-existent environment. Maybe `settings.is_defined_environment('qa')` or similar.

**Describe alternatives you've considered**

I'm currently parsing my settings file keys outside of Dynaconf and discarding `default` and `global`. But this feels hacky.

**Additional context**

Since the environment is lazy loaded I wonder if this would be considered too expensive to do at load time. Maybe it makes sense as a utility outside of the `settings` object? Maybe there is a good way to do this without the feature? Maybe I shouldn't be doing this at all? :thinking:

|

open

|

2019-11-14T05:46:51Z

|

2024-02-05T21:17:08Z

|

https://github.com/dynaconf/dynaconf/issues/261

|

[

"hacktoberfest",

"Not a Bug",

"RFC"

] |

andyshinn

| 4 |

dbfixtures/pytest-postgresql

|

pytest

| 1,061 |

Connect pre-commit.ci

|

Connect pre-commit.ci to the repository

|

closed

|

2025-01-17T12:18:57Z

|

2025-01-17T15:19:20Z

|

https://github.com/dbfixtures/pytest-postgresql/issues/1061

|

[] |

fizyk

| 1 |

marcomusy/vedo

|

numpy

| 158 |

Boolean example

|

Hi everyone,

I have a question regarding one of the capabilities shown in the example galery:

I could not find more detailed information on the Boolean operation between 3d meshes. My first question is, on which geometrical kernel is vtkplotter relying to perform this operation?

Does anyone know if the boolean between two unstructured triangle meshes (mesh origianly coming from a point cloud + delaunay triangulation) would work?

Thanks in advance!

|

closed

|

2020-06-10T08:50:55Z

|

2020-06-18T10:47:52Z

|

https://github.com/marcomusy/vedo/issues/158

|

[] |

pempmu

| 1 |

nteract/papermill

|

jupyter

| 583 |

How do we reuse engines across executions

|

Apologies if this is a duplicate.

I need to execute multiple notebooks in which each notebook is lightweight.

Think of this as a problem of drawing M notebooks from an N-notebook library, and executing the M notebooks.

I am running into two problems:

1. engine startup time is very high relative to notebook execution time

2. the libraries within the notebook cache content from databases that could be shared across notebook executions

It appears that each notebook execution creates a separate thread. Is this correct?

It would be great to be able to instantiate an engine and then feed it notebooks for processing to address the two issues raised. Is this possible today? If not, what would be involved in making it so?

Alternatively, it would be great to send papermill a list of notebooks and have each one processed with the same kernel.

|

closed

|

2021-03-04T21:54:23Z

|

2021-03-11T17:17:43Z

|

https://github.com/nteract/papermill/issues/583

|

[] |

ddreyfus

| 3 |

deepset-ai/haystack

|

pytorch

| 8,760 |

Support for o1-like reasoning model (LRMs)

|

It seems like we will need separation of reasoning content and the actual text completions to better manage multi-round conversations with reasoning (for example: https://api-docs.deepseek.com/guides/reasoning_model). This may have impact on the current structure and functionality of `ChatMessage`, `StreamingChunk` and generators.

My current purposal is to add a new boolean flag or type in both `TextContent` and `StreamingChunk` to indicate if this is a part of the reasoning steps. `ChatMessage.text` should point to the first non-reasoning text content, and we will need to add a new property for `ChatMessage.reasoning`.

For example, this is how the streaming chunks will be like from a reasoning model:

```

StreamingChunk(content: <reasoning-delta1>, is_reasoning: true)

StreamingChunk(content: <reasoning-delta2>, is_reasoning: true)

StreamingChunk(content: <completion-delta1>, is_reasoning: false)

StreamingChunk(content: <completion-delta2>, is_reasoning: false)

```

And user can access the reasoning and completions part using `chat_message.reasoning[s]` and `chat_message.text[s]` respectively from the generator output.

The other option is to have a separate `reasoning_content` field in `StreamingChunk` and `ReasoningContent` class in `ChatMessage._contents`. This is more aligned with the current deepseek-reasoner API but I feel like it is slightly overcomplicated. But I am not exactly sure whether both `reasoning_content` and `content` can appear in one SSE chunk.

I did some research today but there are few reasoning models/APIs available to reach a consensus on what reasoning should be like. I feel like it is probably better to start a discussion thread somewhere and explore the options.

|

open

|

2025-01-22T10:06:45Z

|

2025-02-26T03:47:05Z

|

https://github.com/deepset-ai/haystack/issues/8760

|

[

"P3"

] |

LastRemote

| 12 |

hzwer/ECCV2022-RIFE

|

computer-vision

| 36 |

Not the fastest for multi-frame interpolation

|

Hi,

Thanks for open sourcing the code and contributing to the video frame interpolation community.