repo_name (string, length 9-75) | topic (string, 30 classes) | issue_number (int64, 1-203k) | title (string, length 1-976) | body (string, length 0-254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38-105) | labels (sequence, length 0-9) | user_login (string, length 1-39) | comments_count (int64, 0-452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

google-research/bert | nlp | 566 | What is BERT? | Hello,

I keep hearing that I should use BERT for applications instead of word embeddings, but I do not understand what BERT is. Maybe that is because I do not know the Transformer architecture.

Could anyone explain what BERT is? How does it differ from word2vec, GloVe and, more importantly, ELMo?

How does bert differ from openai-gpt?

How can I adapt bert to a question-answering model or any classification task?

Any help is highly appreciated.

Thank you.

| open | 2019-04-09T00:39:53Z | 2019-04-09T10:10:11Z | https://github.com/google-research/bert/issues/566 | [] | ghost | 2 |

joke2k/django-environ | django | 17 | environ should be able to infer casting type from the default | If you have `DEBUG = env('DEBUG', default=False)`, having to also specify `env = environ.Env(DEBUG=(bool, False),)` seems redundant. For example, see http://tornado.readthedocs.org/en/latest/options.html#tornado.options.OptionParser.define

> If type is given (one of str, float, int, datetime, or timedelta) or can be inferred from the default, we parse the command line arguments based on the given type.
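
The inference rule quoted above can be sketched in a few lines. This is only an illustration of the idea, not django-environ's implementation: `parse_bool`, `infer_cast` and `read_setting` are hypothetical names, and the set of truthy strings is an assumption.

```python
import os

# Assumed spellings that count as "true"; adjust to taste.
_TRUE_STRINGS = {"1", "true", "yes", "on"}


def parse_bool(raw):
    """Interpret common truthy spellings; everything else is False."""
    return raw.strip().lower() in _TRUE_STRINGS


def infer_cast(default):
    """Pick a parser based on the type of the default value."""
    # bool must be checked before int because bool is a subclass of int.
    if isinstance(default, bool):
        return parse_bool
    if isinstance(default, (int, float)):
        return type(default)
    return str


def read_setting(name, default, environ=None):
    """Return the variable cast to the default's type, or the default if unset."""
    environ = os.environ if environ is None else environ
    if name not in environ:
        return default
    return infer_cast(default)(environ[name])
```

With something like this, `read_setting("DEBUG", False)` would parse the environment string as a boolean without a separate type declaration.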

| closed | 2014-11-22T00:28:20Z | 2018-06-26T20:57:54Z | https://github.com/joke2k/django-environ/issues/17 | [

"enhancement"

] | crccheck | 1 |

3b1b/manim | python | 1,800 | Fullscreen mode leaves out a fully transparent bar at the bottom in Windows 10 | ### Describe the bug

When I use fullscreen mode with manimGL v1.6.1 on Windows 10 there is an empty bar at the bottom of the screen through which I can see whatever is behind the python fullscreen drawing area (i.e. it's not actually fullscreen). That is, if I have a browser open I can see the browser through the bottom bar, or if I have only the desktop, I can see it.

**Code**:

Any example in fullscreen, e.g. from the example_scenes.py.

**Wrong display or Error traceback**:

<!-- the wrong display result of the code you run, or the error Traceback -->

### Additional context

### Environment

**OS System**: Windows 10

**manim version**: master ManimGL v1.6.1

**python version**: Python 3.9.6

| open | 2022-04-23T20:28:03Z | 2022-08-04T05:22:55Z | https://github.com/3b1b/manim/issues/1800 | [

"bug"

] | vchizhov | 3 |

amidaware/tacticalrmm | django | 1,538 | "Install software" is missing a package that appears at chocolatey.org | **Server Info (please complete the following information):**

- OS: Ubuntu 20.04

- Browser: firefox

- RMM Version (as shown in top left of web UI): v0.15.12

**Installation Method:**

- [x] Standard

- [ ] Docker

**Agent Info (please complete the following information):**

- Agent version (as shown in the 'Summary' tab of the agent from web UI): Agent v2.4.9

- Agent OS: Win 10/11

**Describe the bug**

A package that appears on https://chocolatey.org/ is not available to install

**To Reproduce**

Steps to reproduce the behavior:

1. Go to software

2. Click on install

3. Search for remarkable

**Expected behavior**

I'd expect to see https://community.chocolatey.org/packages/remarkable

**Screenshots**

This package has only been on choco since Thursday, May 25, 2023 - do I need to refresh the choco package list somehow? | closed | 2023-06-14T12:02:59Z | 2023-12-20T05:02:24Z | https://github.com/amidaware/tacticalrmm/issues/1538 | [

"bug",

"enhancement"

] | moose999 | 12 |

2noise/ChatTTS | python | 400 | Following the README produces only electrical noise | commit id: e58fe48d2ee99310ce2066005c5108ac86942ad4

Steps

```

git clone https://github.com/2noise/ChatTTS

cd ChatTTS

conda create -n chattts

conda activate chattts

pip install -r requirements.txt

python examples/cmd/run.py "chat T T S is a text to speech model designed for dialogue applications."

```

The generated `output_audio_0.wav` is attached below:

[output_audio_0.zip](https://github.com/user-attachments/files/15934697/output_audio_0.zip)

| open | 2024-06-22T01:31:49Z | 2024-06-22T05:28:08Z | https://github.com/2noise/ChatTTS/issues/400 | [

"bug",

"help wanted",

"question"

] | BUG1989 | 2 |

lanpa/tensorboardX | numpy | 392 | ImportError: cannot import name 'summary' | (n2n) [410@mu01 noise2noise-master]$ python config.py validate --dataset-dir=datasets/kodak --network-snapshot=results/network_final-gaussian-n2c.pickle

Traceback (most recent call last):

File "config.py", line 14, in <module>

import validation

File "/home/410/ysn/noise2noise-master/validation.py", line 16, in <module>

import dnnlib.tflib.tfutil as tfutil

File "/home/410/ysn/noise2noise-master/dnnlib/tflib/__init__.py", line 8, in <module>

from . import autosummary

File "/home/410/ysn/noise2noise-master/dnnlib/tflib/autosummary.py", line 28, in <module>

from tensorboard import summary as summary_lib

ImportError: cannot import name 'summary'

| closed | 2019-03-22T13:30:41Z | 2019-05-19T13:46:58Z | https://github.com/lanpa/tensorboardX/issues/392 | [] | YangSN0719 | 1 |

piskvorky/gensim | machine-learning | 2,758 | How to use LdaModel with Callback | <!--

**IMPORTANT**:

- Use the [Gensim mailing list](https://groups.google.com/forum/#!forum/gensim) to ask general or usage questions. Github issues are only for bug reports.

- Check [Recipes&FAQ](https://github.com/RaRe-Technologies/gensim/wiki/Recipes-&-FAQ) first for common answers.

Github bug reports that do not include relevant information and context will be closed without an answer. Thanks!

-->

#### Problem description

I wonder whether I can implement early stopping while training `LdaModel` by using callbacks and raising an exception.

But when I try to use the `Callback` class, gensim throws an error about a missing `logger` attribute. If I add `logger`, it then requires a `get_value` method, i.e. it treats the `Callback` as if it were a `Metric` class. So how do you use it correctly?
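
For reference, the stopping rule itself can be sketched independently of gensim. This is a minimal illustration, not gensim API: `EarlyStopper` and `epochs_run` are hypothetical names, and how the per-epoch metric value is obtained from the model is left open. It assumes a metric where lower is better.

```python
class EarlyStopper:
    """Track a per-epoch metric and signal when it stops improving."""

    def __init__(self, patience=2, min_delta=1e-3):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # smallest drop that counts as improvement
        self.best = None
        self.stale_epochs = 0

    def should_stop(self, value):
        """Record a new value (lower is better); return True to stop."""
        if self.best is None or self.best - value > self.min_delta:
            self.best = value
            self.stale_epochs = 0
            return False
        self.stale_epochs += 1
        return self.stale_epochs >= self.patience


def epochs_run(values, stopper):
    """Return how many epochs run before the stopper fires."""
    for epoch, value in enumerate(values, start=1):
        if stopper.should_stop(value):
            return epoch
    return len(values)
```

The open question in this issue is where such a check could be hooked into training, since the `callbacks` argument appears to expect metric objects rather than a `Callback`.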

#### Steps/code/corpus to reproduce

```

from gensim import corpora, models

from gensim.models.callbacks import Callback

texts = [['Lorem ipsum dolor sit amet, consectetur adipiscing elit'],['sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ']]

bigram = models.Phrases(texts, min_count=5, threshold=100)

trigram = models.Phrases(bigram[texts], threshold=100)

bigram_mod = models.phrases.Phraser(bigram)

trigram_mod = models.phrases.Phraser(trigram)

dictionary = corpora.Dictionary(trigram_mod[bigram_mod[texts]])

corpus = [dictionary.doc2bow(text) for text in texts]

callback = Callback(metrics=['DiffMetric'])

lda_model = models.LdaModel(

corpus, num_topics=3, id2word=dictionary, callbacks=[callback] )

```

> ---------------------------------------------------------------------------

> AttributeError Traceback (most recent call last)

> <ipython-input-2-67d9133f842a> in <module>

> 13

> 14 lda_model = models.LdaModel(

> ---> 15 corpus, num_topics=3, id2word=dictionary, callbacks=[callback] )

>

> ~\Anaconda3\lib\site-packages\gensim\models\ldamodel.py in __init__(self, corpus, num_topics, id2word, distributed, chunksize, passes, update_every, alpha, eta, decay, offset, eval_every, iterations, gamma_threshold, minimum_probability, random_state, ns_conf, minimum_phi_value, per_word_topics, callbacks, dtype)

> 517 if corpus is not None:

> 518 use_numpy = self.dispatcher is not None

> --> 519 self.update(corpus, chunks_as_numpy=use_numpy)

> 520

> 521 def init_dir_prior(self, prior, name):

>

> ~\Anaconda3\lib\site-packages\gensim\models\ldamodel.py in update(self, corpus, chunksize, decay, offset, passes, update_every, eval_every, iterations, gamma_threshold, chunks_as_numpy)

> 945 # pass the list of input callbacks to Callback class

> 946 callback = Callback(self.callbacks)

> --> 947 callback.set_model(self)

> 948 # initialize metrics list to store metric values after every epoch

> 949 self.metrics = defaultdict(list)

>

> ~\Anaconda3\lib\site-packages\gensim\models\callbacks.py in set_model(self, model)

> 482 # store diff diagonals of previous epochs

> 483 self.diff_mat = Queue()

> --> 484 if any(metric.logger == "visdom" for metric in self.metrics):

> 485 if not VISDOM_INSTALLED:

> 486 raise ImportError("Please install Visdom for visualization")

>

> ~\Anaconda3\lib\site-packages\gensim\models\callbacks.py in <genexpr>(.0)

> 482 # store diff diagonals of previous epochs

> 483 self.diff_mat = Queue()

> --> 484 if any(metric.logger == "visdom" for metric in self.metrics):

> 485 if not VISDOM_INSTALLED:

> 486 raise ImportError("Please install Visdom for visualization")

>

> AttributeError: 'Callback' object has no attribute 'logger'

>

#### Versions

Windows-10-10.0.18362-SP0

Python 3.5.6 |Anaconda custom (64-bit)| (default, Aug 26 2018, 16:05:27) [MSC v.1900 64 bit (AMD64)]

NumPy 1.15.2

SciPy 1.1.0

gensim 3.8.1

FAST_VERSION 1 | open | 2020-02-22T13:54:00Z | 2023-06-22T06:07:25Z | https://github.com/piskvorky/gensim/issues/2758 | [] | hellpanderrr | 1 |

google-deepmind/graph_nets | tensorflow | 114 | Will Graph Nets add DropEdge in the future, like https://github.com/DropEdge/DropEdge? | | closed | 2020-04-13T07:33:30Z | 2020-09-30T03:04:04Z | https://github.com/google-deepmind/graph_nets/issues/114 | [] | luyifanlu | 1 |

sinaptik-ai/pandas-ai | pandas | 671 | Conversational Answer won't work | ### System Info

OS version: windows 10

Python version : 3.11

The current version of pandas ai

### 🐛 Describe the bug

In the current version of Pandas AI :

# Conversational Answer won't work

# When I ask what the GDP is, the answer is just the bare number (20,0000), but I need a full sentence such as "The GDP is 20,000". | closed | 2023-10-22T08:34:21Z | 2024-06-01T00:20:17Z | https://github.com/sinaptik-ai/pandas-ai/issues/671 | [] | Ridoy302583 | 2 |

ageitgey/face_recognition | machine-learning | 613 | NameError: pil_image is not defined | * face_recognition version:

* Python version:

* Operating System:

### Description

Describe what you were trying to get done.

Tell us what happened, what went wrong, and what you expected to happen.

IMPORTANT: If your issue is related to a specific picture, include it so others can reproduce the issue.

### What I Did

```

Paste the command(s) you ran and the output.

If there was a crash, please include the traceback here.

```

| closed | 2018-09-01T15:59:51Z | 2018-09-01T16:01:21Z | https://github.com/ageitgey/face_recognition/issues/613 | [] | multikillerr | 0 |

gradio-app/gradio | data-visualization | 10,201 | Accordion - Expanding vertically to the right | - [x] I have searched to see if a similar issue already exists.

I would really like to have the ability to place an accordion vertically and expand to the right. I have scenarios where this would be a better UI solution, as doing so would automatically push the other components to the right of it forward.

I have no idea how to tweak this in CSS to make it work. If you have a simple CSS solution I would appreciate it until we have this feature. I am actually developing something that would really need this feature.

I made this drawing of what it would be like.

| closed | 2024-12-14T23:51:34Z | 2024-12-16T16:46:38Z | https://github.com/gradio-app/gradio/issues/10201 | [] | elismasilva | 1 |

adbar/trafilatura | web-scraping | 604 | New port of readability.js? | The current implementation of `readability_lxml` and the original project [readability.js](https://github.com/mozilla/readability/tree/main) are out of sync, so it would make sense to update the `readability_lxml` implementation to benefit from potentially newer features and fixes.

[readability.js](https://github.com/mozilla/readability/tree/main) has a big set of [test pages](https://github.com/mozilla/readability/tree/main/test/test-pages) with source.html and expected.html files. As a first step, we could test how `readability_lxml` compares against this test set?
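
A first comparison along those lines could look roughly like the sketch below. It assumes each test page is a directory containing `source.html` and `expected.html`, as described above; `score_test_pages` is a hypothetical helper, `extract` stands for whatever extraction function is being evaluated, and the token-level similarity ratio is only one possible way to score the output.

```python
import difflib
from pathlib import Path


def score_test_pages(root, extract):
    """Compare extract(source.html) with expected.html for each test page.

    `extract` is any callable taking an HTML string and returning a string.
    Returns {page_name: similarity ratio in [0, 1]}.
    """
    scores = {}
    for page_dir in sorted(Path(root).iterdir()):
        source = page_dir / "source.html"
        expected = page_dir / "expected.html"
        if not (source.is_file() and expected.is_file()):
            continue  # skip anything that is not a complete test case
        produced = extract(source.read_text(encoding="utf-8"))
        wanted = expected.read_text(encoding="utf-8")
        # Compare whitespace-separated tokens so formatting differences matter less.
        matcher = difflib.SequenceMatcher(None, produced.split(), wanted.split())
        scores[page_dir.name] = matcher.ratio()
    return scores
```

Sorting the resulting scores would show which pages diverge the most and give a rough idea of how far apart the two implementations are.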

Based on the results we could check if it's better to _fix_ the differences (if there aren't too many), or if a new port based on the current javascript implementation would make more sense. What do you think?

By the way, `readability_lxml` looks like it was first ported to ruby and then to python. Besides using `lxml`, were there any functions added specifically for trafilatura? | open | 2024-05-23T13:49:54Z | 2024-06-05T08:44:47Z | https://github.com/adbar/trafilatura/issues/604 | [

"question"

] | zirkelc | 4 |

miguelgrinberg/Flask-SocketIO | flask | 1,037 | connection refreshing automatically after emit event from Flask server. | I'm trying to create a simple chatroom-style application with Flask and Flask-SocketIO. When users type messages and click 'submit' (submitting a form in HTML), it should create an unordered list of messages. The problem I'm facing is that the message gets appended as a list item, but after a few seconds it disappears (it appears as though the connection is refreshed). I want to turn off this behavior. Thanks in advance. :smile:

Here is the code snippet:

Flask:

```

@socketio.on('send messages')

def vote(data):

messages = data['messages']

emit('announce messages', {"messages":messages}, broadcast=True)

```

JavaScript:

```

document.addEventListener('DOMContentLoaded', () => {

// Connect to websocket

var socket = io.connect(location.protocol + '//' + document.domain + ':' + location.port);

// When connected

socket.on('connect', () => {

document.querySelector('#form').onsubmit = () => {

const messages = document.querySelector('#messages').value;

socket.emit('send messages', {'messages': messages});

return false;

}

});

socket.on('announce messages', data => {

const li = document.createElement('li');

li.innerHTML = `${data.messages}`;

document.querySelector('#push').append(li);

return false;

});

});

``` | closed | 2019-08-14T06:33:32Z | 2019-08-14T09:03:33Z | https://github.com/miguelgrinberg/Flask-SocketIO/issues/1037 | [

"question"

] | AkshayKumar007 | 2 |

MaartenGr/BERTopic | nlp | 2,189 | fit_transform tries to access embedding_model if representation_model is not None | ### Have you searched existing issues? 🔎

- [X] I have searched and found no existing issues

### Describe the bug

I was using BERTopic on a cluster of queries with my own embeddings (computed on a model that is hard to pass as a parameter) and it was working as expected.

After I set `representation_model = KeyBERTInspired()` and passed `representation_model=representation_model` to BERTopic as a parameter, I got this error:

```

AttributeError Traceback (most recent call last)

[1] representation_model = KeyBERTInspired()

[2] topic_model = BERTopic(

[3] calculate_probabilities=True,

[4] min_topic_size=1

[5] embedding_model=None,

[6] representation_model=representation_model,

[7] )

----> [8] topics, probs = topic_model.fit_transform(corpus, np.array(corpus_embeddings))

[9] topic_model.get_topic_info()

File ~/query_analysis/bertopic_env/lib/python3.11/site-packages/bertopic/_bertopic.py:493, in BERTopic.fit_transform(self, documents, embeddings, images, y)

[490] self._save_representative_docs(custom_documents)

[491] else:

[492] # Extract topics by calculating c-TF-IDF

--> [493] self._extract_topics(documents, embeddings=embeddings, verbose=self.verbose)

[495] # Reduce topics

[496] if self.nr_topics:

File ~/query_analysis/bertopic_env/lib/python3.11/site-packages/bertopic/_bertopic.py:3991, in BERTopic._extract_topics(self, documents, embeddings, mappings, verbose)

[3989] documents_per_topic = documents.groupby(["Topic"], as_index=False).agg({"Document": " ".join})

[3990] self.c_tf_idf_, words = self._c_tf_idf(documents_per_topic)

-> [3991] self.topic_representations_ = self._extract_words_per_topic(words, documents)

...

[3680] "Make sure to use an embedding model that can either embed documents"

[3681] "or images depending on which you want to embed."

[3682]

AttributeError: 'NoneType' object has no attribute 'embed_documents'

```

### Reproduction

```python

from query import Query

import json

import numpy as np

from sklearn.cluster import DBSCAN

from bertopic import BERTopic

from openai import OpenAI

from bertopic.representation import KeyBERTInspired

representation_model = KeyBERTInspired()

topic_model = BERTopic(

calculate_probabilities=True,

min_topic_size=15,

embedding_model=None,

representation_model=representation_model,

)

topics, probs = topic_model.fit_transform(corpus, np.array(corpus_embeddings))

topic_model.get_topic_info()

```

### BERTopic Version

0.16.4 | open | 2024-10-17T08:10:23Z | 2024-10-18T10:24:13Z | https://github.com/MaartenGr/BERTopic/issues/2189 | [

"bug"

] | rasantangelo | 1 |

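Johnserf-Seed/TikTokDownload | api | 565 | [Usage] How do I use this from the Linux command line (Debian)? | The tutorials all seem to cover Windows only. But since this is a tool for content that gets new videos frequently, I think running scheduled incremental updates on Debian is the better choice.

However, I do not know how this program should be used on Debian.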

| open | 2023-09-27T08:51:41Z | 2023-10-09T09:24:12Z | https://github.com/Johnserf-Seed/TikTokDownload/issues/565 | [

"无效(invalid)"

] | Whichbfj28 | 10 |

apachecn/ailearning | python | 421 | ApacheCN | http://ailearning.apachecn.org/

ApacheCN is an open-source organization focused on maintaining excellent projects | closed | 2018-08-24T06:58:15Z | 2021-09-07T17:41:31Z | https://github.com/apachecn/ailearning/issues/421 | [

"Gitalk",

"6666cd76f96956469e7be39d750cc7d9"

] | jiangzhonglian | 2 |

chezou/tabula-py | pandas | 269 | consistency issues across similar pdfs | | closed | 2020-11-09T14:33:41Z | 2020-11-09T14:34:28Z | https://github.com/chezou/tabula-py/issues/269 | [] | aestella | 1 |

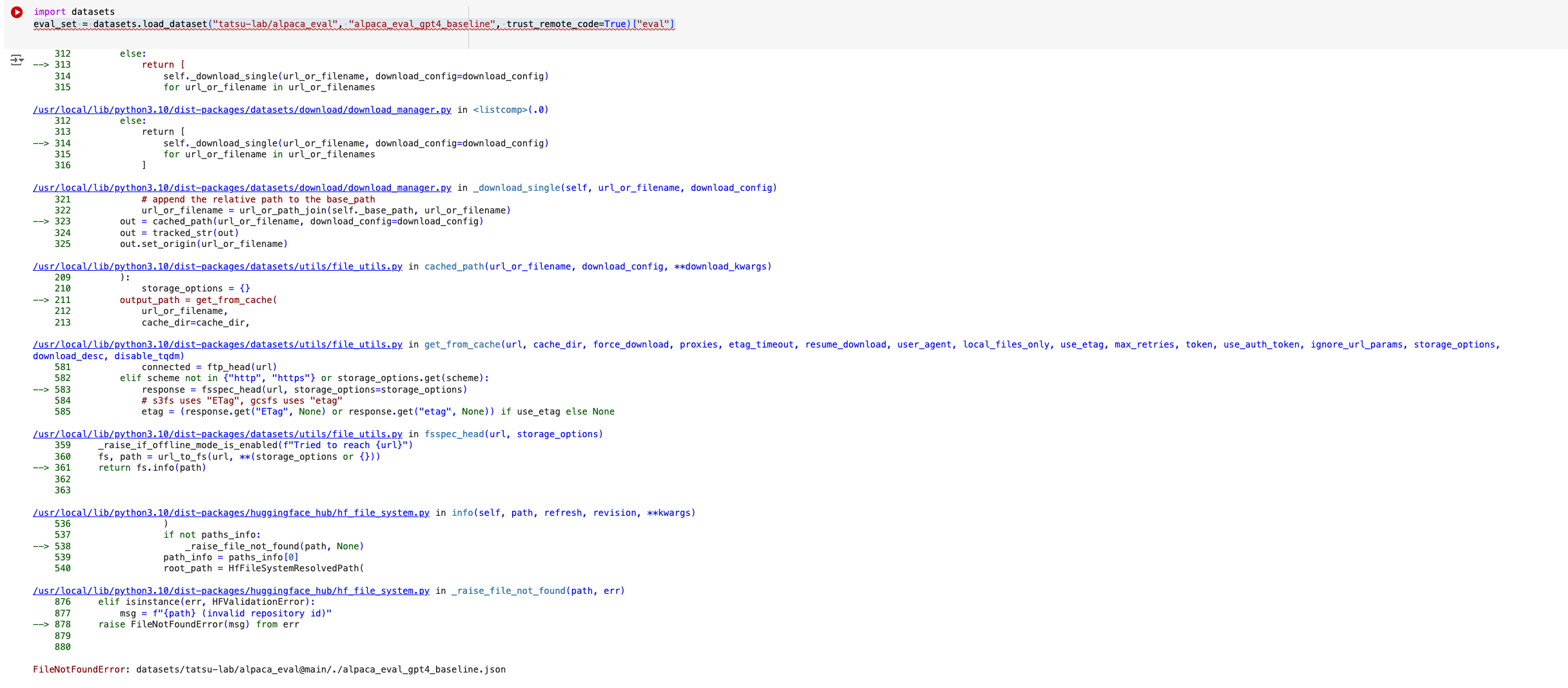

huggingface/datasets | pytorch | 7,107 | load_dataset broken in 2.21.0 | ### Describe the bug

`eval_set = datasets.load_dataset("tatsu-lab/alpaca_eval", "alpaca_eval_gpt4_baseline", trust_remote_code=True)`

used to work till 2.20.0 but doesn't work in 2.21.0

In 2.20.0:

in 2.21.0:

### Steps to reproduce the bug

1. Spin up a new Google Colab notebook

2. `pip install datasets==2.21.0`

3. `import datasets`

4. `eval_set = datasets.load_dataset("tatsu-lab/alpaca_eval", "alpaca_eval_gpt4_baseline", trust_remote_code=True)`

5. Will throw an error.

### Expected behavior

Try steps 1-5 again but replace datasets version with 2.20.0, it will work

### Environment info

- `datasets` version: 2.21.0

- Platform: Linux-6.1.85+-x86_64-with-glibc2.35

- Python version: 3.10.12

- `huggingface_hub` version: 0.23.5

- PyArrow version: 17.0.0

- Pandas version: 2.1.4

- `fsspec` version: 2024.5.0

| closed | 2024-08-16T14:59:51Z | 2024-08-18T09:28:43Z | https://github.com/huggingface/datasets/issues/7107 | [] | anjor | 4 |

pyeve/eve | flask | 1,360 | Nested unique constraint doesn't work | ### Expected Behavior

The `unique` constraint does not work when it is nested inside a dict or a list, for example. The problem comes from [this line](https://github.com/pyeve/eve/blob/master/eve/io/mongo/validation.py#L95). The resulting query will only find properties in the root document.

The `unique` constraint also won't work when nested inside lists, as the query will try to find by a numeric index instead of the key of the parent schema.

```json

{

"dict_test": {

"type": "dict",

"schema": {

"string_attribute": {

"type": "string",

"unique": true

}

}

}

}

```
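
To illustrate the kind of query the nested case would need, here is a minimal sketch. It is not Eve's code or a proposed patch: `unique_query` is a hypothetical helper, and it assumes the validator knows the full path of keys and list indices leading to the field. It relies on MongoDB dot notation, where a filter on `parent.child` also matches documents embedded in arrays.

```python
def unique_query(path, value):
    """Build a MongoDB-style filter for a possibly nested field.

    `path` is the sequence of keys and list indices leading to the field,
    e.g. ("dict_test", "string_attribute") or ("items", 0, "code").
    List indices are dropped so the filter matches any array element.
    """
    keys = [str(part) for part in path if not isinstance(part, int)]
    return {".".join(keys): value}
```

For the schema above, `unique_query(("dict_test", "string_attribute"), "abc")` yields a filter on `dict_test.string_attribute` rather than on a root-level `string_attribute`.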

### Actual Behavior

You can create more than one resource of that schema with the same `string_attribute` value.

### Environment

* Python version: 3.7

* Eve version: 1.1

| closed | 2020-02-25T19:19:44Z | 2021-01-22T16:31:03Z | https://github.com/pyeve/eve/issues/1360 | [] | elias-garcia | 0 |

ClimbsRocks/auto_ml | scikit-learn | 4 | FUTURE: train regressors or classifiers | | closed | 2016-08-08T00:16:30Z | 2016-08-12T06:12:57Z | https://github.com/ClimbsRocks/auto_ml/issues/4 | [] | ClimbsRocks | 1 |

AutoGPTQ/AutoGPTQ | nlp | 678 | How to install auto-gptq in a GCC 8.5.0 environment? | I want to install the auto-gptq package to fine-tune my LLM, but when I followed the README, the build failed.

The error says that GCC 9.0 is required, but my environment only has GCC 8.5.0, which I cannot upgrade for various reasons.

```

Looking in indexes: https://mirrors.aliyun.com/pypi/simple/

Processing /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ

Preparing metadata (setup.py) ... done

Requirement already satisfied: accelerate>=0.26.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from auto_gptq==0.8.0.dev0+cu118) (0.29.3)

Requirement already satisfied: datasets in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from auto_gptq==0.8.0.dev0+cu118) (2.18.0)

Collecting sentencepiece (from auto_gptq==0.8.0.dev0+cu118)

Using cached https://mirrors.aliyun.com/pypi/packages/4f/d2/18246f43ca730bb81918f87b7e886531eda32d835811ad9f4657c54eee35/sentencepiece-0.2.0-cp312-cp312-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.3 MB)

Requirement already satisfied: numpy in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from auto_gptq==0.8.0.dev0+cu118) (1.26.4)

Collecting rouge (from auto_gptq==0.8.0.dev0+cu118)

Using cached https://mirrors.aliyun.com/pypi/packages/32/7c/650ae86f92460e9e8ef969cc5008b24798dcf56a9a8947d04c78f550b3f5/rouge-1.0.1-py3-none-any.whl (13 kB)

Collecting gekko (from auto_gptq==0.8.0.dev0+cu118)

Using cached https://mirrors.aliyun.com/pypi/packages/3f/ed/960a6cec9e588b8c05e830dcb6789750ee88e791e9cd5467f85a12ee7f46/gekko-1.1.1-py3-none-any.whl (13.2 MB)

Requirement already satisfied: torch>=1.13.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from auto_gptq==0.8.0.dev0+cu118) (2.3.0+cu118)

Requirement already satisfied: safetensors in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from auto_gptq==0.8.0.dev0+cu118) (0.4.3)

Requirement already satisfied: transformers>=4.31.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from auto_gptq==0.8.0.dev0+cu118) (4.37.2)

Requirement already satisfied: peft>=0.5.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from auto_gptq==0.8.0.dev0+cu118) (0.7.1)

Requirement already satisfied: tqdm in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from auto_gptq==0.8.0.dev0+cu118) (4.66.2)

Requirement already satisfied: packaging>=20.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from accelerate>=0.26.0->auto_gptq==0.8.0.dev0+cu118) (23.2)

Requirement already satisfied: psutil in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from accelerate>=0.26.0->auto_gptq==0.8.0.dev0+cu118) (5.9.8)

Requirement already satisfied: pyyaml in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from accelerate>=0.26.0->auto_gptq==0.8.0.dev0+cu118) (6.0.1)

Requirement already satisfied: huggingface-hub in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from accelerate>=0.26.0->auto_gptq==0.8.0.dev0+cu118) (0.22.2)

Requirement already satisfied: filelock in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (3.13.4)

Requirement already satisfied: typing-extensions>=4.8.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (4.11.0)

Requirement already satisfied: sympy in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (1.12)

Requirement already satisfied: networkx in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (3.3)

Requirement already satisfied: jinja2 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (3.1.3)

Requirement already satisfied: fsspec in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (2024.2.0)

Requirement already satisfied: nvidia-cuda-nvrtc-cu11==11.8.89 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (11.8.89)

Requirement already satisfied: nvidia-cuda-runtime-cu11==11.8.89 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (11.8.89)

Requirement already satisfied: nvidia-cuda-cupti-cu11==11.8.87 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (11.8.87)

Requirement already satisfied: nvidia-cudnn-cu11==8.7.0.84 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (8.7.0.84)

Requirement already satisfied: nvidia-cublas-cu11==11.11.3.6 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (11.11.3.6)

Requirement already satisfied: nvidia-cufft-cu11==10.9.0.58 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (10.9.0.58)

Requirement already satisfied: nvidia-curand-cu11==10.3.0.86 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (10.3.0.86)

Requirement already satisfied: nvidia-cusolver-cu11==11.4.1.48 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (11.4.1.48)

Requirement already satisfied: nvidia-cusparse-cu11==11.7.5.86 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (11.7.5.86)

Requirement already satisfied: nvidia-nccl-cu11==2.20.5 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (2.20.5)

Requirement already satisfied: nvidia-nvtx-cu11==11.8.86 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (11.8.86)

Requirement already satisfied: regex!=2019.12.17 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from transformers>=4.31.0->auto_gptq==0.8.0.dev0+cu118) (2024.4.28)

Requirement already satisfied: requests in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from transformers>=4.31.0->auto_gptq==0.8.0.dev0+cu118) (2.31.0)

Requirement already satisfied: tokenizers<0.19,>=0.14 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from transformers>=4.31.0->auto_gptq==0.8.0.dev0+cu118) (0.15.2)

Requirement already satisfied: pyarrow>=12.0.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from datasets->auto_gptq==0.8.0.dev0+cu118) (16.0.0)

Requirement already satisfied: pyarrow-hotfix in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from datasets->auto_gptq==0.8.0.dev0+cu118) (0.6)

Requirement already satisfied: dill<0.3.9,>=0.3.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from datasets->auto_gptq==0.8.0.dev0+cu118) (0.3.8)

Requirement already satisfied: pandas in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from datasets->auto_gptq==0.8.0.dev0+cu118) (2.2.2)

Requirement already satisfied: xxhash in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from datasets->auto_gptq==0.8.0.dev0+cu118) (3.4.1)

Requirement already satisfied: multiprocess in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from datasets->auto_gptq==0.8.0.dev0+cu118) (0.70.16)

Requirement already satisfied: aiohttp in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from datasets->auto_gptq==0.8.0.dev0+cu118) (3.9.5)

Requirement already satisfied: six in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from rouge->auto_gptq==0.8.0.dev0+cu118) (1.16.0)

Requirement already satisfied: aiosignal>=1.1.2 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from aiohttp->datasets->auto_gptq==0.8.0.dev0+cu118) (1.3.1)

Requirement already satisfied: attrs>=17.3.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from aiohttp->datasets->auto_gptq==0.8.0.dev0+cu118) (23.2.0)

Requirement already satisfied: frozenlist>=1.1.1 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from aiohttp->datasets->auto_gptq==0.8.0.dev0+cu118) (1.4.1)

Requirement already satisfied: multidict<7.0,>=4.5 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from aiohttp->datasets->auto_gptq==0.8.0.dev0+cu118) (6.0.5)

Requirement already satisfied: yarl<2.0,>=1.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from aiohttp->datasets->auto_gptq==0.8.0.dev0+cu118) (1.9.4)

Requirement already satisfied: charset-normalizer<4,>=2 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from requests->transformers>=4.31.0->auto_gptq==0.8.0.dev0+cu118) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from requests->transformers>=4.31.0->auto_gptq==0.8.0.dev0+cu118) (3.7)

Requirement already satisfied: urllib3<3,>=1.21.1 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from requests->transformers>=4.31.0->auto_gptq==0.8.0.dev0+cu118) (2.2.1)

Requirement already satisfied: certifi>=2017.4.17 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from requests->transformers>=4.31.0->auto_gptq==0.8.0.dev0+cu118) (2024.2.2)

Requirement already satisfied: MarkupSafe>=2.0 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from jinja2->torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (2.1.5)

Requirement already satisfied: python-dateutil>=2.8.2 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from pandas->datasets->auto_gptq==0.8.0.dev0+cu118) (2.9.0.post0)

Requirement already satisfied: pytz>=2020.1 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from pandas->datasets->auto_gptq==0.8.0.dev0+cu118) (2024.1)

Requirement already satisfied: tzdata>=2022.7 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from pandas->datasets->auto_gptq==0.8.0.dev0+cu118) (2024.1)

Requirement already satisfied: mpmath>=0.19 in /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages (from sympy->torch>=1.13.0->auto_gptq==0.8.0.dev0+cu118) (1.3.0)

Building wheels for collected packages: auto_gptq

Building wheel for auto_gptq (setup.py) ... error

error: subprocess-exited-with-error

× python setup.py bdist_wheel did not run successfully.

│ exit code: 1

╰─> [285 lines of output]

conda_cuda_include_dir /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/nvidia/cuda_runtime/include

appending conda cuda include dir /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/nvidia/cuda_runtime/include

running bdist_wheel

running build

running build_py

creating build

creating build/lib.linux-x86_64-cpython-312

creating build/lib.linux-x86_64-cpython-312/auto_gptq

copying auto_gptq/__init__.py -> build/lib.linux-x86_64-cpython-312/auto_gptq

creating build/lib.linux-x86_64-cpython-312/tests

copying tests/__init__.py -> build/lib.linux-x86_64-cpython-312/tests

copying tests/bench_autoawq_autogptq.py -> build/lib.linux-x86_64-cpython-312/tests

copying tests/test_awq_compatibility_generation.py -> build/lib.linux-x86_64-cpython-312/tests

copying tests/test_peft_conversion.py -> build/lib.linux-x86_64-cpython-312/tests

copying tests/test_q4.py -> build/lib.linux-x86_64-cpython-312/tests

copying tests/test_quantization.py -> build/lib.linux-x86_64-cpython-312/tests

copying tests/test_repacking.py -> build/lib.linux-x86_64-cpython-312/tests

copying tests/test_serialization.py -> build/lib.linux-x86_64-cpython-312/tests

copying tests/test_sharded_loading.py -> build/lib.linux-x86_64-cpython-312/tests

copying tests/test_triton.py -> build/lib.linux-x86_64-cpython-312/tests

creating build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks

copying auto_gptq/eval_tasks/__init__.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks

copying auto_gptq/eval_tasks/_base.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks

copying auto_gptq/eval_tasks/language_modeling_task.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks

copying auto_gptq/eval_tasks/sequence_classification_task.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks

copying auto_gptq/eval_tasks/text_summarization_task.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks

creating build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/__init__.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/_base.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/_const.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/auto.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/baichuan.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/bloom.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/codegen.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/cohere.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/decilm.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/gemma.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/gpt2.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/gpt_bigcode.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/gpt_neox.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/gptj.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/internlm.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/llama.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/longllama.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/mistral.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/mixtral.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/moss.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/mpt.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/opt.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/phi.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/qwen.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/qwen2.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/rw.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/stablelmepoch.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/starcoder2.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/xverse.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

copying auto_gptq/modeling/yi.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/modeling

creating build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules

copying auto_gptq/nn_modules/__init__.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules

copying auto_gptq/nn_modules/_fused_base.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules

copying auto_gptq/nn_modules/fused_gptj_attn.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules

copying auto_gptq/nn_modules/fused_llama_attn.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules

copying auto_gptq/nn_modules/fused_llama_mlp.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules

creating build/lib.linux-x86_64-cpython-312/auto_gptq/quantization

copying auto_gptq/quantization/__init__.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/quantization

copying auto_gptq/quantization/config.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/quantization

copying auto_gptq/quantization/gptq.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/quantization

copying auto_gptq/quantization/quantizer.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/quantization

creating build/lib.linux-x86_64-cpython-312/auto_gptq/utils

copying auto_gptq/utils/__init__.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/utils

copying auto_gptq/utils/accelerate_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/utils

copying auto_gptq/utils/data_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/utils

copying auto_gptq/utils/exllama_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/utils

copying auto_gptq/utils/import_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/utils

copying auto_gptq/utils/marlin_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/utils

copying auto_gptq/utils/modeling_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/utils

copying auto_gptq/utils/peft_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/utils

copying auto_gptq/utils/perplexity_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/utils

creating build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks/_utils

copying auto_gptq/eval_tasks/_utils/__init__.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks/_utils

copying auto_gptq/eval_tasks/_utils/classification_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks/_utils

copying auto_gptq/eval_tasks/_utils/generation_utils.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/eval_tasks/_utils

creating build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

copying auto_gptq/nn_modules/qlinear/__init__.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

copying auto_gptq/nn_modules/qlinear/qlinear_cuda.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

copying auto_gptq/nn_modules/qlinear/qlinear_cuda_old.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

copying auto_gptq/nn_modules/qlinear/qlinear_exllama.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

copying auto_gptq/nn_modules/qlinear/qlinear_exllamav2.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

copying auto_gptq/nn_modules/qlinear/qlinear_marlin.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

copying auto_gptq/nn_modules/qlinear/qlinear_qigen.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

copying auto_gptq/nn_modules/qlinear/qlinear_triton.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

copying auto_gptq/nn_modules/qlinear/qlinear_tritonv2.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/qlinear

creating build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/triton_utils

copying auto_gptq/nn_modules/triton_utils/__init__.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/triton_utils

copying auto_gptq/nn_modules/triton_utils/custom_autotune.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/triton_utils

copying auto_gptq/nn_modules/triton_utils/dequant.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/triton_utils

copying auto_gptq/nn_modules/triton_utils/kernels.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/triton_utils

copying auto_gptq/nn_modules/triton_utils/mixin.py -> build/lib.linux-x86_64-cpython-312/auto_gptq/nn_modules/triton_utils

running build_ext

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/utils/cpp_extension.py:428: UserWarning: There are no g++ version bounds defined for CUDA version 11.8

warnings.warn(f'There are no {compiler_name} version bounds defined for CUDA version {cuda_str_version}')

building 'autogptq_cuda_64' extension

creating /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312

creating /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension

creating /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/utils/cpp_extension.py:1967: UserWarning: TORCH_CUDA_ARCH_LIST is not set, all archs for visible cards are included for compilation.

If this is not desired, please set os.environ['TORCH_CUDA_ARCH_LIST'].

warnings.warn(

Emitting ninja build file /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/build.ninja...

Compiling objects...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

[1/2] /usr/local/cuda/bin/nvcc --generate-dependencies-with-compile --dependency-output /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_kernel_64.o.d -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/TH -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/THC -I/usr/local/cuda/include -I/home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_cuda -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/nvidia/cuda_runtime/include -I/home/dongna/.virtualenvs/Qwen-14B/include -I/usr/local/miniconda3/include/python3.12 -c -c /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_extension/cuda_64/autogptq_cuda_kernel_64.cu -o /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_kernel_64.o -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr --compiler-options ''"'"'-fPIC'"'"'' -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=autogptq_cuda_64 -D_GLIBCXX_USE_CXX11_ABI=0 -gencode=arch=compute_89,code=compute_89 -gencode=arch=compute_89,code=sm_89 -ccbin g++ -std=c++17

FAILED: /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_kernel_64.o

/usr/local/cuda/bin/nvcc --generate-dependencies-with-compile --dependency-output /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_kernel_64.o.d -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/TH -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/THC -I/usr/local/cuda/include -I/home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_cuda -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/nvidia/cuda_runtime/include -I/home/dongna/.virtualenvs/Qwen-14B/include -I/usr/local/miniconda3/include/python3.12 -c -c /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_extension/cuda_64/autogptq_cuda_kernel_64.cu -o /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_kernel_64.o -D__CUDA_NO_HALF_OPERATORS__ -D__CUDA_NO_HALF_CONVERSIONS__ -D__CUDA_NO_BFLOAT16_CONVERSIONS__ -D__CUDA_NO_HALF2_OPERATORS__ --expt-relaxed-constexpr --compiler-options ''"'"'-fPIC'"'"'' -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=autogptq_cuda_64 -D_GLIBCXX_USE_CXX11_ABI=0 -gencode=arch=compute_89,code=compute_89 -gencode=arch=compute_89,code=sm_89 -ccbin g++ -std=c++17

In file included from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/core/TensorBase.h:14,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/core/TensorBody.h:38,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/core/Tensor.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/Tensor.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/function_hook.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/cpp_hook.h:2,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/variable.h:6,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/autograd.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include/torch/autograd.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include/torch/all.h:7,

from /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_extension/cuda_64/autogptq_cuda_kernel_64.cu:1:

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/util/C++17.h:13:2: error: #error "You're trying to build PyTorch with a too old version of GCC. We need GCC 9 or later."

#error \

^~~~~

[2/2] g++ -MMD -MF /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_64.o.d -fno-strict-overflow -DNDEBUG -O2 -Wall -fPIC -O2 -isystem /usr/local/miniconda3/include -fPIC -O2 -isystem /usr/local/miniconda3/include -fPIC -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/TH -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/THC -I/usr/local/cuda/include -I/home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_cuda -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/nvidia/cuda_runtime/include -I/home/dongna/.virtualenvs/Qwen-14B/include -I/usr/local/miniconda3/include/python3.12 -c -c /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_extension/cuda_64/autogptq_cuda_64.cpp -o /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_64.o -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=autogptq_cuda_64 -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++17

FAILED: /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_64.o

g++ -MMD -MF /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_64.o.d -fno-strict-overflow -DNDEBUG -O2 -Wall -fPIC -O2 -isystem /usr/local/miniconda3/include -fPIC -O2 -isystem /usr/local/miniconda3/include -fPIC -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/TH -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/THC -I/usr/local/cuda/include -I/home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_cuda -I/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/nvidia/cuda_runtime/include -I/home/dongna/.virtualenvs/Qwen-14B/include -I/usr/local/miniconda3/include/python3.12 -c -c /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_extension/cuda_64/autogptq_cuda_64.cpp -o /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/build/temp.linux-x86_64-cpython-312/autogptq_extension/cuda_64/autogptq_cuda_64.o -DTORCH_API_INCLUDE_EXTENSION_H '-DPYBIND11_COMPILER_TYPE="_gcc"' '-DPYBIND11_STDLIB="_libstdcpp"' '-DPYBIND11_BUILD_ABI="_cxxabi1011"' -DTORCH_EXTENSION_NAME=autogptq_cuda_64 -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++17

In file included from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/core/TensorBase.h:14,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/core/TensorBody.h:38,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/core/Tensor.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/Tensor.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/function_hook.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/cpp_hook.h:2,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/variable.h:6,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/autograd.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include/torch/autograd.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include/torch/all.h:7,

from /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_extension/cuda_64/autogptq_cuda_64.cpp:1:

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/util/C++17.h:13:2: error: #error "You're trying to build PyTorch with a too old version of GCC. We need GCC 9 or later."

#error \

^~~~~

In file included from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/Backend.h:5,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/Layout.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/core/TensorBody.h:12,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/core/Tensor.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/ATen/Tensor.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/function_hook.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/cpp_hook.h:2,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/variable.h:6,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/autograd/autograd.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include/torch/autograd.h:3,

from /home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/torch/csrc/api/include/torch/all.h:7,

from /home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/autogptq_extension/cuda_64/autogptq_cuda_64.cpp:1:

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:752:73: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradCPU)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:752:73: error: overflow in constant expression [-fpermissive]

constexpr auto autograd_cpu_ks = DispatchKeySet(DispatchKey::AutogradCPU);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:753:73: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradIPU)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:753:73: error: overflow in constant expression [-fpermissive]

constexpr auto autograd_ipu_ks = DispatchKeySet(DispatchKey::AutogradIPU);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:754:73: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradXPU)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:754:73: error: overflow in constant expression [-fpermissive]

constexpr auto autograd_xpu_ks = DispatchKeySet(DispatchKey::AutogradXPU);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:755:75: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradCUDA)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:755:75: error: overflow in constant expression [-fpermissive]

constexpr auto autograd_cuda_ks = DispatchKeySet(DispatchKey::AutogradCUDA);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:756:73: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradXLA)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:756:73: error: overflow in constant expression [-fpermissive]

constexpr auto autograd_xla_ks = DispatchKeySet(DispatchKey::AutogradXLA);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:757:75: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradLazy)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:757:75: error: overflow in constant expression [-fpermissive]

constexpr auto autograd_lazy_ks = DispatchKeySet(DispatchKey::AutogradLazy);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:758:75: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradMeta)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:758:75: error: overflow in constant expression [-fpermissive]

constexpr auto autograd_meta_ks = DispatchKeySet(DispatchKey::AutogradMeta);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:759:73: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradMPS)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:759:73: error: overflow in constant expression [-fpermissive]

constexpr auto autograd_mps_ks = DispatchKeySet(DispatchKey::AutogradMPS);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:760:73: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradHPU)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:760:73: error: overflow in constant expression [-fpermissive]

constexpr auto autograd_hpu_ks = DispatchKeySet(DispatchKey::AutogradHPU);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:762:52: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradPrivateUse1)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:762:52: error: overflow in constant expression [-fpermissive]

DispatchKeySet(DispatchKey::AutogradPrivateUse1);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:764:52: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradPrivateUse2)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:764:52: error: overflow in constant expression [-fpermissive]

DispatchKeySet(DispatchKey::AutogradPrivateUse2);

^

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:766:52: in ‘constexpr’ expansion of ‘c10::DispatchKeySet(AutogradPrivateUse3)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:236:42: in ‘constexpr’ expansion of ‘c10::toBackendComponent(k)’

/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/include/c10/core/DispatchKeySet.h:766:52: error: overflow in constant expression [-fpermissive]

DispatchKeySet(DispatchKey::AutogradPrivateUse3);

^

ninja: build stopped: subcommand failed.

Traceback (most recent call last):

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/utils/cpp_extension.py", line 2107, in _run_ninja_build

subprocess.run(

File "/usr/local/miniconda3/lib/python3.12/subprocess.py", line 571, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['ninja', '-v']' returned non-zero exit status 1.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "<string>", line 2, in <module>

File "<pip-setuptools-caller>", line 34, in <module>

File "/home/dongna/lsx-project/Qwen-14B/Qwen-main/AutoGPTQ/setup.py", line 247, in <module>

setup(

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/__init__.py", line 104, in setup

return distutils.core.setup(**attrs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/core.py", line 184, in setup

return run_commands(dist)

^^^^^^^^^^^^^^^^^^

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/core.py", line 200, in run_commands

dist.run_commands()

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/dist.py", line 969, in run_commands

self.run_command(cmd)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/dist.py", line 967, in run_command

super().run_command(command)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/dist.py", line 988, in run_command

cmd_obj.run()

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/wheel/bdist_wheel.py", line 368, in run

self.run_command("build")

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/cmd.py", line 316, in run_command

self.distribution.run_command(command)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/dist.py", line 967, in run_command

super().run_command(command)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/dist.py", line 988, in run_command

cmd_obj.run()

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/command/build.py", line 132, in run

self.run_command(cmd_name)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/cmd.py", line 316, in run_command

self.distribution.run_command(command)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/dist.py", line 967, in run_command

super().run_command(command)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/dist.py", line 988, in run_command

cmd_obj.run()

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/command/build_ext.py", line 91, in run

_build_ext.run(self)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/command/build_ext.py", line 359, in run

self.build_extensions()

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/utils/cpp_extension.py", line 870, in build_extensions

build_ext.build_extensions(self)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/command/build_ext.py", line 479, in build_extensions

self._build_extensions_serial()

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/command/build_ext.py", line 505, in _build_extensions_serial

self.build_extension(ext)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/command/build_ext.py", line 252, in build_extension

_build_ext.build_extension(self, ext)

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/setuptools/_distutils/command/build_ext.py", line 560, in build_extension

objects = self.compiler.compile(

^^^^^^^^^^^^^^^^^^^^^^

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/utils/cpp_extension.py", line 683, in unix_wrap_ninja_compile

_write_ninja_file_and_compile_objects(

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/utils/cpp_extension.py", line 1783, in _write_ninja_file_and_compile_objects

_run_ninja_build(

File "/home/dongna/.virtualenvs/Qwen-14B/lib/python3.12/site-packages/torch/utils/cpp_extension.py", line 2123, in _run_ninja_build

raise RuntimeError(message) from e

RuntimeError: Error compiling objects for extension

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

ERROR: Failed building wheel for auto_gptq

Running setup.py clean for auto_gptq

Failed to build auto_gptq

ERROR: Could not build wheels for auto_gptq, which is required to install pyproject.toml-based projects

```

| closed | 2024-05-24T08:31:50Z | 2024-06-02T09:09:36Z | https://github.com/AutoGPTQ/AutoGPTQ/issues/678 | [] | StephenSX66 | 0 |

hbldh/bleak | asyncio | 700 | WinRT: pairing crashes if accepting too soon | * bleak version: 0.13.0

* Python version: 3.8.2

* Operating System: Windows 10

### Description

When pairing and connecting to the device, Bleak fails after I accept the pairing request in the OS pop-up.

The device I am trying to connect to requires pairing.

If I accept pairing as soon as it pops up, the script fails. If I wait until after services discovery has finished, and only then accept the pairing, it will work OK.

### What I Did

With this code:

```python
# bleak_minimal_example.py

import asyncio

from bleak import BleakScanner
from bleak import BleakClient

address = "AA:AA:AA:AA:AA:AA"

# Characteristics UUIDs
NUM_SENSORS_UUID = "b9e634a8-57ef-11e9-8647-d663bd873d93"

# ===============================================================
# Connect and read
# ===============================================================
async def run_connection(address, debug=False):
    async with BleakClient(address) as client:
        print(" >> Please accept pairing")
        await asyncio.sleep(5.0)
        print(" >> Reading...")
        num_sensors = await client.read_gatt_char(NUM_SENSORS_UUID, use_cached = True)
        print(" Number of sensors: {0}".format(num_sensors.hex()))

# ===============================================================
# MAIN
# ===============================================================
if __name__ == "__main__":
    loop = asyncio.get_event_loop()
    loop.run_until_complete(run_connection(address))
    print ('>> Goodbye!')
    exit(0)
```

**Use case 1: fails**

Accepting the pairing before it finished discovering services - fails

1. the script calls BleakClient.connect()

2. connects to the device and Windows pop-up requests to pair

3. Bleak.connect() is still doing the discovery (and it takes long, because the device has many services with many chars.)

4. if I accept the pairing before it finished discovering it fails

The traceback of the error:

```

Traceback (most recent call last):

File "bleak_minimal_example.py", line 86, in <module>

loop.run_until_complete(run_connection(device_address))

File "C:\Program Files\Python38\lib\asyncio\base_events.py", line 616, in run_until_complete

return future.result()

File "bleak_minimal_example.py", line 50, in run_connection

async with BleakClient(address) as client:

File "C:\Program Files\Python38\lib\site-packages\bleak\backends\client.py", line 61, in __aenter__

await self.connect()

File "C:\Program Files\Python38\lib\site-packages\bleak\backends\winrt\client.py", line 258, in connect

await self.get_services()

File "C:\Program Files\Python38\lib\site-packages\bleak\backends\winrt\client.py", line 446, in get_services

await service.get_characteristics_async(

OSError: [WinError -2147418113] Catastrophic failure

```

**Use case 2: succeeds**

Waiting to accept pairing, then succeeds

1. the script calls BleakClient.connect()

2. connects to the device and Windows pop-up requests to pair

3. Bleak.connect() is still doing the discovery (and it takes long, because the device has many services with many chars.)

4. after connect returned I print ('Please accept pairing')

5. I accept the pairing

6. the script reads + writes... all good
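
The ordering that works in use case 2 (let `connect()` finish its service discovery, and only then deal with pairing) can be sketched as below. This is an illustrative sketch, not Bleak's documented workflow: `connect_then_pair` and `FakeClient` are hypothetical names, and the only assumption about the real client is that it exposes async `connect()`, `pair()` and `disconnect()` methods, as `BleakClient` does. The stand-in class lets the snippet run without hardware.

```python
import asyncio


async def connect_then_pair(client):
    """Connect first (service discovery happens inside connect()), then pair.

    `client` is assumed to expose async connect(), pair() and disconnect().
    """
    await client.connect()
    try:
        paired = await client.pair()
    except Exception:
        # Do not leave a half-open connection behind if pairing fails.
        await client.disconnect()
        raise
    return paired


class FakeClient:
    """Hypothetical stand-in for BleakClient; records the call order."""

    def __init__(self):
        self.calls = []

    async def connect(self):
        self.calls.append("connect")
        return True

    async def pair(self):
        self.calls.append("pair")
        return True

    async def disconnect(self):
        self.calls.append("disconnect")
        return True


fake = FakeClient()
result = asyncio.run(connect_then_pair(fake))
print(fake.calls, result)
```

With a real device, `fake` would be replaced by `BleakClient(address)`. Whether pairing programmatically after `connect()` avoids the crash on this particular device is untested here.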

### Alternative use of the API:

With the script modified to call pair() before connect():

```python
# bleak_minimal_pair_example.py

import asyncio

from bleak import BleakScanner
from bleak import BleakClient

address = "AA:AA:AA:AA:AA:AA"

# Characteristics UUIDs
NUM_SENSORS_UUID = "b9e634a8-57ef-11e9-8647-d663bd873d93"

# ===============================================================
# Connect and read
# ===============================================================
async def run_connection(address, debug=False):
    client = BleakClient(address)
    if await client.pair():
        print("paired")
    await client.connect()
    print(" >> Reading...")
    num_sensors = await client.read_gatt_char(NUM_SENSORS_UUID, use_cached = True)
    print(" Number of sensors: {0}".format(num_sensors.hex()))

# ===============================================================
# MAIN
# ===============================================================
if __name__ == "__main__":
    loop = asyncio.get_event_loop()
    loop.run_until_complete(run_connection(address))
    print ('>> Goodbye!')
    exit(0)
```

The execution fails as follows:

The traceback of the error:

```

python bleak_minimal_pair_example.py

Traceback (most recent call last):

File "bleak_minimal_pair_example.py", line 47, in <module>

loop.run_until_complete(run_connection(address))

File "C:\Program Files\Python38\lib\asyncio\base_events.py", line 616, in run_until_complete

return future.result()

File "bleak_minimal_pair_example.py", line 31, in run_connection

if await client.pair():

File "C:\Program Files\Python38\lib\site-packages\bleak\backends\winrt\client.py", line 336, in pair

self._requester.device_information.pairing.can_pair

AttributeError: 'NoneType' object has no attribute 'device_information'

``` | open | 2021-12-27T19:17:45Z | 2022-11-22T14:03:54Z | https://github.com/hbldh/bleak/issues/700 | [

"Backend: WinRT"

] | marianacalzon | 2 |

piskvorky/gensim | data-science | 3,019 | can not import ENGLISH_CONNECTOR_WORDS from gensim.models.phrases | #### Problem description

When I try to load the gensim ENGLISH_CONNECTOR_WORDS, it seems to be missing in my current version. I am trying to use common stopwords in my Phrase detection training.

#### Steps/code/corpus to reproduce

Here is what I tried to do by following the [gensim Phrase Detection documentation](https://radimrehurek.com/gensim/models/phrases.html).

```python

from gensim.models.phrases import Phrases, ENGLISH_CONNECTOR_WORDS

```

Here is the output:

```python

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

<ipython-input-5-f038e2aaf034> in <module>

----> 1 from gensim.models.phrases import Phrases, ENGLISH_CONNECTOR_WORDS

ImportError: cannot import name 'ENGLISH_CONNECTOR_WORDS' from 'gensim.models.phrases' (/project/venv/lib/python3.9/site-packages/gensim/models/phrases.py)

```

#### Versions

```python

macOS-10.15.7-x86_64-i386-64bit

Python 3.9.0 (default, Oct 30 2020, 16:46:27)

[Clang 12.0.0 (clang-1200.0.32.2)]

Bits 64

NumPy 1.19.4

SciPy 1.6.0

gensim 3.8.3

FAST_VERSION 0

```
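
As far as I can tell, `ENGLISH_CONNECTOR_WORDS` ships with gensim 4.0 and later, which would explain why the import fails on the 3.8.3 install listed above. A minimal sketch of a version-tolerant import follows. The fallback word list is an assumption written out by hand for illustration and may not match gensim's own list exactly.

```python
try:
    # Available in newer gensim releases (4.x, to my knowledge).
    from gensim.models.phrases import ENGLISH_CONNECTOR_WORDS
except ImportError:
    # Assumed fallback for older installs (or when gensim is absent):
    # a hand-written set of common English connector words.
    ENGLISH_CONNECTOR_WORDS = frozenset(
        "a an the for of with without at from to in on by and or".split()
    )

print(sorted(ENGLISH_CONNECTOR_WORDS))
```

On gensim 3.8.x the corresponding `Phrases` argument is, to my knowledge, `common_terms` rather than `connector_words`, so the set would be passed under that name there.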

| closed | 2021-01-06T02:20:05Z | 2021-01-06T11:08:57Z | https://github.com/piskvorky/gensim/issues/3019 | [] | syedarehaq | 1 |

Kanaries/pygwalker | pandas | 618 | [BUG] Memory growth when using PyGWalker with Streamlit | **Describe the bug**

I observe RAM growth when using PyGWalker with the Streamlit framework. RAM usage grows on every page reload (that is, on every app run). When using Streamlit without PyGWalker, RAM usage remains flat. The memory seems never to be released: we tracked the growth locally (see the reproduction below), and we also observe the same issue in an Azure web app, where RAM usage never declines.

**To Reproduce**

We tracked down the issue with an isolated Streamlit app that uses PyGWalker and `memory_profiler` (run with `python -m streamlit run app.py`):

```python
# app.py
import numpy as np
np.random.seed(seed=1)
import pandas as pd
from memory_profiler import profile
from pygwalker.api.streamlit import StreamlitRenderer

@profile
def app():
    # Create random dataframe
    df = pd.DataFrame(np.random.randint(0, 100, size=(100, 4)), columns=list("ABCD"))
    render = StreamlitRenderer(df)
    render.explorer()

app()
```

Observed output for a few consecutive reloads from the browser (press `R` to rerun):

```

Line # Mem usage Increment Occurrences Line Contents

13 302.6 MiB 23.3 MiB 1 render.explorer()

13 315.4 MiB 23.3 MiB 1 render.explorer()

13 325.8 MiB 23.3 MiB 1 render.explorer()

```

**Expected behavior**

RAM usage should remain at a constant level between app reruns.
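
One way to check this expectation outside Streamlit is to call the same function repeatedly and record the traced memory after each call. The sketch below uses only the standard-library `tracemalloc` module. `traced_memory_per_run`, `leaky_run` and `clean_run` are hypothetical names for illustration, and `tracemalloc` only sees allocations made through Python's allocator, so growth in native code would not show up.

```python
import tracemalloc


def traced_memory_per_run(fn, runs=3):
    """Call fn() `runs` times; return traced bytes still allocated after each call."""
    readings = []
    tracemalloc.start()
    try:
        for _ in range(runs):
            fn()
            current, _peak = tracemalloc.get_traced_memory()
            readings.append(current)
    finally:
        tracemalloc.stop()
    return readings


_retained = []


def leaky_run():
    # Simulates a rerun that keeps a reference alive (about 1 MB per call).
    _retained.append(bytearray(1_000_000))


def clean_run():
    # Allocates the same amount but lets it go out of scope.
    data = bytearray(1_000_000)
    return len(data)


leaky = traced_memory_per_run(leaky_run)
clean = traced_memory_per_run(clean_run)
print("leaky:", leaky)
print("clean:", clean)
```

A flat series matches the expected behavior. A series that climbs by a similar amount on every call points to objects being retained between runs.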

**Screenshots**

The first screenshot shows peaks in user activity (which cause CPU usage) alongside growing RAM usage (memory set).

The second screenshot shows the memory profiling output of the debug app.

**Versions**

streamlit 1.38.0

pygwalker 0.4.9.3

memory_profiler (latest)

python 3.9.10

browser: chrome 128.0.6613.138 (Official Build) (64-bit)

Tested locally on Windows 11

*Thanks for support!* | open | 2024-09-13T07:23:36Z | 2024-09-29T02:07:33Z | https://github.com/Kanaries/pygwalker/issues/618 | [

"bug",

"good first issue",

"P1"

] | ChrnyaevEK | 3 |

microsoft/MMdnn | tensorflow | 529 | Could not support Slice in pytorch to IR | Platform (like ubuntu 16.04/win10): ubuntu 16.04

Python version:3.6

Source framework with version (like Tensorflow 1.4.1 with GPU):pytorch0.4.0 with GPU

Destination framework with version (like CNTK 2.3 with GPU):caffe

| open | 2018-12-17T07:55:59Z | 2018-12-26T13:48:40Z | https://github.com/microsoft/MMdnn/issues/529 | [

"enhancement"

] | xiaoranchenwai | 1 |

davidsandberg/facenet | tensorflow | 510 | Hi,a question about export embeddings and labels | Hello, I have a question about the labels produced by export_embeddings.py.

I think the 'label_list' is the same as 'classes'.

So can I use just the label_list to train my own model?

I sincerely look forward to your reply.

Thanks

@davidsandberg @cjekel

| closed | 2017-10-31T08:04:33Z | 2017-11-07T01:00:42Z | https://github.com/davidsandberg/facenet/issues/510 | [] | xvdehao | 1 |

tortoise/tortoise-orm | asyncio | 1,853 | IntField: constraints not taken into account | **Describe the bug**

The IntField has a `constraints` property, which contains the constraints `ge` and `le`. These constraints are not taken into account anywhere, as far as I can tell; they should be enforced, for example by a validator.

**To Reproduce**

```py
from tortoise import models, fields, run_async
from tortoise.contrib.test import init_memory_sqlite


class Acc(models.Model):
    id = fields.IntField(pk=True)
    some = fields.IntField()


async def main():
    constraints_id = Acc._meta.fields_map.get('id').constraints  # {'ge': -2147483648, 'le': 2147483647}
    too_high_id = constraints_id.get('le') + 1
    constraints_some = Acc._meta.fields_map.get('some').constraints  # {'ge': -2147483648, 'le': 2147483647}
    too_low_some = constraints_some.get('ge') - 1
    acc = Acc(id=too_high_id, some=too_low_some)  # this should throw an error
    await acc.save()  # or maybe this


if __name__ == '__main__':
    run_async(init_memory_sqlite(main)())
```

**Expected behavior**

Either the constraints should match the capabilities of the DB, or they should be checked beforehand and an error should be thrown when a value violates them.

**Additional context**

The produced Pydantic Model by pydantic_model_creator would check those constraints.
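
The kind of check being asked for could look roughly like the sketch below. It is plain Python and not Tortoise's validator API: `check_int_constraints` and `INT32_CONSTRAINTS` are hypothetical names, and the dictionary simply mirrors the `{'ge': ..., 'le': ...}` shape shown in the reproduction above.

```python
# Same shape as the `constraints` dict shown in the reproduction.
INT32_CONSTRAINTS = {"ge": -2147483648, "le": 2147483647}


def check_int_constraints(name, value, constraints):
    """Return value unchanged, or raise ValueError if it violates ge/le."""
    lower = constraints.get("ge")
    upper = constraints.get("le")
    if lower is not None and value < lower:
        raise ValueError(f"{name}: {value} is below the minimum {lower}")
    if upper is not None and value > upper:
        raise ValueError(f"{name}: {value} is above the maximum {upper}")
    return value


# In-range values pass through unchanged.
print(check_int_constraints("some", 5, INT32_CONSTRAINTS))

# Out-of-range values are rejected before they would reach the database.
try:
    check_int_constraints("id", INT32_CONSTRAINTS["le"] + 1, INT32_CONSTRAINTS)
except ValueError as exc:
    print("rejected:", exc)
```

Running such a check at assignment or save time would turn the silent out-of-range write in the reproduction into an explicit error.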

| open | 2025-01-17T10:30:36Z | 2025-02-24T10:49:30Z | https://github.com/tortoise/tortoise-orm/issues/1853 | [

"enhancement"

] | markus-96 | 0 |